
AMD Ryzen 7 5800X3D Boost Frequencies, Thermal Throttling Tests

Thermal dissipation can limit a chip's peak performance, particularly as process nodes become denser. A 3D-stacked design adds further thermal challenges, in this case exacerbated by the silicon shim stacked atop the CPU cores. This shim transfers heat from the cores to the integrated heat spreader (IHS), but it could also reduce the efficiency of that heat transfer. In effect, it could trap a small amount of heat. Signs of those challenges cropped up in our thermal and boost testing, leading us to conduct more in-depth testing to back up our findings.

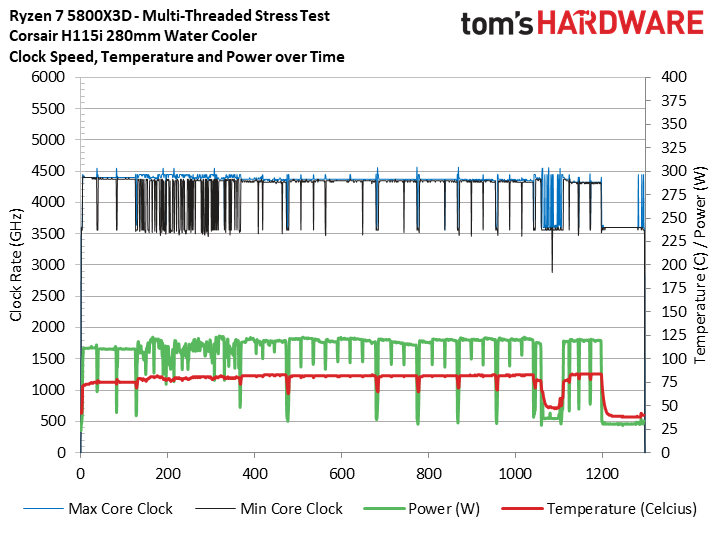

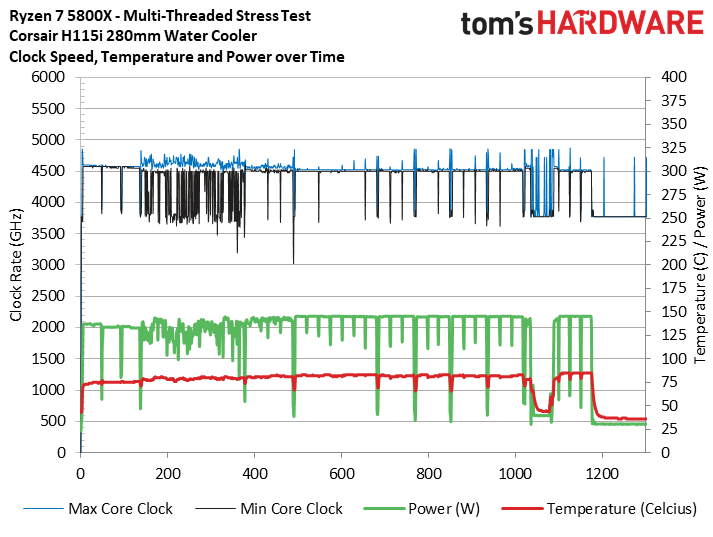

The slides above show the Ryzen 7 5800X3D and its near-identical counterpart, the Ryzen 7 5800X, which lacks the 3D V-Cache design, running through a series of standard heavily threaded applications (Cinebench, HandBrake, AVX-heavy y-cruncher) to measure power and thermals. We used a Corsair H115i 280mm AIO with the fans cranked to 100% to keep the chips as cool as possible during this test run.

The 5800X3D has a 400 MHz lower base clock and a 200 MHz lower boost clock than the Ryzen 7 5800X, and we can see that the 5800X3D runs at 4.35 GHz during the heaviest multi-core workloads while the 5800X runs at 4.5 GHz. This is expected given the specifications, but we also noticed that the 5800X draws up to 145W while reaching those higher clock speeds, while the 5800X3D only peaks around 120W. That gap appears even though both chips have the same 105W TDP and 142W PPT.

Both chips reach the same peak around 80C during the heavy parts of the test, showing that the 5800X3D runs at the same temperature even though the 5800X is consuming 25W more power and running at higher clocks. AMD tells us that thermals aren't the limiting factor that prevents higher clock speeds or the allowance of higher voltages (and thus more heat) for overclocking, but these results certainly imply that the 3D-stacked design doesn't dissipate heat as well as the standard design. To investigate further, we ran a more intense test below.
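To put those figures side by side, here is a minimal Python sketch that works out the gaps from the approximate peak values quoted above. The dictionary layout and the `percent_more` helper are illustrative names of our own, not part of any test tooling.

```python
# Approximate peak figures quoted in the text above.
chips = {
    "5800X": {"clock_ghz": 4.50, "power_w": 145.0, "temp_c": 80.0},
    "5800X3D": {"clock_ghz": 4.35, "power_w": 120.0, "temp_c": 80.0},
}


def percent_more(a, b):
    """Return how much larger a is than b, in percent."""
    return (a / b - 1.0) * 100.0


x, x3d = chips["5800X"], chips["5800X3D"]

power_gap_w = x["power_w"] - x3d["power_w"]
power_gap_pct = percent_more(x["power_w"], x3d["power_w"])
clock_gap_pct = percent_more(x["clock_ghz"], x3d["clock_ghz"])

print(f"Power gap: {power_gap_w:.0f} W ({power_gap_pct:.1f}% more on the 5800X)")
print(f"Clock gap: {clock_gap_pct:.1f}% higher on the 5800X")
print(f"Peak temperature: {x['temp_c']:.0f} C vs {x3d['temp_c']:.0f} C")
```

With these inputs, the 5800X draws roughly a fifth more power for a clock advantage of only a few percent, yet both chips land at about the same peak temperature.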

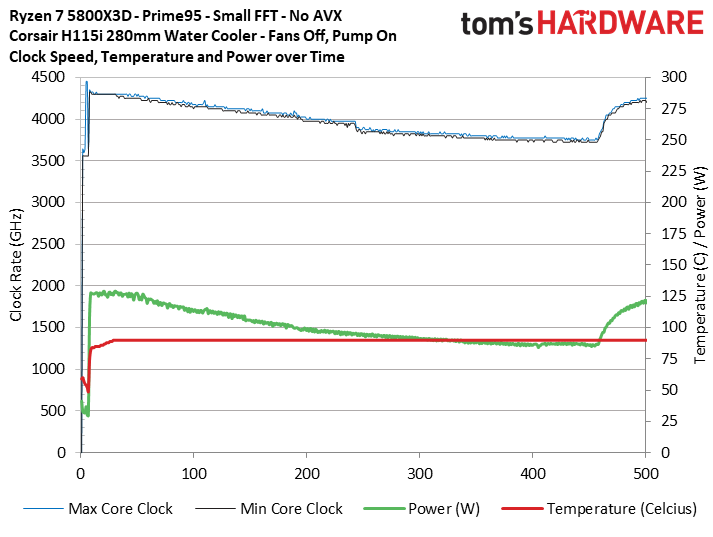

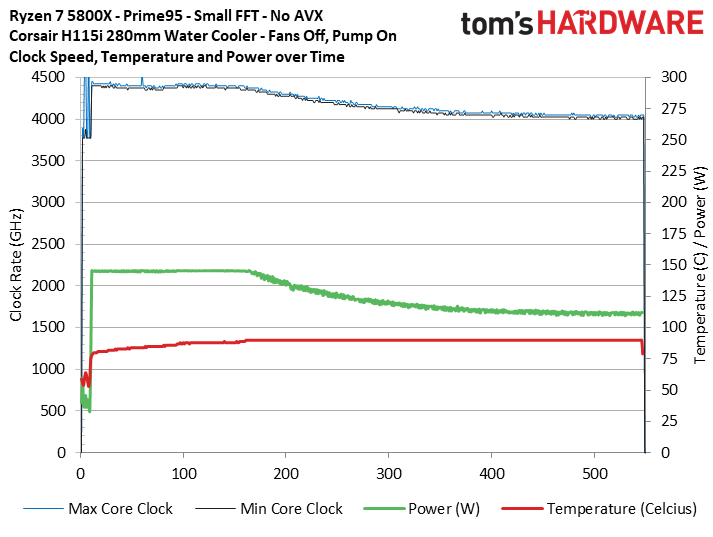

We turned to a Prime95 test under rigorous conditions to take a closer look at thermal dissipation. We often don't include Prime95 power measurements in our standard CPU reviews, largely because there is a massive disconnect between this extremely rigorous stress test and the power consumption and thermal load generated by most real-world applications. Still, we're specifically looking to push the chips to their throttle point for this test.

To push the processor's limits even harder, we unplugged the fans on the Corsair H115i cooler but left the pump running (unplugging the pump caused clocks to drop too rapidly for our logging to provide any granularity). We then kicked off a Prime95 run with small FFTs, but with AVX instructions disabled. This type of Prime95 stress test is brutal, and you won't see this type of stress during even the heaviest normal use. As such, remember that we're doing this for science, and not as an indicator of how these chips would function in your PC.

The results clearly show that the 5800X3D peaks at 130W while the 5800X peaks at 145W. Then both chips reach a steady-state temperature of 90C before they begin aggressively throttling power, and thus clock speeds, to remain at this temperature threshold. You'll notice that the 5800X3D encounters this high temperature sooner than the 5800X, and it drops to lower clocks than the 5800X before the end of the test.

Additionally, the 5800X3D remains at 90C while drawing 86W at its lowest point, but the 5800X draws 110W at its lowest point at the same 90C. This shows that the 5800X3D doesn't dissipate as much thermal load as the 5800X within the same temperature threshold. In other words, if all else were equal, the 5800X3D would run hotter than the 5800X under identical conditions. In fact, the 5800X3D reaches the same 90C while consuming less power than the 5800X.
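One rough way to quantify that difference is a lumped "effective thermal resistance": the temperature rise over ambient divided by the power being dissipated. The sketch below applies that textbook simplification to the 86W and 110W figures above. The 25C ambient temperature is an assumption (the test room temperature isn't stated here), and the function and variable names are illustrative. The ratio between the two chips does not depend on the ambient value as long as it is the same for both.

```python
THROTTLE_TEMP_C = 90.0      # steady-state temperature from the text
ASSUMED_AMBIENT_C = 25.0    # assumption: ambient is not given in the text

# Lowest package power each chip sustained while pinned at 90C.
power_at_throttle_w = {"5800X": 110.0, "5800X3D": 86.0}


def effective_thermal_resistance(temp_c, ambient_c, power_w):
    """Lumped die-to-ambient resistance in degrees C per watt."""
    return (temp_c - ambient_c) / power_w


resistance = {
    name: effective_thermal_resistance(THROTTLE_TEMP_C, ASSUMED_AMBIENT_C, watts)
    for name, watts in power_at_throttle_w.items()
}

# With equal temperature rise, this reduces to 110 W / 86 W.
ratio = resistance["5800X3D"] / resistance["5800X"]

for name, r in resistance.items():
    print(f"{name}: {r:.3f} C/W")
print(f"5800X3D / 5800X resistance ratio: {ratio:.2f}")
```

Under these assumptions the 5800X3D shows roughly 28% higher effective resistance than the 5800X. This lumps the die, shim, IHS, and the deliberately crippled cooler into one number, so treat it as a comparison between the two chips on this test bench rather than a property of either part.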

But to be clear: You would never encounter these conditions during normal use, and the Ryzen 7 5800X3D runs perfectly fine within its specifications. In fact, the 5800X3D is much cooler than the competing Core i9 processors.

Thermal dissipation has been one of the major sticking points that have prevented high-performance 3D chips from going mainstream, but AMD has done an amazing engineering job in bringing thermals under control enough to deliver a chip that provides excellent performance within an acceptable TDP threshold.

Our results strongly imply that thermal dissipation will remain a serious challenge at higher power thresholds, and while AMD contends that voltage is the limiting factor that reins in the 5800X3D's clock speeds and prohibits overclocking, there could be some room for interpretation of that statement. Most have taken AMD's statement to mean that heat isn't an issue, even though the company has also cited heat as a factor with 3D V-Cache at other times.

As a reminder, voltage, frequency, and thermals are all interrelated. Higher clock frequencies require more voltage, but more voltage results in more heat. A higher voltage may simply push the chip outside its comfortable thermal envelope. As such, 3D V-Cache's 1.35V limit may simply be a product definition designed to keep thermals in check given the chip's thermal design, and not be an actual physical limitation of the technology itself.
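The relationship can be illustrated with the common first-order approximation for CMOS dynamic power, P ≈ C·V²·f. The sketch below is a generic textbook model, not AMD's power model: it ignores leakage and assumes the switched capacitance stays constant. The 1.35V and 4.5 GHz baseline comes from this article, while the 1.40V and 4.7 GHz target is purely hypothetical.

```python
def relative_dynamic_power(v_new, v_old, f_new, f_old):
    """Scale factor for dynamic power under P ~ C * V^2 * f, with C constant."""
    return (v_new / v_old) ** 2 * (f_new / f_old)


# Baseline from the article: the 1.35V limit and the 4.5 GHz peak boost.
BASE_VOLTAGE_V = 1.35
BASE_CLOCK_GHZ = 4.5

# Hypothetical overclock target, chosen only for illustration.
target_voltage_v = 1.40
target_clock_ghz = 4.7

scale = relative_dynamic_power(
    target_voltage_v, BASE_VOLTAGE_V, target_clock_ghz, BASE_CLOCK_GHZ
)
print(f"Estimated dynamic power: {scale:.3f}x baseline")
print(f"Clock gain: {(target_clock_ghz / BASE_CLOCK_GHZ - 1) * 100:.1f}%")
```

In this simplified model, a clock increase of about 4% paired with a 0.05V bump costs roughly 12% more dynamic power, which is why a small voltage allowance can have an outsized effect on heat.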


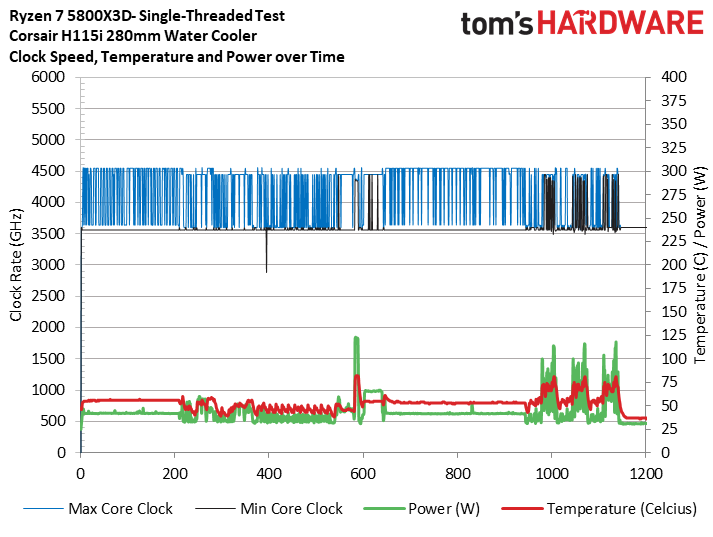

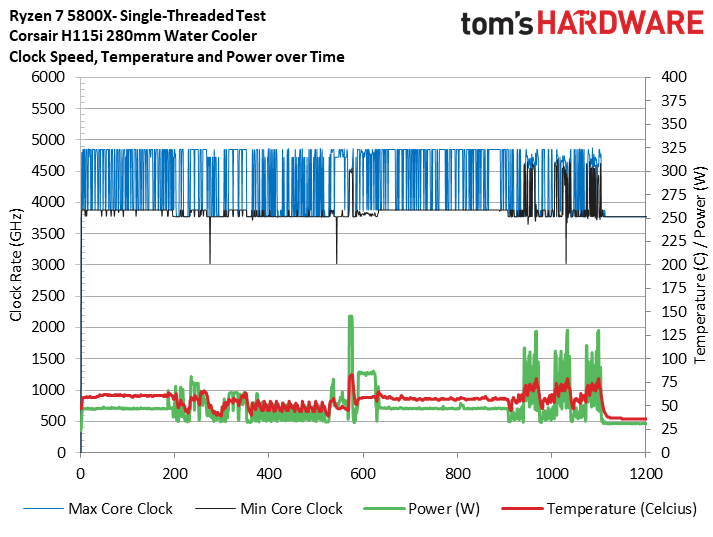

As part of our normal test regimen, we tested performance in lightly-threaded work with the H115i cooler. To assess peak boost speeds, we ran through our standard series of lightly-threaded tests (LAME, PCMark10, Geekbench, VRMark, and single-threaded Cinebench).

The 5800X3D frequently reached its 4.5 GHz peak frequency, while the 5800X actually exceeded its 4.7 GHz spec and regularly hit 4.8 GHz. Temperatures and power draw aren't a major concern through most of this test, but there is a series of multi-threaded Geekbench workloads near the 1000-second mark. Again, the 5800X draws more power and runs at higher clocks than the 5800X3D during these periods of heavy load, yet the two chips run at nearly identical temperatures.


Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.


Kamen Rider Blade

Depending on what applications you're using, there are potentially HUGE performance gains, even on non common workloads.

Level1Techs & Hardware UnBoxed has shown that the 5800X3D is a good value compared to the 12900KS or 12900K

Makaveli

Tom Sunday said: The 5800X3D on the surface looks good. Not the $449 price tag to be sure as many of us given our ongoing dilemma at the gas pumps leaves little or no cash available for higher-end PC fares. Besides AMD being a latecomer to the party with a practically outdated and niche CPU and especially with an all new CPU and hardware generation sitting virtually on our doorstep! At this point in time I would think that many will ‘hold and fold’ until better economic times are in sight and mind. At the latest local computer show the 5800X3D came up in discussion and it was said: “Looks like a very nice chip, but at this late time it’s not a good investment!”

I see this the other way its a fantastic upgrade for those on AM4 that may still be on Zen+ or Zen 2 and will prolong the life of those systems a few more years. Leaving time for pricing to go down on DDR5 when its time to upgrade.

M42

Clearly, something is wrong with the 12900ks sample used (or the setup) if it can't be overclocked at all, and especially if it is not faster than an overclocked 12700k.

Also, how could any conclusion be made without including 12900k/ks + DDR5 tests?

For example, from a TechSpot review, 12900k FarCry 6 performance was the following (no overclocking):

157 frames/sec - 12900k DDR4-3200

170 frames/sec - 12900k DDR5-6400

Details can be found in the "Gaming Benchmarks" section here:

https://www.techspot.com/review/2443-intel-core-i9-12900ks/

Even after benchmarking the 12900k/ks with DDR5, the 5800X3D might still be ahead in the geometric mean. But since DDR5 prices are dropping I think most people buying a 12900k/ks may end up using higher-end motherboards with DDR5 to squeeze out every last drop of performance. So, can you add some Alder Lake + DDR5 results, please? (thanks!)

sizzling

Makaveli said: I see this the other way its a fantastic upgrade for those on AM4 that may still be on Zen+ or Zen 2 and will prolong the life of those systems a few more years. Leaving time for pricing to go down on DDR5 when its time to upgrade.

This is me. I just upgraded from a 3700X to a 5800X3D. I’m going to get a couple more years out my B450 Tomahawk Max and 2x16gb DDR4. This is paired with a 3080 and 1440p 240Hz monitor. By the time I need to upgrade cpu DDR5 will hopefully be more mature, cheaper and actually bring beneficial improvements for games.

The only thing that I have noticed is my RAM seem to run hotter on the 5800X3D than the 3700X. It now runs at about 45-49c on stock XMP, previously 40-43.

Alvar "Miles" Udell

While it may be the best in gaming for AMD's current offerings, other reviews, such as Techpowerup's, which use an RTX 3080, show the 5800X3D to lead by only 7.4% vs the 5900X in gaming at 1920x1080 on average. Assuming you aren't using a ~$1500 3090 but a ~$900 3080, are you really telling us that you should buy the 5800X3D instead of spending, currently, $80 more on the 5950X, for twice the number of cores and a much better all around system?

sizzling

Alvar Miles Udell said: While it may be the best in gaming for AMD's current offerings, other reviews, such as Techpowerup's, which use an RTX 3080, show the 5800X3D to lead by only 7.4% vs the 5900X in gaming at 1920x1080 on average. Assuming you aren't using a ~$1500 3090 but a ~$900 3080, are you really telling us that you should buy the 5800X3D instead of spending, currently, $80 more on the 5950X, for twice the number of cores and a much better all around system?

The 5950X is outperformed by the 5900X for gaming, so if gaming is the main concern then it makes sense to compare to a 5800X or 5900X.

Alvar "Miles" Udell

sizzling said: The 5950X is outperformed by the 5900X for gaming, so if gaming is the main concern then it makes sense to compare to a 5800X or 5900X.

True, but not in applications, which is half of this test, and the 5950X beats the 5900X quite handily due to having more cores. And since they compared it against an Intel processor with 16 cores, the 12900K, as well as the 12900KS variant, then they should have included a 16 core AMD processor as well for good measure, even though the 12900K and KS are quite a bit faster anyway.

sizzling

Alvar Miles Udell said: True, but not in applications, which is half of this test, and the 5950X beats the 5900X quite handily due to having more cores. And since they compared it against an Intel processor with 16 cores, the 12900K, as well as the 12900KS variant, then they should have included a 16 core AMD processor as well for good measure, even though the 12900K and KS are quite a bit faster anyway.

True, but this review is about the 5800X3D which is being pushed as a gaming cpu, nothing more. Therefore it’s reasonable to compare on that basis. If you are not after a purely gaming cpu the 5800X3D probably does not make sense.

-Fran-

The biggest winners with the 5800X3D are AM4 owners; the people that actually trusted AMD and, well, they have fully delivered, I'd say. I personally didn't go with the 5800X3D, because the 5900X dropped to under $400 and that's just way too good as an upgrade (I got it for £370). I've gone through 3 Zen generations (2700X, 3800XT and now the 5900X) and while I still think the 9K gen from Intel is still good, I can't help but feel kind of sorry for them. Almost the same for 10K gen owners, but the 10700K is still a great CPU in my eyes and let's not talk about 11K gen.

Also, this thing is still 8 cores and 16 threads, it's not like it suddenly got degraded to a 4 core 8 threads CPU. I'm sure it should be fairly similar to the 11700K or at least 10700K and those you wouldn't say are slouches, no? Perspective is as common as the common sense, innit?

Regards.

Alvar "Miles" Udell

The biggest losers are people like me who bought a high end X370 motherboard trusting AMD to support all AM4 processors on all AM4 motherboards like they said at the beginning, then bought a X570 motherboard after they said no Zen 3 support on X370 motherboards only for them to then change their minds and actually support Zen 3 on X370 motherboards...