The Crucial M550 SSD Review: Striking Back With More Performance

Crucial's M500 brought mainstream performance, enhanced features, and rock-bottom pricing together in one of the most-recommended SSDs of 2013. Following up, Crucial has a refined version called the M550, juiced-up for performance-hungry enthusiasts.

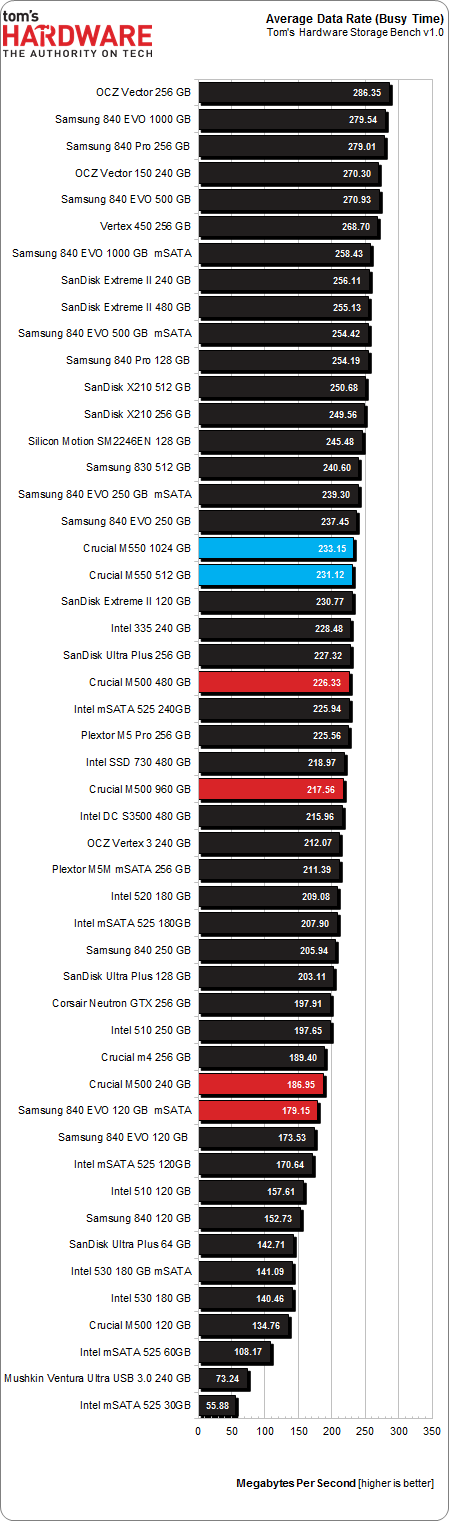

Results: Tom's Storage Bench v1.0

Storage Bench v1.0 (Background Info)

Our Storage Bench incorporates all of the I/O from a trace recorded over two weeks. Replaying this sequence to capture performance gives us a bunch of numbers that aren't really intuitive at first glance. Most idle time gets expunged, leaving only the time that each benchmarked drive is actually busy working on host commands. So, by dividing the amount of data exchanged during the trace by that busy time, we arrive at an average data rate (in MB/s) we can use to compare drives.
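To make the average data rate metric concrete, here is a minimal sketch of the calculation. It assumes a hypothetical trace record format (issue time, completion time, bytes transferred) and assumes that overlapping commands are merged so concurrent I/O isn't counted twice as busy time. The article doesn't say how Tom's tooling handles either detail, so `IoRecord`, `busy_time_s`, and `average_data_rate_mb_s` are illustrative names only, and 1 MB is taken as 10^6 bytes.

```python
from dataclasses import dataclass


@dataclass
class IoRecord:
    """One replayed host command (hypothetical trace record format)."""
    start_s: float  # time the command was issued, in seconds
    end_s: float    # time the command completed, in seconds
    num_bytes: int  # bytes transferred by the command


def busy_time_s(records):
    """Seconds during which at least one command was outstanding.

    Overlapping commands are merged so concurrent I/O is not counted twice,
    and gaps between commands (idle time) are dropped entirely.
    """
    total = 0.0
    cur_start = cur_end = None
    for start, end in sorted((r.start_s, r.end_s) for r in records):
        if cur_end is None or start > cur_end:
            # Idle gap: close the previous busy interval and open a new one.
            if cur_end is not None:
                total += cur_end - cur_start
            cur_start, cur_end = start, end
        else:
            # Overlapping command: extend the current busy interval.
            cur_end = max(cur_end, end)
    if cur_end is not None:
        total += cur_end - cur_start
    return total


def average_data_rate_mb_s(records):
    """Total bytes exchanged divided by busy time, in MB/s (1 MB = 10**6 bytes)."""
    total_bytes = sum(r.num_bytes for r in records)
    return total_bytes / busy_time_s(records) / 1e6


# Two overlapping commands, a long idle gap, then one more command.
sample = [
    IoRecord(0.0, 0.5, 50_000_000),
    IoRecord(0.25, 1.0, 50_000_000),
    IoRecord(10.0, 11.0, 100_000_000),
]
print(f"busy time: {busy_time_s(sample):.1f} s")
print(f"average data rate: {average_data_rate_mb_s(sample):.1f} MB/s")
```

In the sample, the nine-second idle gap contributes nothing, so 200 MB over 2 s of busy time works out to 100 MB/s rather than the much lower figure wall-clock time would give.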

It's not quite a perfect system. The original trace captures the TRIM command in transit, but since the trace is played on a drive without a file system, TRIM wouldn't work even if it were sent during the trace replay (which, sadly, it isn't). Still, trace testing is a great way to capture periods of actual storage activity, and a great companion to synthetic testing like Iometer.

Incompressible Data and Storage Bench v1.0

Also worth noting is the fact that our trace testing pushes incompressible data through the system's buffers to the drive getting benchmarked. So, when the trace replay plays back write activity, it's writing largely incompressible data. If we run our storage bench on a SandForce-based SSD, we can monitor the SMART attributes for a bit more insight.

| Mushkin Chronos Deluxe 120 GB SMART Attribute | Raw Value Increase |
|---|---|
| #242 Host Reads (in GB) | 84 GB |
| #241 Host Writes (in GB) | 142 GB |
| #233 Compressed NAND Writes (in GB) | 149 GB |

To be sure, host writes greatly outstrip host reads. That's all baked into the trace. But with SandForce's inline deduplication/compression, you'd expect the amount of data written to flash to be less than the host writes (unless the data is mostly incompressible, of course). Instead, for every 1 GB the host asked to be written, Mushkin's drive is forced to write about 1.05 GB (149 GB / 142 GB).
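The 1.05 figure is simply the ratio of two SMART raw-value increases. This small sketch reproduces it from the table; the dictionary keys and the helper name `nand_writes_per_host_gb` are illustrative labels, not SMART attribute names from any tool.

```python
# Raw-value increases (in GB) after one Storage Bench run on the
# Mushkin Chronos Deluxe 120 GB, copied from the SMART table above.
smart_delta_gb = {
    "host_reads": 84,               # attribute #242
    "host_writes": 142,             # attribute #241
    "compressed_nand_writes": 149,  # attribute #233
}


def nand_writes_per_host_gb(nand_gb: float, host_gb: float) -> float:
    """GB written to flash for every GB the host asked to write.

    Below 1.0 means the controller's compression shrank the data;
    above 1.0 means flash writes exceeded host writes, so compression
    saved nothing on this workload.
    """
    return nand_gb / host_gb


ratio = nand_writes_per_host_gb(
    smart_delta_gb["compressed_nand_writes"], smart_delta_gb["host_writes"]
)
print(f"{ratio:.2f} GB written to NAND per 1 GB of host writes")  # 1.05
```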

If our trace replay were just writing easy-to-compress zeros out of the buffer, we'd see writes to NAND as a fraction of host writes. Writing incompressible data instead puts the tested drives on a more equal footing, regardless of each controller's ability to compress data on the fly.
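The difference between a buffer of zeros and incompressible data is easy to demonstrate with any general-purpose compressor. This sketch uses Python's standard `zlib` module purely as a stand-in; it is not SandForce's compression scheme, which the controller implements in hardware, so only the contrast between the two buffers is meaningful, not the exact ratios.

```python
import os
import zlib


def compressed_fraction(data: bytes) -> float:
    """Compressed size as a fraction of the input size (zlib, default level)."""
    return len(zlib.compress(data)) / len(data)


BUFFER_SIZE = 1024 * 1024  # 1 MiB sample buffer

zeros = bytes(BUFFER_SIZE)             # easy-to-compress buffer of zero bytes
random_data = os.urandom(BUFFER_SIZE)  # effectively incompressible

print(f"zeros:  {compressed_fraction(zeros):.4f} of original size")
print(f"random: {compressed_fraction(random_data):.4f} of original size")
```

The zero-filled buffer shrinks to a tiny fraction of its size, while the random buffer comes out at roughly its original size (slightly larger, due to format overhead), which mirrors why NAND writes track host writes so closely in the SMART data above.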

Average Data Rate


The Storage Bench trace generates more than 140 GB worth of writes during testing. Obviously, this tends to penalize drives smaller than 180 GB and reward those with more than 256 GB of capacity. Speaking of which, it's not a good idea to take a trace made on a 240 GB disk and wrap it around a smaller disk, say, a 40 GB disk. Going the other way, replaying a trace recorded on a smaller disk on a larger SSD is no problem, but going from a larger disk to a significantly smaller one leads to radically different trace timing and, thus, different results.
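As a rough illustration of why capacity matters here, the sketch below compares the trace's host writes (142 GB, from the SMART table) with a few example drive sizes. It is simple arithmetic only: it ignores spare area, the gap between advertised and formatted capacity, and each controller's garbage collection, and it does not by itself explain the exact 180 GB and 256 GB thresholds mentioned above. The helper name `trace_writes_per_capacity` is hypothetical.

```python
# Host writes recorded during one trace run (SMART attribute #241 in the table above).
TRACE_HOST_WRITES_GB = 142


def trace_writes_per_capacity(capacity_gb: float,
                              writes_gb: float = TRACE_HOST_WRITES_GB) -> float:
    """How many times the trace's write volume would fill a drive of this size."""
    return writes_gb / capacity_gb


for capacity_gb in (120, 180, 256, 512):
    share = trace_writes_per_capacity(capacity_gb)
    print(f"{capacity_gb:>3} GB drive: trace writes = {share:.2f}x capacity")
```

On a 120 GB drive the trace writes more than the drive's entire capacity, whereas on a 512 GB drive it touches only a little over a quarter of it.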

The M550s show up above the M500s, but still appear mid-pack. Most of the drives ahead of Crucial's new SSD family probably need asterisks next to their model names, though. OCZ, SanDisk, and Samsung all employ technologies to boost performance with the equivalent of emulated SLC flash. Crucial, however, does not.

It's also interesting that only one drive ranked higher than the M550s uses IMFT-manufactured flash. That SSD owes its speed to OCZ's unique firmware. Otherwise, Toggle-mode DDR is the more prevalent performance-oriented interface.


ikyung: Heard rumors of Samsung planning to market the 850 with aggressive pricing this year. Would like to see Crucial and Samsung duke it out in pricing.

cryan (replying to ikyung):

They already have, IMHO. The Samsung 840 EVO is significantly cheaper than it was at launch. It and the M500 have seemed to move in lockstep. Along the way, we've seen other manufacturers follow suit. Even Intel's 530 series, which has been on the more expensive side of mainstream products, has been seen for just $140 for the 240 GB version here in the States. Regards, Christopher Ryan

venk90: INSANELY GOOD DEAL ON AMAZON! The 512 GB SSD is incorrectly listed at $169! Grab them before they change it. I ordered 20 myself! Will eBay all of them or feel bad and return them to Amazon!

cryan (quoting a reader): "I just splashed $250 (delivered to Oz) on an M500 480GB mSATA, eh, can't complain."

I hope Crucial continues to sell the M500 right where it is. The deals are just too good, and it'd be truly sad were Crucial/Micron to up the price on us. And they're not slow. I know it seems like they're subpar compared to some of the last few drives we've tested, but the reality is that most users are never going to notice the difference in speed between SSD models. The only exception is jumping from an older SATA II drive to a modern SATA III SSD. Even then, you'd need solid hardware in the system. Regards, Christopher Ryan

Ankursh287: M500 available at $240 (Amazon). Damn good drive for the price; the performance difference between the M500, M550, and 840/840 Pro won't be visible to a normal user.

Nada190: When I look at SSDs, I want price to performance, because I won't even notice a difference.

Drejeck: Specifically for gaming, which would be the best? All sorts of tricks are allowed, from tweaks to Samsung's Magician (RAM caching).

RedJaron (quoting the article): "Of course, we're in the throes of post-launch pricing. In a few weeks, it's possible that the gap between M500 and M500 will narrow."

Typo on the last page. One of those should be 550.

Happy to see Crucial with this update. I'm with a lot of people: you don't see a difference in SSD performance outside benchmarks. Give me something reasonably fast with great durability and I'm sold. With all this talk of the maturing of 20nm manufacturing, I'd love to see an M500 V2 with less overprovisioning.


gizmoguru: Hey Tom's, the chart for the Sequential Reads benchmark is labeled "Random Writes"; please correct.