
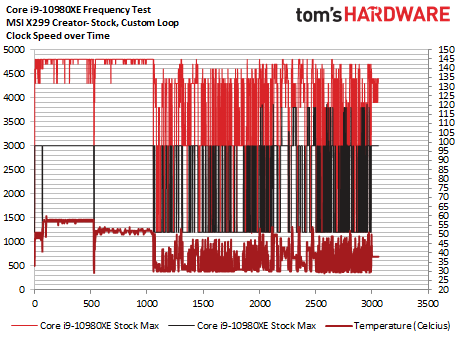

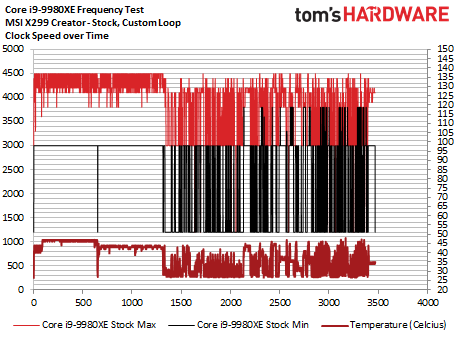

Core i9-10980XE Boost Frequency

We ran a few tests to measure the Core i9-10980XE's ability to hit its rated boost speeds. Intel's Turbo Boost Max 3.0 feature steers lightly-threaded workloads to the chip's fastest cores, in this case four of them. However, the second pair of those four cores runs 100 MHz below the listed Turbo Boost Max 3.0 frequency. Intel pioneered this technique on the desktop, and AMD has since adopted the tactic to extract more performance from its third-gen Ryzen chips.

We begin by recording the frequency of each core during a series of commonly used tests that should expose peak frequencies. The first two tests are LAME and Cinebench in single-core mode. These programs execute on only one core, which typically allows the chip to reach its peak boost frequency within its power, current, and thermal envelope. We also ran tests with intermittent "bursty" workloads: PCMark 10, Geekbench, and VRMark, in rapid succession after the first two tests.

The album above shows only the maximum and minimum frequencies recorded during each 1-second measurement interval (sampled every 100ms). Those values could come from any core, but this approach makes the charts easier to digest. We've also plotted chip temperature on the right axis (the dark red line).
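A minimal sketch of that reduction, assuming the logger emits one (timestamp in ms, core index, MHz) record per core every 100ms; the record layout and the `summarize_per_second` name are hypothetical, not the logging tool behind these charts. Because only the extremes across all cores survive each 1-second bucket, the per-core identity is discarded, which is why a charted value can come from any core.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# Hypothetical record: (timestamp in ms, core index, frequency in MHz)
Sample = Tuple[int, int, float]


def summarize_per_second(
    samples: Iterable[Sample], interval_ms: int = 1000
) -> Dict[int, Tuple[float, float]]:
    """Collapse 100ms per-core samples into (min, max) MHz for each interval.

    The core index is ignored on purpose: only the extremes across all
    cores are kept, so a reported value may come from any core.
    """
    buckets: Dict[int, List[float]] = defaultdict(list)
    for timestamp_ms, _core, mhz in samples:
        buckets[timestamp_ms // interval_ms].append(mhz)
    return {second: (min(vals), max(vals)) for second, vals in sorted(buckets.items())}


if __name__ == "__main__":
    demo = [(0, 0, 4800.0), (100, 1, 4700.0), (900, 2, 3000.0), (1000, 0, 4600.0)]
    print(summarize_per_second(demo))
```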

As you can see, both chips hit their rated turbo frequencies easily and frequently, with the Core i9-10980XE offering a 300MHz improvement over the previous-gen -9980XE in single-threaded work. We also noticed the impact of the expanded four-core Turbo Boost Max 3.0 implementation, as more cores engage in boost activity during lightly-threaded workloads.

Core i9-10980XE Overclocking

Overclocking remains a key Intel advantage in the face of AMD's brutally powerful Zen 2 processors. Intel's chips have far more overclocking headroom, are relatively easy to tune manually, and deliver a much larger performance improvement than we see with AMD processors. You'll need beefy cooling to wring peak overclocking performance from the -10980XE, but it generally offers much more overclocking headroom than the -9980XE.

We used our go-to Corsair H115i cooler for stock testing, but switched over to a custom loop with two 360mm radiators for overclocking. The -10980XE lets you set separate frequency ratios (bins) for three classes of instructions: IA/SSE, AVX2, and AVX-512.

| All-core frequency (GHz) | IA/SSE | AVX2 | AVX-512 |
|---|---|---|---|
| i9-10980XE stock | 3.8 | 3.3 | 2.8 |
| i9-10980XE overclocked | 4.8 | 4.0 | 3.3 |
| i9-9980XE overclocked | 4.4 | 3.3 | 2.8 |
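The table can also be read as CPU multipliers. The sketch below assumes the standard 100 MHz base clock (BCLK), so each 0.1 GHz equals one ratio bin; it derives each configuration's AVX2 and AVX-512 offsets relative to the IA/SSE ratio (similar to the AVX offset settings many X299 BIOSes expose) and the percentage gain of the overclocked -10980XE over stock. The dictionary layout and function names are illustrative only, not a BIOS or XTU interface.

```python
from typing import Dict

BCLK_GHZ = 0.1  # assumption: stock 100 MHz base clock

# All-core frequencies (GHz) from the table above
CONFIGS: Dict[str, Dict[str, float]] = {
    "i9-10980XE stock": {"IA/SSE": 3.8, "AVX2": 3.3, "AVX-512": 2.8},
    "i9-10980XE overclocked": {"IA/SSE": 4.8, "AVX2": 4.0, "AVX-512": 3.3},
    "i9-9980XE overclocked": {"IA/SSE": 4.4, "AVX2": 3.3, "AVX-512": 2.8},
}


def to_ratio(ghz: float) -> int:
    """Convert a frequency to a multiplier; round() absorbs float noise such as 2.8 / 0.1."""
    return round(ghz / BCLK_GHZ)


def avx_offsets(config: Dict[str, float]) -> Dict[str, int]:
    """Bins each vector instruction class runs below the IA/SSE ratio."""
    base = to_ratio(config["IA/SSE"])
    return {isa: base - to_ratio(ghz) for isa, ghz in config.items() if isa != "IA/SSE"}


def pct_gain(stock_ghz: float, oc_ghz: float) -> float:
    """Overclock gain in percent, computed on integer ratios to avoid float drift."""
    stock, oc = to_ratio(stock_ghz), to_ratio(oc_ghz)
    return (oc - stock) / stock * 100


if __name__ == "__main__":
    for name, cfg in CONFIGS.items():
        print(name, avx_offsets(cfg))
    stock, oc = CONFIGS["i9-10980XE stock"], CONFIGS["i9-10980XE overclocked"]
    for isa in stock:
        print(f"{isa}: +{pct_gain(stock[isa], oc[isa]):.1f}%")
```

Working on integer multipliers rather than raw GHz values keeps the offsets exact; for example, the overclocked -10980XE runs AVX2 8 bins and AVX-512 15 bins below its 48x IA/SSE ratio.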

We set the -10980XE's IA/SSE, AVX2, and AVX-512 ratios to 4.8, 4.0, and 3.3 GHz, respectively. We dialed these in as all-core overclock values, which means we lose a bit of steam in lightly-threaded AVX-512 workloads that boost up to 3.8GHz on two cores, but you can also assign these values on a per-core basis to recoup those losses. As the table shows, our -10980XE sample overclocked far beyond the limits we found with our -9980XE, but your mileage may vary.


Overclocking was a straightforward affair: We bumped memory speeds up to DDR4-3600 and used a 2.1V VCCIN paired with a 1.2V vCore. We also raised the mesh multiplier to 32 and increased vMesh to 1.2V, which imparts a nice performance kicker due to lower cache latencies. Finally, we uncorked all of the power limits in the BIOS. Temperatures with our custom loop peaked at 80°C during AVX2 workloads, but be aware that standard closed-loop coolers will be a limiting factor.

Even with relaxed settings, heat easily overwhelmed our H115i after small voltage increases, and that was with its fans running at maximum speed. Just as with the first- and second-gen Skylake-X chips, thermals limited our overclocking efforts before we hit the silicon's limits, despite solder-based TIM. Build your own custom loop if you plan on serious overclocking. We also advise forced-air or water cooling for the power delivery subsystem. Invest in a PSU able to deliver at least 20A on the +12V rail; MSI's BIOS warns that you need a power supply capable of providing up to 1000W through the eight-pin EPS cable. A beefy PSU is non-negotiable.
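To put those two PSU figures on the same scale, power on a rail is simply current times voltage. The helper below assumes an ideal 12.0V rail and ignores regulation tolerance and conversion losses; under that assumption, 20A works out to 240W, while the 1000W figure in MSI's warning corresponds to roughly 83A at 12V.

```python
NOMINAL_RAIL_V = 12.0  # assumption: ideal +12V rail, no tolerance or losses


def rail_watts(amps: float, volts: float = NOMINAL_RAIL_V) -> float:
    """Power delivered by a rail: P = I * V."""
    return amps * volts


def rail_amps(watts: float, volts: float = NOMINAL_RAIL_V) -> float:
    """Current a rail must supply for a given load: I = P / V."""
    return watts / volts


if __name__ == "__main__":
    print(f"20 A on +12 V -> {rail_watts(20):.0f} W")
    print(f"1000 W on +12 V -> {rail_amps(1000):.1f} A")
```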

Our overclocking technique for AMD's Ryzen 3000-series processors is drastically different. These chips offer improved single-threaded performance, but you'll lose that benefit if you overclock manually. That's because the 7nm chips can't be manually overclocked to run all cores at the single-core boost frequency. In fact, the all-core overclocking ceiling is often 200 to 300 MHz lower than the single-core boost speed, likely due to AMD's new binning strategy, which leaves Ryzen 3000 chips with a mix of faster and slower cores.

We turned to AMD's Precision Boost Overdrive (PBO) auto-overclocking feature for our battery of tests. This algorithm preserves the benefits of the single-core boost, as seen in our boost testing above, while speeding up threaded workloads. We paired our PBO-enabled configurations with our custom watercooling loop and a Phanteks full-coverage Glacier C399A TR4 waterblock to extract the most performance possible from our available cooling. As with all Zen 2-based chips, PBO performance will vary based on your cooling solution, motherboard, and firmware.

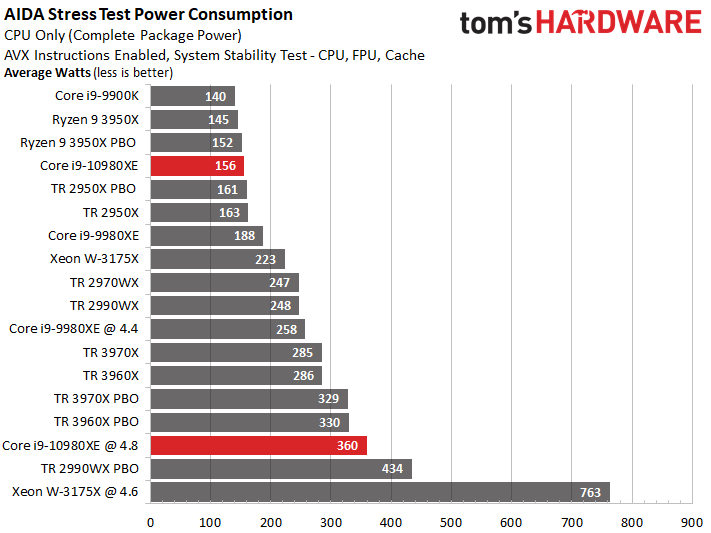

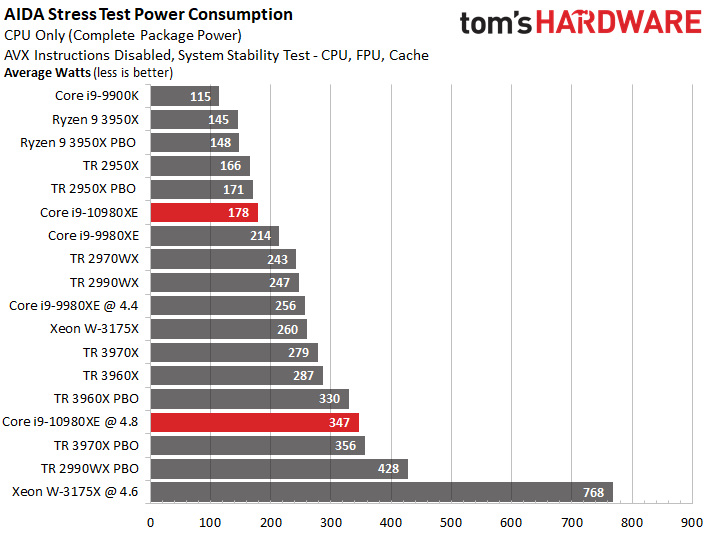

Core i9-10980XE Power Consumption

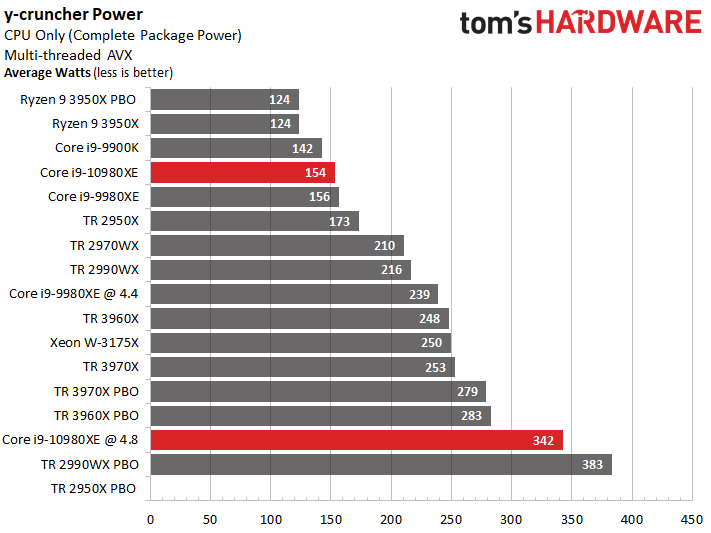

Sometimes we encounter results that simply seem too good to be true, and that is exactly what happened with the -10980XE's power measurements. We retested both the -10980XE and -9980XE on multiple motherboards to verify the power consumption deltas, and while they vary by motherboard, there is no doubt the -10980XE is significantly more power efficient. We also used Intel's XTU to monitor both chips during stress testing and noticed that the -9980XE hit current/EDP limit throttling much more frequently than the -10980XE during extended AVX workloads, indicating that motherboard vendors have a bit more power budget to play with on the Cascade Lake-X chip. This could be due to refinements Intel has made to power thresholds, but most of the secret sauce behind these power reductions is hidden in BIOS code.

In either case, the -9980XE draws ~20% more power than the -10980XE with both SSE and AVX2 instructions. We also measured peak AVX-512 power draw (not shown in the charts) at 149W for the -10980XE and 162W for the -9980XE, so the older chip draws nearly 9% more. Normally we would expect these kinds of power improvements to stem from a newer, smaller process, but Intel has definitely mastered its 14nm process, which you would expect after five long years and countless revisions.
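The percentage figures here are relative differences measured against the lower-power chip. A minimal helper, fed only with wattages quoted in this section: 162W versus 149W under AVX-512 is 13W on a 149W base, about 8.7%, and the idle readings reported later in this section (28.8W versus 26.5W) work out to a similar ~8.7% gap.

```python
def pct_more(higher: float, lower: float) -> float:
    """How much more `higher` is than `lower`, as a percentage of `lower`."""
    return (higher - lower) / lower * 100


if __name__ == "__main__":
    print(f"AVX-512 peak: {pct_more(162, 149):.1f}% more for the -9980XE")
    print(f"Idle: {pct_more(28.8, 26.5):.1f}% more for the -9980XE")
```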

However, those large deltas in stress tests don't always carry over to real-world workloads. The -9980XE drew only 2W less than the -10980XE during the y-cruncher workload, but the -10980XE was ~9% faster in that test, indicating that it is more efficient. Power efficiency gains will definitely vary by workload.
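Why a chip that draws slightly more power can still be more efficient comes down to energy, which is power multiplied by time. This sketch rests on two assumptions: "~9% faster" means 9% more throughput (runtime divided by 1.09), and the 2W gap holds for the whole run. Under those assumptions, the faster chip uses less energy per run whenever the slower chip's average draw exceeds 2 / 0.09, or about 22W, which is below even the idle figures reported later in this section. The 200W and 100-second baseline in the usage lines are placeholders, not measured values.

```python
def energy_joules(avg_watts: float, runtime_s: float) -> float:
    """Energy for one run: E = P * t."""
    return avg_watts * runtime_s


def break_even_watts(extra_watts: float, speedup: float) -> float:
    """Baseline draw above which the faster chip uses less energy per run.

    The faster chip draws (P + extra) for t / speedup seconds, so it wins when
    (P + extra) / speedup < P, which rearranges to P > extra / (speedup - 1).
    """
    return extra_watts / (speedup - 1)


if __name__ == "__main__":
    baseline_w, baseline_s = 200.0, 100.0  # hypothetical placeholders
    older = energy_joules(baseline_w, baseline_s)
    newer = energy_joules(baseline_w + 2, baseline_s / 1.09)
    print(f"newer/older energy: {newer / older:.3f}")
    print(f"break-even baseline: {break_even_watts(2, 1.09):.1f} W")
```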

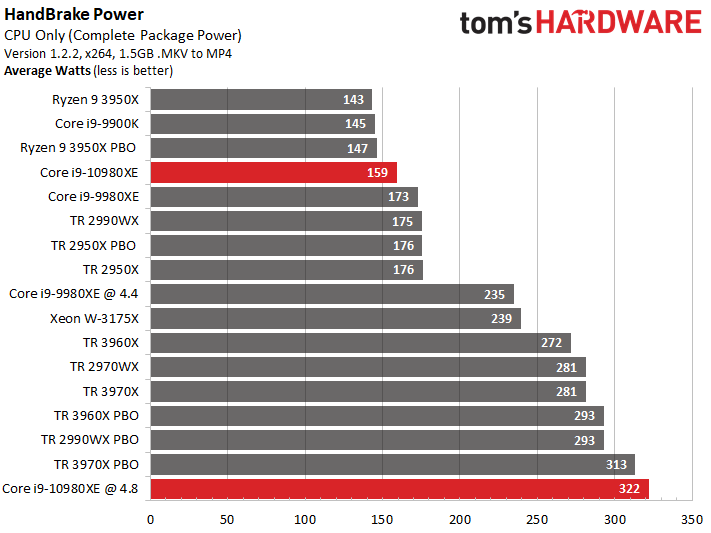

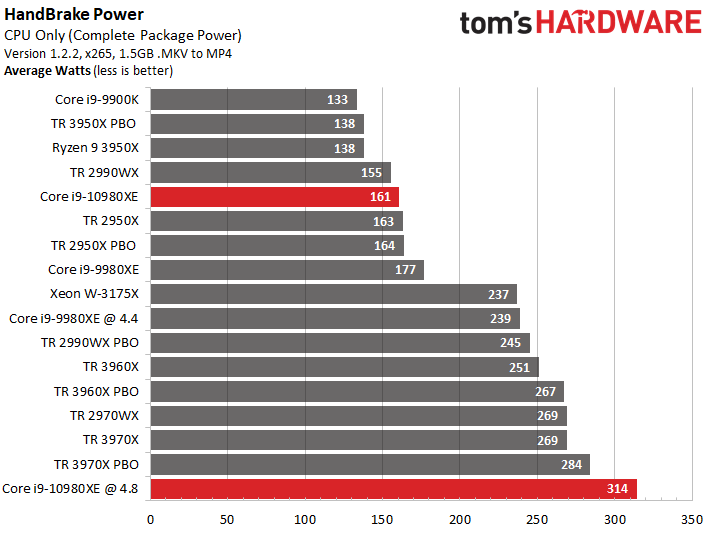

Flipping over to the AVX-enabled HandBrake tests, the -10980XE drew 16 fewer watts in the x265 workload, which has a heavier mix of AVX instructions than the x264 test. In x264, the -10980XE drew 14 fewer watts.

At idle, the -10980XE measured 26.5W, compared to the -9980XE's 28.8W.

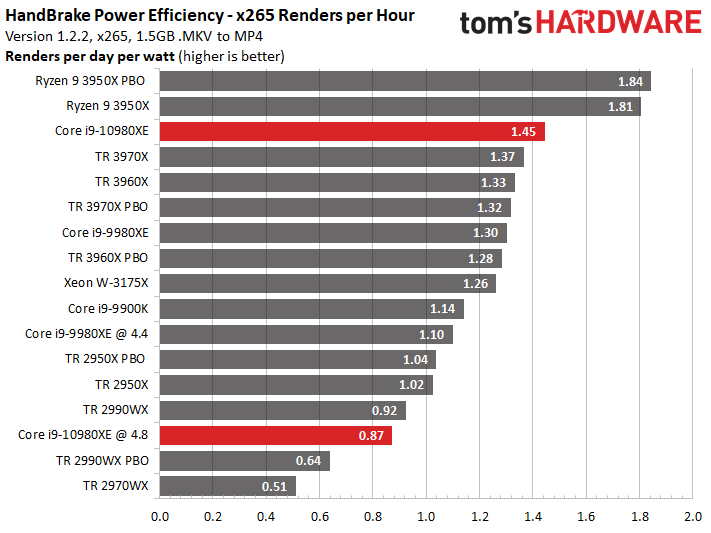

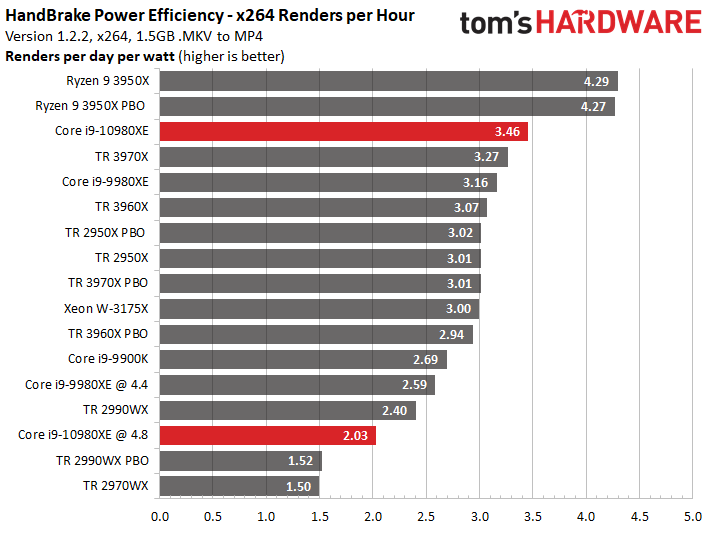

These improvements are impressive, but a glance at the Ryzen 9 3950X reveals the power efficiency of the new 7nm process, albeit paired with a 12nm I/O die. When we compare that chip to the -10980XE in the final two charts, which calculate power efficiency based on performance, the 3950X tops the chart in the HandBrake tests. The -10980XE also notches an impressive efficiency gain over the -9980XE in these real-world workloads, but the more we see of the 3950X, the more we like it.
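The review doesn't spell out the exact formula behind its efficiency charts, so treat this as one common approach rather than the method used for them: divide a throughput metric (for example, HandBrake frames per second) by average power draw, then sort. The chip names and numbers in the usage lines are placeholders, not results from this review.

```python
from typing import Dict, List, Tuple


def rank_by_efficiency(results: Dict[str, Tuple[float, float]]) -> List[Tuple[str, float]]:
    """Rank chips by work per watt.

    `results` maps a chip name to (throughput, average watts), e.g. (fps, W).
    Returns (name, throughput per watt) pairs, most efficient first.
    """
    scores = [(name, throughput / watts) for name, (throughput, watts) in results.items()]
    return sorted(scores, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    placeholder = {"chip_a": (30.0, 150.0), "chip_b": (40.0, 250.0), "chip_c": (45.0, 180.0)}
    for name, fps_per_watt in rank_by_efficiency(placeholder):
        print(f"{name}: {fps_per_watt:.3f} fps/W")
```

A metric like this rewards finishing work quickly, so a chip can top the efficiency chart even if it is not the lowest-power part in the raw power charts.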

By virtue of its drastically lower price point, the -10980XE doesn't compete directly with the 3970X and 3960X, but it is more power efficient. There is some overhead for AMD's Threadripper chips due to a larger 12nm central I/O die and the Infinity Fabric that it uses to tie together the four chiplets, but they are even more power efficient than the eight-core -9900K with its monolithic die, which is impressive. Also, a glance at the 2990WX and 2970WX in the efficiency charts really highlights the transformational gen-on-gen efficiency improvement.

Test Setup

| Platform | Components |
|---|---|
| AMD Socket sTRX4 (TRX40) | Threadripper 3970X, 3960X |
| | MSI Creator TRX40 |
| | 4x 8GB G.Skill FlareX DDR4-3200 - Stock: DDR4-3200, OC: DDR4-3600 |
| Intel Socket 2066 (X299) | Core i9-10980XE, Core i9-9980XE |
| | MSI Creator X299 |
| | 4x 8GB G.Skill FlareX DDR4-3200 - Stock: DDR4-2933, OC: DDR4-3600 |
| AMD Socket AM4 (X570) | AMD Ryzen 9 3950X |
| | MSI MEG X570 Godlike |
| | 2x 8GB G.Skill FlareX DDR4-3200 - Stock: DDR4-3200, OC: DDR4-3600 |
| Intel LGA 3647 (C621) | Intel Xeon W-3175X |
| | ROG Dominus Extreme |
| | 6x 8GB Corsair Vengeance RGB DDR4-2666 - Stock: DDR4-2666, OC: DDR4-3600 |
| AMD Socket SP3 (TR4) | Threadripper 2990WX, 2970WX |
| | MSI MEG X399 Creation |
| | 2x 8GB G.Skill FlareX DDR4-3200 - Stock: DDR4-2933, OC: DDR4-3466 |
| Intel LGA 1151 (Z390) | Intel Core i9-9900K |
| | MSI MEG Z390 Godlike |
| | 2x 8GB G.Skill FlareX DDR4-3200 - Stock: DDR4-2666, OC: DDR4-3600 |
| All Systems | Nvidia GeForce RTX 2080 Ti |
| | 2TB Intel DC4510 SSD |
| | EVGA Supernova 1600 T2, 1600W |
| | Windows 10 Pro (1903 - All Updates) |
| Cooling | Corsair H115i, Enermax Liqtech 360 TR4 II, Custom Loop |


Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.


Ful4n1t0c0sme: Games benchmarks on a non-gamer CPU make no sense. Please do compiling benchmarks and other stuff that makes sense.

And please stop using Windows to do that.

Pat Flynn: Ful4n1t0c0sme said: Games benchmarks on a non gamer CPU. There is no sense. Please do compiling benchmarks and other stuff that make sense.

And please stop using Windows to do that.

While I agree that some Linux/Unix benchmarks should be present, the inclusion of gaming benchmarks helps not only prosumers, but game developers as well. It'll let them know how the CPU handles certain game engines, and whether or not they should waste tons of money on upgrading their dev teams' systems.

Re: I used to build systems for Bioware...

Paul Alcorn: Ful4n1t0c0sme said: Games benchmarks on a non gamer CPU. There is no sense. Please do compiling benchmarks and other stuff that make sense.

And please stop using Windows to do that.

Intel markets these chips at gamers, so we check the goods.

- 9 game benchmarks
- 28 workstation-focused benchmarks
- 40 consumer-class application tests
- boost testing
- power/thermal testing, including efficiency metrics
- overclocking testing/data

I'm happy with that mix.

IceQueen0607: Disclaimer: I badly want to dump Intel and go AMD. But are the conditions right?

The AMD 3950X has 16 PCIe lanes, right? So for those of us who have multiple adapters such as RAID cards, USB or SATA port adapters, 10G NICs, etc, HEDT is the only way to go.

Someone once told me "No one in the world needs more than 16 PCIe lanes, that's why mainstream CPUs have never gone over 16 lanes". If that were true, HEDT CPUs would not exist.

So we can say the 3950X destroys the Intel HEDT lineup, but only if you don't have anything other than ONE graphics card. As soon as you add other devices, you're blown.

The 3970X is $3199 where I am. That will drop by $100 by 2021.

The power consumption of 280w will cost me an extra $217 per year per PC. There are 3 HEDT PCs, so an extra $651 per year.

AMD: 1 PC @ 280w for 12 hours per day for 365 days at 43c per kilowatt hour = $527.35

Intel: 1 PC @ 165w for 12 hours per day for 365 days at 43c per kilowatt hour = $310.76

My 7900X is overclocked to 4.5GHz on all cores. Can I do that with any AMD HEDT CPU?

In summer the ambient temp here is 38 - 40 degrees Celsius. With a 280mm cooler and 11 case fans my system runs 10 degrees over ambient on idle, so 50c is not uncommon during the afternoons on idle. Put the system under load it easily sits at 80c and is very loud.

With a 280w CPU, how can I cool that? The article says that "Intel still can't deal with heat". Errr... Isn't 280w going to produce more heat than 165w. And isn't 165w much easier to cool? Am I missing something?

I'm going to have to replace motherboard and RAM too. That's another $2000 - $3000. With Intel my current memory will work and a new motherboard will set me back $900.

Like I said, I really want to go AMD, but I think the heat, energy and changeover costs are going to be prohibitive. PCIe4 is a big draw for AMD as it means I don't have to replace again when Intel finally gets with the program, but the other factors I fear are just too overwhelming to make AMD viable at this stage.

Darn it Intel is way cheaper when looked at from this perspective.
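The running-cost arithmetic in the comment above can be reproduced in a few lines. Like the comment, this sketch treats each chip's TDP rating (280W vs. 165W) as a constant draw for 12 hours a day, which is an assumption: real consumption depends on load, and, as a later reply points out, TDP isn't defined the same way by both vendors. At 43 cents per kWh, the figures come to about $527.35 and $310.76 per year, a gap of roughly $217 per PC.

```python
def annual_energy_cost(watts: float, hours_per_day: float, days: int, price_per_kwh: float) -> float:
    """Yearly electricity cost of a constant load: kWh = W / 1000 * hours."""
    kwh = watts / 1000 * hours_per_day * days
    return kwh * price_per_kwh


if __name__ == "__main__":
    amd = annual_energy_cost(280, 12, 365, 0.43)
    intel = annual_energy_cost(165, 12, 365, 0.43)
    print(f"280 W: ${amd:.2f}, 165 W: ${intel:.2f}, difference: ${amd - intel:.2f}")
```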

redgarl: Ful4n1t0c0sme said: Games benchmarks on a non gamer CPU. There is no sense. Please do compiling benchmarks and other stuff that make sense.

And please stop using Windows to do that.

It's over pal... done, there is not even a single way to look at it the bright way, the 3950x is making the whole Intel HEDT offering a joke.

I would have given this chip 2 stars, but we know Tom's and their double standards. The only time they cannot do it is when the data is just plain dead impossible to contest... like AnandTech described, it is a bloodbath.

I don't believe Intel will get back from this anytime soon.

Paul Alcorn: IceQueen0607 said: <snip>

The AMD 3950X has 16 PCIe lanes, right? So for those of us who have multiple adapters such as RAID cards, USB or SATA port adapters, 10G NICs, etc, HEDT is the only way to go.

<snip>

The article says that "Intel still can't deal with heat".

<snip>

I agree with the first point here, which is why we point out that Intel has an advantage there for users that need the I/O.

On the second point, can you point me to where it says that in the article? I must've missed it. Taken in context, it says that Intel can't deal with the heat of adding more 14nm cores in the same physical package, which is accurate if it wants to maintain a decent clock rate.

ezst036: I'm surprised nobody caught this from the second paragraph of the article.

Intel's price cuts come as a byproduct of AMD's third-gen Ryzen and Threadripper processors, with the former bringing HEDT-class levels of performance to mainstream 400- and 500-series motherboards, while the latter lineup is so powerful that Intel, for once, doesn't even have a response.

For twice? This is a recall of the olden days of the first-gen slot-A Athlon processors. Now I'm not well-versed in TomsHardware articles circa 1999, but this was not hard to find at all:

Coppermine's architecture is still based on the architecture of Pentium Pro. This architecture won't be good enough to catch up with Athlon. It will be very hard for Intel to get Coppermine to clock frequencies of 700 and above and the P6-architecture may not benefit too much from even higher core clocks anymore. Athlon however is already faster than a Pentium III at the same clock speed, which will hardly change with Coppermine, and Athlon is designed to go way higher than 600 MHz. This design screams for higher clock speeds! AMD is probably for the first time in the very situation that Intel used to enjoy for such a long time. AMD might already be able to supply Athlons at even higher clock rates right now (650 MHz is currently the fastest Athlon), but there is no reason to do so.

https://www.tomshardware.com/reviews/athlon-processor,121-16.html

Intel didn't have a response back then either.

bigpinkdragon286: TDP is the wrong way to directly compare an Intel CPU with an AMD CPU. Neither vendor measures TDP in the same fashion so you should not compare them directly. On the most recent platforms, per watt consumed, you get more work done on the new AMD platform, plus most users don't have their chips running at max power 24/7, so why would you calculate your power usage against TDP even if it were comparable across brands?

IceQueen0607 said: Disclaimer: I badly want to dump Intel and go AMD. But are the conditions right?

<snip>

Also, your need to have all of your cores clocked to a particular, arbitrarily chosen speed is a less than ideal metric to use if speed is not directly correlated to completed work, which after all is essentially what we want from a CPU.

If you really need to get so much work done that your CPU runs at its highest power usage perpetually, the higher cost of the power consumption is hardly going to be your biggest concern.

How about idle and average power consumption, or completed work per watt, or even overall completed work in a given time-frame, which make a better case about AMD's current level of competitiveness.

Crashman: Fun times. The Tualatin was based on Coppermine and went to 1.4 GHz, outclassing Willamette at 1.8GHz by a wide margin. Northwood came out and beat it, but at the same time Intel was developing Pentium M based on...guess what? Tualatin.

ezst036 said: I'm surprised nobody caught this from the second paragraph of the article.

<snip>

And then Core came out of Pentium M, etc etc etc and it wasn't long before AMD couldn't keep up.

Ten years we waited for AMD to settle the score, and it's our time to enjoy their time in the sun.

IceQueen0607: PaulAlcorn said: I agree with the first point here, which is why we point out that Intel has an advantage there for users that need the I/O.

On the second point, can you point me to where it says that in the article? I must've missed it. Taken in context, it says that Intel can't deal with the heat of adding more 14nm cores in the same physical package, which is accurate if it wants to maintain a decent clock rate.

Yes, sorry, my interpretation was not worded accurately.

Intel simply doesn't have room to add more cores, let alone deal with the increased heat, within the same package.

My point was that Intel is still going to be easier to cool producing only 165w vs AMD's 280w.

How do you calculate the watts, or heat, for an overclocked CPU? I'm assuming the Intel is still more overclockable than the AMD, so given the 10980XE's base clock of 3.00GHz, I wonder if I could still overclock it over 4.00GHz. How much heat would it produce then compared to the AMD?

Not that I can afford to spend $6000 to upgrade to the 3970X or $5000 to upgrade to the 3960X... And the 3950X is out because of PCIe lane limitations.

It looks like I'm stuck with Intel, unless I save my coins to go AMD. Makes me sick to the pit of my stomach :)