Tom's Talks Moorestown With The Father Of Centrino

With its new Atom platform, Moorestown, Intel has made a lot of exciting claims and hinted at significant changes in our computing future. All good stuff, but we need more answers. To find them, we sat down with Intel’s godfather of ultra-mobility.

The Need For SoC

TH: We’ve had system-on-chip designs for many years, obviously. Why did it take Intel until now to come out with its own SoC?

TT: That’s kind of a complex question. Let’s talk about the notebook, which was my last platform before I worked on this one. The notebook platform has very little motivation to shrink in size, especially in desktop replacements. Several years ago, we were trying to get the desktop side to adopt a lot of the notebook’s capabilities. [Ed.: Presumably, this refers to the mobile-on-desktop effort back from the Core Duo days.]

But the desktop industry and users had very little motivation because of the developed component ecosystem for power delivery, heatsinks, the whole nine yards needed to build a desktop. And a similar thing has evolved around the notebook. The needs for space and capabilities are very different between these platforms. When not driven by the constraints of size and capability, these platforms can use existing components and programmable logic to do, for example, video decode and encode. There’s little motivation for them to move to an SoC-like environment.

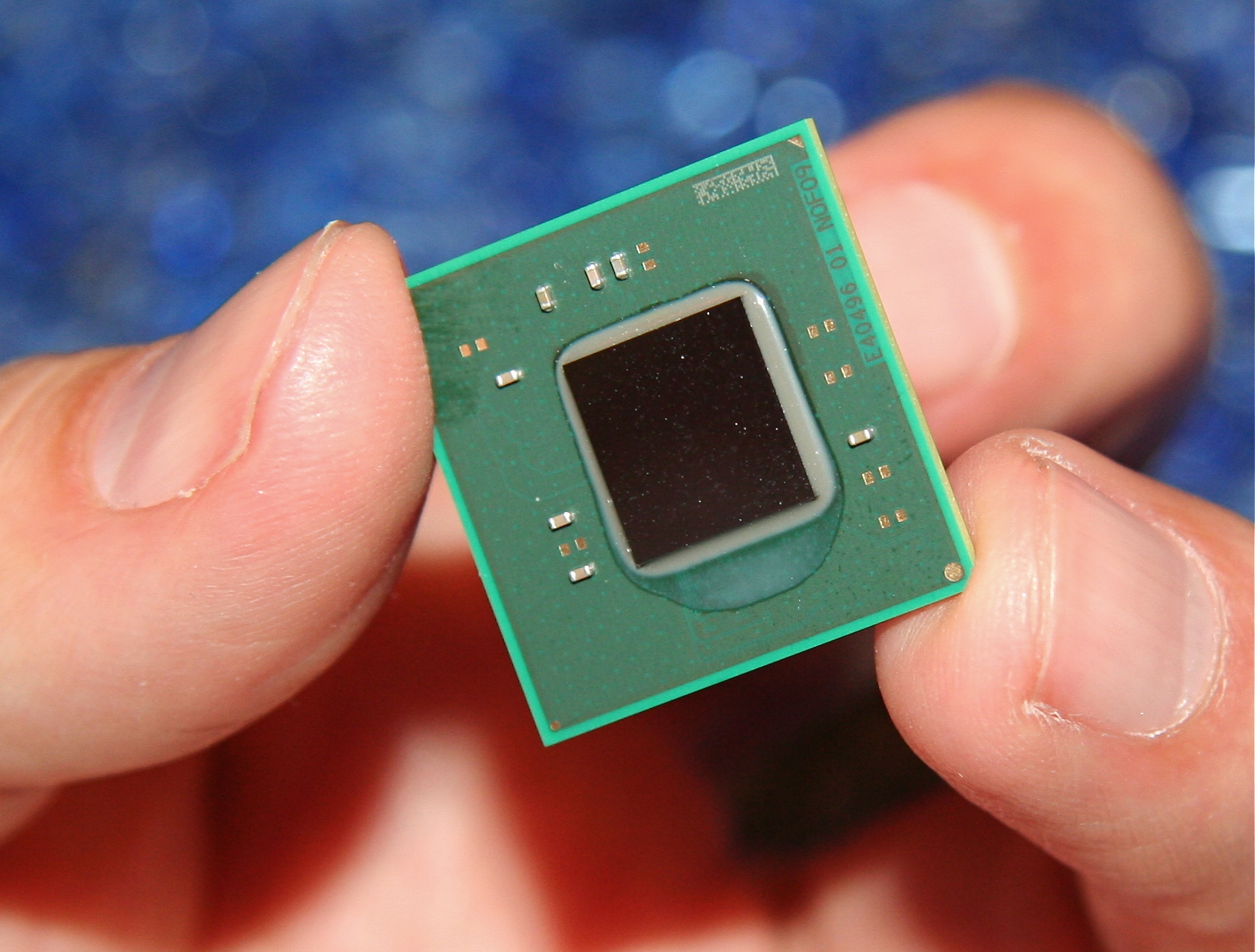

But when you come to such things as handheld devices, set-top boxes, and embedded systems, all of these have size and power constraints. Constraint is the mother of necessity that drove us to designing SoCs. We needed a such-and-such size chip with certain capabilities and power—high performance CPU, memory controller, graphics controller, video controller, decoder/encoder. You have to wire all of those things up into that limited real estate. That’s what drove us into building an SoC for this class of devices—need more than anything else.

Also, I should add that this wasn’t a focus area for us 10 years ago. Phone was not an Intel priority segment until we decided to take on the battle. When we saw the phone becoming more of a handheld computer, then it became a focus segment and put us down this road.

TH: Are there limits to what makes sense to integrate onto an SoC?

TT: No. It really depends on the size. For a phone, your size is constrained by the silicon area and what will be allowed on the footprint of the phone device. If it’s a 12 x 12 mm chip, we’ll try and put as many things as possible in it.

whitecrowro "Why are we all here today? What is the meaning of Moorestown?

Ticky Thakkar: Our vision was to.."

- pardon me, but all this naming sound like a Star Trek interview, on Tau Cygna (M class planet in Orion Nebula).

cmcghee358 It would be nice to see Intel take a jab at discrete desktop graphics. If anything just to provide more competition for the consumer.

liquidsnake718 It would be nice to see that Zune HD ver 2.0 or even 3.0 with an updated Moorestown and a better Nvidia chip than the ion or ion2, with capabilities of at least 2.0ghz and 2gb of ram all the size of the zune.... imagine with 48hours on music, and 5 hours of video, this will only get larger as time goes by.... hopefully in a year or a year and a half we can see some TRUE iphone competition now with the new windows mobile out! We just need more apps

Onus It never occurred to me to want an iPhone, but I definitely see one of these in my future.

matt314 (quoting cmcghee358: "It would be nice to see Intel take a jab at discrete desktop graphics. If anything just to provide more competition for the consumer.") ...discrete desktop graphics is a pretty niche market. Without any experience in the field or specialized engineers, it would cost them alot of money in R&D, and they would not be able to beat ATI or nVidia (neither in performance nor sales)

cknobman Maybe its just me but I read the entire thing and Mr. Shreekant (Ticky) Thakkar came off as a arrogant ********.

Onus (quoting cknobman: "Maybe its just me but I read the entire thing and Mr. Shreekant (Ticky) Thakkar came off as a arrogant dickhead.") Merely disagreeing with you doesn't merit a "thumbs-down," but I didn't get that impression. Confidence, maybe; his experience no doubt backs that up, but I didn't find him arrogant. I liked how he called BS on the FUD.

zodiacfml (quoting cknobman: "Maybe its just me but I read the entire thing and Mr. Shreekant (Ticky) Thakkar came off as a arrogant dickhead.") I read his comments carefully and found that those were carefully chosen words. Confidence is very much needed to get the support everyone while remaining factual.

In summary, I expect their device to be better performing than anything else in the future at the expense of a huge and heavy battery to power the Atom and the Huge screen making use of excess performance.

cjl (quoting zodiacfml: "I read his comments carefully and found that those were carefully chosen words. Confidence is very much needed to get the support everyone while remaining factual. In summary, I expect their device to be better performing than anything else in the future at the expense of a huge and heavy battery to power the Atom and the Huge screen making use of excess performance.") Did you read the article? One of the points raised was that the battery life should be just fine, contrary to many people's assumptions.

eyemaster He knows his product, the targets to meet and what they have accomplished. I'm sure they experimented on competing devices too. The man knows that they have a great product in their hands right now that beats all the others. That makes him confident, not arrogant.