Intel launches Gaudi 3 accelerator for AI: Slower than Nvidia's H100 AI GPU, but also cheaper

Intel's next-generation AI accelerator is finally here.

Update 10/1/2024: Added more information on Intel's Gaudi 3 and corrected supported data formats.

Intel formally introduced its Gaudi 3 accelerator for AI workloads today. The new processors are slower than Nvidia's popular H100 and H200 GPUs for AI and HPC, so Intel is betting the success of its Gaudi 3 on its lower price and lower total cost of ownership (TCO).

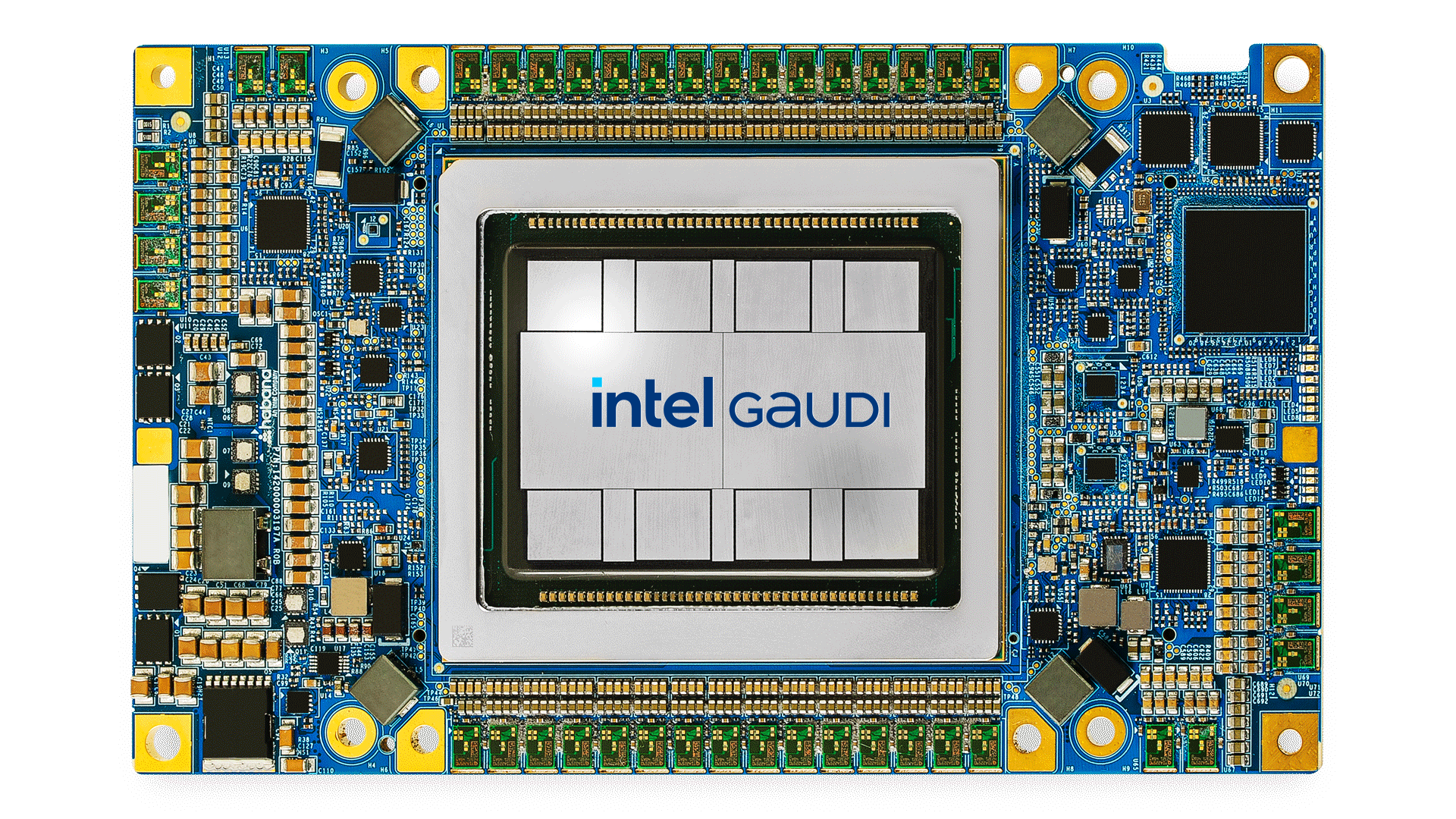

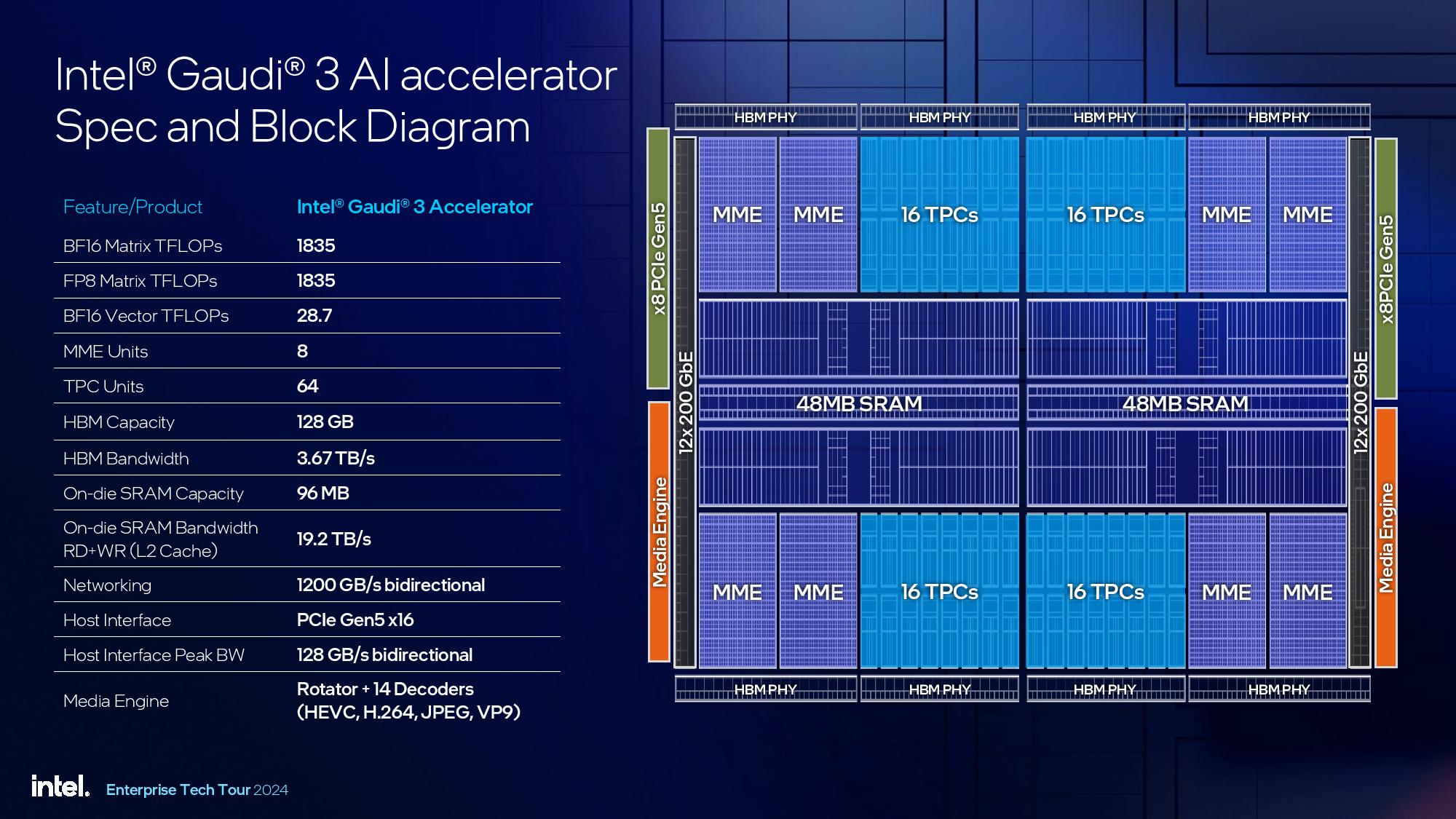

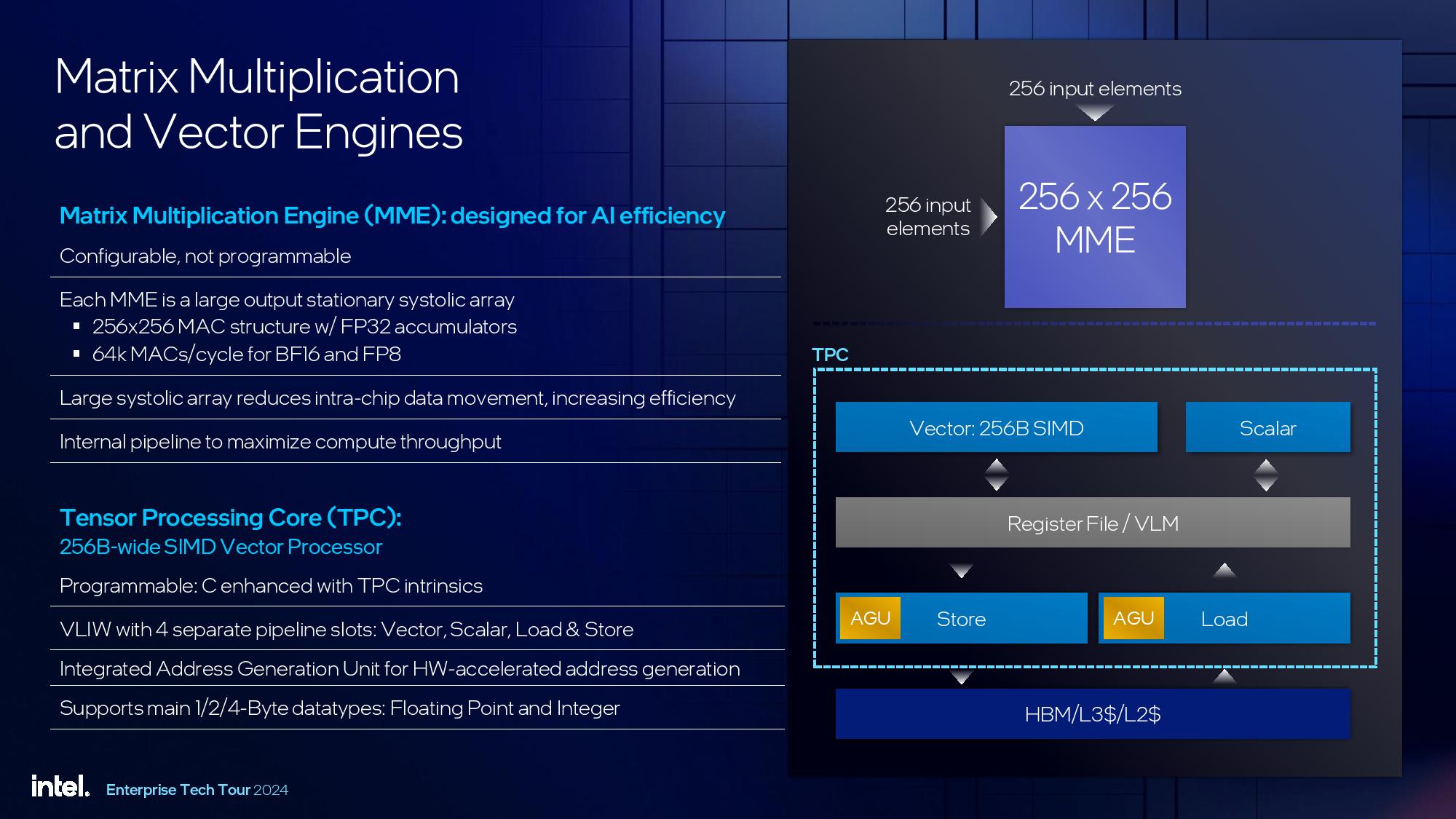

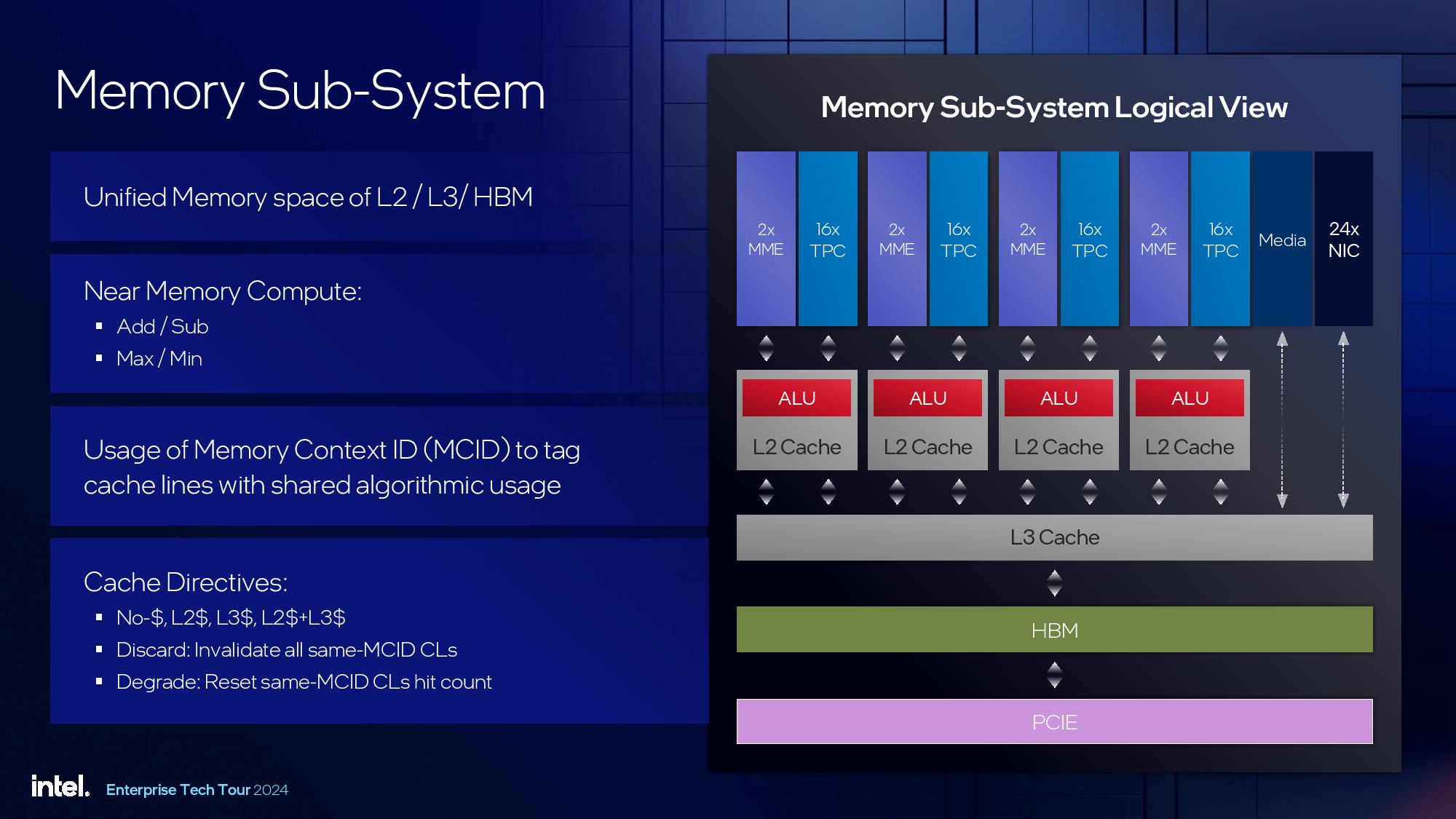

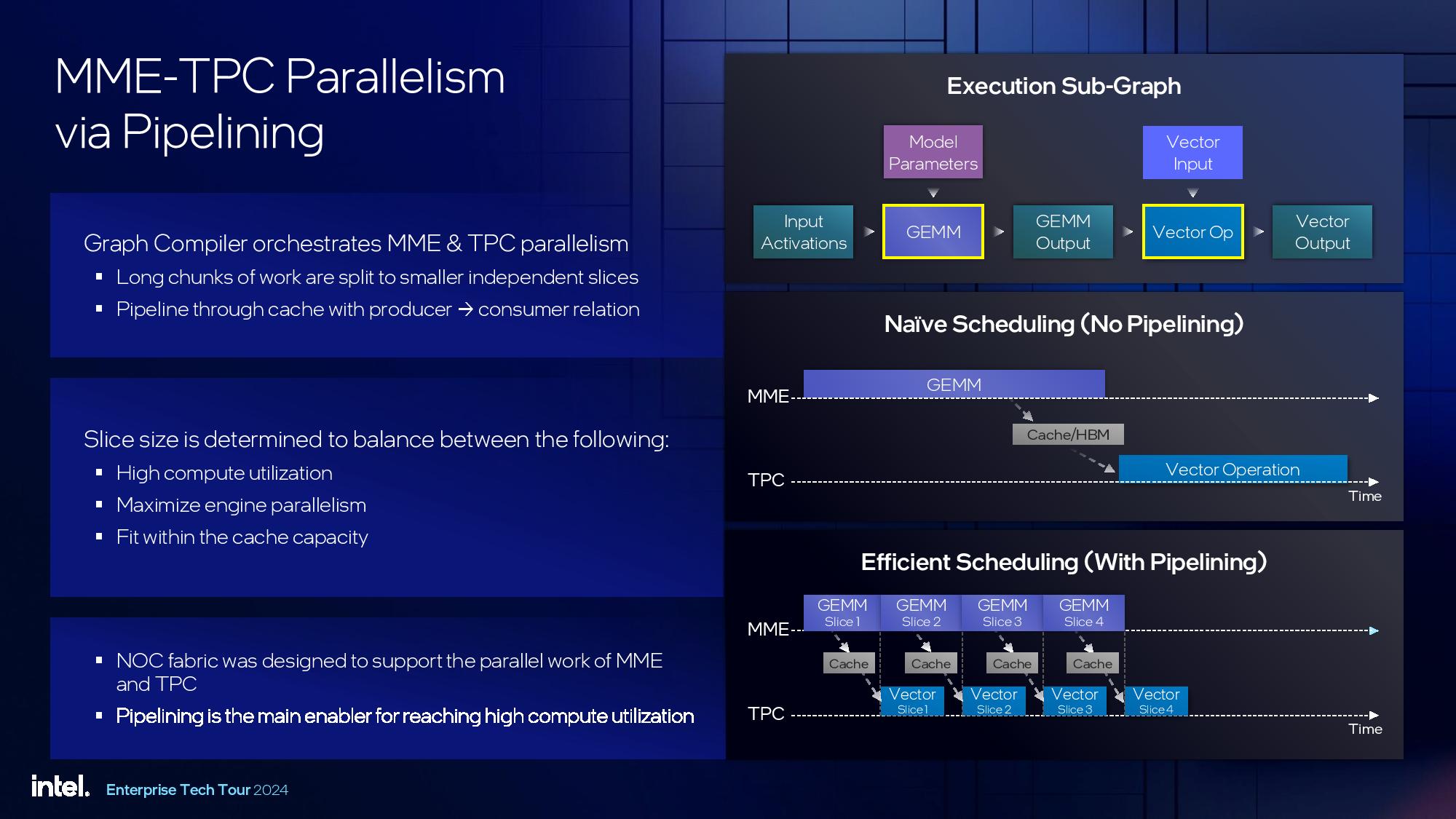

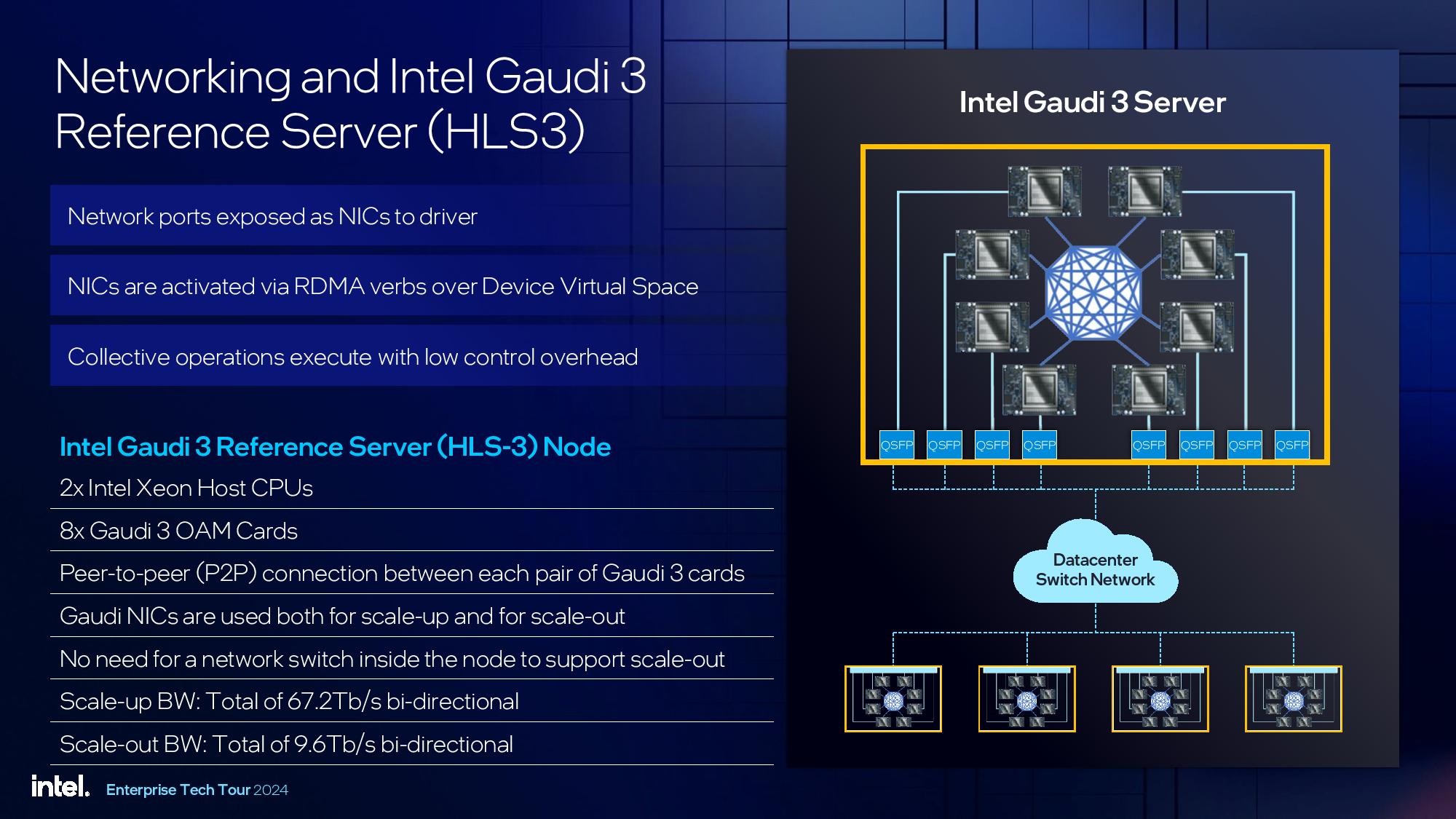

Intel's Gaudi 3 processor uses two chiplets that pack 64 tensor processor cores (TPCs, 256-byte-wide vector processors), eight matrix multiplication engines (MMEs, each a 256x256 MAC structure with FP32 accumulators), and 96MB of on-die SRAM cache with 19.2 TB/s of bandwidth. Gaudi 3 also integrates twenty-four 200 GbE networking interfaces and 14 media engines, with the latter capable of handling H.265, H.264, JPEG, and VP9 to support vision processing. The processor is accompanied by 128GB of HBM2E memory in eight memory stacks, offering 3.67 TB/s of bandwidth.

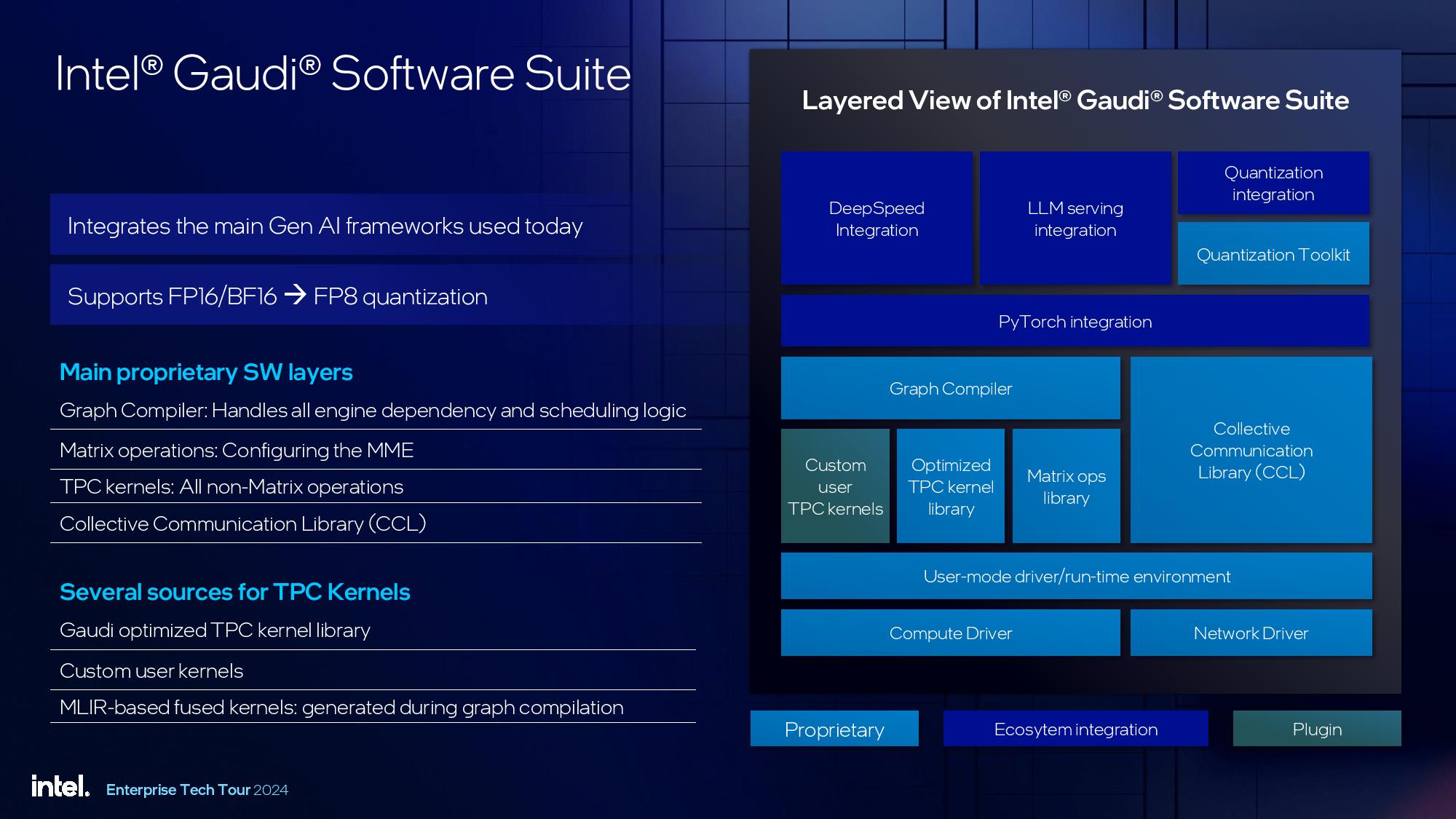

Intel's Gaudi 3 represents a massive improvement over Gaudi 2, which has 24 TPCs and two MMEs and carries 96GB of HBM2E memory. Intel did not simplify its TPCs and MMEs: the Gaudi 3 processor supports FP8, BF16, FP16, TF32, and FP32 matrix operations, as well as FP8, BF16, FP16, and FP32 vector operations.

| Type | Format | Gaudi 3 | Gaudi 2 | H100 |
| --- | --- | --- | --- | --- |
| Matrix | FP8 | 1835 TFLOPS | 865 TFLOPS | 1978.9 / 3957.8* TFLOPS |
| Matrix | BF16 | 1835 TFLOPS | 432 TFLOPS | 989.4 / 1978.9* TFLOPS |
| Matrix | FP16 | 459 TFLOPS | ? | 989.4 / 1978.9* TFLOPS |
| Matrix | TF32 | 459 TFLOPS | ? | 497.7 / 989.4* TFLOPS |
| Matrix | FP32 | 229 TFLOPS | ? | - |
| Vector | FP8 | 57.3 TFLOPS | ? | - |
| Vector | BF16 | 28.7 TFLOPS | 11 TFLOPS | 133.8 TFLOPS |
| Vector | FP16 | 28.7 TFLOPS | ? | 133.8 TFLOPS |
| Vector | FP32 | 14.3 TFLOPS | ? | 66.9 TFLOPS |

*with sparsity.

When it comes to performance, Intel says that Gaudi 3 can offer up to 1,835 BF16/FP8 matrix TFLOPS as well as up to 28.7 BF16 vector TFLOPS at around 600W TDP. On paper, Gaudi 3's BF16 matrix performance is higher than the H100's dense rate (1,835 vs 989 TFLOPS) and only slightly lower when Nvidia's hardware uses its sparsity feature (1,835 vs 1,979 TFLOPS). Its FP8 matrix performance is slightly lower than the H100's dense rate (1,835 vs 1,979 TFLOPS) and less than half of the H100's rate with sparsity (1,835 vs 3,958 TFLOPS). Its BF16 vector performance is significantly lower (28.7 vs 133.8 TFLOPS).
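To make the on-paper comparison concrete, the short Python sketch below divides Gaudi 3's peak figures by the corresponding H100 figures from the table above. The dictionary names and the `ratio` helper are illustrative assumptions, and the inputs are vendor peak numbers, so the output says nothing about real-world throughput.

```python
# Peak throughput in TFLOPS, copied from the spec table (paper numbers only).
GAUDI3 = {"bf16_matrix": 1835.0, "fp8_matrix": 1835.0, "bf16_vector": 28.7}
H100_DENSE = {"bf16_matrix": 989.4, "fp8_matrix": 1978.9, "bf16_vector": 133.8}
H100_SPARSE = {"bf16_matrix": 1978.9, "fp8_matrix": 3957.8}  # with sparsity


def ratio(gaudi_tflops: float, h100_tflops: float) -> float:
    """Return Gaudi 3 peak throughput as a multiple of the H100 figure."""
    return gaudi_tflops / h100_tflops


for metric, value in GAUDI3.items():
    line = f"{metric}: {ratio(value, H100_DENSE[metric]):.2f}x dense H100"
    if metric in H100_SPARSE:
        line += f", {ratio(value, H100_SPARSE[metric]):.2f}x sparse H100"
    print(line)
```

A ratio above 1.0 means Gaudi 3 is ahead on paper for that format; a ratio below 1.0 means the H100 is.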

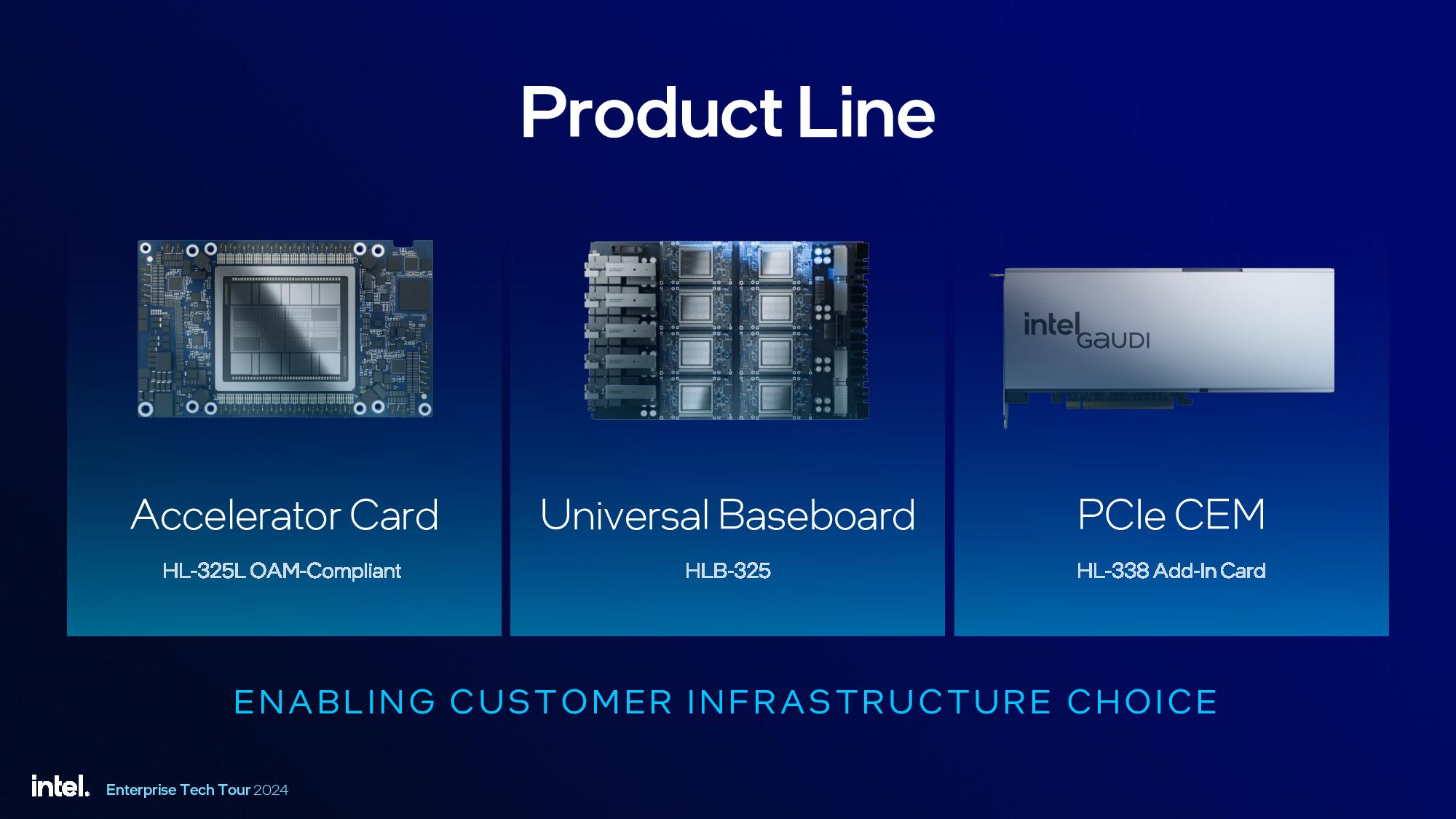

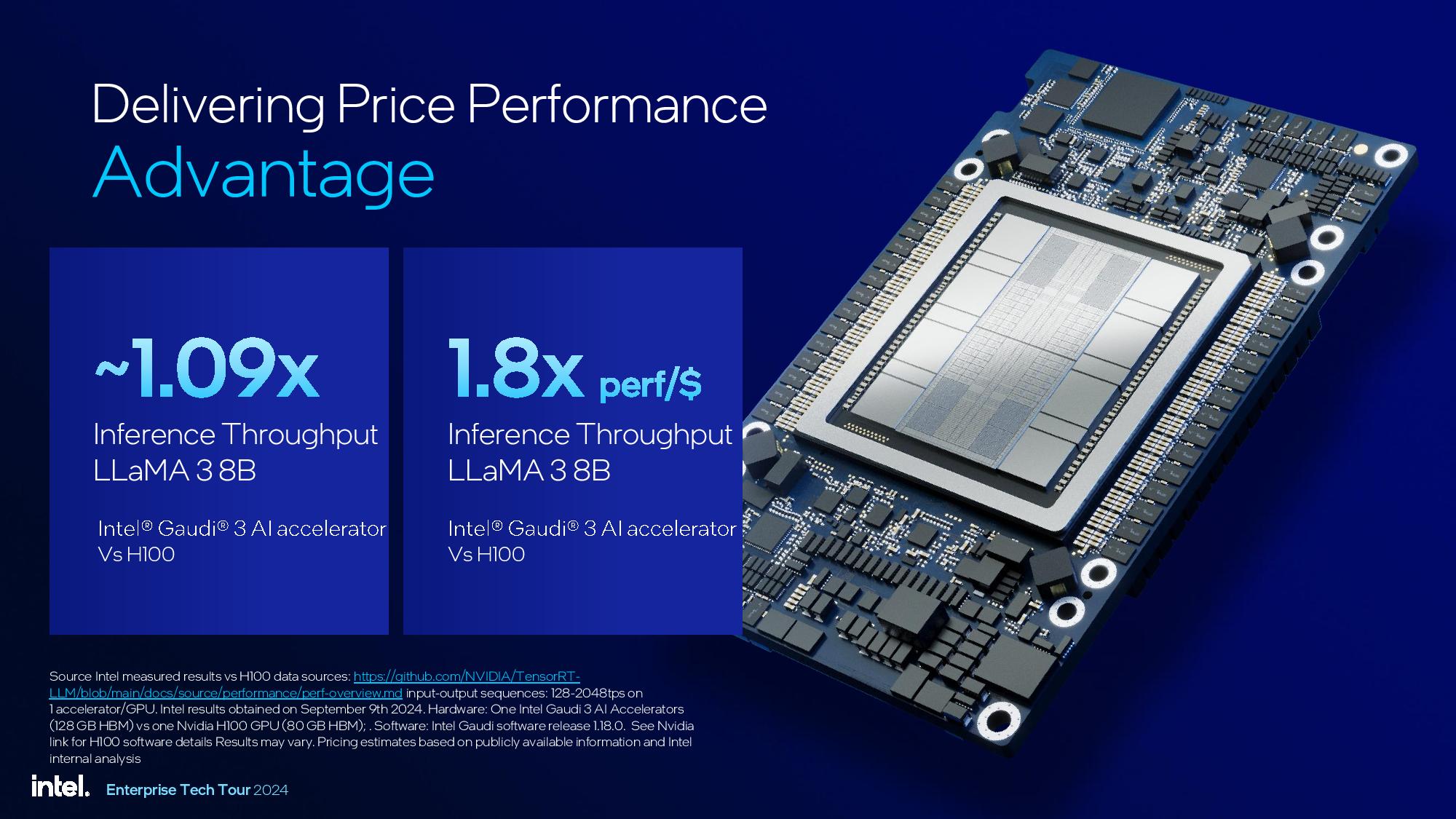

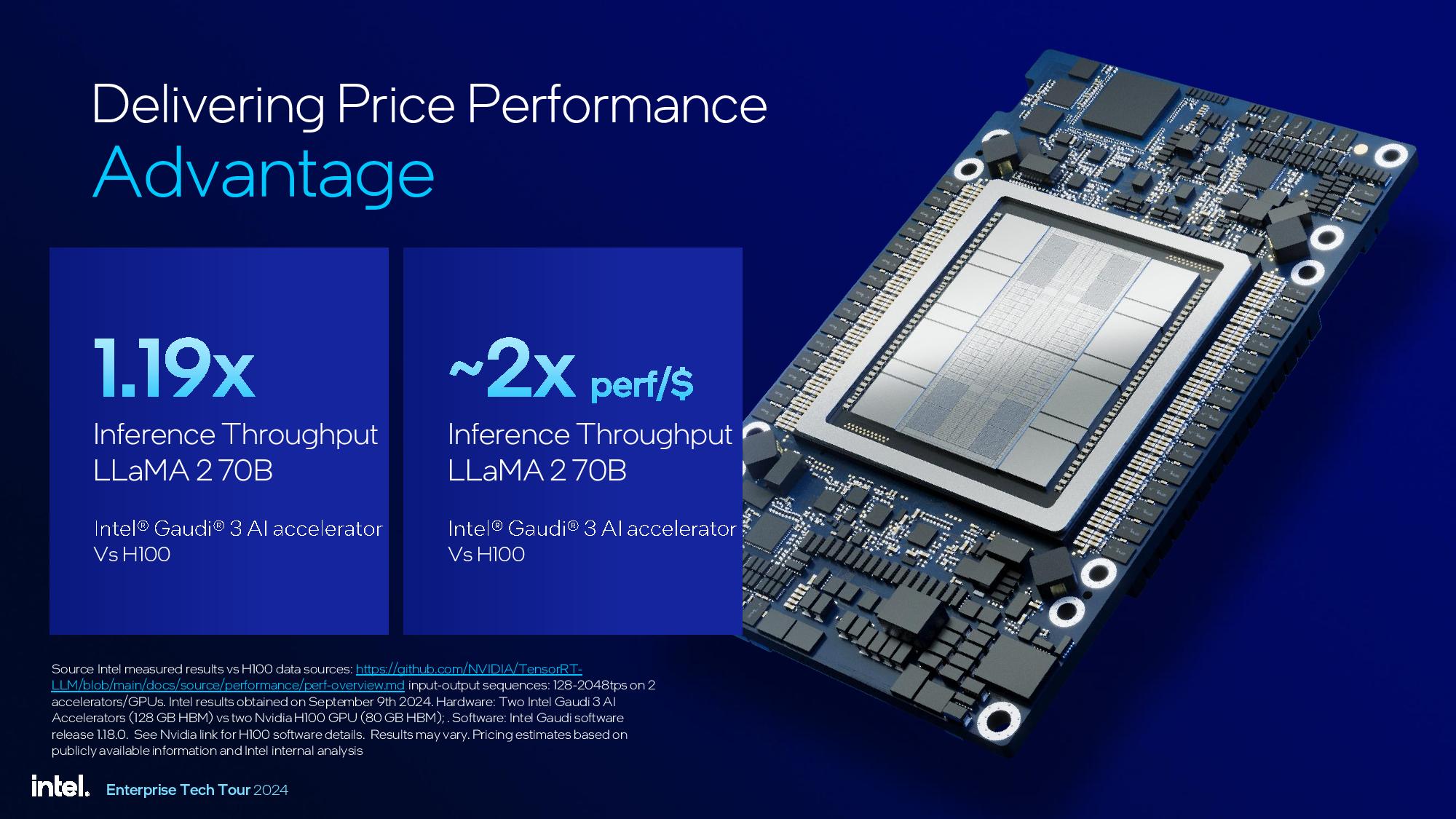

More important than the raw specs will be the actual real-world performance of Gaudi 3, which needs to compete with AMD's Instinct MI300-series and Nvidia's H100 and B100/B200 processors. Whether it can remains to be seen, as a lot depends on software and other factors. For now, Intel showed some slides claiming that Gaudi 3 can offer a significant price-performance advantage compared to Nvidia's H100.

Earlier this year, Intel indicated that an accelerator kit based on eight Gaudi 3 processors on a baseboard will cost $125,000, which means that each one will cost around $15,625. By contrast, an Nvidia H100 card is currently available for $30,678, so Intel plans to have a big price advantage over its competitor. Yet, with the potentially massive performance advantages offered by Blackwell-based B100/B200 GPUs, it remains to be seen whether the blue company will be able to maintain its advantage over its rival.
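The pricing arithmetic can be sketched in a few lines of Python. The per-accelerator price simply divides the kit price by eight (so it also absorbs the cost of the baseboard), and the dollars-per-TFLOPS figure is an illustrative metric built from peak dense BF16 matrix numbers, not one Intel or Nvidia publishes. All names here are hypothetical.

```python
KIT_PRICE_USD = 125_000       # eight-accelerator Gaudi 3 kit, per the article
ACCELERATORS_PER_KIT = 8
H100_PRICE_USD = 30_678       # H100 card price quoted in the article

GAUDI3_BF16_TFLOPS = 1835.0   # peak BF16 matrix throughput
H100_BF16_TFLOPS = 989.4      # peak dense BF16 matrix throughput


def unit_price(kit_price: float, units: int) -> float:
    """Approximate per-accelerator price from the kit price."""
    return kit_price / units


def usd_per_tflops(price: float, tflops: float) -> float:
    """Price divided by peak throughput (paper figure, not measured)."""
    return price / tflops


gaudi3_price = unit_price(KIT_PRICE_USD, ACCELERATORS_PER_KIT)
print(f"Gaudi 3: ${gaudi3_price:,.0f} per accelerator")
print(f"H100 costs {H100_PRICE_USD / gaudi3_price:.2f}x as much")
print(f"Gaudi 3: ${usd_per_tflops(gaudi3_price, GAUDI3_BF16_TFLOPS):.2f} per peak BF16 TFLOPS")
print(f"H100:    ${usd_per_tflops(H100_PRICE_USD, H100_BF16_TFLOPS):.2f} per peak BF16 TFLOPS")
```

Because these are list-style prices and peak specifications, the result is only a rough indicator; real total cost of ownership also depends on power, software, and achieved utilization.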


"Demand for AI is leading to a massive transformation in the datacenter, and the industry is asking for choice in hardware, software, and developer tools," said Justin Hotard, Intel executive vice president and general manager of the Data Center and Artificial Intelligence Group. "With our launch of Xeon 6 with P-cores and Gaudi 3 AI accelerators, Intel is enabling an open ecosystem that allows our customers to implement all of their workloads with greater performance, efficiency, and security."

Intel's Gaudi 3 AI accelerators will be available from IBM Cloud and Intel Tiber Developer Cloud. Also, systems based on Intel's Xeon 6 and Gaudi 3 will be generally available from Dell, HPE, and Supermicro in the fourth quarter, with systems from Dell and Supermicro shipping in October and machines from Supermicro shipping in December.

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


JayNor: Intel claims:

"New Gaudi 3 AI accelerators offer up to 20 percent more throughput and 2x price/performance vs H100 for inference of LLaMa 2 70B"

Findecanor: Whoever owns the rights to Antoni Gaudí's legacy, I think they should sue Intel for the use of his name for something that is intended for stealing art.

A Stoner: It can have value even being slower if it scales well and is energy efficient enough to allow more of them to work together to get the same or better overall results. I like nVidia more than Intel, but I like competition!

JayNor: hothardware says:

"According to Intel, Gaudi 3 delivers an average 50% improvement in inferencing performance with an approximate 40% improvement in power efficiency versus NVIDIA’s H100, but at a fraction of the cost."

cyrusfox: Not hard to beat Nvidia on cost, but in terms of software plug and play and performance + scalability, good luck.

Intel was forecast to do only $500 million in Gaudi 3 revenue this year, a paltry amount compared to AMD ($4.5 billion) and minuscule compared to Nvidia during this AI gold rush ($100+ billion by my count). If Gaudi is good, it needs to show it on the market and not on a press deck. Go win some mind share and market share, especially outside of China, as China is largely blocked from many of the top AI solutions thanks to US government restrictions.

Stomx: Good memory size, but no FP64, not even FP32, and 10x the price of the probably slightly slower Tenstorrent Wormhole GPU. This means it is unlikely to find good applications in HPC.

loverofGd: I kind of see your info as misinformation, because what I see from The Next Platform differs from your claims. In Intel's test, too, Intel Gaudi 3 is MUCH faster than H100. I doubt it is only for software.

Peak FP16/BF16 is much faster than H100 and even B100. Nvidia's are 8 & 14, Gaudi 3's is 14.68.

In peak FP8, H100 is 16, B100 is 28, and Intel's Gaudi 3 is 14.68 again.

In peak FP32, H100 is 4 and Gaudi 3 is 2.39.

These are the numbers that I read in The Next Platform. I added the link; please check it out below.

https://www.nextplatform.com/wp-content/uploads/2024/06/intel-computex-gaudi-vs-nvidia-tnp-table-2.jpg