Battlefield 2042 PC Benchmarks, Performance, and Settings

Probably skip ultra quality and ray tracing

Battlefield 2042 brings near-future warfare to the series, and you might just want a near-future GPU to run it at ultra settings. We'll get into the details in a moment, but pushing everything to maximum quality can cause GPUs that don't have enough VRAM to take a dirt nap. But you don't actually need to max out the settings, and unless you have one of the fastest options among the best graphics cards, like the RTX 3090, RTX 3080 Ti, or RX 6900 XT, you'll probably want to skip 4K and ray tracing.

We've tested Battlefield 2042 performance on a collection of GPUs and ran some detailed settings benchmarks to see how each of the options affects performance and image quality. We'll start with the settings, but first, let's quickly run through our testing equipment and methodology.

Our test equipment consists of a Core i9-9900K CPU with 32GB of DDR4-3600 CL16 memory, a 2TB SSD, and of course, the various GPUs we've tested. Benchmarking Battlefield 2042 is, frankly, horrible—this is pretty much the opposite of most of the things we like to see, as we discussed in how to make a good game benchmark. Of course, there isn't a built-in benchmark, which means we had to run every test manually—good for getting a true look at performance, bad for repeatability. Weather effects can also impact performance, and just the general mayhem of a 64-player or even 128-player match means there's far more variability between runs.

We've attempted to mitigate that variability by running each test multiple times, and we also tried to get a "clear" day for the battle on the Discarded map. That means if heavy clouds fill the sky (which drops performance) or a tornado shows up, we exit and restart. We also ran the game in solo/co-op mode and played against bots because we didn't want other humans wondering why one player kept running in circles around one area of the map. Even so, the margin of error is going to be slightly higher than the normal 1–2% range we'd get from a good built-in benchmark.
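To put a number on that run-to-run variability, here's a minimal Python sketch of how repeated manual passes could be summarized. The fps values are hypothetical placeholders, not our measured results, and `summarize_runs` is an illustrative helper rather than part of any tool we used.

```python
from statistics import mean, stdev


def summarize_runs(fps_runs):
    """Return (mean fps, spread as a percentage of the mean) for repeated runs."""
    avg = mean(fps_runs)
    # Sample standard deviation relative to the mean (coefficient of variation).
    spread_pct = 100.0 * stdev(fps_runs) / avg
    return avg, spread_pct


# Hypothetical average-fps results from three manual passes of the same test.
runs = [98.0, 102.0, 100.0]
avg, spread_pct = summarize_runs(runs)
print(f"mean: {avg:.1f} fps, run-to-run spread: {spread_pct:.1f}%")
```

If the spread comes out well above the 1–2% you'd expect from a scripted benchmark, that's a hint the weather or the bots interfered and the pass is worth repeating.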

Battlefield 2042 Settings Analysis

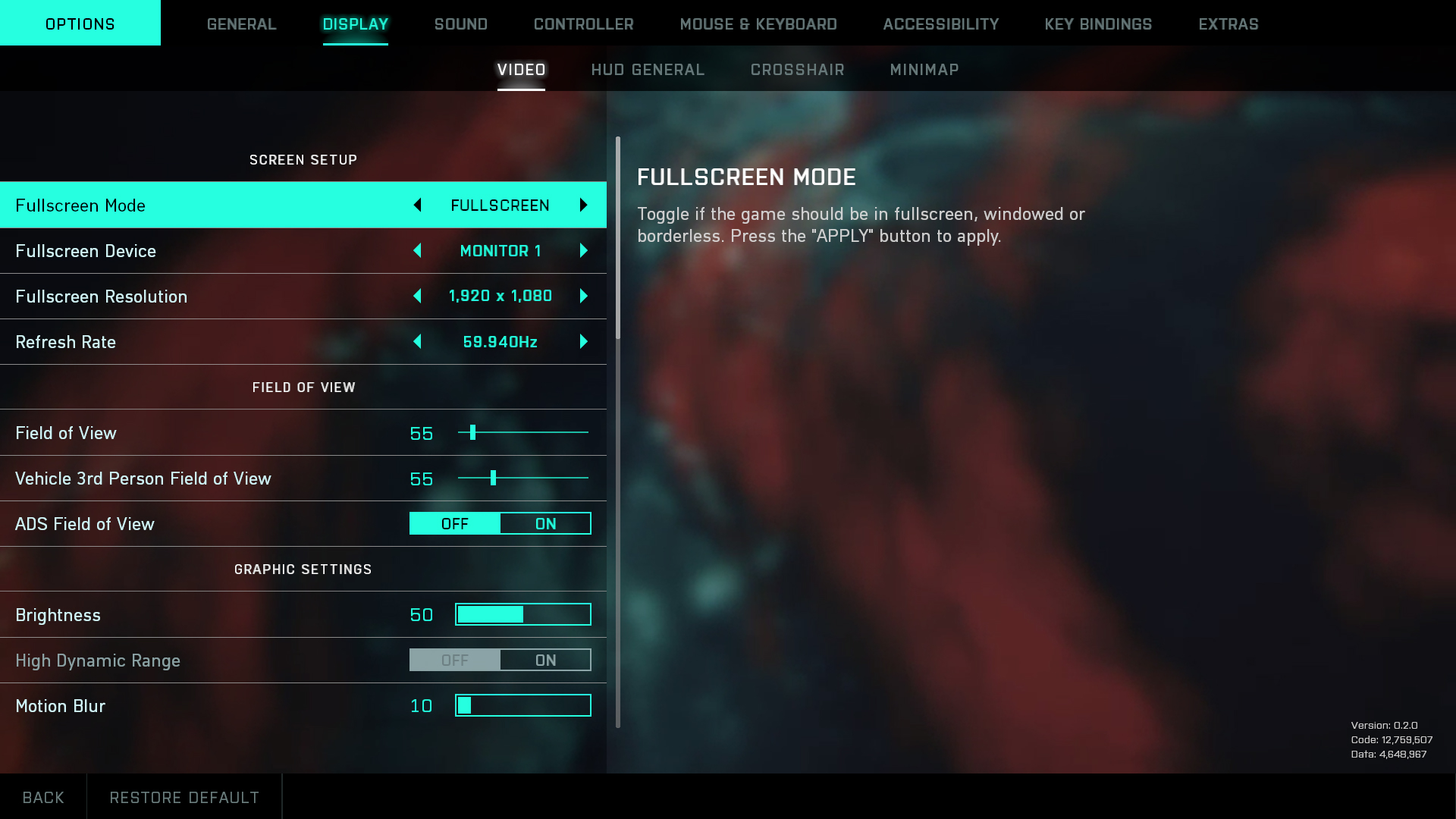

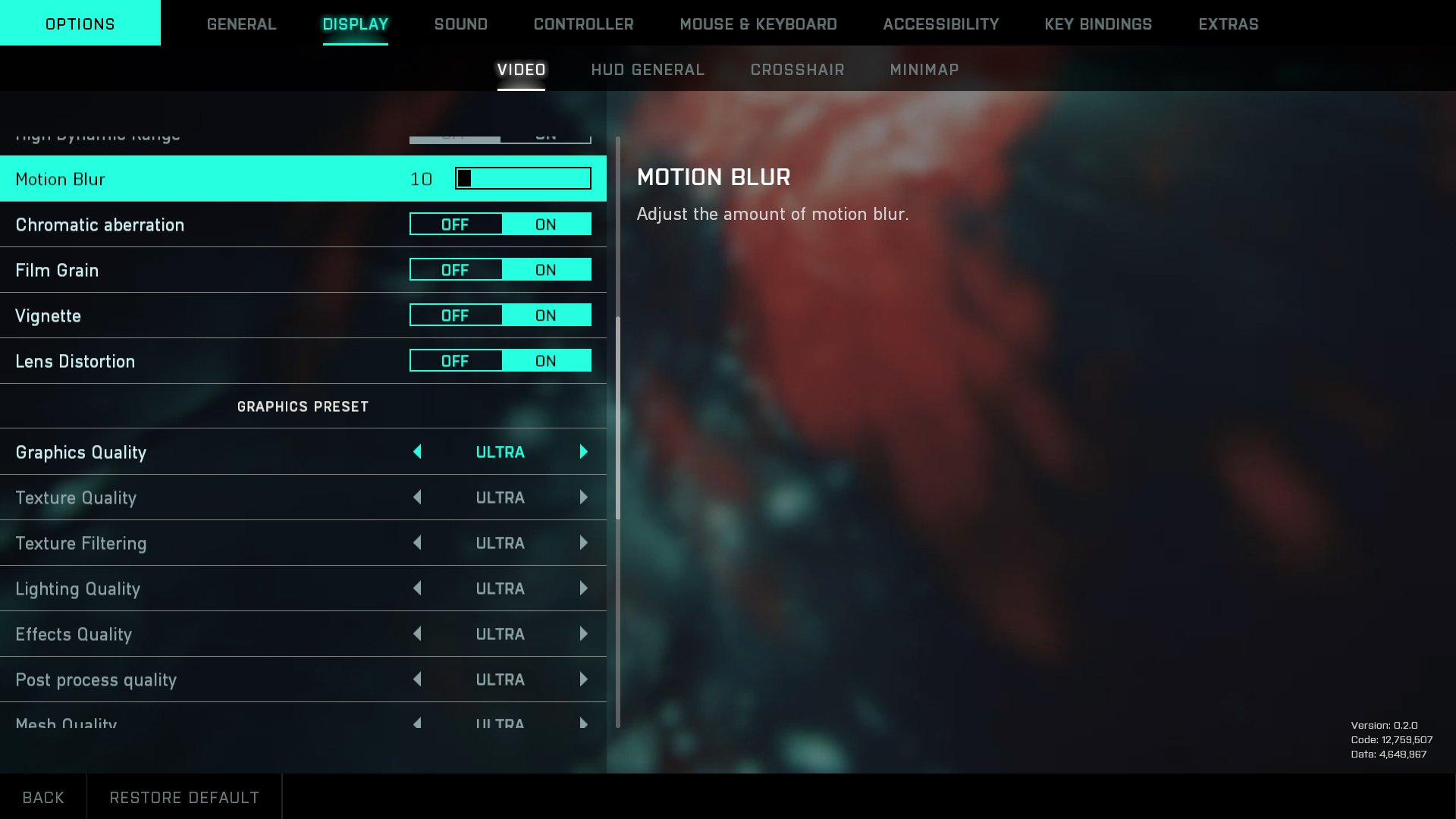

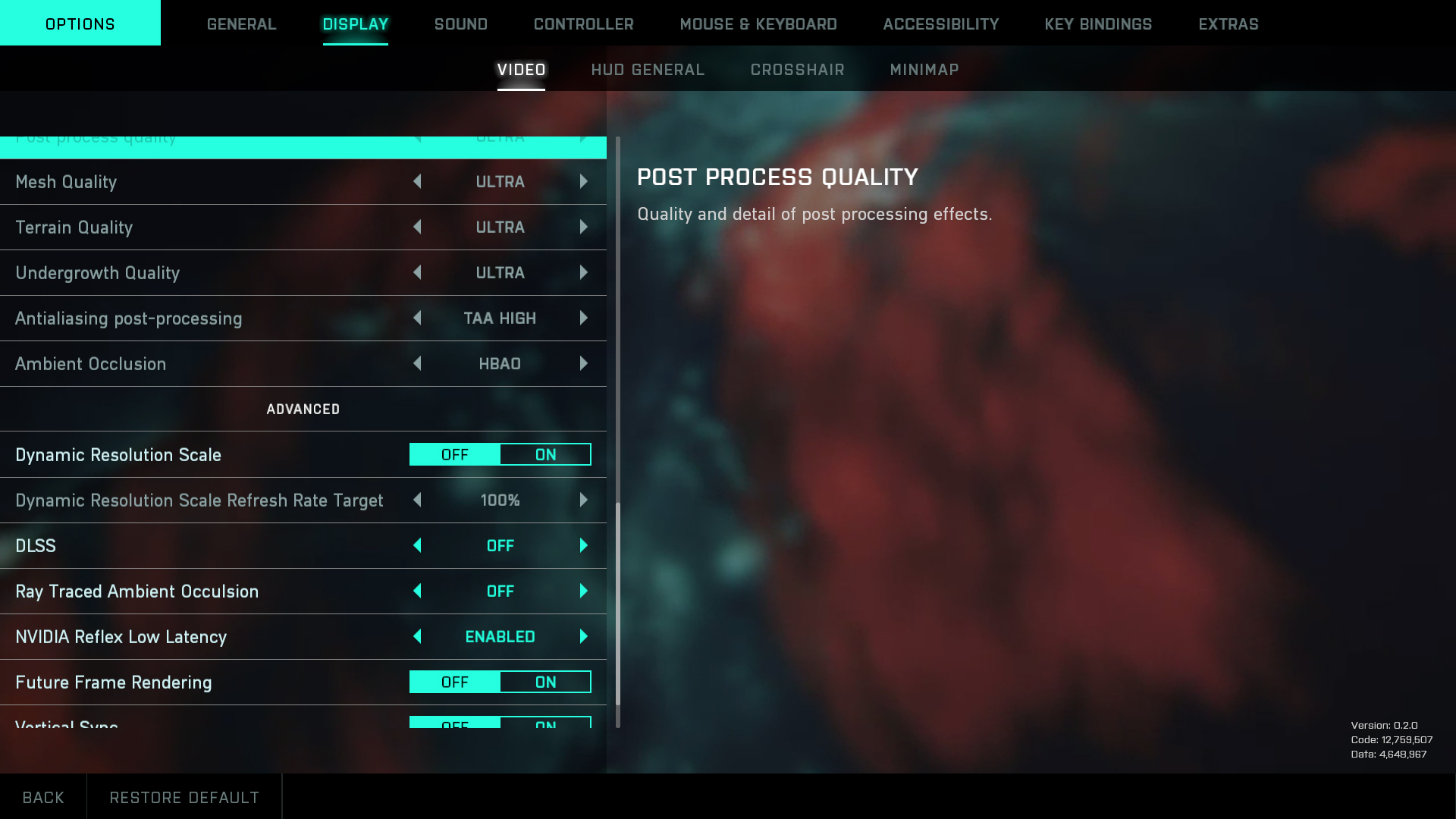

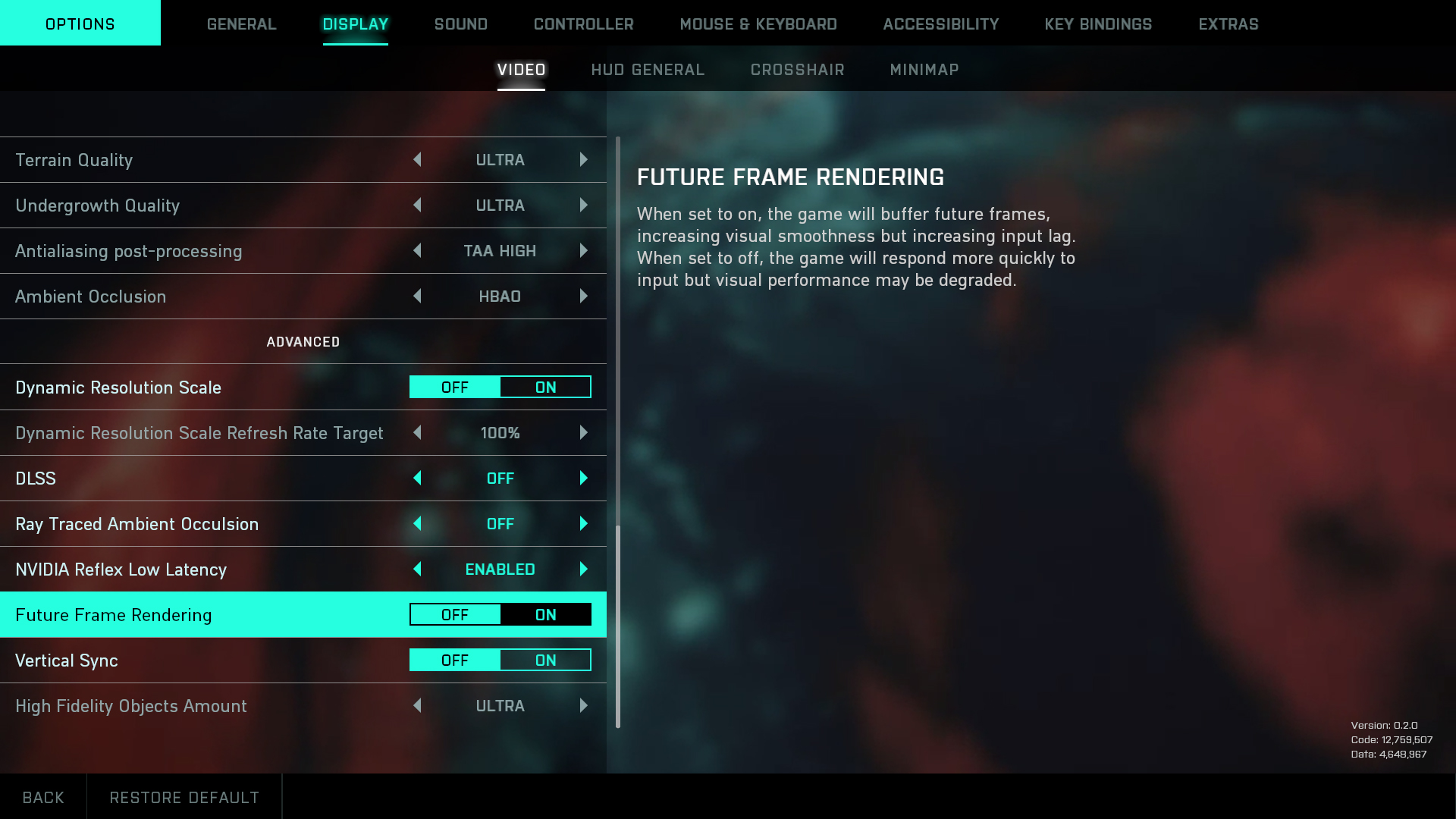

Battlefield 2042 has all the usual settings you'd expect to be able to tweak in a modern PC game, including ultrawide resolution support, uncapped framerates, high display refresh rates, HDR, four presets, and about 18 (depending on how you want to count) individual advanced graphics settings that you can tweak to your liking. If you use the presets, they'll lock in 11 of the settings, but you're free to enable/disable motion blur, chromatic aberration, film grain, vignette, lens distortion, DLSS, ray traced ambient occlusion, and a few other miscellaneous options.

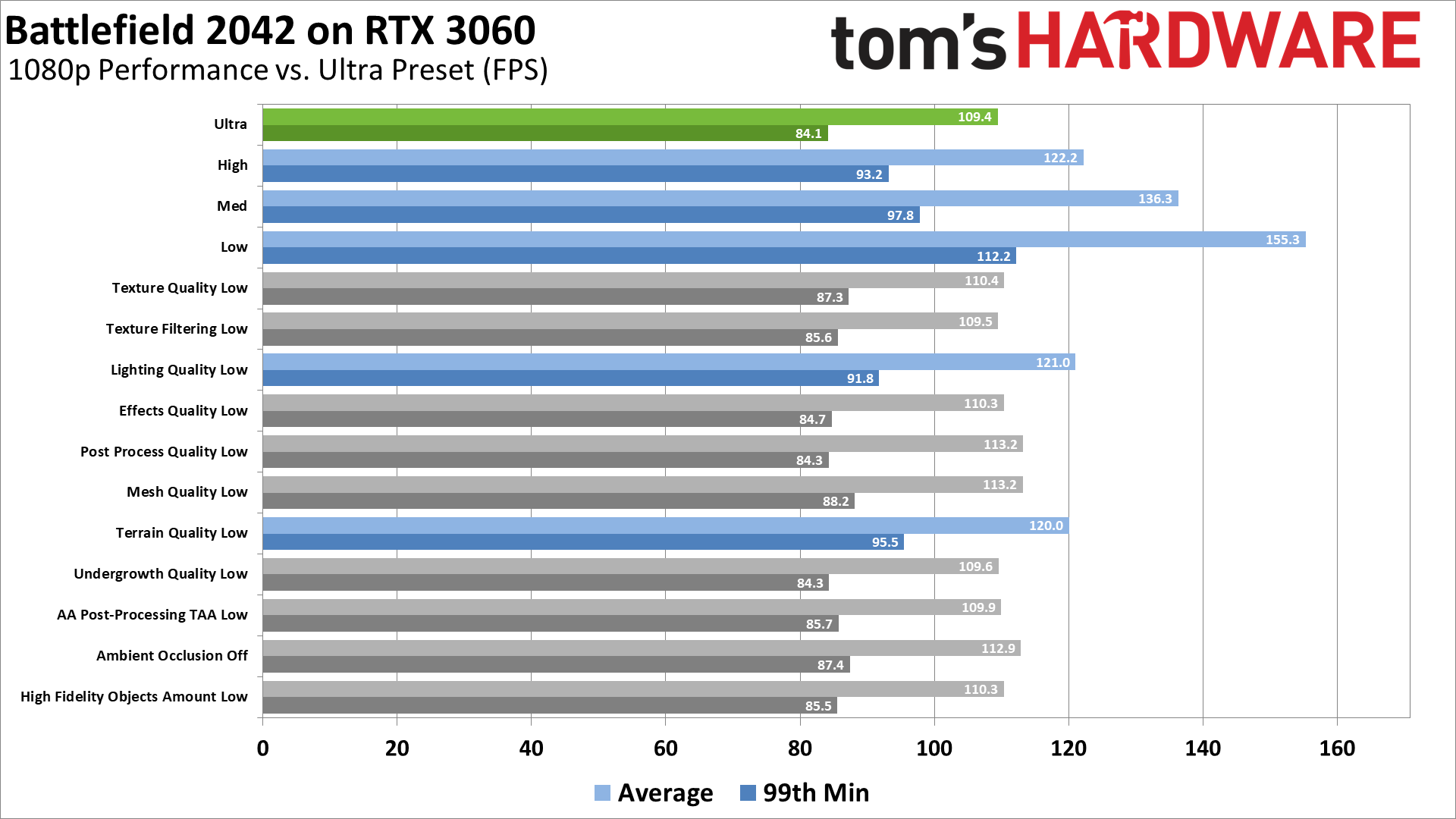

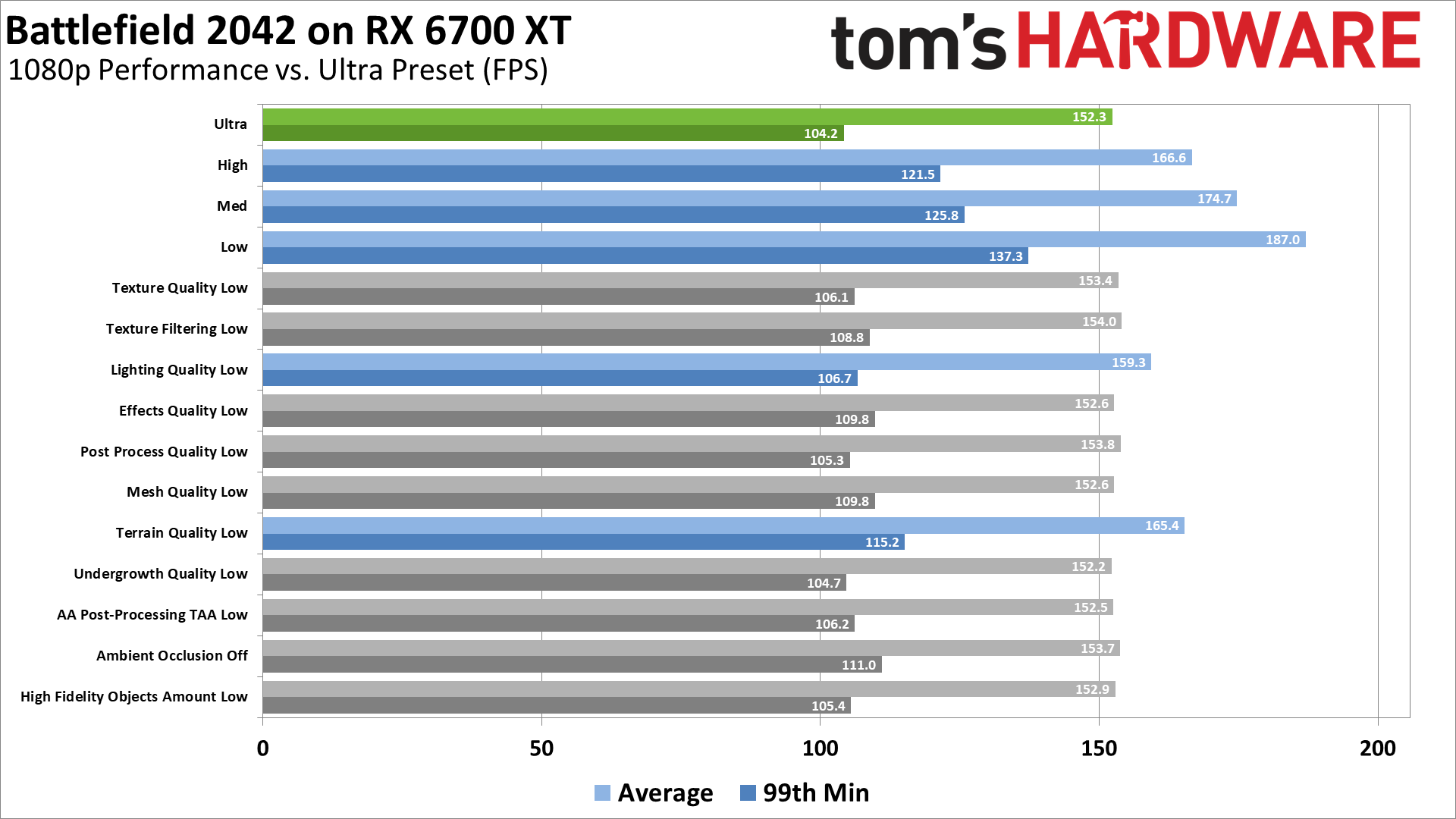

You can see the performance charts for an RTX 3060 and RX 6700 XT, using 1080p ultra as the baseline, in the above gallery, along with screenshots of the other display and graphics settings. In the next gallery, we have individual screenshots of each of the presets, along with a screenshot (see how to take screenshots in Windows) of each setting turned down from the ultra preset to its minimum value. If you compare the screenshots against the ultra version, you'll note that most settings have very little discernible impact on the way the game looks, or even on performance.

Note that we selected these two GPUs because they both have 12GB of VRAM, which avoids memory bottlenecks that would otherwise skew the results. If you have an AMD card with 8GB or less, or an Nvidia card with 6GB or less, you'll want to drop the texture quality a notch or two and probably lower lighting quality as well to avoid potentially serious stuttering.
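As a rough sketch, that VRAM rule of thumb could be written as a small Python check. The function name and the thresholds table are illustrative and come only from the guidance above; they reflect our testing of this game and aren't a general rule.

```python
def needs_lower_textures(vendor: str, vram_gb: int) -> bool:
    """Rule of thumb from our testing: AMD cards with 8GB or less and Nvidia
    cards with 6GB or less should drop texture (and probably lighting) quality."""
    limits_gb = {"amd": 8, "nvidia": 6}
    return vram_gb <= limits_gb[vendor.lower()]


# A few of the cards from this article and their VRAM capacities.
cards = [
    ("RX 6600 XT", "AMD", 8),
    ("RTX 2060", "Nvidia", 6),
    ("RTX 3060 Ti", "Nvidia", 8),
    ("RX 6700 XT", "AMD", 12),
]

for name, vendor, vram_gb in cards:
    advice = "lower textures/lighting" if needs_lower_textures(vendor, vram_gb) else "ultra textures okay"
    print(f"{name} ({vram_gb}GB): {advice}")
```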

Ultra preset

High preset

Medium preset

Low preset

Texture Quality Low

Texture Filtering Low

Lighting Quality Low

Effects Quality Low

Post Process Quality Low

Mesh Quality Low

Terrain Quality Low

Undergrowth Quality Low

Antialiasing Post-Processing TAA Low

Ambient Occlusion Off

High Fidelity Objects Amount Low

Ray Traced Ambient Occlusion On

You can flip through the above images to make your own evaluation, but the short summary of the biggest impact settings is that only lighting quality, terrain quality, and post-process quality change the visuals much. Oh, and of course, ray-traced ambient occlusion, but that really does need a potent GPU and/or running at lower resolutions (perhaps with DLSS) to be viable. As far as performance goes, it's again those same settings that have the greatest potential to improve framerates—or hurt them in the case of RTAO.

Lighting quality generally deals with the shadows you'll see in the game. At the low setting, the resolution of the shadow maps drops, so shadows (like that of the nearby ranger robot) become less distinct. Shadows on objects farther away may disappear entirely, like on the semi-truck dumpster in the left of the image. Dropping this from ultra to low improved performance by just 5% on the AMD card and 11% on the Nvidia GPU. Part of that difference might be the CPU becoming a bottleneck with the faster RX 6700 XT, however.

Terrain quality seems to alter both the resolution of the terrain textures as well as the amount of terrain geometry. Flipping between low and ultra, there's less detail and deformation in the terrain on the low setting. However, it's not a massive visual difference, and outside of ray tracing, this was the single most demanding setting. Dropping it to low boosted performance by about 10%.

Post-process quality didn't impact performance much at all, but looking at the screenshots, this appears to include effects like screen space reflections. Set this to low, and the puddles don't have any approximated reflections. This could potentially impact performance and visuals more on some of the other levels, but given we measured a 1–3% drop in performance, you can probably leave it at ultra.

Ray traced ambient occlusion is the only other setting of note, and it improves the quality of shadows on objects. It's particularly noticeable (in the screenshots) underneath the trailer on the left, the platform in the middle, and the underside of the crane on the right. So it does make a difference in image quality, but the problem, as usual, is that the slight gains are offset by a rather steep drop in performance. We've benchmarked with and without RTAO on the various GPUs we tested, and saw about a 30% drop in performance on the AMD GPUs and a smaller 15–20% drop on the Nvidia GPUs. But that's only if your GPU has enough VRAM, as results on the RTX 3060 Ti at higher resolutions drop quite a bit more, while the RTX 2060 basically shouldn't even think about enabling RTAO.

Battlefield 2042 Graphics Card Benchmarks

Due to the difficulty in testing Battlefield 2042, I've elected to only include a handful of cards from the most recent GPU families. This is because it just takes so long to load the game and then start a match, and over half the time, the weather would interfere with the results and require me to exit and restart. Even with that effort, there's probably a 5% margin of error on these results, but it at least gives a decent idea of what to expect.

Most users will want to stick to the high or medium presets, particularly if you don't have more than 8GB of VRAM. Drop a note in the comments with other GPUs you'd like to see tested, and if there's enough demand (meaning more than one person saying "please test XYZ!"), I'll revisit the subject with more testing. But this isn't going into my regular benchmark suite as it took probably four times longer to test each GPU than on most other games with a built-in benchmark.

I've included results with DLSS Quality mode enabled on the Nvidia RTX GPUs as well, which some might say is unfair, but honestly, the difference between native and DLSS Quality is very difficult to spot, particularly when you're just playing the game. You could even opt for Balanced or Performance modes, especially at 1440p and 4K, and likely not notice. I'll have more to say regarding DLSS below.


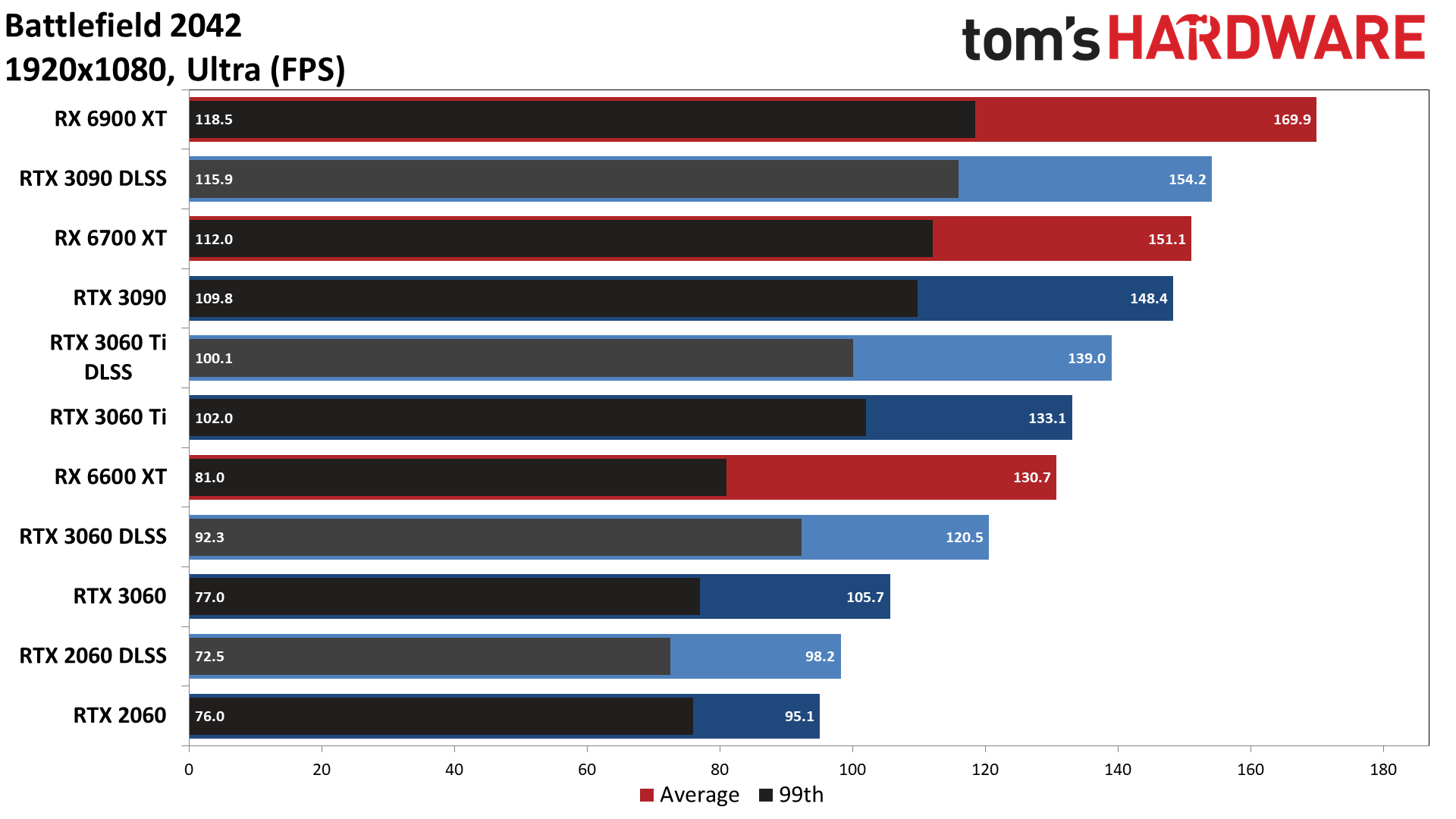

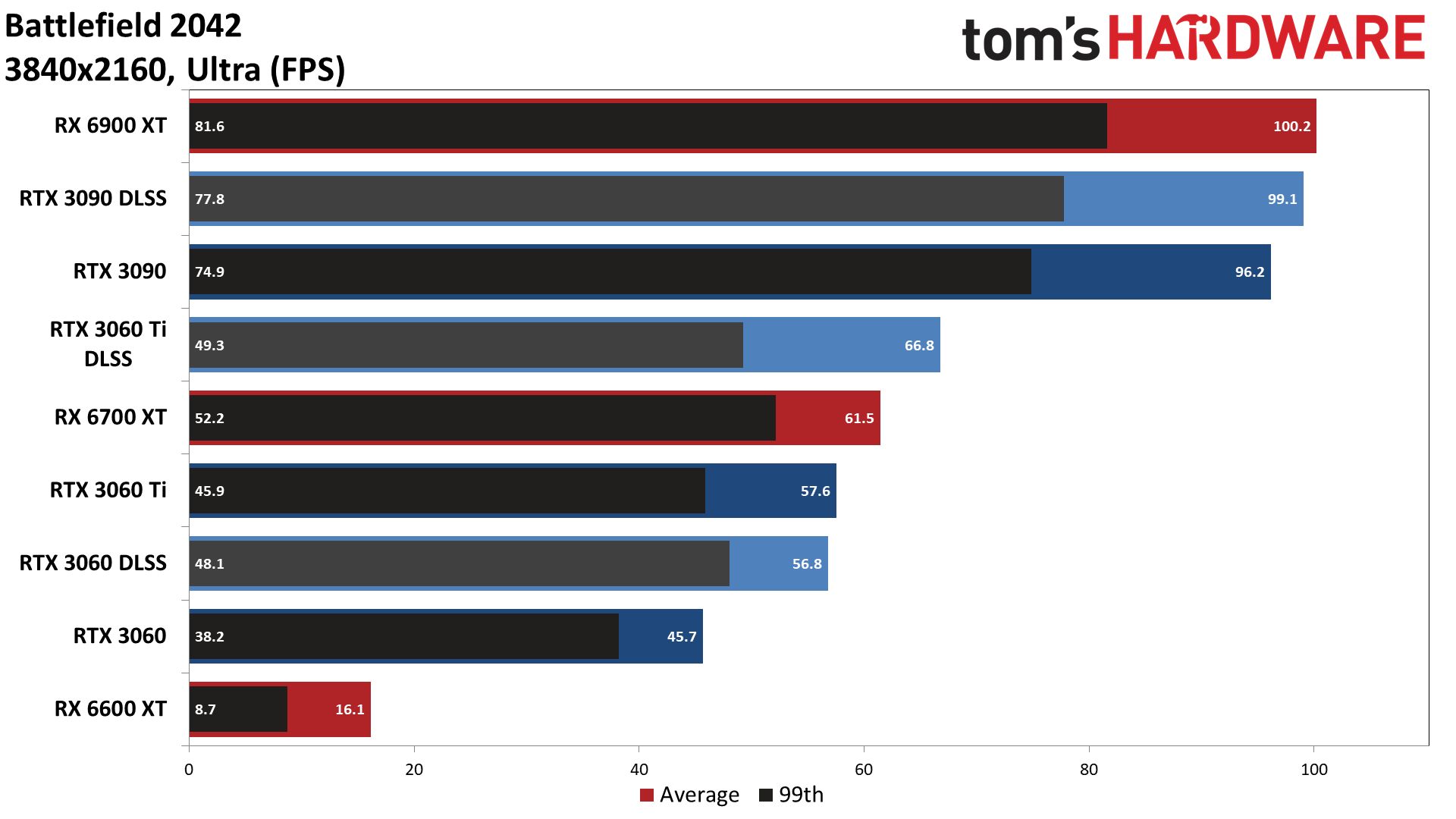

Again, in the interest of time, I stopped after testing performance at the ultra preset, as well as using ultra plus enabling RTAO. Every GPU tested managed more than 60 fps at 1080p ultra, though cards like the RTX 2060 and RX 6600 XT might exhibit more stuttering in lengthier sessions. If you want 144 fps for a high refresh rate display, only the RTX 3090 (the RTX 3080 Ti should perform about the same) and the RX 6700 XT and above will suffice. Even with DLSS, Nvidia's top GPUs can't catch AMD's offerings.

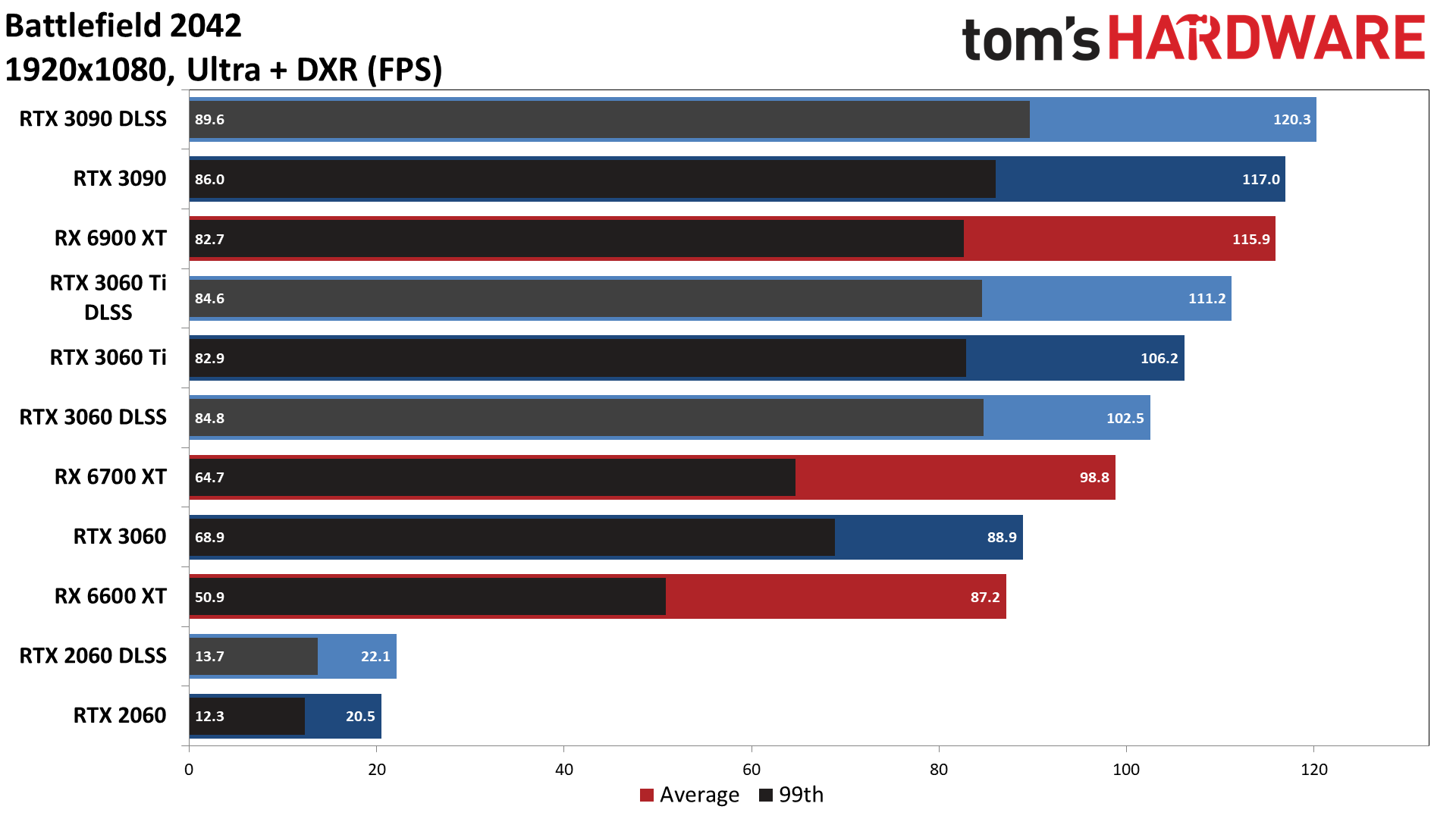

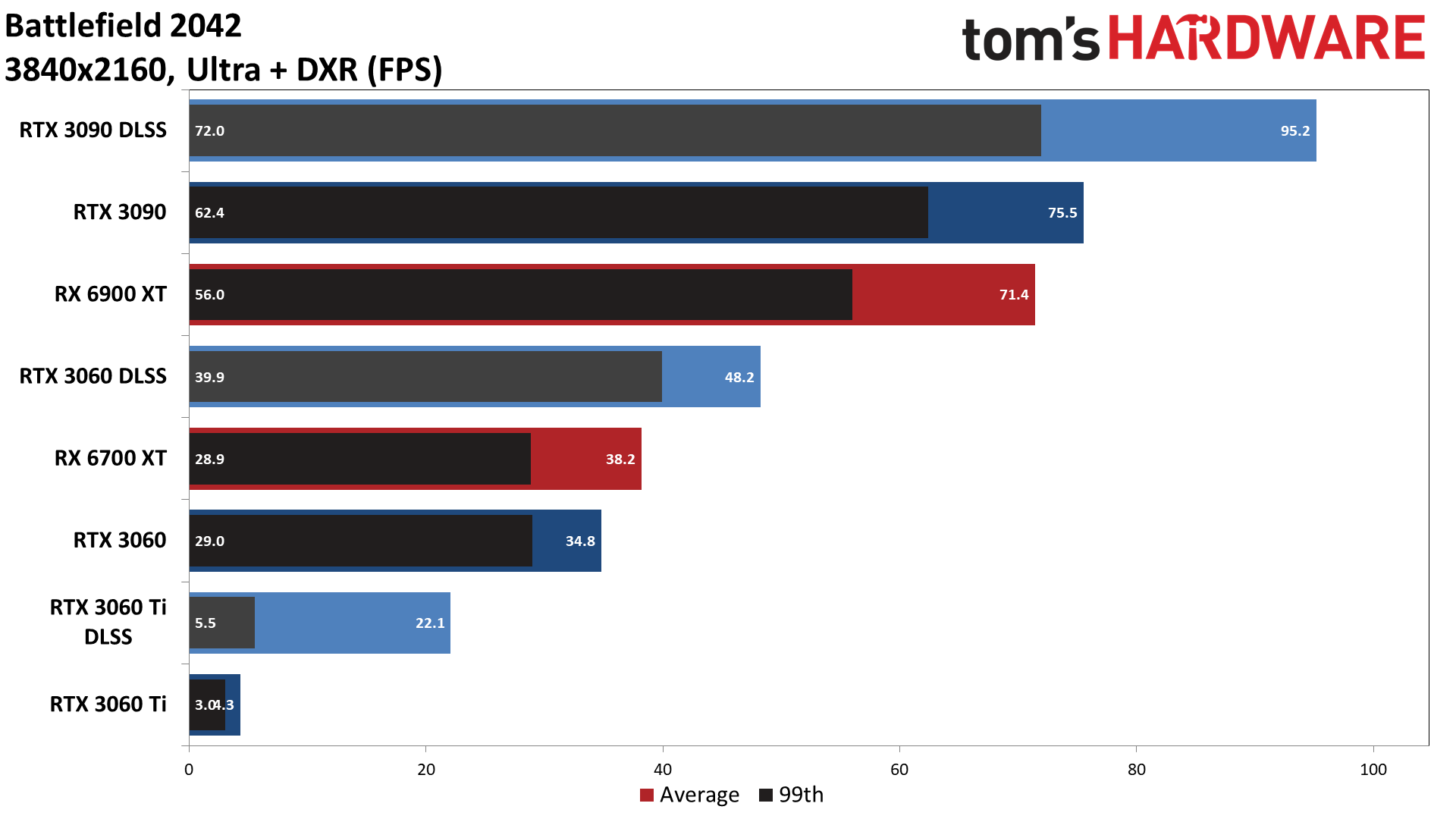

Enabling ray traced AO changes things up a bit. The Nvidia GPUs now lead AMD's equivalent (more or less) offerings. Nothing can achieve a steady 120 fps, though, never mind 144 or 240 fps. Since this is a twitch-heavy shooter, I suspect most Battlefield 2042 players will be more interested in dropping settings to improve their fps rather than adding slightly better shadows.

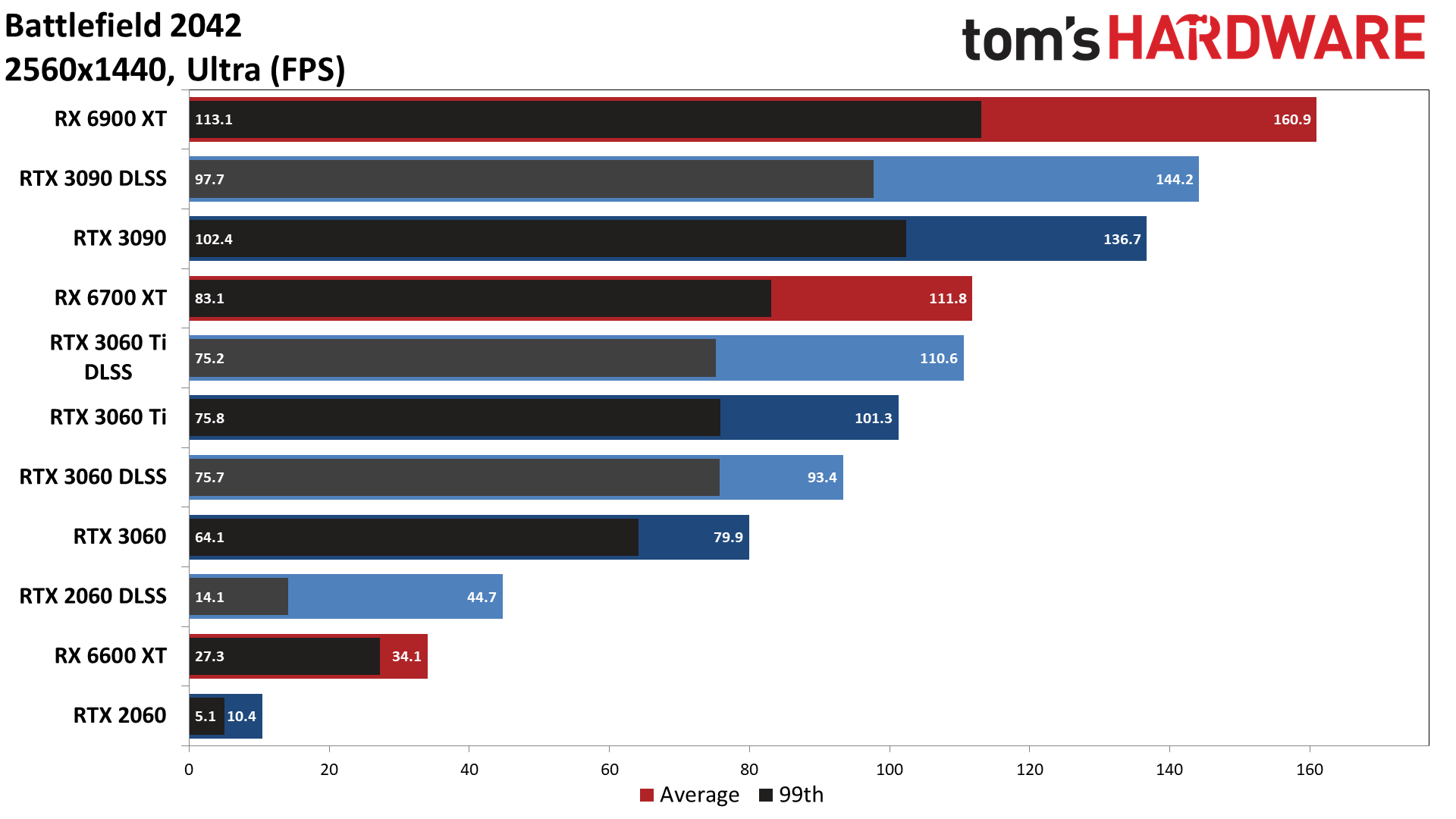

The fastest cards still handle 1440p quite well, but VRAM becomes a factor on AMD's 8GB RX 6600 XT, as well as the RTX 2060. CPU limitations are no longer a factor (except on the 6900 XT and RTX 3090), so provided your GPU has sufficient memory, you get about 25% lower performance at 1440p ultra than at 1080p ultra. The RTX 3060 and above all still break 60 fps with room to spare, while the RTX 3090 just barely averages 144 fps and the RX 6900 XT still has a bit of gas in its tank.

If you don't have enough VRAM, like on the RX 6600 XT or RTX 2060… well, performance can drop 75% to 90%. Oops. Don't run out of VRAM.

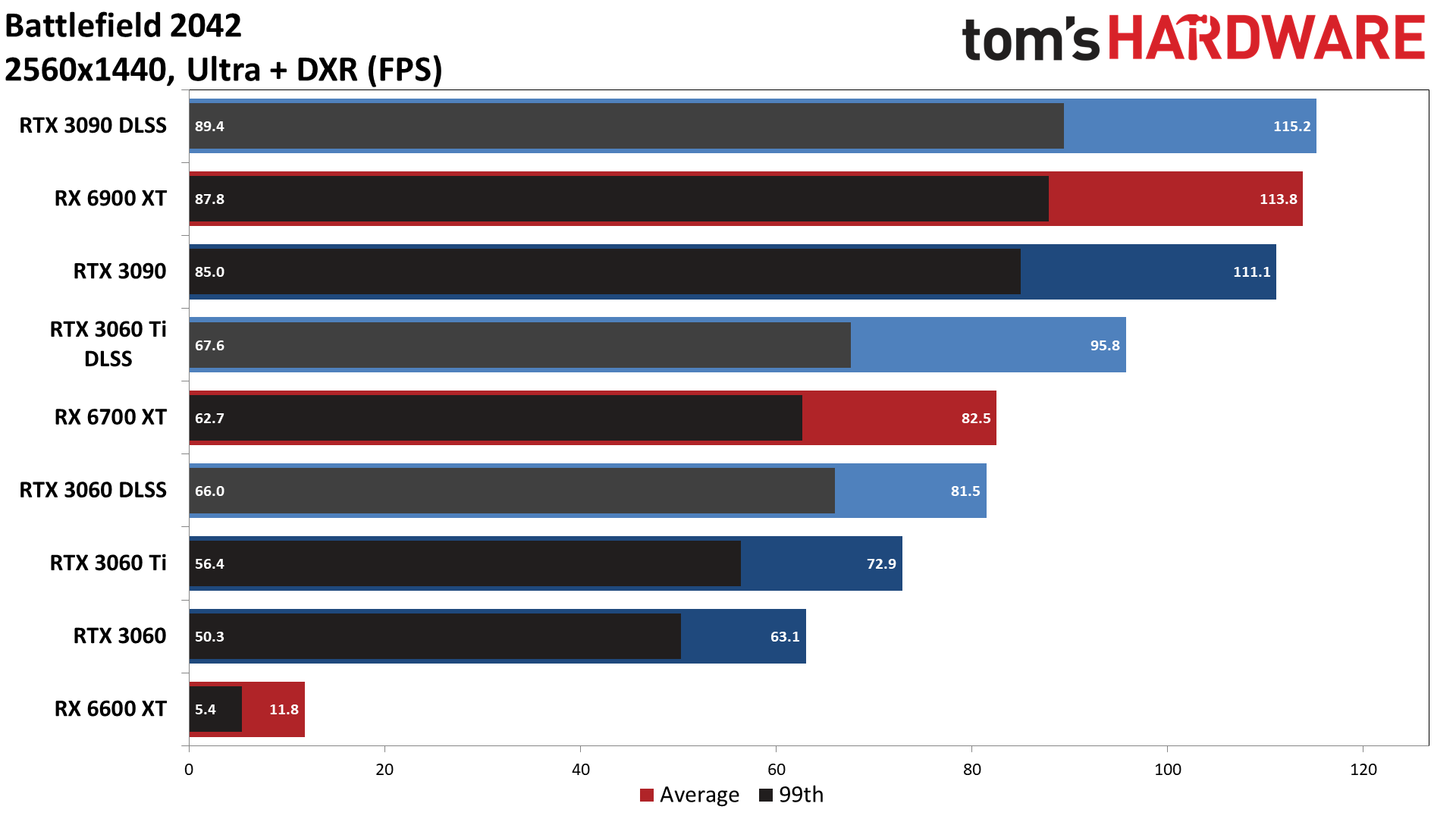

Enabling ray tracing and getting 60 fps or more is still possible on the RTX 3060 and above, though minimums on many of the cards may drop below that mark. The RTX 2060 couldn't even think about providing reasonable performance at 1440p ultra with DXR (DirectX Raytracing) enabled, but we did toss in the RX 6600 XT just for giggles. Again, don't run out of VRAM.

I'm not sure why AMD's GPUs often seem to need more memory for equivalent settings than Nvidia's GPUs, but it's probably internal delta color compression among other things. In Battlefield 2042, at least with the game ready AMD 21.11.2 and Nvidia 496.76 drivers we used for testing, it seems like AMD's 8GB cards behave like Nvidia's 6GB cards, and its 12GB cards more or less match the behavior of Nvidia's 8GB cards. There's probably more room for driver optimizations, in other words. That or AMD's Infinity Cache is playing a big role.

Given what we've seen so far, you'd expect a rough go of things at 4K ultra, and that's what we get. The RTX 3060 Ti with DLSS and RX 6700 XT can break 60 fps, but otherwise you'd want an RX 6800 or RTX 3080 or above. Technically the RTX 3070 and 3070 Ti should be fine as well, but the 8GB does cause more hitching from time to time. 8GB on AMD's RX 6600 XT meanwhile delivered sub-20 fps results.

What about 4K with maxed out settings plus ray tracing? The RX 6900 XT and RTX 3090 still averaged more than 60 fps, and DLSS Quality mode now proves more beneficial, boosting the RTX 3090 to 95 fps. With DLSS enabled, we expect the RTX 3080 and 3080 Ti would also provide a good experience. AMD's RX 6800 XT should be okay as well, since it's typically only about 5% slower than the 6900 XT. But this is mostly an academic question as I'm sure few Battlefield 2042 players will opt for lower fps just to get a few ray traced shadows.

Battlefield 2042 DLSS Analysis

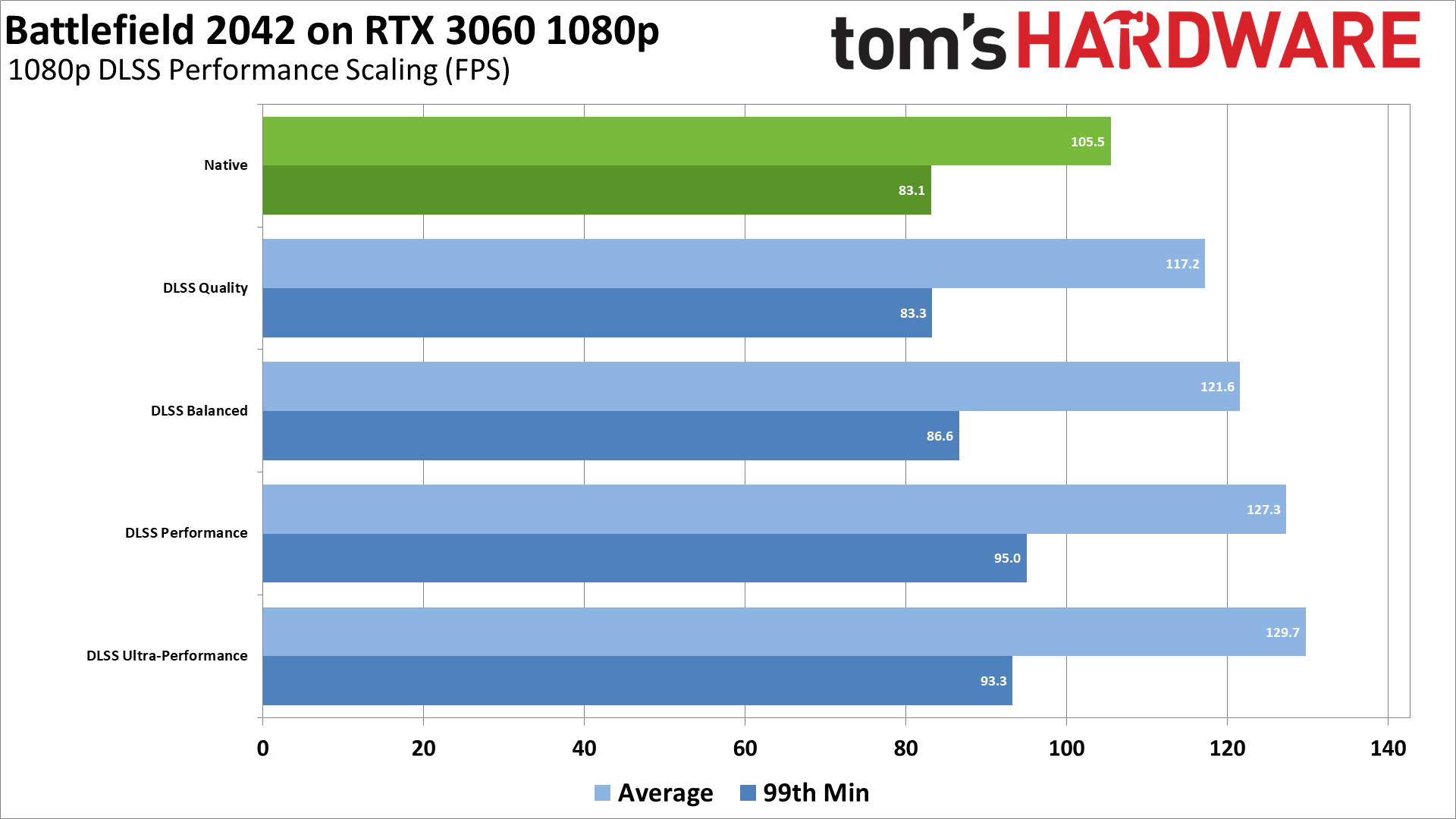

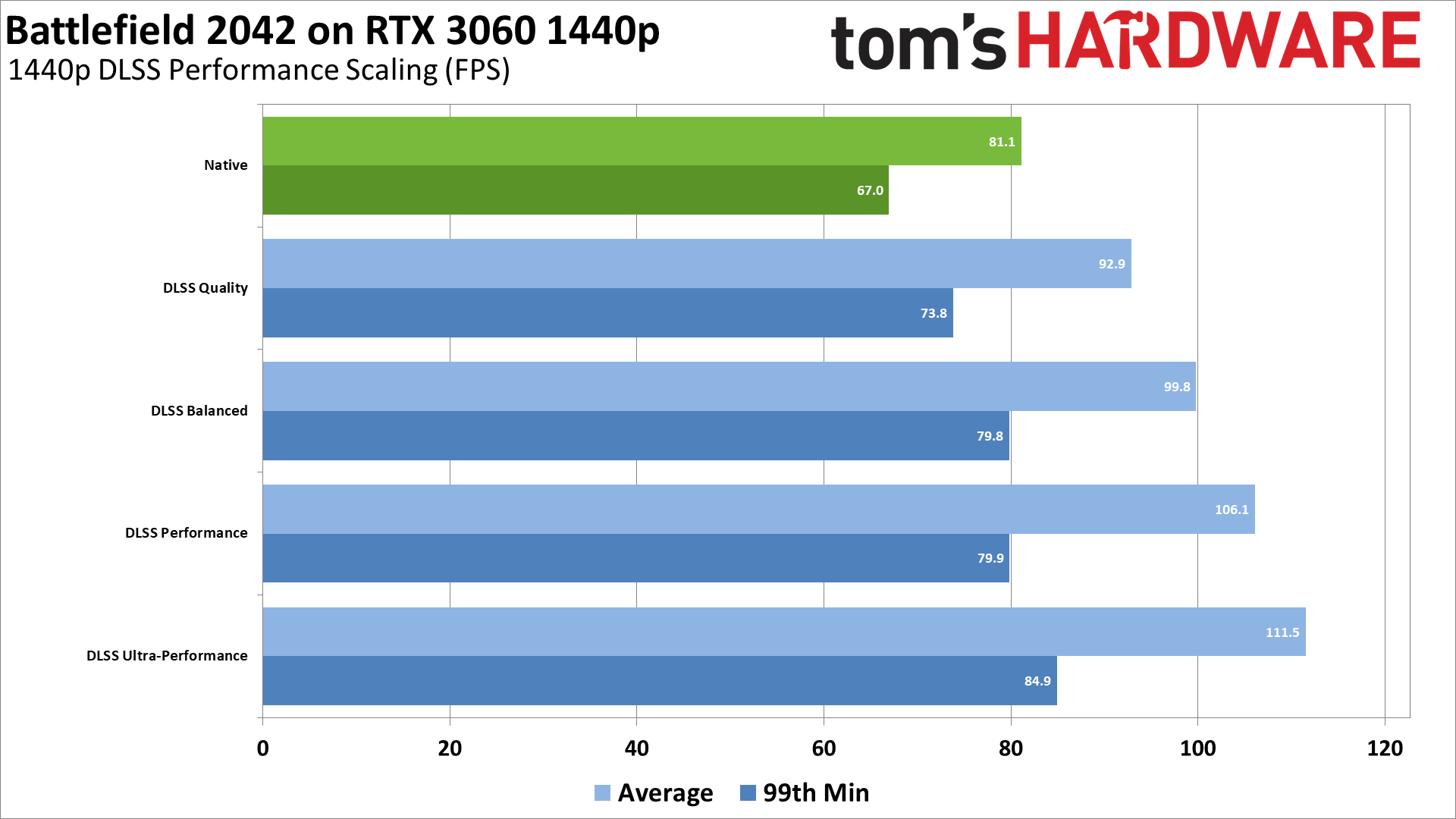

We included DLSS Quality mode on the RTX cards for the testing above, but here we've tested with just the RTX 3060 at 1080p and 1440p, and tried out all four DLSS modes. Quality uses 2x upscaling (approximately), Balanced uses 3x upscaling, Performance is 4x upscaling, and Ultra-Performance goes whole hog with 9x upscaling. That means at 1080p, Ultra-Performance mode is actually upscaling a 640x360 frame, while at 1440p it upscales an 853x480 frame. Considering the low resolution source frames, it's pretty impressive that the resulting images don't look absolutely awful!
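The arithmetic behind those source resolutions is easy to sketch in Python. This assumes the approximate pixel-count factors quoted above (2x, 3x, 4x, 9x); the exact per-axis ratios DLSS uses for Quality and Balanced differ slightly, and `render_resolution` is just an illustrative helper.

```python
import math

# Approximate pixel-count upscaling factors for each DLSS mode, as quoted above.
DLSS_FACTORS = {
    "Quality": 2,
    "Balanced": 3,
    "Performance": 4,
    "Ultra-Performance": 9,
}


def render_resolution(width: int, height: int, pixel_factor: float):
    """Return the internal (pre-upscale) resolution for a given output
    resolution and pixel-count factor. Each axis shrinks by sqrt(factor)."""
    axis_scale = math.sqrt(pixel_factor)
    return round(width / axis_scale), round(height / axis_scale)


for label, (w, h) in {"1080p": (1920, 1080), "1440p": (2560, 1440)}.items():
    for mode, factor in DLSS_FACTORS.items():
        rw, rh = render_resolution(w, h, factor)
        print(f"{label} {mode}: {rw}x{rh}")
```

Because the factors apply to total pixel count, a 9x mode only divides each axis by three, which is why 1080p ends up at 640x360 rather than something even smaller.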

The performance benefits of DLSS aren't as high in Battlefield 2042 as we've seen in other games. Maybe they'd be greater at 4K, but most gamers still aren't using 4K displays. As we've mentioned in our best gaming monitors guide, high refresh rate 1440p displays are still our favorite option, and you can get G-Sync or FreeSync on most of them without paying a massive price premium. High refresh rate 4K displays cost several times more money.

At 1080p, other factors (CPU and just the additional overhead of DLSS) come into play, so while Quality mode boosts performance by 11%, Balanced mode only gets you 15% more than native performance, and Performance and Ultra-Performance deliver 21% and 23% more performance than native, respectively. The scaling is a bit better with 1440p: 15% in Quality mode, 23% Balanced, 31% Performance, and 37% Ultra-Performance.
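To show how far those gains fall short of the reduction in rendered pixels, here's a short Python sketch that lines up each mode's approximate upscaling factor against the RTX 3060 gains listed above. The helper names are illustrative; the numbers are the ones from our testing.

```python
# Approximate pixel-count reduction for each DLSS mode.
UPSCALE_FACTOR = {"Quality": 2, "Balanced": 3, "Performance": 4, "Ultra-Performance": 9}

# Measured fps gains over native on the RTX 3060, in percent.
MEASURED_GAIN_PCT = {
    "1080p": {"Quality": 11, "Balanced": 15, "Performance": 21, "Ultra-Performance": 23},
    "1440p": {"Quality": 15, "Balanced": 23, "Performance": 31, "Ultra-Performance": 37},
}


def speedup(gain_pct: float) -> float:
    """Convert a percentage gain into a multiplier over native fps."""
    return 1.0 + gain_pct / 100.0


def scaling_table(resolution: str):
    """Return (mode, pixel factor, measured speedup) rows for one resolution."""
    return [
        (mode, factor, speedup(MEASURED_GAIN_PCT[resolution][mode]))
        for mode, factor in UPSCALE_FACTOR.items()
    ]


for resolution in MEASURED_GAIN_PCT:
    print(resolution)
    for mode, factor, measured in scaling_table(resolution):
        print(f"  {mode:<17} {factor}x fewer pixels -> {measured:.2f}x the native fps")
```

Even rendering one-ninth of the pixels at 1440p yields well under 1.5x the native framerate, which fits with the CPU and DLSS overhead being the limiting factors here.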

Native Resolution

DLSS Quality

DLSS Balanced

DLSS Performance

DLSS Ultra-Performance

But we have to put things in perspective. Sure, using DLSS Quality it's hard to spot the differences versus native rendering. Performance and Ultra-Performance? Not so much. We'd reserve Performance mode for 4K gaming, and Ultra-Performance is mostly just a gimmick that might enable 8K at acceptable framerates in some games (but probably not in Battlefield 2042).

You could also skip DLSS and just use the game's built-in upscaling. It doesn't look nearly as good, but the overhead is a lot lower.

Battlefield 2042 Ray Tracing

Ray Tracing Off

Ray Tracing On

Ray Tracing Off

Ray Tracing On

Ray Tracing Off

Ray Tracing On

We've talked already about how enabling ray tracing still causes a pretty severe performance hit, especially on GPUs that run out of VRAM. AMD's RX 6900 XT still managed just fine, and the other Navi 21 GPUs should be okay as well, but AMD cards with 12GB or less memory, and Nvidia GPUs with 8GB or less VRAM, will probably want to leave it off.

You can see the above images showing several different areas of the Discarded map and the impact RTAO has on the visuals. Or rather, the lack of impact. There's no denying on the crane images that RTAO looks far more correct than having it off, and if every part of every map showed that much of a visual change we could make a good argument for RTAO.

But then the outdoor view of the shipping crate and walls only shows slightly darker shadows on the walls to the right, and underneath the walls and bushes (frankly perhaps a bit too dark under the shrubbery), and the interior shot with the ship on stilts shows similarly minor differences.

And what the visuals don't show is that with ray tracing enabled, even on a fast GPU, there tend to be more stutters and slowdowns. They become less frequent after you've been running around an area for a bit, but die and respawn elsewhere and they can crop up again.

For the minor improvement in visuals, we just don't feel RTAO can be justified in a modern shooter. But then we'd also say the same of the minor improvements you can see when going from high to ultra quality. Using high with terrain quality at medium can boost performance 15%, which is what most people would likely prefer.

Battlefield 2042 Closing Thoughts

It's interesting to see the change in approach to ray tracing with Battlefield 2042. The previous Battlefield V used ray tracing just for reflections, and I and many other reviewers pointed out that doing this is kind of the worst way to add ray tracing. It's really only in games that use multiple ray tracing effects that we really start to see the benefits in terms of image quality. But ray traced reflections still tend to be more useful than ray traced shadows… and yet Battlefield 2042 opted to do ray traced ambient occlusion, which is a subset of shadows. It makes me want to shout, "We're going the wrong way!"

Fundamentally, the problem is that a game like Battlefield 2042 benefits far more from high framerates than from improved visuals. I'm sure there are some people that will love running at maxed out settings, with ray tracing, just because they have a sufficiently powerful PC. In a few more years, maybe even midrange PCs will be able to handle BF2042 with all the bells and whistles. But by then we'll have the next Battlefield installment to occupy our time.

I suspect EA had some sort of a deal with Nvidia where it had to include at least one ray tracing effect. DLSS support is more welcome, though sadly it's version 2.2.18.0 at launch, rather than the most recent 2.3.2 that includes some enhancements that get rid of the ghosting effect—one of the only real drawbacks to DLSS. Interestingly, swapping DLLs actually didn't work for Battlefield 2042 (you can't enable DLSS after swapping DLLs), so either 2.3 has some different hooks or this particular game uses DLSS in a way that required tighter integration. Probably it's just a version check, but either way, we'd love to see it updated.

As it sits right now, Battlefield 2042 doesn't feel radically different from previous games in the series. That's either good or bad, depending on what you think of those games. The new 128-player mode on PC can feel a bit too crowded on some maps, but 64-player mode can conversely feel a bit too unpopulated. In my playing so far, though, rarely did I encounter much in the way of solitude—which is part of what made testing performance in Battlefield 2042 such a complete slog.

If you'd like to see more GPUs tested, let us know in the comments. For now, hopefully these initial testing results will be sufficient to get you started. Or, you know, just use the medium or high preset and call it a day. That's what console players get.

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.


VforV

I just saw HUB's video of the CPU scaling and benchmark for this game and GPU power is not the biggest issue in this game.

The amount of tests to get the data for all the CPUs on 128 player matches was incredible, but this game is both crazy heavy on the CPU and needs a lot more optimization too.

I love how the 0.1% Lows show the 5600x matching the new "sweetheart" 12600k and having only 6 fps less than the "new king" (lmao) 12900k. And that's with an RTX 3090.

Does it really matter if the averages differ by 15 fps when the 0.1% Lows are the same?

Link to the full HUB video.

larkspur

I think that when you get to the top-end of that graph, the 3090 becomes the limiting component. There isn't much difference between the three AL and five Zen 3 chips there but (despite similar core counts) the older architectures seem to be performing significantly worse - indicating some heavy CPU dependency. It kind of tells us what we already know - a 6+ core AL or 6-core Zen3 is perfectly adequate for gaming.

vinay2070

It would be interesting to see how adequate would the 6 core be with newer console games using 8Core AMD CPUs start migrating to PCs and with RDNA3/ADA LL level GPUs running them (assuming if they are as fast as the leakers claim them to be and be less of a GPU bottleneck). For now, they are perfectly adequate. I am tempted to upgrade my 3700X to 5600X for little price difference, but I will hold off and see how good the 6600X/6800X are gonna be.

Roland Of Gilead

Meh! Some aspects of the game visually are good. But the game itself is not enjoyable to play. My 3060ti plays it roughly in line with the charts, but that only tells one side of the story for this game. There are major issues that need work, like massive server lag, which still exists after update, the horrible 'bloom' effect when shooting. The rubber banding is still going on too.

It also seems to be poorly optimized, as my 3060ti never goes above 70-75% usage on Ultra! Maybe with a few more updates it might be better, but for now I enjoy BF V more, despite the rampant cheating.

sstanic

it's a heavily CPU bottlenecked game it would seem. I'd really like to see some testing and optimizations in that sense. for example, Ryzen 3700X and 3600X are very common, I've a 3800X for now, and some other CPUs are common of course, and it'd be very interesting to see how different settings help the situation. faster RAM, lower timings in particular on Ryzens, is that the/a bottleneck? does the higher power of the 105W 3800X make a difference vs 65W 3700X, and similar comparisons would be interesting to many I feel.

in this review, I feel that omitting DLSS for 4K missed the point of DLSS, as it seems that Nvidia is primarily optimizing it precisely for 4K. also, by now many people have a LG OLED 4K TV, with a 120Hz panel, and 120Hz HDMI 2.1, there's a 42" model coming soon it seems too. your comparisons for 4K were great to see, as were various optimizations and image quality comparisons. nice review, many thanks for the hard work 🙂

Dantte

You missed a big one!

By default BF2042 runs using DX11 and there is no in-game setting to enable DX12. Open the file "PROFSAVE_profile" in your Documents\Battlefield 2042\settings folder, and change the value for GstRender.Dx12Enabled from a 0 to a 1. This will give you an additional 5% or better performance!

JarredWaltonGPU

The 4K charts have DLSS Quality tested on the various RTX GPUs, and the point of the DLSS analysis section was to show scaling with the different modes. At 4K, the scaling might be a bit better than at 1440p, but it's not going to radically alter the charts. Basically, Quality mode on the 3060 boosted performance 24% at 4K, so Balanced and Performance modes will scale from there. It makes 4K @ >60 fps viable, but only if you have a card with more than 8GB VRAM.

JarredWaltonGPU

I can confirm that this information is definitely not correct when using current generation RX 6000 and RTX 30 series graphics cards. I never bothered to set DX12 mode, because that was the default for all the GPUs I tested. Here's an RTX 3090 launching the game, for example, with OCAT overlay mode running:

(screenshot attachment)

With the exception of Crysis Remastered, there are no games with DirectX Raytracing support that use DX11 mode. Now, if you have a non-RTX and non-RX 6000 GPU, maybe the game will default to DX11. More likely, DX11 is only the default on Nvidia GTX GPUs, and there's a very good chance DX11 still runs better on Pascal and Maxwell GPUs. If you have an older AMD GCN GPU, though, and it defaults to DX11, you might get a minor benefit by switching to DX12.

Dantte

You going to test it? You said "I can confirm that this information is definitely not correct", so you have tested and there is no performance difference or you just making a blind statement with no proof or testing?

Check the file settings, 0 or a 1, run your benchmark. Change the setting to a 0 or 1, and rerun the benchmark. Let us know what, if any difference there is. Also, if there is a performance difference and this isn't affecting the DX## API in use, then what is it changing?

saunupe1911

The game itself just isn't fun but Tom's why is the 3080 omitted from testing when it's arguably the sweet spot for the RTX 3000 series line?