Intel Arc A380 GPU Goes Neck-and-Neck With GTX 1650 Super In New Benchmarks

Alchemist takes on Turing

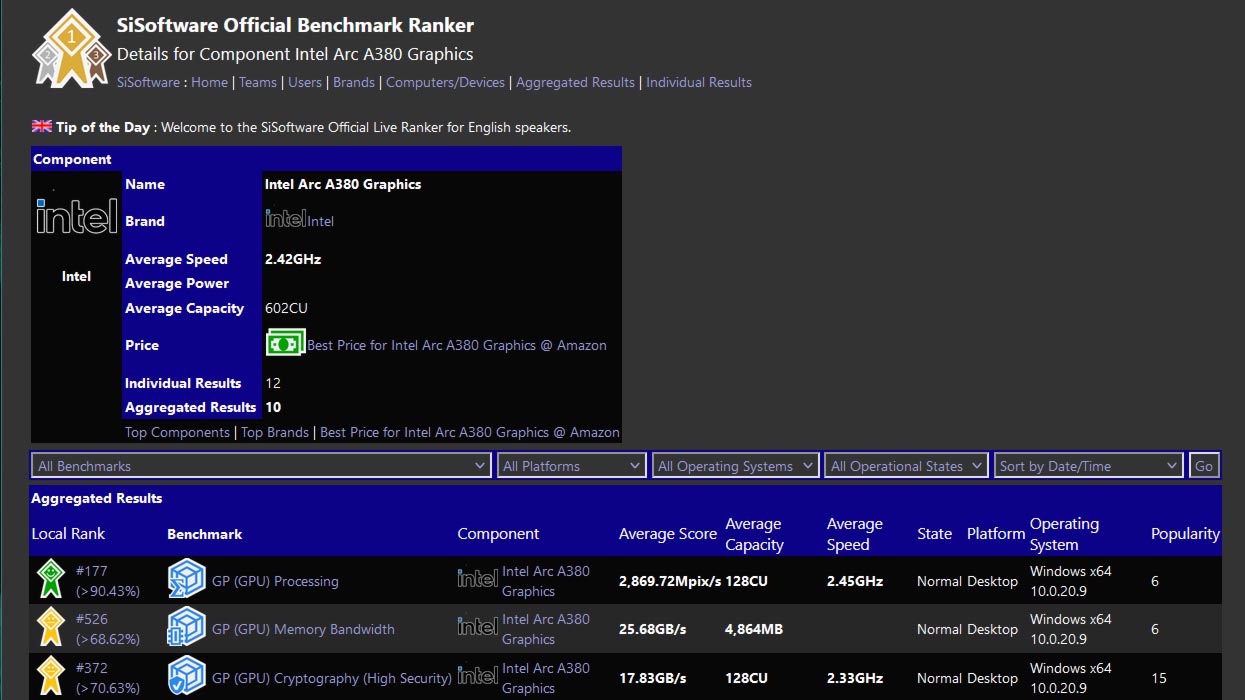

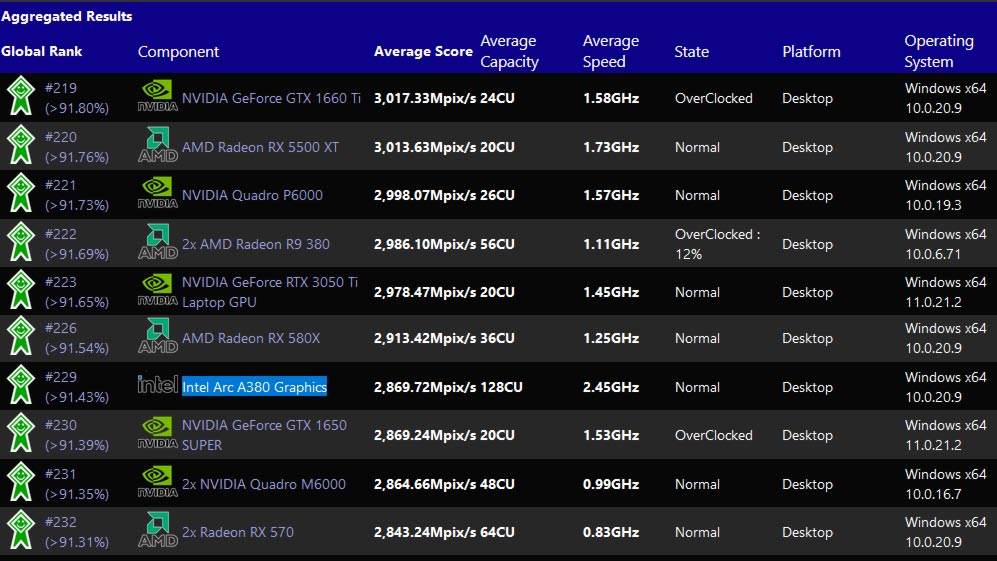

The key specifications of Intel's Arc Alchemist A380 desktop graphics card, and a matching crop of benchmark results, have been unearthed by serial PC hardware leaker momomo_us. According to the Twitter-based PC data digger, Intel's entry-level desktop GPU will feature 128 execution units (EUs) and run at up to 2.45 GHz. This yet-to-be-launched graphics card apparently has 4.8GB of VRAM onboard, according to the data leak — how it reaches that amount isn't quite clear. It performs roughly on a par with the GeForce GTX 1650 Super GPU when comparing the headlining GPU processing score in the aggregated SiSoft Sandra online database, which may or may not line up with real-world gaming performance.

The Arc A380 is one of two entry-level graphics cards from the blue team based around the Xe-HPG architecture and the DG2-128EU GPU. There may be a cut-down version of even this lowest-rung GPU, dubbed the Intel Arc A350, with only 96 of the 128 possible EUs enabled. A larger GPU with up to 512 EUs will sit above Intel's littlest chip, and logically there should be a middle-sized GPU (or two or three) to fill the gap, but it's uncertain whether Intel will create a 256 EU or 384 EU mid-market pleaser.

Several vital specs of the Intel Arc A380 appear to be confirmed by this SiSoft Sandra benchmark run. According to the data, it's a desktop GPU with 128 EUs enabled, providing 1,024 FP32 shading units. In addition, the GPU ran at a clock speed of 2.45 GHz during the SiSoft tests. Other exciting specs recorded by the system info and benchmarking app include 1MB of L2 cache and a VRAM quota of 4.8GB.
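To make the arithmetic behind those figures explicit, here is a minimal sketch in Python. The 8 FP32 lanes per EU is inferred from the leaked numbers (128 EUs giving 1,024 shading units), and the peak throughput figure assumes one fused multiply-add per lane per clock counted as two operations. That is a common convention for theoretical peak numbers, not something the SiSoft entry reports. The function names are ours, for illustration only.

```python
# Assumption: 8 FP32 lanes per EU, inferred from the leak (128 EUs -> 1,024 shading units).
FP32_LANES_PER_EU = 8
# Assumption: one fused multiply-add per lane per clock, counted as 2 floating-point ops.
FLOPS_PER_LANE_PER_CLOCK = 2


def shading_units(eus: int) -> int:
    """Number of FP32 shading units for a given EU count."""
    return eus * FP32_LANES_PER_EU


def peak_fp32_tflops(eus: int, clock_ghz: float) -> float:
    """Theoretical peak FP32 throughput in TFLOPS (an upper bound, not a benchmark)."""
    gflops = shading_units(eus) * FLOPS_PER_LANE_PER_CLOCK * clock_ghz
    return gflops / 1000.0


if __name__ == "__main__":
    # EU counts mentioned in the article; only the 128 EU part has a leaked clock speed.
    for eus in (96, 128, 512):
        print(f"{eus} EUs -> {shading_units(eus)} FP32 shading units")
    print(f"128 EUs at 2.45 GHz -> {peak_fp32_tflops(128, 2.45):.2f} TFLOPS (theoretical peak)")
```

A theoretical peak like this says nothing about how well the drivers or memory subsystem feed the shaders, which is why it can diverge from gaming results.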

That last item seems a bit odd, as most GPUs use 8Gb or 16Gb GDDR6 chips. It suggests Intel perhaps will use five 8Gb chips, but it may not fully enable one of those chips — or it could be for ECC data. Alternatively, it might simply be a misreporting on the part of SiSoft, since this is an unreleased GPU. Memory bandwidth also appears to be a bit low, with just 25.68GB/s (compared to 46.69GB/s from the GTX 1650 Super).
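The capacity puzzle is easier to see with the numbers laid out. The sketch below, using helper names of our own invention, converts chip counts and densities (in gigabits) into gigabytes and compares them with the 4.8GB that SiSoft reported. It also works out how the reported bandwidth compares with the GTX 1650 Super figure. It only restates the article's numbers and does not explain why the card reports 4.8GB.

```python
BITS_PER_BYTE = 8


def total_capacity_gb(chip_count: int, chip_density_gbit: int) -> float:
    """Total VRAM in gigabytes for identical chips of the given density (in gigabits)."""
    return chip_count * chip_density_gbit / BITS_PER_BYTE


def reported_fraction(reported_gb: float, chip_count: int, chip_density_gbit: int) -> float:
    """Share of the physical capacity that the reported figure would represent."""
    return reported_gb / total_capacity_gb(chip_count, chip_density_gbit)


REPORTED_VRAM_GB = 4.8          # from the SiSoft Sandra entry
A380_BANDWIDTH_GBPS = 25.68     # reported for the Arc A380
GTX_1650_SUPER_GBPS = 46.69     # reported for the GTX 1650 Super

if __name__ == "__main__":
    for chips in (4, 5, 6):
        capacity = total_capacity_gb(chips, 8)
        print(f"{chips} x 8Gb chips = {capacity:.1f} GB")
    share = reported_fraction(REPORTED_VRAM_GB, 5, 8)
    print(f"4.8 GB is {share:.0%} of five 8Gb chips")
    ratio = A380_BANDWIDTH_GBPS / GTX_1650_SUPER_GBPS
    print(f"Reported A380 bandwidth is {ratio:.0%} of the GTX 1650 Super figure")
```

No whole number of 8Gb or 16Gb chips lands on exactly 4.8GB, which is consistent with either a partly disabled chip, reserved capacity, or a reporting quirk.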

In terms of performance, the new Intel Arc A380 appears to nestle among GPUs like the GeForce GTX 1650 Super, the Radeon RX 580, and the modern RTX 3050 Ti laptop GPU. Note also that SiSoft tends to be far more synthetic in nature than other GPU tests, particularly games, so real-world performance could be very different from what we're seeing here.

In other words, as with all leaks of this nature, add a pinch of salt to the data. We expect Intel to make several announcements later this week during "virtual CES," some of which will very probably be about its initial salvo of Arc Alchemist graphics cards.


Mark Tyson is a news editor at Tom's Hardware. He enjoys covering the full breadth of PC tech; from business and semiconductor design to products approaching the edge of reason.


hotaru251 tbh all i hope for is intel's gpu to be good at mining so miners go to them instead of others <_<

alceryes Good stuff but I want to see Intel's top gaming performance model.

I really hope they bring something to the table to shake up the middling (or even high-end) market.

mikeebb Considering what your article compares it to, it's kind of underwhelming. OTOH, it's better than most integrated graphics, so there's that.

vinay2070

mikeebb said: Considering what your article compares it to, it's kind of underwhelming. OTOH, it's better than most integrated graphics, so there's that.

Why do you feel so? If you compare the frame rate of a 1650 Super vs a 3070, it's not far off, probably 2 to 3 times? The top end of this lineup will be 4 times as powerful. That means it will still be at 3070 to 3070 Ti level. Or am I missing something?

PCWarrior

hotaru251 said: tbh all i hope for is intel's gpu to be good at mining so miners go to them instead of others <_<

In this interview (start watching at around 27:58) Raja Koduri seems to point out that Intel’s way to solve the mining problem is to create dedicated hardware/ASIC that is so good/efficient at mining that miners would no longer use GPUs for mining.

https://www.youtube.com/watch?v=Yre8CHS73-Q

renz496

PCWarrior said: In this interview (start watching at around 27:58) Raja Koduri seems to point out that Intel’s way to solve the mining problem is to create dedicated hardware/ASIC that is so good/efficient at mining that miners would no longer use GPUs for mining.

That was the idea when ASICs for bitcoin were created. But some miners did not like mining being dominated by those who could afford crazy expensive ASIC machines, so back then they created other coins that are more ASIC resistant.

If they want to create a successful chip to replace GPUs, they need a chip that can mine every type of coin out there efficiently, including new types of coin that will be created in the future. And this mining-specific card needs to be cheap. Like $200 per board, and yet it mines like 10 3090s combined. And whoever produces this chip should not handle orders for the board like Bitmain did. Otherwise miners will keep targeting GPUs for mining.