Pics of Apple M1 Max Die Hint at Future Chiplet Designs

'M1 Max Duo' or 'M1 Max Quadra' chips incoming?

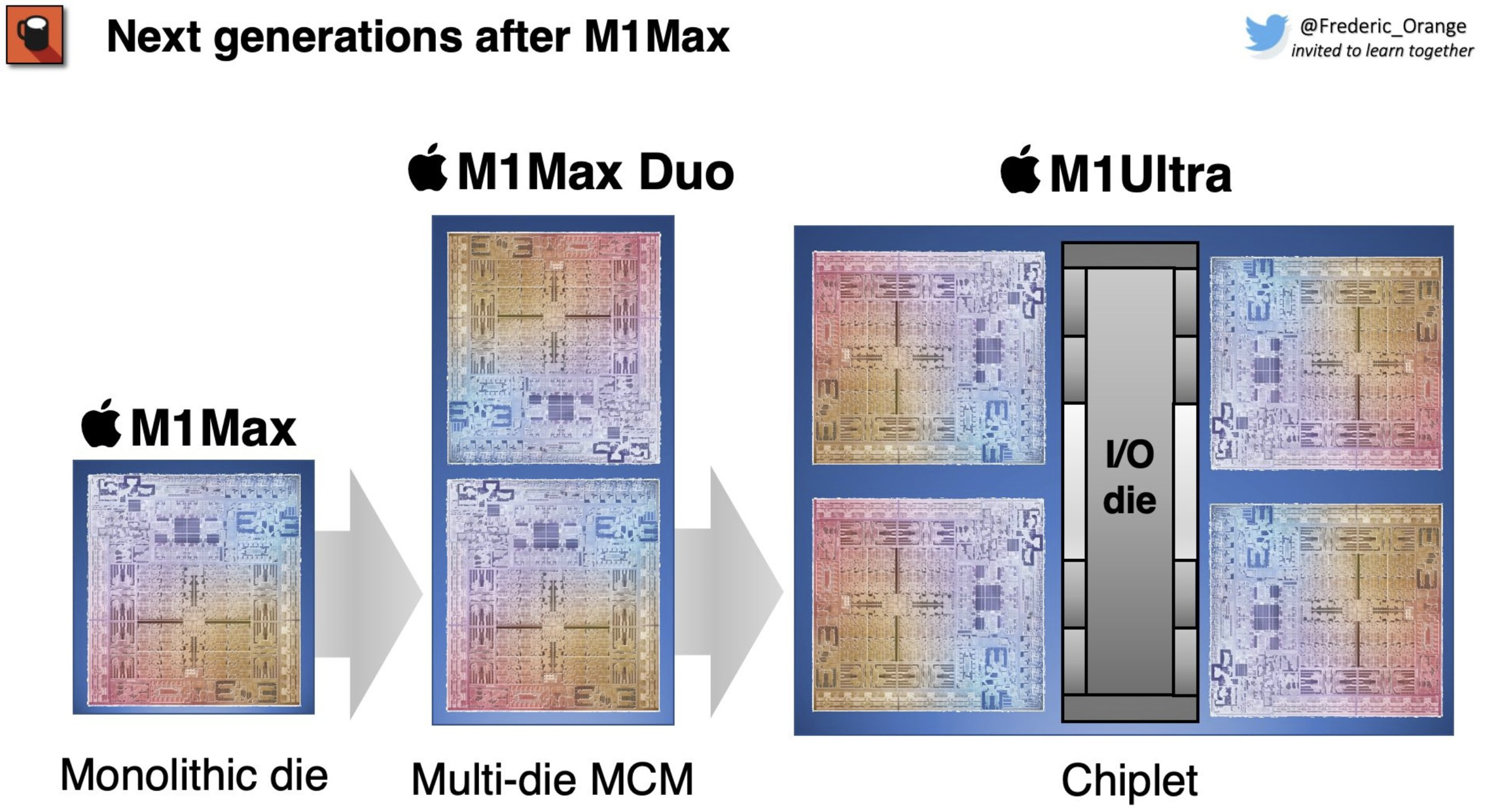

It appears that Apple has kept a closely guarded secret about its new M1 Max silicon. New pictures of the M1 Max die reveal a section at its bottom edge, absent from Apple's official renders, that may be an interconnect bus enabling Multi-Chip-Module (MCM) scaling. That would allow the company to combine multiple dies in a chiplet-based design, which could result in chips with as many as 40 CPU cores and 128 GPU cores. Apple has yet to confirm any provisions for chiplet-based designs, but the M1 Max could theoretically scale into an "M1 Max Duo" or even an "M1 Max Quadra" configuration, in line with persistent reports of various chiplet-based M1 designs in the future.

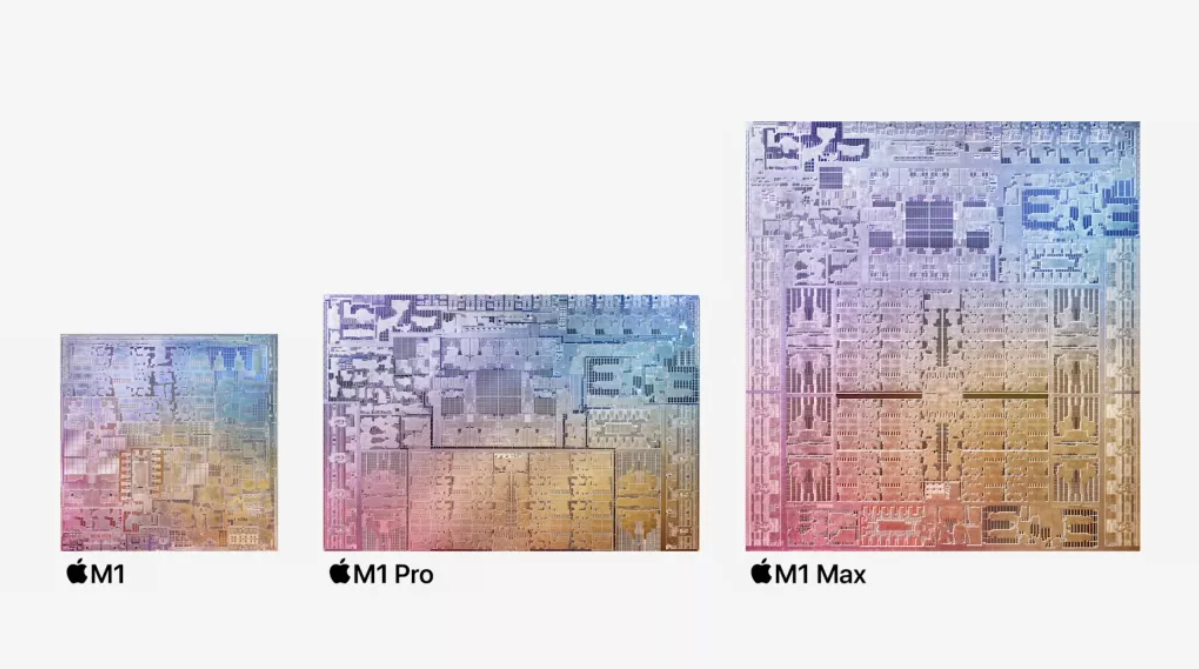

Apple has managed to impress the world not once but twice already with the performance of its Arm-based M1 CPUs. The company's latest M1 Max chip is a force to be reckoned with by itself: its gargantuan 57 billion transistors let Apple fit 10 CPU cores and either 24 or 32 GPU cores (depending on the configuration you get) into a single 5nm chip. Adding accommodations for a chiplet-based design would theoretically multiply compute resources, and thus performance.

You guys seeing this or am I just crazy? The actual M1 Max die has an entire hidden section on the bottom which was not shown at all in Apple's official renders of the M1 Max die. Just flip another M1 Max and connect it for an M1 Max Duo chip. Then use I/O die for M1 Max Quadra. https://t.co/McWmofJAls pic.twitter.com/JogRwUGvF6 (December 2, 2021)

The interconnect bus would allow Apple to scale its chips by "gluing together" the appropriate number of M1 Max chips. But, of course, it's not simply a matter of flipping one M1 Max chip and aligning it with a second one; Apple would still need suitable interposer and packaging technology for a chiplet-based design.

Interestingly, Apple's M1 Pro chip (which sits between the M1 and M1 Max SoCs) lacks the interconnect bus: it's located in the extended half of the M1 Max (essentially a beefier M1 Pro). This likely means that Apple only expects users who need the M1 Max's additional graphics compute power (such as graphics or television studios) to require further performance scaling via this chiplet design philosophy.

Marrying two 520 mm² Apple M1 Max dies in an "M1 Max Duo" chip could deliver up to 20 CPU cores and 48 or 64 GPU cores. It would also call for doubling the system's maximum memory to 128 GB. Memory bandwidth should scale accordingly in such a system, up to 800 GB/s (twice the M1 Max's 400 GB/s). That seems doable within the current M1 Max design, although the resulting 1,040 mm² of Apple silicon would, of course, be more expensive.
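To make the scaling arithmetic explicit, here is a small Python sketch that multiplies per-die M1 Max figures by the number of dies. It assumes idealized linear scaling, with nothing lost to the interconnect or to an I/O die. The 520 mm² die area is the figure quoted above; the 64 GB memory ceiling and 400 GB/s bandwidth are Apple's published M1 Max specs. The `DieSpec` and `scale` names are purely illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DieSpec:
    """Per-die figures for a single M1 Max."""
    cpu_cores: int = 10
    gpu_cores: tuple[int, ...] = (24, 32)  # the two GPU configurations
    memory_gb: int = 64                     # maximum unified memory per M1 Max
    bandwidth_gb_s: int = 400               # memory bandwidth per M1 Max, GB/s
    die_area_mm2: int = 520                 # die area as quoted in the article


def scale(die: DieSpec, n_dies: int) -> dict:
    """Idealized linear scaling: every resource is multiplied by the die count.

    Assumption: no cores, area, or bandwidth are lost to the interconnect.
    """
    return {
        "cpu_cores": die.cpu_cores * n_dies,
        "gpu_cores": tuple(g * n_dies for g in die.gpu_cores),
        "memory_gb": die.memory_gb * n_dies,
        "bandwidth_gb_s": die.bandwidth_gb_s * n_dies,
        "die_area_mm2": die.die_area_mm2 * n_dies,
    }


if __name__ == "__main__":
    for name, n in (("M1 Max", 1), ("M1 Max Duo", 2), ("M1 Max Quadra", 4)):
        print(name, scale(DieSpec(), n))
```

Run as a script, it reproduces the Duo figures above (20 CPU cores, 48 or 64 GPU cores, 128 GB, 800 GB/s, 1,040 mm²) and the Quadra's 40 CPU cores and up to 128 GPU cores. A real product would likely land somewhat below these idealized numbers wherever the interconnect or packaging costs power, area, or bandwidth.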

Going for the "M1 Max Quadra" solution with 40 CPU cores and 128 GPU cores would be even more complicated. Perhaps an added I/O die, as the source suggests, is the correct solution, but possibilities abound: Apple could also sustain enough inter-die bandwidth via an interconnect technology akin to AMD's Infinity Fabric. Whether the larger chips would require an I/O die at all remains an open question, as other leaks have suggested the design will instead be scaled up monolithically.

Once again noting that Apple’s marketing diagrams do NOT accurately represent the floor plan of its chips, here is a less fanciful interpretation of the Pro Mac SoC rumors. Presenting Jade-C: The building block for Pro Mac SoCs. (M1 included for scale.) pic.twitter.com/Lp8ZBDeLiu (May 21, 2021)

It's ultimately unclear how Apple would choose to handle memory bandwidth scaling, and any solution would substantially increase platform development costs. But then again, these theoretical "M1 Max Duo" and "M1 Max Quadra" products would cater to a market that cares more about performance and power efficiency than cost.


Francisco Pires is a freelance news writer for Tom's Hardware with a soft side for quantum computing.

-

Sippincider Is it really safe to resurrect a name from the era that also gave us Performa and Centris? :)

Although "Quadra" would be better than "M1 Max Pro X Ultimate" or some eye-roller like that.

saijanai Why stop at 4?

While perhaps it's not worth doing right now, such a strategy could allow an arbitrary number of SoCs to be tiled together, forming a 4x or 8x or even 16x uber-SoC, and depending on how forward-looking and versatile the design is, not every "sub" package has to be fully populated with every conceivable type of core. Perhaps there are designs that have mostly RAM, for example, or mostly GPUs, or mostly whatever.

This design principle might be further extended so that Apple could offer customizable options for the uber users that mirror everything that the current Mac Pro ordering form offers, hardware-wise, plus even more (Machine-Learning specialization, anyone?). Perhaps the M1 series of Mac Pros won't offer it, but the M2 or M3 or M4... will.

hotaru.hino

saijanai said: Why stop at 4? [...]

The problem with doing this sort of thing is you start to run into two issues:

1. Unequal latency if data needs to be shared across blocks
2. Infrastructure limits

The first issue is a problem once you start looking at more than 4 addressable units. It becomes impractical very fast if you try to connect all of the nodes to each other. So instead you have to look at other topology options like ring, mesh, or some variation and combination of the two. Since latency is now variable, it can rob whatever performance you were hoping to achieve with multiple chiplets. The second issue is a problem because the more elements you put on the package, the larger the I/O die has to be to support all of it. And then there's the question of what communication topology the I/O die is using.

I recommend reading this: https://www.anandtech.com/show/16930/does-an-amd-chiplet-have-a-core-count-limit
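The topology trade-off described above can be put into numbers with a short Python sketch. It counts die-to-die links and the worst-case number of hops between any two dies for four layouts: all-to-all (full mesh), ring, 2D mesh, and a star in which every die talks only through a central I/O die. These are textbook graph models, not Apple's or AMD's actual fabric, and the function names are illustrative; hop counts come from a breadth-first search.

```python
from collections import deque
from itertools import combinations

# Each topology is a set of undirected links; a link is a frozenset of two node ids.


def full_mesh(n):
    """Every die wired directly to every other die."""
    return {frozenset(pair) for pair in combinations(range(n), 2)}


def ring(n):
    """Each die wired to its two neighbours (n >= 2)."""
    return {frozenset((i, (i + 1) % n)) for i in range(n)}


def mesh_2d(rows, cols):
    """Dies on a rows x cols grid, wired to horizontal and vertical neighbours."""
    links = set()
    for r in range(rows):
        for c in range(cols):
            node = r * cols + c
            if c + 1 < cols:
                links.add(frozenset((node, node + 1)))
            if r + 1 < rows:
                links.add(frozenset((node, node + cols)))
    return links


def star_via_io_die(n):
    """Dies 0..n-1, each wired only to a hypothetical central I/O die (node n)."""
    return {frozenset((i, n)) for i in range(n)}


def max_hops(links, dies):
    """Worst-case shortest-path hop count between any two dies (breadth-first search)."""
    adj = {}
    for link in links:
        a, b = tuple(link)
        adj.setdefault(a, []).append(b)
        adj.setdefault(b, []).append(a)
    worst = 0
    for src in dies:
        dist = {src: 0}
        queue = deque([src])
        while queue:
            u = queue.popleft()
            for v in adj.get(u, []):
                if v not in dist:
                    dist[v] = dist[u] + 1
                    queue.append(v)
        worst = max(worst, max(dist[d] for d in dies))
    return worst


if __name__ == "__main__":
    for n, (rows, cols) in ((4, (2, 2)), (8, (2, 4)), (16, (4, 4))):
        layouts = {
            "full mesh": full_mesh(n),
            "ring": ring(n),
            "2D mesh": mesh_2d(rows, cols),
            "I/O die": star_via_io_die(n),
        }
        for name, links in layouts.items():
            hops = max_hops(links, range(n))
            print(f"{n:2d} dies, {name:9s}: {len(links):3d} links, worst case {hops} hops")
```

For 4 dies a full mesh needs only 6 links, but at 16 dies it needs 120. A ring keeps the count at 16 links, but the worst-case distance grows to 8 hops. The I/O-die star holds every pair of dies to 2 hops, at the price of one I/O-die port per die, which is the "larger I/O die" problem mentioned above.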

elementalRealms 128 GPU units? Could this be the death of dedicated GPU cards?

In theory, such an SoC would outperform an RTX 3080!

velocityg4

Sippincider said: Is it really safe to resurrect a name from the era that also gave us Performa and Centris? :) Although "Quadra" would be better than "M1 Max Pro X Ultimate" or some eye-roller like that.

I had a Centris 650. It was awesome. Computers were insanely expensive then. They make Apple's current prices look downright thrifty. It was a "cheap" $600 motherboard swap from an IIvx.

Yes, there was a time Apple made upgrade-able computers and even let you purchase motherboard upgrades.

I also had two Performas. The Performa 6218CD and Performa 6400/180.

Their naming scheme is getting a bit complicated though.

JamesJones44 This would honestly make a lot of sense. The only two non-Apple Silicon devices at this point are the iMac Pro and Mac Pro. Those two machines can use Intel's multi-socket Xeon systems (depending on the core count), so this would essentially be their replacement. The single-socket replacement likely gets the Duo and the dual-socket one would get the Ultra. The M1 Pro/Max IPC is the same as the M1's, so it would seem to follow what Apple did with the MBP, in that they just scale out the CPU cores and GPU processing units and call it done for gen 1 of Apple Silicon.

cryoburner

elementalRealms said: 128 GPU units? Could this be the death of dedicated GPU cards? In theory, such an SoC would outperform an RTX 3080!

Perhaps it could manage that level of performance, assuming performance were to scale well across multiple chips. But that would also result in a relatively large amount of heat output and power draw within the package, which would likely require lower clocks to keep manageable. A chip like that might not even be practical for laptops.

And it probably wouldn't replace dedicated GPUs, considering you would need a use case for the 40 CPU cores that would come along with it. And of course, this is Apple, so expect the price to performance ratio to be terrible. And at least as far as gaming is concerned, you're probably not going to find many demanding games ported to the processor's ARM architecture for a while, and AAA game ports in general have been few and far between on Apple's platform.

And APUs with integrated higher-end GPUs already exist, like the AMD processors found in the PS5 and Series X. The Series X chip is essentially an 8-core Ryzen Zen 2 CPU combined with graphics hardware that can perform nearly on par with a desktop Radeon 6700 XT, or roughly around the level of a 3060 Ti / 2080 SUPER when compared against Nvidia's desktop cards, at least for rasterized rendering. And that's an inexpensive chip that manages to be part of a device that retails for $500 in its entirety (albeit with some subsidization to manage that price point). So, theoretically, something along those lines could also be said to make dedicated cards "obsolete", and no doubt AMD could make a version with 6900 XT / RTX 3080-level hardware, if they felt there was a market for it.

hotaru.hino

elementalRealms said: 128 GPU units? Could this be the death of dedicated GPU cards? In theory, such an SoC would outperform an RTX 3080!

If we're talking about hooking up a bunch of GPUs together, like some sort of SLI equivalent, unfortunately it doesn't really work that way. The biggest challenge with multi-GPU setups is how to distribute the workload in a way that minimizes latency. One might think it should be easy if CPUs can do it, but the workloads we tend to throw at CPUs are not time-sensitive. That is, it doesn't matter if the CPU takes 1 minute or 1 second to do the job. We'd prefer faster, but it's not that important. GPUs, on the other hand, are given workloads with strict deadlines. We demand at most roughly 16 ms per frame (for 60 fps), and preferably an even time between frames. Anything that adds latency, like having to hop between nodes, starts to put a strain on this.
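The deadline point can be illustrated with frame times. The Python sketch below computes the per-frame budget at a target frame rate and compares two hypothetical runs with the same 16 ms average: one with evenly spaced frames, and one alternating between 8 ms and 24 ms, the kind of uneven pacing often associated with alternate-frame rendering on multi-GPU setups. The frame-time values and function names are made up for illustration; they are not measurements of any real hardware.

```python
import statistics


def frame_budget_ms(target_fps: float) -> float:
    """Time available to render one frame at the given frame rate, in ms."""
    return 1000.0 / target_fps


def pacing_report(frame_times_ms: list[float], target_fps: float = 60.0) -> dict:
    """Summarize a run: average frame time, implied FPS, jitter and missed deadlines."""
    avg = statistics.mean(frame_times_ms)
    budget = frame_budget_ms(target_fps)
    return {
        "avg_ms": round(avg, 2),
        "avg_fps": round(1000.0 / avg, 1),
        # Population standard deviation of frame times: 0 means perfectly even pacing.
        "jitter_ms": round(statistics.pstdev(frame_times_ms), 2),
        # Frames that took longer than the per-frame budget.
        "missed_deadlines": sum(1 for t in frame_times_ms if t > budget),
    }


if __name__ == "__main__":
    # Hypothetical frame times in ms, not measurements.
    even = [16.0] * 8
    uneven = [8.0, 24.0] * 4
    print(f"60 fps budget: {frame_budget_ms(60):.2f} ms")
    print("even:  ", pacing_report(even))
    print("uneven:", pacing_report(uneven))
```

Both runs average 62.5 fps, but the uneven one has 8 ms of jitter and misses the roughly 16.7 ms budget for 60 fps on half of its frames, which is why an average frame rate alone hides the problem.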

elementalRealms

hotaru.hino said: If we're talking about hooking up a bunch of GPUs together, like some sort of SLI equivalent, unfortunately it doesn't really work that way. [...]

This is not hooking GPUs together... nor SLI-like.

hotaru.hino

elementalRealms said: This is not hooking GPUs together... nor SLI-like.

Connecting multiple M1 chips over an interconnect to "combine" their power is very much like any multi-video-card setup. Just because they're on the same package substrate doesn't mean they're suddenly a single cohesive unit.