Part 1: Building A Balanced Gaming PC

Benchmark Results: Crysis

Crysis:

First up: Crysis. Although this first-person shooter was released in November 2007, it is still arguably one of the most graphically demanding games available. We had to settle for less-than-maximum eye candy just to achieve playable frame rates, so our compromise was to test at Very High detail with no AA, rather than drop to High detail and enable AA.

Our usual benchmark tool provides a good combination of graphical eye candy and physics effects. Our typical target has been 40 FPS, but rather than take that figure for granted, we put it to the test in preparation for this series, playing through and benchmarking numerous configurations with FRAPS in three of the game’s most demanding levels.

The 40 FPS target remains our recommendation. Although Crysis is still quite playable below 40 FPS, frame rates in levels like “Paradise Lost” and “Assault” will drop into the mid-20s in places. We feel 40 FPS is a safe bet for acceptable performance, although stuttering during the game’s closing battle in “Reckoning” could still require settings to be turned down a bit.
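An average that clears 40 FPS can still hide individual frames that dip into the 20s, which is why the target is set with some headroom. The following is a minimal Python sketch, assuming a list of cumulative frame timestamps in milliseconds (similar in spirit to a FRAPS frametimes log, though no actual FRAPS file format is parsed here); the function names `fps_summary` and `meets_target` are hypothetical.

```python
def fps_summary(timestamps_ms):
    """Summarize a run from cumulative frame timestamps in milliseconds.

    Returns (average_fps, worst_frame_fps), where worst_frame_fps is the
    instantaneous rate implied by the single slowest frame.
    """
    if len(timestamps_ms) < 2:
        raise ValueError("need at least two timestamps")
    # Duration of each frame = gap between consecutive timestamps.
    frame_times = [later - earlier
                   for earlier, later in zip(timestamps_ms, timestamps_ms[1:])]
    elapsed_s = (timestamps_ms[-1] - timestamps_ms[0]) / 1000.0
    if elapsed_s <= 0:
        raise ValueError("timestamps must increase over the run")
    average_fps = len(frame_times) / elapsed_s
    # The slowest frame is where stutter shows up, even if the average looks fine.
    worst_frame_fps = 1000.0 / max(frame_times)
    return average_fps, worst_frame_fps


def meets_target(timestamps_ms, target_fps=40.0):
    """True if the run's average frame rate reaches the target (40 FPS by default)."""
    average_fps, _ = fps_summary(timestamps_ms)
    return average_fps >= target_fps
```

With a synthetic trace of 0, 20, 40 and 80 ms, the average works out to 37.5 FPS while the slowest frame corresponds to 25 FPS, illustrating how a respectable average can coexist with noticeable dips.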

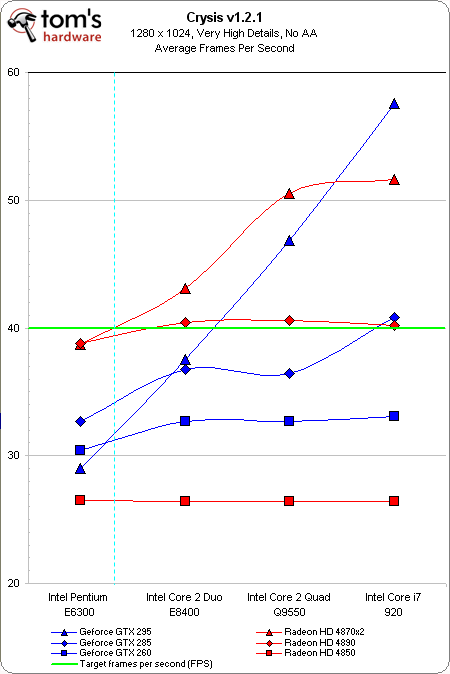

The relatively flat lines for the Radeon HD 4850 and GeForce GTX 260 show that, even at our lowest resolution, these two graphics cards fall well below our target. While GeForce GTX 260 owners may find performance at stock clocks acceptable throughout most of the game, we found that frame rates in the most demanding levels at times sat in the teens, which is a little too low for our liking.

Contrast these two flat, GPU-limited lines with the steep slope of the GeForce GTX 295, which is extremely CPU-limited and doesn’t claim its place at the top until we pair it with the fastest CPU. Whether due to a driver or hardware architecture advantage, it’s hard not to notice that ATI’s Radeons have an edge here when paired with dual-core CPUs.
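The flat-versus-steep reading of these charts can be expressed as a simple heuristic: if swapping in faster CPUs barely changes a card’s frame rate, the card is the bottleneck. This is a minimal sketch under stated assumptions; `classify_scaling` is a hypothetical name, and the 10% `flat_threshold` is an arbitrary illustrative cutoff, not a figure used in this article.

```python
def classify_scaling(fps_by_cpu, flat_threshold=0.10):
    """Label one graphics card's results across several CPUs.

    fps_by_cpu: average FPS for the same card with each tested CPU.
    flat_threshold: hypothetical cutoff; a spread at or below 10% of the
    lowest result is treated as a "flat" line.
    """
    if len(fps_by_cpu) < 2:
        raise ValueError("need results from at least two CPUs")
    lowest, highest = min(fps_by_cpu), max(fps_by_cpu)
    if lowest <= 0:
        raise ValueError("FPS values must be positive")
    spread = (highest - lowest) / lowest
    # A flat line means a faster CPU barely helps, so the GPU is the bottleneck;
    # a steep line means the CPU is holding the card back.
    return "likely CPU-limited" if spread > flat_threshold else "likely GPU-limited"
```

Using the spread between the lowest and highest results, rather than just the first and last CPU, keeps the heuristic from being thrown off when a card loses a little performance on one platform, as the Radeons do on Core i7.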

The Pentium E6300 failed to reach our target with any of the graphics cards, but given enough CPU power, four of our graphics cards delivered playable performance. The Radeon HD 4890/E8400 combination broke 40 FPS, and stepping up to a quad-core processor doesn’t appear to add any performance. So, in this case, the cheapest solution to reach the target is also the best-balanced one.
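The “best balance” rule used here amounts to picking the cheapest CPU/GPU pairing whose result clears the target. A minimal Python sketch, assuming you supply your own measured FPS and current prices; the `Combo` type and `cheapest_meeting_target` function are hypothetical names, and no prices from this article are implied.

```python
from dataclasses import dataclass


@dataclass
class Combo:
    cpu: str
    gpu: str
    fps: float    # measured average FPS for this pairing
    price: float  # combined CPU + GPU cost, supplied by the user


def cheapest_meeting_target(combos, target_fps=40.0):
    """Return the lowest-priced combo whose FPS meets the target, or None."""
    # Keep only pairings that actually reach the target frame rate.
    passing = [c for c in combos if c.fps >= target_fps]
    # Among those, the cheapest one is the "best balance" by this rule.
    return min(passing, key=lambda c: c.price, default=None)
```

Note that a result just under the line, such as 39.8 FPS, does not qualify; the rule treats the target as a hard threshold.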


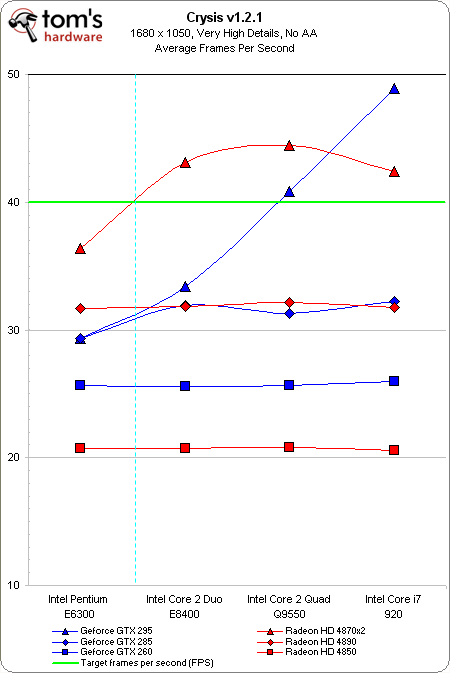

Talk about a graphically intensive game. Simply raising the resolution to 1680x1050 drops all of the single-GPU solutions well below the target line. While the GeForce GTX 295 eventually provides the highest performance, it can’t reach our target when paired with the dual-core CPUs. Here, it’s the Radeon HD 4870 X2 that reaches our target with the three top CPUs, representing the cheapest and best-balanced solution at this resolution.

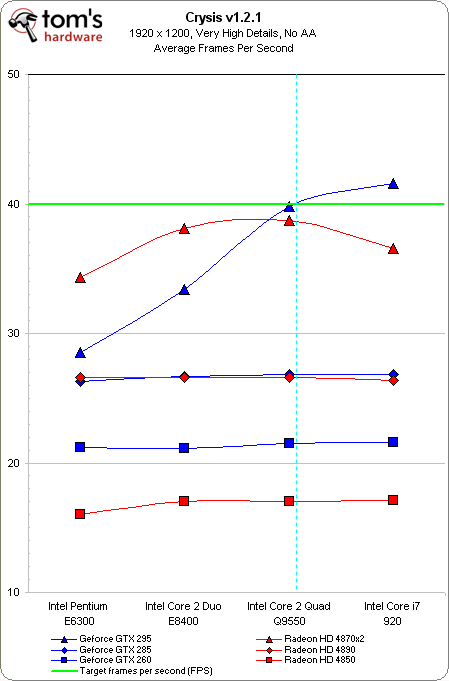

Once we raise the resolution to 1920x1200, only the most expensive platform manages to exceed 40 FPS. The mighty GeForce GTX 295 stands alone, but once again needs a quad-core CPU to shine. Pairing Nvidia’s powerful card with a Core 2 Quad Q9550 delivers 39.8 FPS, just shy of the target, and that number increases to 41.6 FPS with the Core i7-920. Interestingly, ATI’s lineup actually loses performance as we shift to the Core i7-based platform.

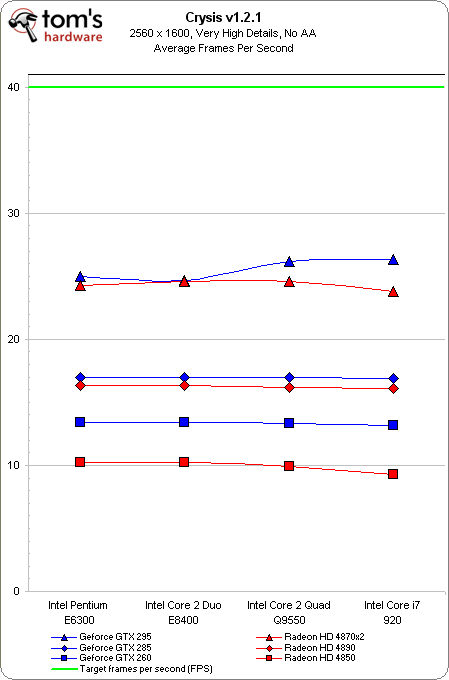

These six relatively flat horizontal lines show that it’s going to take a lot more GPU muscle for 30” LCD owners to play Crysis at their native resolution without lowering detail levels. Oddly, for the third straight 16:10 resolution, the Radeon HD 4870 X2 lost a little performance on Intel’s Core i7 platform.


yoy0yo: Wow, this is an amazingly in-depth review! I kinda feel that it’s sponsored by Asus or Corsair, but I guess you kept with the same brand for the sake of controls, etc.

Thank you!

inmytaxi: Very helpful stuff.

I’d like to see some discussion on the availability of sub-$400 (at times as low as $280) 28" monitors. At this price range, does it make more sense to spend more on the LCD even if less is spent initially on graphics? I would think the benefit of 28" vs. 22" is so great that the extra money could be taken from, say, a 9550 + 4890 combo, getting an 8400/6300 + 4850 instead; with the right motherboard, a second 4850 added later will pass a 4890 anyway.

frozenlead: I like the balance charts. It’s a good way to characterize the data. This article is well constructed and well thought-out.

That being said, is there a way we can compile this data and compute an "optimized" system for the given hardware available? Finding the true, calculated sweet spot for performance/$ would be so nice to have on hand every quarter or twice a year. I’ll have to think about this one for a while. There may be some concessions to make, and it might not even work out. But it would be so cool.

Neggers: I feel like the person who did this review got it finished a little bit late. I can only assume he did all the testing some months back and has only just finished writing up his results. But it’s sad not to see the new P55/i5 systems, AMD Athlon II quad-cores, or the Radeon 5000 series.

Good review, but hopefully it can be updated soon with some of the newer equipment that’s out, to turn it into a fantastic guide for people.

brockh: Great job, this is the information people need to be seeing; the way people provide benchmarks these days hardly tells the story to most readers. It’s definitely important to point out the disparities in one’s CPU choice, rather than just assuming everyone uses the i7 that all the sites choose. ;)

Looking forward to part 2.

Onyx2291: This will take up some of my time. Even though I know how, it’s nice to get a refresher every now and then.

mohsh86: You are really kidding me by not considering the ATI 5000 series. Although I am a fan of Nvidia, this is not fair!