Don't Believe the Hype: Apple's M2 GPU is No Game Changer

Still good for integrated graphics

Apple has revealed most of the major details for its new M2 processor. The reveal was full of the usual Apple hyperbole, including comparisons with PC hardware that failed to disclose exactly what was being tested. Still, the M1 has been a good chip, especially for MacBook laptops, and the M2 looks to improve on the design and take it to the next level. Except Apple has to play by the same rules as all the other chip designers ... and it can't work miracles.

The M1 was the first 5nm-class processor to hit the market back in 2020. Two years later, TSMC's next-generation 3nm technology isn't quite ready, so Apple has to make do with an optimized N5P node, a "second-generation 5nm process." That means transistor density hasn't really changed much, which means Apple has to use larger chips to get more transistors and performance. The M1 had 16 billion transistors, and the M2 will bump that up to 20 billion.

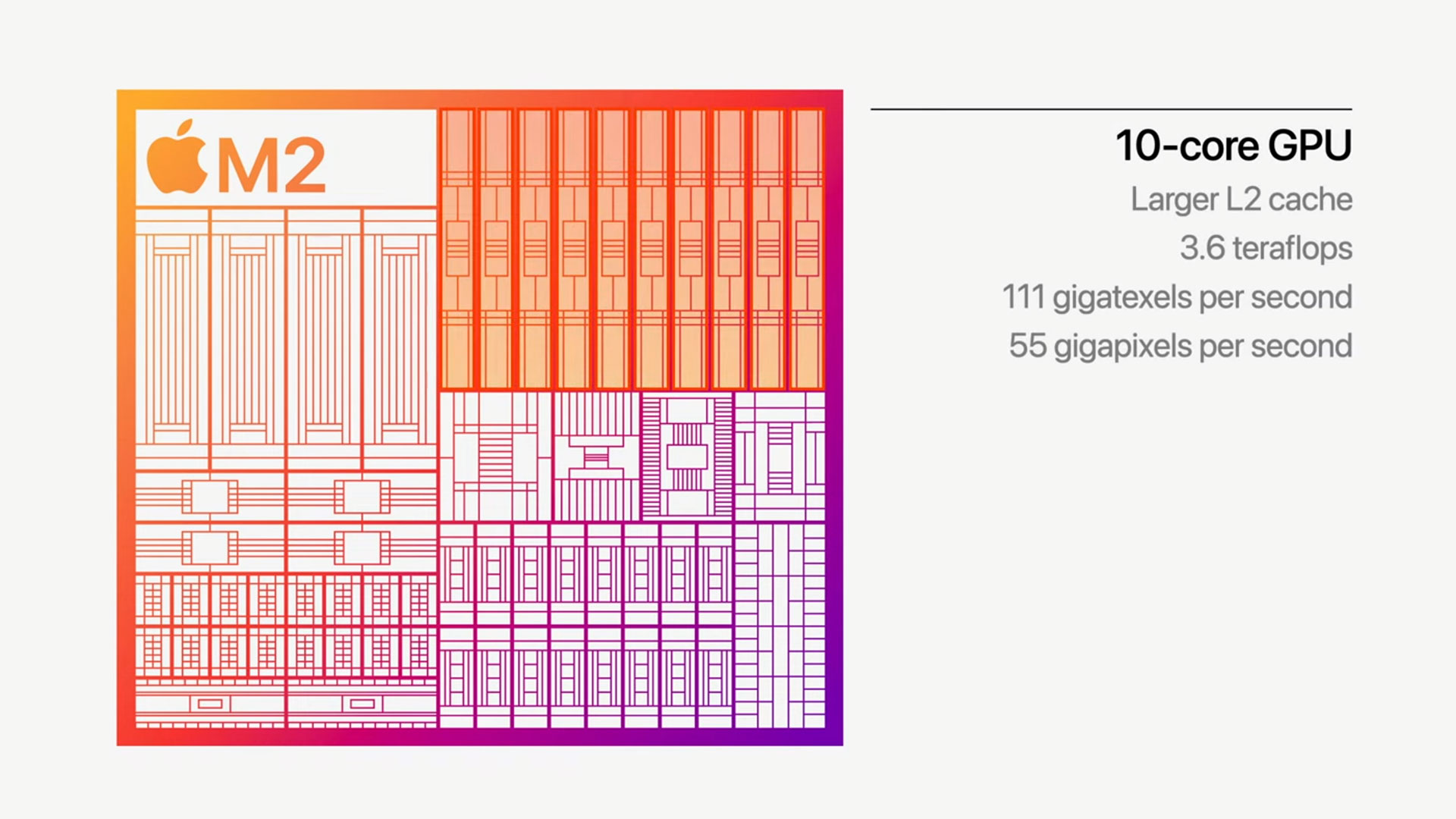

Overall, Apple claims CPU performance will be up to 18% faster than its previous M1 chip, and the GPU will be 35% faster — note that Apple's not including the M1 Pro, M1 Max, or M1 Ultra in this discussion. Still, I'm more interested in the GPU capabilities, and frankly, they're underwhelming.

Yes, the M2 will have fast graphics for an integrated solution, but what exactly does that mean, and how does it compare with the best graphics cards? Without hardware in hand for testing, we can't say exactly how it will perform, but we do have some reasonable comparisons that we can make.

Let's start with the raw performance figures. Not all teraflops are created equal, as architectural design decisions certainly come into play, but we can still get some reasonable estimates by looking at what we do know.

As an example, Nvidia has a theoretical 9.0 teraflops of single precision performance on its RTX 3050 GPU, while AMD's RX 6600 has a theoretical 8.9 teraflops. On paper, the two GPUs appear relatively equal, and they even have similar memory bandwidth — 224 GB/s for both cards, courtesy of a 128-bit memory interface with 14Gbps GDDR6. In our GPU benchmarks hierarchy, however, the RX 6600 is 30% faster at 1080p and 22% faster at 1440p. (Note that the RTX 3050 is about 15% faster in our ray tracing test suite.)
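The 224 GB/s figure follows directly from the bus width and per-pin data rate. Here's a minimal sketch of that arithmetic; the function name is ours, purely for illustration.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s.

    Each pin on the bus moves `data_rate_gbps` gigabits per second,
    so multiply by the bus width and divide by 8 bits per byte.
    """
    return bus_width_bits * data_rate_gbps / 8


# 128-bit interface with 14Gbps GDDR6, as on the RTX 3050 and RX 6600
gddr6_bandwidth = memory_bandwidth_gbs(128, 14.0)
print(f"{gddr6_bandwidth:.1f} GB/s")
```

This is a theoretical peak only, and says nothing about how effectively each architecture uses that bandwidth.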

In terms of how teraflops translate into real-world performance, Apple's GPUs look similar to AMD's. The M1, for example, was rated at a theoretical 2.6 teraflops and had 68 GB/s of bandwidth. That's about half the teraflops and one third the bandwidth of AMD's RX 5500 XT, and in graphics benchmarks the M1 typically runs about half as fast. We don't anticipate any massive architectural updates to the M2 GPU, so it should behave relatively similarly to AMD's RDNA 2 GPUs.

Neither AMD nor Apple has Nvidia's dual FP32 pipelines (with one also handling INT32 calculations), and AMD has Infinity Cache that should at least be similar in practice to Apple's "larger L2 cache" claims. That means we can focus on the teraflops and bandwidth and get at least a ballpark estimate of performance (give or take 15%).

The M2 GPU is rated at just 3.6 teraflops. That's less than half as fast as the RX 6600 and RTX 3050, and also lands below AMD's much maligned RX 6500 XT (5.8 teraflops and 144 GB/s of bandwidth). It's not the end of the world for gaming, but we don't expect the M2 GPU to power through 1080p at maxed out settings and 60 fps.

Granted, Apple is doing integrated graphics, and 3.6 teraflops is pretty decent as far as integrated solutions go. The closest comparison would be AMD's Ryzen 7 6800U with RDNA 2 graphics. That processor has 12 compute units (CUs) and clocks at up to 2.2 GHz, giving it 3.4 teraflops. It also uses shared DDR5 memory on a dual-channel 128-bit bus, so LPDDR5-6400 like that in the Asus Zenbook S 13 OLED will provide 102.4 GB/s of bandwidth.
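For reference, here's a rough sketch of where the 3.4 teraflops and 102.4 GB/s numbers come from. The 64 shaders per CU and two FP32 operations per clock (one fused multiply-add) are standard RDNA 2 figures that aren't spelled out above, and the function names are just illustrative.

```python
SHADERS_PER_CU = 64        # RDNA 2 stream processors per compute unit
FLOPS_PER_SHADER_CLOCK = 2 # one fused multiply-add counts as two FP32 ops


def theoretical_tflops(compute_units: int, clock_ghz: float) -> float:
    """Theoretical single-precision throughput in teraflops."""
    gflops = compute_units * SHADERS_PER_CU * FLOPS_PER_SHADER_CLOCK * clock_ghz
    return gflops / 1000


def shared_memory_bandwidth_gbs(bus_width_bits: int, transfers_mts: int) -> float:
    """Peak bandwidth in GB/s from bus width and transfer rate in MT/s."""
    return bus_width_bits / 8 * transfers_mts / 1000


# Ryzen 7 6800U: 12 CUs at up to 2.2 GHz, LPDDR5-6400 on a 128-bit bus
igpu_tflops = theoretical_tflops(12, 2.2)
igpu_bandwidth = shared_memory_bandwidth_gbs(128, 6400)
print(f"{igpu_tflops:.1f} teraflops, {igpu_bandwidth:.1f} GB/s")
```

Keep in mind the GPU shares that bandwidth with the CPU cores, so the usable figure for graphics will be lower.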

And that's basically the level of performance we expect from Apple's M2 GPU, again, give or take. It's much faster than Intel's existing integrated graphics solutions, and totally blows away the 8th Gen Intel Core GPUs used in the last Intel-based MacBooks. But it's not going to be an awesome gaming solution. We're aiming more for adequate.

One other interesting item of note is that Apple makes no mention of AV1 encode/decode support. AV1 is backed by some major companies, including Amazon, Google, Intel, Microsoft, and Netflix. So far, Intel is the only PC graphics company with AV1 encoding support, while AMD and Nvidia support AV1 decoding on their latest RDNA 2 (except Navi 24) and Ampere GPUs.

Apple also detailed its upcoming MetalFX Upscaling algorithm, which makes perfect sense to include. Apple uses high-resolution Retina displays on all of its products, and there's no way a 3.6 teraflops GPU with 100 GB/s of bandwidth will be able to handle native 2560 x 1664 gaming without some help. Assuming Apple gets similar scaling to FSR 2.0 or DLSS 2.x, the M2 GPU could use a "Quality" mode and render 1706 x 1109, upscaled to the native 2560 x 1664, and most people wouldn't really notice the difference. That's less than 1920 x 1080, and certainly the M2 should be able to handle that well enough.
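The render resolution math is easy to check. This sketch assumes MetalFX would use the same 1.5x per-axis scale factor that FSR 2.0 uses for its Quality mode, which Apple hasn't confirmed; the helper name is hypothetical.

```python
def render_resolution(native_w: int, native_h: int, scale: float = 1.5) -> tuple[int, int]:
    """Internal render resolution for a given per-axis upscale factor.

    scale=1.5 mirrors FSR 2.0's Quality mode; applying it to MetalFX
    is an assumption, not something Apple has stated.
    """
    return int(native_w / scale), int(native_h / scale)


native = (2560, 1664)
render = render_resolution(*native)

render_pixels = render[0] * render[1]
native_pixels = native[0] * native[1]
full_hd_pixels = 1920 * 1080

print(f"Render target: {render[0]} x {render[1]}")
print(f"Share of native pixels: {render_pixels / native_pixels:.0%}")
print(f"Relative to 1920 x 1080: {render_pixels / full_hd_pixels:.0%}")
```

In other words, the GPU would shade fewer than half the pixels of the native panel, and slightly fewer than a standard 1080p frame.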

Let's also not forget that this is only the base model M2 announced so far. It's being used in the MacBook Air and MacBook Pro 13, just like the previous M1 variants, but there's a good chance Apple will also be making more capable M2 solutions. The M1 Pro had up to 16 GPU cores compared to the base M1's 8 cores. Doubling down on GPU core counts and bandwidth should boost performance into the 7.2 teraflops range — roughly equivalent to an RX 6600 or RTX 3050 in theory. Doubling that again for an M2 Max with 40 GPU cores and 14.4 teraflops would put Apple in the same realm as the RX 6750 XT or even the RX 6800.
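Those projections are simple linear scaling from the base chip. A quick sketch, assuming the M2's 3.6 teraflops comes from its 10-core GPU configuration and that clocks stay the same on larger parts (both the hypothetical chips and the function are ours, not Apple's):

```python
BASE_GPU_CORES = 10   # base M2 GPU configuration
BASE_TFLOPS = 3.6     # Apple's rating for the base M2 GPU


def scaled_tflops(gpu_cores: int) -> float:
    """Project teraflops by scaling core count at unchanged clocks."""
    return BASE_TFLOPS * gpu_cores / BASE_GPU_CORES


# Hypothetical doubled and quadrupled configurations
for cores in (10, 20, 40):
    print(f"{cores} GPU cores: {scaled_tflops(cores):.1f} teraflops")
```

Real chips rarely scale perfectly, since bandwidth and power limits also come into play, so treat these as upper bounds.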

For an integrated graphics solution running within a 65W power envelope, that would be very impressive. We still need to see the chips in action before drawing any final conclusions, however, and it's a safe bet that dedicated graphics solutions will continue to offer substantially more performance.

Bottom Line

Apple's silicon continues to make inroads against the established players in the CPU and GPU realms, but keep in mind that targeting efficiency first usually means lower performance. Dedicated AMD and Nvidia GPUs might use 300W or more on desktops, but the same chips can go into a laptop and use just 100W while still delivering 70–80% of the performance of their desktop equivalents.

Without hardware in hand and real-world testing, we don't know precisely how fast Apple's M2 GPU will be. However, even Apple only claims 35% more performance than the M1 GPU, which means the M2 will be quite a bit slower than the M1 Pro, never mind the M1 Max or M1 Ultra. And that's fine, as it's going into laptops that are more about all-day battery life than playing the latest games.

A reasonably performant integrated GPU paired with MetalFX Upscaling also holds promise, and game developers going after the Apple market will certainly want to look into using upscaling. That should deliver at least playable performance at the native display resolution (after upscaling), which is a good starting point. We're also interested in seeing how the passively cooled MacBook Air holds up under a sustained gaming workload.

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

Heat_Fan89 Apple are the Kings of Marketing. They could sell you a 1987 Yugo and convince you that it's really a 2022 Acura NSX. I raised an eyebrow when the Capcom Rep went off on how the M2 running Resident Evil Village is right up there with both the PS5 and XBOX Series X.

gggplaya

Heat_Fan89 said: Apple are the Kings of Marketing. They could sell you a 1987 Yugo and convince you that it's really a 2022 Acura NSX. I raised an eyebrow when the Capcom Rep went off on how the M2 running Resident Evil Village is right up there with both the PS5 and XBOX Series X.

As far are ARM mobile design goes, Apple is King. That's not hype, they legit have the most superior ARM designs. However, their marketing people are just as evil as Intel's. Trying to steal away x86 marketshare by dirty tactics.

They should stick to the M1 and M2 merits that are legit good, such as amazing battery life in a laptop, especially when doing content creation compared to x86 counterparts.

PiranhaTech Definitely have to be careful. The M1 charts were weird, and Apple is using an SoC, using it well, but that also makes comparisons harder.

hypemanHD Not every chip is meant to be a game changer. Apple always keeps their line up to date. These machines will work wonderfully for those whose needs they fit. Comparing graphics performance of M2 to dedicated graphics cards is completely unfair and unnecessary and just a tactic to stir up industry standard Apple criticism. The fact is these chips have put a lot of power into the hands of hobbyists/amateurs/students who are exploring new skills who may upgrade as they go deeper. That's who these chips are for, the average consumer, that's why they are going into the lowest level laptops Apple has available. Also note that at the top of the article it says that they won't be comparing the M2 to the M1Max or Pro and later on they do just that. I use excellent PCs at work that blow my Mac out of the water. I still like my Mac for what I use it for. You wouldn't compare a crap PC to an excellent PC. The difference is Apple doesn't make crappy Macs. Apple continues to make excellent, reliable, long term machines for consumers and pros alike. The M2 continues to keep these machines updated. Also keep in mind the niche that Apple Silicon is carving out for itself is the highest processing power at the lowest possible power consumption, and they're doing it well.

JarredWaltonGPU

hypemanHD said: Not every chip is meant to be a game changer. Apple always keeps their line up to date. These machines will work wonderfully for those whose needs they fit. Comparing graphics performance of M2 to dedicated graphics cards is completely unfair and unnecessary and just a tactic to stir up industry standard Apple criticism. The fact is these chips have put a lot of power into the hands of hobbyists/amateurs/students who are exploring new skills who may upgrade as they go deeper. That's who these chips are for, the average consumer, that's why they are going into the lowest level laptops Apple has available. Also note that at the top of the article it says that they won't be comparing the M2 to the M1Max or Pro and later on they do just that. I use excellent PCs at work that blow my Mac out of the water. I still like my Mac for what I use it for. You wouldn't compare a crap PC to an excellent PC. The difference is Apple doesn't make crappy Macs. Apple continues to make excellent, reliable, long term machines for consumers and pros alike. The M2 continues to keep these machines updated. Also keep in mind the niche that Apple Silicon is carving out for itself is the highest processing power at the lowest possible power consumption, and they're doing it well.

I never said I wouldn't compare the M2 with M1 Pro/Max/Ultra, just that Apple didn't make any such comparisons. For obvious reasons, since the M1 Pro/Max are substantially more potent than the base M1, though we can expect Apple will eventually create higher tier M2 products as well. I also compared the M2 GPU to AMD's Ryzen 7 6800U, which is also a 15W chip. But you're probably not here to reason with. LOL

hotaru.hino From what slides that Apple has been pushing out, along with other people's take on it, I think Apple isn't so much focused on absolute performance. They're focused on higher efficiency. Sure an M2 cannot get the same performance as whatever 12 10-core Alder Lake Intel part they used, but they claim for some relative performance metric (it looks like they capped it out at 120), it uses 25% the power of the part they're comparing to. And the chart shows the part they're comparing to can get another 20 points, but it adds another ~60% power. Granted while we won't really know what tests they've ran, I've seen similar behavior on my computer simply by not allowing the parts to boost as fast as they can.

Although I feel like that's what they were pushing with the M1, and they just happened to figure out how to fudge the numbers to pretend they also have some absolute performance crown.

EDIT: I'm trying to find some review that did a power consumption test with the Samsung Galaxy Book 2 360 laptop they used. While Intel's spec sheet says the i7-1255U's maximum turbo boost is 55W, it certainly won't stay there. Unfortunately I can't find anything.

aalkjsdflkj

gggplaya said: They should stick to the M1 and M2 merits that are legit good, such as amazing battery life in a laptop, especially when doing content creation compared to x86 counterparts.

Excellent point. Each of the new Mac SoCs are great for the market they're targeting. They legitimately do some things, particularly efficiency, better than anyone else right now. I don't understand why they try to deceive consumers with ridiculous claims like claiming the M1 ultra was faster than a 3090. While that was arguably the most egregious false or misleading claim last time around, there are plenty of others. I haven't even bothered to look at the M2 claims because I know people like the TH folks will provide comprehensive reviews.

gggplaya

aalkjsdflkj said: Excellent point. Each of the new Mac SoCs are great for the market they're targeting. They legitimately do some things, particularly efficiency, better than anyone else right now. I don't understand why they try to deceive consumers with ridiculous claims like claiming the M1 ultra was faster than a 3090. While that was arguably the most egregious false or misleading claim last time around, there are plenty of others. I haven't even bothered to look at the M2 claims because I know people like the TH folks will provide comprehensive reviews.

Yes, efficiency under heavy load is where the ARM chips create the biggest performance gap compared to their x86 rivals.

I wonder how long the M2 will last while gaming on battery power? Tomshardware and other reviewers should test that scenario. People commute on trains and planes all the time, it would be nice to see if it'll last their entire commute. My laptops are lucky to last an hour while gaming with battery power, and they're heavily throttled.

hotaru251

hypemanHD said: You wouldn't compare a crap PC to an excellent PC. The difference is Apple doesn't make crappy Macs.

....prior to M1 pretty much all were crappy.

over priced for what performance you got (especially their trash can & cheese grater)

&

thermal throttled all time (cause they refuse to let ppl control fan speed properly ala the apple "my way or the highway" mentality.)

and yes we do compare crappy pc's to good pc's. (so you know whats better price to performance gain/loss or their improvements over last gen)

Apple arguably does "performance graphs" worse than Intel. (who has a habit of making bad graphs) as they refuse to say what they are compared to. "random core alternative" gives u nothing as it could be anything, running any configuration, possibly stock stats, etc etc.)

hotaru.hino

gggplaya said: I wonder how long the M2 will last while gaming on battery power? Tomshardware and other reviewers should test that scenario. People commute on trains and planes all the time, it would be nice to see if it'll last their entire commute. My laptops are lucky to last an hour while gaming with battery power, and they're heavily throttled.

While it's not a gaming load, I ran across a YouTube video that did a review of the M1 MacBook Pro after 7 months and mentioned that they were able to edit (presumably 4K) videos for 5 hours compared to about 1-1.5 on the 2019 Intel MacBook Pro.

hotaru251 said: "random core alternative" gives u nothing as it could be anything, running any configuration, possibly stock stats, etc etc.)

In the press release, there's a few footnotes:

Testing conducted by Apple in May 2022 using preproduction 13-inch MacBook Pro systems with Apple M2, 8-core CPU, 10-core GPU, and 16GB of RAM; and production 13-inch MacBook Pro systems with Apple M1, 8-core CPU, 8-core GPU, and 16GB of RAM. Performance measured using select industry‑standard benchmarks. Performance tests are conducted using specific computer systems and reflect the approximate performance of MacBook Pro.

The problem is they don't tell us what the "industry-standard benchmarks" are.

Testing conducted by Apple in May 2022 using preproduction 13-inch MacBook Pro systems with Apple M2, 8-core CPU, 10-core GPU, and 16GB of RAM. Performance measured using select industry‑standard benchmarks. 10-core PC laptop chip performance data from testing Samsung Galaxy Book2 360 (NP730QED-KA1US) with Core i7-1255U and 16GB of RAM. Performance tests are conducted using specific computer systems and reflect the approximate performance of MacBook Pro.

Testing conducted by Apple in May 2022 using preproduction 13-inch MacBook Pro systems with Apple M2, 8-core CPU, 10-core GPU, and 16GB of RAM. Performance measured using select industry‑standard benchmarks. 12-core PC laptop chip performance data from testing MSI Prestige 14Evo (A12M-011) with Core i7-1260P and 16GB of RAM. Performance tests are conducted using specific computer systems and reflect the approximate performance of MacBook Pro.