Modern GPUs aren't just about gaming. They're used for video encoding, professional applications, and increasingly they're being used for AI. We've revamped our professional and AI test suite to give a more detailed look at the various GPUs. We'll start with the AI benchmarks, as they're more important for a lot of users.

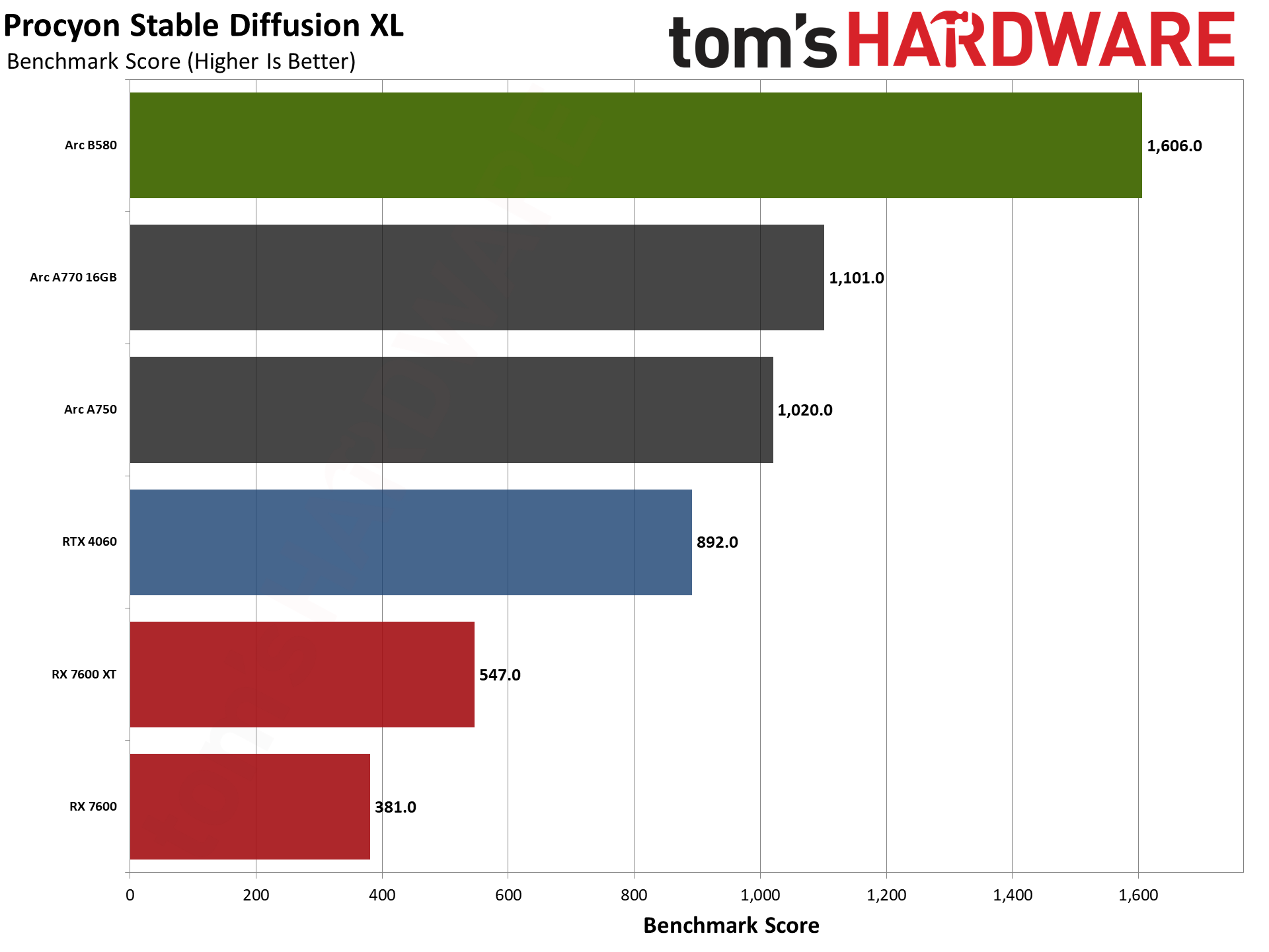

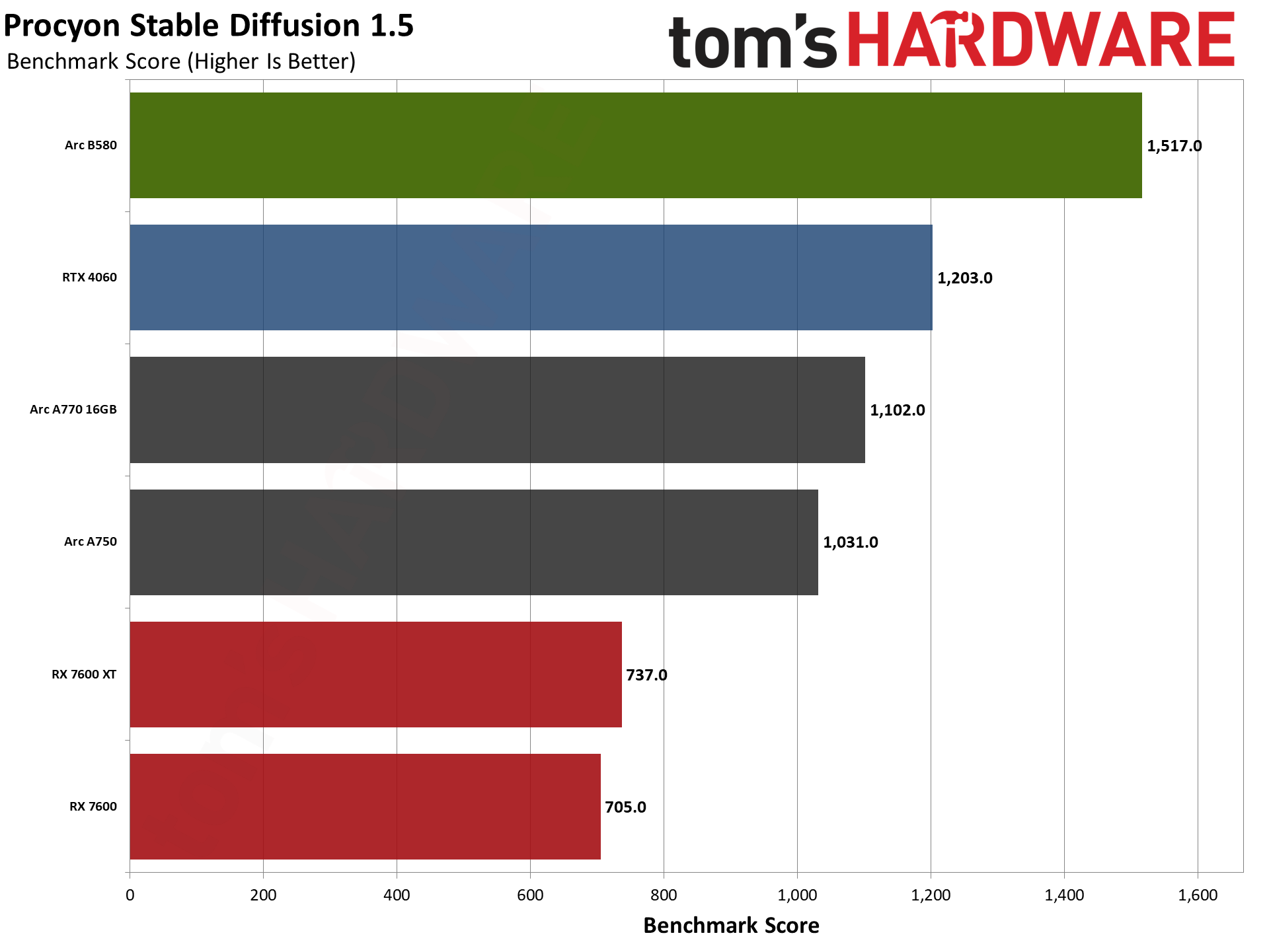

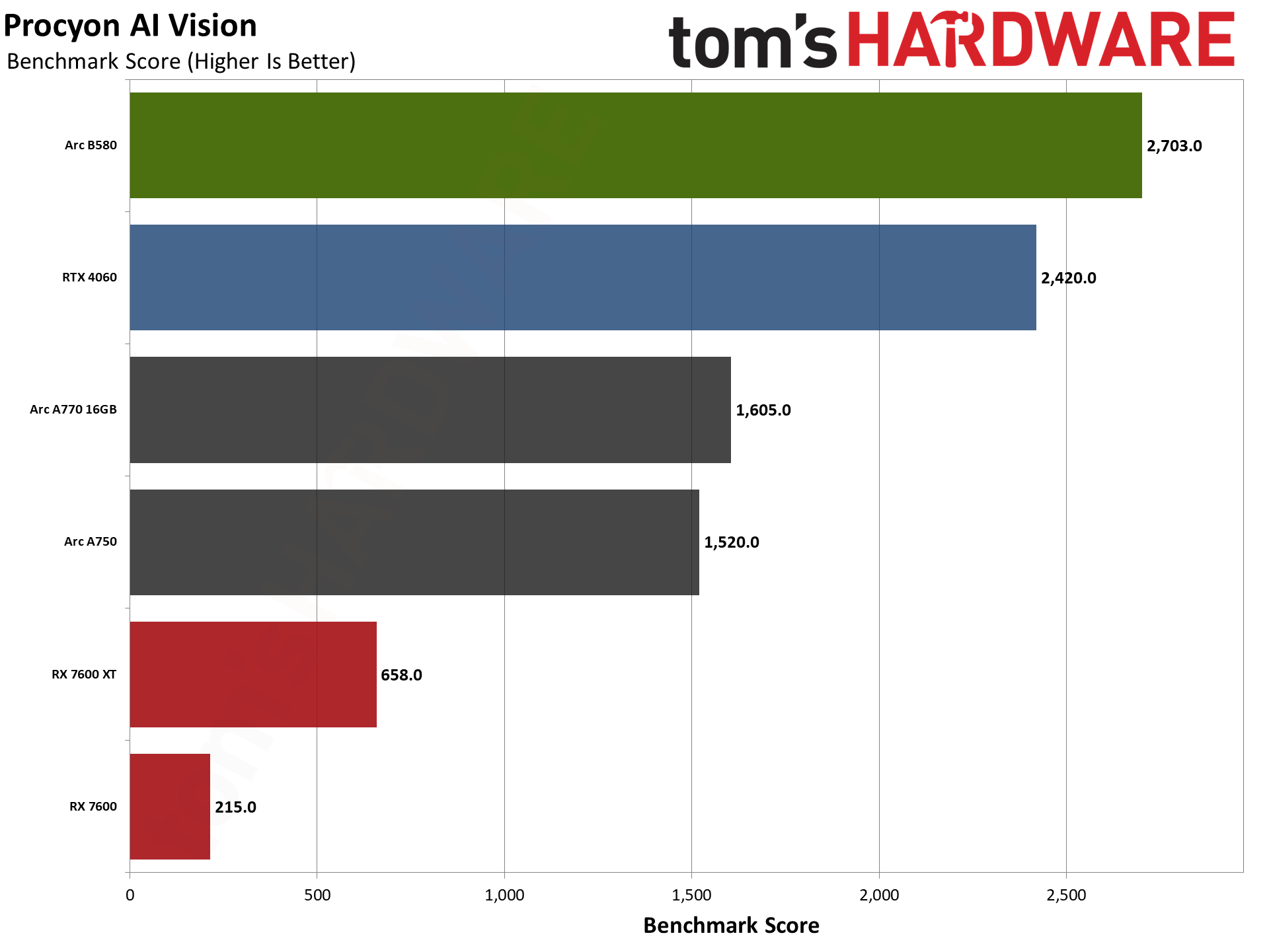

Procyon has several different AI tests, and for now we've run the AI Vision benchmark along with two different Stable Diffusion image generation tests. The tests have several variants available that are all determined to be roughly equivalent by UL: OpenVINO (Intel), TensorRT (Nvidia), and DirectML (for everything, but mostly AMD). There are also options for FP32, FP16, and INT8 data types, which can give different results. We tested the available options and used the best result for each GPU.

Arc B580 does really well in the Stable Diffusion tests, and while Nvidia closes the gap in the AI Vision suite, B580 also wins there. How applicable these results are in the real world remains debatable, as software and driver optimizations can yield massive performance improvements in many AI workloads. YMMV, in other words.

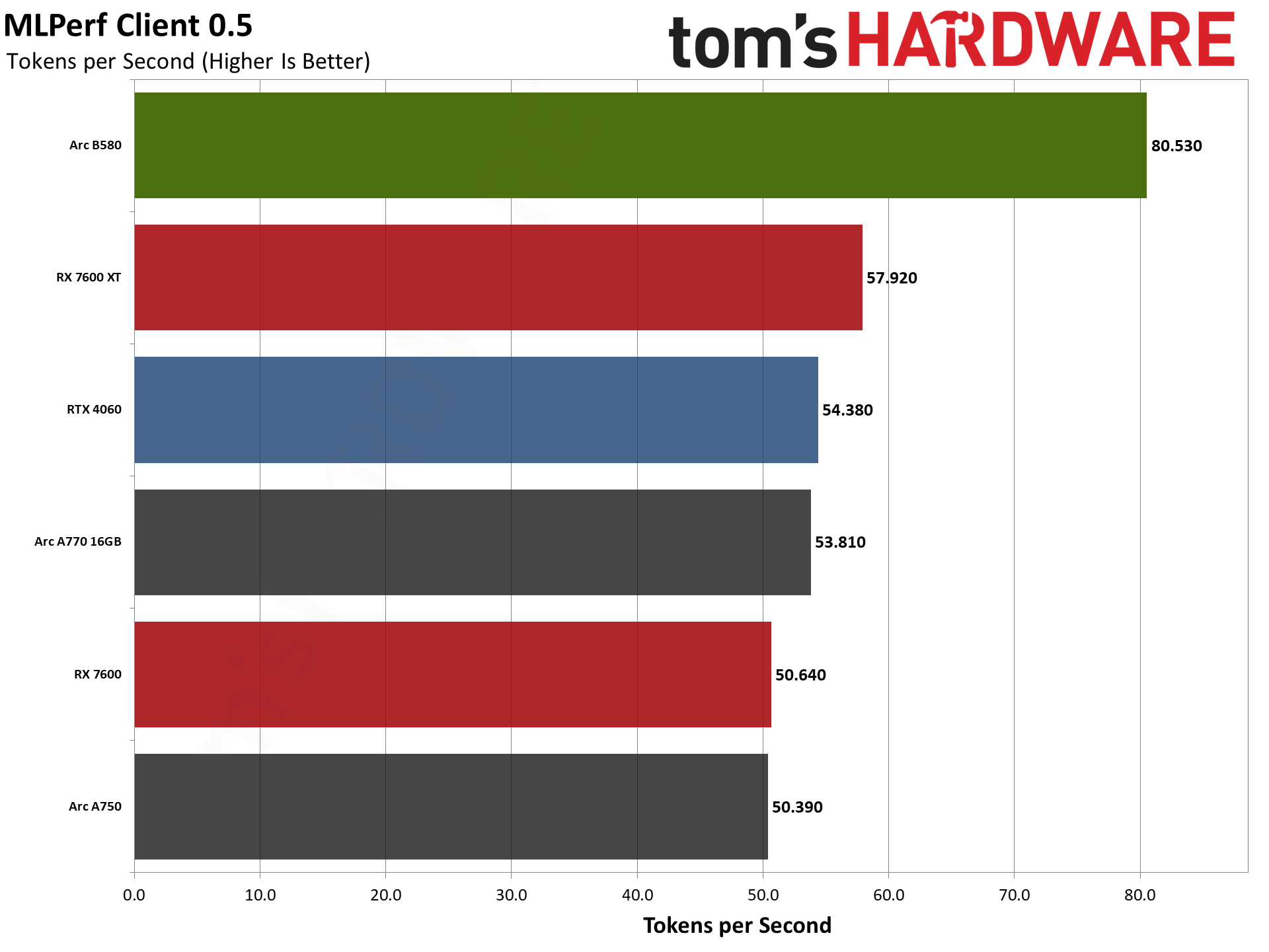

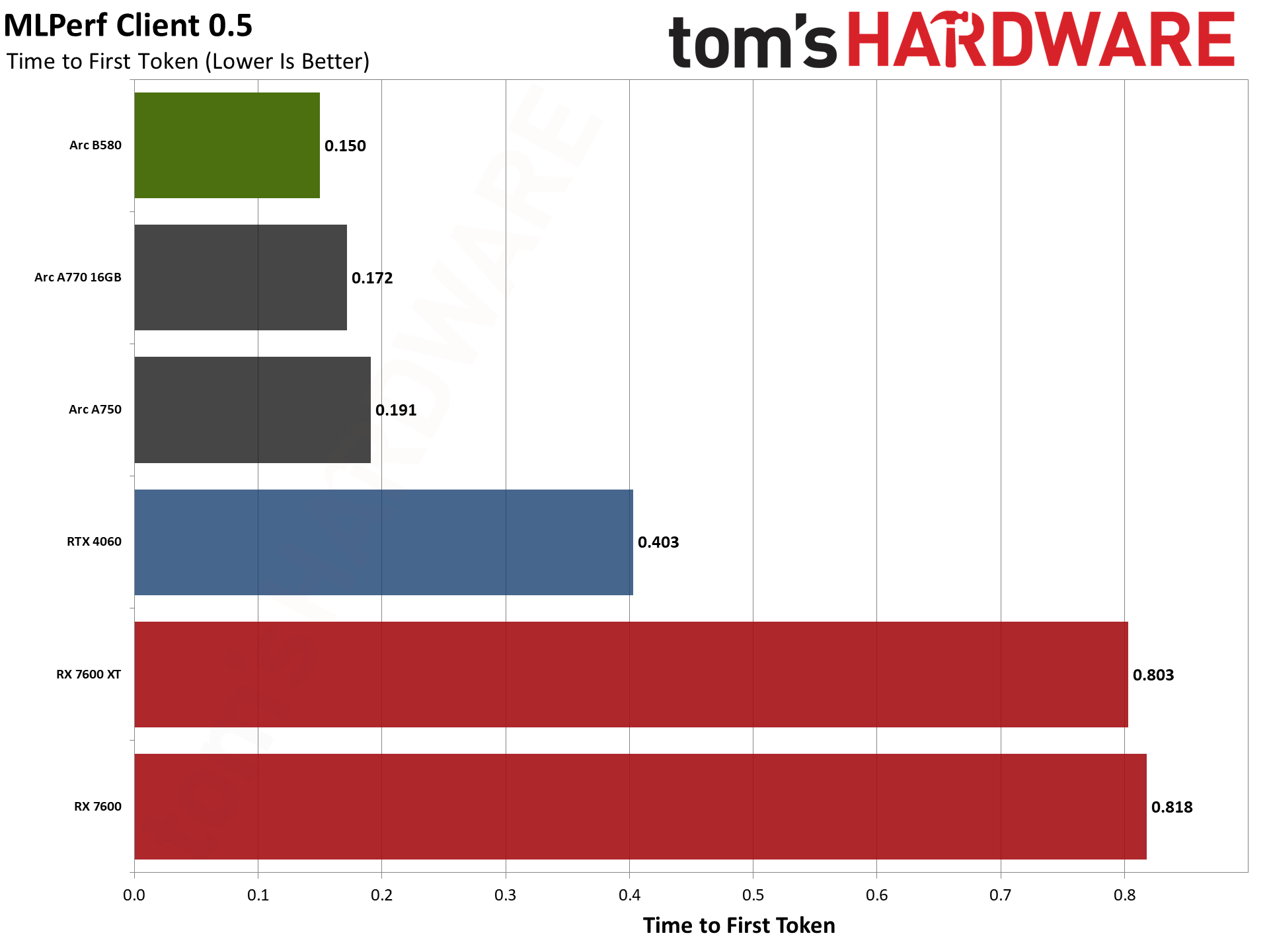

MLCommons just released a new MLPerf Client 0.5 test suite, which does AI text generation in response to a variety of inputs. There are four different tests, and the benchmark measures the time to first token (how fast a response starts appearing) and the tokens per second after the first token. These are combined using a geometric mean for the overall scores, which we report here.
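To make the two metrics concrete, here is a minimal Python sketch of how time to first token and tokens per second after the first token could be derived from token arrival timestamps. The function name `ttft_and_rate` and the timestamps are hypothetical and chosen only for illustration; this is not the benchmark's actual implementation.

```python
def ttft_and_rate(request_time, token_times):
    """Return (time to first token, tokens per second after the first token).

    request_time: when the prompt was submitted, in seconds.
    token_times:  arrival time of each generated token, in seconds, ascending.
    """
    if len(token_times) < 2:
        raise ValueError("need at least two tokens to measure a rate")

    # How long the user waits before the response starts appearing.
    ttft = token_times[0] - request_time

    # Tokens produced after the first one, divided by the time they took.
    generation_time = token_times[-1] - token_times[0]
    if generation_time <= 0:
        raise ValueError("token timestamps must be increasing")
    rate = (len(token_times) - 1) / generation_time

    return ttft, rate


# Made-up timestamps (assumption): first token at 0.5 s, then one every 0.25 s.
ttft, rate = ttft_and_rate(0.0, [0.5, 0.75, 1.0, 1.25, 1.5])
print(f"time to first token: {ttft:.2f} s, rate: {rate:.1f} tokens/s")
```

With these sample timestamps the first token arrives after 0.5 seconds and the remaining four tokens take one second, so the rate works out to 4 tokens per second.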

While AMD, Intel, and Nvidia are all MLCommons partners and were involved with creating and validating the benchmark, it doesn't seem to be quite as vendor agnostic as we would like. AMD and Nvidia GPUs currently have only a DirectML execution path, while Intel has both DirectML and OpenVINO as options. We reported the OpenVINO numbers, which are quite a bit higher than the DirectML results.

Intel's B580 comes out as the fastest solution for these particular tests. However, we suspect a lot of that comes from the OpenVINO tuning. We'd be interested in seeing how a TensorRT-tuned version of the test runs on Nvidia, as currently the 4060 splits the difference between the two AMD GPUs.

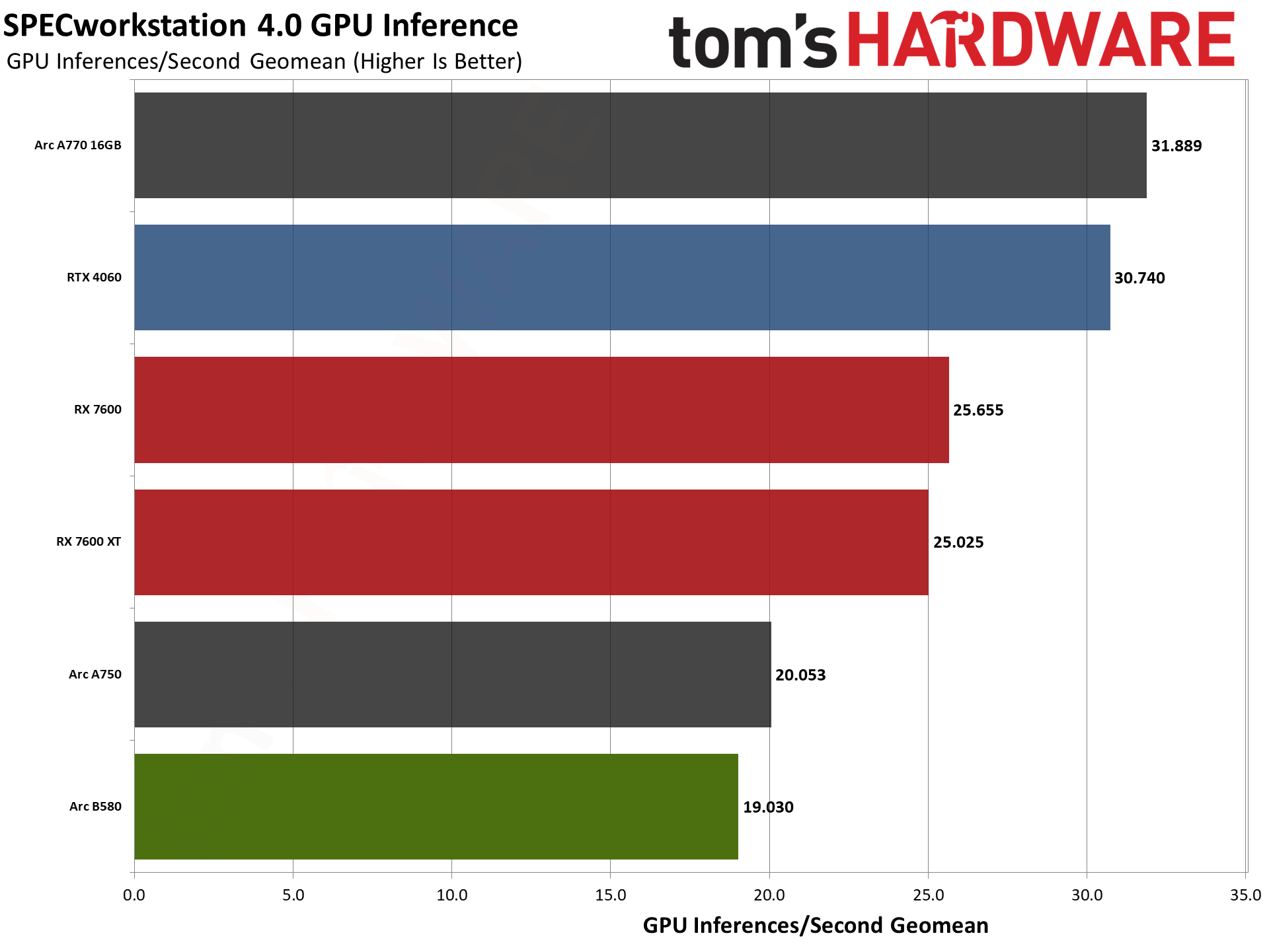

We'll have some other SPECworkstation 4.0 results below, but the suite also includes an AI inference test composed of ResNet50 and SuperResolution workloads that runs on GPUs (and potentially NPUs, though we haven't tested that). We calculate the geometric mean of the four results, which are given in inferences per second. That isn't an official SPEC score, but it's more useful for our purposes.
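As a rough illustration of that unofficial score, the sketch below computes a geometric mean in Python. The four inferences-per-second values are invented for the example and are not measured results; the function name `geometric_mean` is likewise just a helper written for this sketch.

```python
import math


def geometric_mean(values):
    """Return the geometric mean of a non-empty list of positive numbers."""
    if not values:
        raise ValueError("need at least one value")
    if any(v <= 0 for v in values):
        raise ValueError("geometric mean requires positive values")
    # Average the logarithms, then exponentiate: the n-th root of the product.
    return math.exp(sum(math.log(v) for v in values) / len(values))


# Hypothetical inferences-per-second results for four subtests (not real data).
results = [100.0, 400.0, 25.0, 1600.0]

overall = geometric_mean(results)
arithmetic = sum(results) / len(results)
print(f"geometric mean: {overall:.1f}, arithmetic mean: {arithmetic:.1f}")
```

The geometric mean keeps a single very fast subtest from dominating the overall number: for these sample values it is 200, while the arithmetic mean is pulled up to 531.25 by the largest result.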

Again, software optimizations will likely make or break performance here. The RTX 4060 and A770 come out on top, with the A750 trailing by a decidedly larger-than-normal margin. Perhaps VRAM capacity is somehow a factor on that GPU, but VRAM doesn't seem to matter at all on the two AMD GPUs, so it must be something else.

Arc B580 comes in last this time, indicating how wide a spread you can encounter with AI workloads depending on driver optimizations and application tuning. Based on the specifications, we'd expect the B580 to beat the A770, but instead it falls to the bottom of the chart.

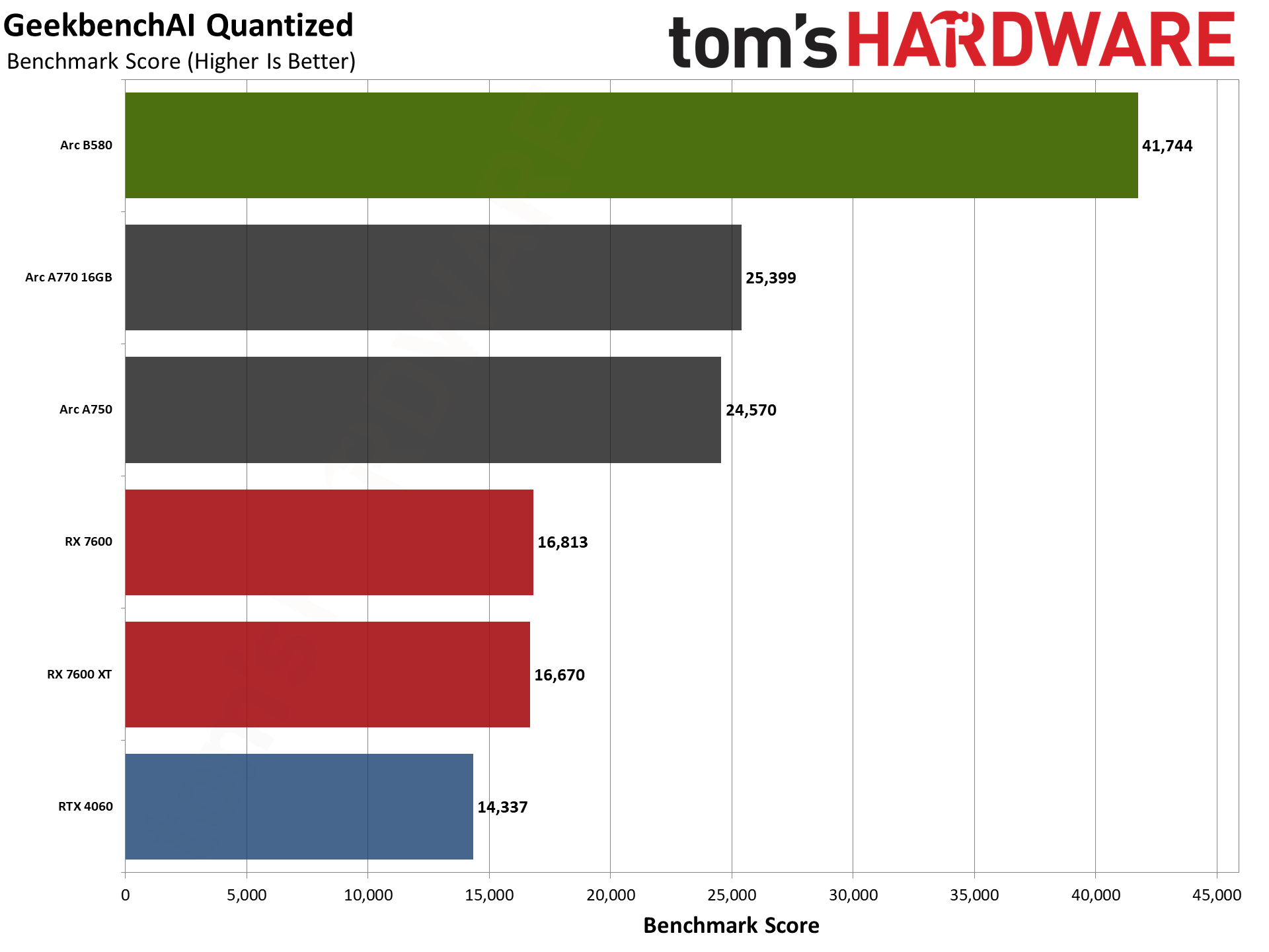

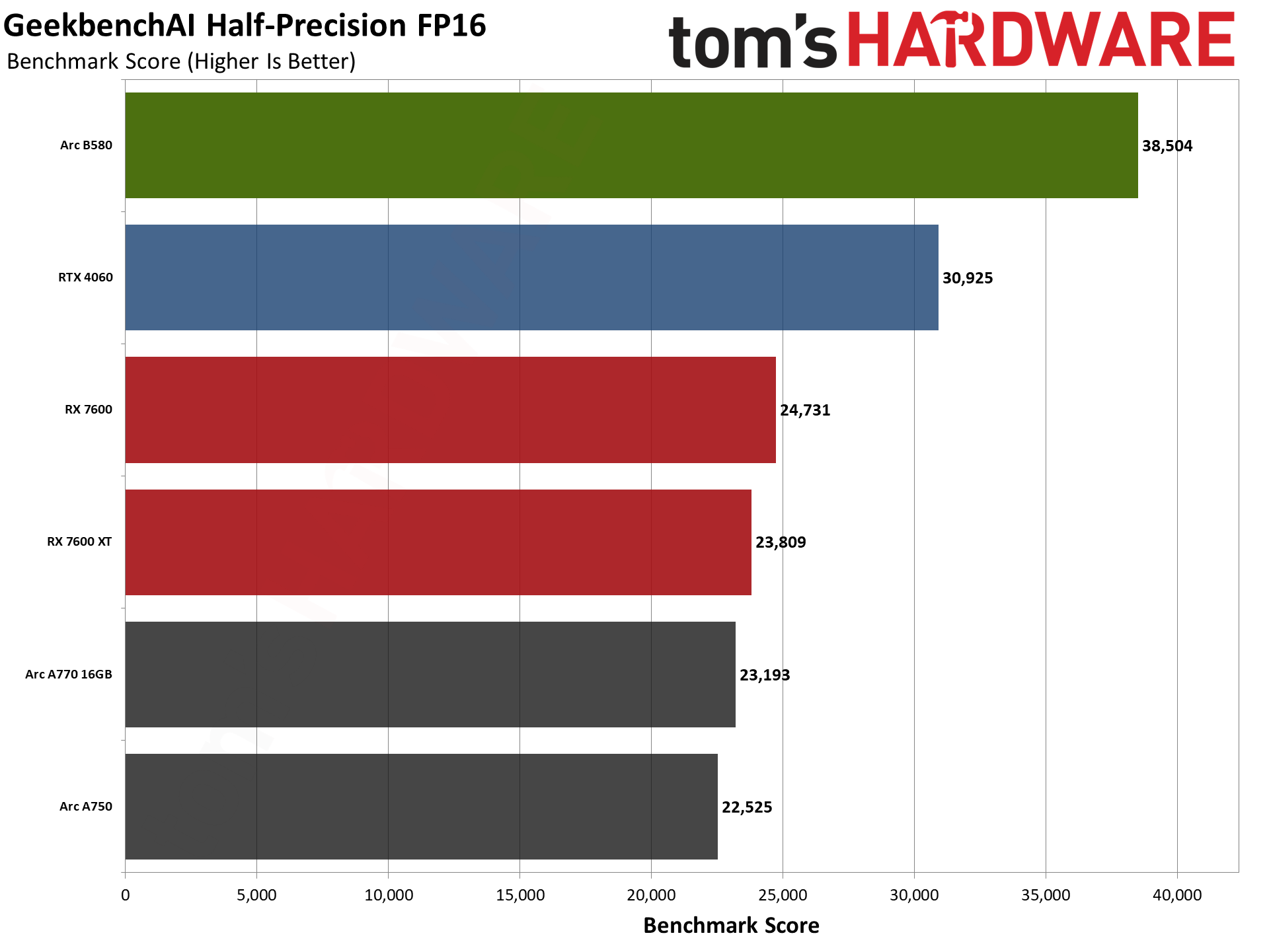

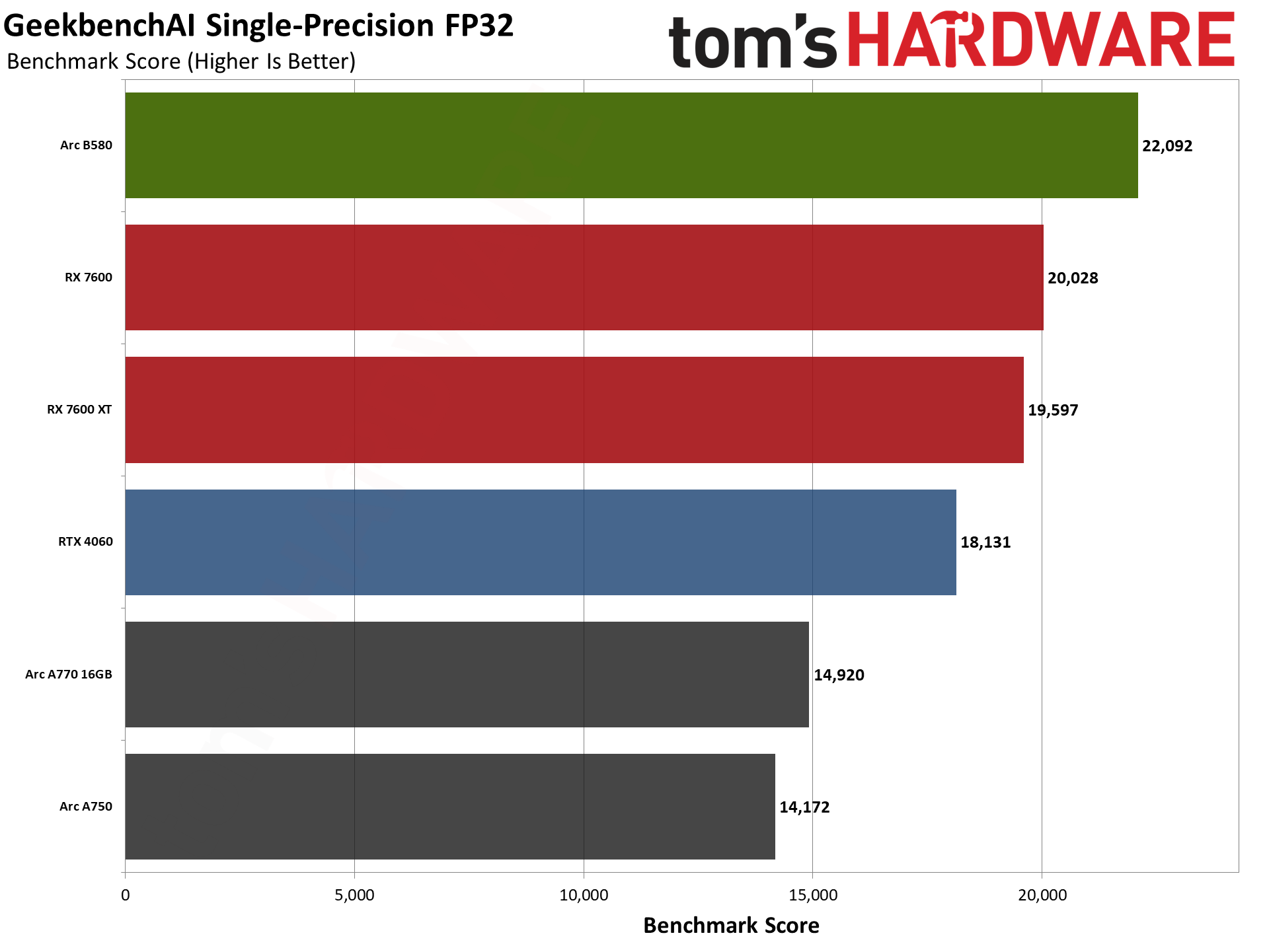

Our final AI test suite is Geekbench AI, and when you watch it run it's clearly not doing as much work as the above tests. Take the results with a dose of salt, in other words, but we'll report the overall score for now. (There are about a dozen different subtests that all complete in around two minutes.)

It has three modes: quantized (we assume 8-bit integer, though that might be incorrect), FP16, and FP32. The B580 gets a large relative boost for the quantized tests, and also comes out on top in the FP16 and FP32 results.

Interestingly, AMD's GPUs take second and third in FP32, but drop down the charts for FP16 and quantized work. The Arc A-series also does well in the quantized tests, but fills the bottom of the charts for FP16 and FP32.

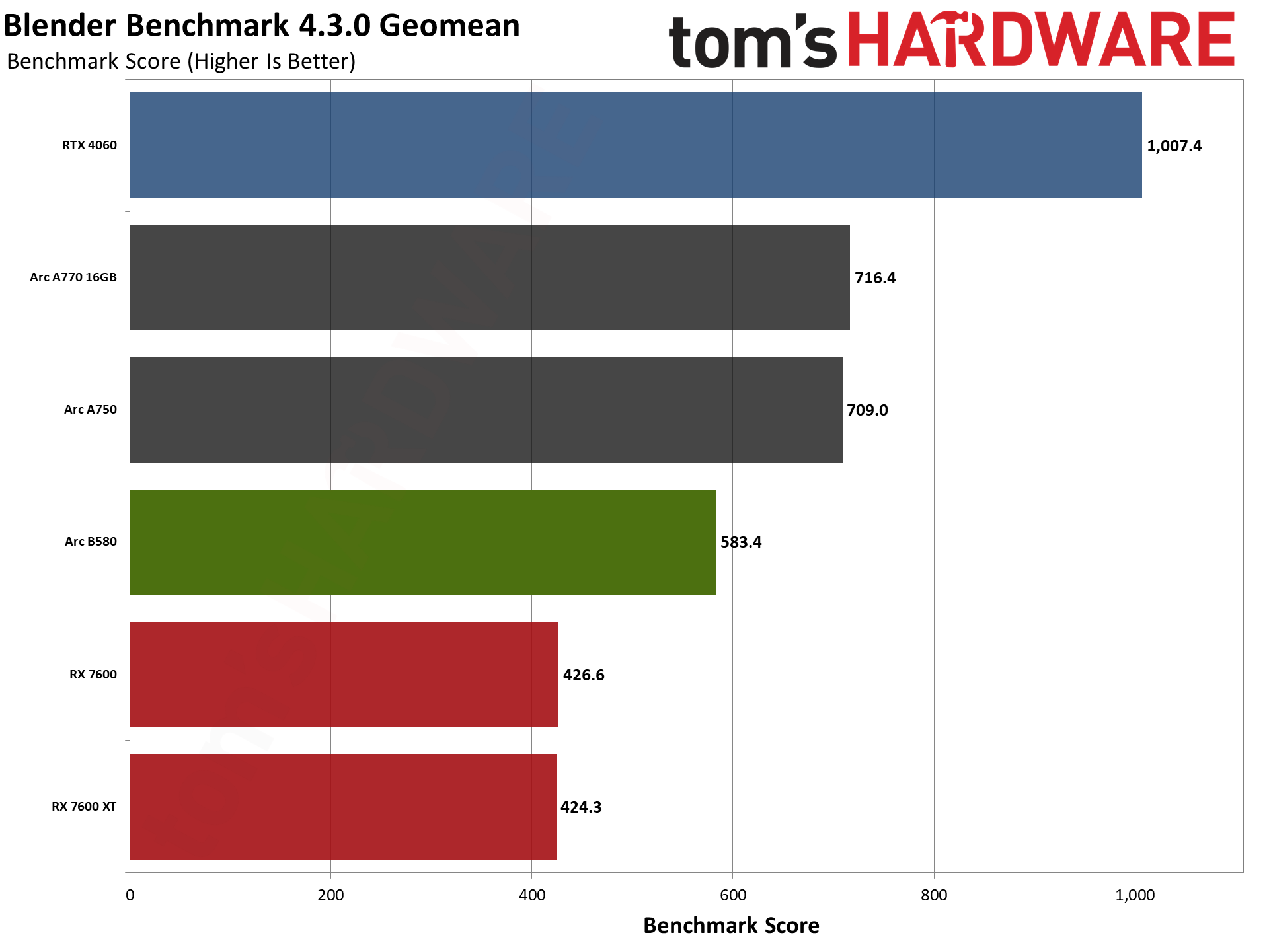

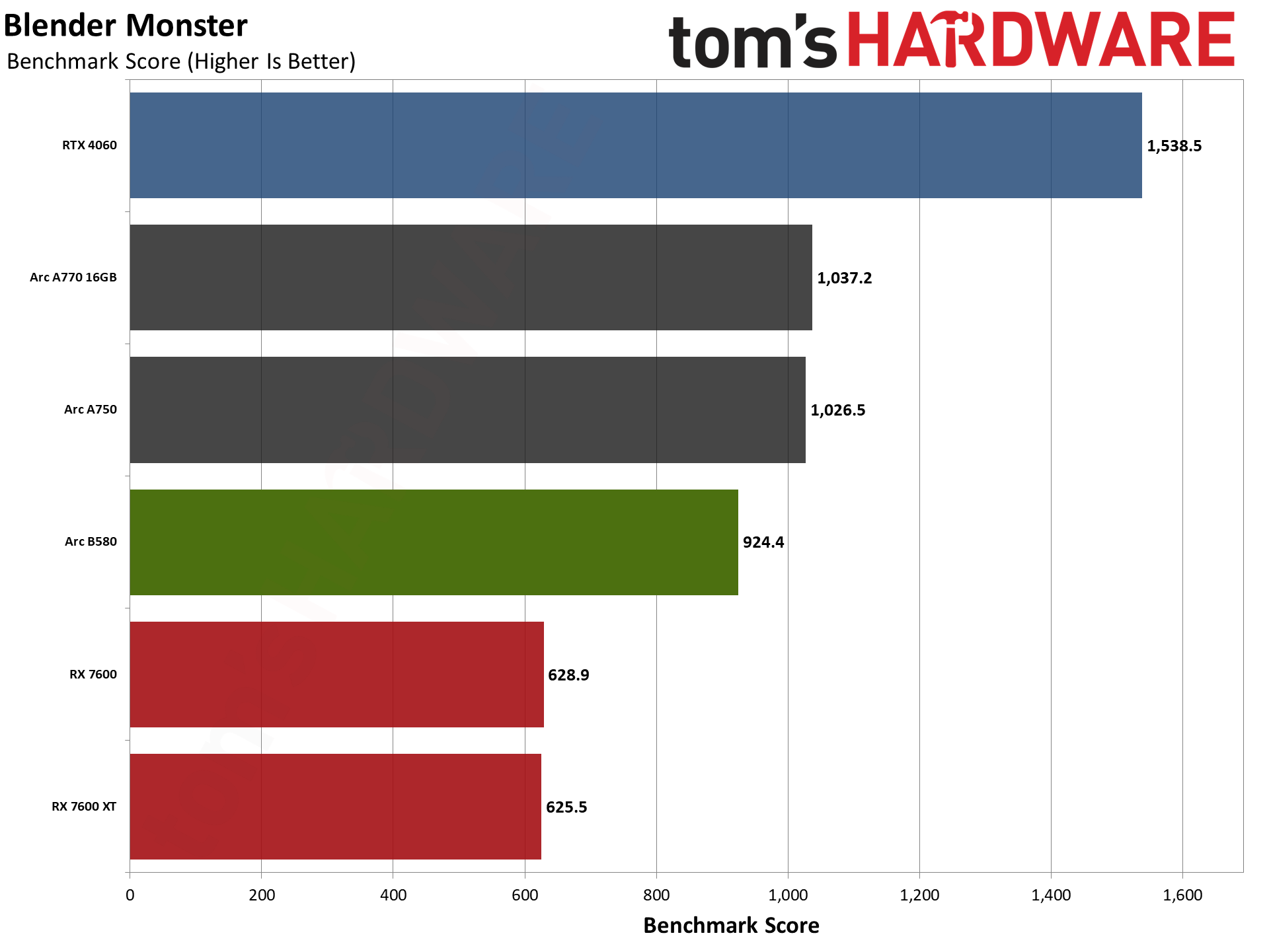

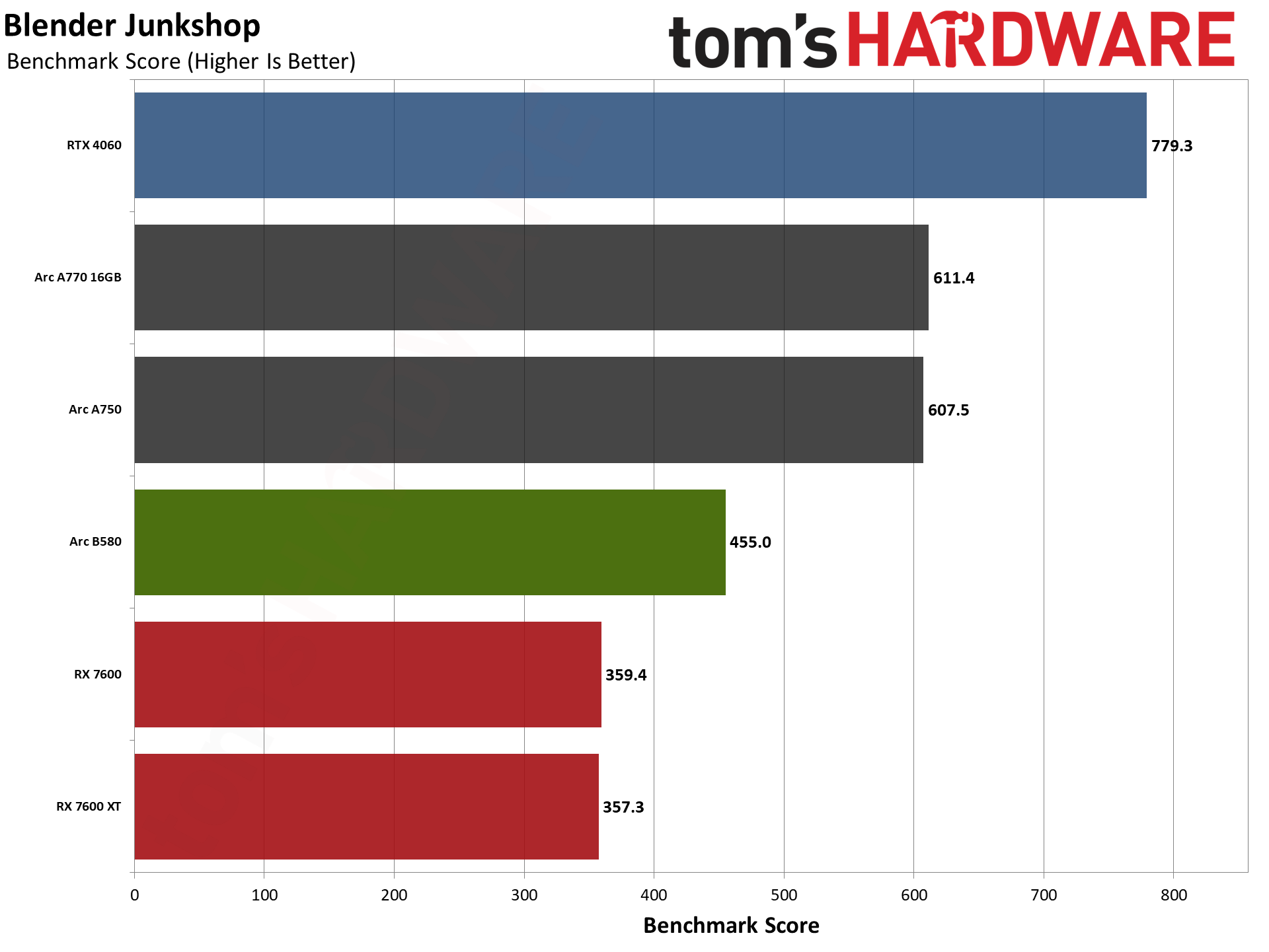

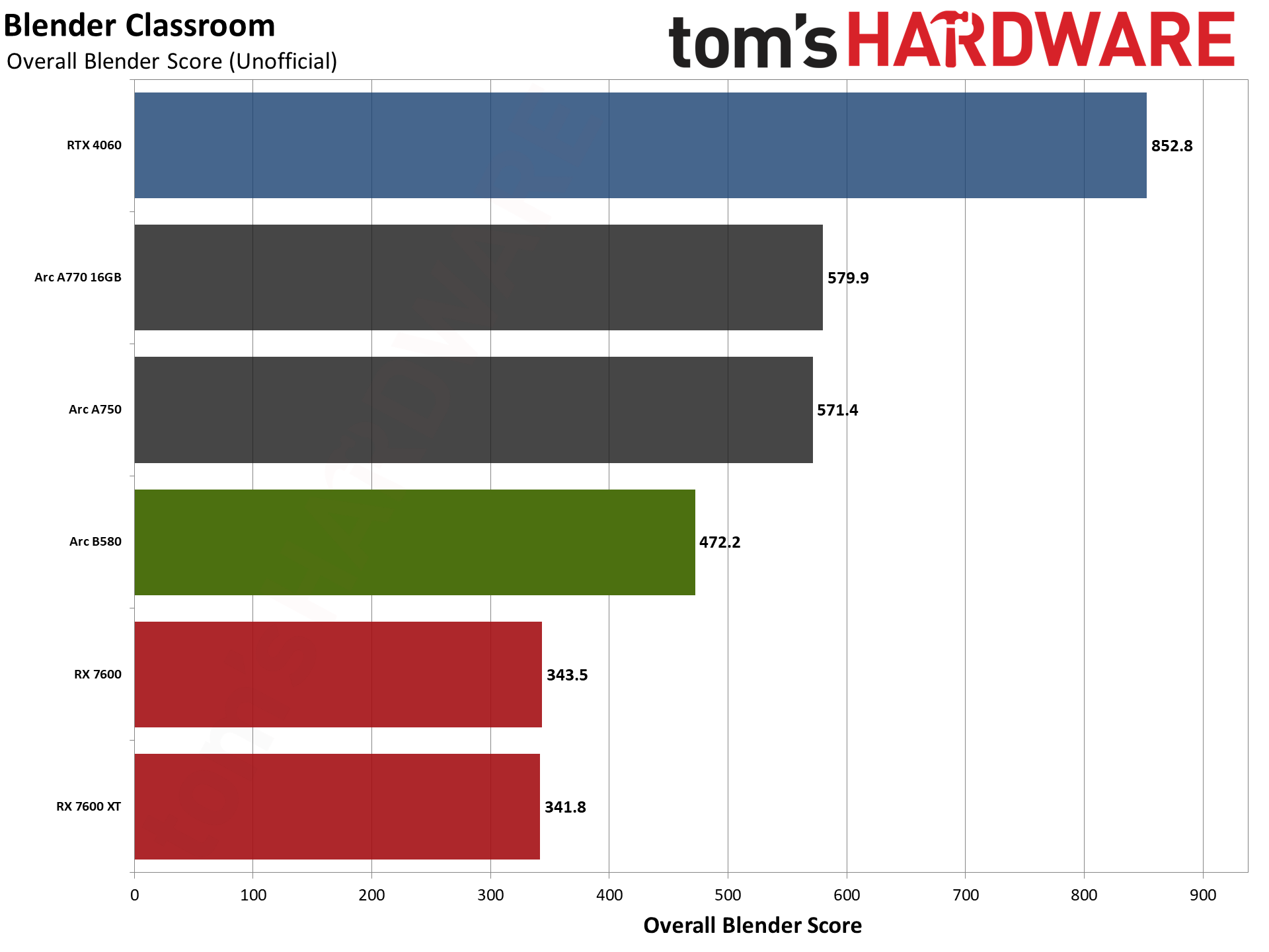

For our professional application tests, we'll start with Blender Benchmark 4.3.0, which has support for Nvidia OptiX, Intel oneAPI, and AMD HIP libraries. Those aren't necessarily equivalent in terms of the level of optimizations, but each represents the fastest way to run Blender on a particular GPU at present.

Again, the Battlemage results suggest there's not as much optimization in the drivers and/or application. The B580 falls behind both A-series GPUs, which we don't think represents how things ought to play out.

Nvidia gets the top spot with the RTX 4060, and we've noted previously that OptiX tends to be closer to the hardware than oneAPI and HIP, so that's probably a factor. AMD's GPUs land at the bottom of the charts again, and that makes sense for a heavy RT workload as the RDNA 3 architecture hasn't generally done well for such tasks.

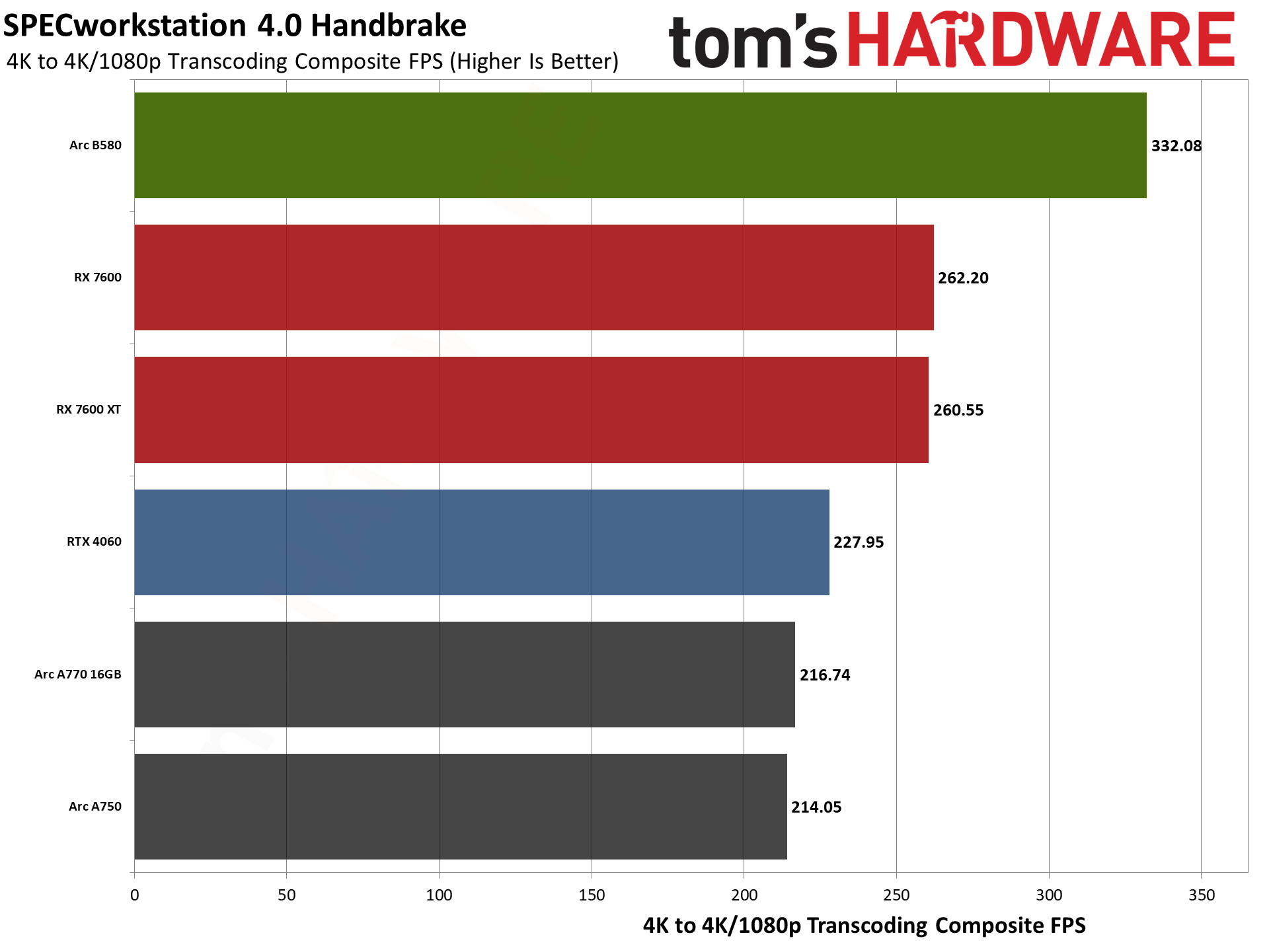

SPECworkstation 4.0 has two other test suites that are of interest in terms of GPU performance. The first is the video transcoding test using HandBrake, a measure of the video engines on the different GPUs and something that can be useful for content creation work. Here we use the average of the 4K to 4K and 4K to 1080p scores.

Here the Arc B580 does very well, easily eclipsing the competition. It's a shame that Intel killed off the studio portion of its drivers that allowed you to easily do gameplay captures and streaming. I know OBS is generally the way to go for dedicated streamers, but built-in support like ShadowPlay is far lower overhead for a quick and dirty gameplay capture.

AMD's GPUs tie for second place, with the RTX 4060 and Arc A-series filling the bottom of the chart. This isn't the same as doing video editing in an application like Adobe Premiere Pro, however, as it's just doing video transcoding while live video editing stresses the hardware differently.

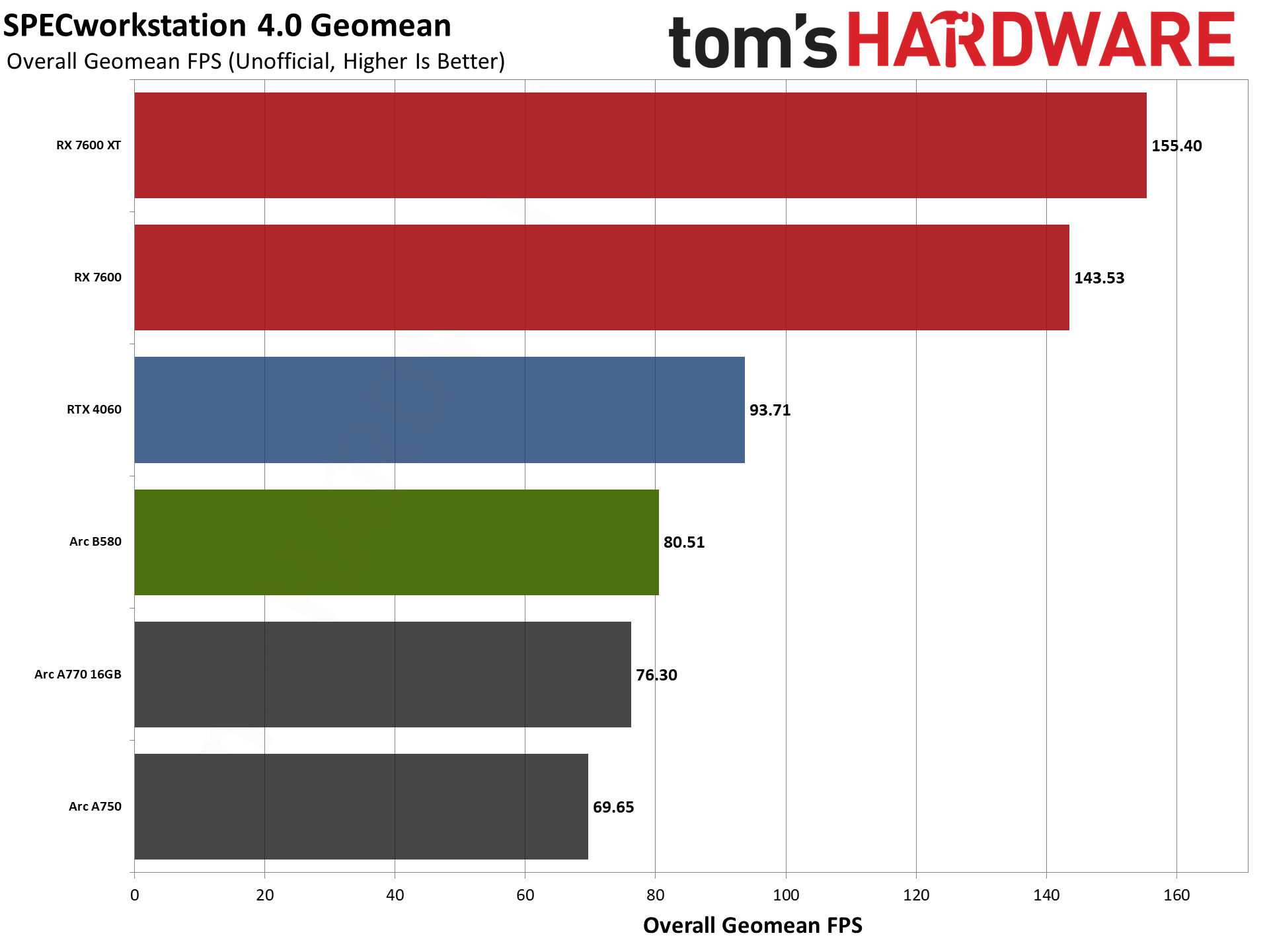

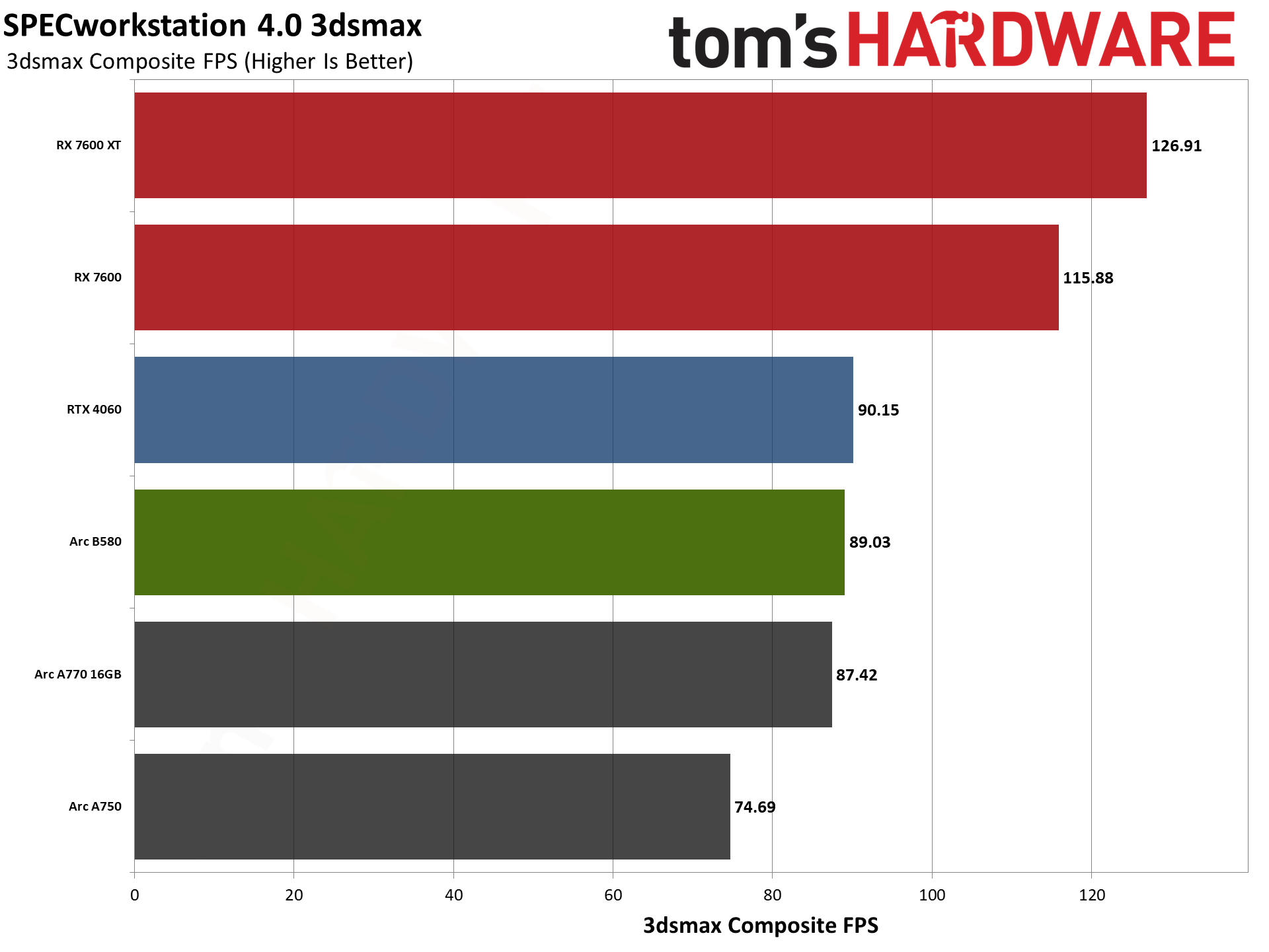

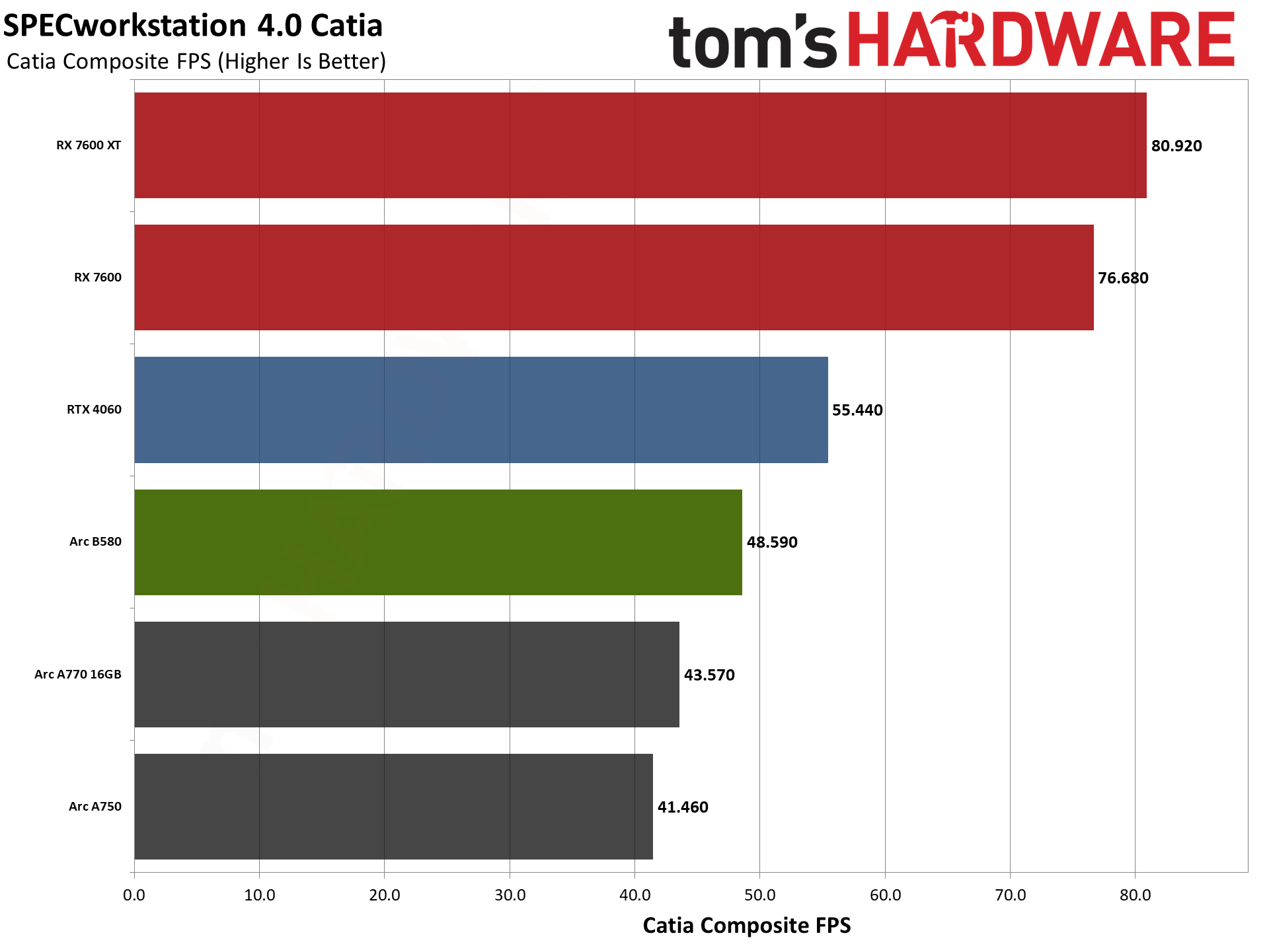

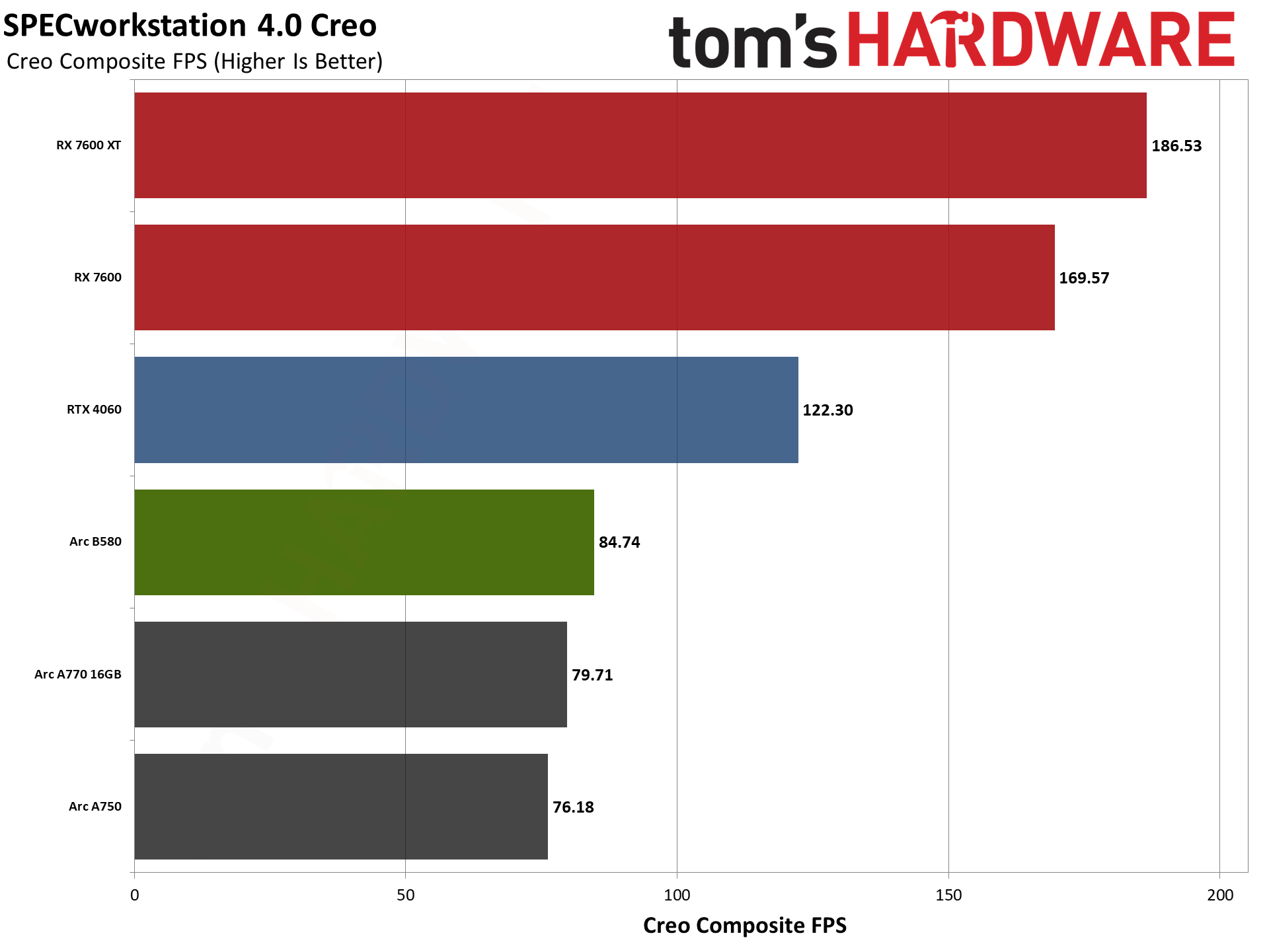

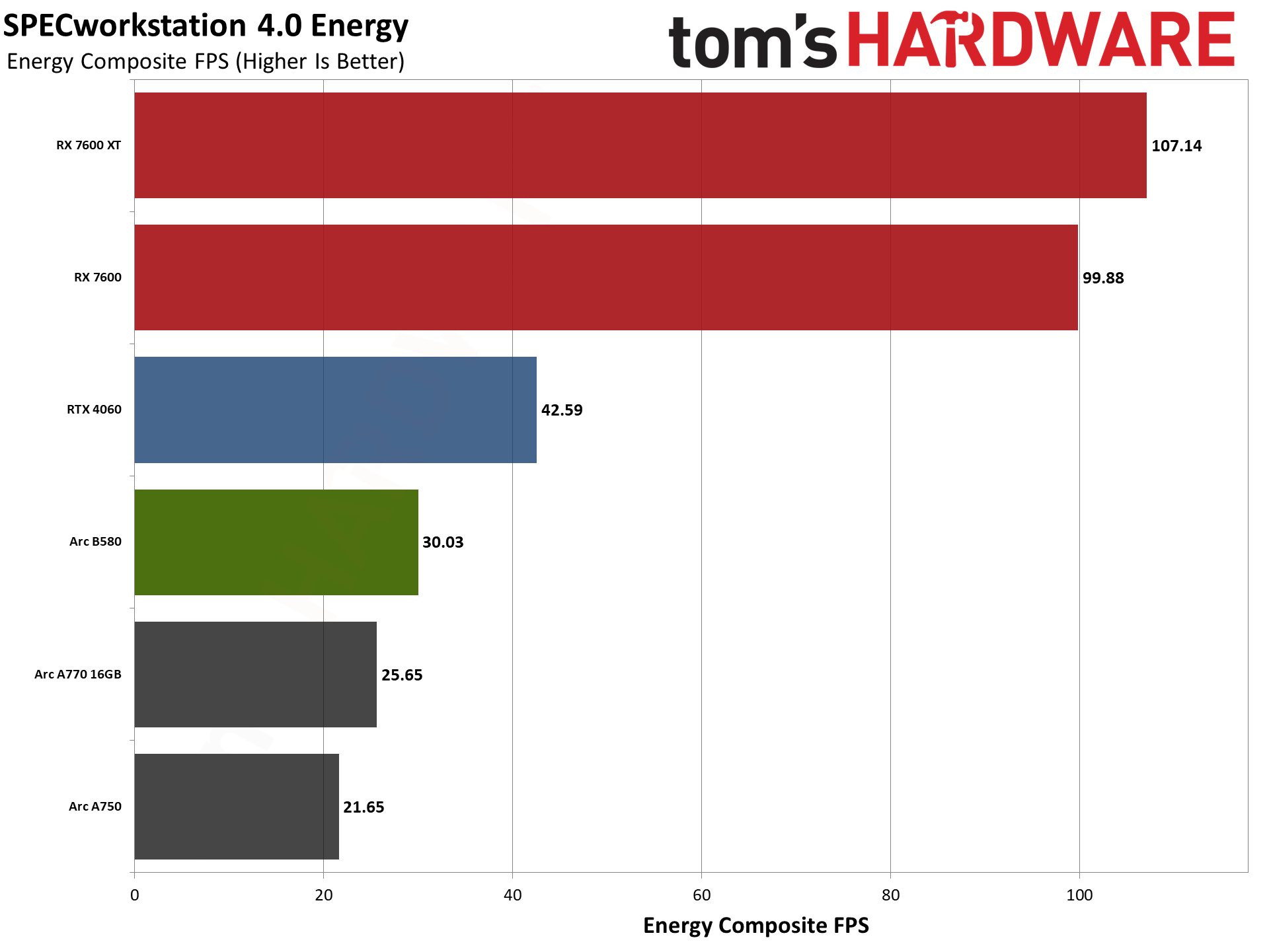

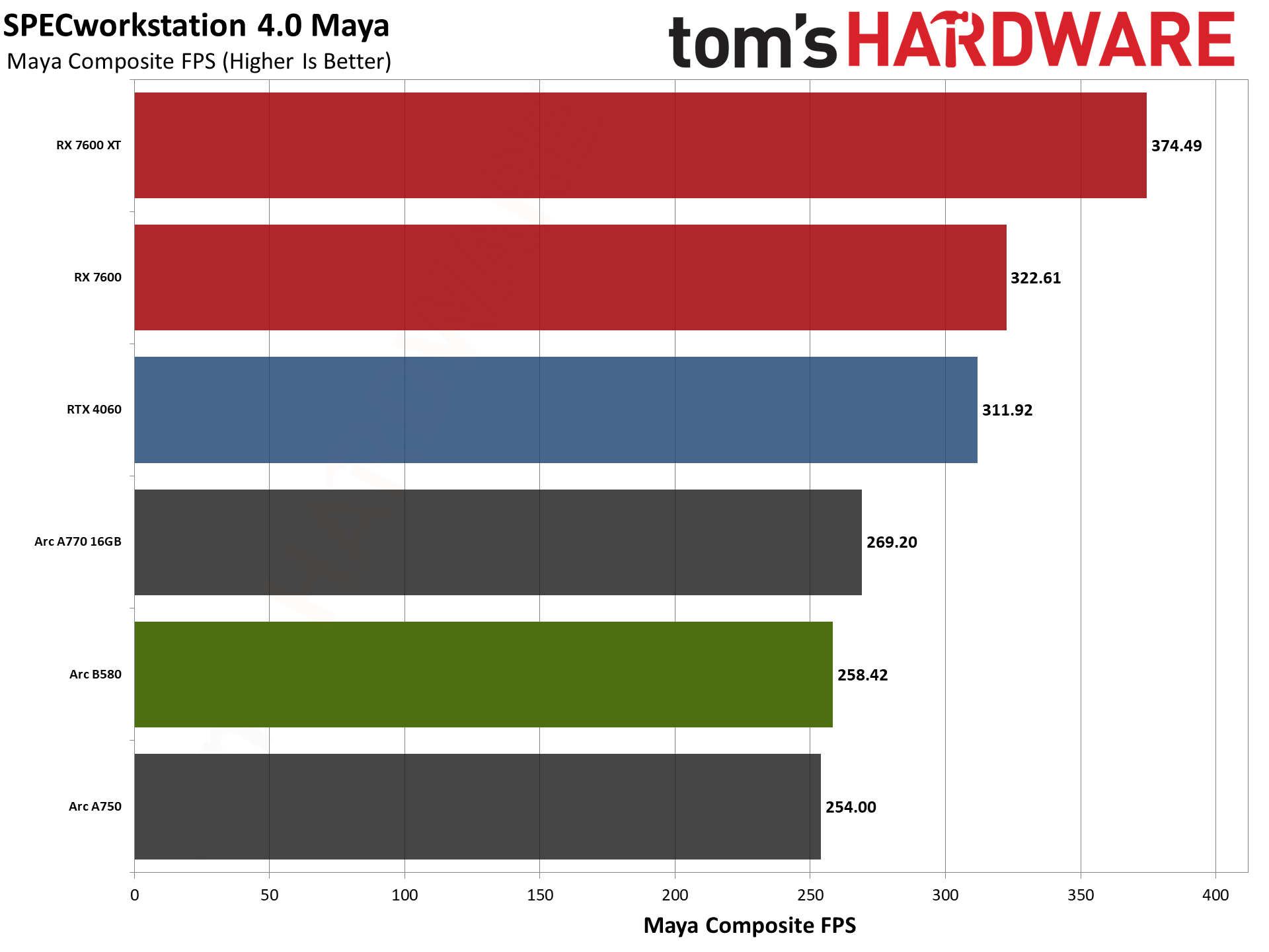

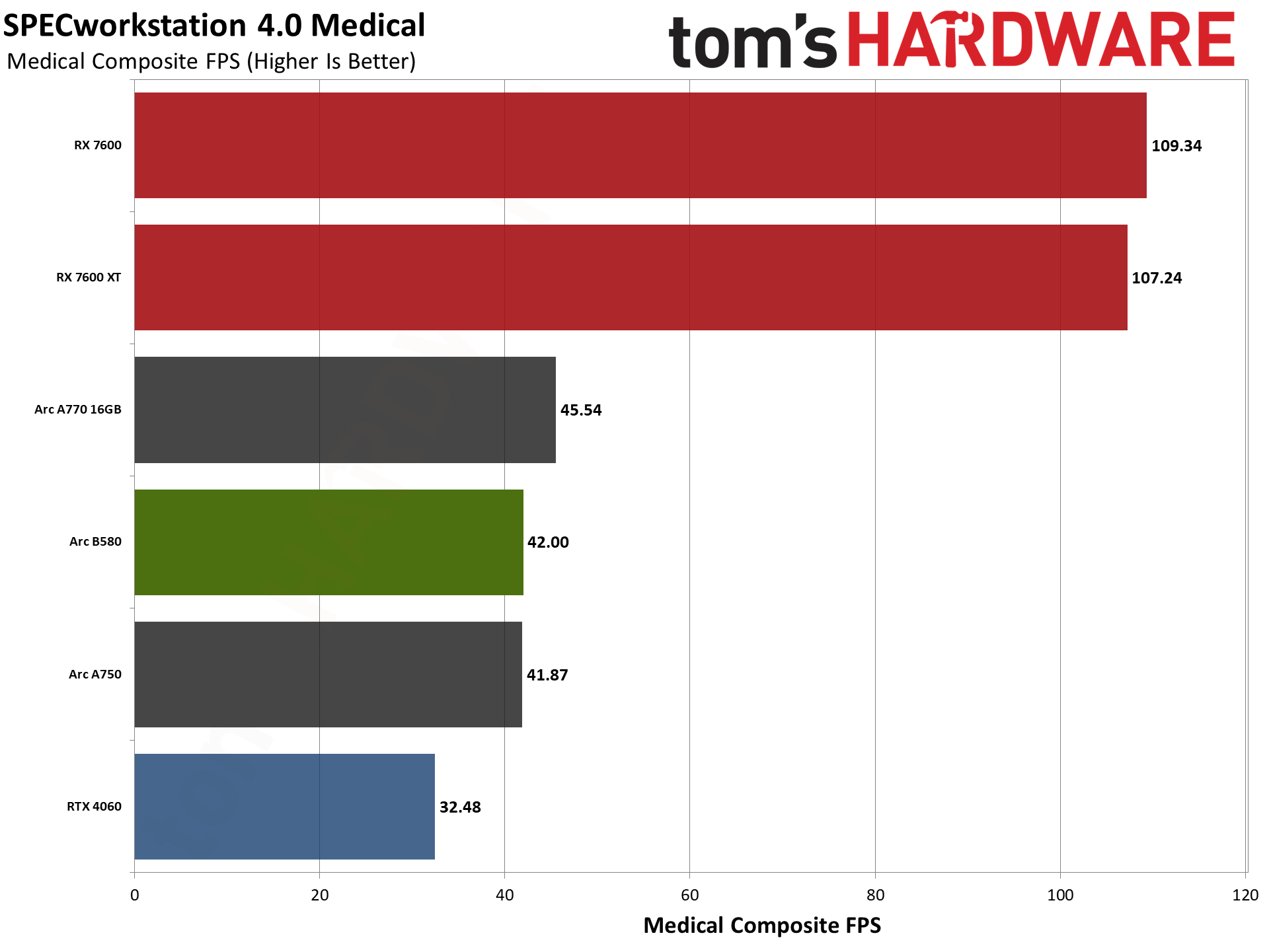

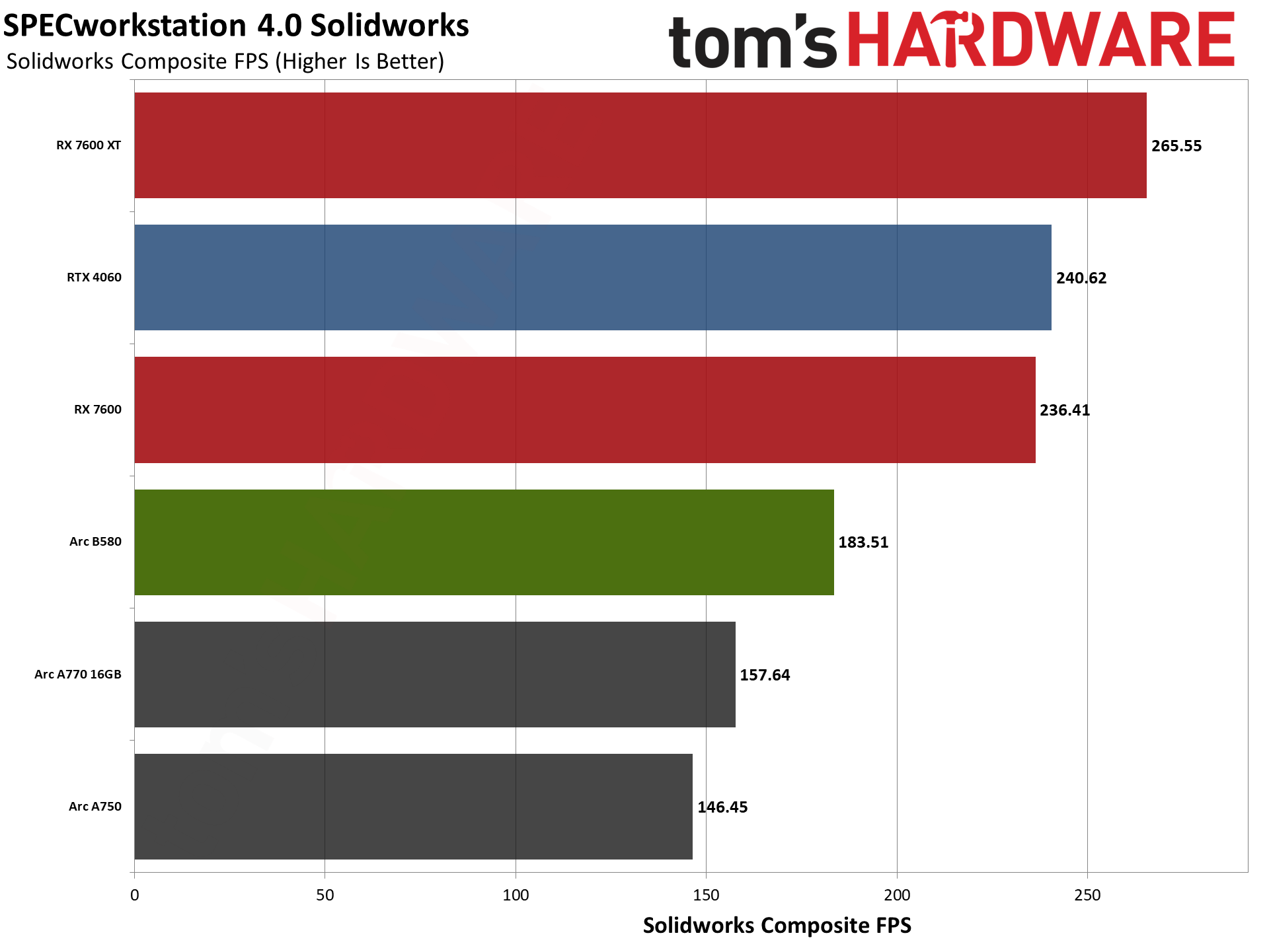

Our final professional app tests consist of SPECworkstation 4.0's viewport graphics suite. These are basically the same tests as SPECviewperf 2020, only updated to the latest versions. (Also, Siemens NX isn't part of the suite now.) There are seven individual application tests, and we've combined the scores from each into an unofficial overall score using a geometric mean.

AMD provides the most 'professional' application performance in its consumer drivers, just about doubling the performance of the Arc B580. Even Nvidia comes out ahead of Arc with the RTX 4060 (now that Siemens NX isn't pulling down the average).

The only application test where the B580 manages to beat the RTX 4060 is the medical benchmark, where the Arc A-series GPUs also perform at a similar level. Otherwise, the three Arc GPUs occupy the bottom three slots on each chart. We suspect driver tuning could again help Intel a lot in these tests.

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

Math Geek: nicely done :)

looks like a good value. i'm in the market for my next card but seems like waiting a little bit to see what AMD does next is not too bad of an idea. i hate waiting to see what the next best thing is but this close it seems like prudent advice.

side note: it does look like you forgot to replace the place holders on the power consumption paragraph.

"On average, the B580 used xxxW at 1080p medium, xxxW at 1080p ultra, xxxW at 1440p, and xxxW at 4K. As you'd expect, power use typically increases at higher settings and resolutions."

Jagar123: I am happy to have competition in the market. I imagine next gen AMD and Nvidia cards will be stronger competitors but they might be priced poorly again. Price to performance is key here. We'll see in a month or so.

shady28: Great review, against relevant parts for this price class too :D

Given that Steam shows the 3 most popular GPUs are the 3060 discrete, 4060 laptop, and 4060 discrete, Intel now has a GPU that competes in the largest part of the segment - and leads it in both value and performance.

Granted AMD and Nvidia are about to release new GPUs, but let's also note that the 5060 / 8600 aren't likely to show up until late 2025 or early 2026 if they follow their normal pattern.

palladin9479: Great review, I'm in the market for a SFF two slot low power card for a living room system. The APU can only do so much and I'm starting to hit walls with it lately so a lower power dGPU might be the only real answer.

Gururu: I guess it will come down to an availability issue. I doubt we will see superior cards by AMD or nVidia in this price bracket by end of Q1 2025. We will certainly see lots of benchmarks blowing these early battlemage offerings in January, but nothing ready for purchase. Later battlemage offerings are in my best guess going to be in the $400 range, likely beating 7800 and 4070, but again probably not until mid-late Q1. If AMD and nVidia drop anything crushing a 4090 you can bet it will be in the $700+ range. Is it fair to say that something beating the B580 readily available in April for $250 is fair competition now? I don't know. Maybe not if a normal consumer can actually get B580 silicon before Christmas.

Eximo:

> palladin9479 said: Great review, I'm in the market for a SFF two slot low power card for a living room system. The APU can only do so much and I'm starting to hit walls with it lately so a lower power dGPU might be the only real answer.

I would probably still lean towards an RTX 3050 6GB for that. B580 is still a little power hungry for the job.

I use an A380, and that isn't ideal either, since it still needs an 8-pin (at least that model). Though supposedly still only a 75W GPU.

DS426: Great review, Jarred! The elaboration on your thinking and updating of your test bench's hardware and software is appreciated.

It'll be some time before AMD and NVIDIA (green wants it written this way, by the way: http://http.download.nvidia.com/image_kit/LG_NVCorpBadge.pdf ... was curious as I noticed they have it written that way on their website) have new budget GPU's in this price class, so I myself wouldn't really recommend that a prospective owner waits. Of course, a lot of it also depends on if building new or upgrading (and upgrading from what). There's a huge user base at this price point, so I do imagine that Intel will get some market penetration for end users, not just prebuilds. This, particularly since day 1 drivers are already fairly stable overall and Intel now has the value leader at this price point; Intel didn't mess up this launch, whereas botched launches can tarnish audience sentiment of the product for months and years, if not permanently.

King_V: Definitely liking what I see here. And, glad to know that Intel is taking this very seriously. They're clearly not messing around.

rluker5: Transistor density pretty close to AMD.

Transistor density of 7600XT is 65.2 M/mm2 and B580 is 72.1 M/mm2 per Techpowerup. Sure B580 is on 5nm while 7600XT is on 6nm but that isn't that big a difference. Definitely moving in the right direction. And more importantly performance per mm2 is much closer to AMD. And performance per watt.

Catching up really fast.

caseym54: Probably flogging a dead horse here, but a 6-column table with 5 columns visible and a slider is truly lame.