
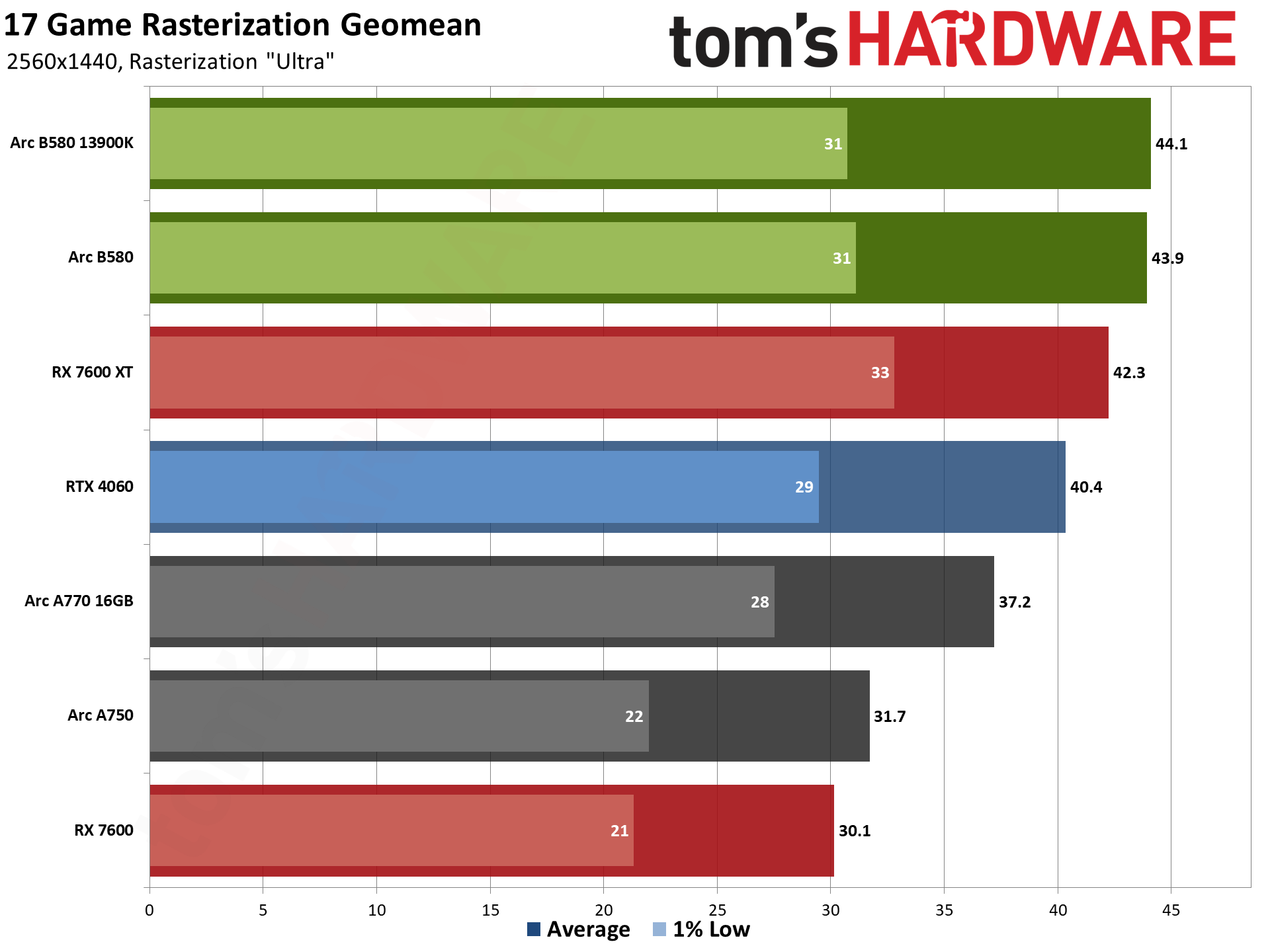

We're going to try something new with this review and test suite. Rather than breaking things down by resolution, we'll show the four performance charts for each game, along with the overall performance geomean, the rasterization geomean, and the ray tracing geomean. We'll start with the rasterization suite of 17 games, because that's arguably still the most useful measurement of gaming performance.
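For readers unfamiliar with the term, a geomean (geometric mean) is the nth root of the product of n results, which keeps a single very high or very low framerate from dominating the summary. The snippet below is only a minimal sketch of that calculation: the `geomean` helper and the FPS values are illustrative assumptions, not our actual data or tooling.

```python
import math

def geomean(values):
    """Geometric mean of positive numbers, computed via logs to avoid overflow."""
    if not values or any(v <= 0 for v in values):
        raise ValueError("geomean needs a non-empty list of positive values")
    return math.exp(sum(math.log(v) for v in values) / len(values))

# Hypothetical average FPS for one GPU in three games (NOT review data).
fps_by_game = {"game_a": 60.0, "game_b": 90.0, "game_c": 135.0}

overall = geomean(list(fps_by_game.values()))
arithmetic = sum(fps_by_game.values()) / len(fps_by_game)

print(f"geomean: {overall:.1f} fps, arithmetic mean: {arithmetic:.1f} fps")
```

With these made-up numbers the geomean lands below the arithmetic mean, because the one high-FPS game gets less weight.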

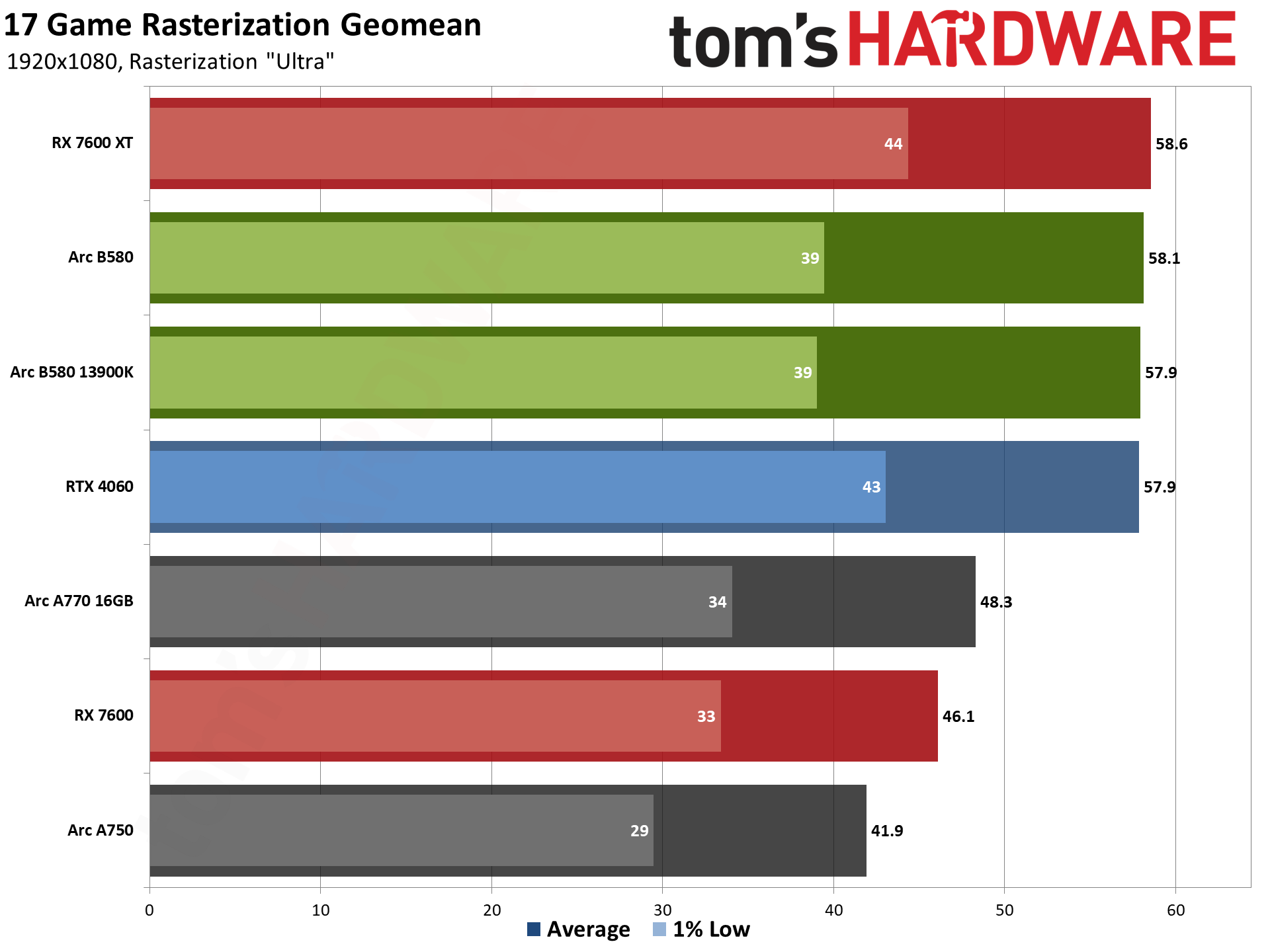

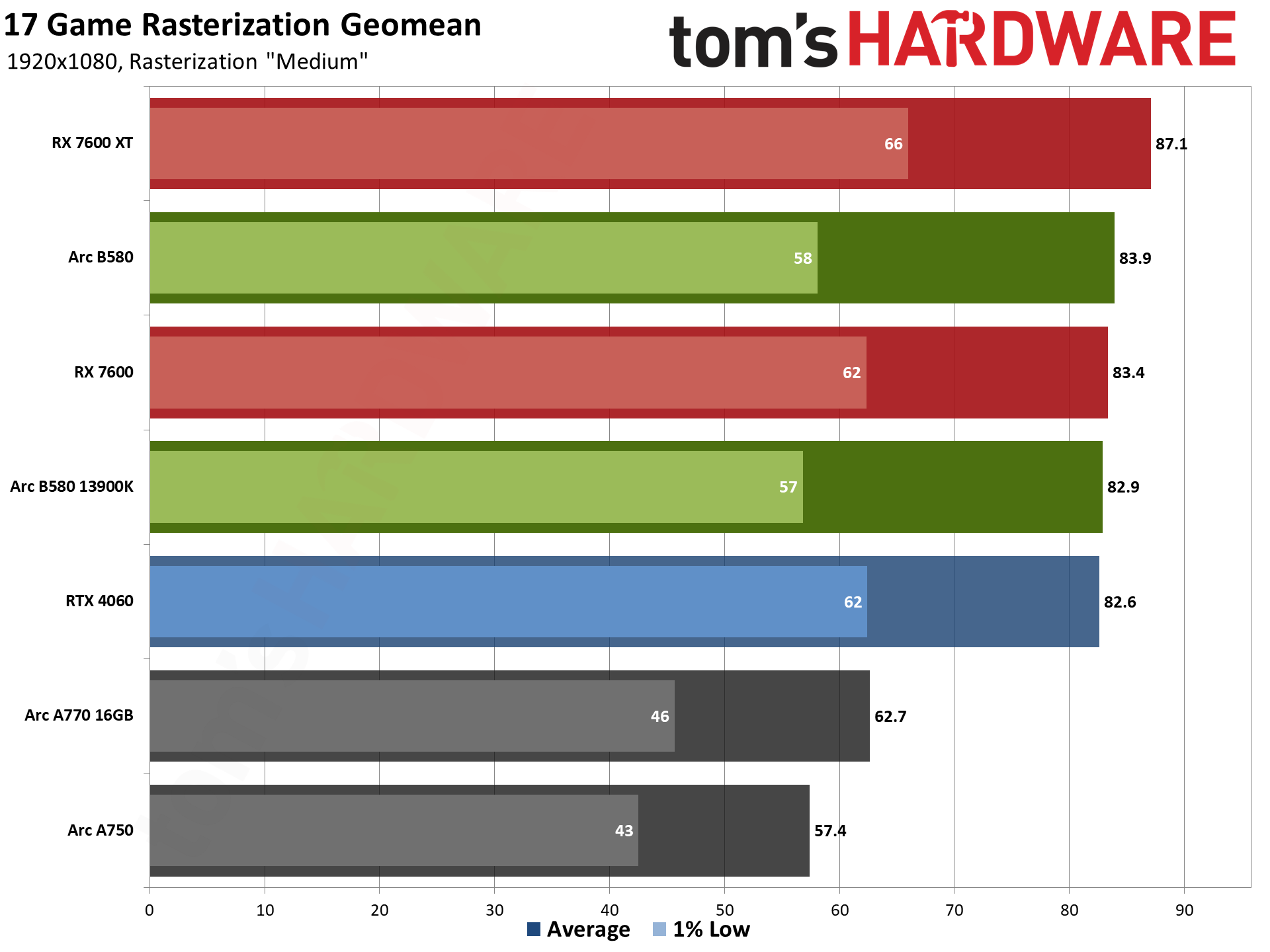

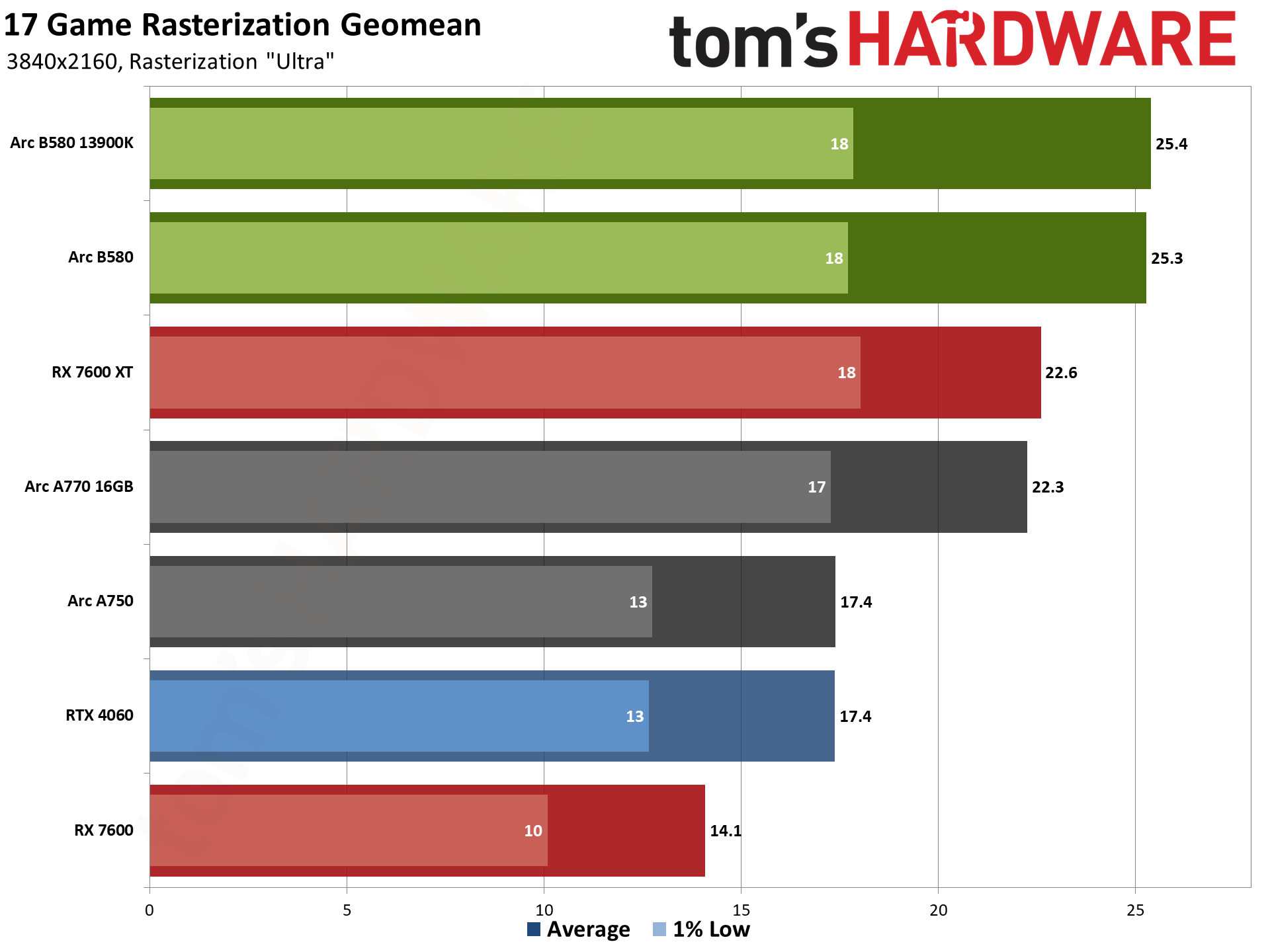

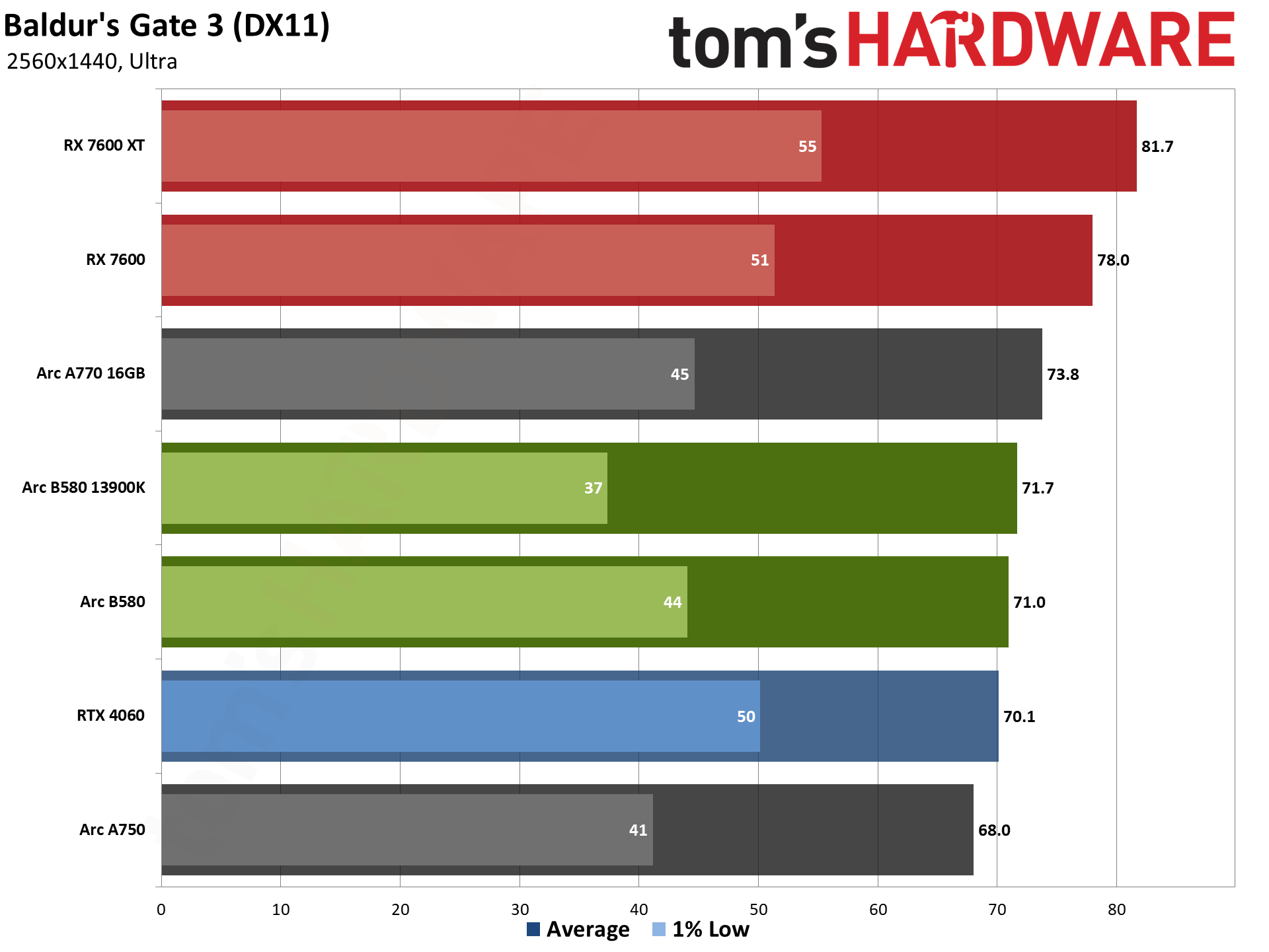

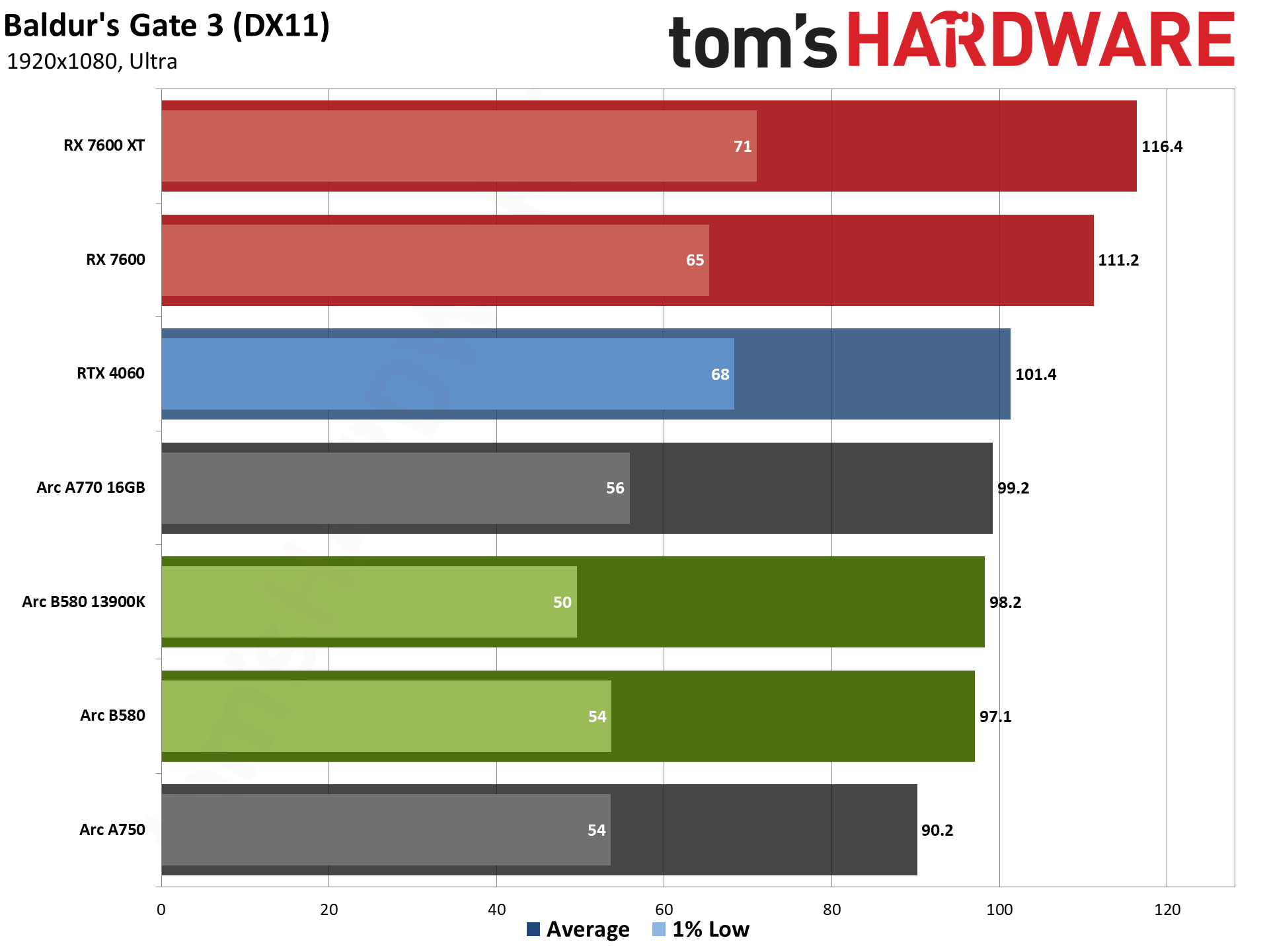

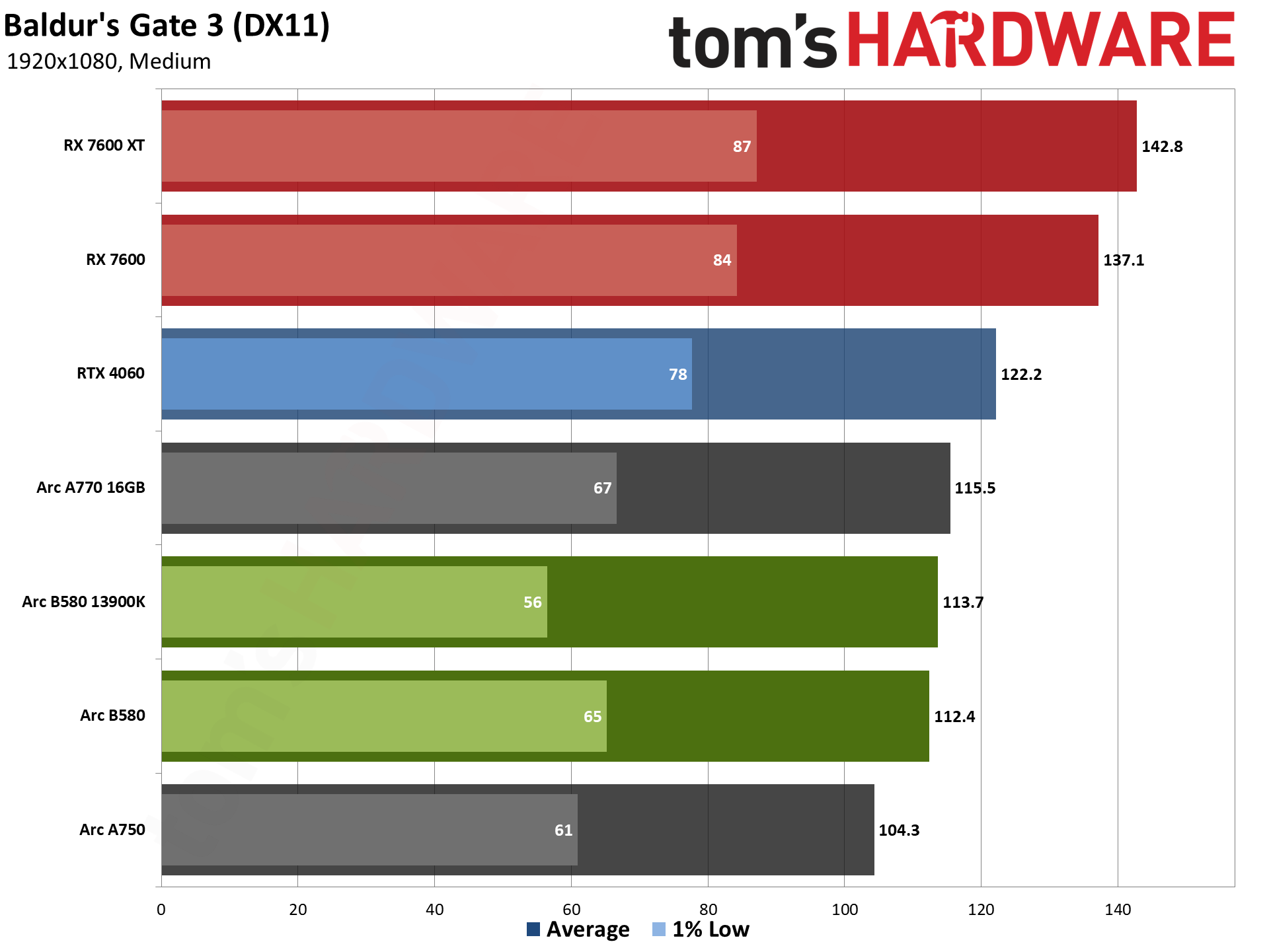

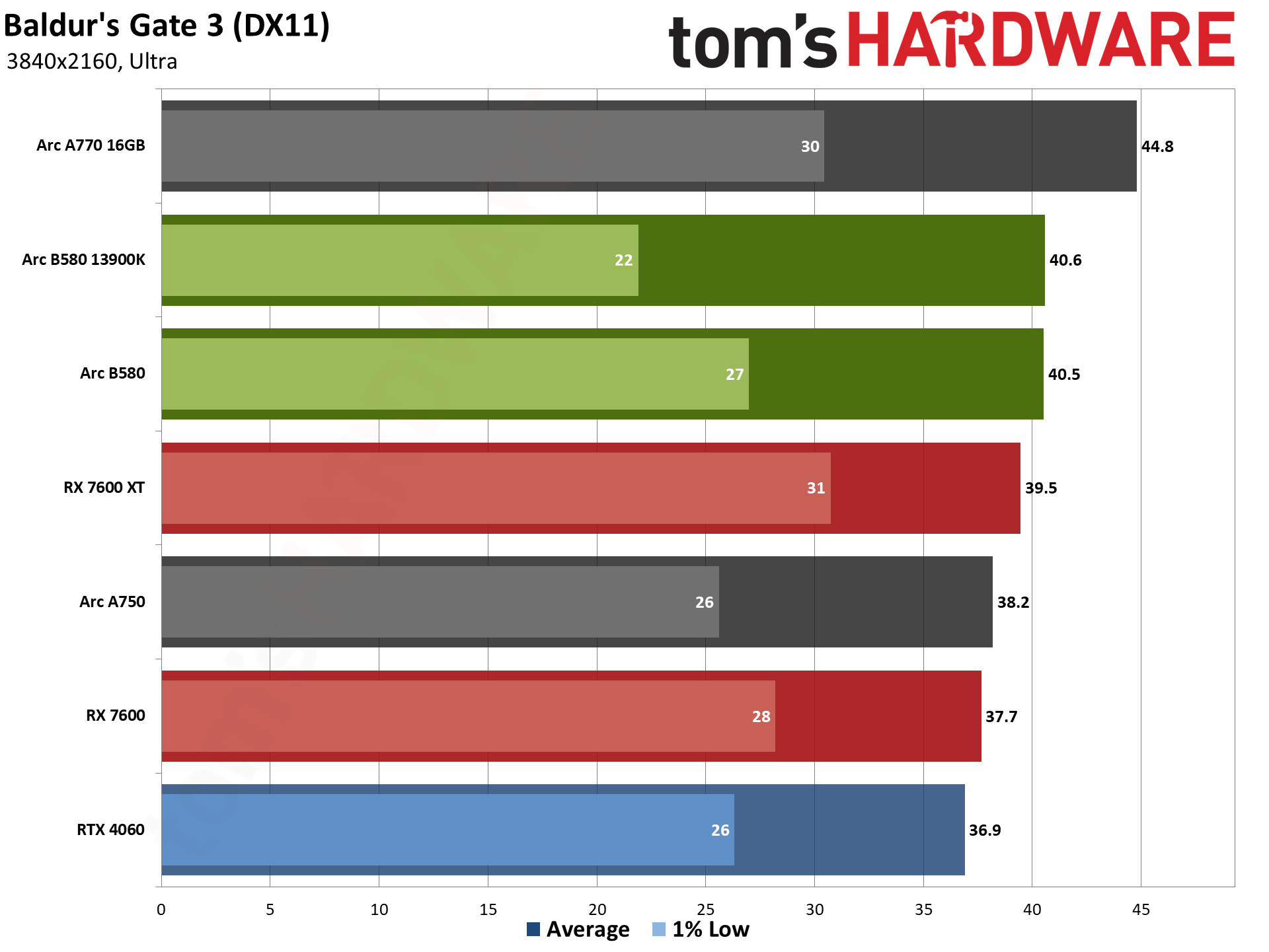

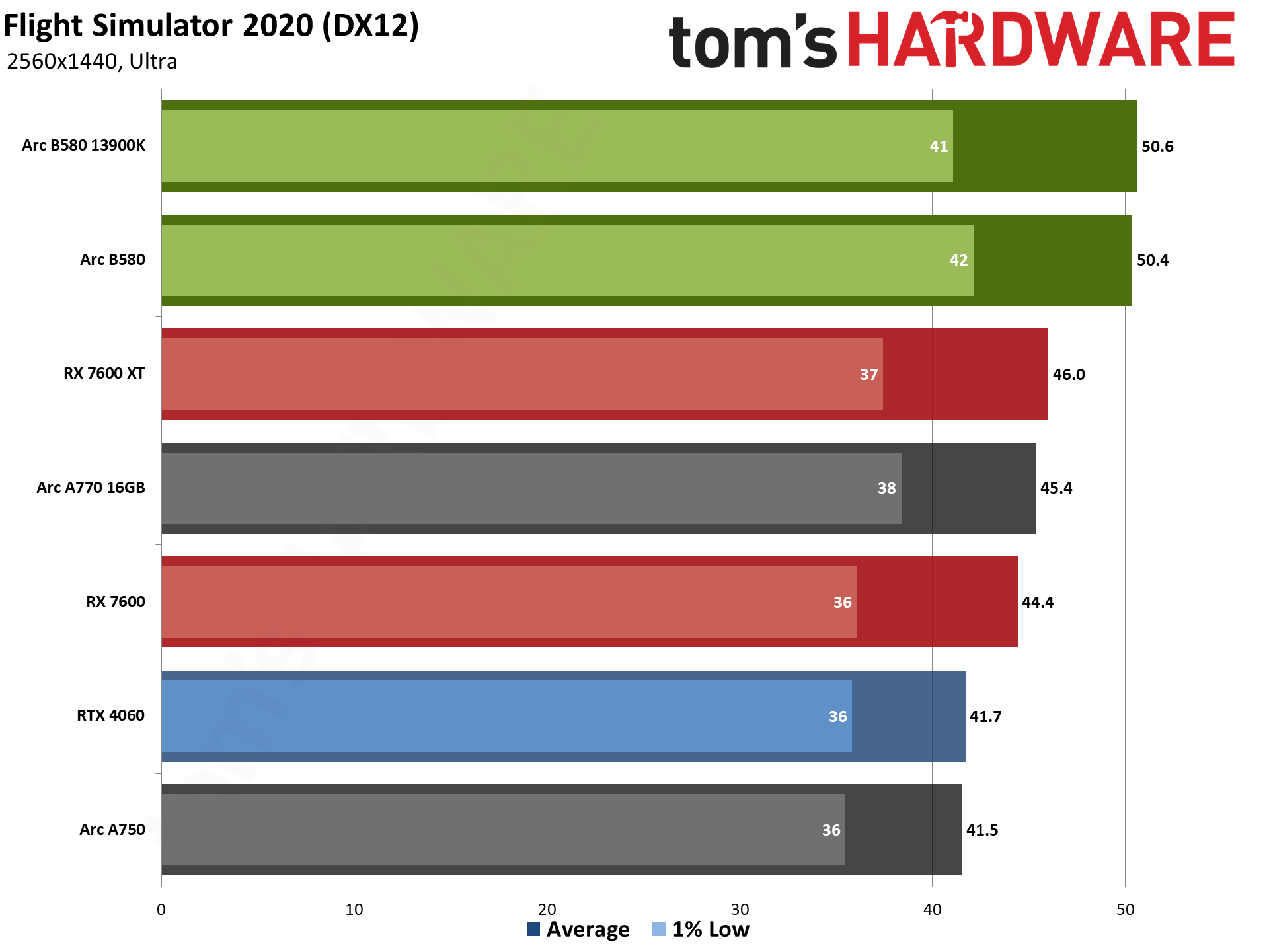

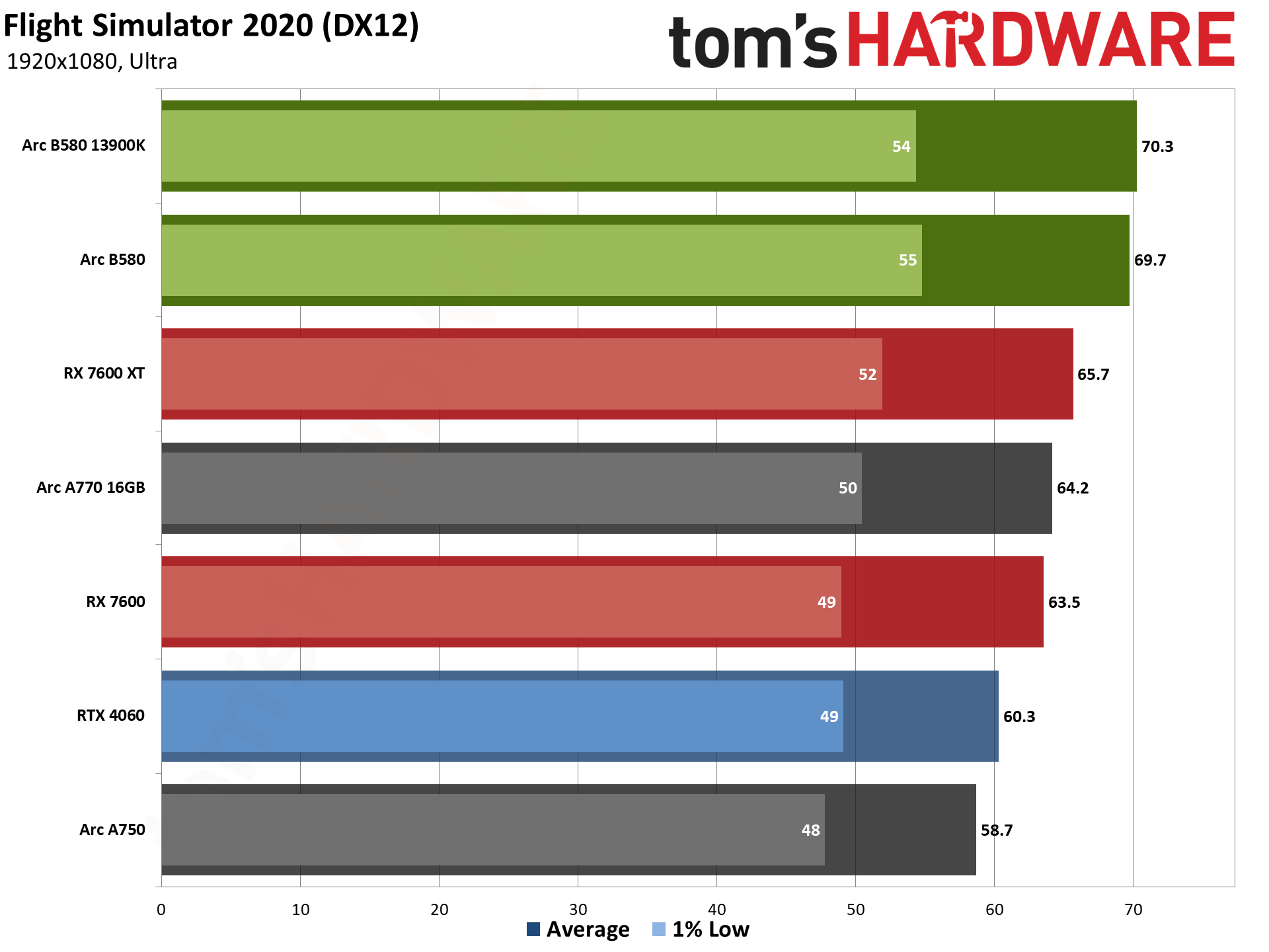

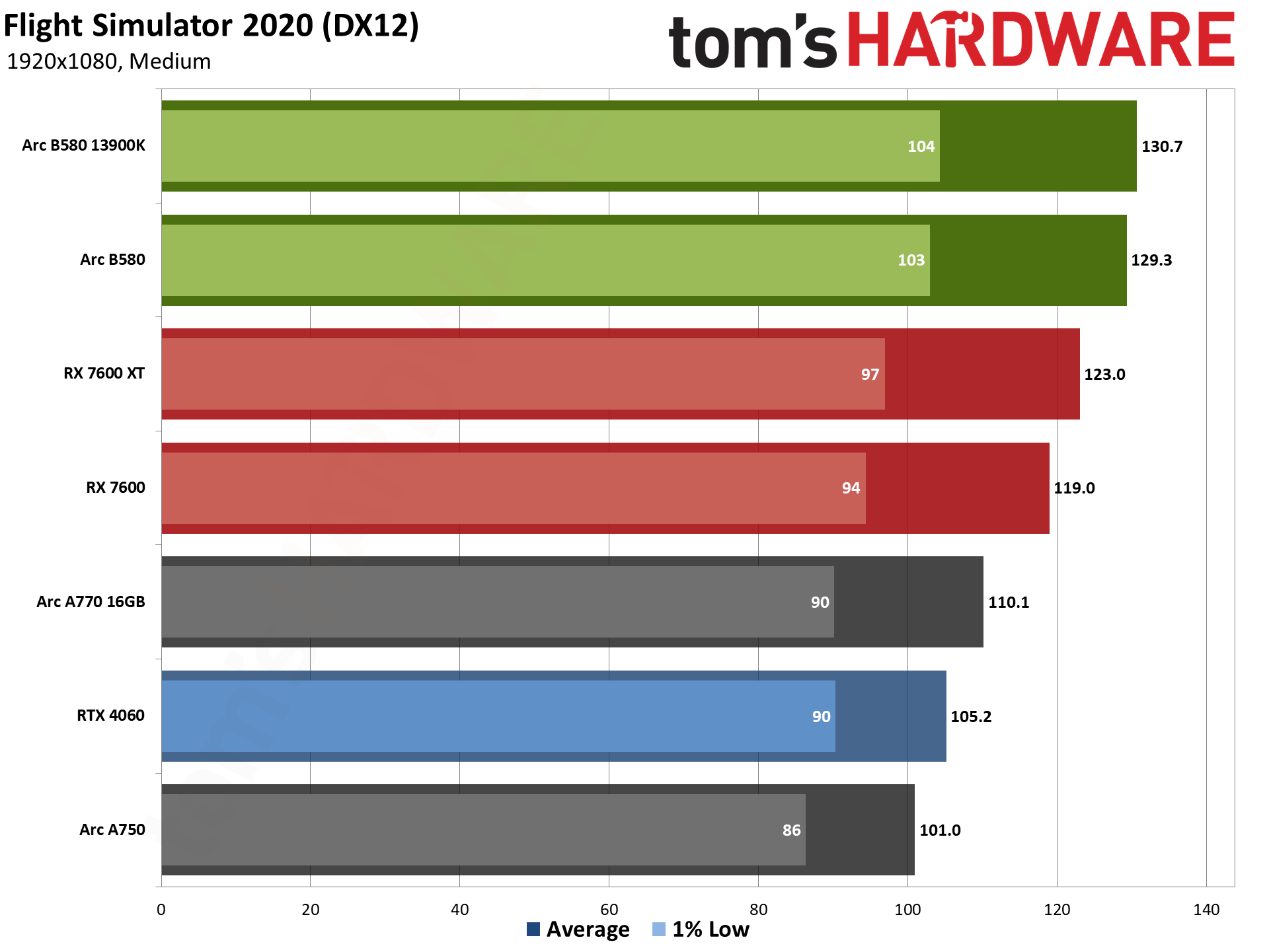

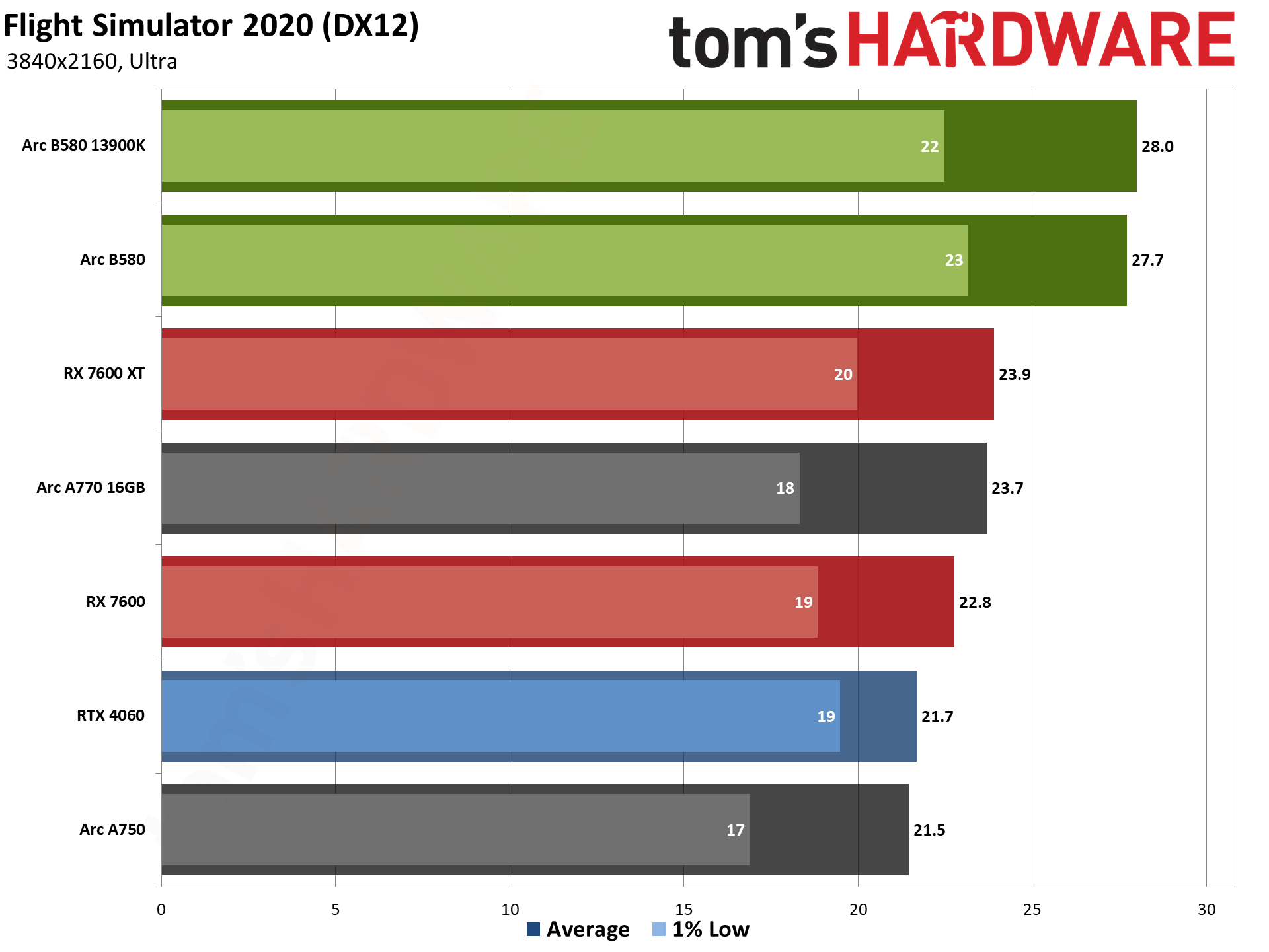

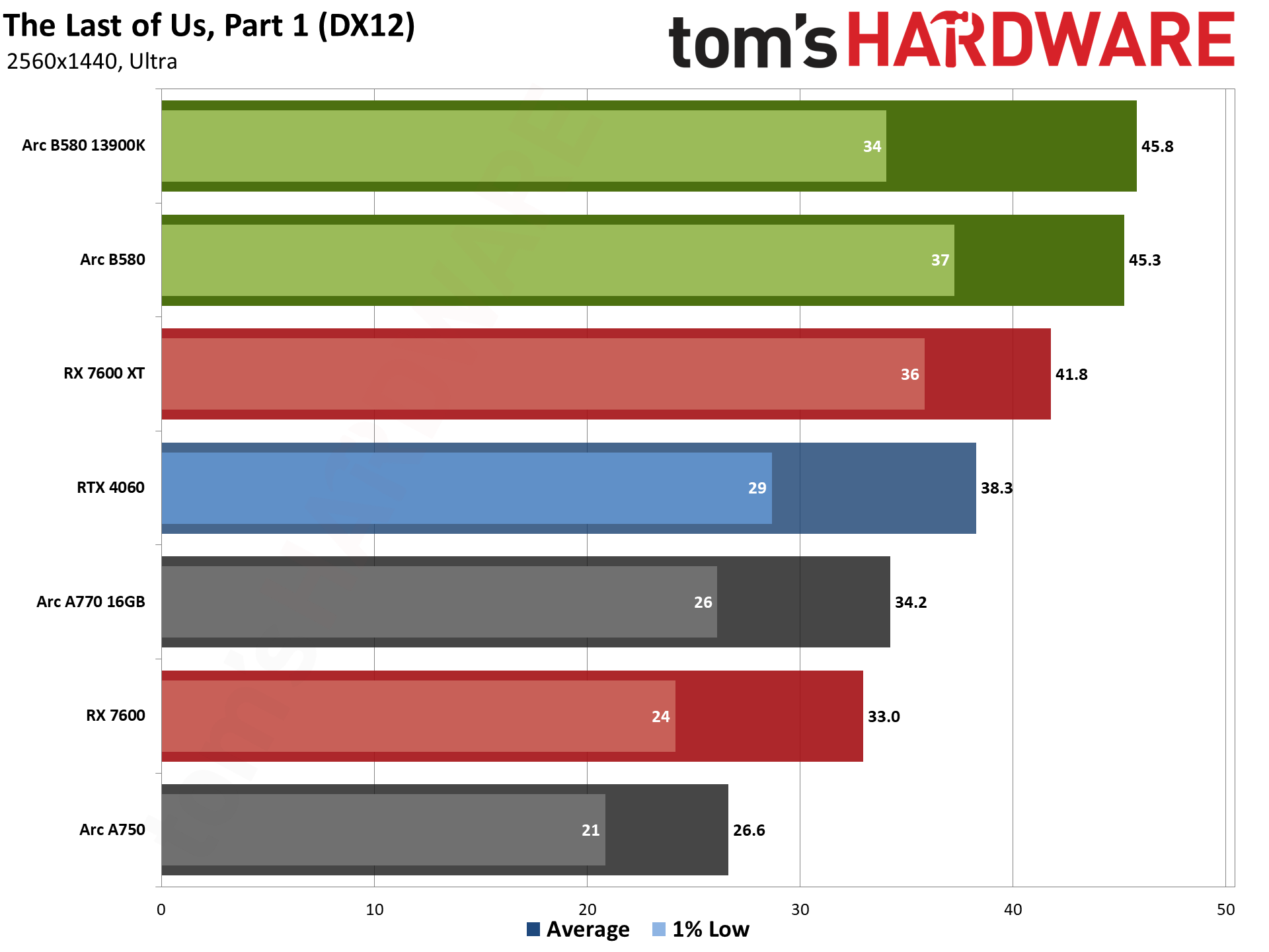

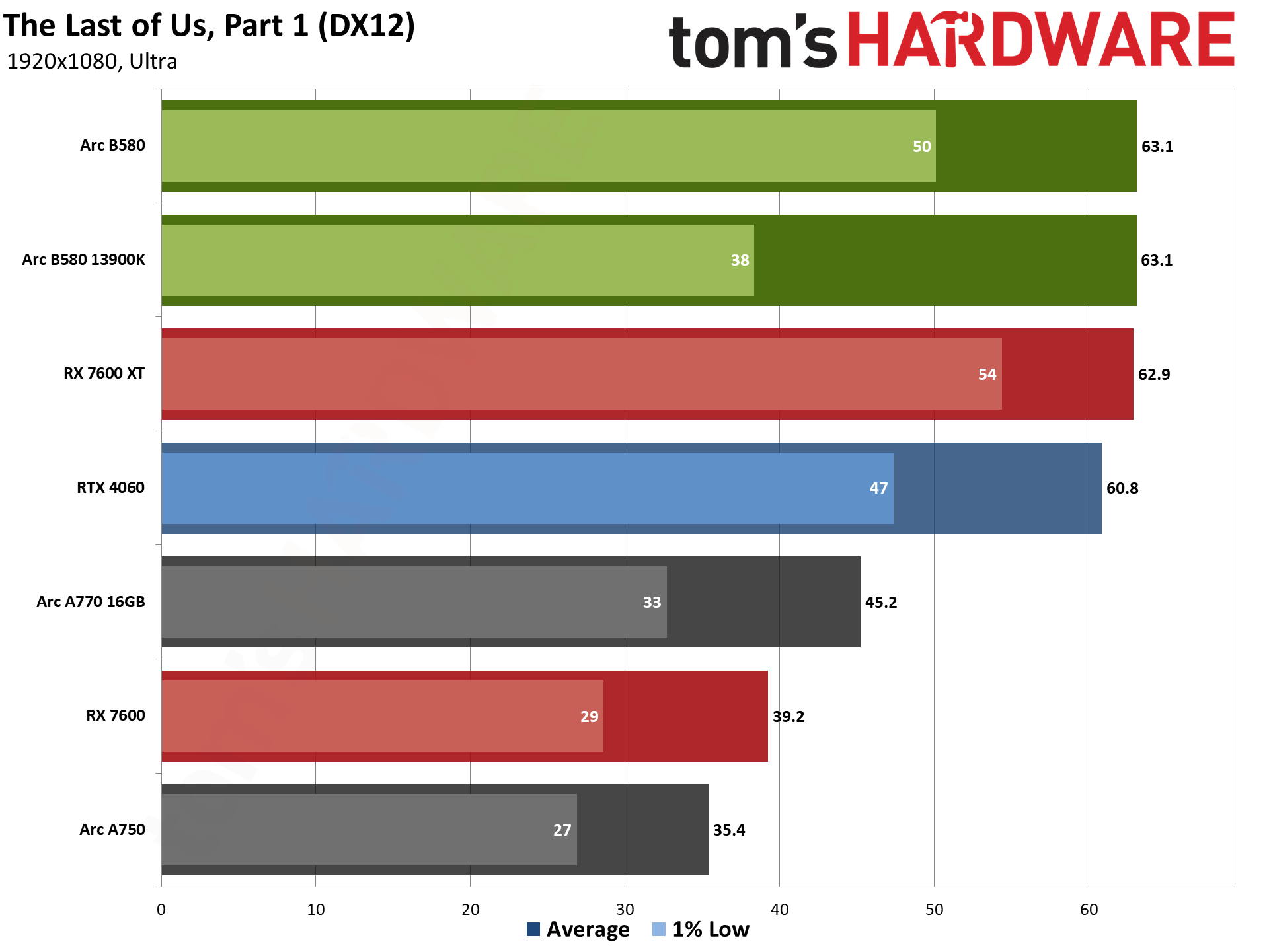

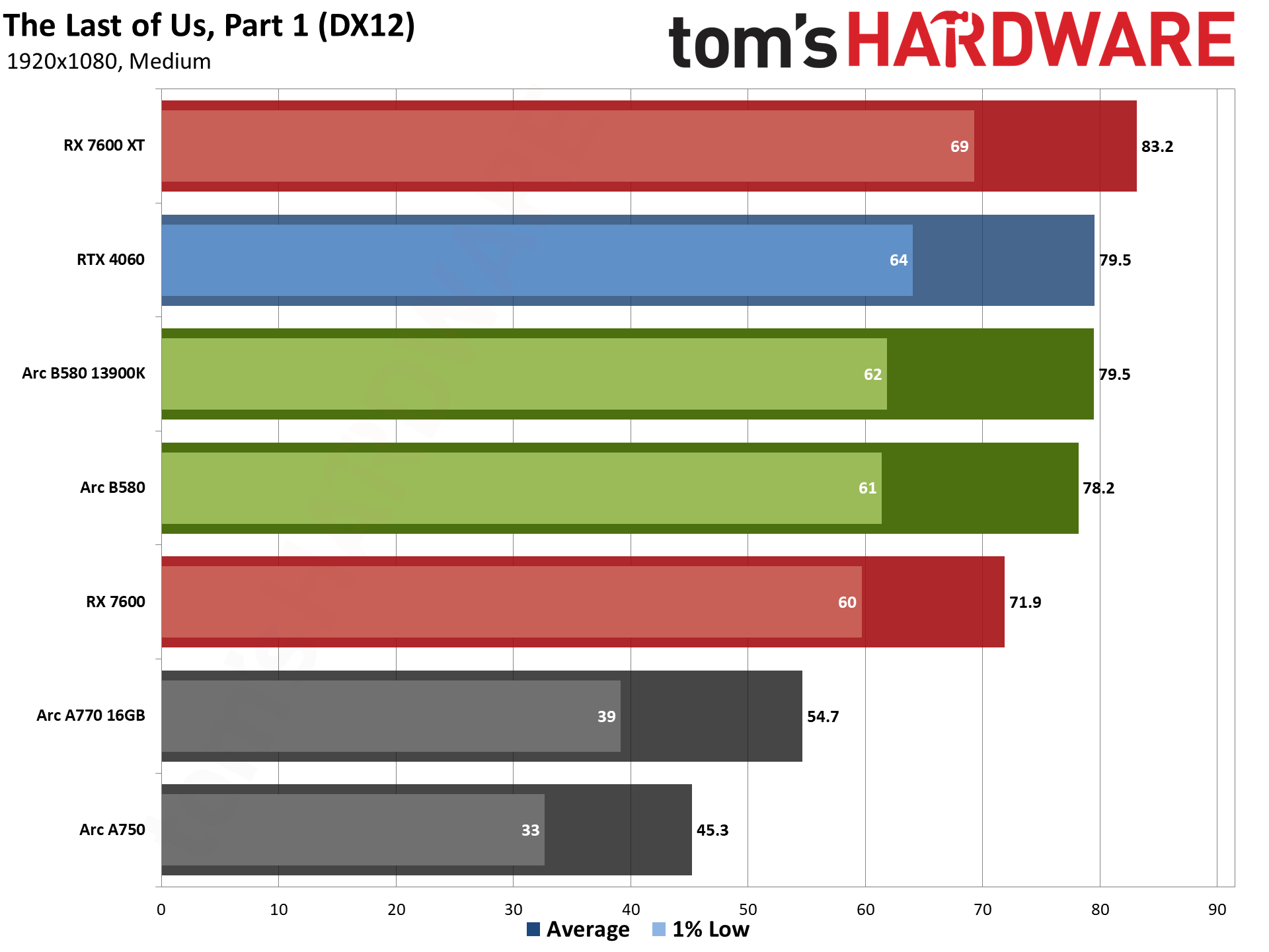

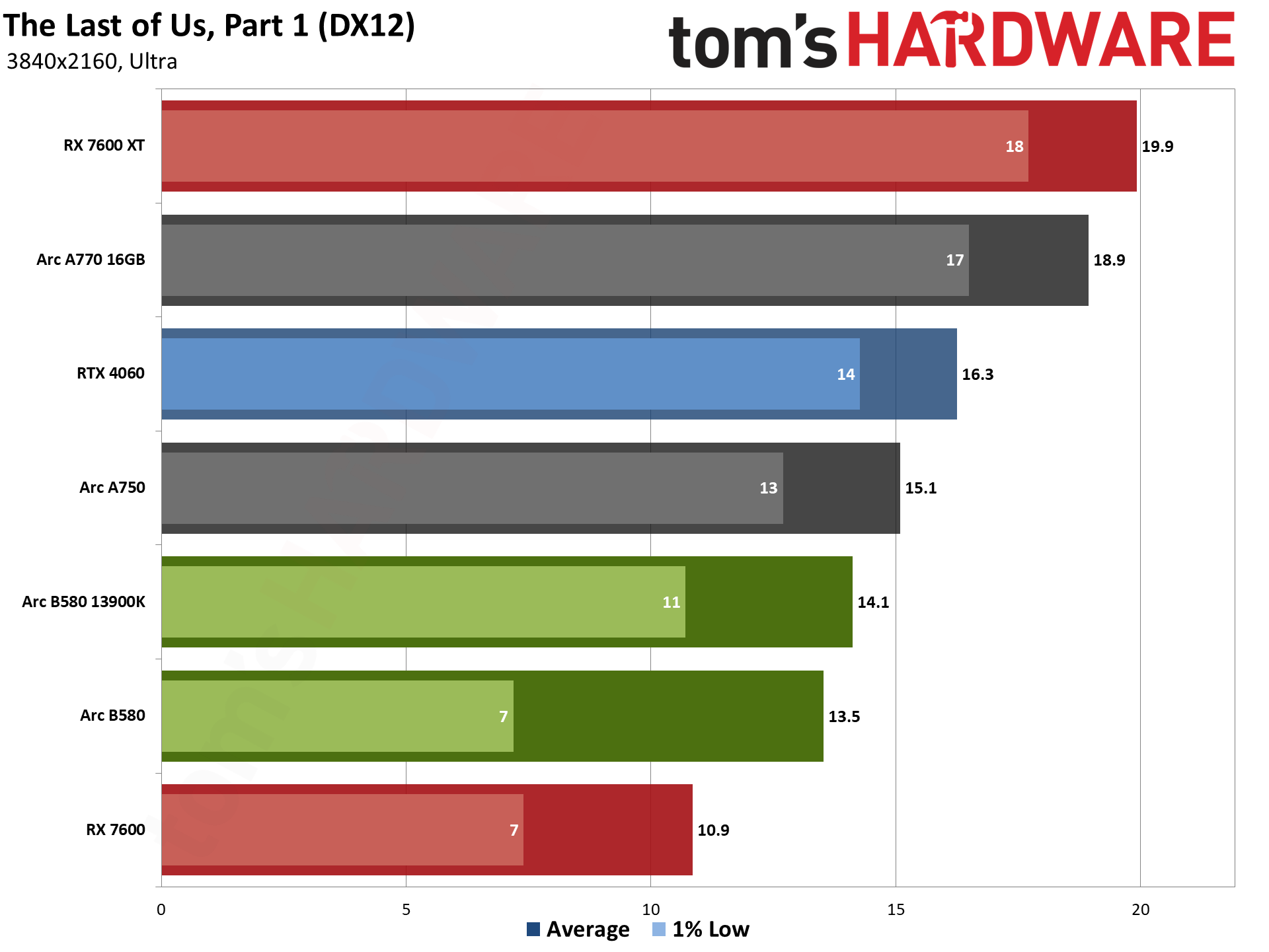

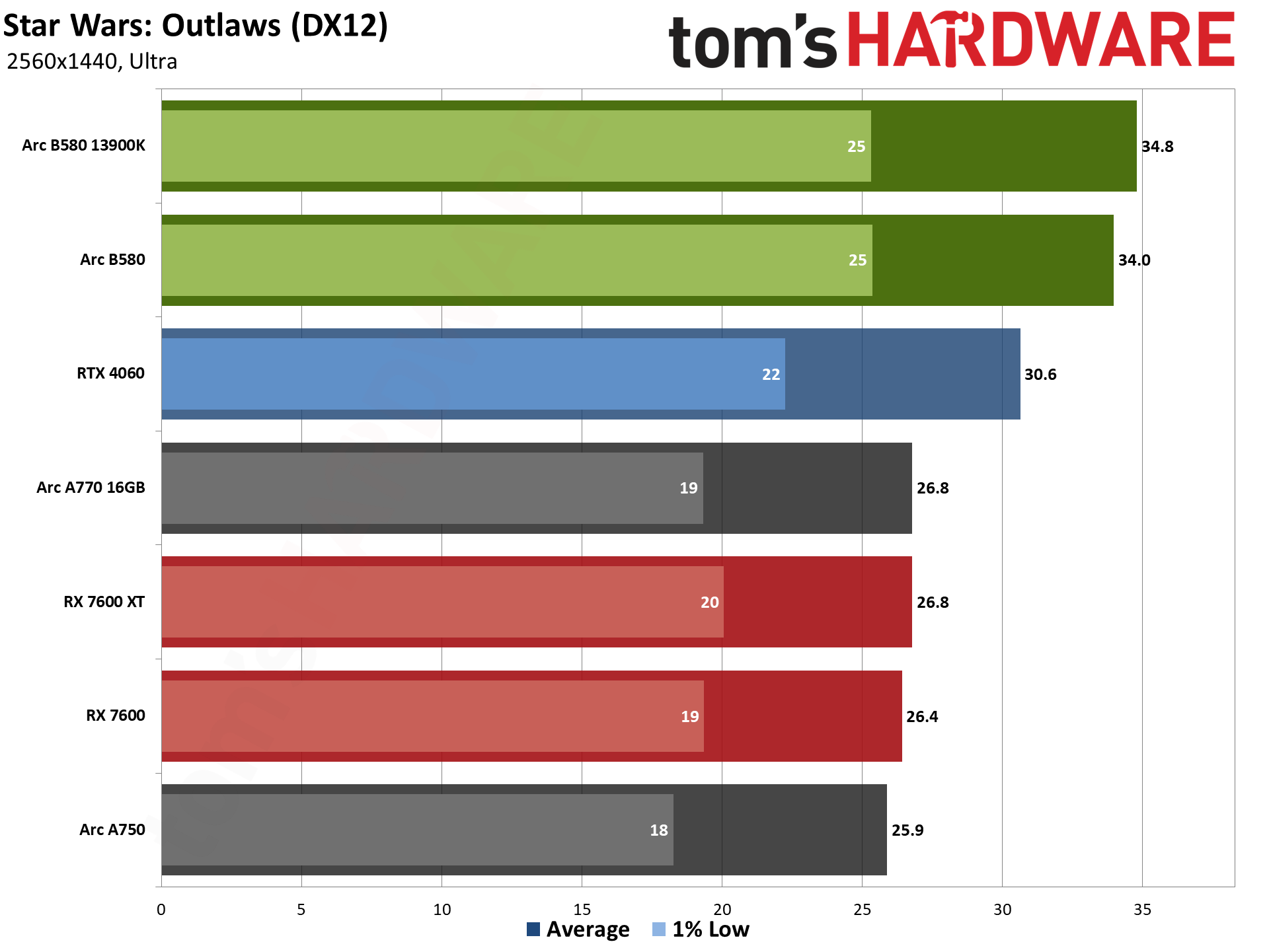

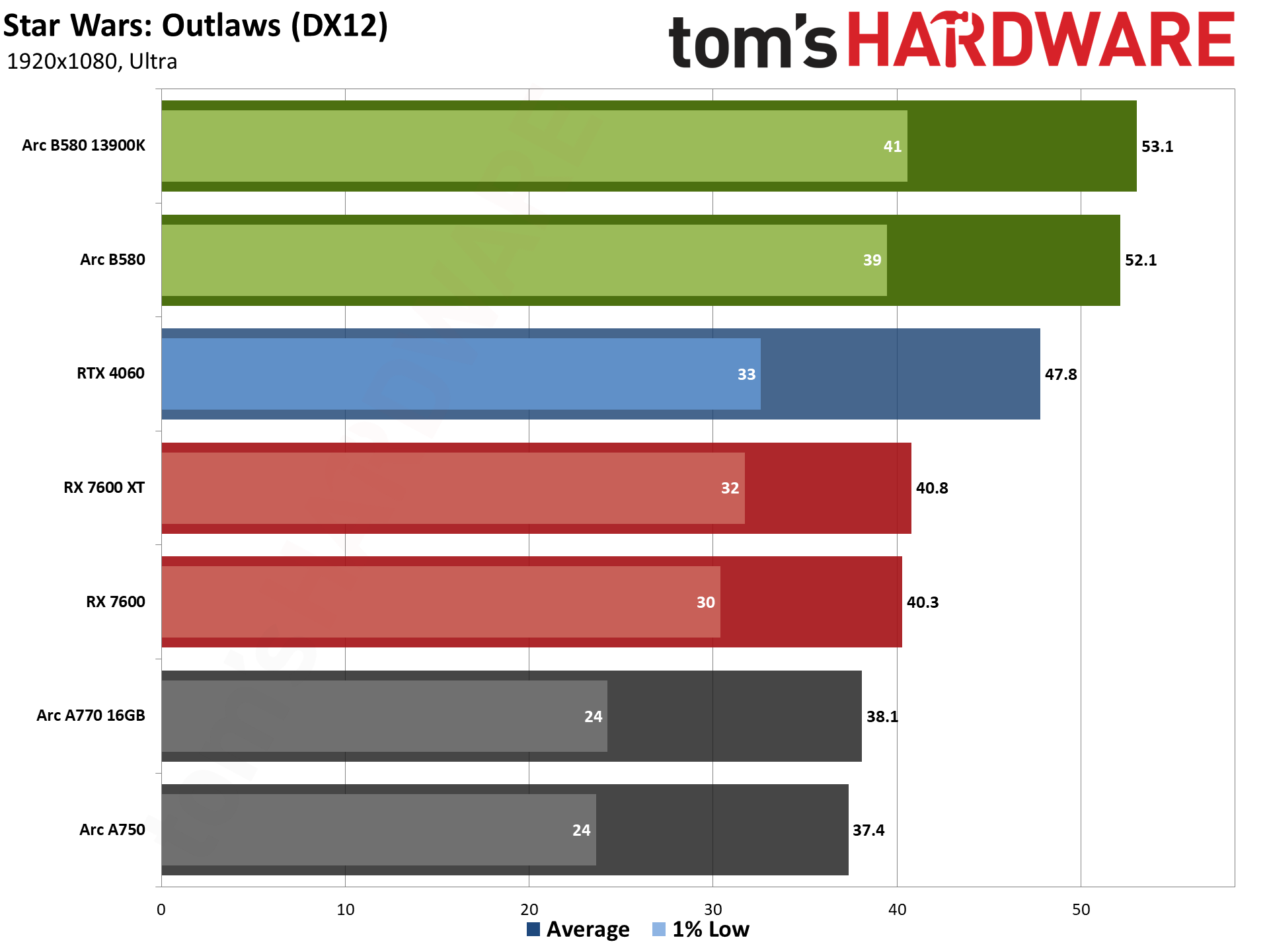

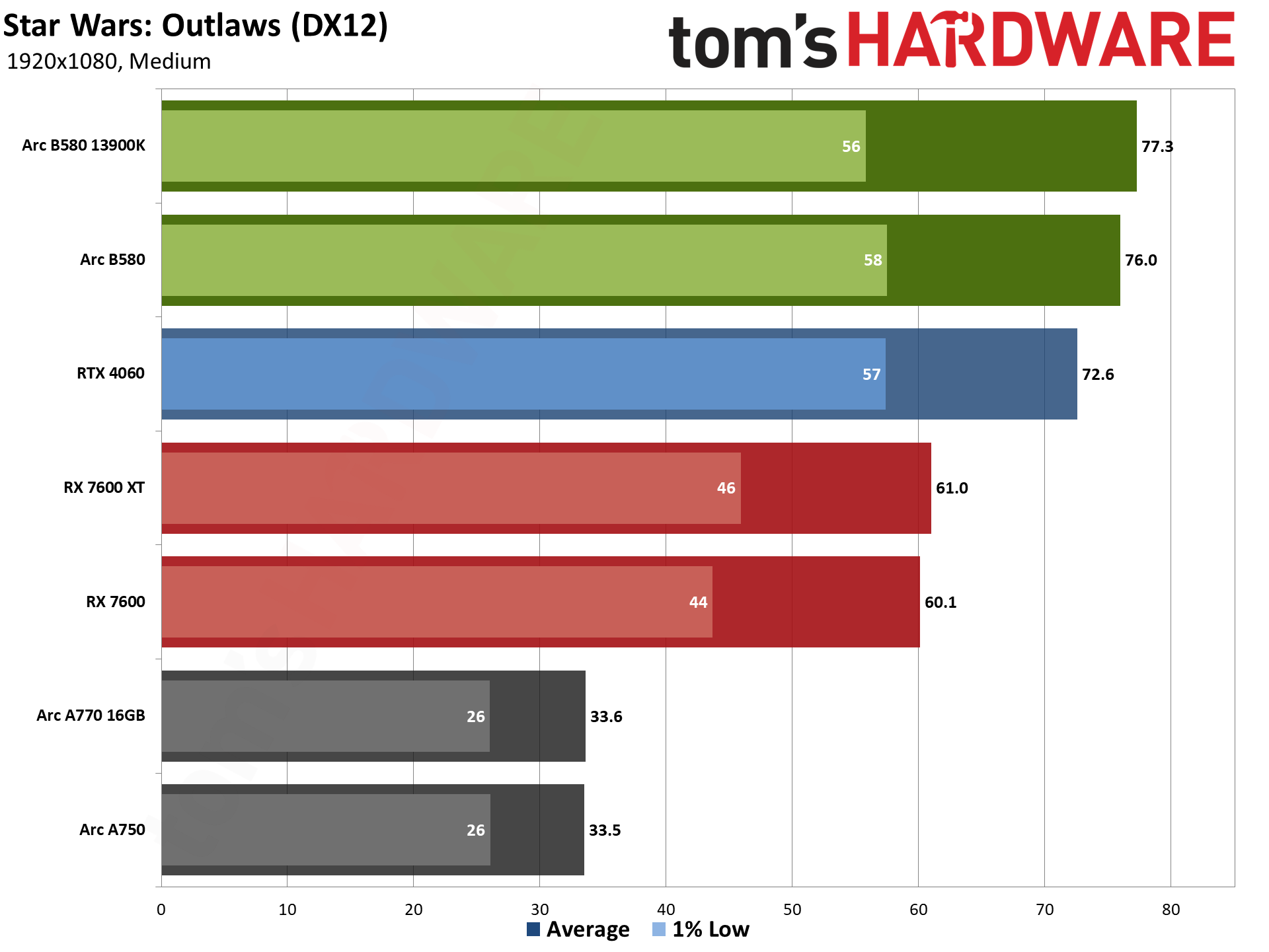

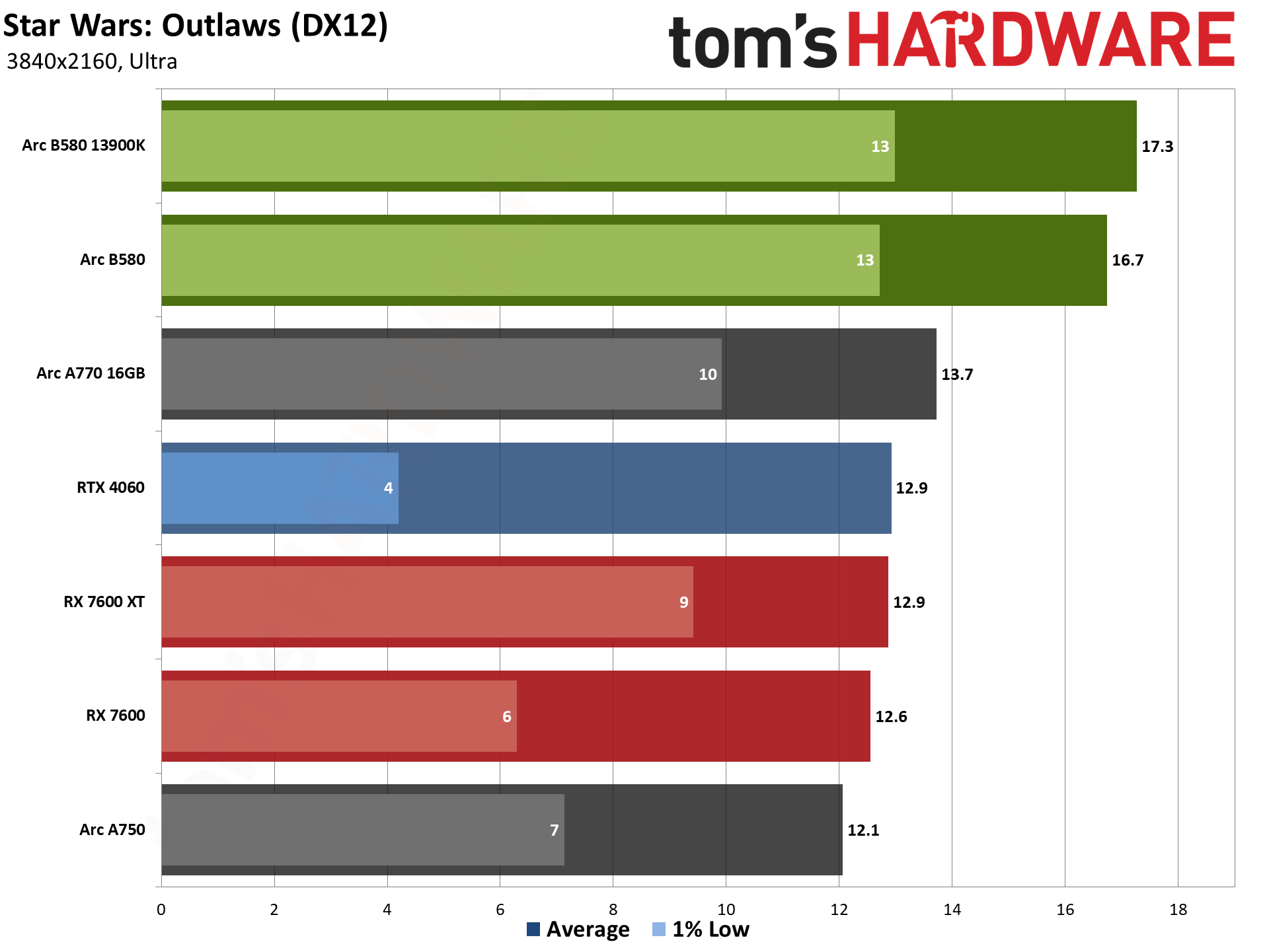

Each test has four charts, and we've ordered them by how we would rate their importance. So 1440p ultra comes first, then 1080p ultra, 1080p medium, and last is 4K ultra. The 4K results are mostly just extra information, and it's interesting to see how badly some of the GPUs do at those settings.

The high-level overview of rasterization performance largely serves as the TLDR for gaming in general. Intel's Arc B580 clearly comes out ahead of the competition in most cases, and despite somewhat lower specs in certain areas, it also easily eclipses the previous generation Arc A770. That potentially bodes well for the rumored higher spec BMG-G20 GPU that will likely come out in an Arc B770 at some point, featuring up to 32 Xe-cores, and probably an Arc B750 as well. But we'll have to wait and see what Intel actually does there.

Against the Nvidia RTX 4060, the incumbent budget-mainstream GPU right now, Arc B580 ends up leading by a comfortable 10% at 1440p ultra — exactly what Intel claimed. That's surprising as we're doing more demanding games and don't have any older esports stuff that runs at hundreds of frames per second. What's not surprising is that the gap narrows a lot at 1080p, where it's only 3% faster at ultra settings and 1.5% faster at medium, pretty much tied. And even though it's mostly meaningless — neither GPU really handles 4K ultra well — it's 48% faster in our 4K ultra results. The 4060 definitely runs out of VRAM in several games, which tanks performance.

AMD's RX 7600 and RX 7600 XT trade blows with the RTX 4060 — with the 7600 XT clearly pulling ahead at higher resolutions thanks to its 16GB of VRAM. The 7600 XT also takes the top spot at 1080p medium, ties the B580 at 1080p ultra, and then falls behind at 1440p and 4K. That's again pretty much expected. AMD has additional hardware features that help reduce CPU load at lower settings and resolutions, thus enabling higher performance. So it's 4% faster than the B580 at 1080p medium, tied (1% faster) at 1080p ultra, and 4% slower at 1440p — and 11% slower at 4K, if you care.

For the vanilla 7600, Intel gets a clear win. They're the same price, more or less, and while Intel's B580 leads by just 1% at 1080p medium, that jumps to a 26% lead at ultra settings. Yes, even at 1080p, ultra settings benefit from having more than 8GB VRAM in our test suite. That increases to a massive 46% lead at 1440p, and an almost laughable 79% lead at 4K where the 8GB card generally has no business trying that resolution without upscaling.

Intel's real advantage in gaming boils down to the value proposition. It's not universally faster, but it nominally costs $50 less than the RTX 4060, about $20 less than the RX 7600 (depending on the model), and $60–$70 less than the RX 7600 XT. It also uses less power than the RX 7600 XT on average, slightly more power than the RX 7600, and 30W more power than the RTX 4060.
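To put a rough number on that value proposition, the sketch below combines the 10% 1440p ultra lead over the RTX 4060 with the $50 nominal price gap. The $250 B580 price is an assumption used purely for illustration, and `perf_per_dollar_ratio` is a hypothetical helper, so treat the result as a back-of-the-envelope figure rather than a measured one.

```python
def perf_per_dollar_ratio(perf_lead_pct, price_a, price_b):
    """How many times more performance per dollar card A offers versus card B.

    perf_lead_pct is card A's lead over card B in percent (10.0 means 10% faster).
    """
    relative_perf = 1.0 + perf_lead_pct / 100.0
    return relative_perf * price_b / price_a

b580_price = 250.0                  # ASSUMPTION: illustrative price, not from this page
rtx4060_price = b580_price + 50.0   # the B580 nominally costs $50 less than the RTX 4060

ratio = perf_per_dollar_ratio(10.0, b580_price, rtx4060_price)
print(f"B580 perf per dollar vs RTX 4060 at 1440p ultra: {ratio:.2f}x")
```

Under those assumptions, a modest 10% performance lead turns into a much larger gap in performance per dollar, which is why the price difference matters more than the raw framerates.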

With the overall performance out of the way, let's go through the individual games in alphabetical order. We've provided a few testing notes on the performance results as well where there's something interesting to mention — like driver issues we encountered.

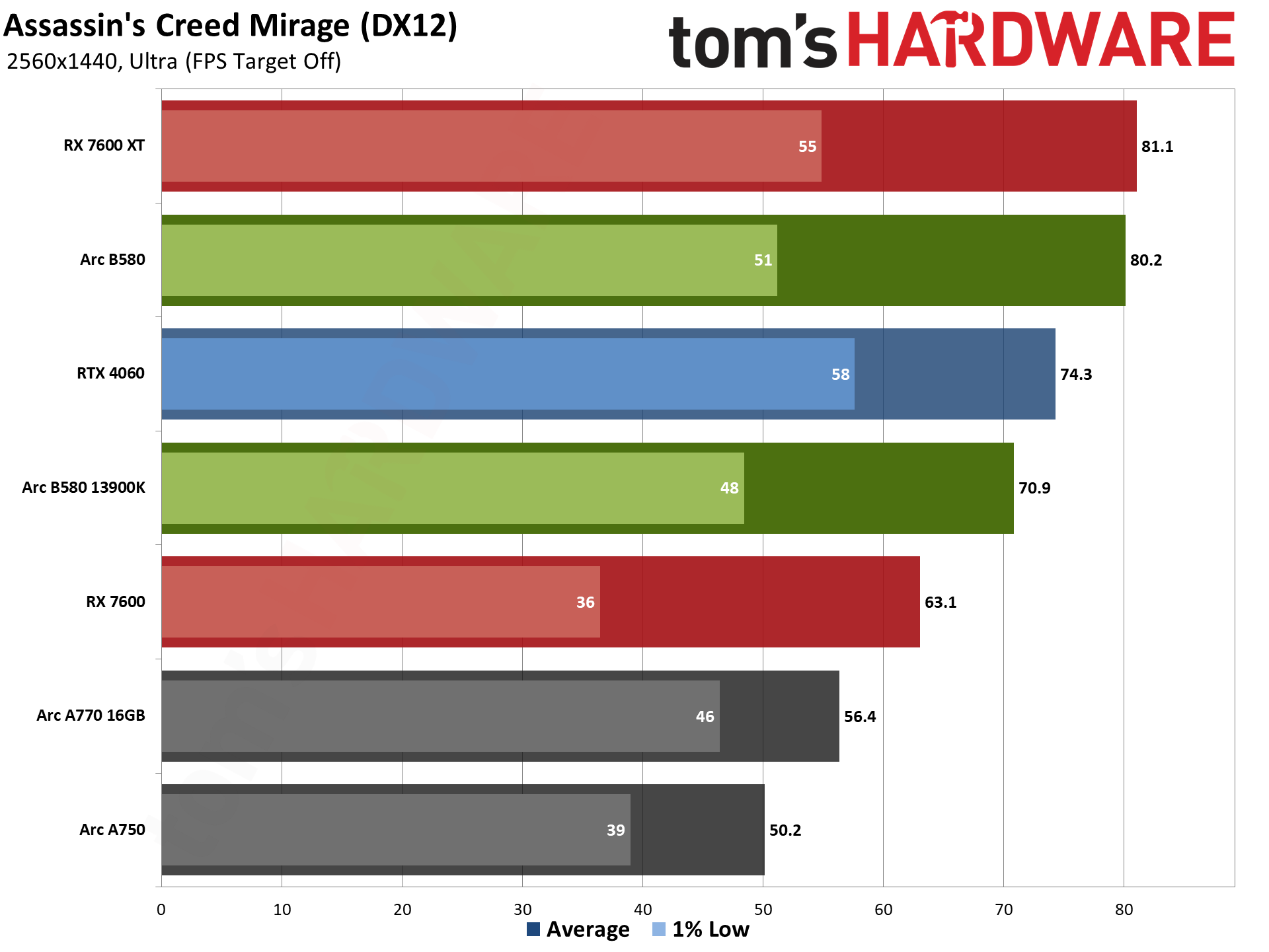

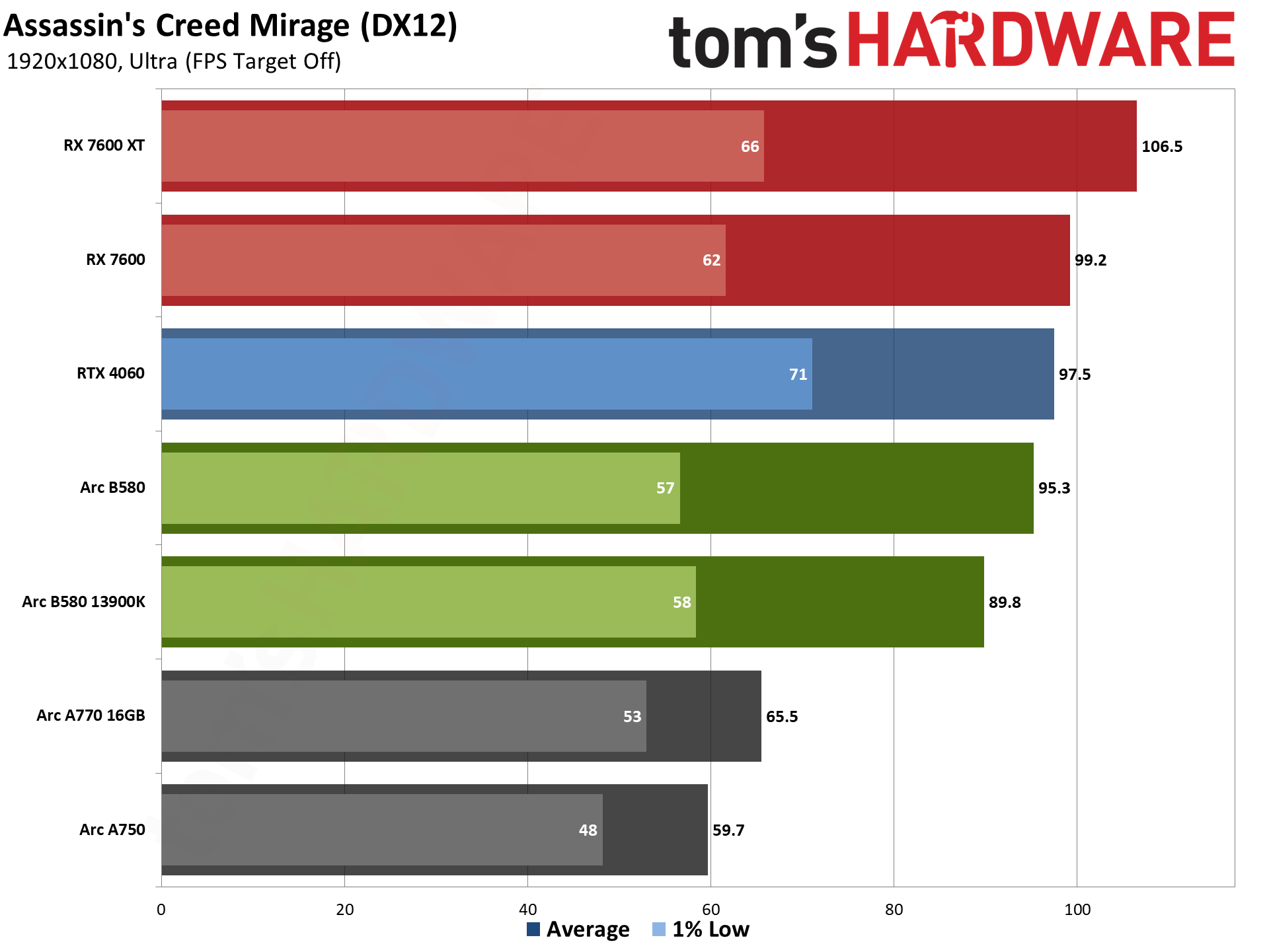

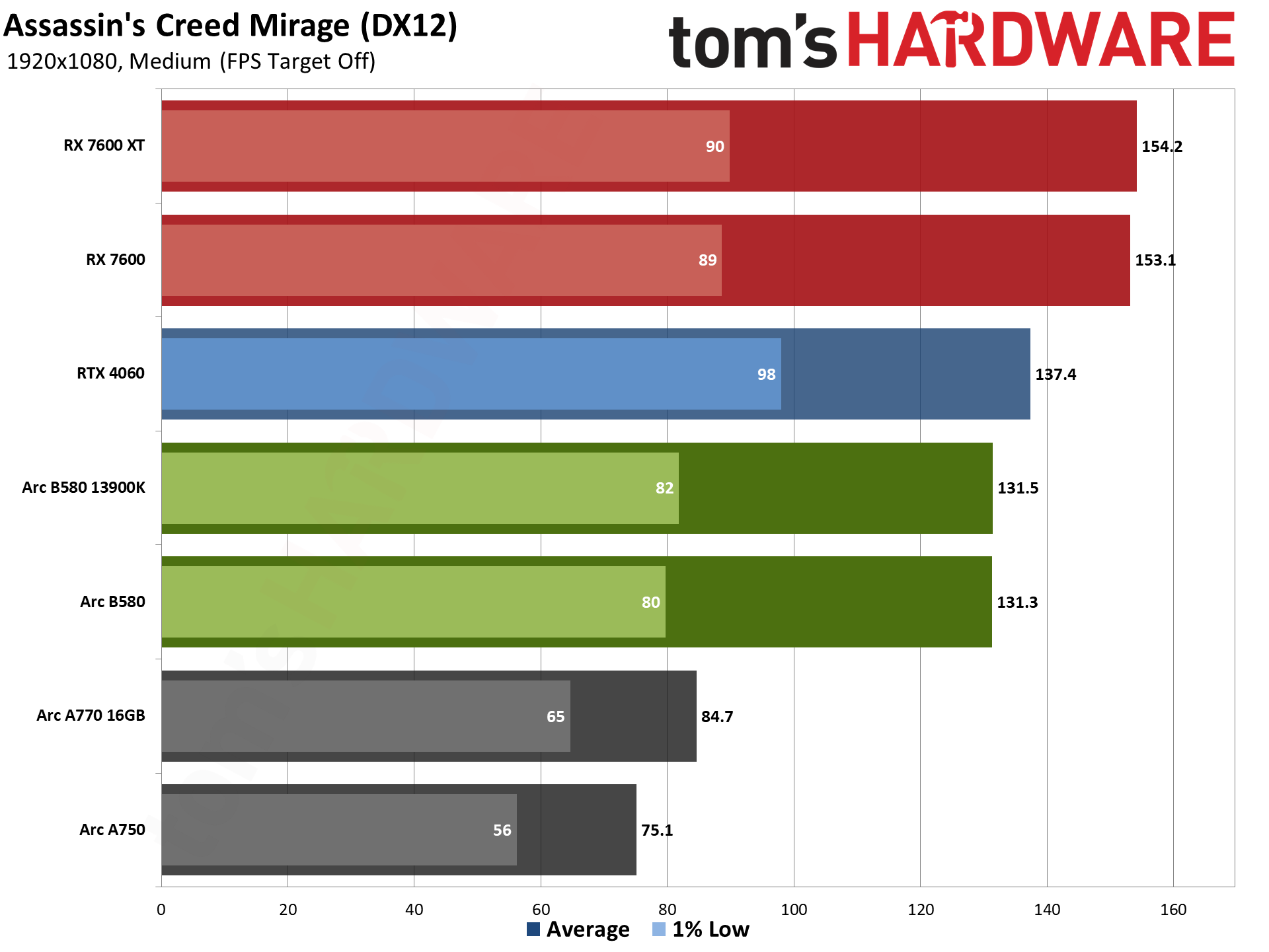

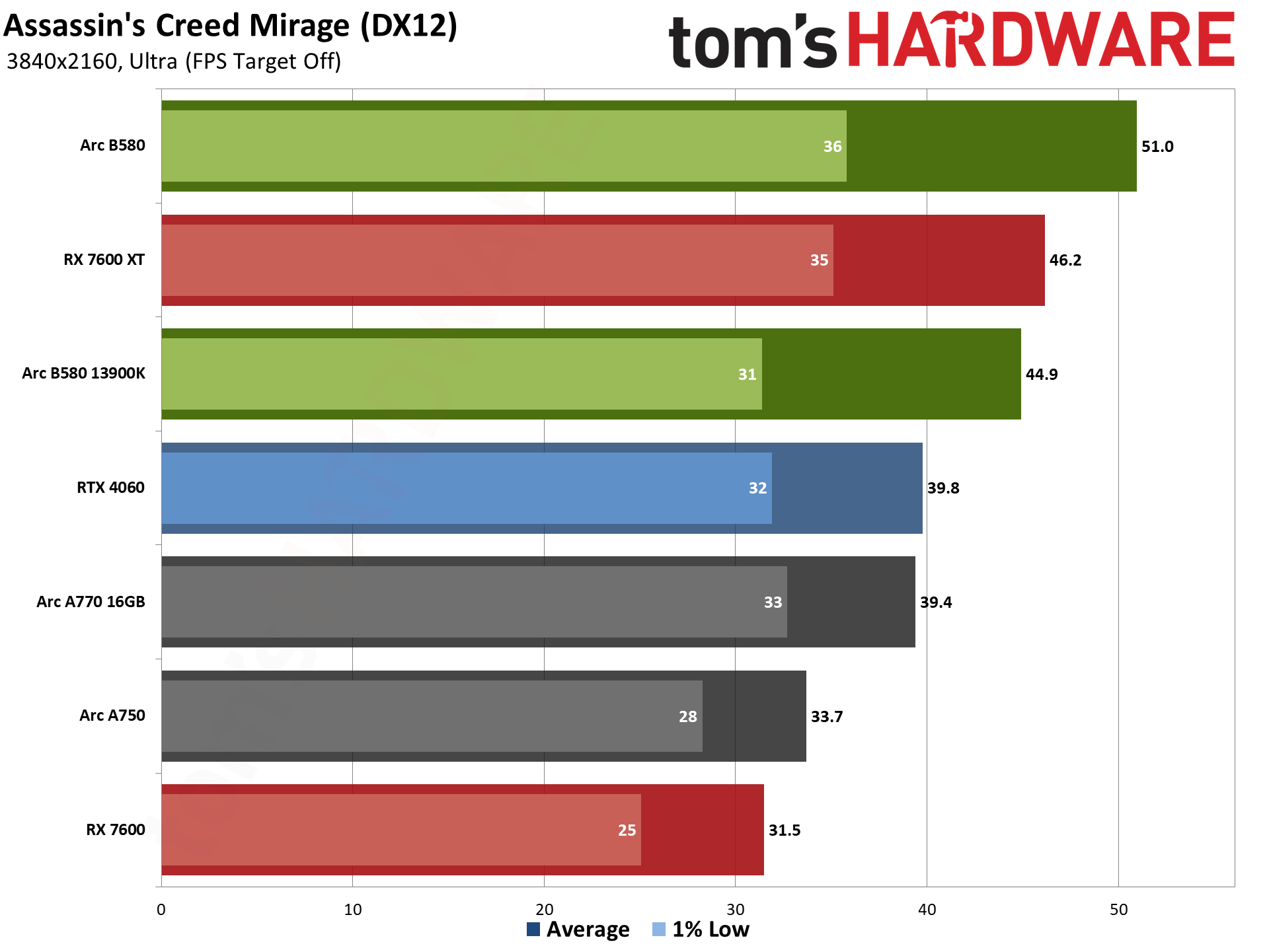

Assassin's Creed Mirage uses the Ubisoft Anvil engine and DirectX 12. It's an AMD-promoted game as well, though these days that doesn't necessarily mean it always runs better on AMD GPUs. It could be CPU optimizations for Ryzen, or more often it just means a game has FSR2 or FSR3 support — FSR2 in this case. It also supports DLSS and XeSS upscaling.

Given the AMD promotional aspect, it's no surprise to see AMD's 7600 and 7600 XT at the top of the charts at 1080p, though the vanilla 7600 falls behind at 1440p and 4K. The B580 is basically tied with the 7600 XT at 1440p, and then pulls ahead by 10% at 4K. And that's not a useless 4K ultra result in this case, as the 7600 XT and B580 are still clearly playable at 46 and 51 FPS, respectively.

The B580 only comes close to matching the RTX 4060 at 1080p, which makes this one of its worse showings. It does pull ahead at 1440p and 4K, however, due most likely to the 12GB vs 8GB VRAM. The B580 also delivers pretty massive generational improvements over the A-series. It's 50–75 percent faster than the A750, and 30–55 percent faster than the A770.

Baldur's Gate 3 is our sole DirectX 11 holdout — it also supports Vulkan, but that performed worse on the GPUs we checked, so we opted to stick with DX11. Built on Larian Studios' Divinity Engine, it's a top-down perspective game, which is a nice change of pace from the many first person games in our test suite. It's also one of a handful of games where there were some Arc performance oddities.

The A-series GPUs again performed comparatively better in this particular game, with the A770 coming out slightly ahead of the B580 and the A750 only slightly behind at 1080p. We mentioned this to Intel and it's looking into the situation. We expect a future driver release will improve the B580 performance.

AMD's Navi 33 chips do really well, beating the B580 at everything except 4K, and even then it's still only slightly behind. 1% lows on the B580 are also worse than on the other GPUs, again indicating there's more driver work to be done.

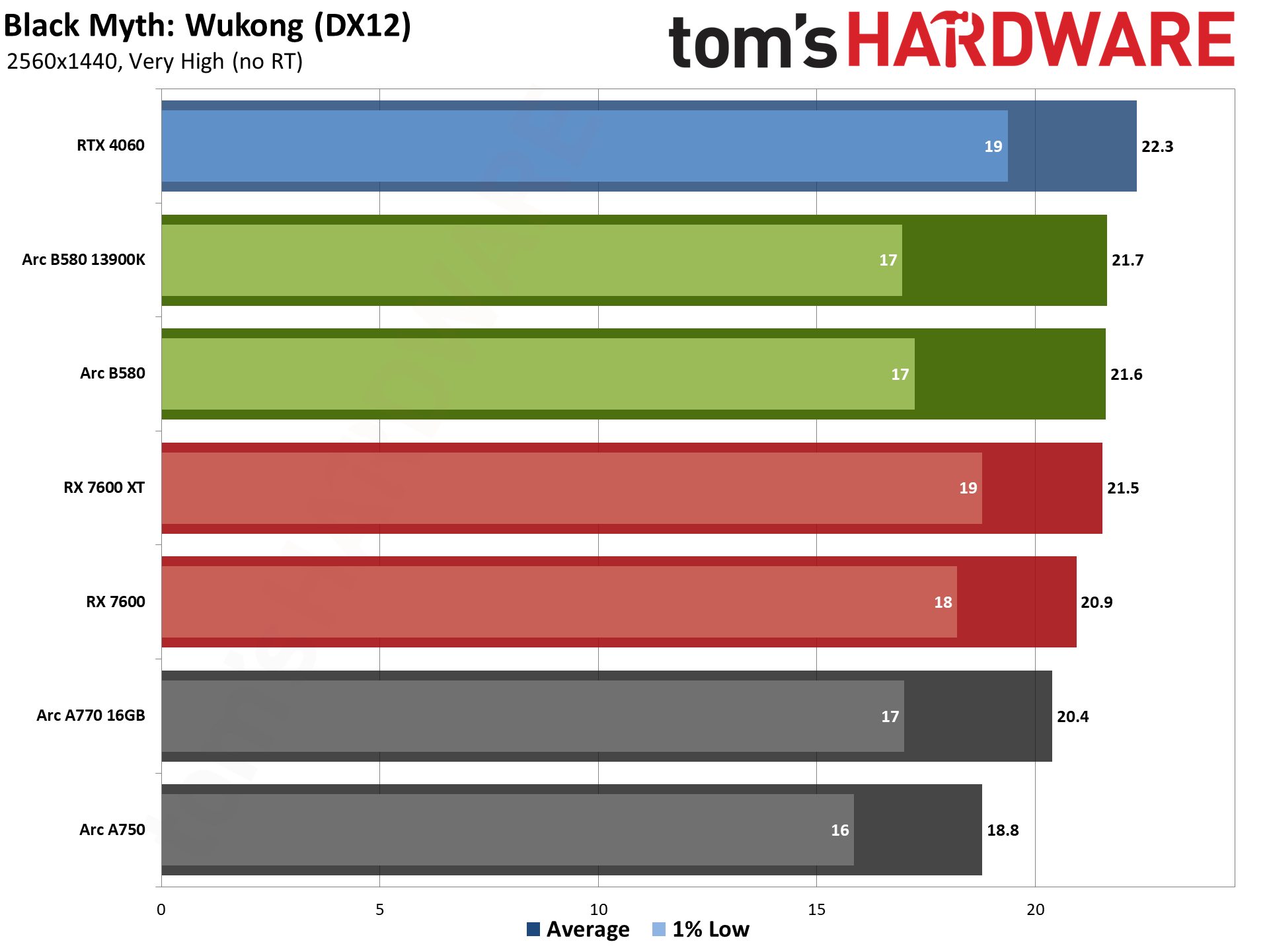

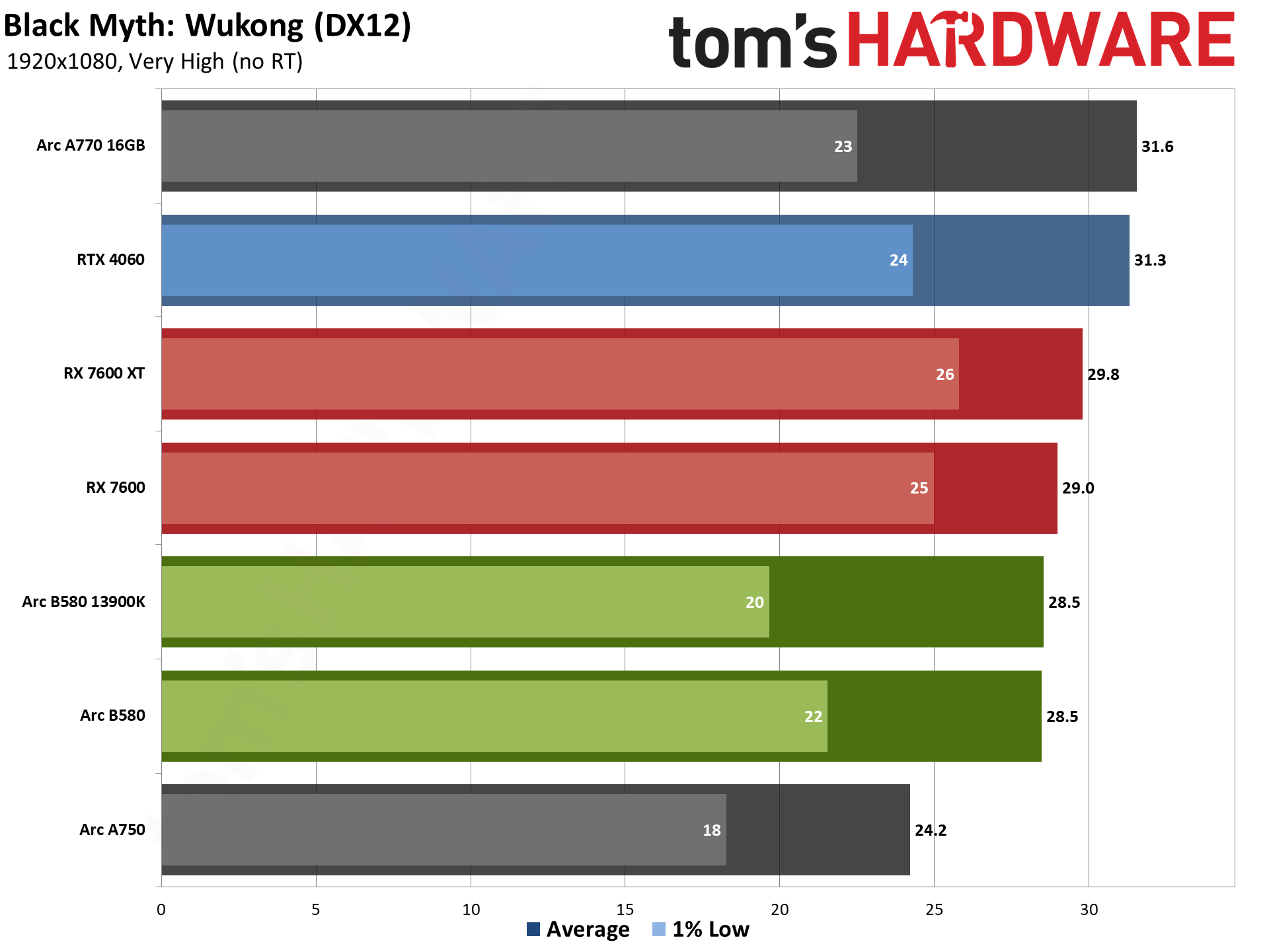

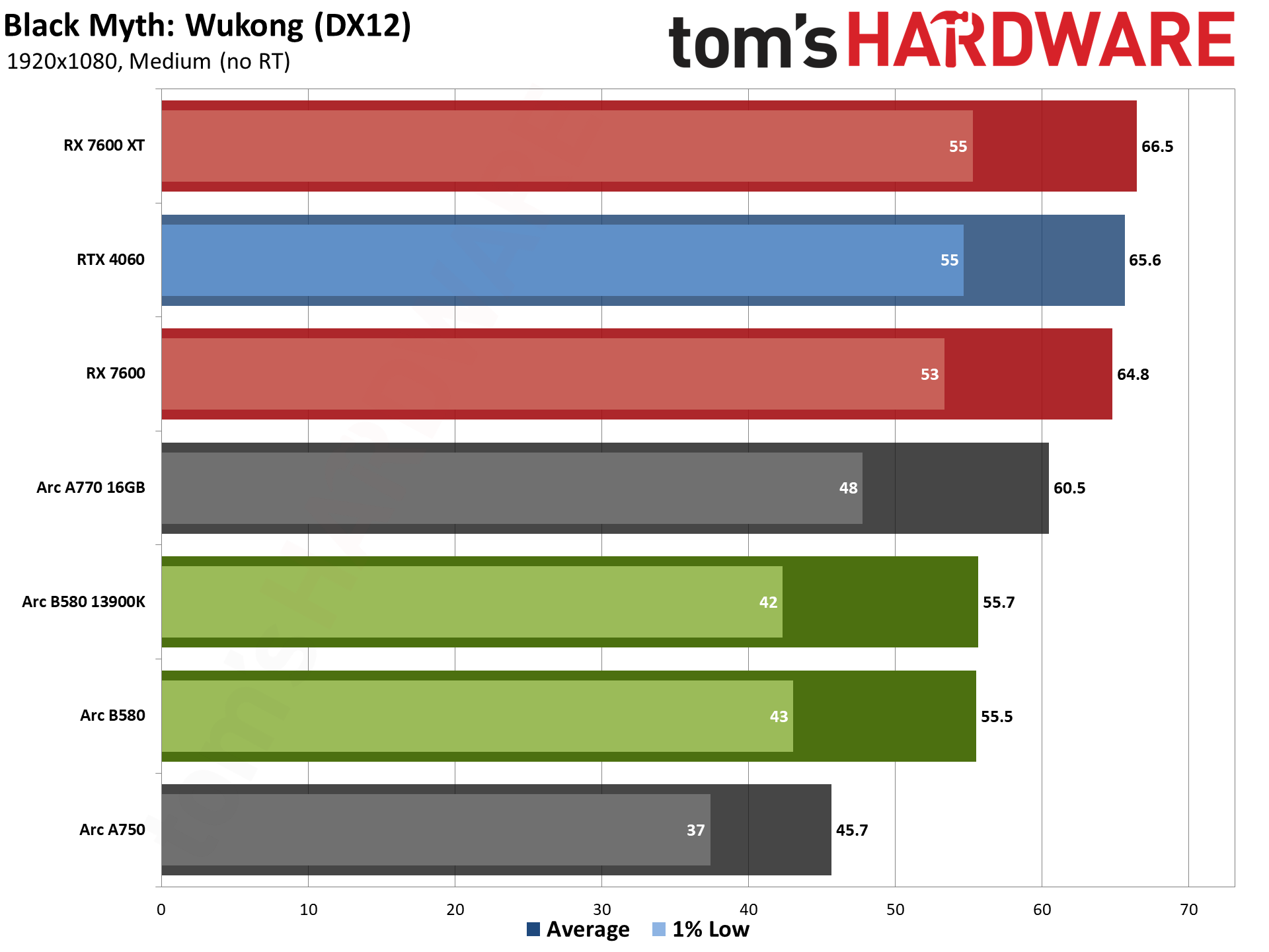

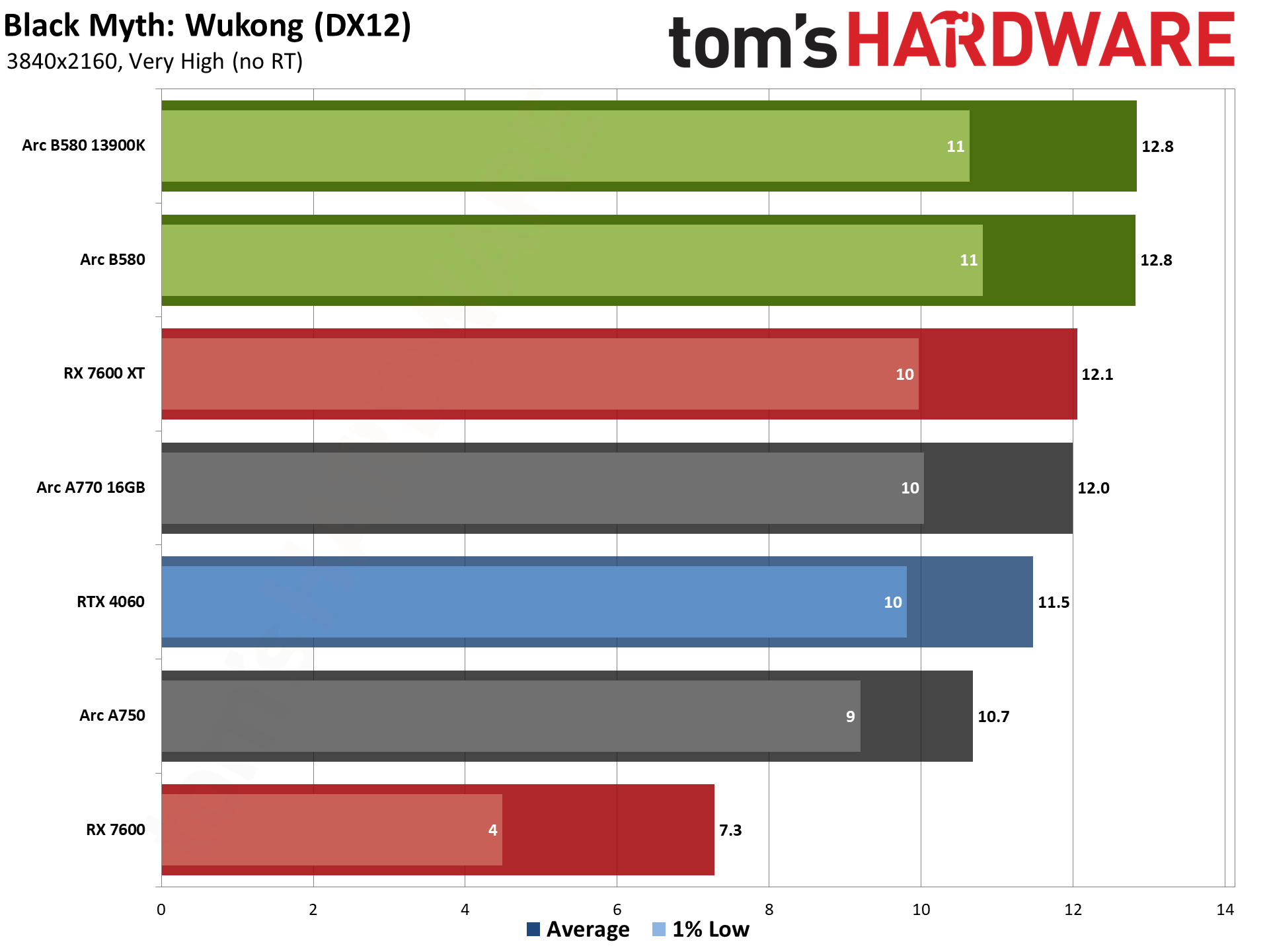

Black Myth: Wukong is one of the newer games in our test suite. Built on Unreal Engine 5, with support for full ray tracing as a high-end option, we opted to test using pure rasterization mode. Full RT may look a bit nicer, but the performance hit is quite severe. (Check our linked article for our initial launch benchmarks if you want to see how it runs with RT enabled.)

This is an Nvidia-promoted game, and the RTX 4060 does better than normal at 1440p and lower resolutions, coming out 3–18 percent faster than the B580. But there are some performance oddities as well, like the A770 ranking first at 1080p very high settings.

VRAM at 1440p and lower isn't an issue, based on the AMD GPU results. That means there's likely driver optimization work to do for Intel on the B580. The new GPU beats the A750 by 15–22 percent, which is far lower than in most other games. Against the A770, it's up to 10% slower at 1080p, and 6–7 percent faster at 1440p/4K.

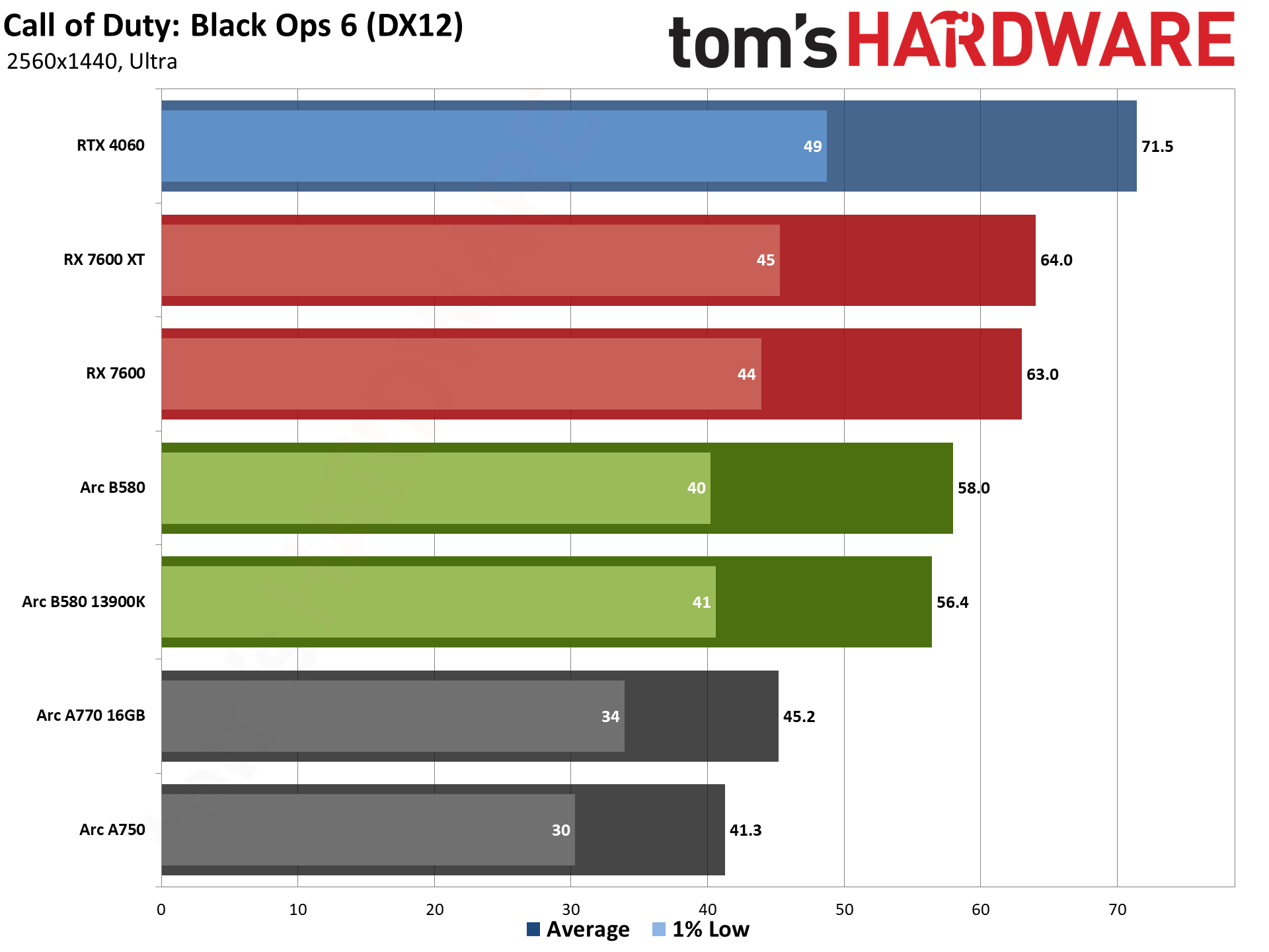

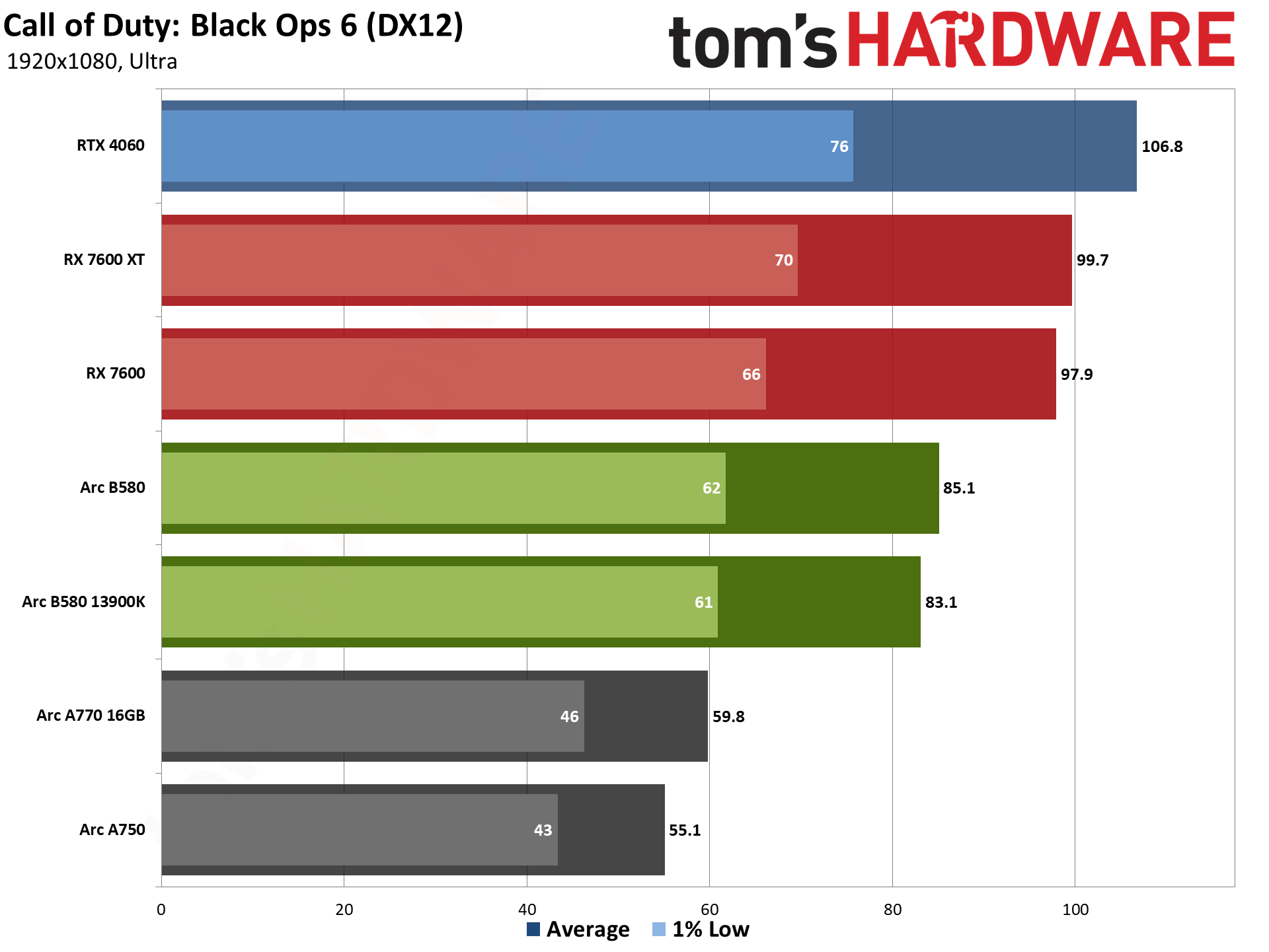

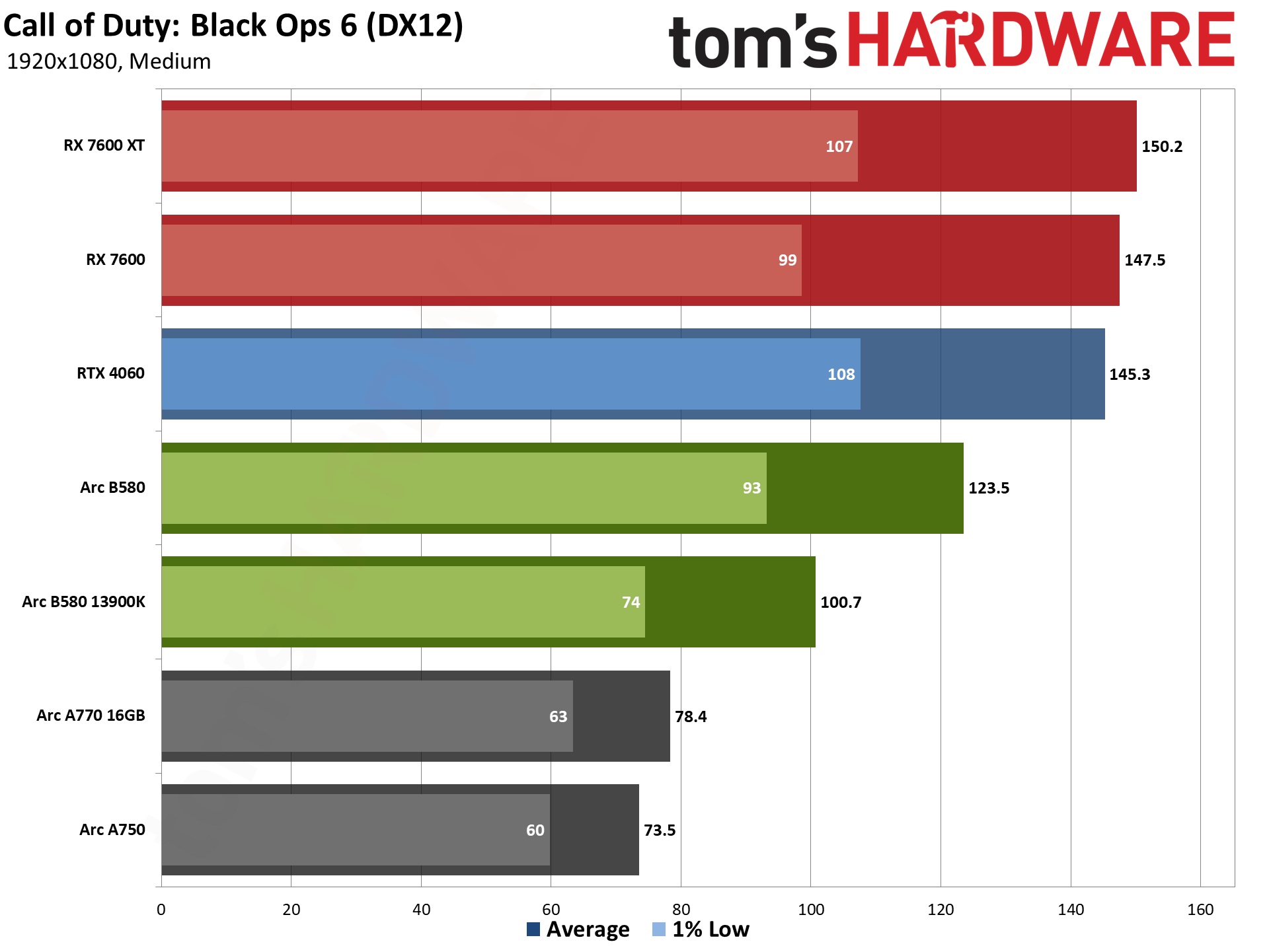

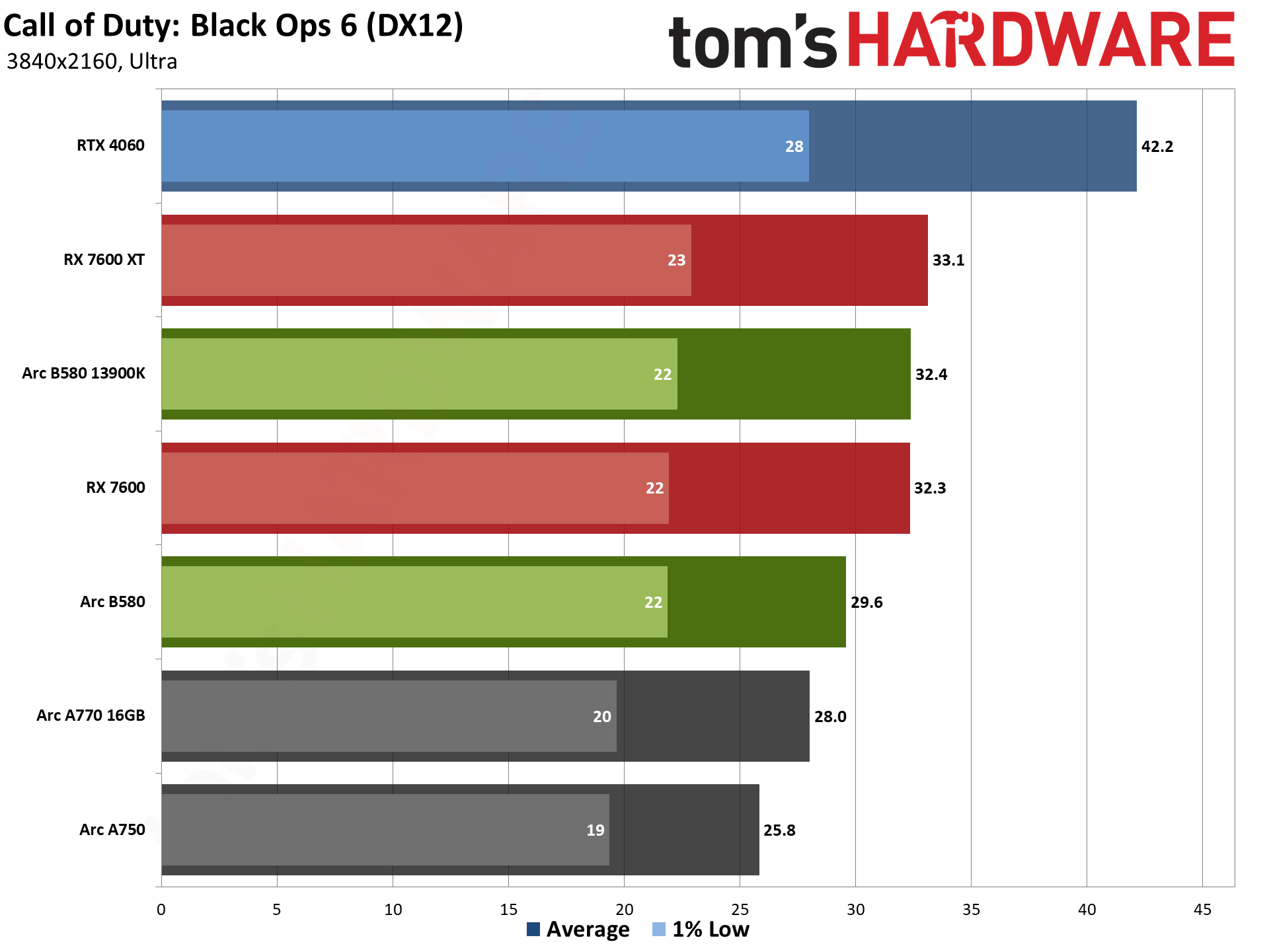

Call of Duty Black Ops 6 uses the Infinity Ward game engine with DX12 support, but let me tell you: This one is on my chopping block. I tested in the Hunting Season mission of the campaign, which opens up on a large map and lets you wander around more. The other missions tended to be far smaller, so this one felt like a better proxy for the multiplayer game. (Trying to benchmark multiplayer in a repeatable fashion is an exercise in frustration that I didn't want to deal with.)

Across every GPU I've tested, there's a regular hitching that occurs in this mission, which causes the minimum FPS to skew wildly. There have also been numerous patches in just the past couple of weeks, so this one probably isn't a long-term resident of my benchmark list. That's unfortunate, as the Call of Duty games tend to be popular, but I may need to look into other ways to attempt to benchmark the game.

As it stands, Black Ops 6 is an AMD-promoted game and the two AMD GPUs generally beat the Arc B580 competition here. Nvidia's RTX 4060 takes the top spot at all three ultra settings, but I had to test it on the 13900K — for whatever reason, the AM5 test PC does not like the RTX 4060 in Black Ops 6. It would hitch badly a few times and then typically stall completely and crash.

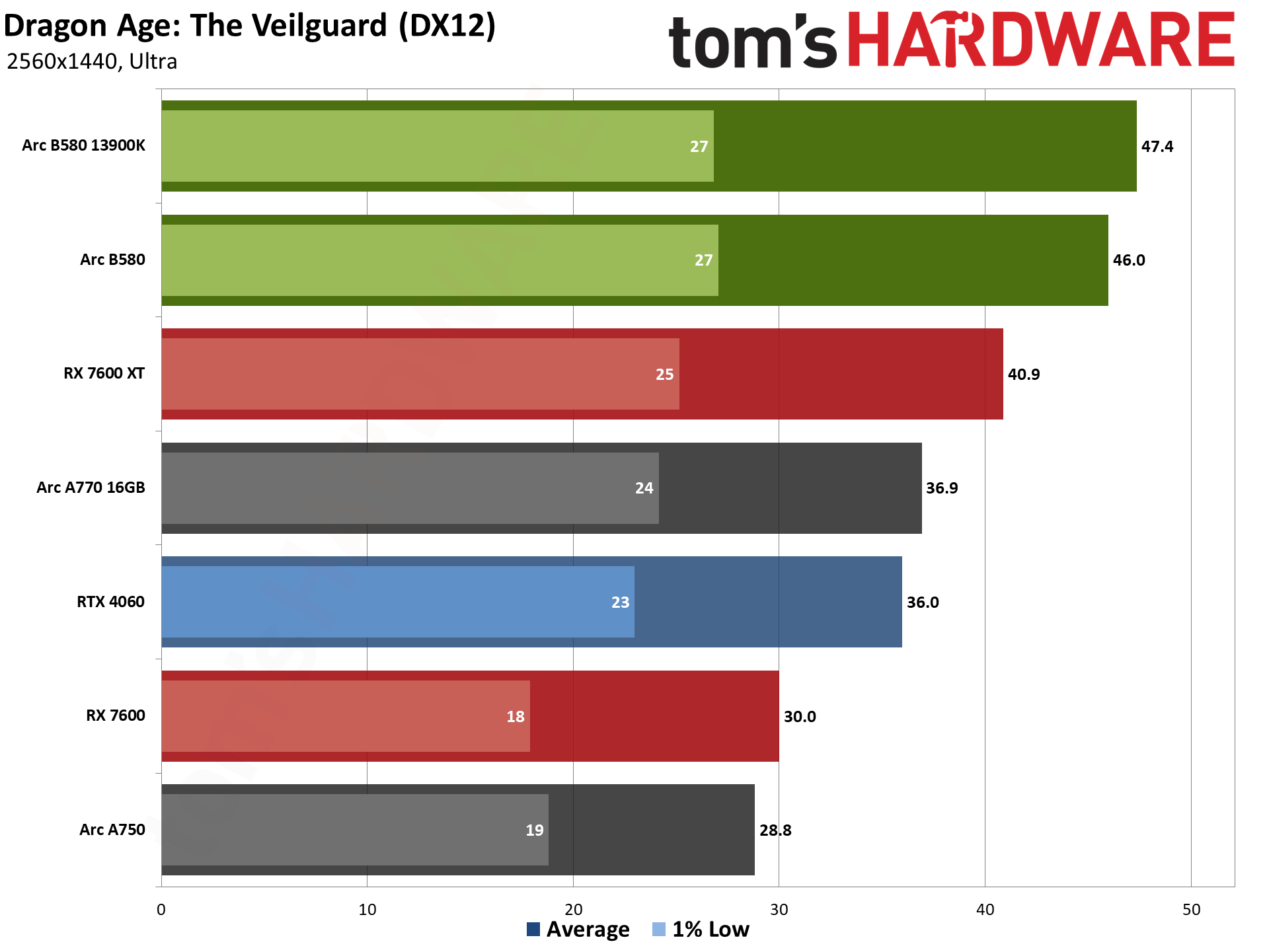

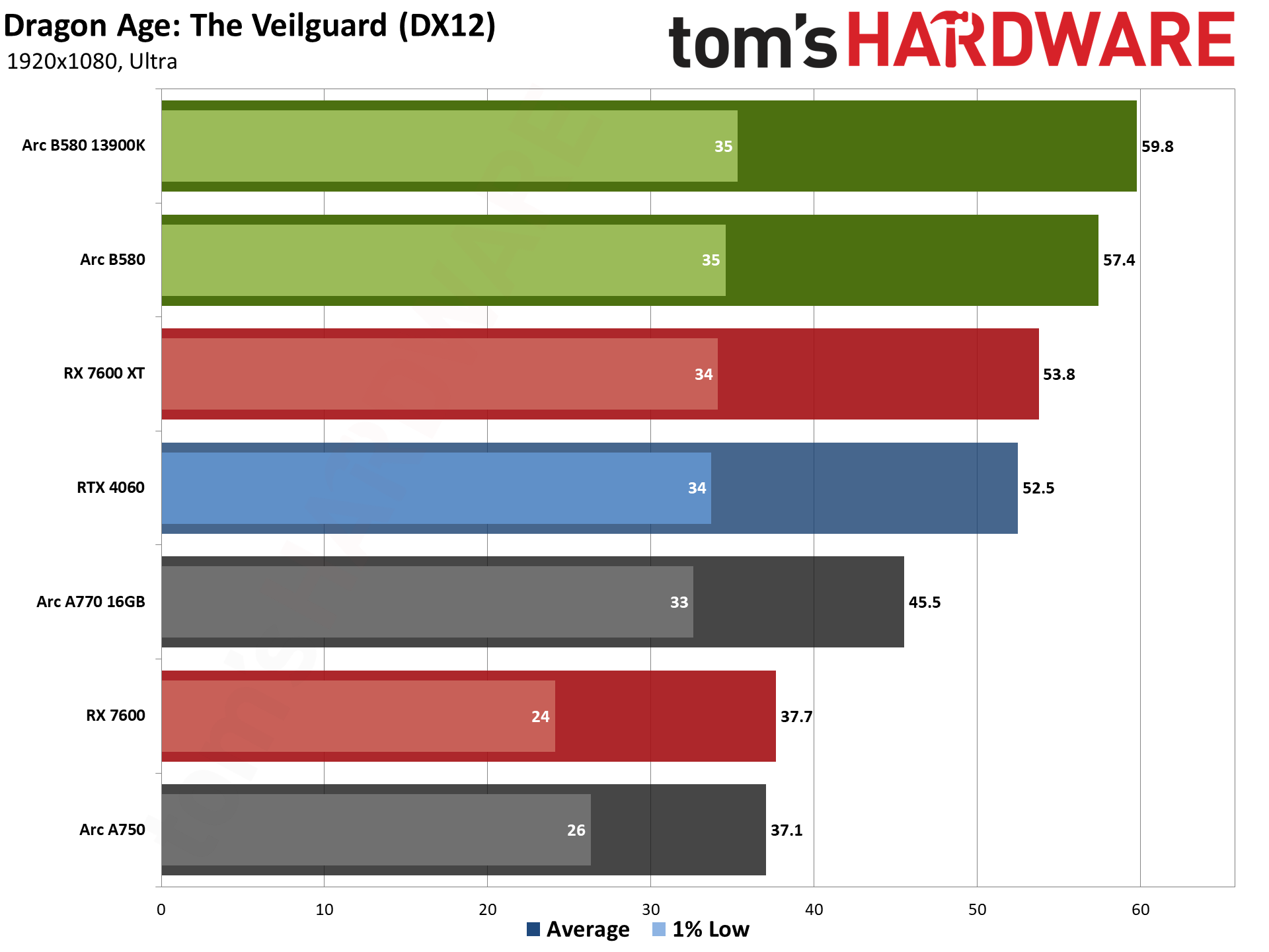

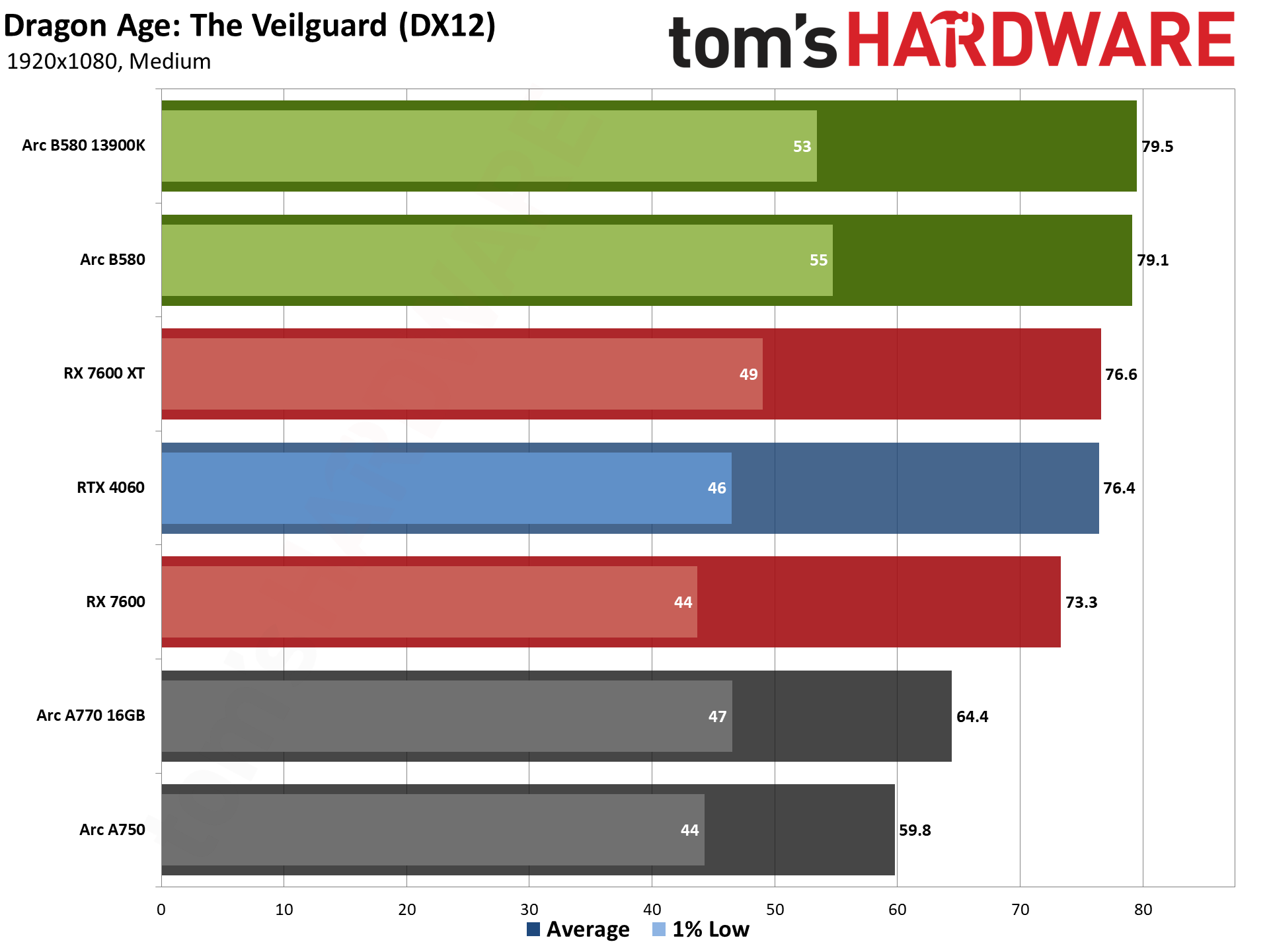

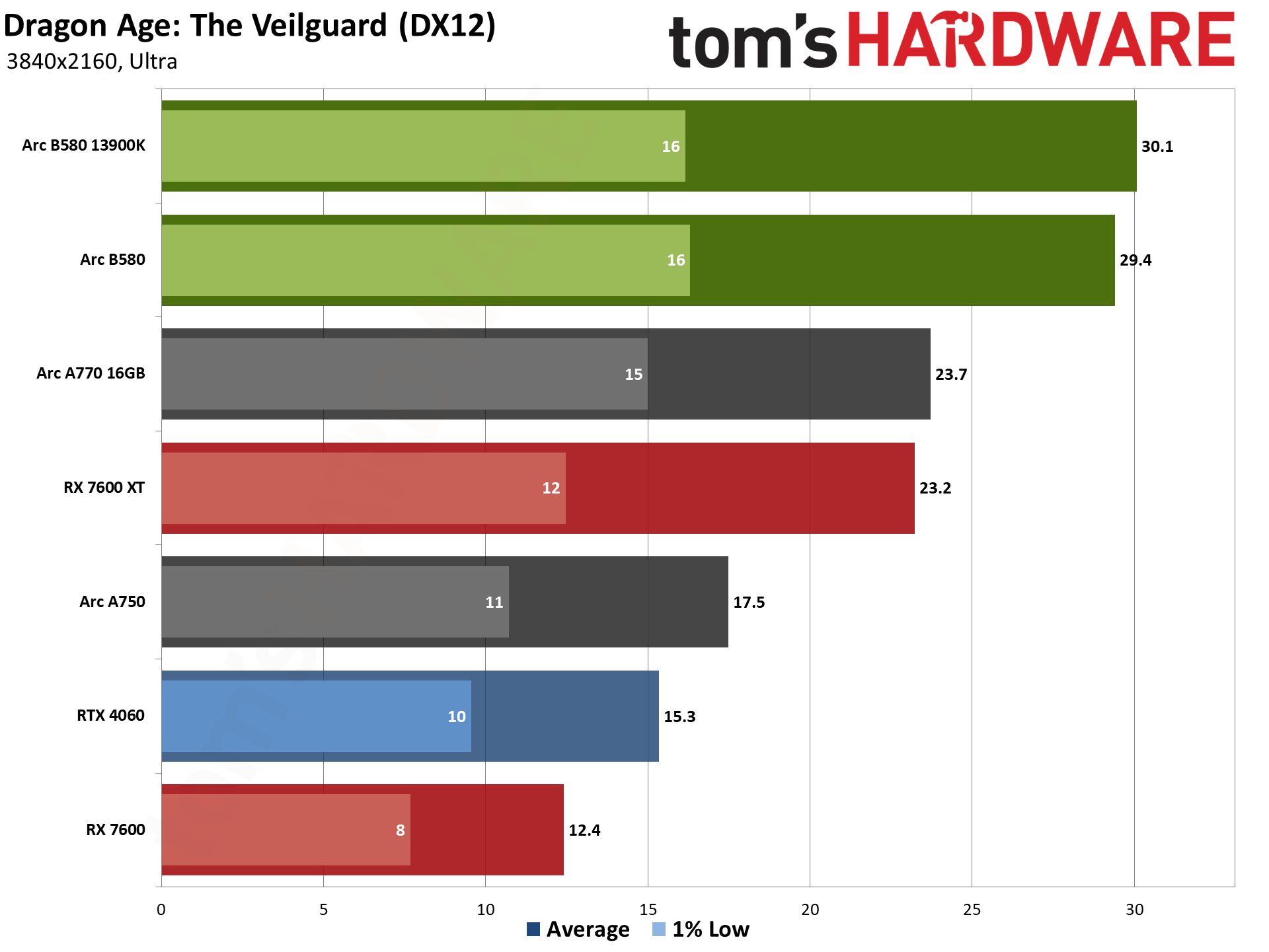

Dragon Age: The Veilguard uses the Frostbite engine and runs via the DX12 API. It's one of the newest games in my test suite, having launched on Halloween. It's been received quite well, though, and in terms of visuals I'd put it right up there with Unreal Engine 5 games, but without some of the LOD pop-in that happens so frequently with UE5. But it's also still quite demanding.

The initial preview driver had some rendering issues in Veilguard, but they were fixed with the second preview driver. The public drivers worked fine on the A-series GPUs, so it was really just a case of the preview drivers being based off an older code branch, perhaps one that forked off the main branch before Veilguard was released.

With the newer driver, Arc B580 takes top honors. It's only 8% faster than the 7600 at 1080p medium, but the bump to ultra settings causes the 8GB card to falter badly and the B580 ends up leading by over 50% at 1080p and 1440p, with a 137% lead at 4K ultra. It beats the 7600 XT by 3%, 7%, 13%, and 27% at our test settings (1080p medium, ultra, 1440p, 4K).

Nvidia's RTX 4060 does okay at 1080p, with the B580 leading by 4% at medium settings and 9% at ultra. But then, as with the 7600, performance dies at 1440p ultra and the B580 ends up being 28% faster, with a 92% lead at 4K ultra.

The B580 also delivers big generational gains over the A-series. It's 32–68 percent faster than the A750, and 23–26 percent faster than the A770. Notice how the 16GB A770 ends up being a consistent improvement, rather than a massive jump, as it doesn't run into VRAM issues.


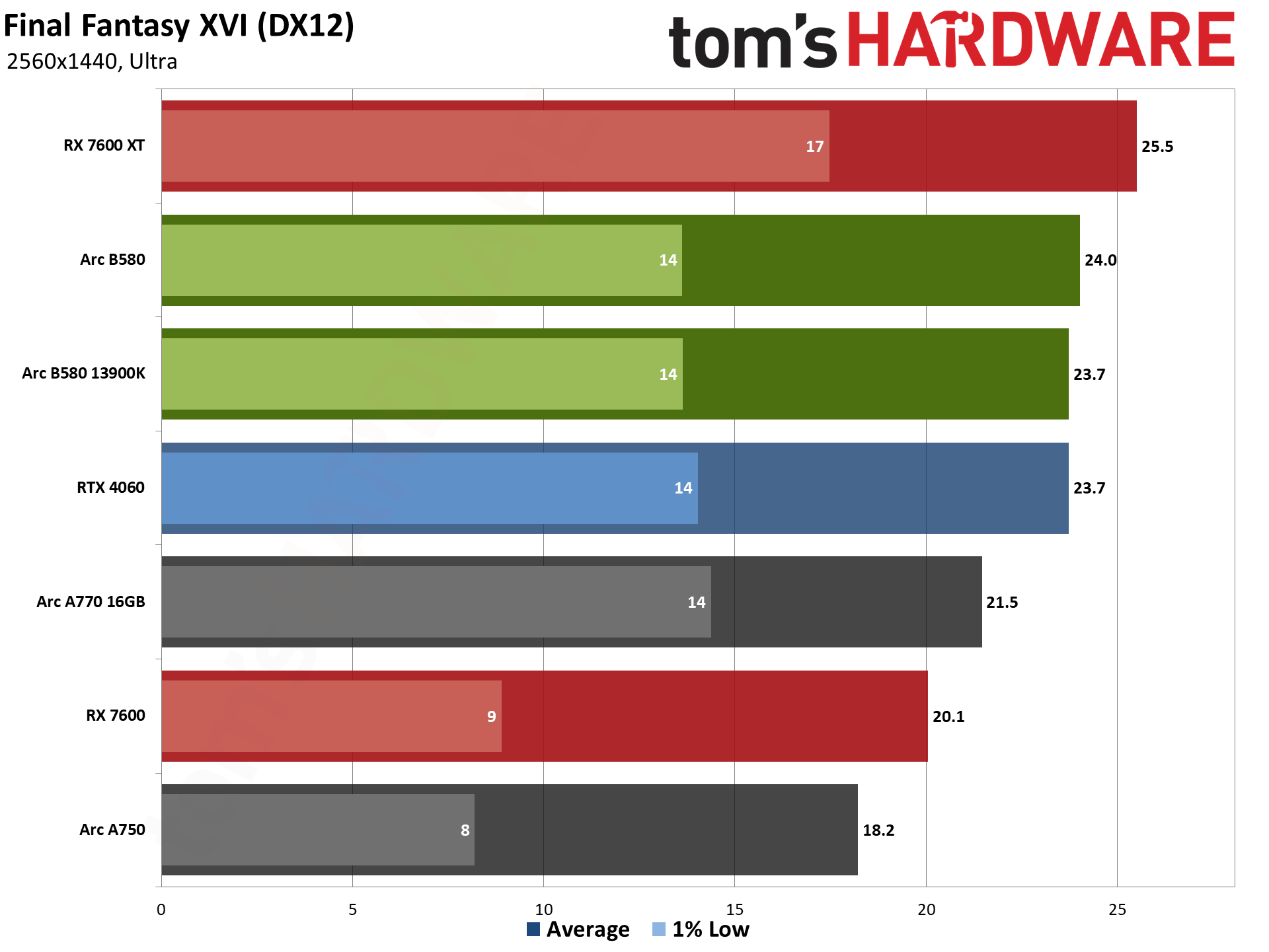

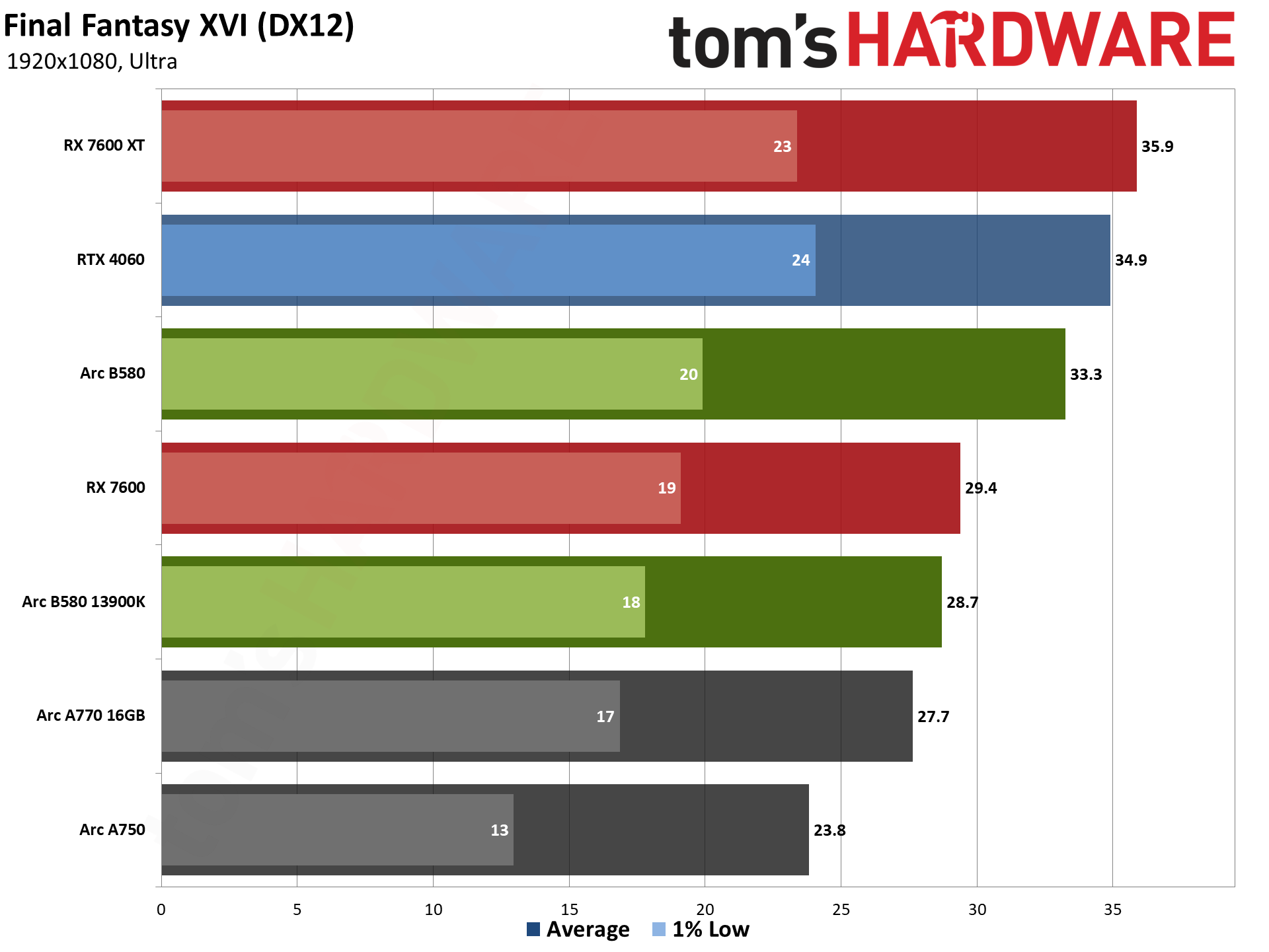

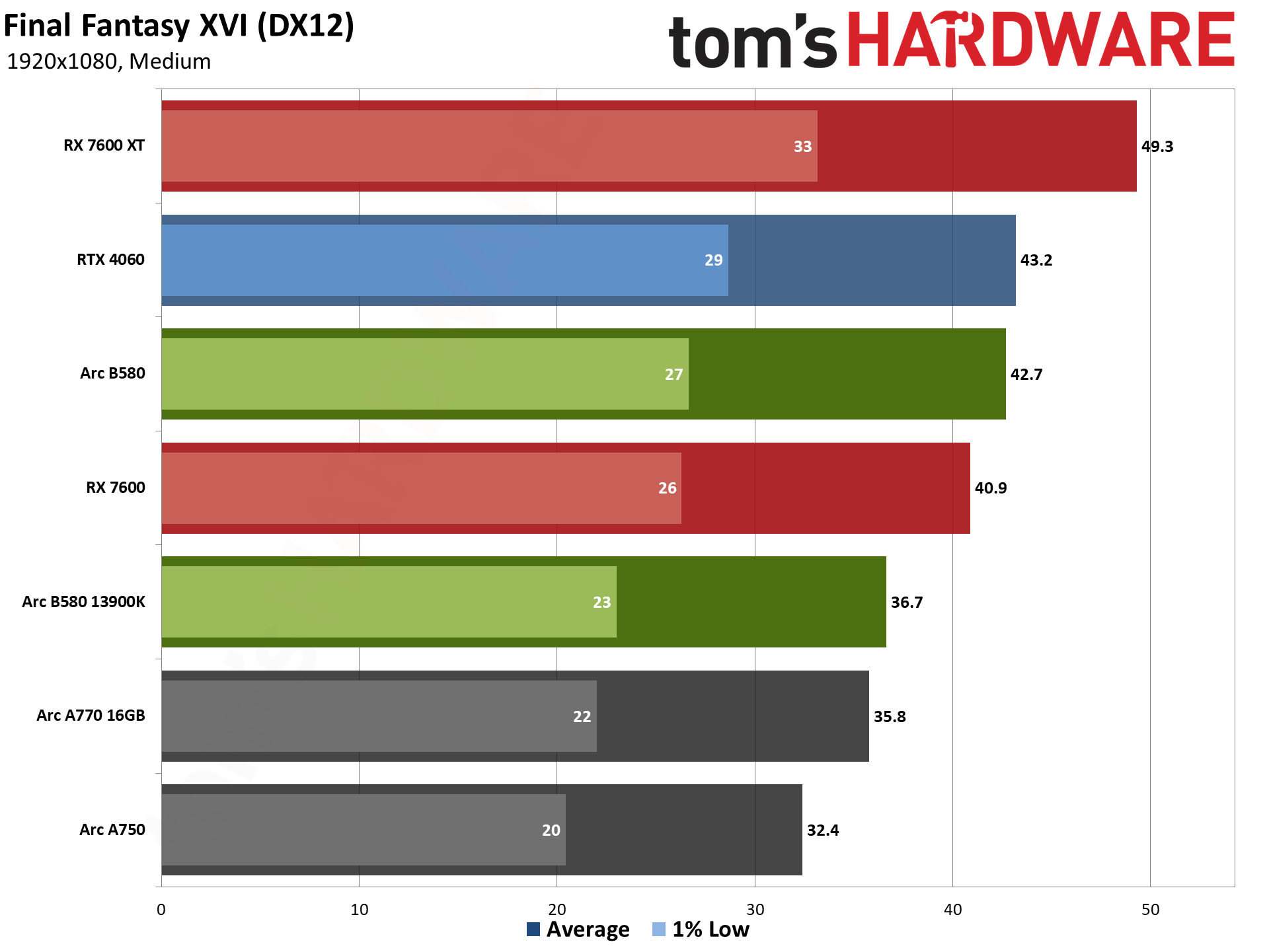

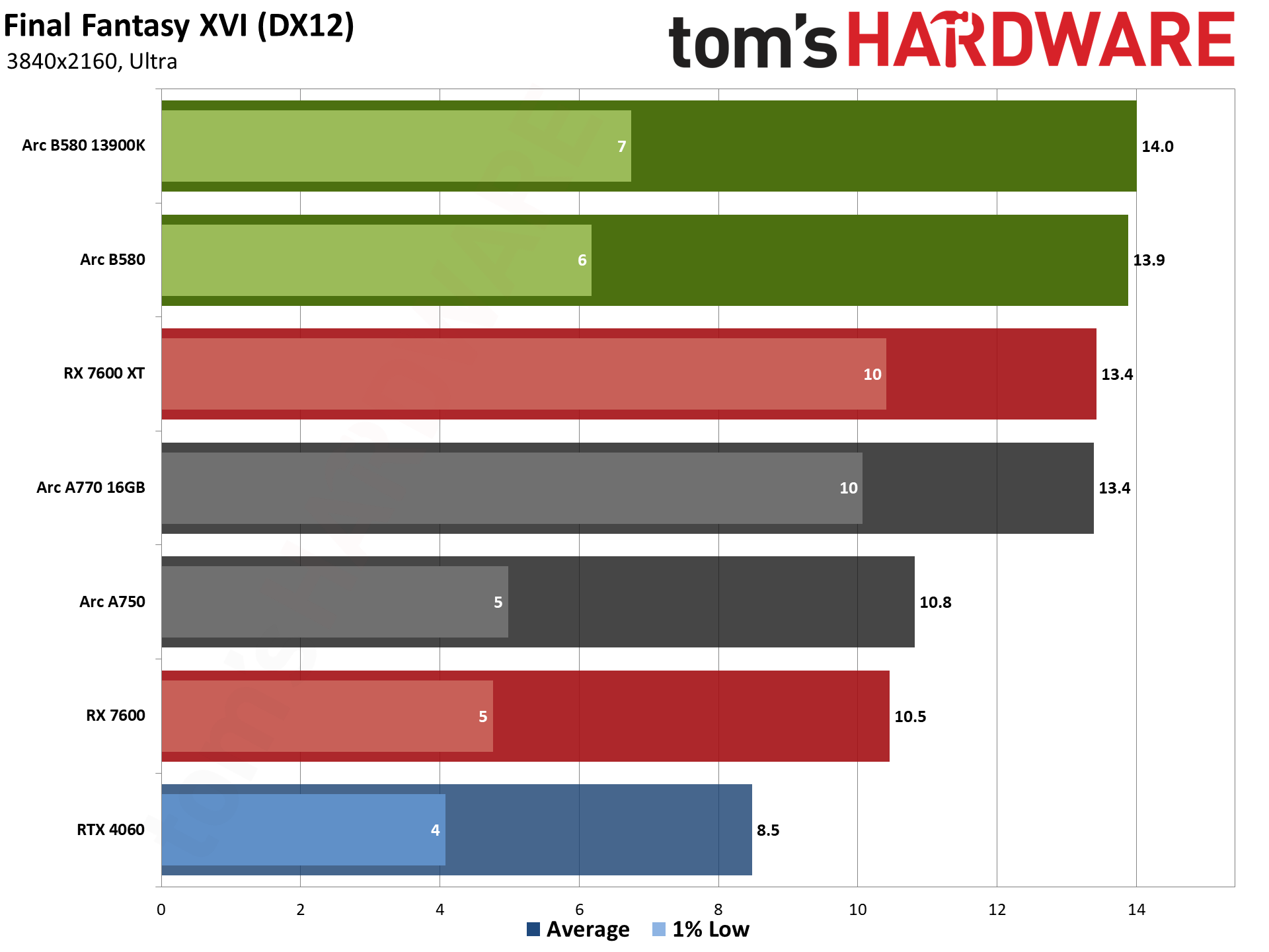

Final Fantasy XVI came out for the PS5 last year, but it only recently saw a Windows release. It's also either incredibly demanding or quite poorly optimized, but it does tend to be very GPU limited. Our test sequence consists of running a path around the town of Lost Wing.

I'm not sure if Final Fantasy XVI is specifically AMD promoted, or if it's just the console origins that make it run better on the RX 7600 XT. But VRAM capacity does matter, as evidenced by the RX 7600. Even at 1080p medium, there's a decent separation between the 8GB and 16GB AMD GPUs, and the gap widens at higher settings.

But none of the tested GPUs do very well in Final Fantasy XVI. The 7600 XT almost reaches 50 fps at 1080p medium, and everything else falls short. Technically the B580 breaks 30 fps at 1080p ultra, though, so with upscaling and framegen it becomes more playable.

We've been using Flight Simulator 2020 for several years, and there's a new release below. But it's so new that we also wanted to keep the original around a bit longer as a point of reference. We've switched to using the 'beta' (eternal beta) DX12 path for our testing now, as it's required for DLSS frame generation even if it runs a bit slower on Nvidia GPUs (not that we're using framegen).

The game is extremely CPU heavy, but with the Ryzen 7 9800X3D we're mostly able to push beyond the capabilities of these mainstream graphics cards so that we're not CPU limited.

The B580 ends up moderately faster than the 7600, with a 9–22 percent lead. The 7600 XT offers almost no improvement over the 7600, indicating the 8GB of memory isn't a problem in this particular game. B580 still leads by 5–16 percent. Intel's new chip also easily eclipses the RTX 4060, with a 16–28 percent margin of victory.

The A750 ends up being pretty close to the RTX 4060 in this game, so the B580 offers a similar 19–29 percent improvement in performance. The A770 16GB does better, but B580 is still 9–17 percent faster. So this is a clean sweep for Battlemage.

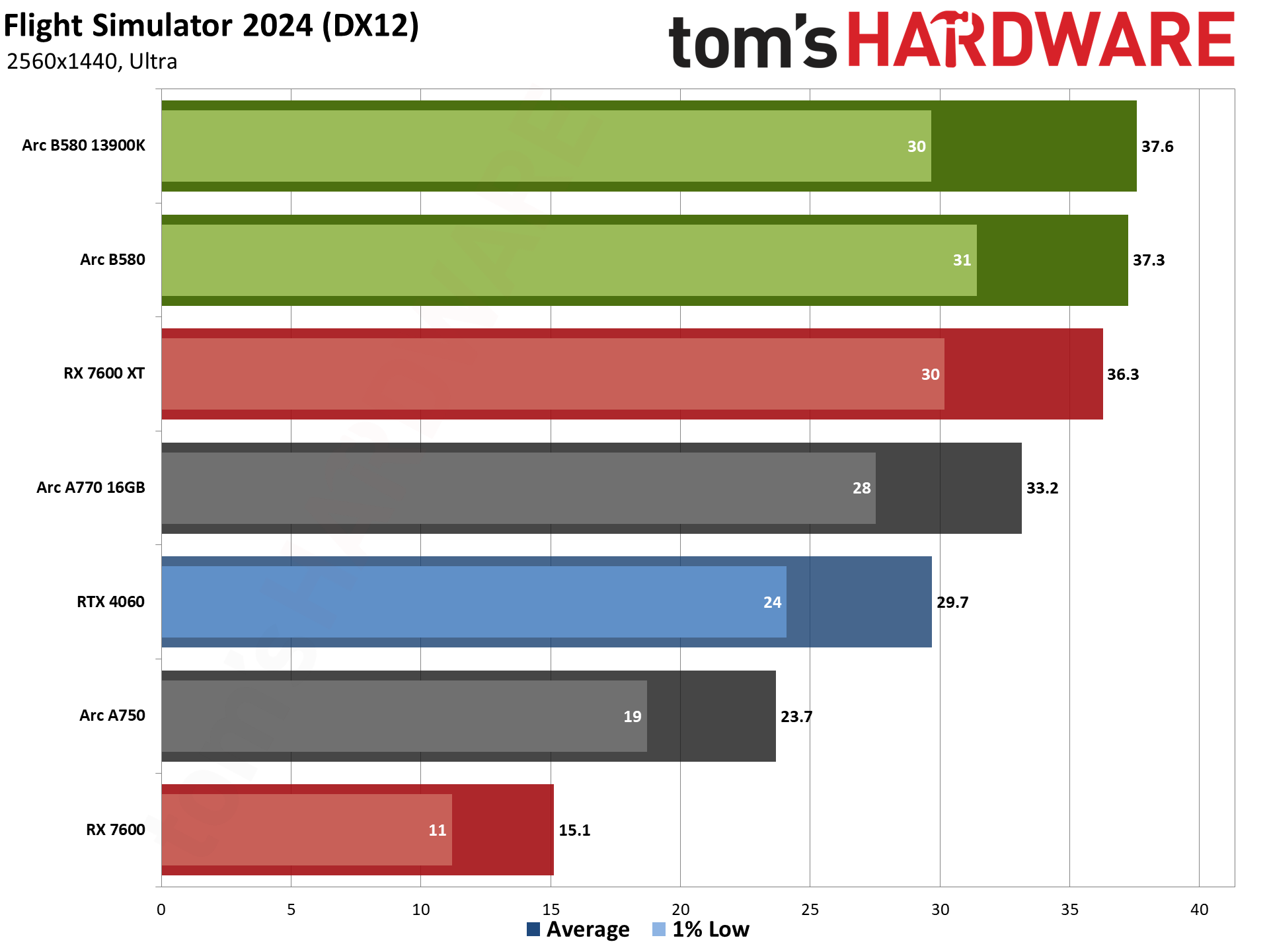

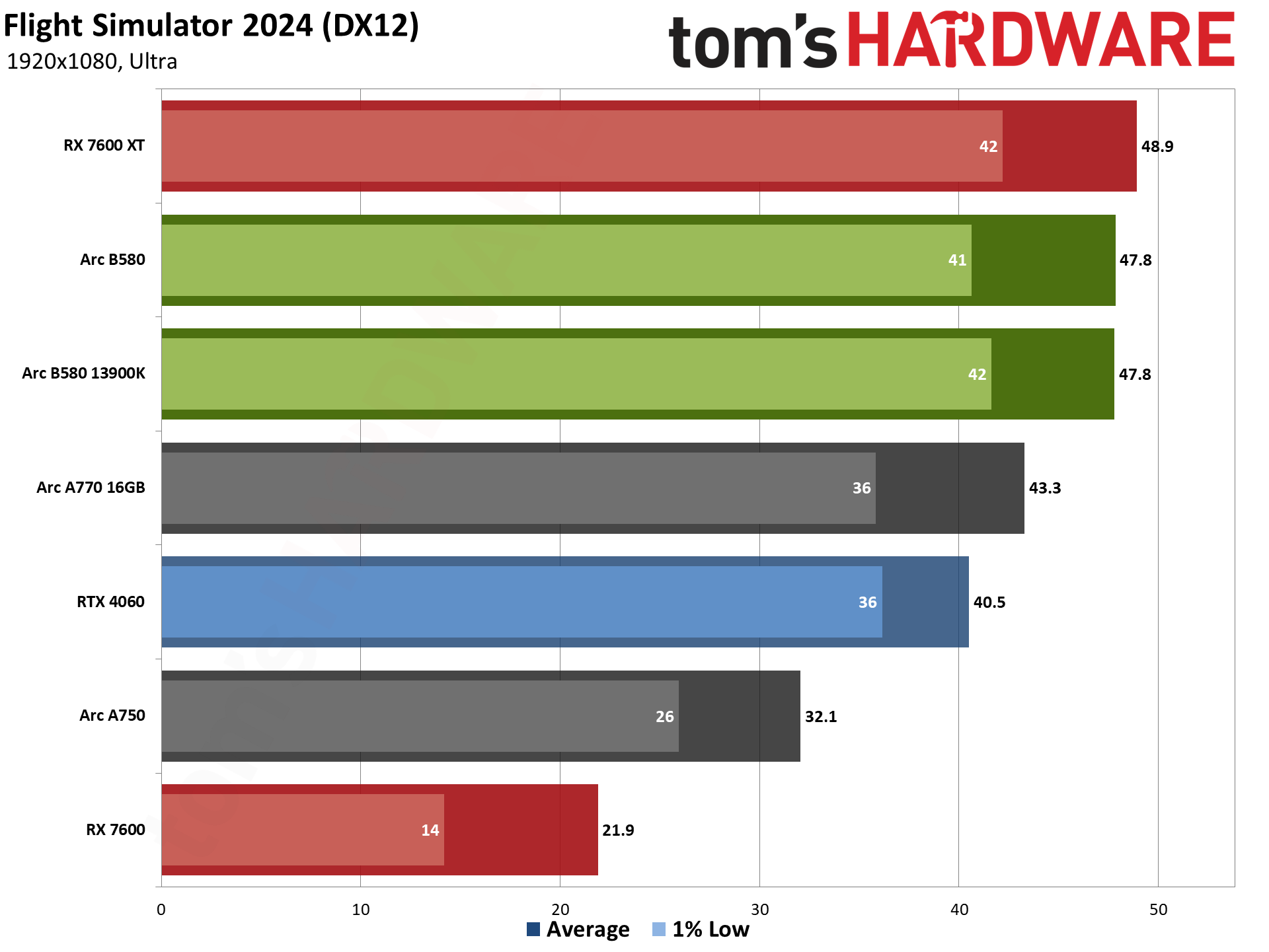

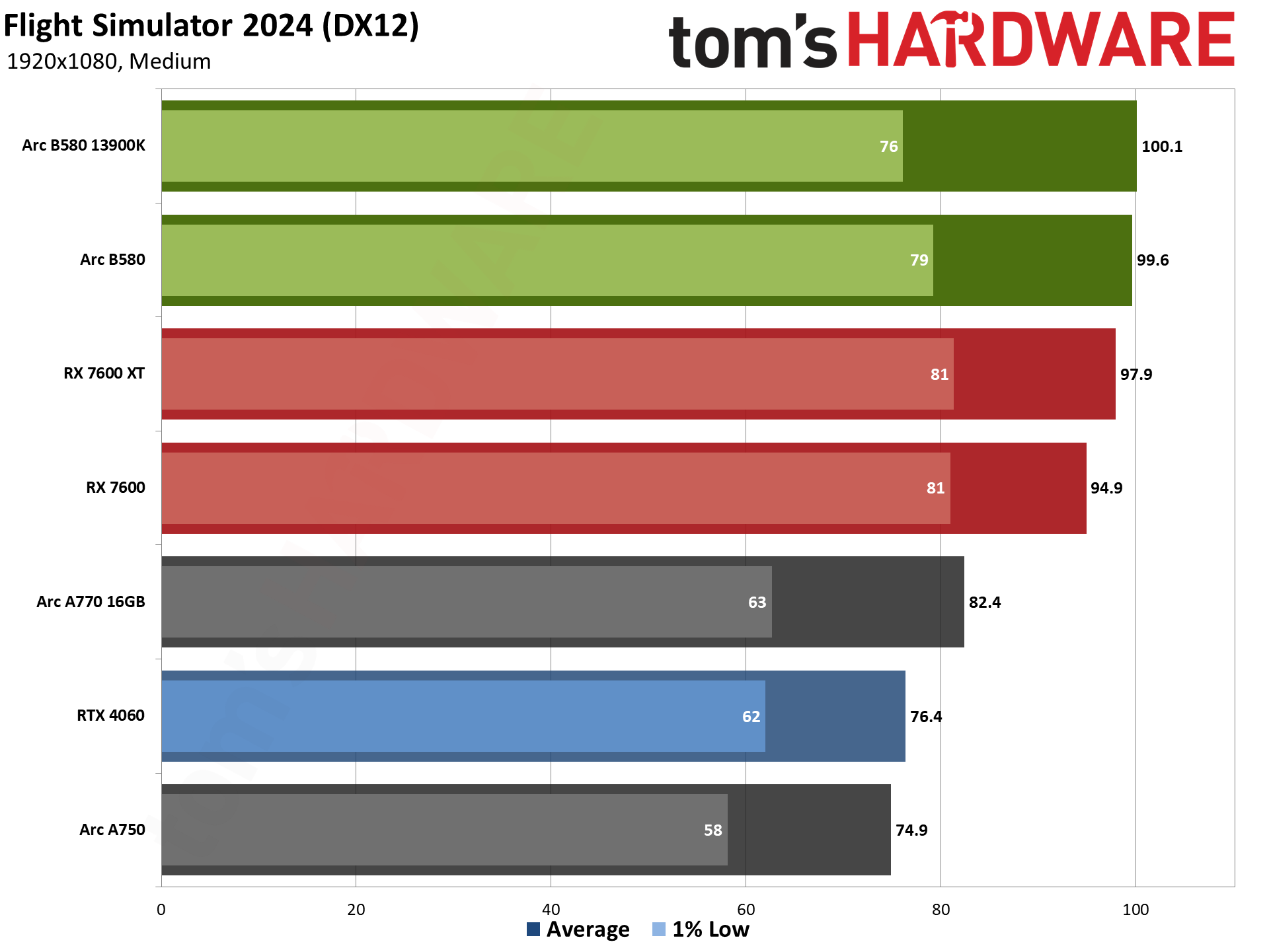

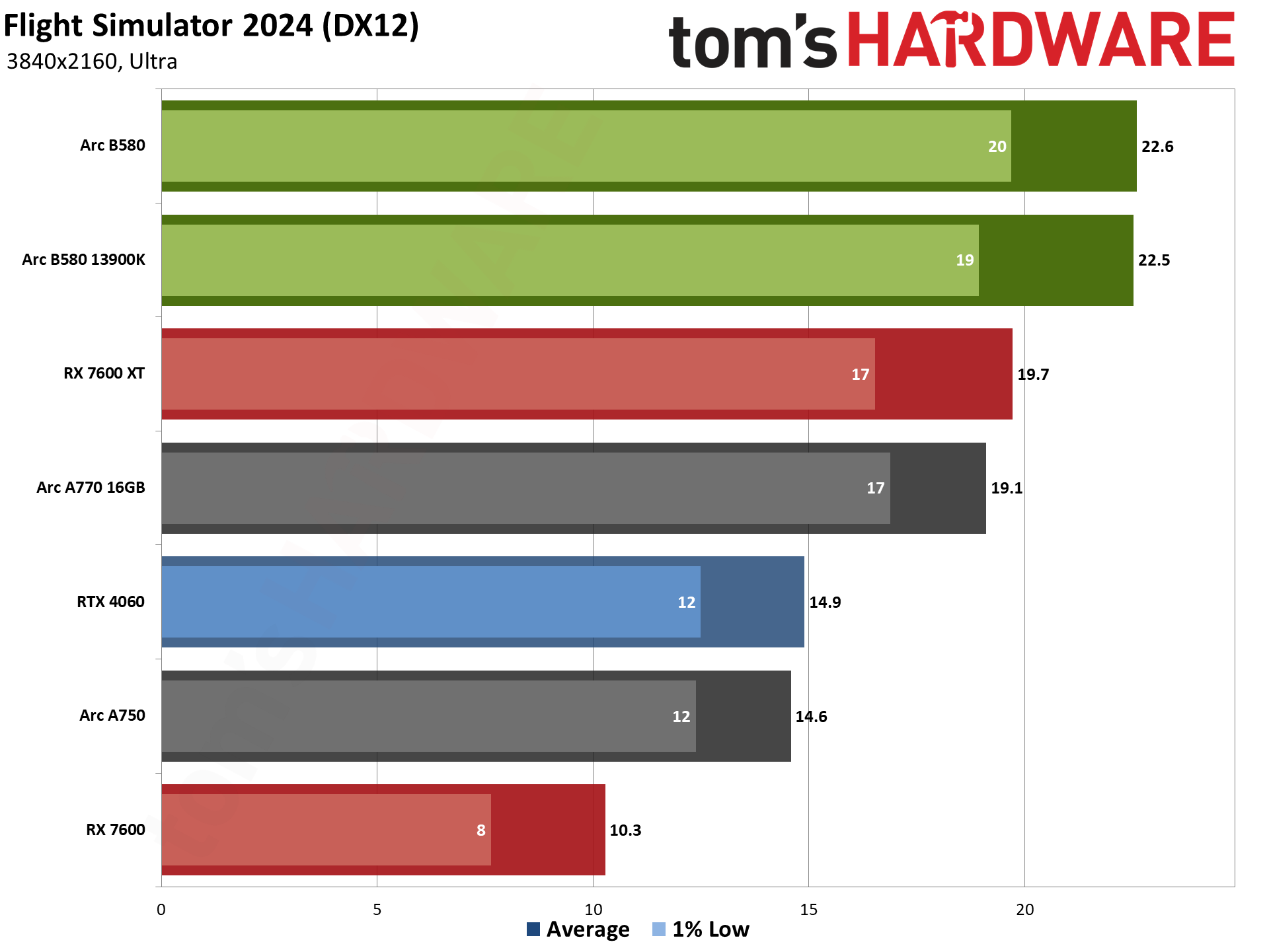

Flight Simulator 2024 is only a few weeks old, and after a rough launch, we've run benchmarks on the game for the Arc B580 review. With updated "game ready" AMD drivers in tow, we found performance dropped substantially on the RX 7600 at the ultra preset, but the medium preset was faster.

The new version tends to be a bit more GPU heavy rather than CPU limited, though it still definitely hits the CPU hard. Intel's 13900K basically keeps pace with the 9800X3D on the mainstream GPUs, however.

The B580 trades blows with the 7600 XT at 1080p and 1440p, but then gets a 15% lead at 4K — where both GPUs are running sub-30 FPS. The RX 7600 performance goes down in flames at ultra settings, with the B580 more than doubling its performance. B580 is also consistently faster than the RTX 4060, leading by 18–30 percent at 1080p and 1440p, and then with a 52% lead at 4K.

Generationally, B580 delivers some major gains as well. It's 33–57 percent faster than the A750, and 10–21 percent faster than the A770 16GB. Having more memory (or memory bandwidth, perhaps) does help the A770 in this case.

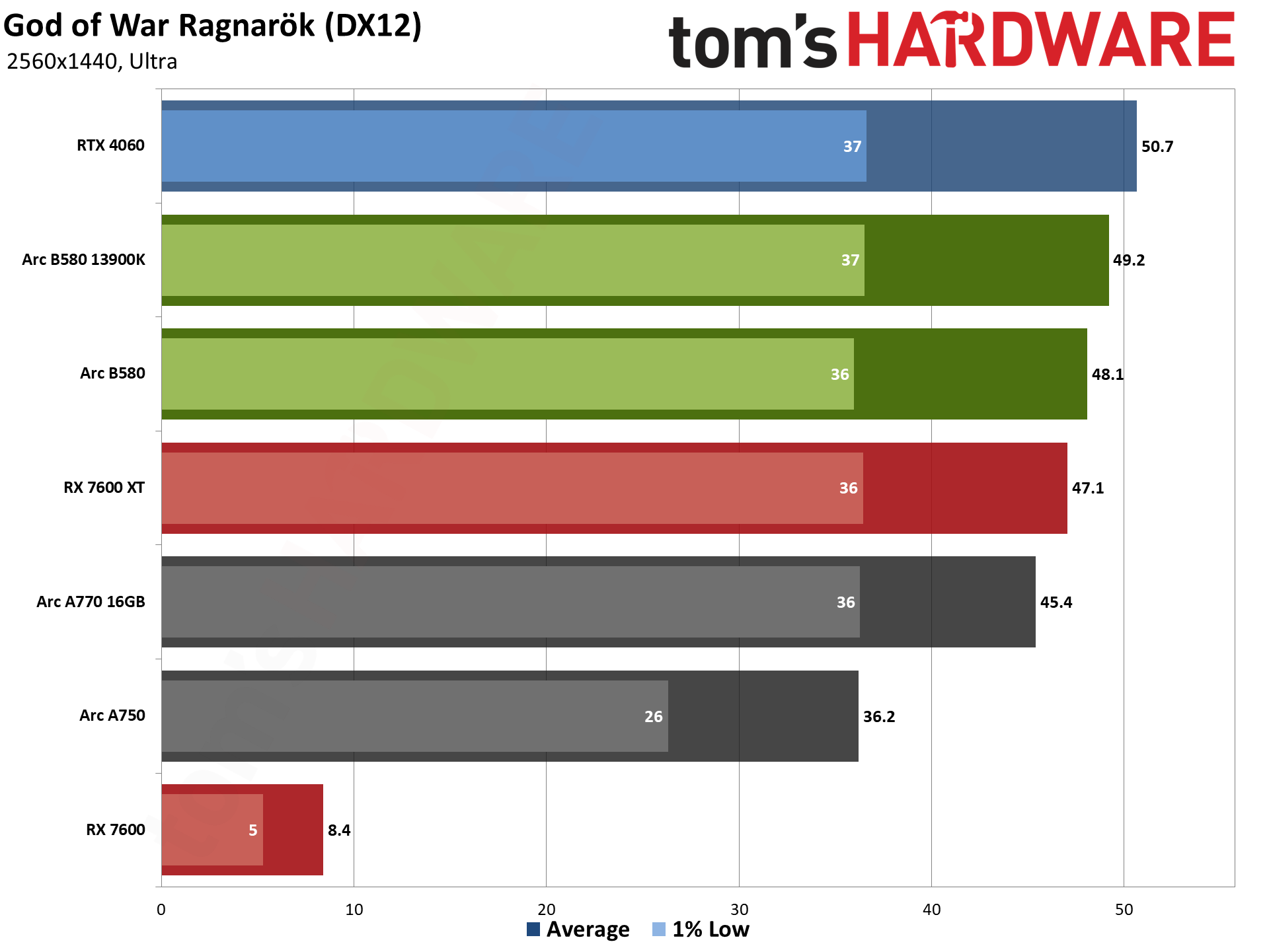

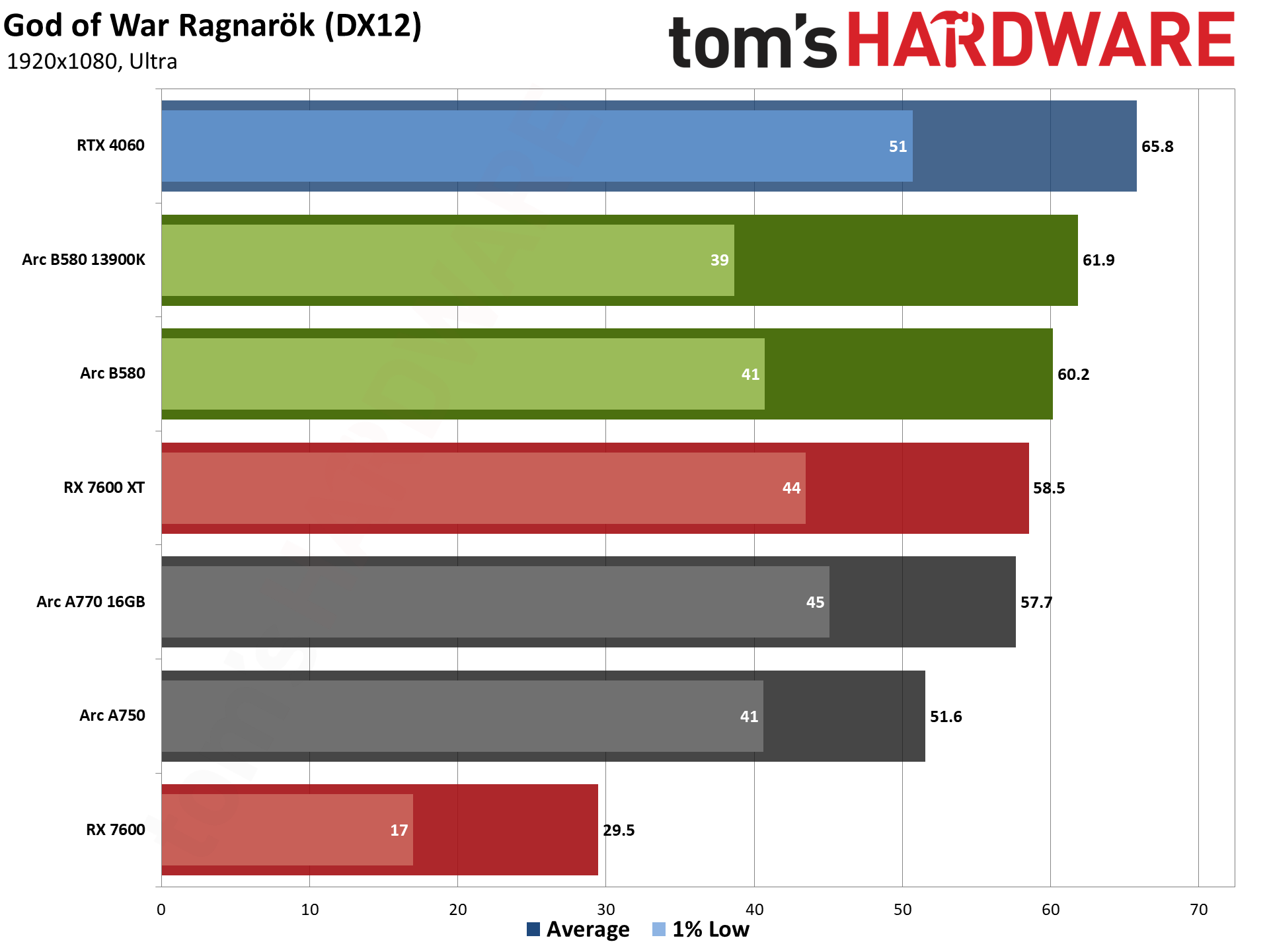

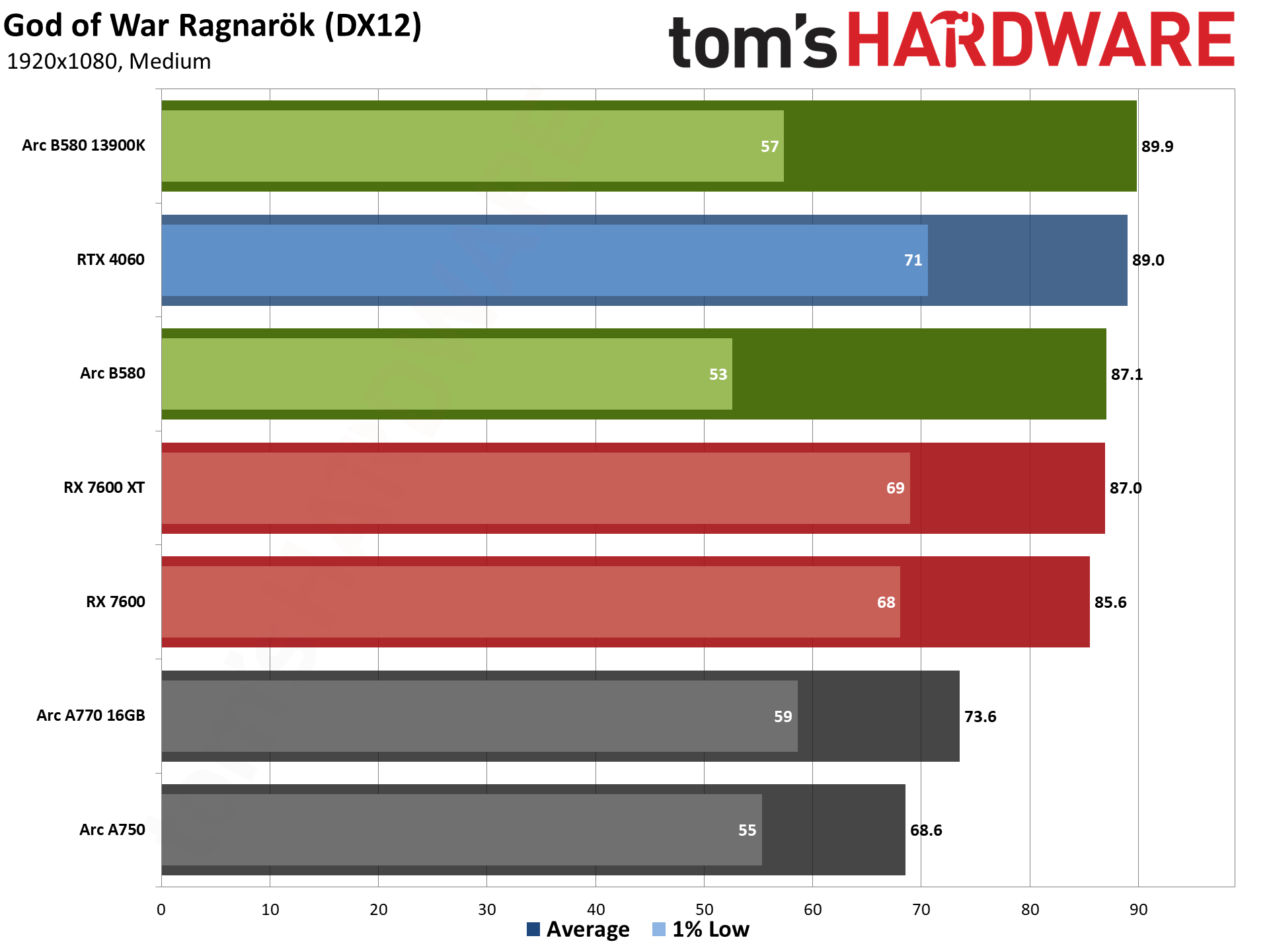

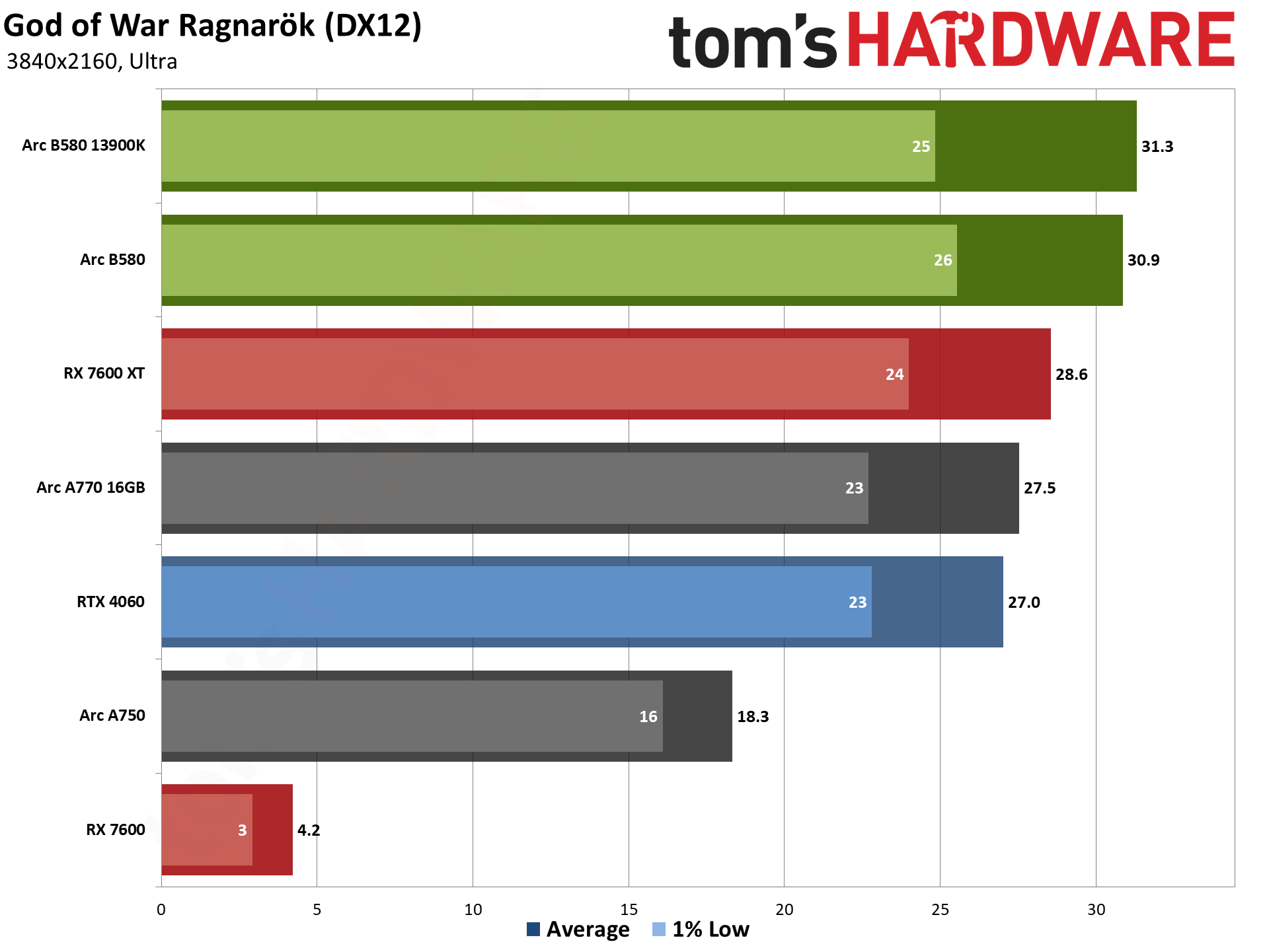

God of War Ragnarök released for the PlayStation two years ago and only recently saw a Windows version. It's AMD promoted, but it also supports DLSS and XeSS alongside FSR3. We ran around the village of Svartalfheim, which is one of the most demanding areas in the game that we've encountered.

The B580 and RX 7600 are basically tied at 1080p medium, but then the 7600 just dies at ultra settings — B580 is just over twice as fast at 1080p ultra, nearly six times faster at 1440p, and over seven times faster at 4K. And that's not just a "fake" win at 4K, as the B580's 31 fps is technically playable while the 7600's 4.2 fps is decidedly not.
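As a quick sanity check on how "times faster" and "percent faster" relate, here is a small sketch using the two 4K figures quoted above (31 fps and 4.2 fps). The helper names are ours for illustration only, and the inputs are the rounded chart values, so results can differ slightly from percentages computed on unrounded data.

```python
def speedup(fps_a, fps_b):
    """How many times faster card A is than card B."""
    return fps_a / fps_b

def percent_lead(fps_a, fps_b):
    """Card A's lead over card B in percent (100.0 means twice as fast)."""
    return (fps_a / fps_b - 1.0) * 100.0

b580_4k = 31.0     # fps, as quoted in the text
rx7600_4k = 4.2    # fps, as quoted in the text

print(f"{speedup(b580_4k, rx7600_4k):.2f}x faster")
print(f"{percent_lead(b580_4k, rx7600_4k):.0f}% lead")
```

The ratio works out to a bit over 7x, which matches the "over seven times faster" description.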

VRAM makes all the difference for AMD, and the B580 and 7600 XT end up nearly tied, with the B580 offering an 8% lead at 4K. The 1% lows at 1080p medium are also quite a bit higher on AMD's GPU — another opportunity for further driver improvements from Intel.

Nvidia doesn't seem to have a problem with 8GB in Ragnarök, unlike AMD, as the RTX 4060 actually beats the B580 at 1440p and below, again with much better minimum fps. Intel does win by 14% at 4K, however.

The A770 and A750 show an 8GB limitation on the lesser GPU, with B580 leading by 17–27 percent at 1080p and 1440p, but with a bigger 68% lead at 4K. Against the A770, it's only 4–18 percent faster, this time with the largest lead at 1080p medium — probably a geometry or "execute indirect" limitation on the older A-series architecture.

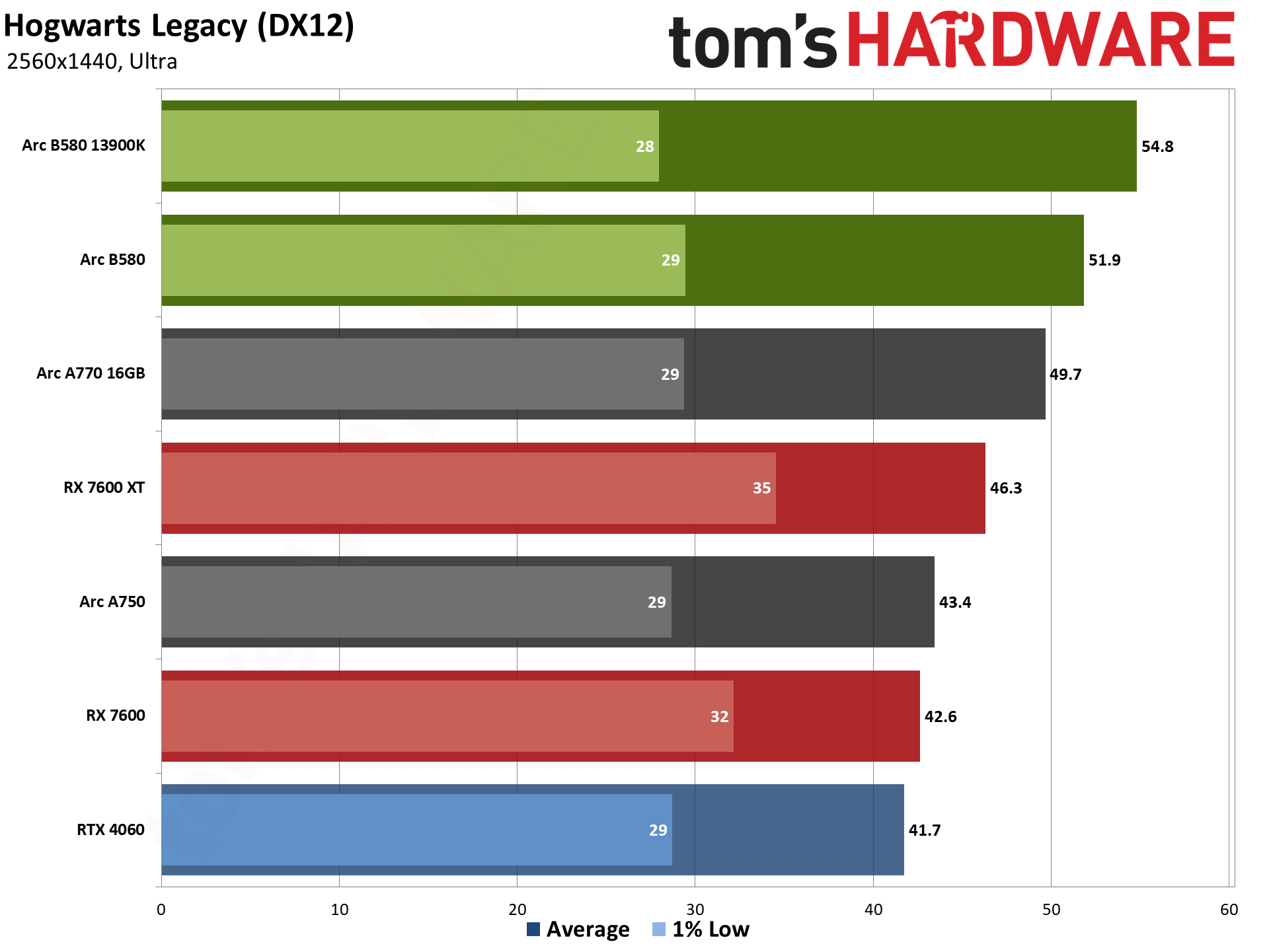

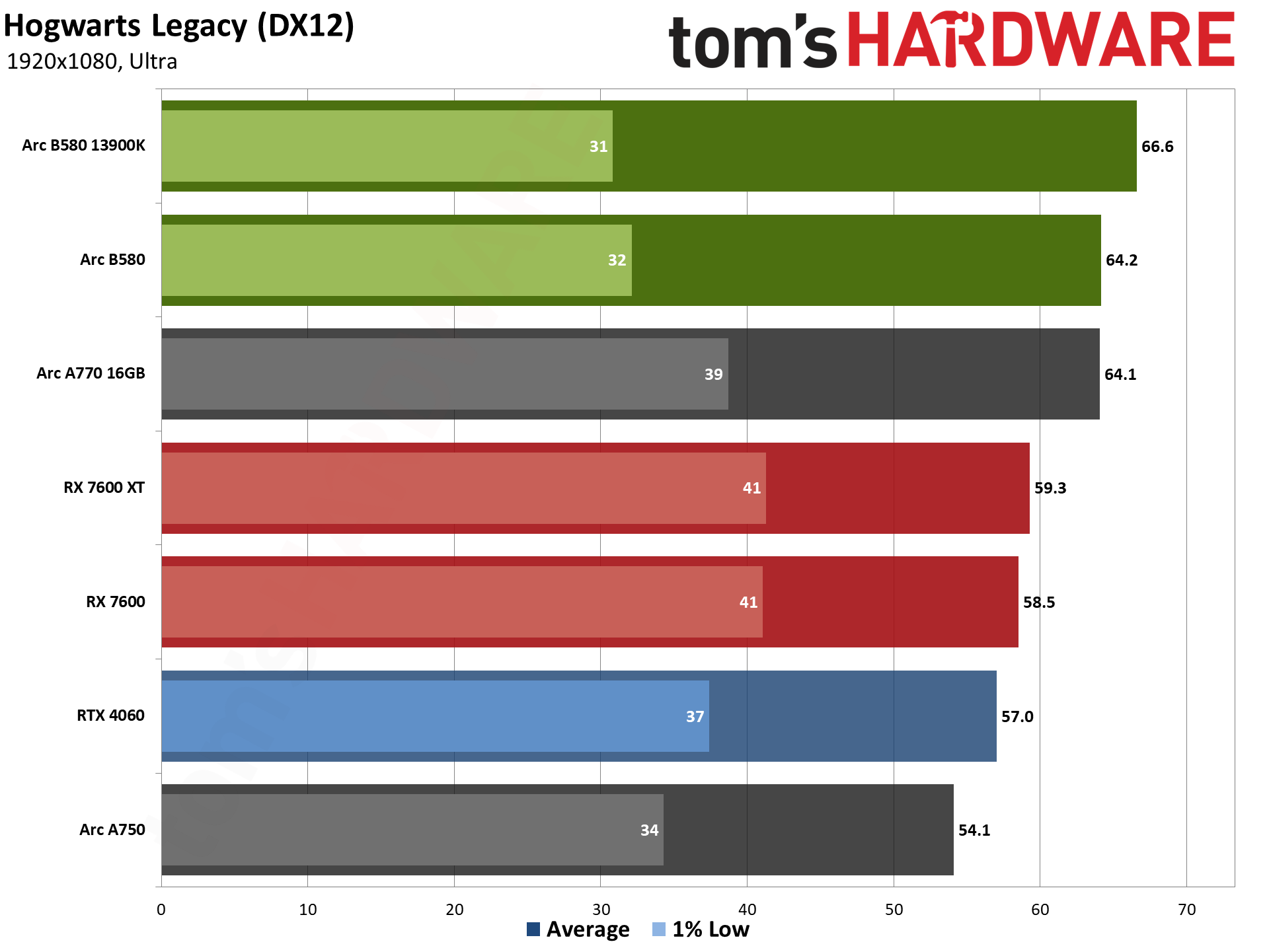

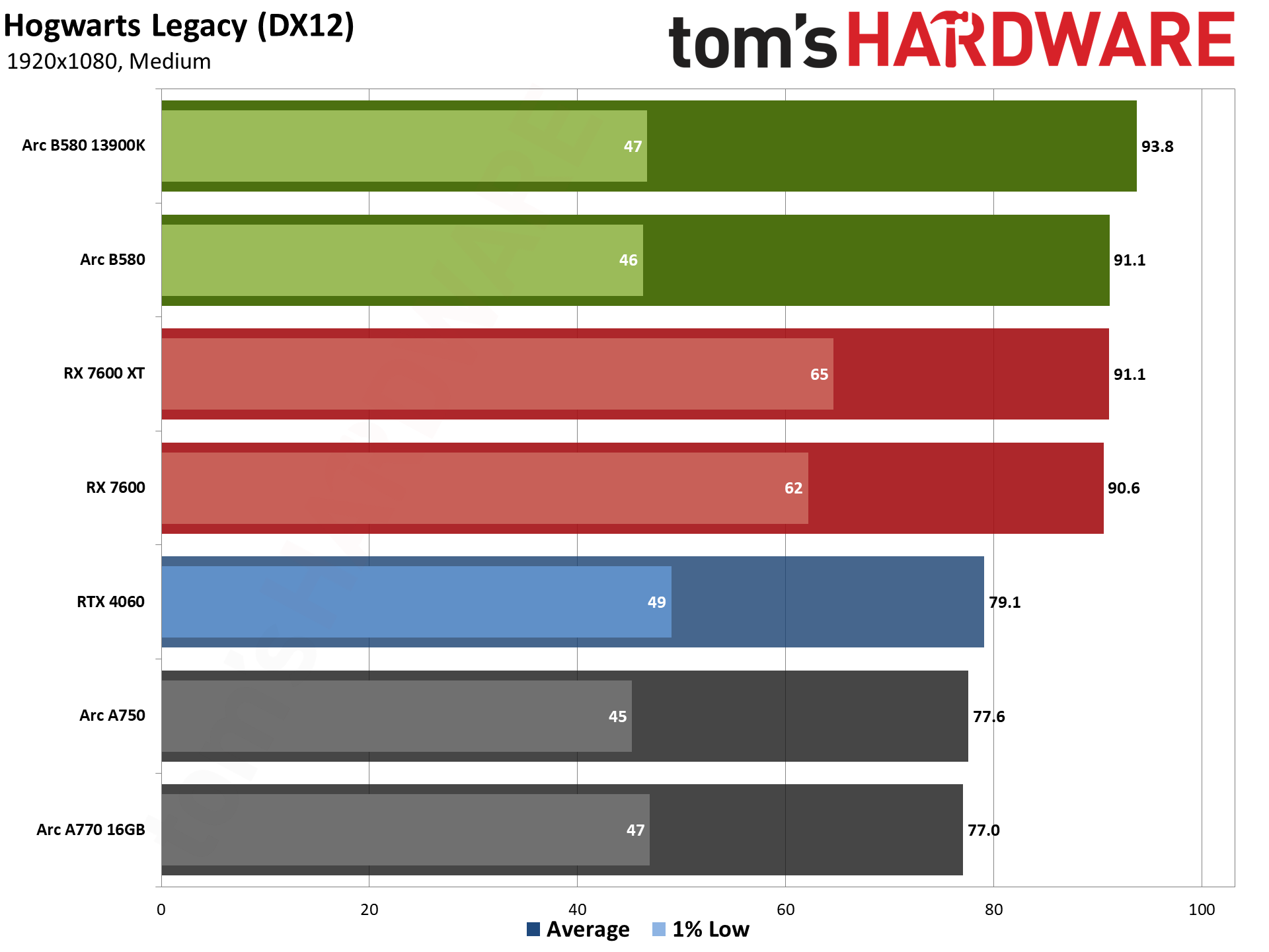

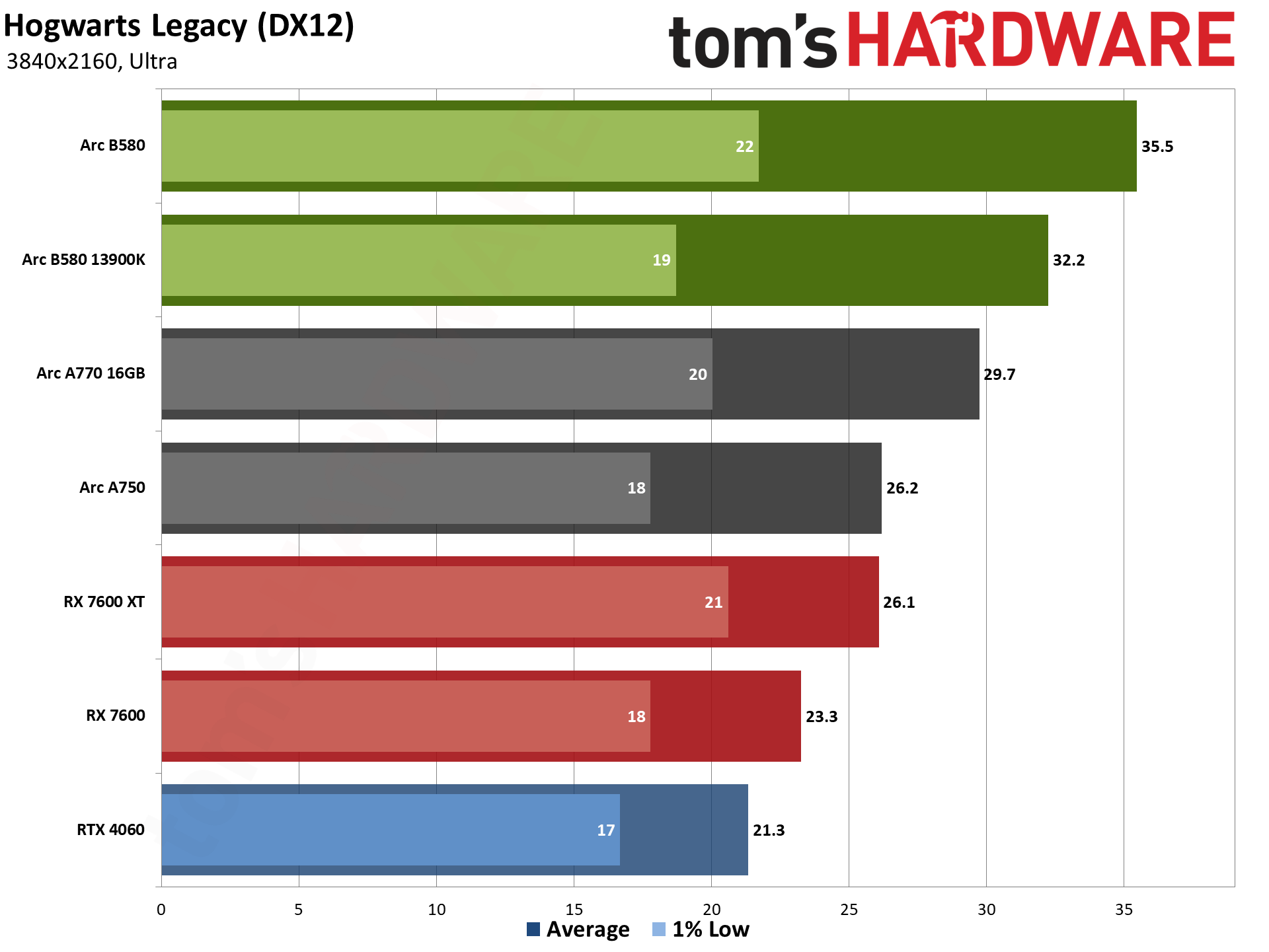

Hogwarts Legacy came out in early 2023, and it uses Unreal Engine 4. Like so many Unreal Engine games, it can look quite nice but also has some performance issues with certain settings. Ray tracing in particular can bloat memory use and tank framerates, often crashing without warning on 8GB and even 12GB cards, so we've opted to test without ray tracing.

The B580 has inconsistent minimum fps, so while it ties the RX 7600 at 1080p medium and leads by 10% at ultra, the 1% lows are 20–25 percent worse — yet another driver optimization issue. Even at 1440p, where the B580 has a 22% lead, its 1% lows are 9% worse. It does get a mostly clean win at 4K, though, with 53% higher average fps and 22% better minimum fps.
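For context on why average fps and 1% lows can tell different stories, the sketch below computes both from a list of frametimes. It uses one common definition of a 1% low (the average framerate over the slowest 1% of frames), which is an assumption here and not necessarily the exact method behind our charts. The frametime data is synthetic.

```python
def average_fps(frametimes_ms):
    """Average framerate over the whole run, from per-frame times in milliseconds."""
    return 1000.0 * len(frametimes_ms) / sum(frametimes_ms)

def one_percent_low_fps(frametimes_ms):
    """Average framerate over the slowest 1% of frames (one common definition)."""
    if not frametimes_ms:
        raise ValueError("need at least one frametime")
    slowest_first = sorted(frametimes_ms, reverse=True)
    count = max(1, len(slowest_first) // 100)   # at least one frame
    worst = slowest_first[:count]
    return 1000.0 / (sum(worst) / len(worst))

# Synthetic run: 990 smooth frames at 10 ms plus 10 hitches at 40 ms (NOT review data).
frametimes = [10.0] * 990 + [40.0] * 10

print(f"average: {average_fps(frametimes):.1f} fps")
print(f"1% low:  {one_percent_low_fps(frametimes):.1f} fps")
```

In this made-up run, a handful of hitches barely moves the average but drags the 1% low down to a quarter of the typical framerate, which is the kind of gap that shows up as visible stutter.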

As you might expect given the above, things are worse against the RX 7600 XT. Arc B580 ties or wins on average fps at all four settings, but minimum fps is 15–28 percent worse at 1440p and below. At 4K, the B580 gets 36% higher average fps but only 6% higher minimum fps, with stuttering being clearly noticeable while playing.

Nvidia's RTX 4060 is a similar story, with better minimum fps at 1080p. The B580 leads by 13–24 percent at 1080p and 1440p, but with a -14 to +3 percent difference on minimum fps. 4K of course goes beyond the 4060's capabilities and VRAM capacity, so B580 gets a clean win there.

The Arc A-series parts aren't as far behind this time, especially on minimum fps, so perhaps newer drivers will fix the B580 when Intel eventually merges the drivers into a single release. B580 is 18–35 percent faster than the A750, but only 0–19 percent faster than the A770.

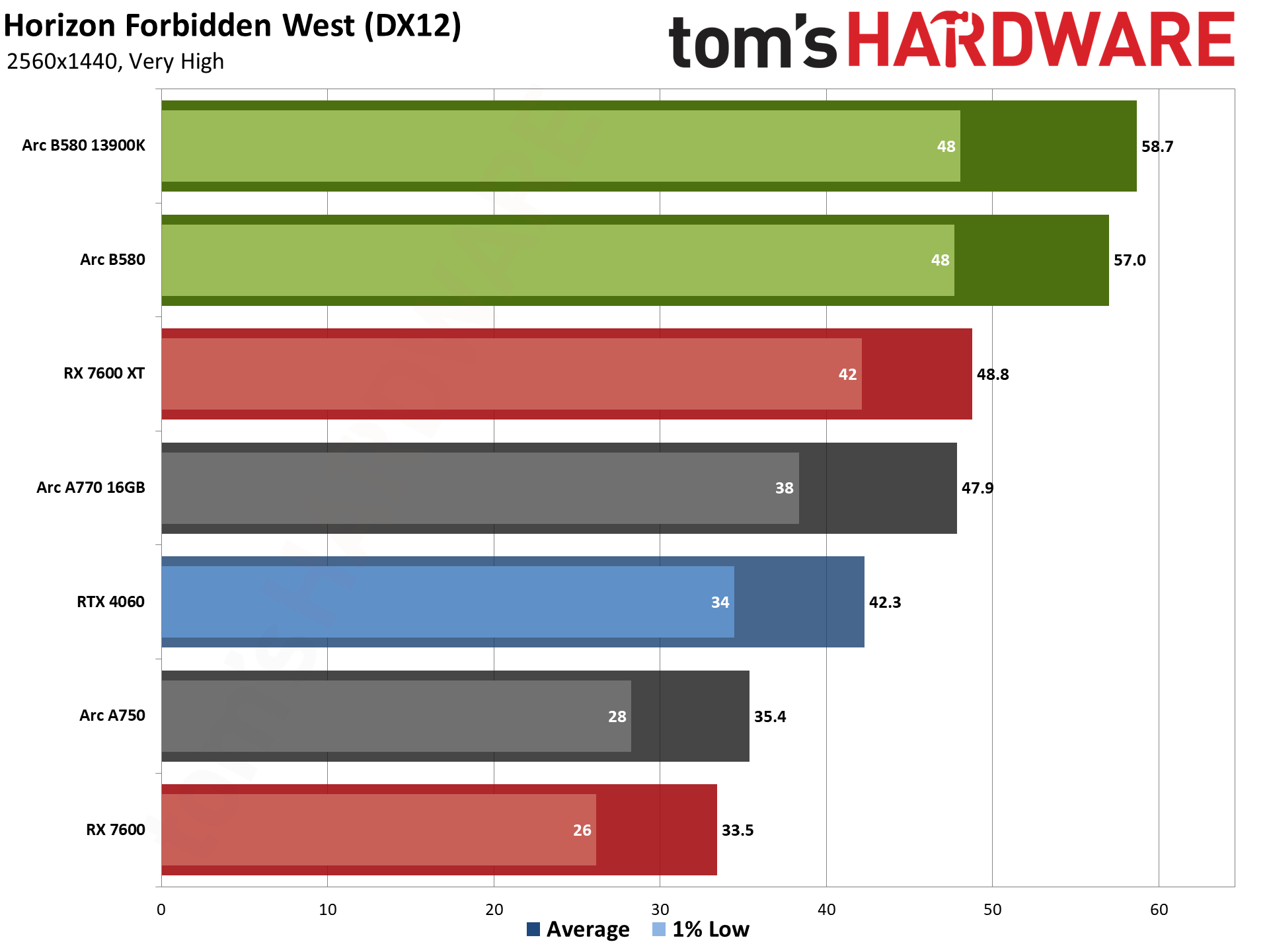

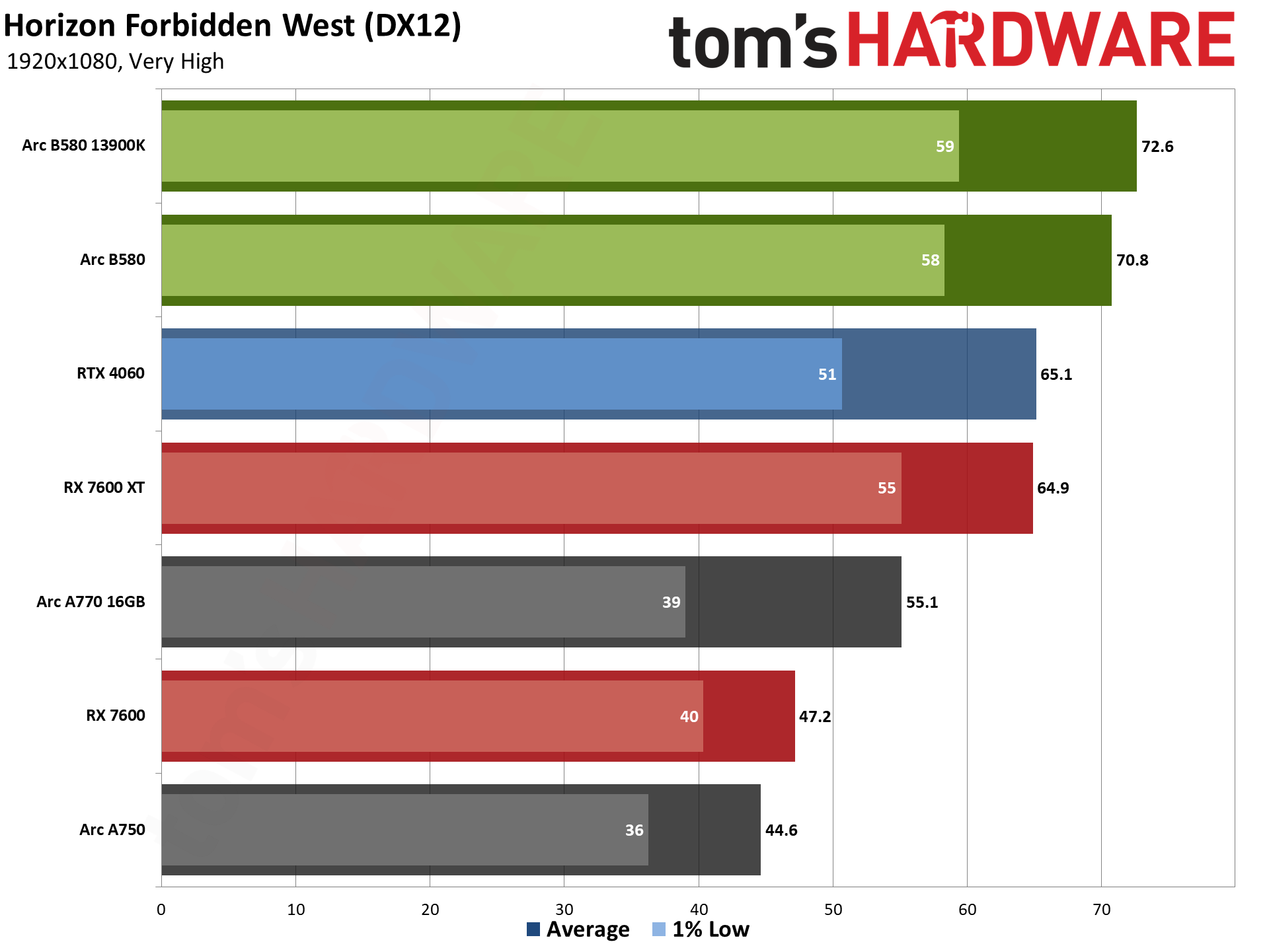

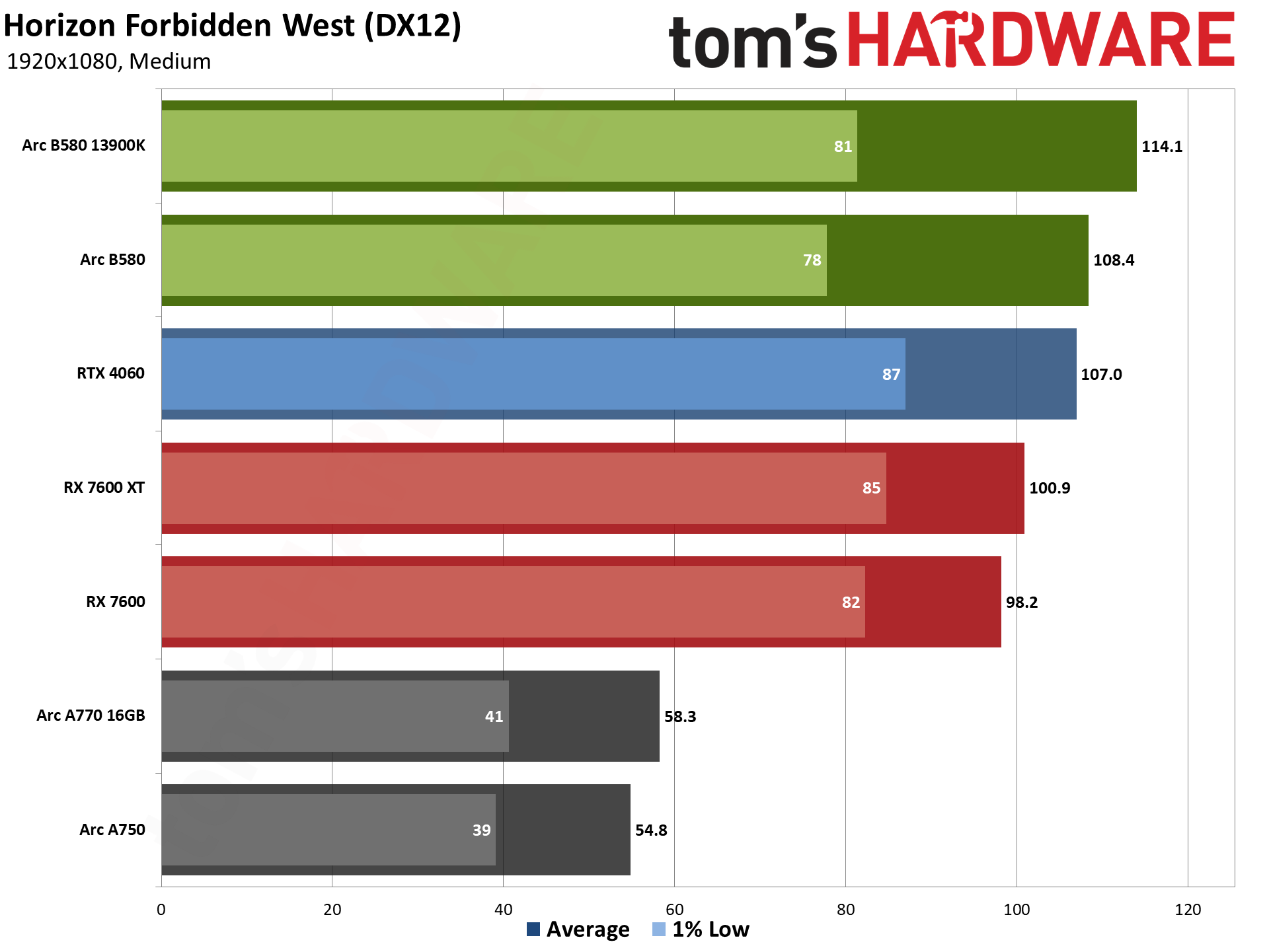

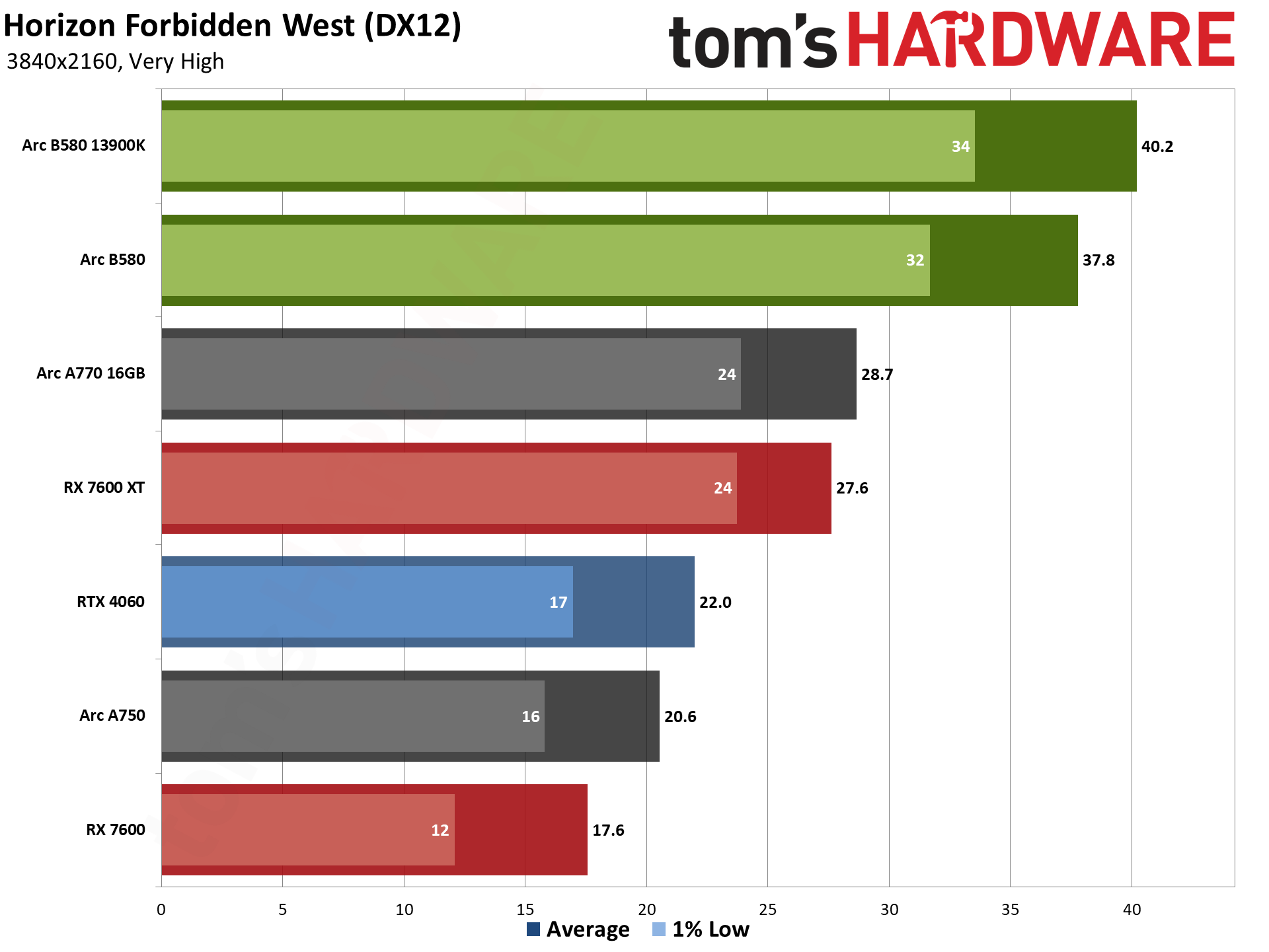

Horizon Forbidden West is another two-year-old PlayStation port, using the Decima engine. The graphics are good, though I've heard from at least a few people who think it looks worse than its predecessor, with excessive blurriness being a key complaint. But after using Horizon Zero Dawn for a few years, it felt like a good time to replace it.

This is, yet again, a game where 8GB just isn't sufficient for maximum quality settings. The B580 beats the 7600 by 10% at 1080p medium (but with 6% worse minimum fps). But at ultra settings? It's 50% faster at 1080p, 71% faster at 1440p, and 115% faster at 4K — and again, that's a playable 38 fps, native, compared to 18 fps. RX 7600 XT is closer, but B580 still wins. It's a tie at 1080p medium, 8% faster at 1080p ultra, 12% at 1440p, and then a large 36% lead at 4K.

Versus the RTX 4060, we get a tie at medium 1080p (with 11% worse lows on the B580), a 9% lead at 1080p ultra, and then that balloons to 35% and 72% at 1440p and 4K. Ever wonder why Intel's pre-launch marketing benchmarks only showed 1440p performance comparisons with the RTX 4060? This is why.

Arc A-series really doesn't do well in Horizon Forbidden West. The B580 is 59–98 percent faster than the A750, and still 28–86 percent faster than the A770. Curiously, the biggest delta was at 1080p medium, so probably this game engine uses a lot of execute indirect calls, or maybe it's mesh shaders or geometry holding back the A-series.

The Last of Us, Part 1 is another PlayStation port, though it's been out on PC for about 20 months now. It's also an AMD promoted game, and really hits the VRAM hard at higher quality settings. As you can see with the benchmark charts, the 8GB AMD card doesn't do too well here, but the A750 and 4060 seem to be fine.

The B580 leads the 7600 by 9% at 1080p medium, and 61, 37, and 25 percent at ultra settings (for 1080p, 1440p, and 4K). But even the B580 seems to run into VRAM issues at 4K, as performance drops to less than a third of the 1440p result. The 7600 XT ties or leads the B580, with a 47% (but meaningless since it's running at just 20 fps) win at 4K.

Interestingly, again, Nvidia's RTX 4060 doesn't seem to struggle as much with VRAM. Yes, it's slower than the B580 at 1440p, but it gets a win at 4K. The A750 and A770 also beat the B580 at 4K, even though Battlemage has a sizeable lead at 1440p and 1080p.

Say it with me again: drivers. It's not that Intel's drivers are completely broken, but there are clearly lots of games where further optimizations would help. Maybe some of those optimizations are already present for the Alchemist branch, but Battlemage falls behind at times.

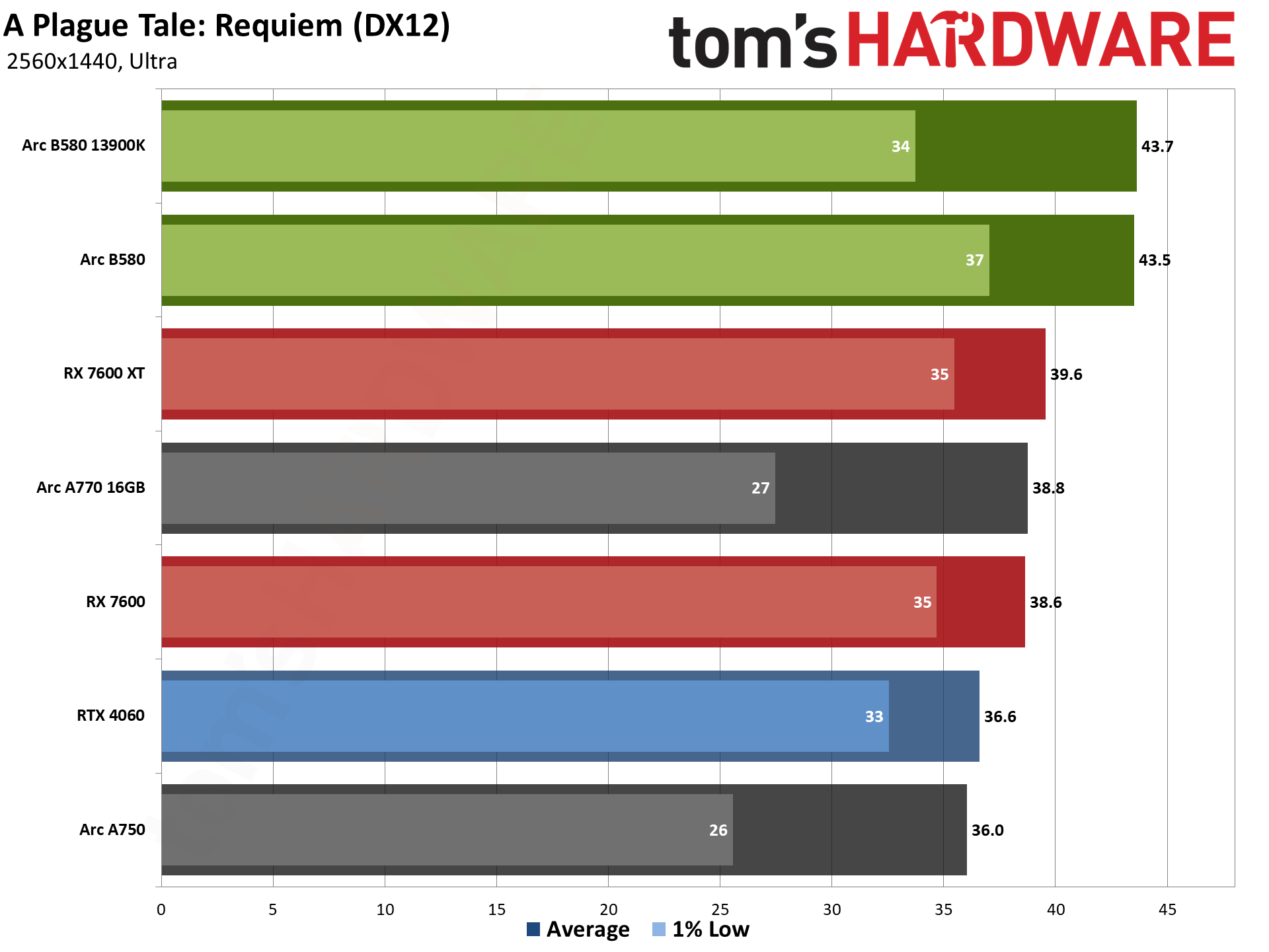

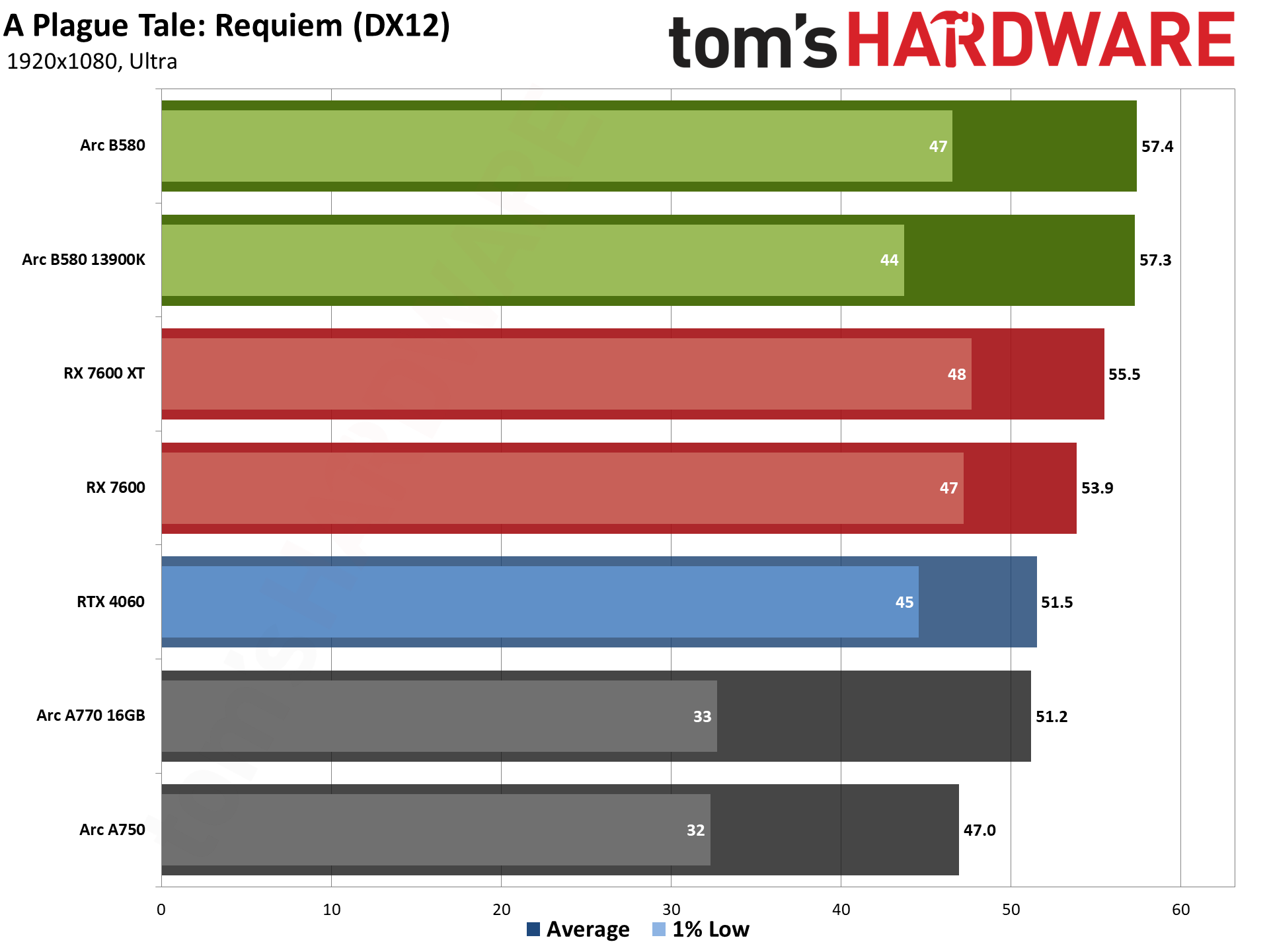

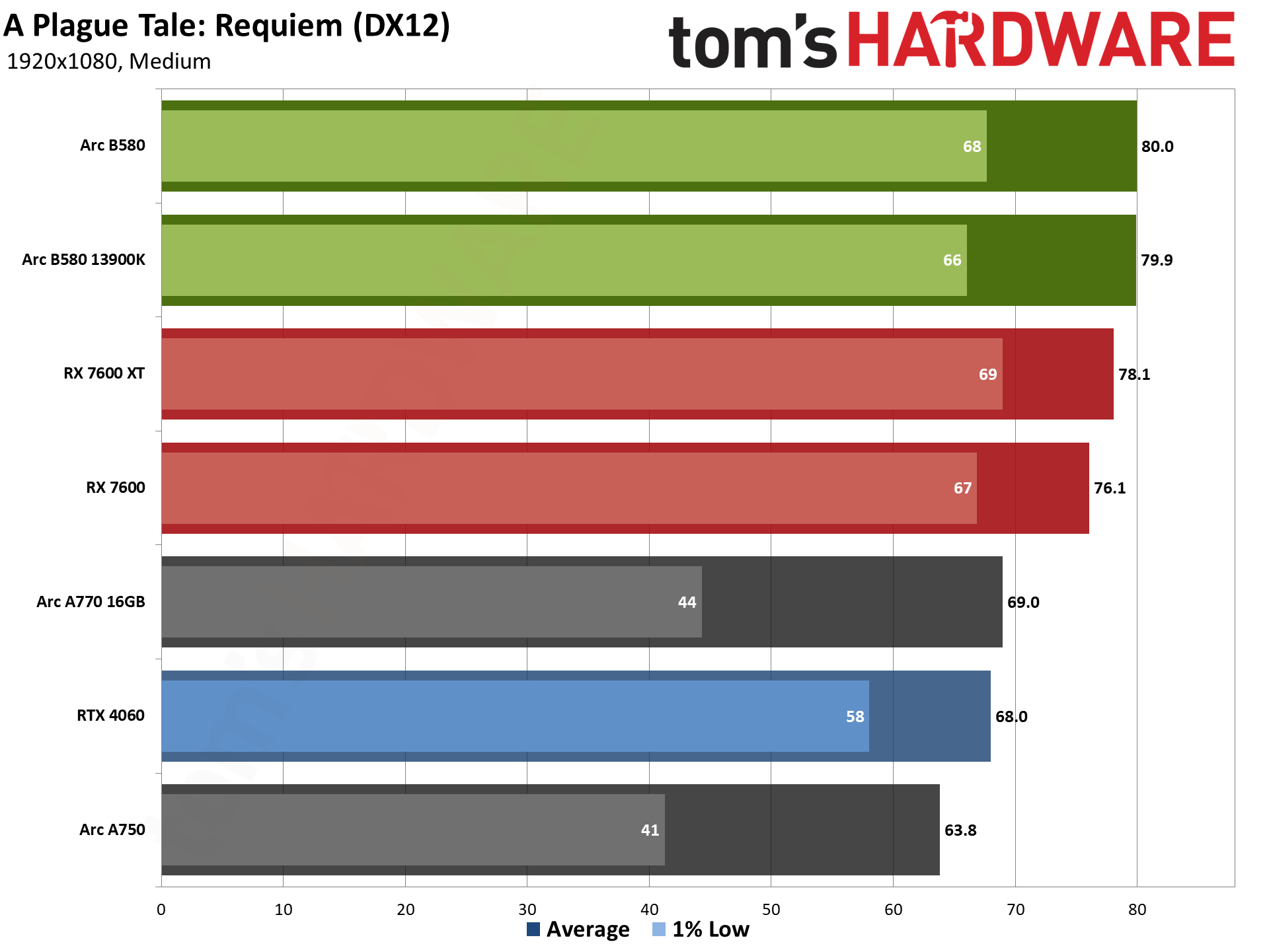

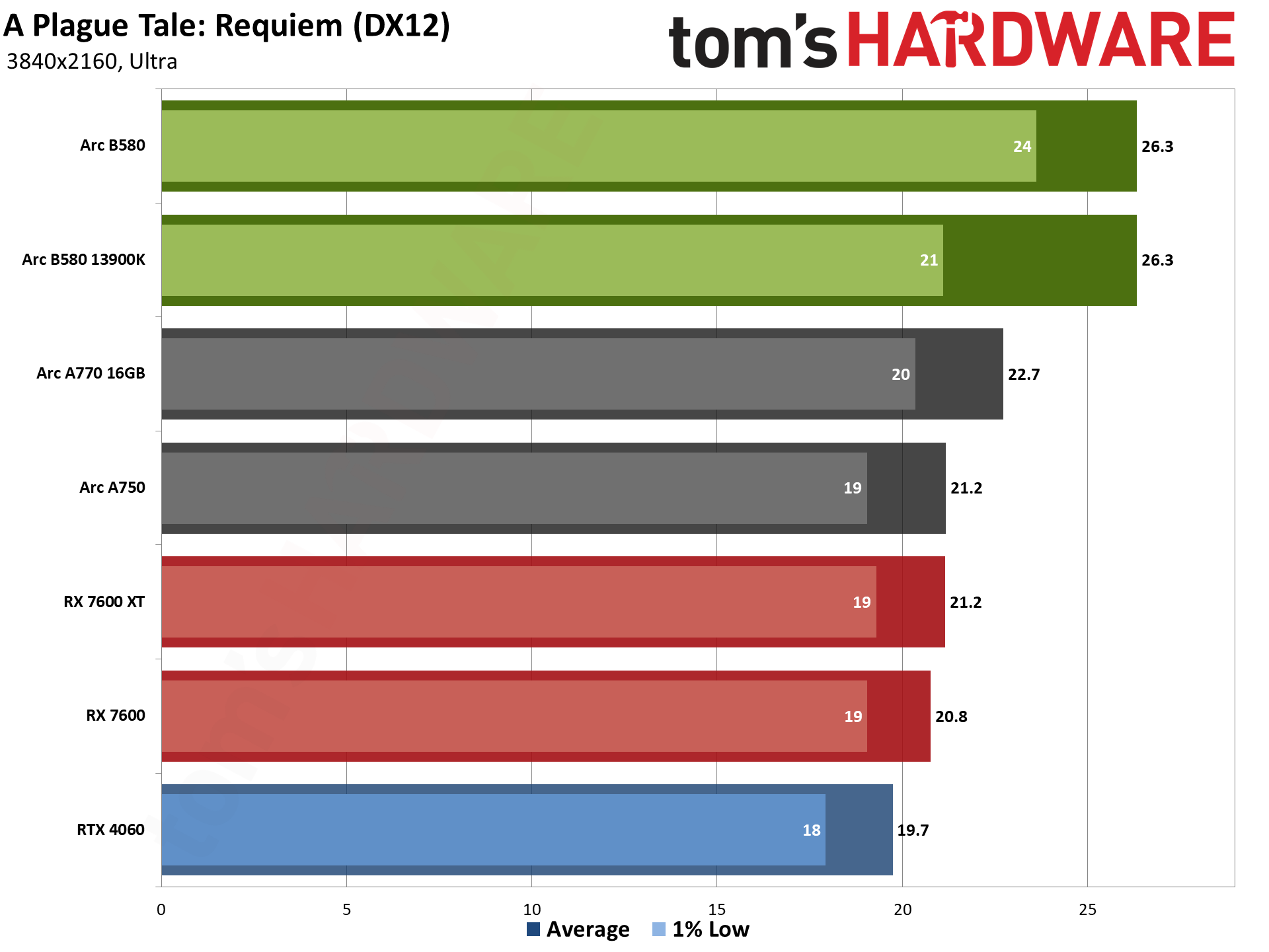

A Plague Tale: Requiem uses the Zouna engine and runs on the DirectX 12 API. It's an Nvidia promoted game that supports DLSS 3, but neither FSR nor XeSS. (It was one of the first DLSS 3 enabled games as well, incidentally.)

Arc B580 only holds a 5–13 percent lead over the 7600 at 1440p and lower, with a 27% win at 4K. It's also 3–10 percent faster than the 7600 XT at lower resolutions, and 25% faster at 4K, so this seems to be a game that doesn't benefit from more than 8GB of VRAM.

Despite being Nvidia promoted, the RTX 4060 doesn't fare too well. B580 wins by 12–33 percent. And generationally, B580 delivers 21–25 percent more performance than the A750, and 12–16 percent higher framerates than the A770.

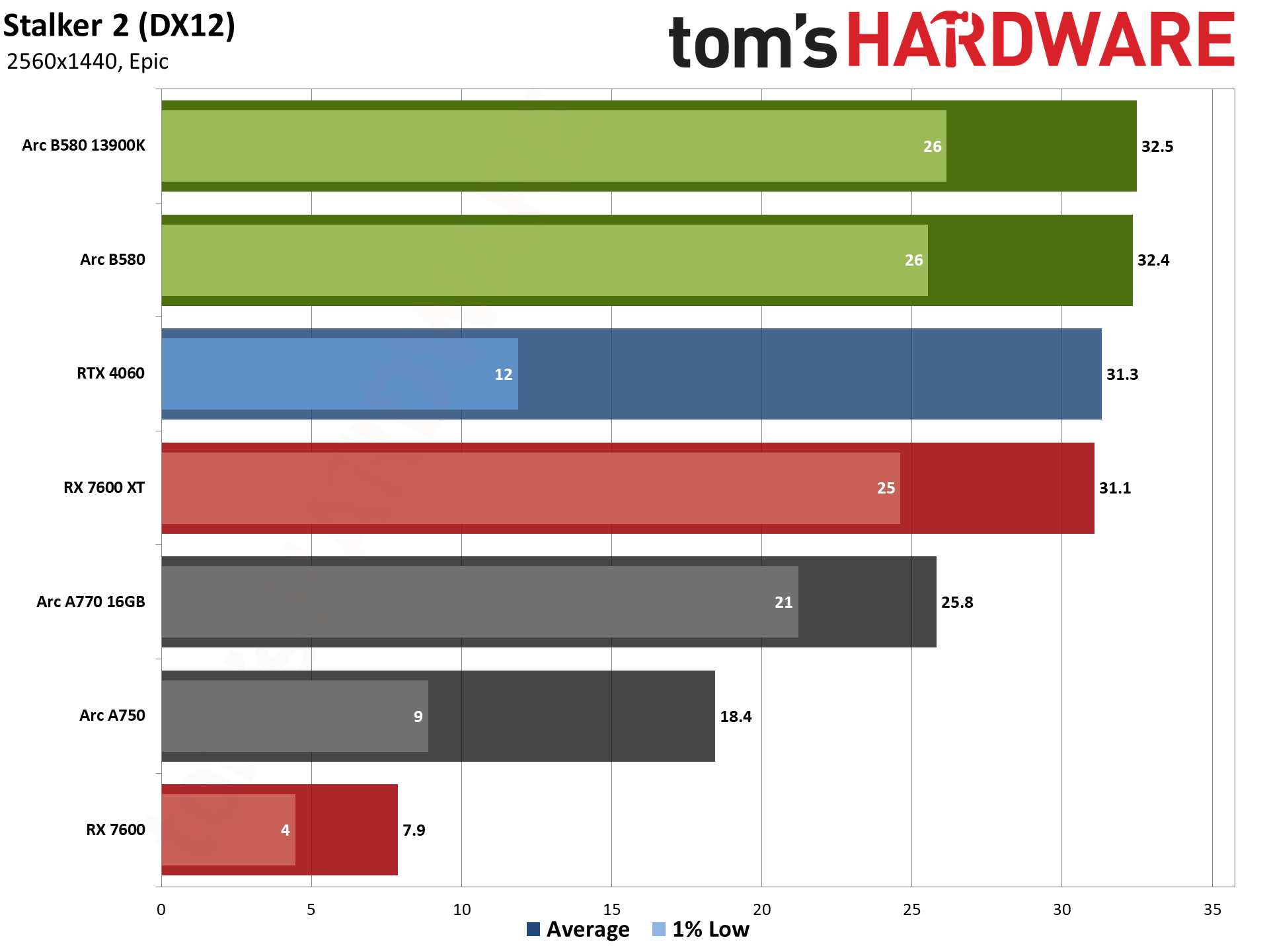

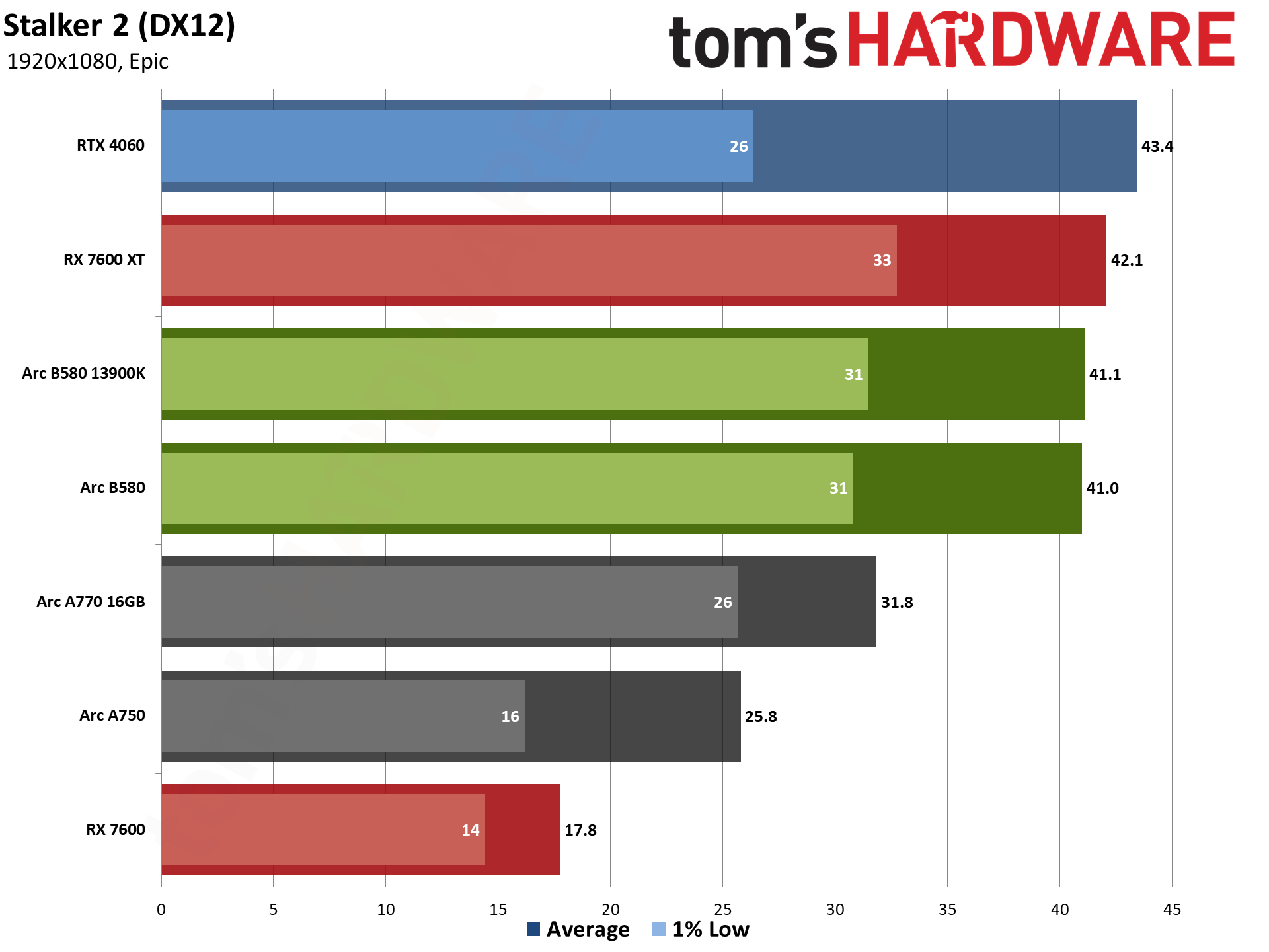

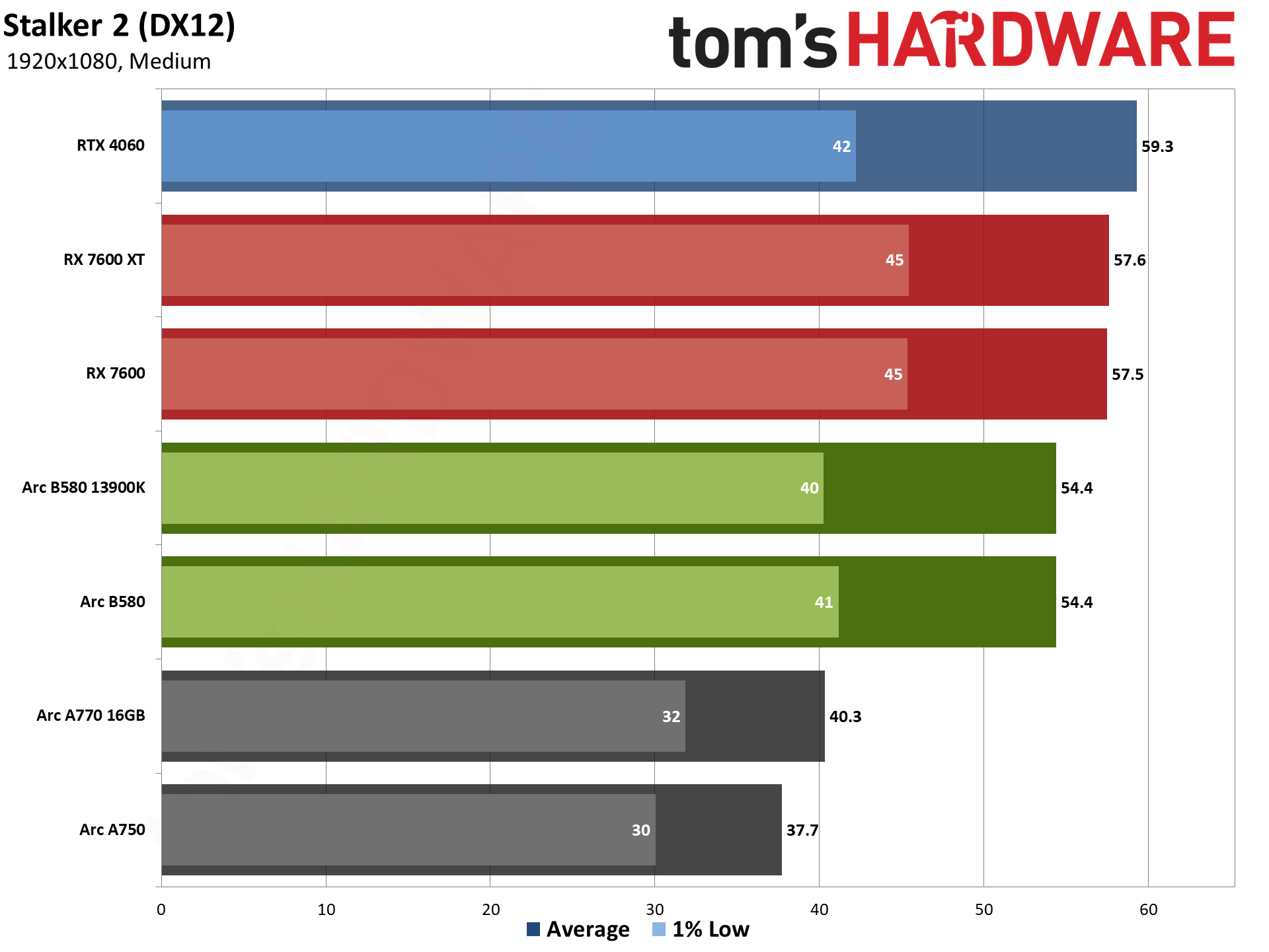

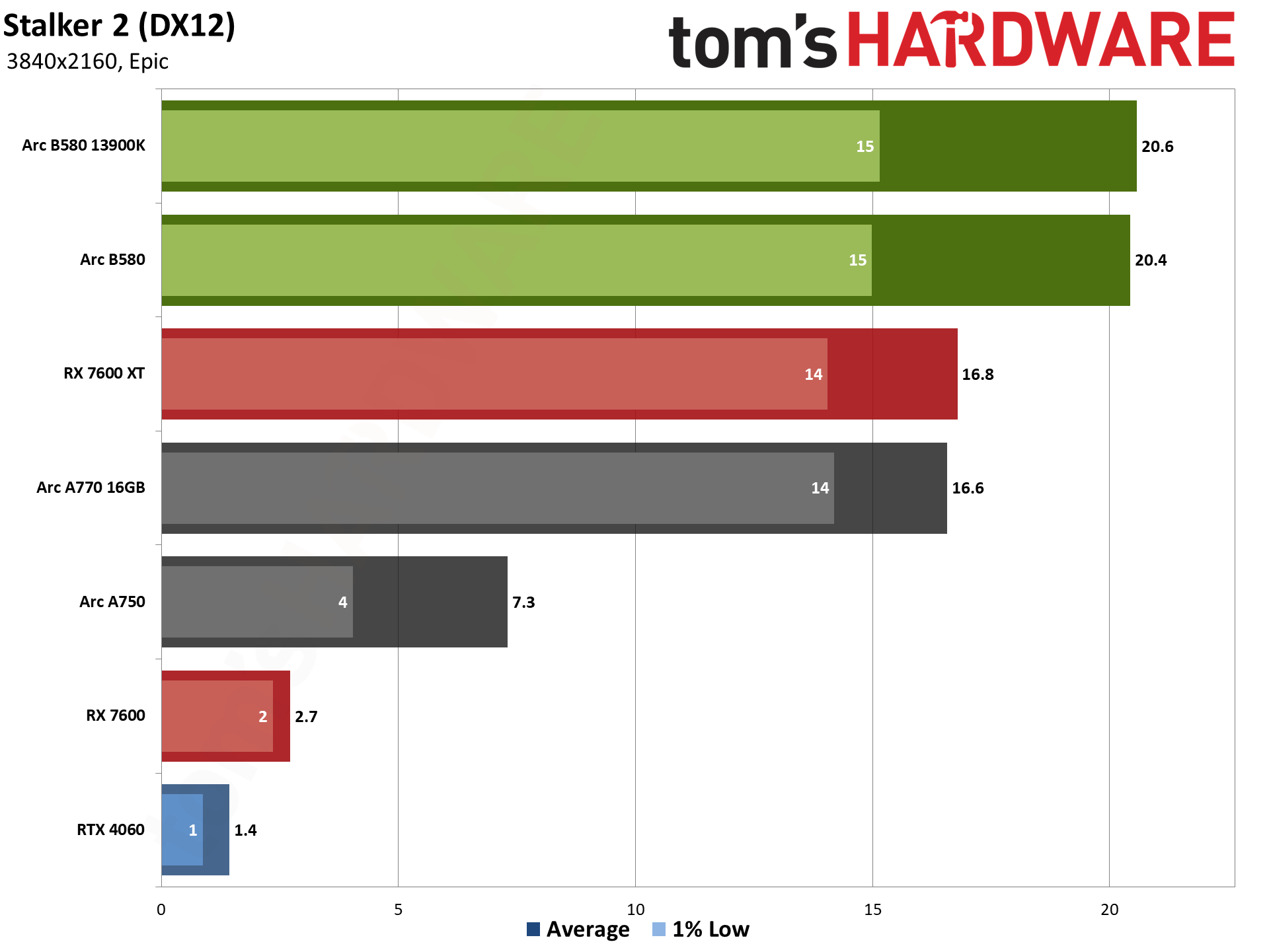

Stalker 2 came out a few weeks ago, the day after Flight Simulator 2024. That makes it the newest game in our test suite, and we suspect performance will change in the coming weeks and months. It uses Unreal Engine 5, but without any hardware ray tracing support — the Lumen engine also does "software RT" that's basically just fancy rasterization as far as the visuals are concerned, though it's still quite taxing. VRAM can also be a serious problem when trying to run the epic preset, with 8GB cards struggling at most resolutions (depending on the GPU vendor).

The B580 loses to the 7600 at medium settings, but more than doubles AMD's performance with the epic preset at 1080p, is four times faster at 1440p, and over seven times faster at 4K. Having 16GB on the 7600 XT makes all the difference, and AMD gets a win at 1080p and only trails slightly behind at 1440p, though B580 still wins by 22% at 4K.

Stalker 2 is, I believe, Nvidia promoted, and the RTX 4060 ends up doing pretty well overall. It's faster than B580 at 1080p, and only 1 fps slower at 1440p. But again, 4K epic performance collapses to basically nothing — 1.4 fps on the 4060.

B580 offers a big generational improvement over the A-series, beating the A750 by 44–76 percent at 1440p and below (and 180% at 4K where the A750 VRAM hits the wall). A770 doesn't run out of VRAM, but the B580 still leads by 23–35 percent.

Star Wars Outlaws came out this past summer, with a rather poor reception. But good or bad, it's a newer game using the Snowdrop engine and we wanted to include a mix of options. Outlaws also happens to be one of the games where we've had the most difficulty on Arc GPUs, with some rendering errors on the B580 using the initial preview drivers. The public drivers did much better on the A-series GPUs, however, so Intel just needs to get B-series support caught up with the latest driver updates.

B580 gets a clean sweep over the 7600 in performance, leading by 26–33 percent. RX 7600 XT doesn't do much better, with B580 showing a 25–30 percent lead. But AMD GPUs never crashed in the game during our testing, which is still a problem on the B580 with the current 6252 drivers.

B580 also beats the RTX 4060, by 5–30 percent, but only 11% or less at 1440p and below. Also, 4K hits the VRAM wall on the 8GB cards and minimum fps drops into the single digits.

There's a different bug with the A-series public drivers and Star Wars Outlaws. Medium 1080p ended up performing worse than ultra 1080p. Oops. Intel is aware of the issue and working on a fix. Looking just at the ultra results, though, B580 is 31–40 percent faster than the A750, and 22–37 percent faster than the A770.

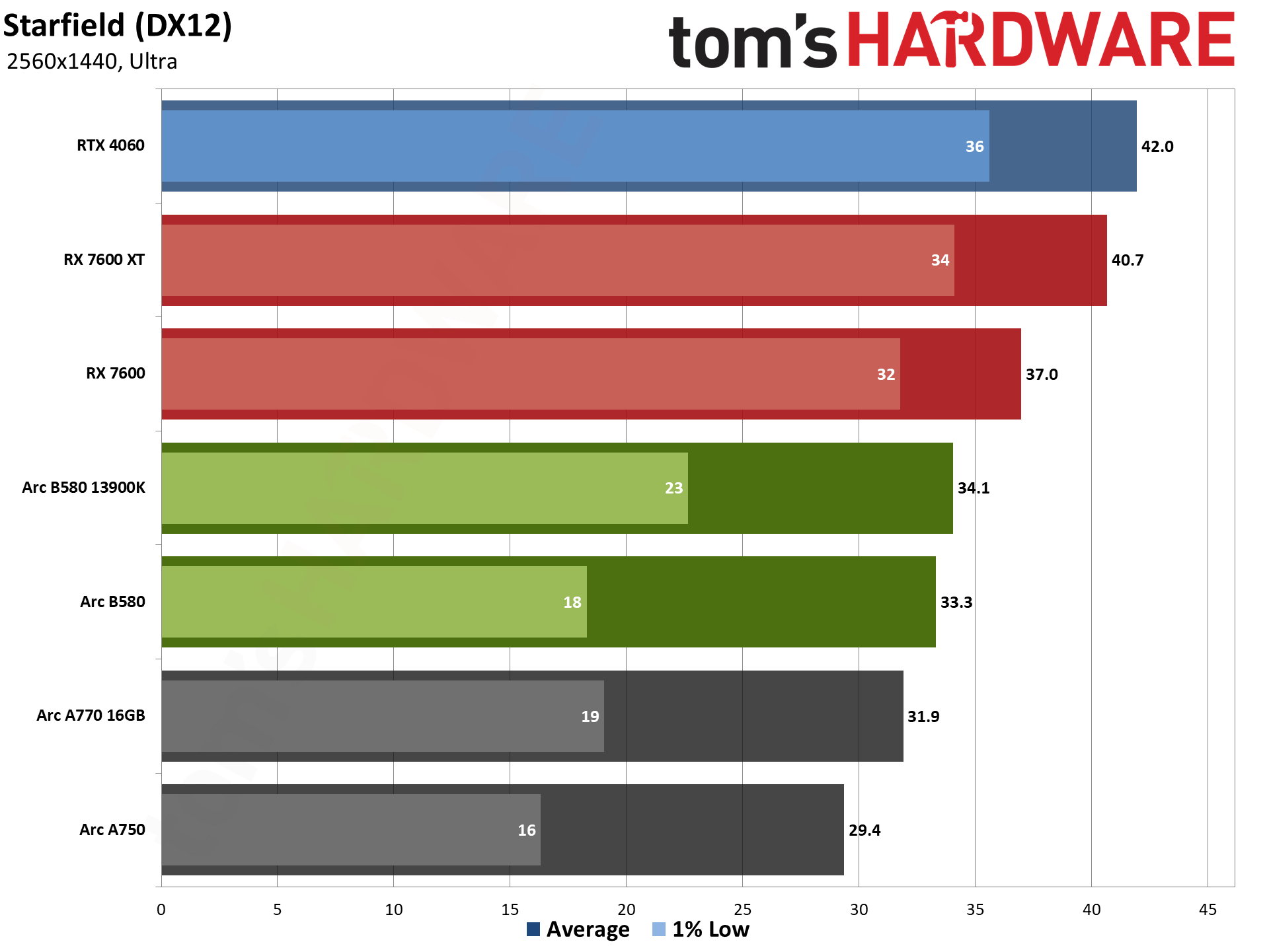

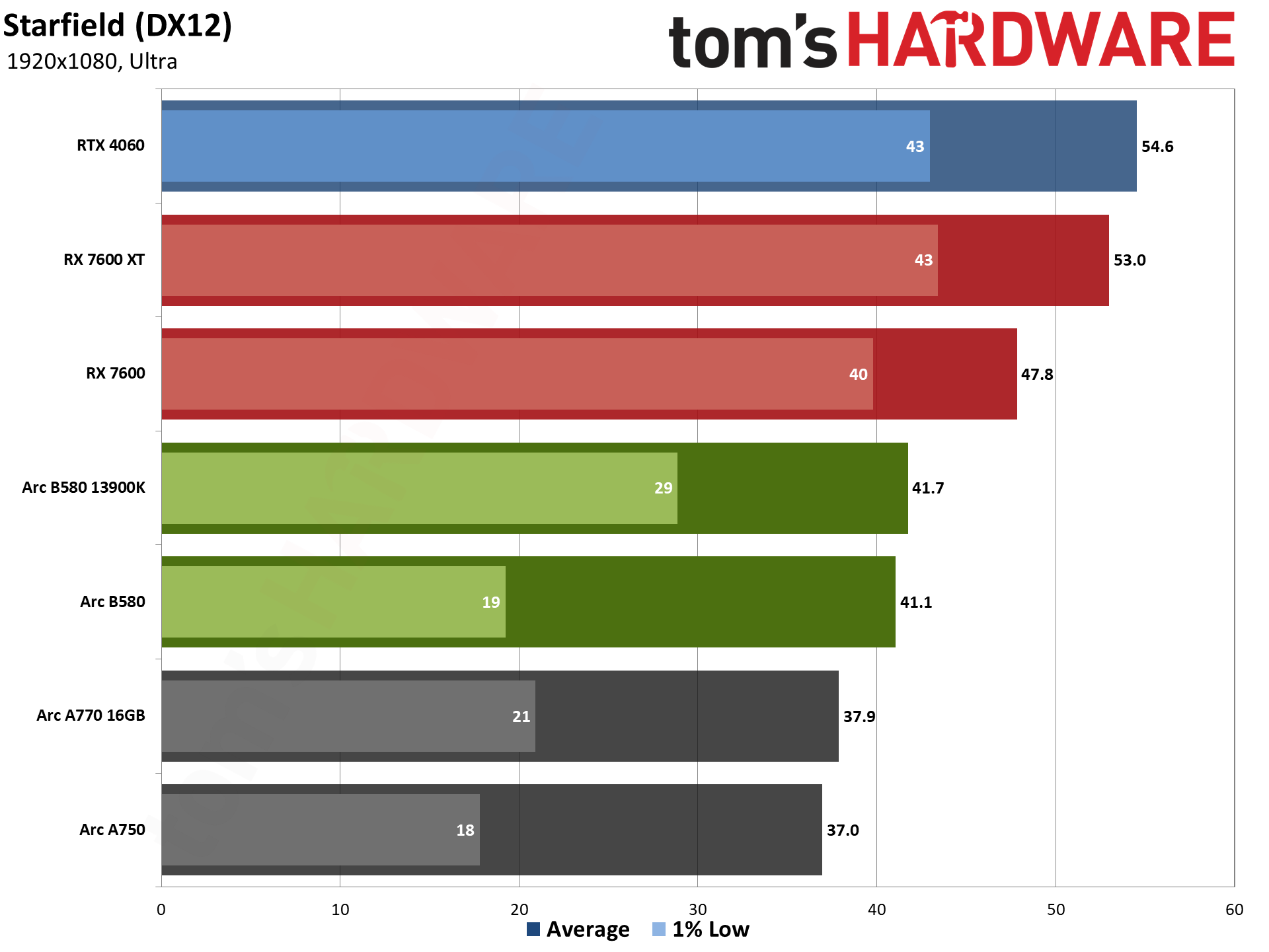

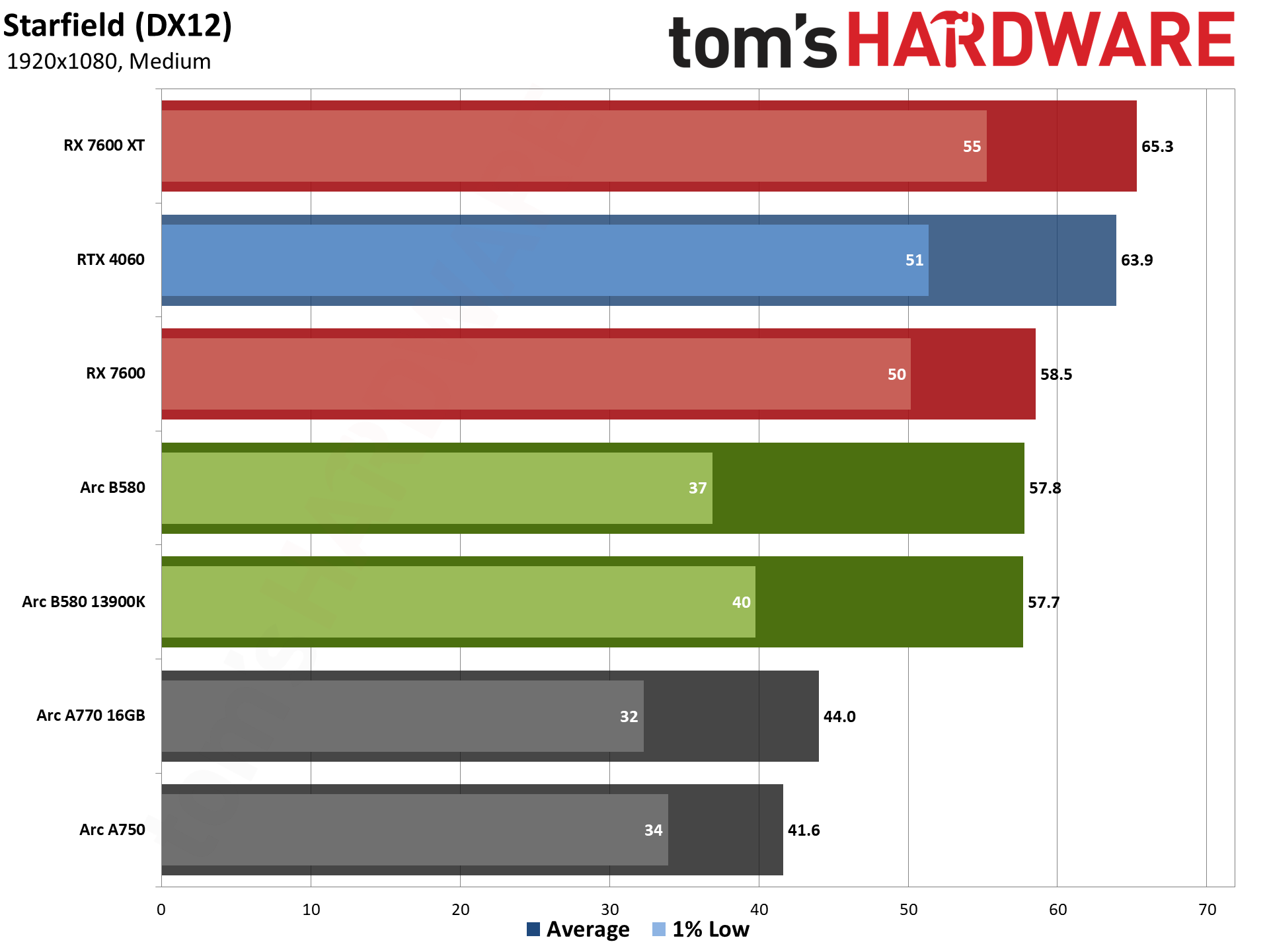

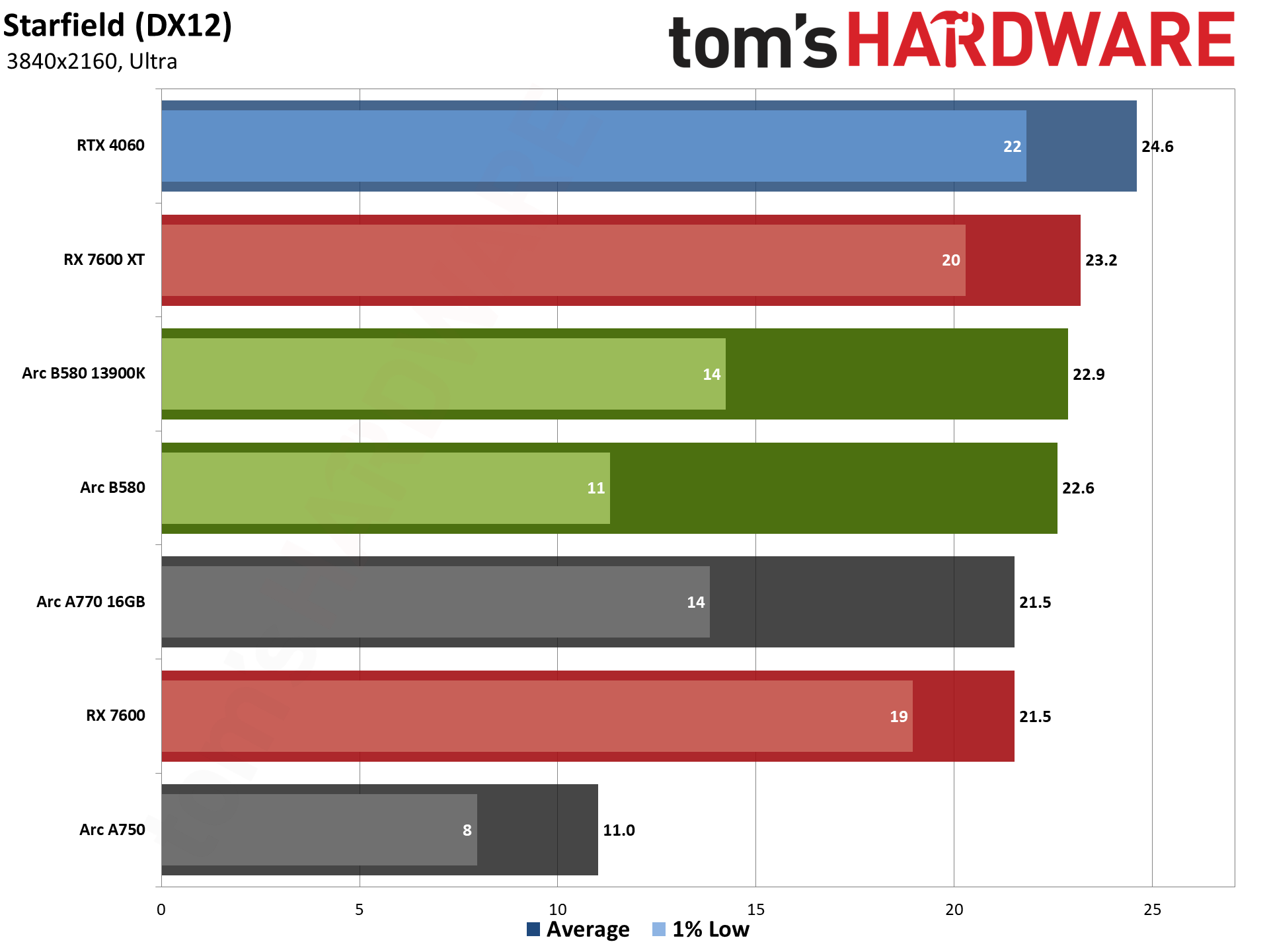

Starfield uses the Creation Engine 2, an updated version of the Bethesda engine that powered the previous Fallout and Elder Scrolls games. It's another fairly demanding game, and we run around the city of Akila, which is one of the more taxing locations in the game.

This is also one of the worst showings for the B580, and minimum fps is a clear problem area. The ultra preset drops minimums below 20 fps at all three resolutions.

VRAM isn't really a problem, as the RX 7600 generally does fine. It loses to the B580 at 4K but leads by 1–17 percent at 1440p and 1080p. The 7600 XT gets the clean sweep, with 3–29 percent higher performance. Nvidia's RTX 4060 easily wins as well, with the top result in most of the tests. It's 9–33 percent faster than the B580, but minimum fps ends up being 39–123 percent higher on the 4060, with 1080p ultra being particularly problematic for Arc.

Generationally, the B580 still gets the win, beating the A750 by 11–39 percent at 1080p and 1440p, and 105% at 4K. It's also 4–31 percent faster than the A770. But whatever else is going on in Starfield, it looks like Intel needs to do a lot more driver tuning here.

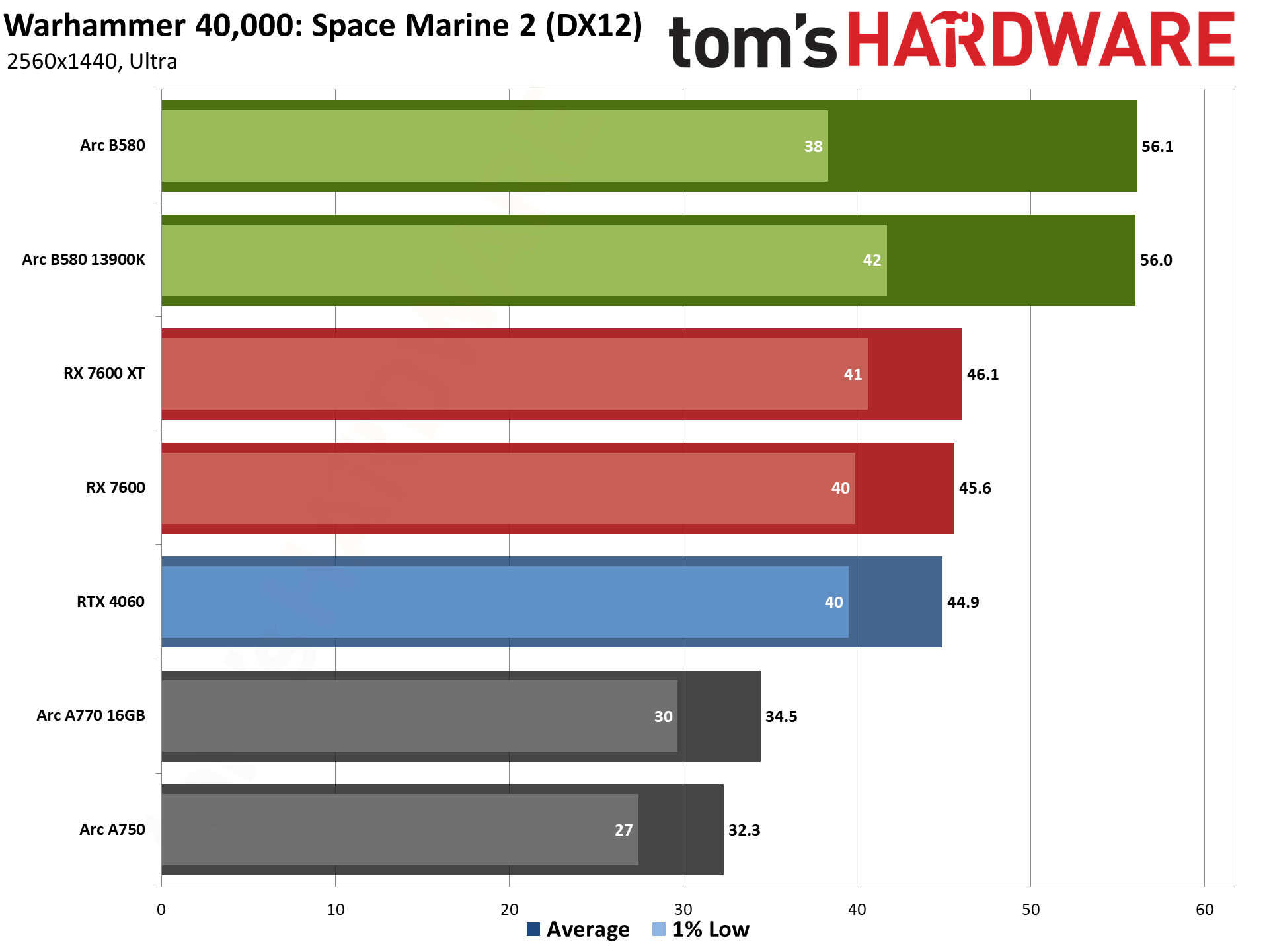

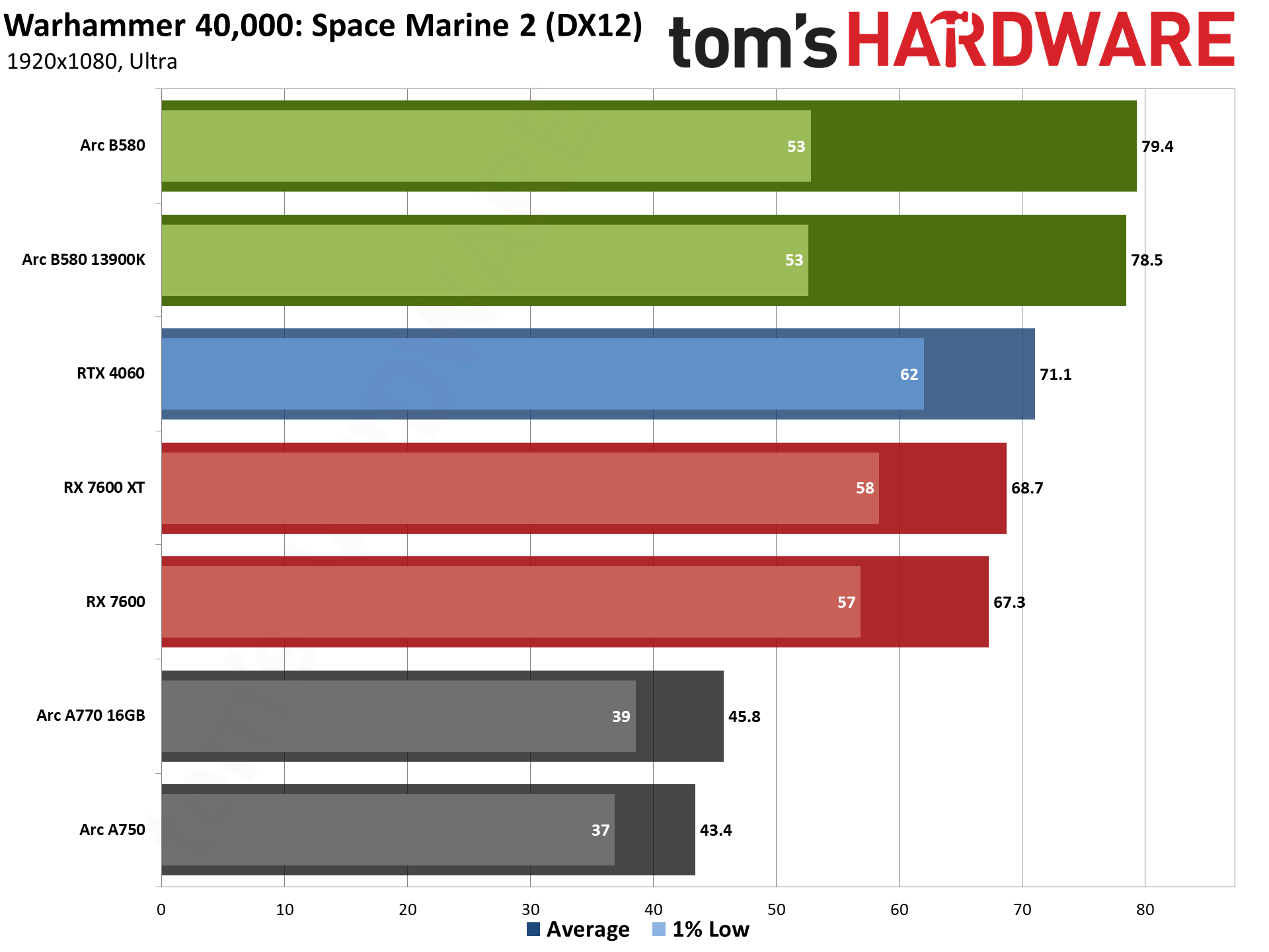

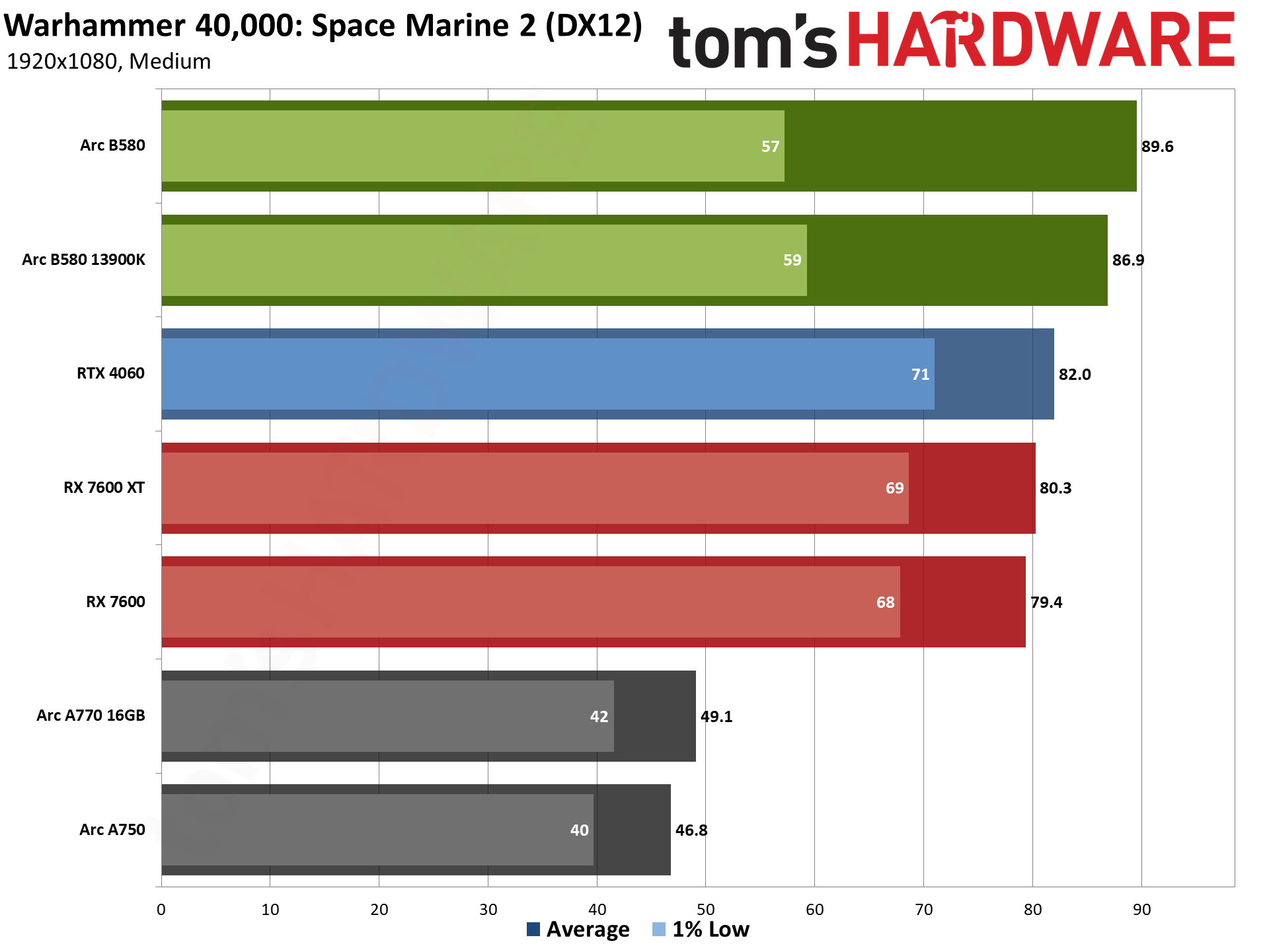

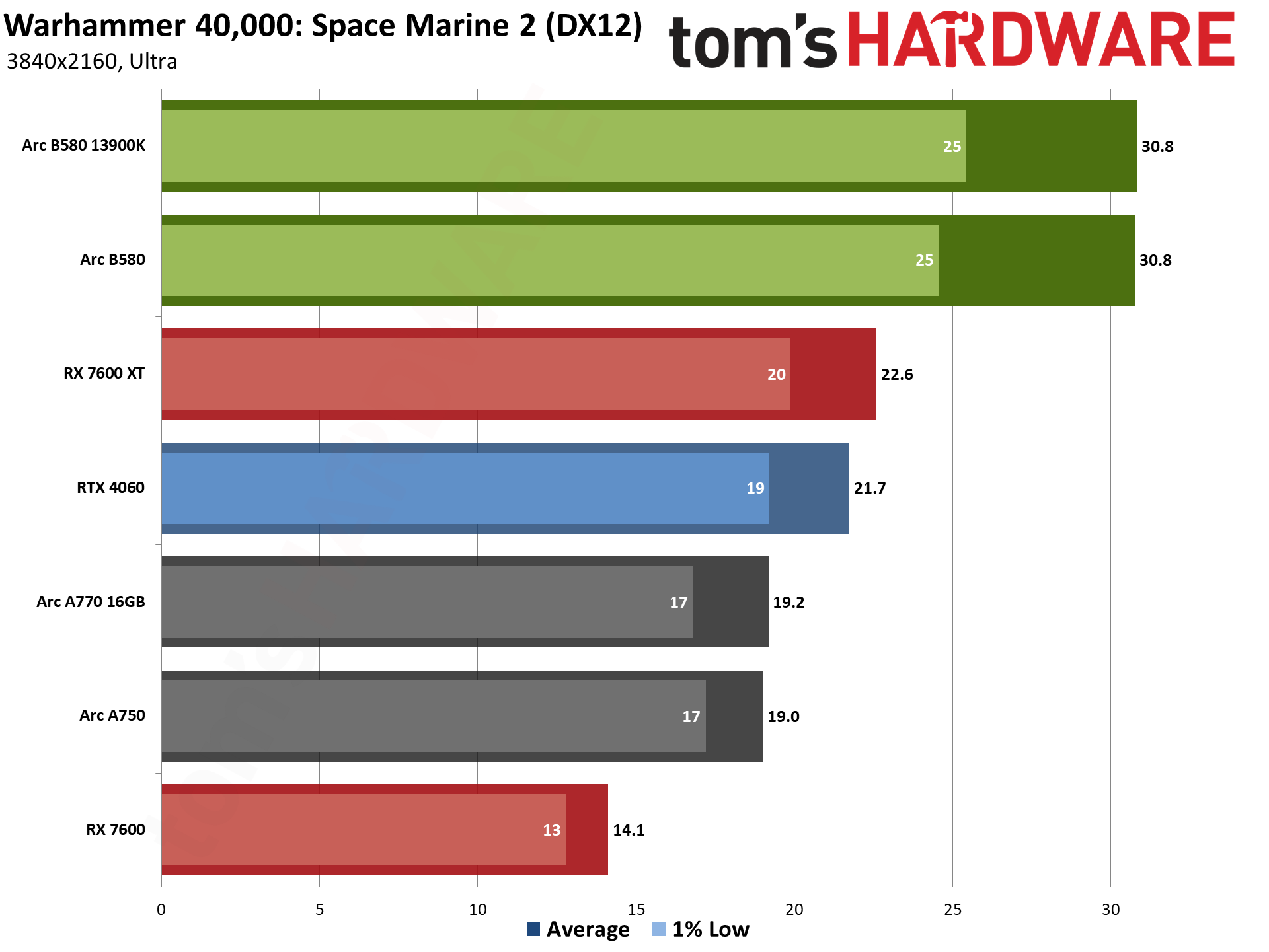

Wrapping things up, Warhammer 40,000: Space Marine 2 is yet another AMD promoted game that's only a few months old. It runs on the Swarm engine and uses DirectX 12, without any support for ray tracing hardware. We use a sequence from the introduction, which is generally less demanding than the various missions you get to later in the game but has the advantage of being repeatable and not having enemies everywhere. We may need to change our test sequence on this one, or drop it, but let us know what you think.

The B580 beats the vanilla 7600 at lower resolutions, with a 13–23 percent margin of victory, but minimum fps ends up 4–16 percent lower on the B580. 4K requires more VRAM, however, and Intel gets more than double the 7600 result. It's another game where 16GB doesn't help AMD much, and the B580 leads the 7600 XT by 12–36 percent (but again with worse minimums at 1440p and below).

The same pattern holds against the RTX 4060. The B580 has 9–42 percent higher average fps, but the 4060 has better minimums at the two lower resolutions. Only 4K really pushes beyond the capabilities of the 4060.

But against the A-series? B580 destroys the A750, offering 62–91 percent higher performance. And it's also 60–83 percent faster than the A770. Perhaps this is another game that makes use of the "execute indirect" functionality of DirectX 12.


Current page: Intel Arc B580 Rasterization Gaming Performance


Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

-

Math Geek nicely done :)

looks like a good value. i'm in the market for my next card but seems like waiting a little bit to see what AMD does next is not too bad of an idea. i hate waiting to see what the next best thing is but this close it seems like prudent advice.

side note: it does look like you forgot to replace the place holders on the power consumption paragraph.

"On average, the B580 used xxxW at 1080p medium, xxxW at 1080p ultra, xxxW at 1440p, and xxxW at 4K. As you'd expect, power use typically increases at higher settings and resolutions." -

Jagar123 I am happy to have competition in the market. I imagine next gen AMD and Nvidia cards will be stronger competitors but they might be priced poorly again. Price to performance is key here. We'll see in a month or so. -

shady28 Great review, against relevant parts for this price class too :D

Given that Steam shows the 3 most popular GPUs are the 3060 discrete, 4060 laptop, and 4060 discrete, Intel now has a GPU that competes in the largest part of the segment - and leads it in both value and performance.

Granted AMD and Nvidia are about to release new GPUs, but let's also note that the 5060 / 8600 aren't likely to show up until late 2025 or early 2026 if they follow their normal pattern. -

palladin9479 Great review, I'm in the market for a SFF two slot low power card for a living room system. The APU can only do so much and I'm starting to hit walls with it lately so a lower power dGPU might be the only real answer. -

Gururu I guess it will come down to an availability issue. I doubt we will see superior cards by AMD or nVidia in this price bracket by end of Q1 2025. We will certainly see lots of benchmarks blowing these early battlemage offerings in January, but nothing ready for purchase. Later battlemage offerings are in my best guess going to be in the $400 range, likely beating 7800 and 4070, but again probably not until mid-late Q1. If AMD and nVidia drop anything crushing a 4090 you can bet it will be in the $700+ range. Is it fair to say that something beating the B580 readily available in April for $250 is fair competition now? I don't know. Maybe not if a normal consumer can actually get B580 silicon before Christmas. -

Eximo

palladin9479 said: "Great review, I'm in the market for a SFF two slot low power card for a living room system. The APU can only do so much and I'm starting to hit walls with it lately so a lower power dGPU might be the only real answer."

I would probably still lean towards an RTX 3050 6GB for that. B580 is still a little power hungry for the job.

I use an A380, and that isn't ideal either, since it still needs an 8-pin (at least that model). Though supposedly still only a 75W GPU. -

DS426 Great review, Jarred! The elaboration on your thinking and updating of your test bench's hardware and software is appreciated.

It'll be some time before AMD and NVIDIA (green wants it written this way, by the way: http://http.download.nvidia.com/image_kit/LG_NVCorpBadge.pdf ... was curious as I noticed they have it written that way on their website) have new budget GPU's in this price class, so I myself wouldn't really recommend that a prospective owner waits. Of course, a lot of it also depends on if building new or upgrading (and upgrading from what). There's a huge user base at this price point, so I do imagine that Intel will get some market penetration for end users, not just prebuilds. This, particularly since day 1 drivers are already fairly stable overall and Intel now has the value leader at this price point; Intel didn't mess up this launch, whereas botched launches can tarnish audience sentiment of the product for months and years, if not permanently. -

King_V Definitely liking what I see here. And, glad to know that Intel is taking this very seriously. They're clearly not messing around. -

rluker5 Transistor density pretty close to AMD.

Transistor density of 7600XT is 65.2 M/mm2 and B580 is 72.1 M/mm2 per Techpowerup. Sure B580 is on 5nm while 7600XT is on 6nm but that isn't that big a difference. Definitely moving in the right direction. And more importantly performance per mm2 is much closer to AMD. And performance per watt.

Catching up really fast. -

caseym54 Probably flogging a dead horse here, but a 6-column table with 5 columns visible and a slider is truly lame.