Nvidia confirms Blackwell Ultra and Vera Rubin GPUs are on track for 2025 and 2026 — post-Rubin GPUs in the works

Come to GTC, says Nvidia.

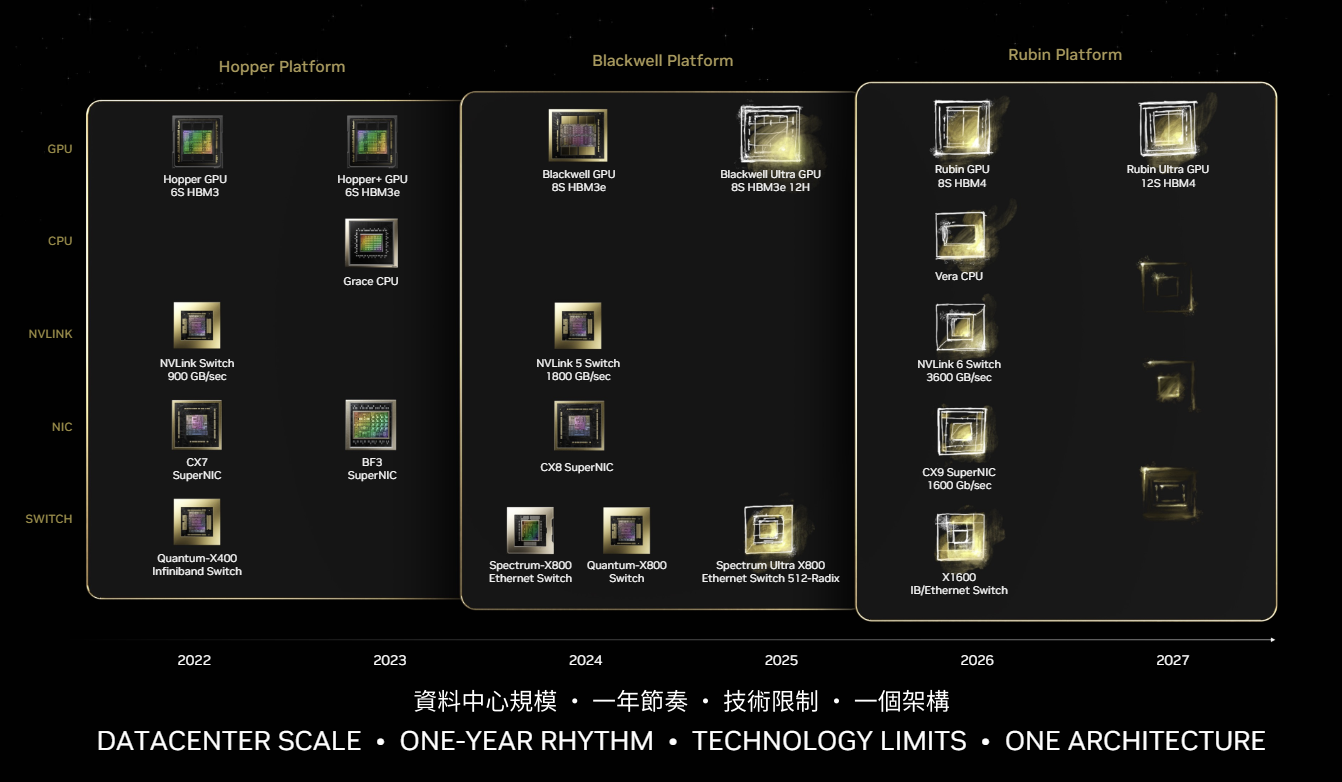

A design flaw delayed Nvidia's rollout of Blackwell GPUs for data centers last year, prompting the company to redesign the silicon and packaging. However, this did not affect Nvidia's work on mid-cycle refresh Blackwell 300-series (Blackwell Ultra) GPUs for AI and HPC and next-generation Vera Rubin GPUs. Nvidia can already share some details about Rubin GPUs and its post-Rubin products.

"Blackwell Ultra is [due in the] second half," Jensen Huang, chief executive of Nvidia, reaffirmed to analysts and investors on the company's earnings conference call. "The next train [is] Blackwell Ultra with new networking, new [12-Hi HBM3E] memory, and, of course, new processors. […] We have already revealed and have been working very closely with all of our partners on the click after that. The click after that is called Vera Rubin and all of our partners are getting up to speed on the transition to that. […] [With Rubin GPUs] we are going to provide a big, big, huge step up."

Later this year, Nvidia plans to release its Blackwell B300-series solutions for AI and HPC (previously known as Blackwell Ultra), which will offer higher compute performance as well as eight stacks of 12-Hi HBM3E memory, providing up to 288GB of onboard memory. Unofficial information indicates that the performance uplift enabled by Nvidia's B300-series will be around 50% over comparable B200-series products, though the company has yet to confirm this.
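As a quick sanity check, the 288GB figure can be reproduced from the stack count. The sketch below assumes each of the 12 layers in a stack is a 24 Gb (3 GB) DRAM die; that die density is an assumption for illustration and is not stated in the article, and the function names are hypothetical.

```python
# Back-of-the-envelope HBM capacity check (illustrative only).
# Assumption: each DRAM die in a stack holds 24 Gb; the article does not say this.
GBIT_PER_DIE = 24


def stack_capacity_gb(layers: int, gbit_per_die: int = GBIT_PER_DIE) -> float:
    """Capacity of one HBM stack in gigabytes (8 bits per byte)."""
    return layers * gbit_per_die / 8


def package_capacity_gb(stacks: int, layers: int,
                        gbit_per_die: int = GBIT_PER_DIE) -> float:
    """Total capacity of all HBM stacks on one GPU package, in gigabytes."""
    return stacks * stack_capacity_gb(layers, gbit_per_die)


if __name__ == "__main__":
    print(stack_capacity_gb(12))       # 36.0 GB per 12-Hi stack
    print(package_capacity_gb(8, 12))  # 288.0 GB for eight stacks
```

Under that assumption, eight 12-Hi stacks come to 36GB each and 288GB in total, which matches the quoted capacity.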

To further improve performance, B300 will be offered with Nvidia's Mellanox Spectrum Ultra X800 Ethernet switch, which has a radix of 512 and can support up to 512 ports. Nvidia is also expected to provide additional system design freedom to its partners with its B300-series data center GPUs.

Nvidia's next-generation GPU series will be based on the company's all-new, codenamed Rubin architecture, further improving AI compute capabilities as industry leaders march towards achieving artificial general intelligence (AGI). In 2026, the first iteration of Rubin GPUs for data centers will come with eight stacks of HBM4E memory (up to 288GB). The Rubin platform will also include a Vera CPU, NVLink 6 switches at 3600 GB/s, CX9 network cards supporting 1,600 Gb/s, and X1600 switches.
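Note that the platform figures mix units: NVLink 6 is quoted in gigabytes per second, while the CX9 network cards are quoted in gigabits per second. The sketch below converts both to the same unit. It treats the numbers as raw rates and ignores protocol overhead, and the two figures may not describe the same thing (for example, aggregate bandwidth versus a single port), so the resulting ratio is only a rough sense of scale. The names are hypothetical.

```python
# Unit conversion for the quoted Rubin platform figures (illustrative only).
NVLINK6_GBYTE_PER_S = 3600  # from the article, in gigabytes per second
CX9_GBIT_PER_S = 1600       # from the article, in gigabits per second


def gbit_to_gbyte_per_s(gbit_per_s: float) -> float:
    """Convert gigabits per second to gigabytes per second (8 bits per byte)."""
    return gbit_per_s / 8


cx9_gbyte_per_s = gbit_to_gbyte_per_s(CX9_GBIT_PER_S)
nvlink_to_nic_ratio = NVLINK6_GBYTE_PER_S / cx9_gbyte_per_s

if __name__ == "__main__":
    print(cx9_gbyte_per_s)      # 200.0 GB/s
    print(nvlink_to_nic_ratio)  # 18.0
```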

Jensen Huang plans to talk about Rubin at the upcoming GPU Technology Conference (GTC) in March, though it remains to be seen what he plans to discuss. Surprisingly, Nvidia also intends to talk about post-Rubin products at GTC. From what Jensen Huang announced this week, it is unclear whether the company plans to reveal details of Rubin Ultra GPUs or of the GPU architecture that will come after the Rubin family.

Speaking of Rubin Ultra, this could indeed be quite a breakthrough product. It is projected to come with 12 stacks of HBM4E in 2027 once Nvidia learns how to efficiently use 5.5-reticle-size CoWoS interposers and 100mm × 100mm substrates made by TSMC.
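To put those packaging numbers in perspective, here is a rough area calculation. It assumes the standard lithography reticle field of 26mm × 33mm (858 mm²), which the article does not state, and the helper name is hypothetical.

```python
# Rough area estimate for the quoted interposer and substrate sizes (illustrative only).
# Assumption: one reticle field is 26 mm x 33 mm; the article does not give this value.
RETICLE_AREA_MM2 = 26 * 33  # 858 mm^2


def interposer_area_mm2(reticles: float) -> float:
    """Interposer area in mm^2 for a given multiple of the reticle field."""
    return reticles * RETICLE_AREA_MM2


substrate_area_mm2 = 100 * 100  # 100 mm x 100 mm substrate from the article

if __name__ == "__main__":
    area = interposer_area_mm2(5.5)
    print(area)                       # 4719.0 mm^2
    print(area / substrate_area_mm2)  # fraction of the substrate covered
```

Under that assumption, a 5.5-reticle interposer covers roughly 4,700 mm², a little under half of the 10,000 mm² substrate.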


"Come to GTC and I will [tell you about] Blackwell Ultra," said Huang. "There are Rubin, and then [we will] show you what is one click after that. Really, really exciting new product."

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


bit_user

I wonder when they're going to begin moving away from CUDA. I think it really is holding them back, when most others have already moved on to dataflow architectures. I liken Nvidia's current lead to how Intel's lead from around 10-15 years ago was primarily based on better manufacturing tech.

CUDA was once Nvidia's biggest advantage, but now it's turning into their greatest liability. They know it, too. Just look at their edge SoCs, which pack most of their inferencing horsepower in the NVDLA engines, not the iGPUs.

bit_user

George³ said: HBM4E, hmm, why Nvidia have not plans to use 16 stacks?

Just a few guesses:

Perhaps the timeframe for availability of the 12-high capable dies is sooner?

Heat, given that it's going to be stacked atop logic dies. Heat was also an issue for them in B200: https://www.tomshardware.com/tech-industry/artificial-intelligence/nvidia-partners-indirectly-confirms-blackwell-b200-gpu-delay-offer-interested-parties-liquid-cooled-h200-instead

Maybe higher stacks require more vias, making it less cost- & area-efficient?

Perhaps the optimal compute vs. data ratio favors slightly smaller stacks?

Or, perhaps something to do with concerns about yield, the more dies you stack. Yield seems to have been an ongoing area of struggle for the B200.

TCA_ChinChin

bit_user said: I wonder when they're going to begin moving away from CUDA. I think it really is holding them back, when most others have already moved on to dataflow architectures. I liken Nvidia's current lead to how Intel's lead from around 10-15 years ago was primarily based on better manufacturing tech. CUDA was once Nvidia's biggest advantage, but now it's turning into their greatest liability. They know it, too. Just look at their edge SoCs, which pack most of their inferencing horsepower in the NVDLA engines, not the iGPUs.

What do you mean? The current industry seems entrenched in CUDA, and even if there are cases where different architectures might have advantages, I can't see CUDA going away or even hindering Nvidia's position much in the future. The only competitors I'm aware of, like ROCm and UXL, aren't really very competitive.

Nvidia can certainly fumble like Intel has done recently, but I wouldn't say CUDA is a liability now, just like 15 years ago I never thought Intel would be in their current position.

valthuer

AI/Datacenters is where real money is made for Nvidia. I wonder when they're gonna stop making consumer GPUs.

bit_user

TCA_ChinChin said: What do you mean?

CUDA is too general and depends on SMT to achieve good utilization. SMT isn't the most efficient, in terms of energy or silicon area. Dedicated NPUs don't work this way - they use local memory, DSP cores, and rely on DMAs to stream data in and out of local memory. Nvidia has been successful in spite of CUDA's inefficiency, but they can't keep their lead forever if they don't switch over to a better programming model for the problem.

They did succeed in tricking AMD into following them with HIP, rather than potentially leap-frogging them. With oneAPI, I think Intel is also basically following the approach Nvidia took with CUDA. As long as they keep thinking they're going to beat Nvidia at its own game, they deserve to keep losing. AMD should've bought Tenstorrent or Cerebras, but now they're probably too expensive.

At least AMD and Intel both have sensible inferencing hardware. Lisa Su should pay attention to the team at Xilinx that designed what they now call XDNA - I hope that's what UDNA is going to be.

TCA_ChinChin said: The current industry seems entrenched in CUDA and even if there are cases where different architectures might have advantages, I can't see CUDA moving away or even hindering Nvidia's position much in the future.

I already gave you an example where even Nvidia clearly sees the light. Just look at their NVDLA engines, which are now already on their second generation. Those aren't programmable using CUDA.

Just sit back and wait. I wonder if Rubin is going to be their first post-CUDA training architecture.

hannibal

valthuer said: AI/Datacenters, is where real money is made for Nvidia. I wonder when they 're gonna stop making consumer GPUs.

They'll just start making gaming GPUs on older nodes that are not needed for AI chips!

$2000 to $4000 for a gaming GPU is still profit, even if it is tiny compared to AI stuff. So don't worry! There will be overpriced gaming GPUs also in the future!

;)

valthuer

hannibal said: There will be overpriced gaming GPUs also in the future! ;)

Thank God! :ROFLMAO:

bit_user

hannibal said: They just start making gaming gpus by using older nodes that are not needed for AI chips!

RTX 5090 is made on a TSMC N4-class node, which is the same as they're using for AI training GPUs. The RTX 5090's die can't get much bigger. So, the only way they could do a 3rd generation on this family of nodes is by going multi-die, which Intel and AMD have dabbled with (and Apple successfully executed), but Nvidia has steadfastly avoided. What I've heard about the multi-die approach is that the amount of global data movement makes this inefficient for rendering. So, I actually doubt they'll go in that direction, at least not yet.

My prediction is that they'll use a 3 nm-class node for their next client GPU. They're already set to use an N3 node for Rubin, later this year.

hannibal said: $2000 to $ 4000 for gaming GPU is still profit even if it is tiny compared to AI stuff. So don't worry! There will be overpriced gaming GPUs also in the future!

Yeah, I think the main risk that AI poses to their gaming products is simply that it tends to divert resources and focus. That's probably behind some of the many problems that have so far affected the RTX 5000 launch.

Nvidia does seem to keep doing research on things like neural rendering, so that clearly shows they're not leaving graphics any time soon. It's more that they're focusing on the intersections between AI and graphics, which is certainly better than nothing.

TCA_ChinChin

bit_user said: I already gave you an example where even Nvidia clearly sees the light. Just look at their NVDLA engines, which are now already on their second generation. Those aren't programmable using CUDA. Just sit back and wait. I wonder if Rubin is going to be their first post-CUDA training architecture.

I can see Nvidia introducing new architectures in the future and shifting away from CUDA eventually, but I can't see Nvidia's dominant position being challenged. My point is:

"Nvidia can certainly fumble like Intel has done recently but I wouldn't say CUDA is a liability now, just like 15 years ago I never thought Intel would be in their current position."

Despite all the drawbacks you've pointed out, they're clearly still on top of their game. CUDA has technical downsides, but it certainly doesn't have significant financial downsides for Nvidia, at least not yet. I'm not holding my breath for Rubin; maybe afterwards.