AMD A10-7800 APU Review: Kaveri Hits the Efficiency Sweet Spot

AMD recently introduced another model in its A-series APU family, the A10-7800. While we already know a lot about the Kaveri architecture, this particular chip's power profile makes it more interesting than the performance-oriented incarnations.

Results: OpenCL and HSA

While we don’t want to rely on synthetic benchmarks, we'll use a couple to illustrate the potential gains of OpenCL and AMD's HSA initiative as those efforts start to take hold.

LuxMark 2.0

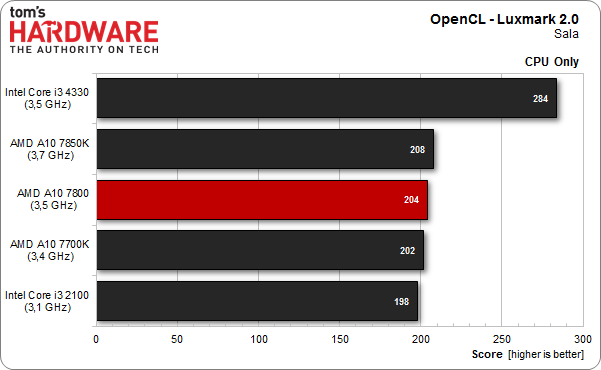

To assess the A10-7800's capabilities, we run three separate tests: CPU-only, GPU-only, and both combined. While we might have expected the more resource-laden APUs to win, Intel's Core i3-4330, with its superior IPC, still puts in a strong showing. Let’s start with just the CPU:

We naturally expect the Haswell design to fare well in a CPU-only measure of performance. But while the win is unsurprising, the magnitude of Intel's advantage is fairly overwhelming. Favor shifts to AMD when only the on-chip graphics engine is used:

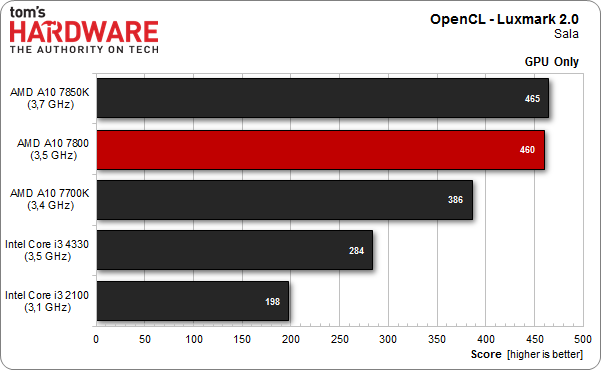

Intel's HD Graphics implementation is outclassed by Graphics Core Next in the Kaveri design. But what happens when CPU and GPU resources are utilized simultaneously?

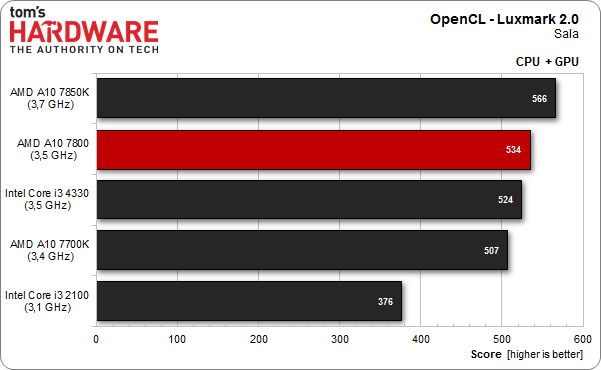

Just as Bill Murray likes to photobomb pictures, the Core i3-4330 sneaks right into the middle of AMD’s family photo. One of the reasons appears to be that the Core i3's CPU and GPU scores are added together, yielding an aggregate, while the APUs are weighted differently. Perhaps one on-chip complex or the other isn't running at peak performance under a combined full load.
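To make the "added together" observation concrete, here is a minimal sketch that expresses a combined score as a fraction of the CPU-only plus GPU-only scores. The function name `combined_scaling` and every number below are hypothetical placeholders for illustration; they are not read from the charts in this review.

```python
def combined_scaling(cpu_score: float, gpu_score: float, combined_score: float) -> float:
    """Return the combined score as a fraction of (CPU-only + GPU-only)."""
    additive = cpu_score + gpu_score
    if additive <= 0:
        raise ValueError("CPU-only and GPU-only scores must sum to a positive value")
    return combined_score / additive


# Hypothetical scores (cpu_only, gpu_only, combined), NOT taken from the charts.
examples = {
    "chip_a": (200.0, 150.0, 350.0),  # combined equals the sum of both runs
    "chip_b": (120.0, 400.0, 416.0),  # combined falls short of the sum
}

for name, (cpu, gpu, both) in examples.items():
    print(f"{name}: {combined_scaling(cpu, gpu, both):.0%} of the additive score")
# chip_a: 100% of the additive score
# chip_b: 80% of the additive score
```

A value close to 100% would match the additive behavior described for the Core i3, while a noticeably lower value would be consistent with one complex or the other not running at peak speed under combined load.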

HSA: A Great Idea in Need of Software Support

Leading up to the Kaveri APU introduction, AMD put a lot of effort into evangelizing the benefits of its HSA (Heterogeneous System Architecture) initiative. Again, if you want to know more, read our Kaveri launch article. However, even now, several months later, the number of applications exploiting HSA remains small. That's a disappointing state of affairs, since the fundamentals of HSA are enticing.

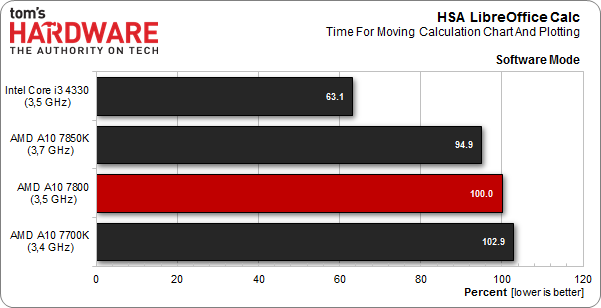

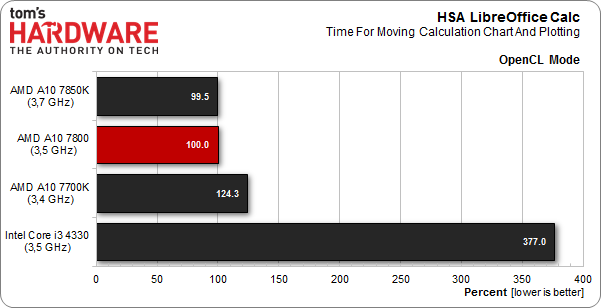

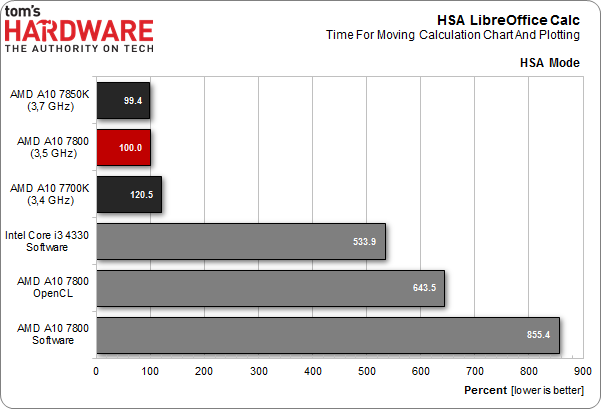

Moving on from theory to practice, the LibreOffice benchmark originally provided by AMD impressively documents the value proposition of HSA. Once again, we conduct three separate test runs: CPU-only, OpenCL-only, and HSA. Since Intel CPUs do not support HSA, they aren't represented in the third chart. And we didn’t bother benchmarking the outdated Core i3-2100 at all.


Let’s start with the CPU-only chart, which, as we might expect, the Core i3-4330 dominates:

Thirty-seven percent higher performance. Such an advantage cries out for an OpenCL-based rematch. Said and done. Surprisingly, Intel’s relatively modest graphics engine is slower than its CPU. Or perhaps the company's drivers are to blame.
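For readers who want to reproduce a figure like the 37 percent above from a chart of execution times, the following sketch shows the arithmetic. It assumes the percentage is the ratio of the two run times, which the text does not state explicitly, and the times used here are hypothetical values chosen only to produce a round result.

```python
def percent_faster(time_slow_s: float, time_fast_s: float) -> float:
    """Performance advantage of the faster run, in percent (lower time is better)."""
    return (time_slow_s / time_fast_s - 1.0) * 100.0


def percent_time_saved(time_slow_s: float, time_fast_s: float) -> float:
    """Reduction in execution time of the faster run, in percent."""
    return (1.0 - time_fast_s / time_slow_s) * 100.0


# Hypothetical execution times in seconds, NOT taken from the chart.
slow, fast = 13.7, 10.0

print(f"{percent_faster(slow, fast):.0f}% higher performance")   # 37% higher performance
print(f"{percent_time_saved(slow, fast):.0f}% less time needed")  # 27% less time needed
```

The two numbers differ because one is measured against the faster run and the other against the slower one, so a 37 percent performance lead corresponds to roughly 27 percent less time.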

It'd be easy to guess that a vendor-supplied test like this one would favor AMD's hardware. And it does, demonstrating what a highly parallelized workload can do on hardware with the necessary support. Those gray bars are for comparison only. They depict the execution times of Intel's Core i3-4330 and AMD's A10-7800 in software and OpenCL modes.

Looking at the HSA-based results after the numbers generated with OpenCL turned on reminds us of a quote attributed to General LeMay. When asked about the purpose of a nuclear second strike capability, he replied, "To make the cinders dance." However, not every application is suitable for HSA optimizations, and adoption of the initiative by the software industry is thus far disappointing.

Igor Wallossek wrote a wide variety of hardware articles for Tom's Hardware, with a strong focus on technical analysis and in-depth reviews. His contributions have spanned a broad spectrum of PC components, including GPUs, CPUs, workstations, and PC builds. His insightful articles provide readers with detailed knowledge to make informed decisions in the ever-evolving tech landscape.

blackmagnum: Just to wonder if Microsoft or Sony were to put this chip in their next gaming consoles and give those gamers a fighting chance.

tiger15: You are stressing power efficiency.

What about comparing those numbers with other offerings? (Intel?)

Memnarchon, replying to blackmagnum:

Maybe the new consoles lack CPU power (even if they are 8 core, the 1,6Ghz/1,75Ghz cripples them), their GPU part is far more powerful than existing APUs.

PS4's GPU has cores like 7870 and XBOX1 has cores like 7790, in other words more powerful than the 512 core R7 which exists in today's best APU A10-7850K.

Cryio: Wait. You can now CrossFire A10 7850 with GPUs other than the 240 and 250X?

I have a friend with a 7850K and a 260X and he's dying to know if he can CrossFire.

"I see no point in buying a processor that emphasizes on-die graphics and then adding a Radeon R7 265X. Yes, AMD officially recommends it and yes, we tried it out." Can I take this as a yes?

gadgety: The A8-7600 seems to be the efficiency sweet spot in the Kaveri line up, specially at 45W. Trying to compare the A10-7800 with the A8-7600, although as far as I can tell just about ALL your tests seem to be done at different settings (e.g. BioShock Infinity is run at Medium Quality Presets rather than the lowest settings as in the test of the A10-7800) so the comparison isn't straightforward. A8-7600 is within 91-94% of the A10-7850K. One item which is comparable is video encoding in Handbrake, where the A8-7600 is at 92.8% of the 7850k, whereas the A10-7800 is at 95.7% of the 7850k. Price wise you'd pay a 63% premium for the A10-7800 over the A8-7600 to get an extremely minute performance advantage, around 3% or so.

Drejeck, replying to Memnarchon:

Not accurate.

PS4 GPU is a crippled and downclocked 7850 (disabled cores enhance redundancy and less dead chips)

XB1 GPU is a crippled and downclocked R7 260X (as above) and like the 7790 should have AMD True Audio onboard, but they could have changed that. This actually means that CPU intensive and low resolution games are going to suck because the 8 cores are just Jaguar netbook processors.

The reality is that PS4 is almost cpu limited already and the XB1 is more balanced. Now that we've finished speaking of "sufficient" platforms let's talk about the fact that a CPU from AMD and the word efficient are in the same phrase.

Memnarchon:

Drejeck said: Not accurate. PS4 GPU is a crippled and downclocked 7850 (disabled cores enhance redundancy and less dead chips)

I think you need to do a little more research since: Reverse engineered PS4 APU reveals the console’s real CPU and GPU specs. "Die size on the chip is 328 mm sq, and the GPU actually contains 20 compute units — not the 18 that are specified. This is likely a yield-boosting measure, but it also means AMD implemented a full HD 7870 in silicon."

Drejeck said: XB1 GPU is a crippled and downclocked R7 260X (as above) and like the 7790 should have AMD True Audio onboard, but they could have changed that. This actually means that CPU intensive and low resolution games are going to suck because the 8 cores are just Jaguar netbook processors. The reality is that PS4 is almost cpu limited already and the XB1 is more balanced. Now that we've finished speaking of "sufficient" platforms let's talk about the fact that a CPU from AMD and the word efficient are in the same phrase.

The PS4 will be CPU limited? Since they write the code/API according to a hardware that it will remain the same for like 7-8 years, such thing as CPU limited especially for a console that runs the majority of games at 1080p, does not exist...

ps: I agree with the downclocked part since they need to save as much power as they can...

blubbey, replying to Drejeck:

PS4 is 1152:72:32 at 800MHz, 7850 is 1024:64:32 @ 900MHz or so (860MHz release?) It is not a "crippled 7850", the 7850 is a crippled pitcairn (20 CUs is the full fat 7870, PS4 has 18, 7850 16 CUs). "CPU limited" is very PC orientated thinking, things like offloading compute to the GPU will help. No, I'm not saying their CPUs are "good" but they will find ways of offloading that work onto the GPU.

silverblue, replying to gadgety:

Yes, but the A8-7600 has a 384-shader GPU. I suppose it depends on whether you want to use the GPU or not.