DeepSeek's AI breakthrough bypasses industry-standard CUDA for some functions, uses Nvidia's assembly-like PTX programming instead

Dramatic optimizations do not come easy.

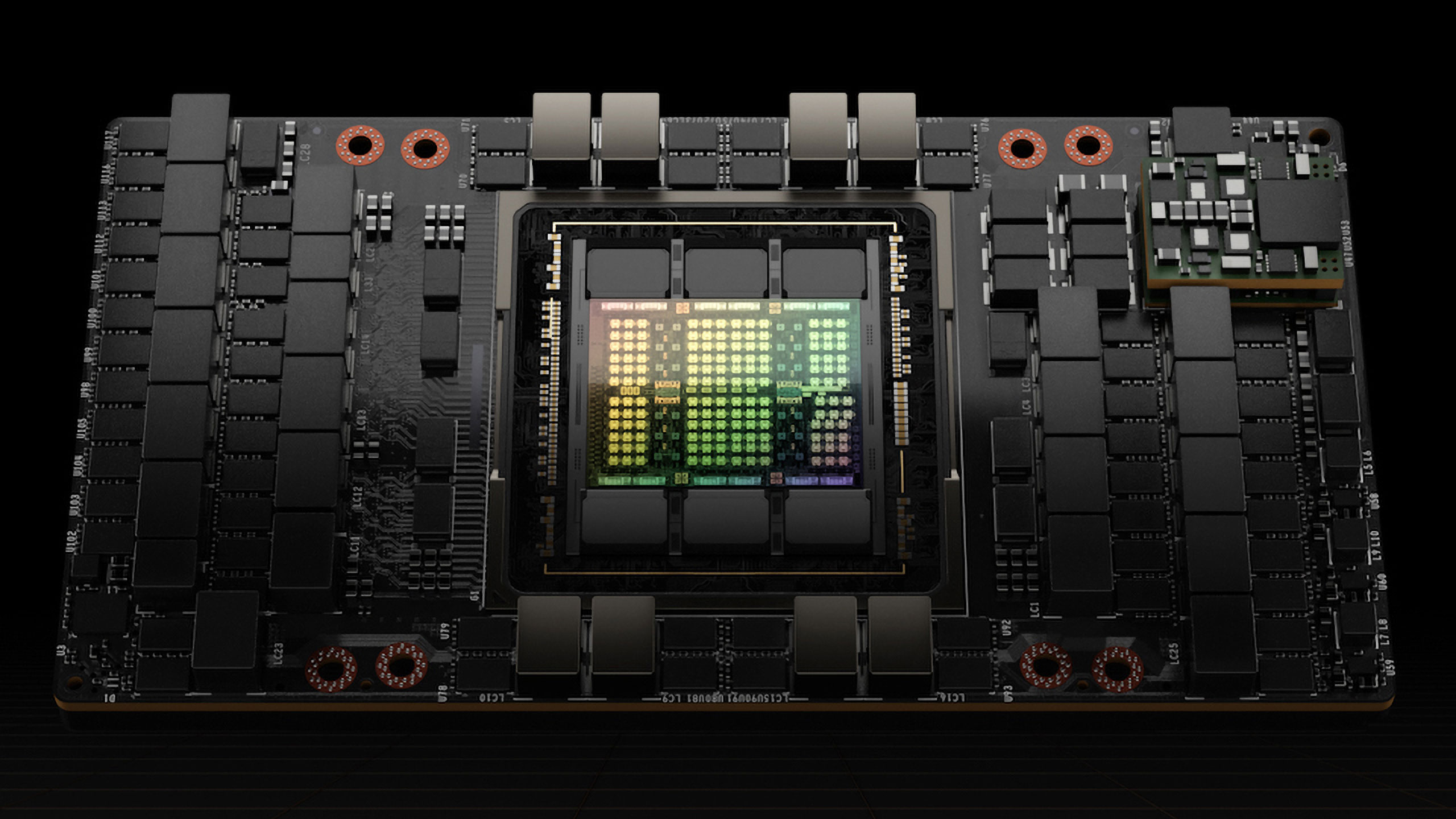

DeepSeek made quite a splash in the AI industry by training its 671-billion-parameter Mixture-of-Experts (MoE) language model on a cluster of 2,048 Nvidia H800 GPUs in about two months, showing 10X higher efficiency than AI industry leaders like Meta. The breakthrough was achieved by implementing a large number of fine-grained optimizations and by using Nvidia's assembly-like PTX (Parallel Thread Execution) programming instead of Nvidia's CUDA for some functions, according to an analysis from Mirae Asset Securities Korea cited by @Jukanlosreve.

Today: OpenAI boss Sam Altman calls DeepSeek 'impressive.' In 2023 he called competing nearly impossible.

Jan. 28, 2025: Investors panic: Nvidia stock loses $589B in value.

Dec. 27, 2024: DeepSeek is unveiled to the world.

PTX (Parallel Thread Execution) is an intermediate instruction set architecture designed by Nvidia for its GPUs. PTX sits between higher-level GPU programming languages (like CUDA C/C++ or other language frontends) and the low-level machine code (Streaming Assembler, or SASS). PTX is a close-to-metal ISA that exposes the GPU as a data-parallel computing device and, therefore, allows fine-grained optimizations, such as register allocation and thread/warp-level adjustments, something that CUDA C/C++ and other languages cannot enable. Once PTX is translated into SASS, the resulting code is optimized for a specific generation of Nvidia GPUs.
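To make the layering concrete, here is a minimal sketch of PTX embedded in an ordinary CUDA C++ kernel through inline assembly. It is not DeepSeek's code, and the names `lane_id`, `ptx_add`, `demo_kernel`, and `run_demo` are hypothetical helpers made up for this illustration. The first helper reads the PTX special register `%laneid`, which has no built-in CUDA C++ variable; the second issues a single `add.s32` instruction explicitly.

```cuda
#include <cstdio>
#include <vector>
#include <cuda_runtime.h>

// Read the calling thread's lane index within its warp from the PTX
// special register %laneid (hypothetical helper, for illustration only).
__device__ unsigned lane_id() {
    unsigned lane;
    asm volatile("mov.u32 %0, %%laneid;" : "=r"(lane));
    return lane;
}

// Add two 32-bit integers with an explicit PTX instruction instead of '+'.
__device__ int ptx_add(int a, int b) {
    int out;
    asm("add.s32 %0, %1, %2;" : "=r"(out) : "r"(a), "r"(b));
    return out;
}

// Each thread stores its lane index and the value i + 100.
__global__ void demo_kernel(unsigned *lanes, int *sums, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) {
        lanes[i] = lane_id();
        sums[i] = ptx_add(i, 100);
    }
}

// Host helper (hypothetical): launch the kernel and copy the results back.
bool run_demo(int n, std::vector<unsigned> &lanes, std::vector<int> &sums) {
    unsigned *d_lanes = nullptr;
    int *d_sums = nullptr;
    if (cudaMalloc(&d_lanes, n * sizeof(unsigned)) != cudaSuccess) return false;
    if (cudaMalloc(&d_sums, n * sizeof(int)) != cudaSuccess) {
        cudaFree(d_lanes);
        return false;
    }
    const int threads = 64;  // one-dimensional blocks of two warps each
    demo_kernel<<<(n + threads - 1) / threads, threads>>>(d_lanes, d_sums, n);
    lanes.resize(n);
    sums.resize(n);
    bool ok =
        cudaMemcpy(lanes.data(), d_lanes, n * sizeof(unsigned),
                   cudaMemcpyDeviceToHost) == cudaSuccess &&
        cudaMemcpy(sums.data(), d_sums, n * sizeof(int),
                   cudaMemcpyDeviceToHost) == cudaSuccess;
    cudaFree(d_lanes);
    cudaFree(d_sums);
    return ok;
}

int main() {
    std::vector<unsigned> lanes;
    std::vector<int> sums;
    if (!run_demo(64, lanes, sums)) {
        std::printf("CUDA call failed (is a GPU available?)\n");
        return 1;
    }
    std::printf("thread 33: lane %u, sum %d\n", lanes[33], sums[33]);
    return 0;
}
```

Assuming the file is saved as `demo.cu` (an arbitrary name), `nvcc -ptx demo.cu` writes out the PTX that the compiler generates for the whole kernel, and `cuobjdump -sass` on the compiled binary shows the SASS that a particular GPU generation actually executes.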

For example, when training its V3 model, DeepSeek reconfigured Nvidia's H800 GPUs: out of 132 streaming multiprocessors, it allocated 20 for server-to-server communication, possibly for compressing and decompressing data to overcome connectivity limitations of the processor and speed up transactions. To maximize performance, DeepSeek also implemented advanced pipeline algorithms, possibly by making extra fine thread/warp-level adjustments.
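One way to picture the 20-of-132 split is as role specialization inside a single kernel launch, where a fixed number of thread blocks take a communication role and the rest compute. The sketch below is only an assumption about the general pattern, not DeepSeek's implementation, which the analysis does not describe in detail. The names `kTotalBlocks`, `kCommBlocks`, `specialized_kernel`, and `count_roles` are hypothetical. Block indices stand in for SMs here, since the CUDA scheduler decides which SM runs each block.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

constexpr int kTotalBlocks = 132;  // SM count the article quotes for the H800
constexpr int kCommBlocks  = 20;   // share the article says went to communication

// Each block derives its role from its index. Thread 0 of every block
// records that role so the host can verify the split afterwards.
__global__ void specialized_kernel(int *role_counts) {
    const bool is_comm = blockIdx.x < kCommBlocks;
    if (threadIdx.x == 0) {
        atomicAdd(&role_counts[is_comm ? 0 : 1], 1);
    }
    // A real kernel would branch here: communication blocks would move data
    // between buffers while compute blocks run the math. Both are omitted
    // because the source gives no details about them.
}

// Host helper (hypothetical): launch the kernel and read back the counts.
bool count_roles(int &comm, int &compute) {
    int *d_counts = nullptr;
    if (cudaMalloc(&d_counts, 2 * sizeof(int)) != cudaSuccess) return false;
    if (cudaMemset(d_counts, 0, 2 * sizeof(int)) != cudaSuccess) {
        cudaFree(d_counts);
        return false;
    }
    specialized_kernel<<<kTotalBlocks, 128>>>(d_counts);
    int h_counts[2] = {0, 0};
    bool ok = cudaMemcpy(h_counts, d_counts, sizeof(h_counts),
                         cudaMemcpyDeviceToHost) == cudaSuccess;
    cudaFree(d_counts);
    comm = h_counts[0];
    compute = h_counts[1];
    return ok;
}

int main() {
    int comm = 0, compute = 0;
    if (!count_roles(comm, compute)) {
        std::printf("CUDA call failed (is a GPU available?)\n");
        return 1;
    }
    std::printf("communication blocks: %d, compute blocks: %d\n", comm, compute);
    return 0;
}
```

With these numbers, 112 of the 132 blocks remain for computation, so roughly 15% of the chip's multiprocessors would be given over to moving data rather than doing math.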

These modifications go far beyond standard CUDA-level development, but they are notoriously difficult to maintain. Therefore, this level of optimization reflects the exceptional skill of DeepSeek's engineers. The global GPU shortage, amplified by U.S. restrictions, has compelled companies like DeepSeek to adopt innovative solutions, and DeepSeek has made a breakthrough. However, it is unclear how much money DeepSeek had to invest in development to achieve its results.

The breakthrough disrupted the market as some investors believed that the need for high-performance hardware for new AI models would decline, hurting the sales of companies like Nvidia. Industry veterans, such as Pat Gelsinger, ex-chief executive of Intel, believe that applications like AI can take advantage of all the computing power they can access. As for DeepSeek's breakthrough, Gelsinger sees it as a way to add AI to a broad set of inexpensive devices in the mass market.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


Gururu Have to give this one to the brilliant, resourceful and hard-working engineers over there. Is it always going to be high maintenance, even sustainable?

DS426 Even if it's difficult to maintain and implement, it's clearly worth it when talking about a 10x efficiency gain; imagine a $10 Bn datacenter only costing let's say $2 Bn (still accounting for non-GPU related costs) at the same AI training performance level. I believe we do need to focus more on optimizations than outright XPU compute performance, whether it's going a similar route as DeepSeek or other alternatives. I'd say this might also drive some changes to CUDA as NVIDIA obviously isn't going to like these headlines and what, $500B of market cap erased in a matter of hours?

Broadly speaking, China seems to be impeccable at reverse engineering and then iterating over others, all at savings to both cost and time-to-market.

JamesJones44 I don't think it will hurt sales, even at 10x faster it still took 2 months if I read that right. Companies are likely to invest in hardware until that time becomes significantly less than 2 months.

Gaidax There is a ton of low-hanging optimization fruit in this whole area that is still left to pick for sure.

But in the end the industrial AI requirements are not going anywhere.

vanadiel007 It's scary to see AI being added to everything you use. Especially since it's not intelligent.

Itsahobby Excellent article! Driving down the cost of AI is good for everybody. There will be more demand for Nvidia chips.

anoldnewb

Gaidax said: Why?

People should be concerned about rampant AI proliferation without adequate safeguards because it is very prone to hallucinations. How can you be certain the output is reliable?

People should have reason to be concerned where AI failure can harm people; for example, driving a semitruck at 70 MPH, automating air traffic control, flying airplanes, writing code for applications where failure can hurt people.

abufrejoval Well, I guess there is a correlation between the cost per engineer and the cost of AI training, and you can only wonder who will do the next round of brilliant engineering.

But here is what DeepSeek R1 had to say on my local GPU, using lmstudio-community/DeepSeek-R1-Distill-Qwen-7B-GGUF via LM Studio, when asked about Marie Antoinette and who her mother was:

Marie Antoinette did not have a traditional mother in history; she was raised by her stepfather, Louis XVIII, who became her legal father after her mother's death from an affair with her biological father. Later, after her father's issues led to political exile and instability, Marie was taken in by Charles X of France as his ward. Thus, she never had a real biological mother but was instead reared by these two influential figures in her life.

Now few things are as certain as the need for a biological mother, unless you're at plankton level, so that's an interesting claim. And Louis XVIII and Charles X were actually younger brothers of her husband Louis XVI, who lost his head just like she did, while her biological mother was Maria Theresa, empress of the Holy Roman empire and rather better known than her daughter.

About herself, DeepSeek had to say:

Marie Antoinette was a member of the Jacobin Club, which supported the monarchy during the revolution. Her reign as the King's girlfriend put her into a position of power within the political arena, but it ultimately led to her downfall. After her execution, she was exiled and died in seclusion under mysterious circumstances.

Who did die in seclusion under mysterious circumstances while still a boy was actually her son, to whom her in-law Louis XVIII posthumously awarded the number XVII before he was crowned as the eighteenth Louis of France.

You can see how it has facts and figures as related, but as to their nature... and what it concludes from them...

I have no idea why people put so much faith into these AI models, except as a source for entertainment.

Gaidax

anoldnewb said: People should be concerned about rampant AI proliferation without adequate safeguards because it is very prone to hallucinations. How can you be certain the output is reliable? People should have reason to be concerned where AI failure can harm people; for example, driving a semitruck at 70 MPH, automating air traffic control, flying airplanes, writing code for applications where failure can hurt people.

So is the Internet. And heck it's FAR wilder at that too.

Will you have some dumb answers from AI? Yes, but so will happen with your average Joe getting advice to drink bleach from his social media circle to cure a certain viral infection.

Compared to nonsense you can read on the Internet from the "experts", AI is already far more curated and correct, and it will only get better, even if once in a while it will still fudge it up.

In the end - the person in front of a display needs at the very least minimal understanding of what this notification means, or heck how Internet works at all.

https://i.gyazo.com/9e0270540be1f3059d1ebaa229904000.png