Four SAS 6 Gb/s RAID Controllers, Benchmarked And Reviewed

We got our hands on four SAS 6 Gb/s RAID controllers from Adaptec, Areca, HighPoint, and LSI and ran them through RAID 0, 5, 6, and 10 workloads to test their mettle. Does your system need eight more ports of connectivity? We can answer that!

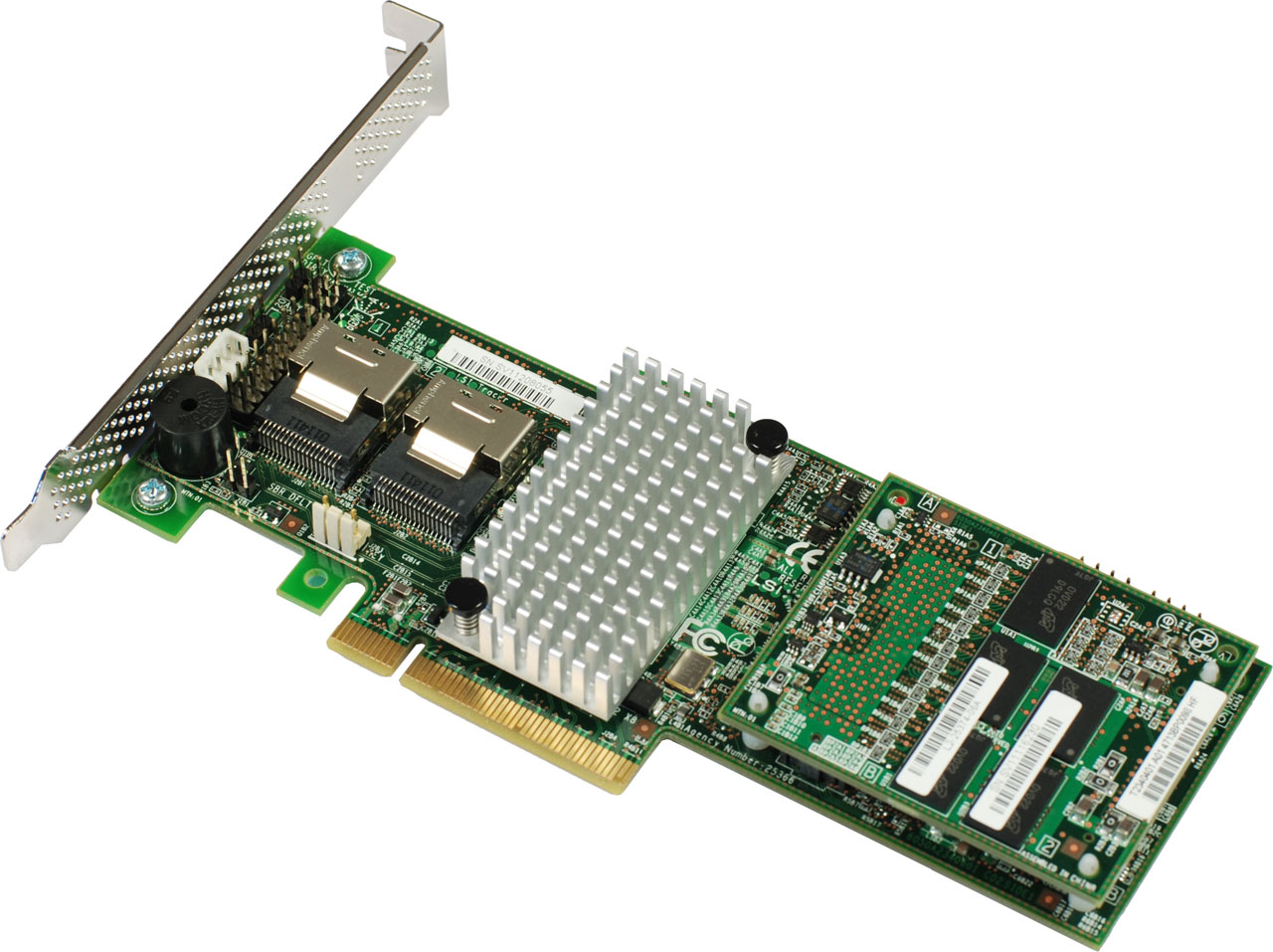

LSI MegaRAID 9265-8i

According to LSI, SMEs (small and medium enterprises) are the target audience for its MegaRAID 9265-8i. The company markets the card as well-suited for cloud, security, and business applications. With a street price of approximately $630, the MegaRAID 9265-8i is the most expensive controller in this test, but as the benchmark results show, you get what you pay for. Before we present the benchmark results, let's discuss this controller's technical features and its optional software add-ons, FastPath and CacheCade.

The LSI MegaRAID 9265-8i is based on LSI's dual-core SAS2208 RAID-on-chip (ROC) and uses an eight-lane (x8) PCIe 2.0 host interface. The -8i suffix in the product name denotes eight internal SATA/SAS ports, each supporting 6 Gb/s. Up to 128 physical storage devices can be connected to the controller via SAS expanders. The low-profile card also features 1 GB of DDR3-1333 cache and supports RAID levels 0, 1, 5, 6, 10, and 60.
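A quick back-of-the-envelope comparison shows how the x8 PCIe 2.0 host link relates to the eight 6 Gb/s ports. This sketch assumes the standard line rates (5 GT/s per PCIe 2.0 lane per direction, 6 Gb/s per SAS 2.0 port), counts only 8b/10b line-coding overhead, and ignores all other protocol overhead; `payload_mb_per_s` is a hypothetical helper written for this illustration.

```python
# Back-of-the-envelope check: can an eight-lane PCIe 2.0 host link keep up
# with eight 6 Gb/s SAS ports? Both links use 8b/10b line coding, so only
# 8 of every 10 transmitted bits carry data.


def payload_mb_per_s(raw_gbit_per_s: float) -> float:
    """Convert a raw 8b/10b line rate (Gb/s) into payload throughput (MB/s)."""
    mbit = raw_gbit_per_s * 1000      # Gb/s -> Mb/s
    payload_mbit = mbit * 8 / 10      # strip 8b/10b coding overhead
    return payload_mbit / 8           # bits -> bytes


# PCIe 2.0 signals at 5 GT/s per lane, per direction.
pcie_lane = payload_mb_per_s(5.0)
pcie_x8 = 8 * pcie_lane

# SAS 2.0 signals at 6 Gb/s per port.
sas_port = payload_mb_per_s(6.0)
sas_8_ports = 8 * sas_port

print(f"PCIe 2.0 x8 host link: {pcie_x8:.0f} MB/s per direction")
print(f"Eight 6 Gb/s SAS ports: {sas_8_ports:.0f} MB/s combined")
print(f"Host link covers {pcie_x8 / sas_8_ports:.0%} of the drive-side bandwidth")
```

Under these assumptions the host link (about 4 GB/s) sits below the drive-side total (4.8 GB/s), but that only matters if all eight ports stream at full speed at once; the fastest hard-disk result in this review, 1080 MB/s in RAID 0, is well under either limit.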

Tuning Tools, FastPath, and CacheCade

LSI claims that FastPath can dramatically accelerate I/O operations on attached SSDs. According to the company, FastPath works with any flash-based SSD and improves the read/write performance of an SSD-based RAID array by up to 2.5x in writes and up to 2x in reads, reaching 465 000 IOPS. We weren't able to put that claim to the test, though: as delivered, without FastPath installed, this card already had enough horsepower to handle our five-drive SSD array.

The other software option for the MegaRAID 9265-8i is called CacheCade. It lets you use an SSD as a read cache for an array of hard disks. According to LSI, this can accelerate read operations by a factor of up to 50, depending on the size of the data being accessed, the application, and the use case. We tried it out by creating a RAID 5 array of seven hard disks plus one SSD serving as a read cache. Compared with a RAID 5 array of eight hard disks, CacheCade improves not only measured I/O throughput but also perceived performance, and the benefit grows as the data set shrinks. With a 25 GB data set, the CacheCade array achieved 3877 IOPS in the Iometer Web server workload, whereas the hard disk-only array managed just 894 IOPS.
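The article doesn't describe how CacheCade decides which data to keep on the SSD, so the following is a toy model rather than a description of LSI's algorithm: a least-recently-used (LRU) cache of a hypothetical `cache_blocks` capacity in front of the hard disks, fed with uniformly random reads. It illustrates why the benefit grows as the data set shrinks: once the data being read fits on the SSD, nearly every read is a cache hit and never reaches the hard disks. The last line computes the speedup from the two IOPS figures measured above.

```python
import random
from collections import OrderedDict


class LRUReadCache:
    """Toy model of an SSD read cache in front of a hard-disk array.

    Hypothetical illustration: evicts the least recently used block, which is
    an assumption, not necessarily how CacheCade chooses what to keep.
    """

    def __init__(self, capacity_blocks: int):
        self.capacity = capacity_blocks
        self.blocks = OrderedDict()  # block id -> None, oldest first
        self.hits = 0
        self.misses = 0

    def read(self, block: int) -> None:
        if block in self.blocks:
            self.hits += 1                       # served from the SSD
            self.blocks.move_to_end(block)       # mark as most recently used
        else:
            self.misses += 1                     # served from the hard disks
            self.blocks[block] = None            # copy the block onto the SSD
            if len(self.blocks) > self.capacity:
                self.blocks.popitem(last=False)  # evict least recently used

    def hit_ratio(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


def simulate(data_set_blocks: int, cache_blocks: int, reads: int, seed: int = 1) -> float:
    """Issue uniform random reads over the data set; return the cache hit ratio."""
    rng = random.Random(seed)
    cache = LRUReadCache(cache_blocks)
    for _ in range(reads):
        cache.read(rng.randrange(data_set_blocks))
    return cache.hit_ratio()


for data_set in (500, 2_000, 10_000):
    ratio = simulate(data_set, cache_blocks=1_000, reads=50_000)
    print(f"data set {data_set:>6} blocks -> hit ratio {ratio:.2f}")

# Speedup measured in this review: 3877 IOPS with CacheCade vs. 894 without.
print(f"Measured speedup: {3877 / 894:.1f}x")
```

The smallest data set fits entirely in the simulated cache, so almost every read after warm-up is a hit; as the data set grows past the cache size, the hit ratio falls and more reads land on the hard disks.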

Performance

In a nutshell, LSI's MegaRAID 9265-8i is the fastest SAS RAID controller in this round-up with respect to I/O performance. In sequential reads and writes, however, it lands mid-pack overall, because its sequential performance varies greatly with the RAID level. In the RAID 0 hard disk test, we see sequential reads of up to 1080 MB/s, significantly faster than the competitors. Its RAID 0 sequential write result, 927 MB/s, also takes first place. In RAID 5 and 6, however, the LSI controller is beaten by all of the other contenders, only redeeming itself in the RAID 10 benchmarks. In the SSD RAID test, LSI's MegaRAID 9265-8i posts the best sequential write performance (752 MB/s) and is beaten only by the Areca ARC-1880i in sequential reads.
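The swings between RAID levels are easier to read against the textbook limits for each layout. This sketch assumes eight identical drives (an assumption for illustration) and ignores controller overhead; `ideal_raid` and `RaidEstimate` are hypothetical names. It shows how many drives' worth of capacity, and therefore ideal full-stripe sequential write bandwidth, each level leaves for user data. Real controllers fall short of these ceilings; in RAID 5 and 6 the controller must also compute parity for every stripe it writes, which is likely one reason results differ so much between controllers at those levels.

```python
from dataclasses import dataclass

# Minimum drive counts for each layout (RAID 10 also needs an even count).
MIN_DRIVES = {"0": 2, "5": 3, "6": 4, "10": 4}


@dataclass(frozen=True)
class RaidEstimate:
    level: str
    data_drives: int          # drives' worth of capacity holding user data
    guaranteed_failures: int  # drive failures survivable in the worst case


def ideal_raid(level: str, drives: int) -> RaidEstimate:
    """Textbook limits for `drives` identical disks, ignoring controller overhead."""
    if level not in MIN_DRIVES:
        raise ValueError(f"unsupported RAID level: {level}")
    if drives < MIN_DRIVES[level]:
        raise ValueError(f"RAID {level} needs at least {MIN_DRIVES[level]} drives")
    if level == "0":
        return RaidEstimate(level, drives, 0)          # striping, no redundancy
    if level == "5":
        return RaidEstimate(level, drives - 1, 1)      # one parity block per stripe
    if level == "6":
        return RaidEstimate(level, drives - 2, 2)      # two parity blocks per stripe
    if drives % 2:
        raise ValueError("RAID 10 needs an even number of drives")
    return RaidEstimate(level, drives // 2, 1)         # striped mirrored pairs


# In full-stripe sequential writes, only the data drives add user bandwidth,
# so data_drives is also the ideal write speed in single-drive units.
for level in ("0", "5", "6", "10"):
    est = ideal_raid(level, drives=8)
    print(f"RAID {level:>2}: ~{est.data_drives}x single-drive sequential writes, "
          f"survives at least {est.guaranteed_failures} drive failure(s)")
```

Read throughput is left out on purpose: how close RAID 5, 6, and especially RAID 10 (which can read from either mirror) get to their ceilings depends heavily on the controller's implementation.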


If you are looking for an SSD-oriented RAID controller with high I/O performance, the LSI controller is a winner. With rare exceptions, it winds up in first place in our Iometer database, file server, Web server, and workstation workloads. When your RAID array consists of SSDs, LSI's contender is truly unleashed. It utterly outclasses the three other controllers. For example, in the workstation benchmark, the MegaRAID 9265-8i achieves 70 172 IOPS, while the second-place finisher, Areca's ARC-1880i, posts slightly more than half that number, 36 975 IOPS.
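To put "slightly more than half" into numbers, the two workstation results can be compared directly. `relative_performance` is a hypothetical helper for this arithmetic, and the inputs are the IOPS figures quoted above.

```python
def relative_performance(winner_iops: float, runner_up_iops: float) -> tuple[float, float]:
    """Return (winner's speedup, runner-up's share of the winner's IOPS)."""
    return winner_iops / runner_up_iops, runner_up_iops / winner_iops


# Iometer workstation workload on the SSD array, figures from the results above.
speedup, share = relative_performance(70_172, 36_975)
print(f"MegaRAID 9265-8i: {speedup:.2f}x the ARC-1880i's IOPS")
print(f"ARC-1880i: {share:.1%} of the MegaRAID 9265-8i's IOPS")
```

A share just above 50% is what "slightly more than half" means here; put the other way round, the LSI card delivers nearly twice the Areca's IOPS in this workload.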



americanherosandwich Great review! Though I would have liked to see some RAID 1 and RAID 10 benchmarks. You don't usually see RAID 0 on expensive SAS RAID controllers, and you see more RAID 10 configurations than RAID 5.

purrcatian I just sold my HighPoint RocketRAID 2720 because of the terrible drivers. Not only do the drivers add about 60 seconds to the Windows boot, they also cause random BSODs. The support was a joke, and the driver that came on the disc caused the Windows 7 x64 setup to instantly BSOD even though the box had a Windows 7 compatible logo on it. I even RMAed the card and the new one was exactly the same.

dealcorn Very cool, but fast and expensive means it's not home server stuff. For that, try the IBM BR10i, an 8-port PCIe SAS/SATA RAID controller, which is generally available on eBay for $40 with no bracket (I live for danger). You are stuck with 3 Gb/s per port, but if you add $34 for a pair of forward breakout cables, you have 8 SATA ports at a cost of under $10 per port. The card requires a PCIe x8 slot, but if you only give it 4 lanes (the number of lanes offered by our Atom's NM10), it will give each port 1.5 Gb/s. Cheap SAS makes software RAID 6 prudent in a home storage server.

slicedtoad I have pretty much no use for anything other than RAID 0, but it was still an interesting read. I think I prefer this type of article over the longer type with actual benchmarks thrown in (not for GPU or CPU reviews, though).

pxl9190 I only wish this review had come earlier!

I had a hard time deciding between the 9265-8i, the 1880, and the 6805 a month ago. I bought the 6805 and always wondered why RAID 10 was not as fast as I thought it should be. This review confirmed my worries.

I eventually went with RAID 6 on six 1 TB Constellation ES disks. That's where the Adaptec really shines. This is for a photo/video storage and editing array.

Admittedly, if I had the choice again, I would have picked the Areca after seeing the numbers. The Adaptec was the cheapest of them all, so it's not too much of a regret.

Great review! As I am in the process of building a new home file server and always have a habit of going overboard in such situations, I will be referring back to this article many more times before purchasing.

That said, could you please talk more about the performance differences between SATA and SAS? I understand the reliability argument, but I wonder whether, for my purposes, I would be better served by cheaper SATA disks than by SAS disks.

I would also love some guidance on a good hard-drive-only enclosure and power supply. I realize I am quickly priced out of an enterprise solution in this arena, but I have seen at least a couple of cheaper options online, such as the Sans Digital TR8M+B. (This enclosure is normally bundled with a RocketRAID controller, which I would probably discard in favor of either the Adaptec or LSI solution.)

You are missing a huge competitor in this space. ATTO RAID adapters are on par, and I think ATTO is the only other player out there. Why weren't they compared in this review?


Marco925 I bought the HighPoint. For the money, it was incredible value at a little under $120.

stuckintexas I evaluated all but the HighPoint for work. What isn't shown, and would be unrealistic for a home user, is that the LSI destroys the competition when you throw on a SAS expander. With 24 15k SAS drives, the LSI card tops out at 3500 MB/s in RAID 0 sequential writes, while the Areca is