AMD to Fuse FPGA AI Engines Onto EPYC Processors, Arrives in 2023

AMD to arm its chips with FPGAs

AMD announced during its earnings call that it will infuse its CPU portfolio with Xilinx's FPGA-powered AI inference engine, with the first products slated to arrive in 2023. The news shows that AMD is moving swiftly to incorporate the fruits of its $54 billion Xilinx acquisition into its lineup, but it isn't entirely surprising: the company's recent patents indicate it is already well underway with enabling multiple methods of connecting AI accelerators to its processors, including sophisticated 3D chip-stacking tech.

AMD's decision to pair its CPUs with built-in FPGAs in the same package isn't entirely new. Intel tried the same approach with the FPGA portfolio it gained through its $16.7 billion Altera purchase in late 2015. Intel announced the combined CPU+FPGA chip back in 2014 and even demoed a test chip, but the silicon didn't arrive until 2018, and then only in a limited, experimental fashion that apparently came to a dead end. We haven't heard more about Intel's project, or any derivatives of it, for years.

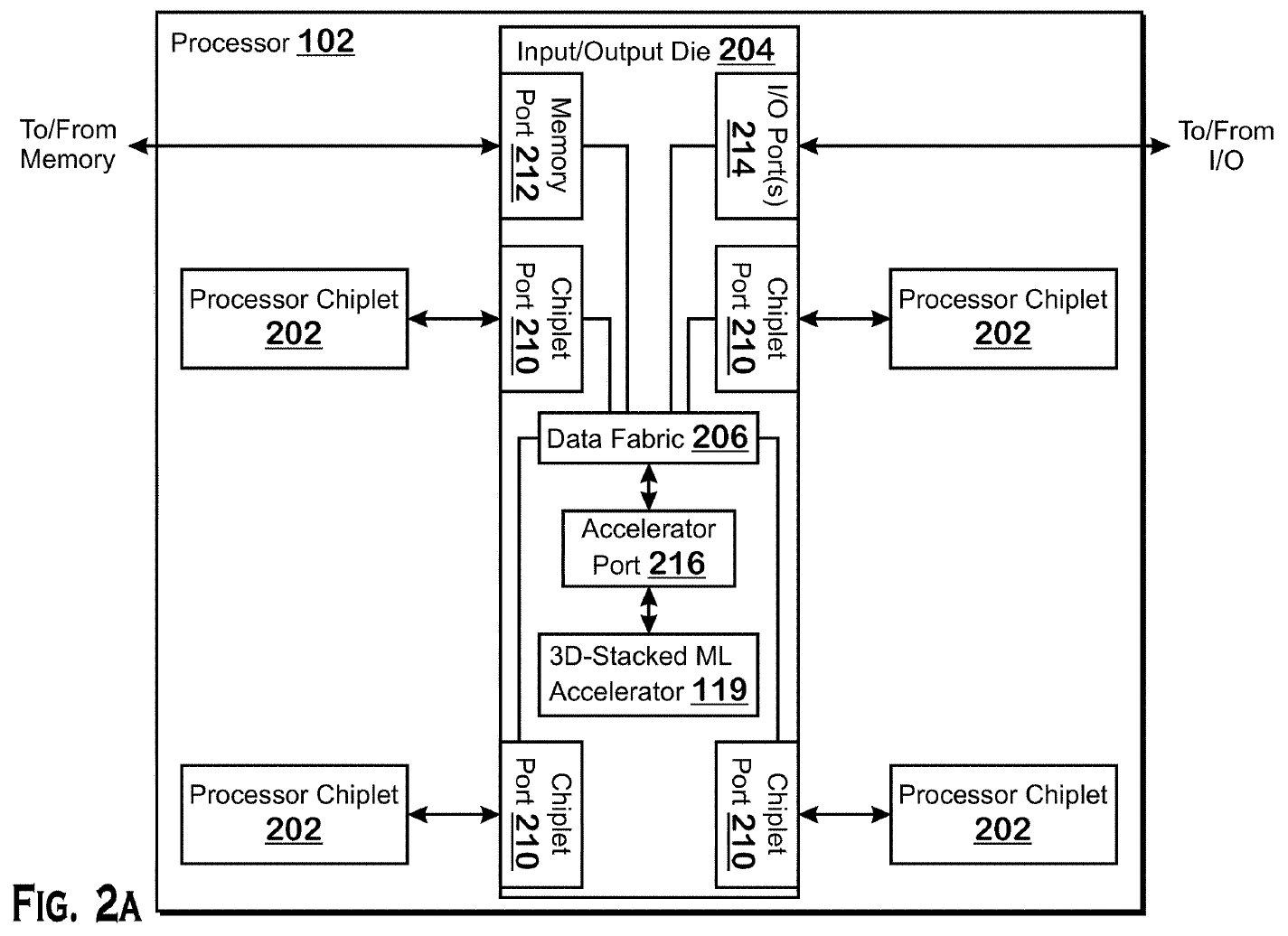

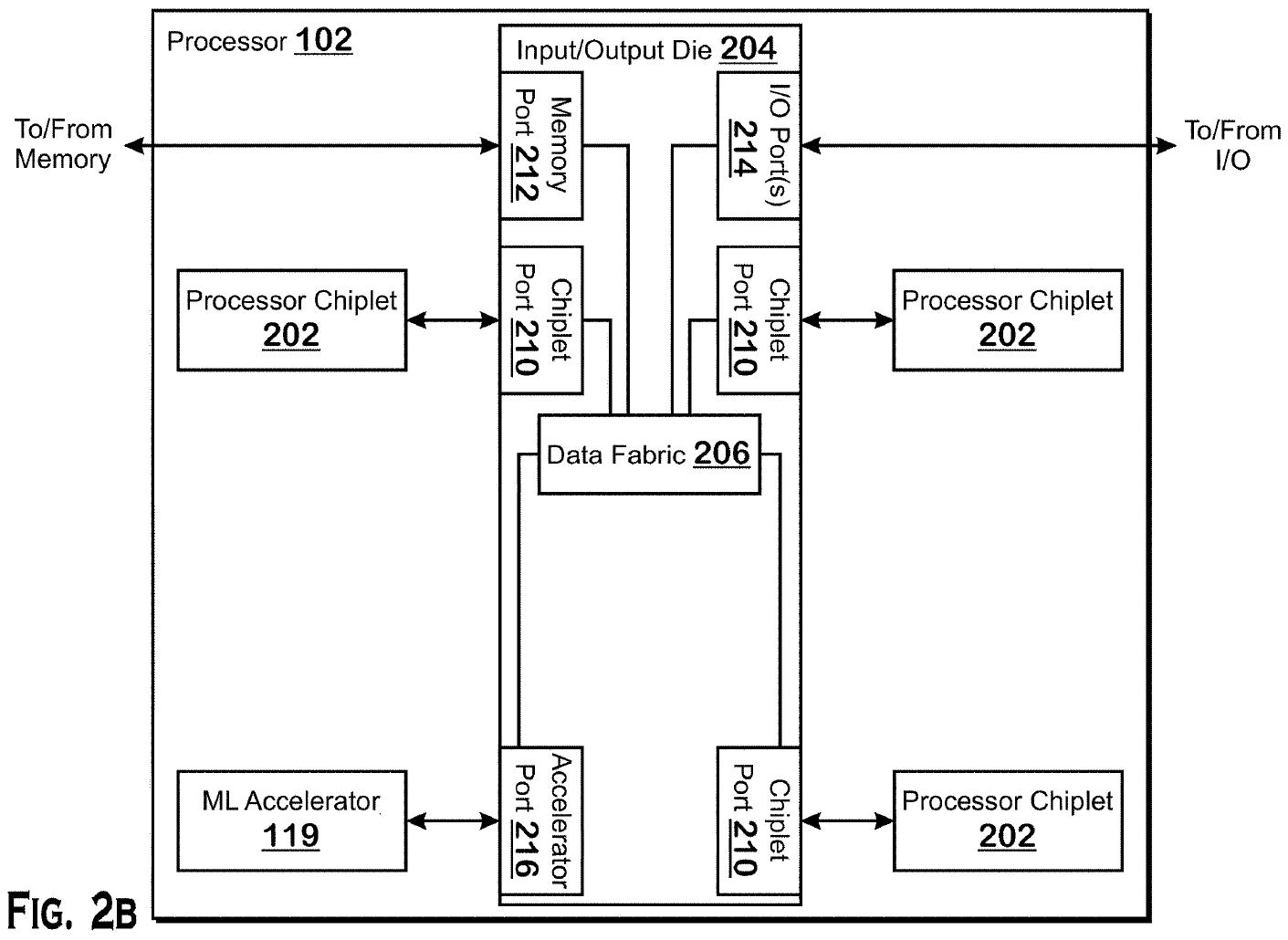

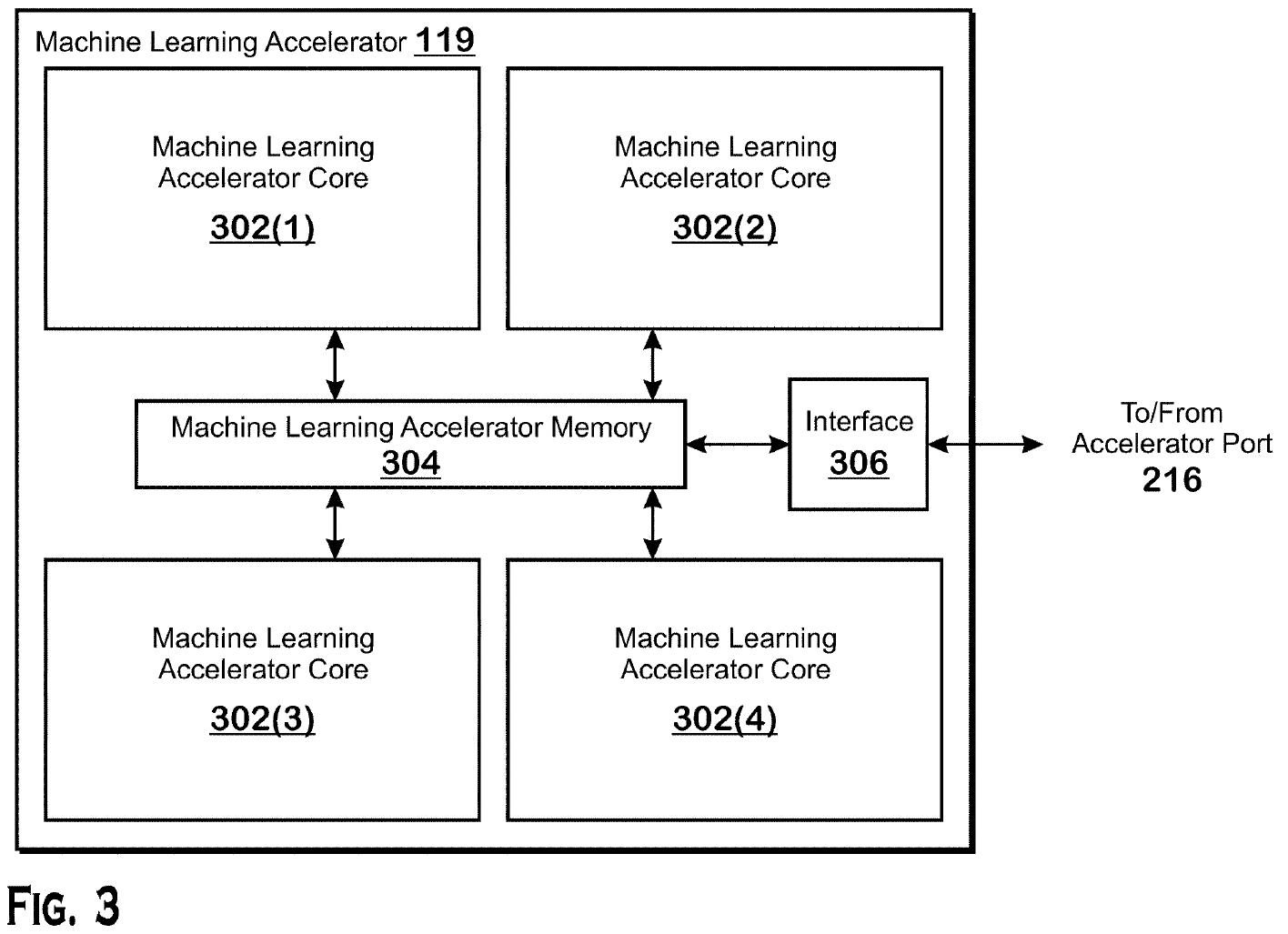

AMD hasn't revealed any specifics of its FPGA-infused products yet, but the company's approach to connecting the Xilinx FPGA silicon to its chip will likely be quite a bit more sophisticated. While Intel leveraged standard PCIe lanes and its QPI interconnect to connect its FPGA chip to the CPU, AMD's recent patents indicate that it is working on an accelerator port that would accommodate multiple packaging options.

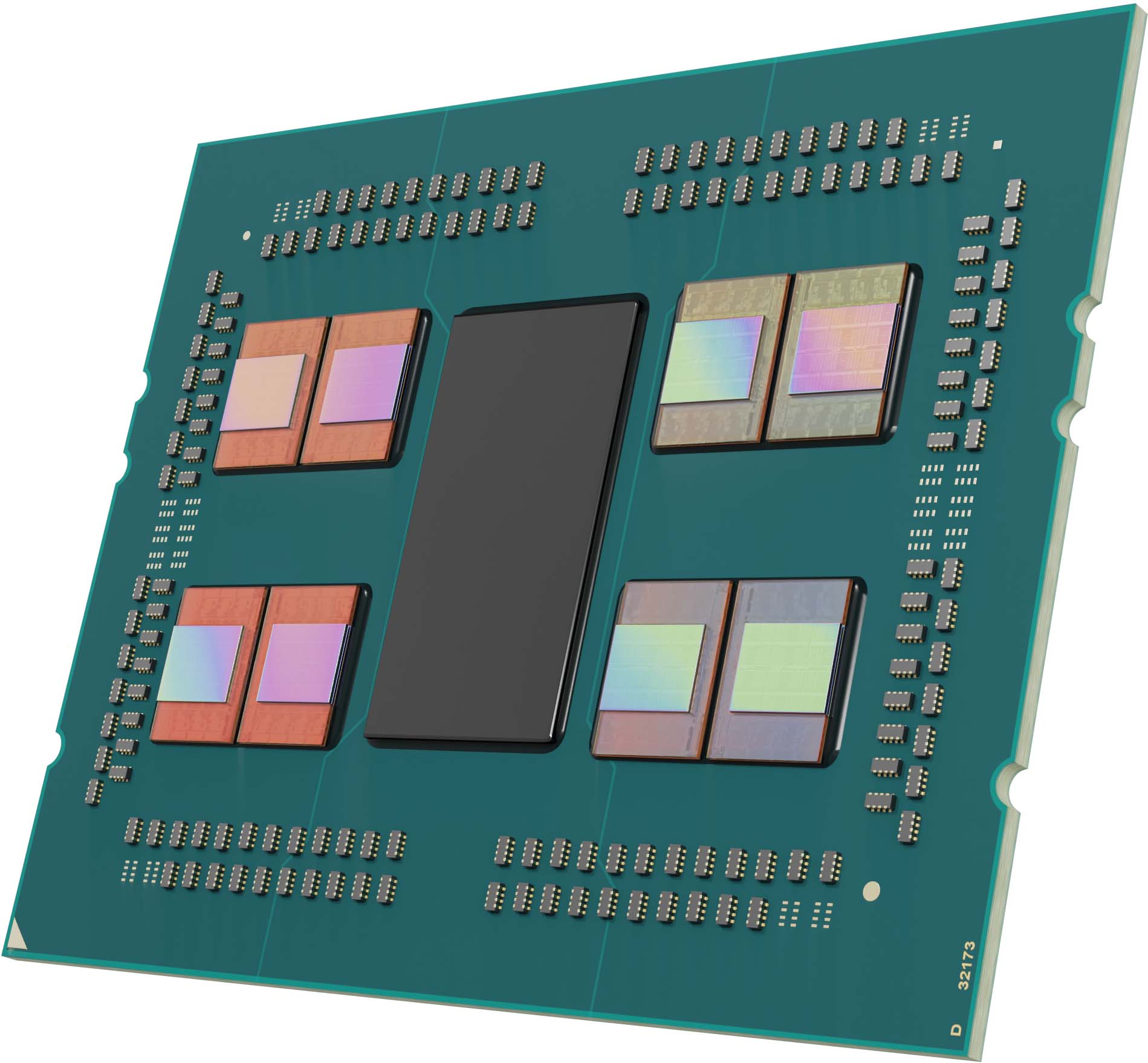

Those options include using 3D chip-stacking tech, similar to what AMD currently uses in its Milan-X processors to connect SRAM chiplets, to fuse an FPGA chiplet on top of the processor's I/O die (IOD). This chip-stacking technique would provide performance, power, and memory throughput advantages, but as we see with AMD's existing chips that use 3D stacking, it can also present thermal challenges that hinder performance if the chiplet is placed close to the compute dies. Placing an accelerator atop the I/O die makes plenty of sense because it would help address those thermal challenges, thus allowing AMD to extract more performance from the neighboring CPU chiplets (CCDs).

AMD also has other options. By defining an accelerator port, the company can accommodate stacked chiplets on top of other dies or simply arrange them in standard 2.5D implementations that use a discrete accelerator chiplet instead of a CPU chiplet (see the diagrams above). Additionally, AMD has the flexibility to bring other types of accelerators, like GPUs, ASICs, or DSPs, into play. This affords a plethora of options for AMD's own future proprietary products and could also allow customers to mix and match these various chiplets into custom processors that AMD designs for its semi-custom business.

This type of foundational tech will surely come in handy as the wave of customization continues in the data center, as evidenced by AMD's own recently announced 128-core EPYC Bergamo CPUs, which come with a new type of 'Zen 4c' core that's optimized for cloud-native applications.

AMD already uses its data center GPUs and CPUs to address AI workloads, with the former typically handling the compute-intensive task of training an AI model. AMD will use the Xilinx FPGA AI engines primarily for inference, which runs the pre-trained AI model on new data to perform a specific task.

Victor Peng, president of AMD's Adaptive and Embedded Computing Group, said during the company's earnings call that Xilinx already uses the AI engine for image recognition and "all kinds" of inference workloads in embedded applications and edge devices, like cars. Peng noted that the architecture is scalable, making it a good fit for the company's CPUs.

Individual inference workloads don't require as much computational horsepower as training, but they are far more prevalent in data center deployments. As a result, inference workloads are deployed en masse across vast server farms, with Nvidia creating lower-power inference GPUs, like the T4, and Intel relying on hardware-assisted AI acceleration in its Xeon chips to address these workloads.

AMD's decision to target these workloads with differentiated silicon could give the company a leg up against both Nvidia and Intel in certain data center deployments. Still, as always, the software will be the key. Both AMD CEO Lisa Su and Peng reiterated that the company would leverage Xilinx's software expertise to optimize the software stack, with Peng commenting, "We are absolutely working on the unified overall software enabled the broad portfolio, but also especially in AI. So you will hear more about that at the Financial Analyst Day, but we are definitely going to be leaning in AI both inference and training."

AMD's Financial Analyst Day is June 9, 2022, and we're sure to learn more about the new AI-infused CPUs then.


Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

-

Rob1C: Hopefully AMD will be able to offer an AI Engine that's even better than what's available in IBM's Telum processor: https://research.ibm.com/blog/research-powering-ibmz16

PiranhaTech: This sounds similar to what Apple is doing with the Apple M1. The M1 has a lot of image accelerators.

However, if they can do things with the chiplets concept, almost making chiplets like expansion cards that can be plugged into the CPU, that becomes powerful. AMD is somewhat ahead of a lot of others when it comes to chiplets.

tommo1982: AMD being second to Intel is a good thing. It has to make hardware that can be widely adopted. Read: not locked like Optane. We need a good, but not monopoly-strong, Intel so AMD can make good things for us customers 😊


TerryLaze:

> tommo1982 said: AMD being second to Intel is a good thing. It has to make hardware that can be widely adopted. Read - not locked like Optane. We need good, but not monopoly strong, Intel so AMD can make good things for us customers 😊

Oh please go ahead and explain to the class how we will be able to take out this AI module to use it on an M1, Android, or Intel...

Optane needs the CPU to talk to the disk like it's RAM. You can't fake that; you need the CPU to actually do this, and no other CPU company is going to invest money into making their CPUs do that for something that they can't control and don't know if it's ever going to take off.

rluker5:

> TerryLaze said: Oh please go ahead and explain to the class how we will be able to take out this AI module to use it on a M1 adroid or intel...
>
> Optane needs the CPU to talk to the disk like it's ram, you can't fake that, you need the CPU to actually do this and no other CPU company is going to invest money into making their CPUs do that for something that they can't control and don't know if it's ever going to take off.

That's Optane DIMMs.

Optane as an SSD works the same as an SSD, only with lower latency and no slowdowns ever. AMD chips just get a little less performance out of it, with the likely exception of the X3D.