Apple's M1 SoC Shreds GeForce GTX 1050 Ti in New Graphics Benchmark

If the Apple M1's processing power didn't leave you impressed, maybe the 5nm chip's graphical prowess will. A new GFXBench 5.0 submission for the M1 exhibits its dominance over oldies, such as the GeForce GTX 1050 Ti and Radeon RX 560.

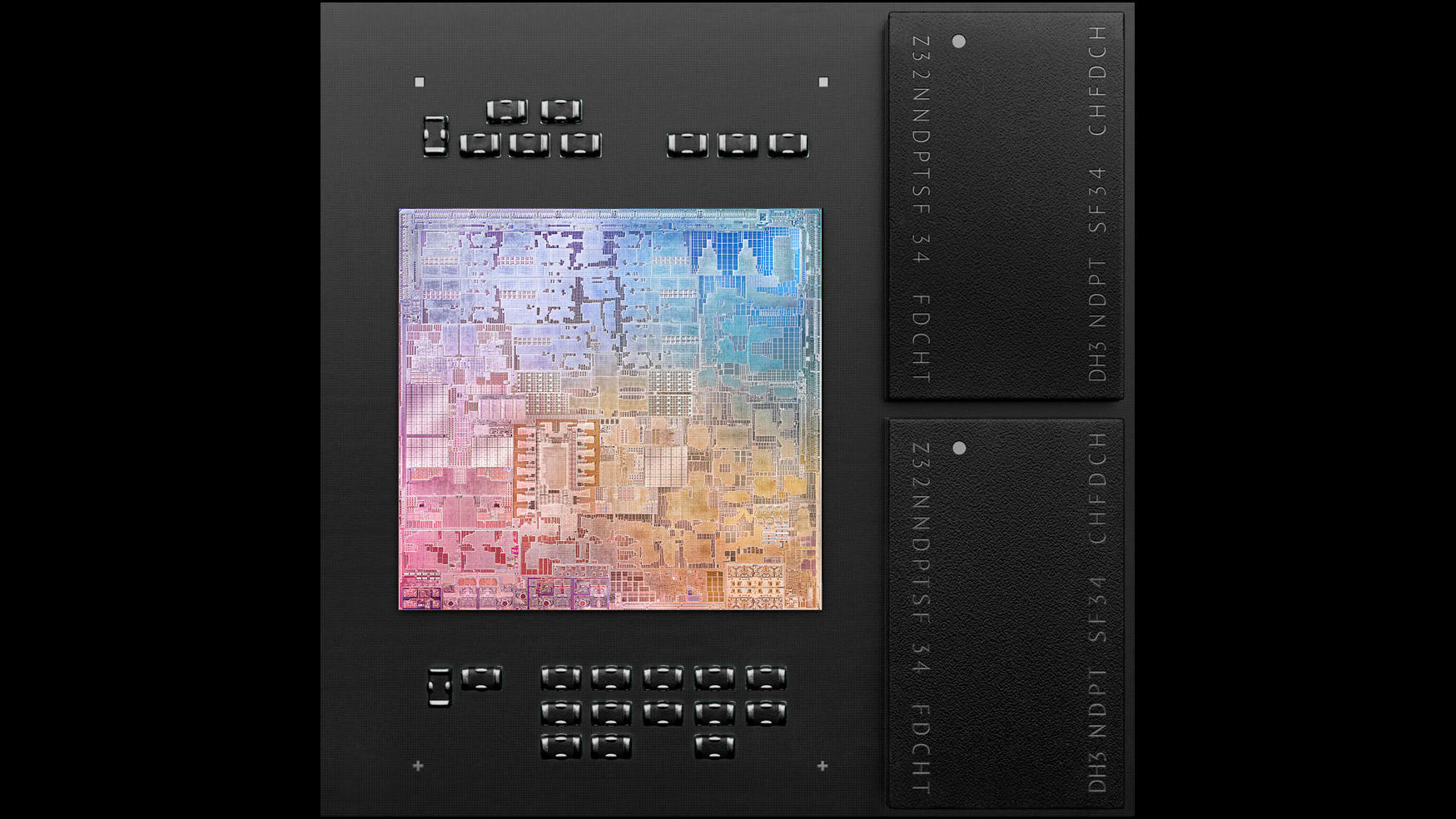

The Apple M1 marks an important phase in the multinational giant's history. It's the start of an era where Apple no longer has to depend on a third-party chipmaker to power its products. The M1 might be one of the most intriguing processor launches in the last couple of years. Built on the 5nm process node, the unified, Arm-based SoC (system-on-a-chip) brings together four Firestorm performance cores, four Icestorm efficiency cores, and an octa-core GPU in a single package.

Much of the M1's GPU design remains a mystery to us. So far, we know it features eight cores, which amounts to 128 execution units (EUs). Apple didn't reveal the clock speeds, but it wasn't shy about boasting of its performance numbers.

According to Apple, the M1 can simultaneously handle close to 25,000 threads and deliver up to 2.6 TFLOPS of throughput. Apple is probably quoting the M1's single-precision (FP32) performance. For reference, that ties the Radeon RX 560 (2.6 TFLOPS) and falls just 0.3 TFLOPS short of the GeForce GTX 1650 (2.9 TFLOPS).
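To put those peak figures in proportion, here is a minimal sketch that computes each card's gap to the M1 from the numbers quoted above. The dictionary and the `gap_to` helper are illustrative names, not part of any benchmark tool, and peak TFLOPS says little about delivered frame rates.

```python
# Peak FP32 throughput as quoted in the article, in TFLOPS.
peak_tflops = {
    "Apple M1": 2.6,
    "Radeon RX 560": 2.6,
    "GeForce GTX 1650": 2.9,
}

def gap_to(target, baseline="Apple M1"):
    """Return (absolute gap in TFLOPS, gap as a percentage of the baseline)."""
    diff = peak_tflops[target] - peak_tflops[baseline]
    return round(diff, 2), round(100 * diff / peak_tflops[baseline], 1)

for gpu in ("Radeon RX 560", "GeForce GTX 1650"):
    absolute, percent = gap_to(gpu)
    print(f"{gpu}: {absolute:+.2f} TFLOPS ({percent:+.1f}%) vs. Apple M1")
```

On paper, the GeForce GTX 1650 sits 0.3 TFLOPS, or roughly 11.5%, above the M1's quoted peak.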

Apple M1 Benchmarks

| GPU | Aztec Ruins Normal Tier | Aztec Ruins High Tier | Car Chase | 1440p Manhattan 3.1.1 | Manhattan 3.1 | Manhattan | T-Rex | ALU 2 | Driver Overhead 2 | Texturing |
|---|---|---|---|---|---|---|---|---|---|---|
| Apple M1 | 203.6 FPS | 77.4 FPS | 178.2 FPS | 130.9 FPS | 274.5 FPS | 407.7 FPS | 660.1 FPS | 298.1 FPS | 245.2 FPS | 71,149 MTexels/s |
| GeForce GTX 1050 Ti | 159.0 FPS | 61.4 FPS | 143.8 FPS | 127.4 FPS | 218.3 FPS | 288.3 FPS | 508.1 FPS | 512.6 FPS | 218.2 FPS | 59,293 MTexels/s |
| Radeon RX 560 | 146.2 FPS | 82.5 FPS | 115.1 FPS | 101.4 FPS | 174.9 FPS | 221.0 FPS | 482.9 FPS | 6275.4 FPS | 95.5 FPS | 22,8901 MTexels/s |

Generic benchmarks only tell one part of the story. Furthermore, GFXBench 5.0 isn't exactly the best tool for testing graphics cards either, given that it's aimed at smartphone benchmarking. As always, we recommend treating the benchmark results with caution until we see a thorough review of the M1.

The anonymous user tested the M1 under Apple's Metal API, making it hard to find apples-to-apples non-Apple comparisons. At the time of writing, no one has submitted a Metal run with the GeForce GTX 1650. Luckily, there is a submission for the GeForce GTX 1050 Ti, so the Pascal-powered graphics card will have to serve as the baseline for now.

The Apple M1 bested the GeForce GTX 1050 Ti in nine of the ten tests, often by a good margin, and lost only ALU 2. The Radeon RX 560 trailed the M1 in most tests too, although the submission lists it ahead in Aztec Ruins High Tier and ALU 2. Admittedly, the two discrete gaming graphics cards are pretty old by today's standards, but that shouldn't overshadow the fact that the M1's integrated graphics outperformed two 75W desktop graphics cards in most of the suite while staying within a pretty tight TDP of its own.
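The size of those margins can be read straight off the table. The sketch below, with scores copied from the M1 and GeForce GTX 1050 Ti rows, computes the M1's percentage lead per test; the variable and function names are illustrative only.

```python
# Scores copied from the GFXBench 5.0 table (FPS, except Texturing in MTexels/s).
tests = [
    "Aztec Ruins Normal Tier", "Aztec Ruins High Tier", "Car Chase",
    "1440p Manhattan 3.1.1", "Manhattan 3.1", "Manhattan", "T-Rex",
    "ALU 2", "Driver Overhead 2", "Texturing",
]
m1 = [203.6, 77.4, 178.2, 130.9, 274.5, 407.7, 660.1, 298.1, 245.2, 71149]
gtx_1050_ti = [159.0, 61.4, 143.8, 127.4, 218.3, 288.3, 508.1, 512.6, 218.2, 59293]

def lead_percent(a, b):
    """Percentage by which score a exceeds score b (negative if a trails)."""
    return round(100 * (a - b) / b, 1)

# Map each test name to the M1's lead over the GTX 1050 Ti.
leads = {t: lead_percent(a, b) for t, a, b in zip(tests, m1, gtx_1050_ti)}
for test, lead in leads.items():
    print(f"{test:<26}{lead:+.1f}%")

m1_wins = sum(1 for lead in leads.values() if lead > 0)
print(f"M1 ahead in {m1_wins} of {len(tests)} tests")
```

By this measure the M1 leads in nine of the ten tests, from about 3% in 1440p Manhattan 3.1.1 up to about 41% in Manhattan, and trails by roughly 42% in ALU 2.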


The M1 will debut in three new Apple products: the 13-inch MacBook Pro starting at $1,299, the MacBook Air at $999, and the Mac mini at $699. Nobody really buys an Apple device to game. However, if the in-house SoC lives up to the hype, casual gaming could be a reality on the upcoming M1-powered devices.

Zhiye Liu is a news editor, memory reviewer, and SSD tester at Tom’s Hardware. Although he loves everything that’s hardware, he has a soft spot for CPUs, GPUs, and RAM.

-

hotaru251 "1050ti"

is that supposed to be shocking? that was a card that shouldn't have existed to begin with :/

-

shady28 Wow. The only thing in the low-power mobile segment that can come close to this is Tiger Lake-U, but it looks like this is a bit faster in every single way, and lower power to boot.

-

cryoburner These benchmarks don't necessarily tell the whole story. Perhaps they can get these numbers in a synthetic benchmark like this when the CPU cores are sitting mostly idle, but what happens when those cores are active and fighting for the available 10 watts of TDP headroom? And we don't even know if these numbers were achieved at that TDP, or in some device working with higher TDP limits. Or what sort of hardware and software environment the 1050 Ti submission was operating in. If they didn't even have a 1650 submission, a card that's now been on the market for 19 months, then it's a bit hard to take this benchmark seriously.

However, if the in-house SoC lives up to the hype, casual gaming could be a reality on the upcoming M1-powered devices.

What kind of games are going to be able to run natively on this hardware? Angry Birds? It might be great at running ports of smartphone games, but something tells me that support for ports of PC and console games is going to get significantly worse on Macs than it already is, at least in the short term.

-

nofanneeded 1050 ti ??? This is in Virtualization/Emulation mode for the Nvidia cards for sure. not real benchmarks.

next..

-

DavidC1

shady28 said: Wow. The only thing in the low-power mobile segment that can come close to this is Tiger Lake-U, but it looks like this is a bit faster in every single way, and lower power to boot.

It's a fantastic result but trusting GFXBench and applying that to actual gaming is misleading at best.

- According to Notebookcheck, the GeForce 1050 Max-Q is only 10% faster in Aztec Ruins High and 50% faster in Normal compared to the Xe G7, the GPU in Tiger Lake.
- In games, however, the GeForce 1050 Max-Q delivers 2.5-3x the performance of the same Xe G7.
- I will also mention that the only Xe G7 result for GFXBench comes from the Asus ZenBook Flip S UX371, which is the poorest-performing Xe G7 system. In games, the top Xe G7 system, the Acer Swift, is 50% faster.

Interim summary: 1050 Max-Q is 10-50% faster than Xe G7 in GFXBench, but 2.5-3x as fast in games

- AnandTech notes that mobile systems use FP16, while PCs use FP32, meaning the mobile systems have an effective 2x FLOPS advantage. Instant 30-40% performance gain.
- The GTX 1050 Ti is higher end than the 1050 Max-Q, plus it's a desktop part and should be even faster!

Interim summary 2: You can take the Apple M1 result and divide it by 1.3-1.4x, instantly making it slower than the 1050 Ti due to rendering differences.

Based on the actual game differences, the 1050 Ti is still going to end up being 2x the performance of Apple M1.

Conclusion: Take GFXBench results with a heap of salt. Apple's results are fantastic, considering it's at a 10W power envelope which includes the CPU.

Saying it's faster than the desktop 1050 Ti is misleading, because in actual games the 1050 Ti will be way, way ahead.

-

ingtar33

nofanneeded said: 1050 ti ??? This is in Virtualization/Emulation mode for the Nvidia cards for sure. not real benchmarks. next..

I was going to post this too. absolutely correct. This is the 2nd uncritical "article" posted on this site in the past week that could have been written by Apple's marketing department masquerading as news.

-

kristoffe ad... sorry article... paid for by apple. also, emu... and also... only a 1050 ti? lol. ok "whoaaaaa". weird flex.

-

Danpapua Comparing a product that's releasing to something from 4 years ago and calling it a flex is quite funny. Seriously, if Apple can't beat the upcoming 3060(ti) or some other modern piece of hardware it's not really a flex.

-

atomicWAR

kristoffe said: ad... sorry article... paid for by apple. also, emu... and also... only a 1050 ti? lol. ok "whoaaaaa". weird flex.

I don't think the article's that bad, but they should do a better job of pointing out the shortcomings of this test. I will be interested to see more suitable/varied benchmarks and real-world behavior of this chip. I have long said that if ARM-based CPUs would catch up to x86, assuming they ever did, it would be around the 5nm mark. And my statement goes back nearly 18 years, to when my mom was looking into ARM stock. She asked me if ARM-based CPUs had a realistic chance of catching Intel/AMD x86 chips. One of her techs at work was an ARM enthusiast who wrongly insisted ARM would beat x86 within 10 years and be powering everything from Linux to Windows on desktops/laptops/servers as the primary CPU architecture in use. Dude got some of it right and certainly had the right idea, if the wrong time frame. Point being, I told her that if I was correct the investment would not be a bad thing, and if ARM didn't beat x86 they would still have a stranglehold on the lower-power/mobile market, so either way the investment would likely be solid. Looks like I was right for the most part...

-

spongiemaster

Danpapua said: Comparing a product that's releasing to something from 4 years ago and calling it a flex is quite funny. Seriously, if Apple can't beat the upcoming 3060(ti) or some other modern piece of hardware it's not really a flex.

It's also an example of what is possible when you aren't competing against yourself. AMD, and heading forward Intel, aren't going to make iGPUs as fast as they can because that would prevent them from selling lower-end dGPUs. It will be many years before we see an AMD CPU with an iGPU as fast as what the new consoles have. iGPUs for PCs are basically only designed to be fast enough for business desktops and to speed up encoding/decoding tasks. Apple doesn't have to worry about cannibalizing its own sales and can just make things the best it can for the target price.