Acer Predator XB321HK 32-inch Ultra HD G-Sync Monitor Review

Today we’re looking at Acer’s latest flagship gaming monitor, the Predator XB321HK. Sporting a 32-inch IPS screen, G-Sync and premium build quality, it looks like just the thing for a cost-no-object gaming rig.


Grayscale Tracking And Gamma Response

Our grayscale and gamma tests are described in detail here.

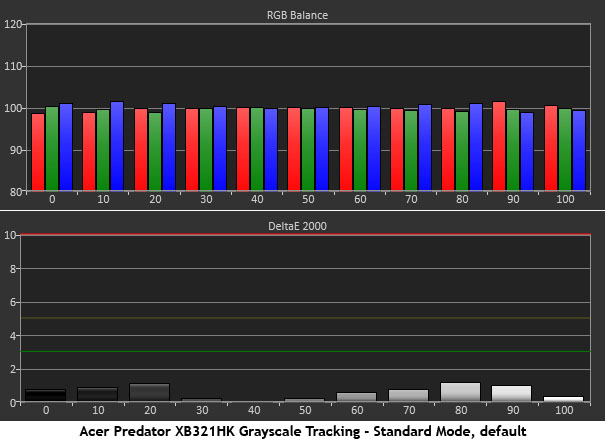

One might look at the default grayscale tracking chart and conclude the XB321HK is ready to go without adjustment. That is mostly true. Certainly in this test, there’s no concern. RGB levels are almost ruler-flat from bottom to top. This is the result we’d expect from a professional monitor.

Our contrast and gamma changes alter the landscape a little at the 100% brightness level. There we see a slight blue reduction on the chart, but the error is still below the visible point. Things are still looking quite good for our test subject.

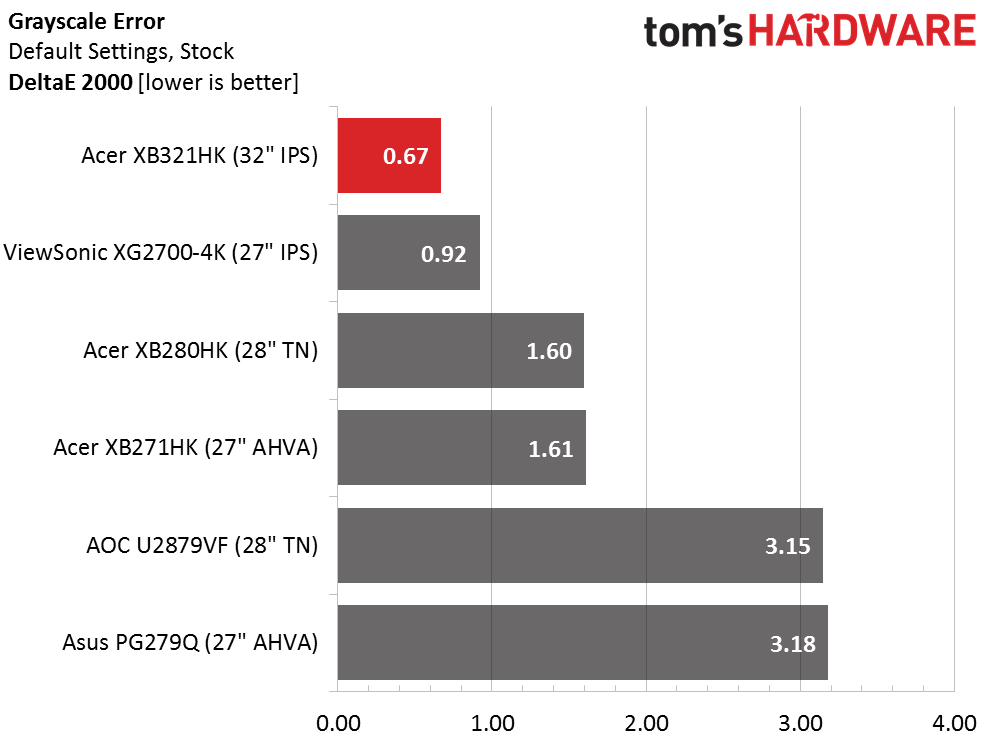

Here is our comparison group.

The XB321HK would beat out most professional displays in the out-of-box grayscale test, yet there is no evidence of a factory-certified calibration here. Acer is simply using a good panel part that’s been engineered properly.

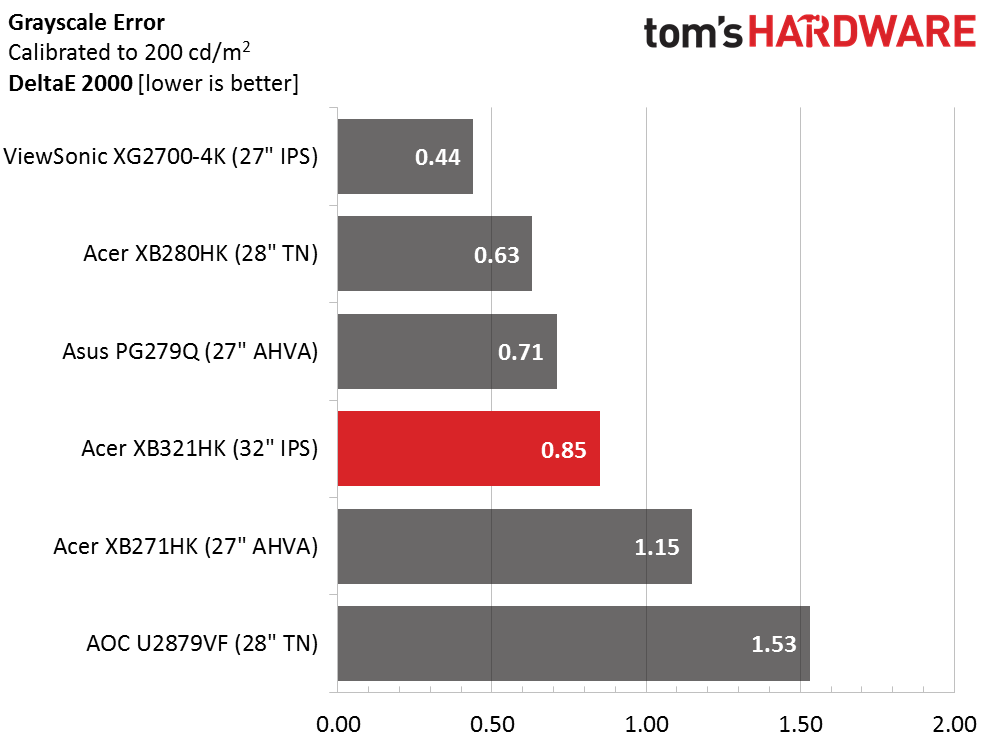

We don’t often record a worse grayscale result after calibration, but 0.85dE is still a super-low error level. The change is due to the 100% brightness point, which has shifted thanks to our gamma and contrast adjustments.

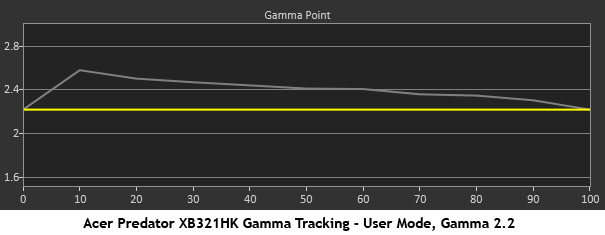

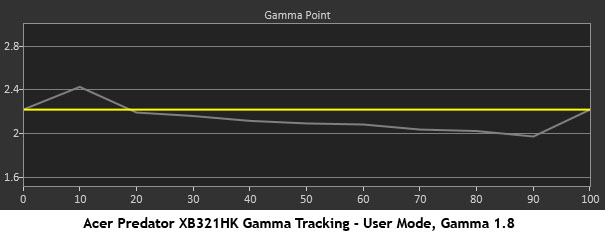

Gamma Response

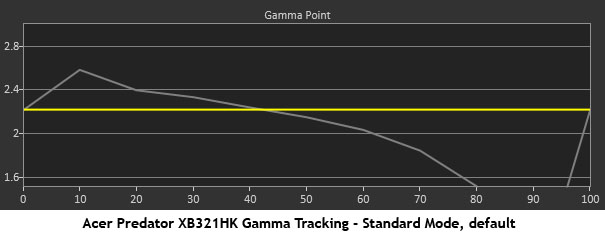

Now we get to the heart of the matter. This is what the XB321HK’s gamma looks like by default. You’d think some sort of dynamic contrast was at work here, but that is not the case. It’s simply a result of the contrast slider being set too high. In real-world content you’ll see a distinct lack of definition in brighter material and highlight detail will disappear.


After changing only the contrast slider, gamma greatly improves. At least now we’re getting close to the mark. It still looks a little too dark, however. We wish there were a 2.0 setting, but there isn’t.

We weren’t sure which chart we liked better, this one or the previous. When gamma is set to 1.8, the image is a little brighter. One might leave it to personal preference, but after you see the color gamut results on the next page, the choice becomes clear.
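To see why a 1.8 setting looks brighter than 2.2, it helps to model the display with a simple power law, where relative luminance is the normalized input signal raised to the gamma value. The sketch below assumes that idealized model, which real panels only approximate. The function name `relative_luminance` is our own, for illustration, and is not part of any test software.

```python
def relative_luminance(signal: float, gamma: float) -> float:
    """Idealized power-law display response: output = signal ** gamma.

    `signal` is the normalized input level (0.0 = black, 1.0 = full white).
    This is a simplified model, not a measurement of the XB321HK.
    """
    if not 0.0 <= signal <= 1.0:
        raise ValueError("signal must be between 0.0 and 1.0")
    return signal ** gamma


# Compare a mid-gray (50%) input under the two gamma values discussed above.
for gamma in (1.8, 2.2):
    level = relative_luminance(0.5, gamma)
    print(f"gamma {gamma}: 50% signal -> {level:.3f} of peak luminance")
```

Under this model, a lower gamma value lifts every level between black and white while leaving the two endpoints unchanged, which is consistent with the slightly brighter image we saw at 1.8.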

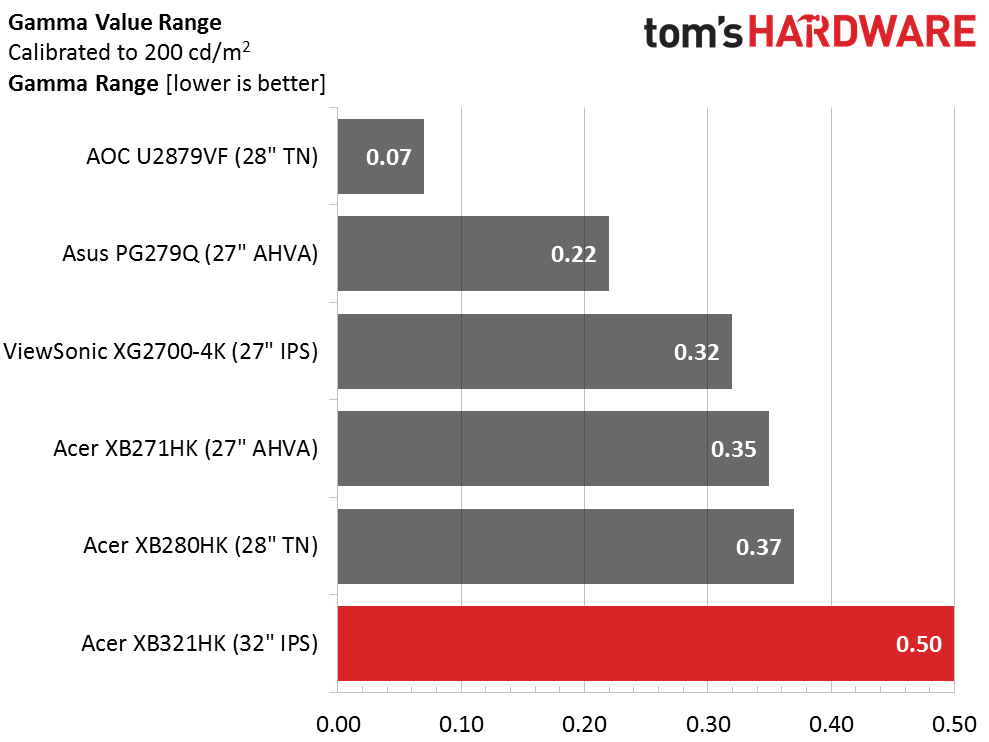

Here is our comparison group again.

Tracking isn’t quite ruler-flat like the others, so the XB321HK finishes last in this test. A 0.5 range of values isn’t too bad, but at this price point it should be better.

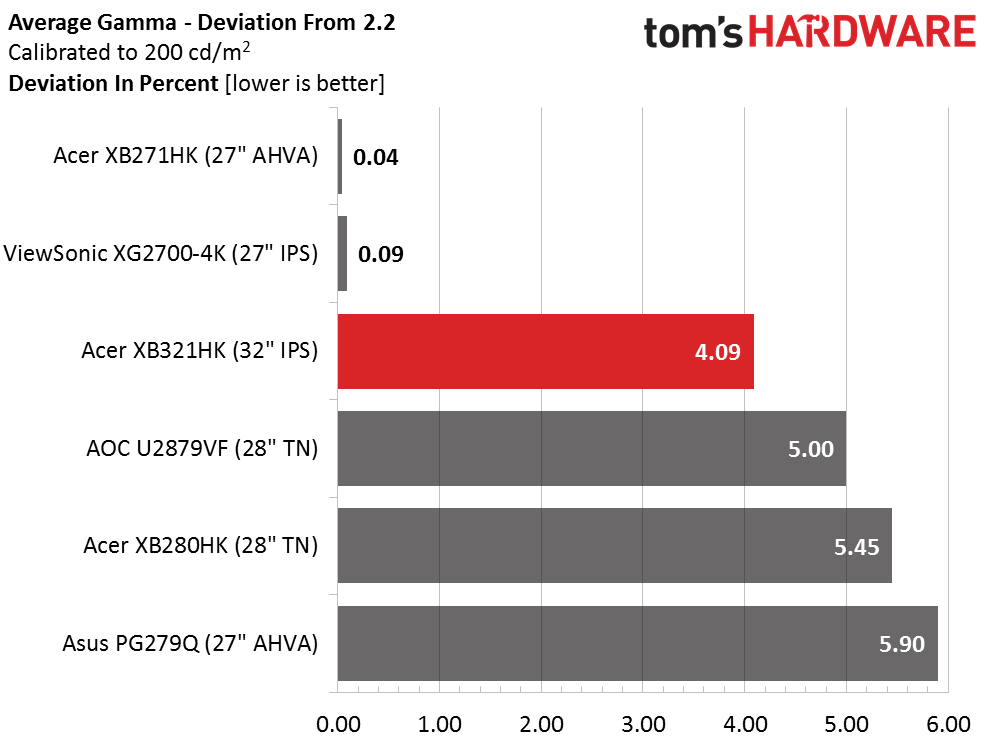

We calculate gamma deviation by simply expressing the difference from 2.2 as a percentage.
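As a rough illustration of that calculation, the sketch below expresses the difference from 2.2 as a percentage of 2.2, and also computes the range of values (highest reading minus lowest) used in the tracking comparison. Treating the deviation as relative to the 2.2 target and taking the average of the readings are our assumptions here, and the sample readings are hypothetical, not measurements from this review.

```python
from typing import Sequence

TARGET_GAMMA = 2.2  # the standard referenced in the text


def gamma_deviation_percent(measured: float, target: float = TARGET_GAMMA) -> float:
    """Absolute difference from the target gamma, as a percentage of the target."""
    return abs(measured - target) / target * 100.0


def gamma_range(readings: Sequence[float]) -> float:
    """Spread between the highest and lowest gamma readings."""
    return max(readings) - min(readings)


# Hypothetical gamma readings at several brightness steps (illustration only).
example_readings = [2.0, 2.1, 2.2, 2.3, 2.5]
average_gamma = sum(example_readings) / len(example_readings)

print(f"range of values: {gamma_range(example_readings):.2f}")
print(f"average gamma:   {average_gamma:.2f}")
print(f"deviation:       {gamma_deviation_percent(average_gamma):.2f}%")
```

A monitor that tracks 2.2 exactly would score 0% deviation and a range of 0.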

Gamma is a compromise with the XB321HK, although the 1.8 setting is better for color accuracy as you’ll see on the next page. It also comes closer to the 2.2 standard. It seems four of the screens could use a tweak in this department. Only the XB271HK and XB2700-4K hit the mark squarely.


Christian Eberle is a Contributing Editor for Tom's Hardware US. He's a veteran reviewer of A/V equipment, specializing in monitors. Christian began his obsession with tech when he built his first PC in 1991, a 286 running DOS 3.0 at a blazing 12MHz. In 2006, he undertook training from the Imaging Science Foundation in video calibration and testing and thus started a passion for precise imaging that persists to this day. He is also a professional musician with a degree from the New England Conservatory as a classical bassoonist which he used to good effect as a performer with the West Point Army Band from 1987 to 2013. He enjoys watching movies and listening to high-end audio in his custom-built home theater and can be seen riding trails near his home on a race-ready ICE VTX recumbent trike. Christian enjoys the endless summer in Florida where he lives with his wife and Chihuahua and plays with orchestras around the state.


Bartendalot The note about 4K@60hz being obsolete soon is a valid one and makes the purchase price even more difficult to swallow.

I'd argue that my 1440p@144hz is a more future-proof investment.

Yaisuah I have this monitor and think it's great and almost worth the money, but I want to point out that nobody on the internet seems to realize there's a perfect resolution between 1440 and 4k that looks great and runs great and I think it would be considered 3k. Try adding 2880 x 1620 to your resolutions and see how it looks on any 4k monitor. I run windows and most less intensive games at this resolution and constantly get 60fps with a 970 (around 30fps at 4k). You also don't have to mess with windows scaling on a 32in monitor. After seeing how great 3k looks and runs, I really don't know why everyone immediately jumped to 4k.

mellis I am still going to wait before getting a 4K monitor, since there is still not a practical solution for 4K gaming. In a couple more years hopefully 4K monitors will be cheap and midrange GPUs will be able to support gaming on them. I think trying to invest in 4K gaming now is a waste of money. Sticking with 1080p for now.

truerock A 4K@120Hz G-Sync video monitor based PC rig under $4,000 is probably 2 to 3 years away.

RedJaron

18346905 said: I have this monitor and think it's great and almost worth the money, but I want to point out that nobody on the internet seems to realize there's a perfect resolution between 1440 and 4k that looks great and runs great and I think it would be considered 3k. Try adding 2880 x 1620 to your resolutions and see how it looks on any 4k monitor. I run windows and most less intensive games at this resolution and constantly get 60fps with a 970 (around 30fps at 4k). You also don't have to mess with windows scaling on a 32in monitor. After seeing how great 3k looks and runs, I really don't know why everyone immediately jumped to 4k.

I would hazard a few guesses. First would be that 2880x1620 is so close to 2560x1440 that no manufacturer wants to complicate product lines like that.

Second, 2160 is the least common multiple of both 720 and 1080, meaning it's the lowest resolution that's a perfect integer scalar of both. So with proper upscaling, a 720 or 1080 source picture can be displayed reasonably well on a 4K display. These panels are made for TVs as well as computer monitors, and the majority of TV signal (at least in the US) is still in either 720p or 1080p. Upscaling 1080 to 1620 is the same as upscaling 720 to 1080 (they're both a factor of 150%). Upscaling by non-integer factors means you need a lot of pixel interpolation and anti-aliasing. To me, this looks very fuzzy (I bought a 720p TV over a 1080 TV years ago because playing 720p PS3 games and 720p cable TV on a 1080p display looked horrible to me). So it may be the powers that be decided on the 4K resolution so that people could adopt the new panels and still get decent picture quality with the older video sources (at least until, or if, they get upgraded). If so, I can agree with that.
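The arithmetic in this exchange is easy to check. The sketch below, using only Python's standard library, compares pixel counts for the resolutions mentioned in the thread and confirms the least common multiple and scaling factors. The resolution labels are informal names taken from the comments, and the helper names are ours. Pixel count is only a rough proxy for GPU load, so frame rates will not scale exactly with it.

```python
import math
from fractions import Fraction

# Resolutions mentioned in the thread (width, height).
RESOLUTIONS = {
    "1440p": (2560, 1440),
    "3K": (2880, 1620),
    "4K UHD": (3840, 2160),
}


def pixel_count(name: str) -> int:
    """Total pixels per frame for a named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def lcm(a: int, b: int) -> int:
    """Least common multiple, computed from the greatest common divisor."""
    return a * b // math.gcd(a, b)


for name in RESOLUTIONS:
    share = pixel_count(name) / pixel_count("4K UHD")
    print(f"{name}: {pixel_count(name):,} pixels ({share:.1%} of 4K UHD)")

# 2160 lines is the smallest height that both 720 and 1080 divide evenly.
print("LCM of 720 and 1080:", lcm(720, 1080))

# Both of these upscales use the same non-integer factor of 3/2 (150%).
print("1080 -> 1620:", Fraction(1620, 1080))
print("720 -> 1080:", Fraction(1080, 720))
```

Because 2880 x 1620 has a little over half the pixels of 4K UHD, a large frame-rate gain at that setting is plausible, while the 3/2 scale factor is the non-integer case the reply describes.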

michalt I have one and have not regretted my purchase for a second. I tend to keep monitors for a long time (my Dell 30 inch displays have been with me for a decade). When looked at over that time period, it's not that expensive for something I'll be staring at all day every day.

photonboy It would be nice to offer a GLOBAL FPS LOCK to stay in asynchronous mode at all times regardless how high the FPS gets.

photonboy Update: AMD has this, not sure where it is for NVidia or if the GLOBAL FPS LOCK is easy to do.