Intel SSD 710 Tested: MLC NAND Flash Hits The Enterprise

HET MLC: Supercharged MLC Or SLC Lite?

It's time to pick apart High Endurance Technology MLC, because the terminology is fairly new and, frankly, subject to some confusion. According to Intel, HET provides SLC-like write endurance. This is accomplished in two ways:

- Die-screening consumer MLC for marginally higher endurance.

- Increasing the page programming cycle (tProg).

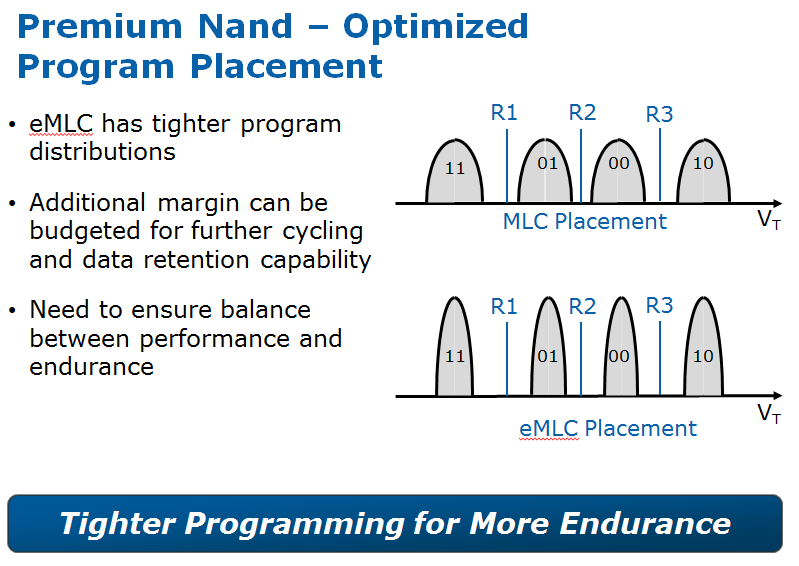

Incidentally, these two traits are exactly what define eMLC. In other words, HET is essentially Intel's marketing name for eMLC. At the technology level, Micron tells us that die-screening (picking out the very best dies from a wafer) optimistically results in a two-fold write endurance boost. However, the company cites an eMLC endurance spec that's six times higher than its consumer-grade MLC. Increasing the page programming cycle makes up the difference, since it can increase endurance by a factor of two or three.
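The endurance math above can be checked with a few lines of arithmetic. This sketch assumes the two gains simply multiply (independence is our assumption, not something Micron stated) and uses the 5,000 P/E-cycle consumer MLC rating from the table below. The names `combined_gain` and `emlc_pe_cycles` are hypothetical helpers for illustration only.

```python
# Endurance arithmetic using the figures quoted in the text.
CONSUMER_MLC_PE = 5_000          # consumer 3x nm MLC rating (from the table)
DIE_SCREEN_GAIN = 2.0            # "optimistically" two-fold from die-screening
TPROG_GAINS = (2.0, 3.0)         # "a factor of two or three" from a longer tProg


def combined_gain(screen_gain: float, tprog_gain: float) -> float:
    """Assumption: the two improvements are independent, so they multiply."""
    return screen_gain * tprog_gain


def emlc_pe_cycles(base_cycles: int, gain: float) -> int:
    """Scale a base P/E rating by an endurance multiplier."""
    return round(base_cycles * gain)


if __name__ == "__main__":
    for g in TPROG_GAINS:
        total = combined_gain(DIE_SCREEN_GAIN, g)
        cycles = emlc_pe_cycles(CONSUMER_MLC_PE, total)
        print(f"tProg x{g:g}: total x{total:g} -> {cycles:,} P/E cycles")
```

Under that multiplicative assumption, the upper end (2 x 3 = 6x) lands at 30,000 cycles, the top of the eMLC range in the table.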

| 3x nm Lithography | SLC | MLC | eMLC |
|---|---|---|---|
| Bits/Cell | 1 | 2 | 2 |
| Endurance (P/E Cycles) | 100 000 | 5 000 | 10 000 - 30 000 |
| ECC | 8b/512B | 24b/1KB | 24b/1KB |
| tProg | 0.5 ms | 1.2 ms | 2 - 2.5 ms |
| tErase | 1.5 - 2 ms | 3 ms | 3 - 5 ms |
| Performance Over Time | Constant | Degrades | Degrades |
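To put the tProg column in perspective, the sketch below converts each program time into an idealized page-program rate for a single plane. It deliberately ignores data transfer, ECC, erases, controller overhead, and the parallelism a real SSD gets from many dies, so it only illustrates the *relative* penalty, not drive-level throughput. `programs_per_second` is a hypothetical helper.

```python
# Idealized per-plane page-program rate implied by the tProg column.
# Assumption: back-to-back programs on one plane, no transfer/ECC/erase/
# controller overhead, and no multi-die parallelism.
TPROG_MS = {
    "SLC": 0.5,
    "MLC": 1.2,
    "eMLC (best case)": 2.0,
    "eMLC (worst case)": 2.5,
}


def programs_per_second(tprog_ms: float) -> float:
    """Page programs per second on one plane if each takes tprog_ms."""
    return 1000.0 / tprog_ms


if __name__ == "__main__":
    mlc_rate = programs_per_second(TPROG_MS["MLC"])
    for name, tprog in TPROG_MS.items():
        rate = programs_per_second(tprog)
        print(f"{name:18s} {rate:7.1f} programs/s ({rate / mlc_rate:.2f}x MLC)")
```

By this rough measure, eMLC programs pages at roughly 0.48x to 0.6x the rate of consumer MLC (1.2 ms divided by 2.5 ms and 2 ms, respectively).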

Although die-screening seems like an easy (albeit cost-adding) way to cherry-pick the best pieces of memory without compromising performance, increasing the time it takes to program a page doesn't sound nearly as sexy, since it trades away write speed. To understand why it's worth it, we need to revisit the difference between MLC and SLC NAND.

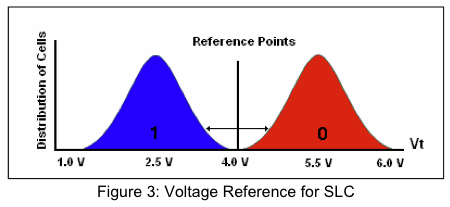

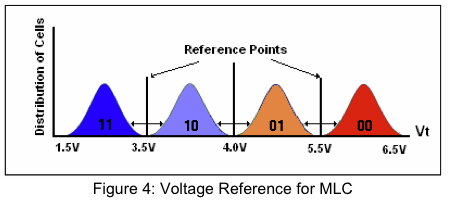

Single-level cell flash stores one bit per cell. It is a single-bit binary system, either a "0" or a "1." MLC memory stores up to two bits per cell, so you're looking at four states to represent all possible combinations. Though that works out neatly on paper, there is a cost associated with increasing storage density.
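The jump from two states to four follows directly from the bit count: a cell storing *n* bits must distinguish 2^n charge states. This minimal sketch simply enumerates the combinations; which bit pattern maps to which charge level varies by vendor and is not modeled here. Function names are illustrative.

```python
from itertools import product


def states_per_cell(bits_per_cell: int) -> int:
    """Number of distinct charge states needed to store bits_per_cell bits."""
    return 2 ** bits_per_cell


def bit_patterns(bits_per_cell: int) -> list[str]:
    """Every bit combination a cell must be able to represent."""
    return ["".join(bits) for bits in product("01", repeat=bits_per_cell)]


if __name__ == "__main__":
    for bits, name in ((1, "SLC"), (2, "MLC")):
        print(f"{name}: {states_per_cell(bits)} states -> {bit_patterns(bits)}")
```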

Flash memory only has so much voltage tolerance. You can't simply double the voltage range to fit twice as many states. Instead, the existing window has to be divided more finely, which means more precise programming to place an exact amount of charge in the floating gate. MLC and SLC memory operate on the same principle, but MLC demands more precision in both charge placement and charge sensing.
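A quick way to see the precision problem: if the usable threshold-voltage window is fixed, doubling the number of states halves the slot each state gets. The 4 V window below is a hypothetical round number chosen for illustration, not an Intel or Micron specification.

```python
WINDOW_V = 4.0  # hypothetical usable threshold-voltage window (illustrative only)


def slot_width_v(bits_per_cell: int, window_v: float = WINDOW_V) -> float:
    """Width of each state's voltage slot when a fixed window is split evenly."""
    return window_v / (2 ** bits_per_cell)


if __name__ == "__main__":
    for bits, name in ((1, "SLC"), (2, "MLC")):
        print(f"{name}: {2 ** bits} states, {slot_width_v(bits):.2f} V per state")
```

Whatever the real window is, MLC's slots are half as wide as SLC's, so charge must be placed and sensed twice as precisely.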

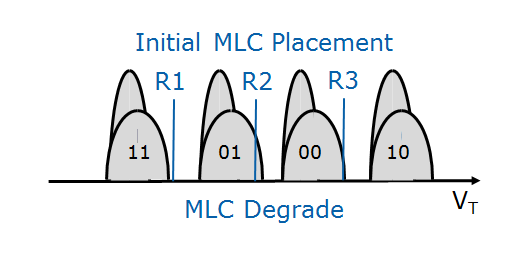

As P/E cycles are consumed over time, however, the read margins that determine the value of each cell start to shrink as a result of:

- loss of charge due to flash cell oxide degradation

- over-programming caused by erratic programming steps

- programming of adjacent erased cells due to heavy reads or writes

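Consequently, over time, the drive starts to experience data retention problems and read errors. Basically, the cells wear out. That's much less of a problem on SLC-based drives, because each cell has only one reference point separating its two states. MLC memory is a different story: it needs three reference points to distinguish four states within the same voltage window, leaving far less room for drift, which is why extending the page programming cycle has such a pronounced impact on endurance.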
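The toy model below illustrates the margin argument. It assumes a read reference sits midway between adjacent states, that every P/E cycle erodes the margin at the same linear rate, and that reads fail once the margin drops below a fixed sensing limit. All three constants are hypothetical, and the model does not attempt to reproduce the real ratings in the table; it only shows that a narrower starting margin hits the limit sooner under identical wear.

```python
# Toy wear model; every constant here is hypothetical and illustrative only.
WINDOW_MV = 4000            # usable threshold-voltage window
MIN_READ_MARGIN_MV = 200    # smallest margin the sense circuitry can resolve
LOSS_MV_PER_KCYCLE = 100    # margin lost per 1,000 P/E cycles (linear assumption)


def initial_margin_mv(bits_per_cell: int, window_mv: int = WINDOW_MV) -> int:
    """Reference sits midway between states, so each side gets half a slot."""
    return window_mv // (2 ** bits_per_cell) // 2


def cycles_until_read_errors(bits_per_cell: int) -> int:
    """P/E cycles before the margin erodes below the sensing limit."""
    headroom_mv = initial_margin_mv(bits_per_cell) - MIN_READ_MARGIN_MV
    if headroom_mv <= 0:
        return 0
    return headroom_mv * 1000 // LOSS_MV_PER_KCYCLE


if __name__ == "__main__":
    for bits, name in ((1, "SLC"), (2, "MLC")):
        print(f"{name}: margin {initial_margin_mv(bits)} mV, "
              f"~{cycles_until_read_errors(bits):,} cycles in this toy model")
```

Integer millivolts keep the arithmetic exact. Real devices also degrade non-linearly and rely on ECC, which this sketch leaves out.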


In essence, additional time is spent placing a more precise amount of charge on each memory cell. Landing each state within a narrower voltage window leaves wider gaps between neighboring states, so the cell can absorb more wear before those gaps close. The result is higher endurance at the expense of performance. And that, ladies and gentlemen, is the long explanation for the SSD 710's low 2,700 IOPS random write rate.
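The precision-for-time trade can be illustrated with incremental step pulse programming (ISPP), a widely used NAND program-and-verify scheme. The article does not say which algorithm Intel or Micron actually use, so treat this as a swapped-in, idealized illustration: each pulse is assumed to shift the cell's threshold voltage by exactly one step, and a verify check stops pulsing once the target is reached. `ispp_program` and the voltages are hypothetical.

```python
def ispp_program(start_mv: int, target_mv: int, step_mv: int) -> tuple[int, int]:
    """Idealized program-and-verify loop; returns (pulses, final threshold in mV)."""
    if step_mv <= 0:
        raise ValueError("step_mv must be positive")
    vt_mv = start_mv
    pulses = 0
    while vt_mv < target_mv:   # verify: stop once the cell reaches the target level
        vt_mv += step_mv       # program pulse: assume each pulse shifts Vt by one step
        pulses += 1
    return pulses, vt_mv


if __name__ == "__main__":
    target = 1000
    for step in (300, 60):
        pulses, vt = ispp_program(0, target, step)
        print(f"step {step} mV: {pulses} pulses, overshoot {vt - target} mV")
```

In this model, cutting the step from 300 mV to 60 mV shrinks the overshoot past the target from 200 mV to 20 mV, but it takes 17 pulses instead of 4, which mirrors the longer tProg and lower write rate described above.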

