Nvidia’s $2B Synopsys stake strengthens its push into AI-accelerated chip design — partnership to bolster GPU-accelerated EDA tools, but a careful balance is required

Nvidia buys a minority position in Synopsys, and the pair announce a new joint effort.

Nvidia has taken a $2 billion equity stake in Synopsys as the two companies announced a long-term collaboration to accelerate electronic design automation (EDA) workloads on GPUs. Under the partnership, announced on December 1, they will co-develop tools intended to shift computationally heavy EDA tasks from CPUs to Nvidia GPUs.

Synopsys already dominates several segments of the chip-design software market, including generative AI for chip development, and its tools are used across the industry by CPU and GPU vendors.

Nvidia and Synopsys emphasized that the agreement is non-exclusive and that Synopsys will continue its work with other hardware makers, but it still raises questions about influence and long-term control of the design pipeline. That’s especially true for Nvidia, for whom the deal serves to accelerate its own development cycle while giving the company a foothold in the upstream tooling that competitors rely on.

Pushing chip design deeper into accelerated computing

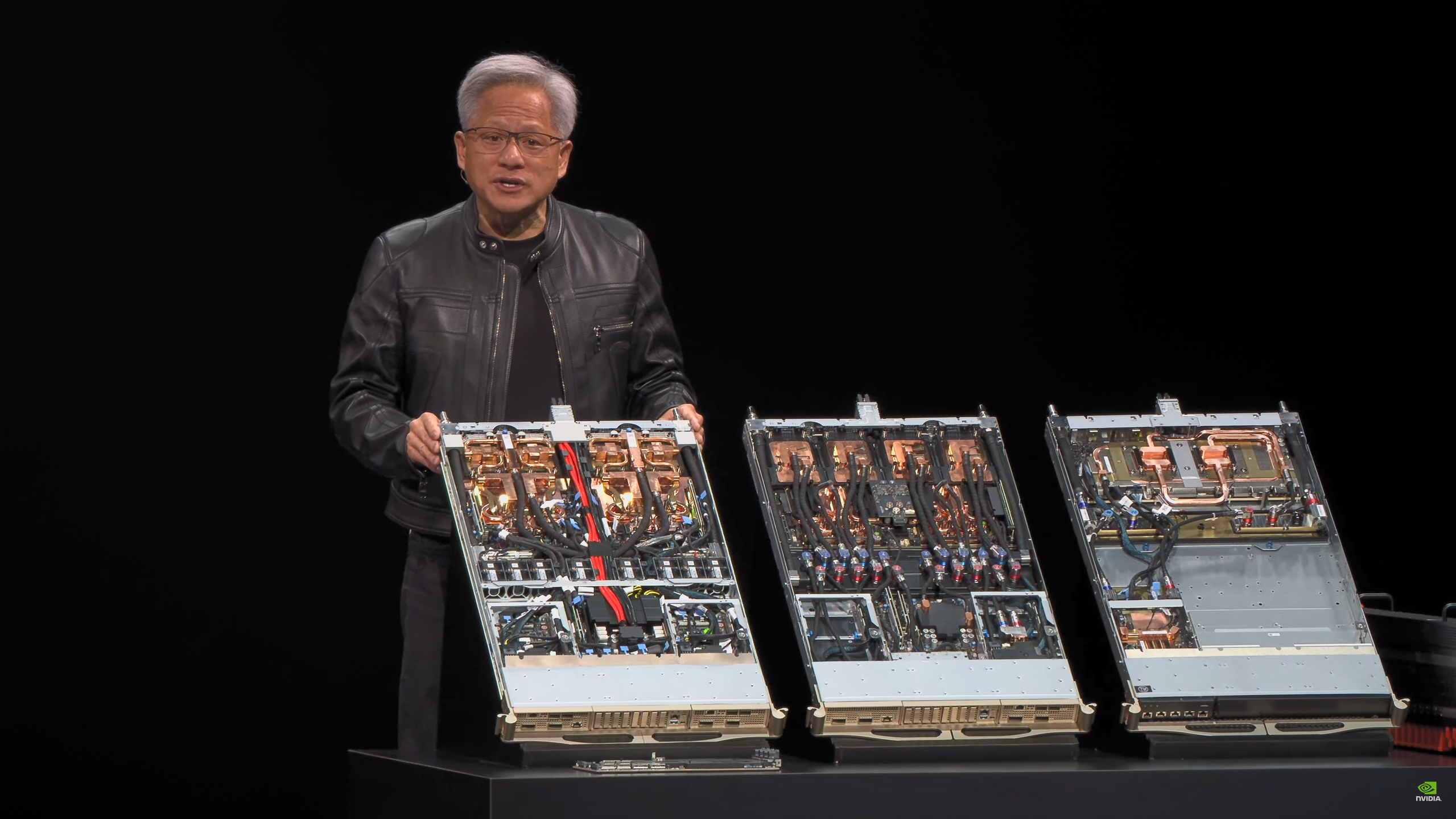

GPU-accelerated EDA is nothing new, but this partnership formalizes it at a scale that no other chip designer currently matches. In the announcement, Jensen Huang described a workflow in which full-chip simulations and verification steps are executed on GPU clusters rather than CPU farms.

"CUDA GPU-accelerated computing is revolutionizing design — enabling simulation at unprecedented speed and scale, from atoms to transistors, from chips to complete systems, creating fully functional digital twins inside the computer," he said.

The shift may reduce multi-week simulation stages to timescales closer to days or hours, depending on the workload. Synopsys is contributing its existing AI-assisted design stack while adopting Nvidia’s software frameworks, including CUDA and the company’s agent-based automation work from its NeMo platform.

The aim appears to be a generation of tools that treat accelerated compute as the baseline for design work. Synopsys has been integrating AI into its DSO.ai and VSO.ai products for years, but the scale of GPU compute available through Nvidia’s platform allows full-chip workloads that were previously constrained by CPU throughput. In practice, this would mean that more design variants can be explored, more exhaustive verification passes can be performed, and layout optimization loops can be run to convergence rather than truncated for schedule reasons.

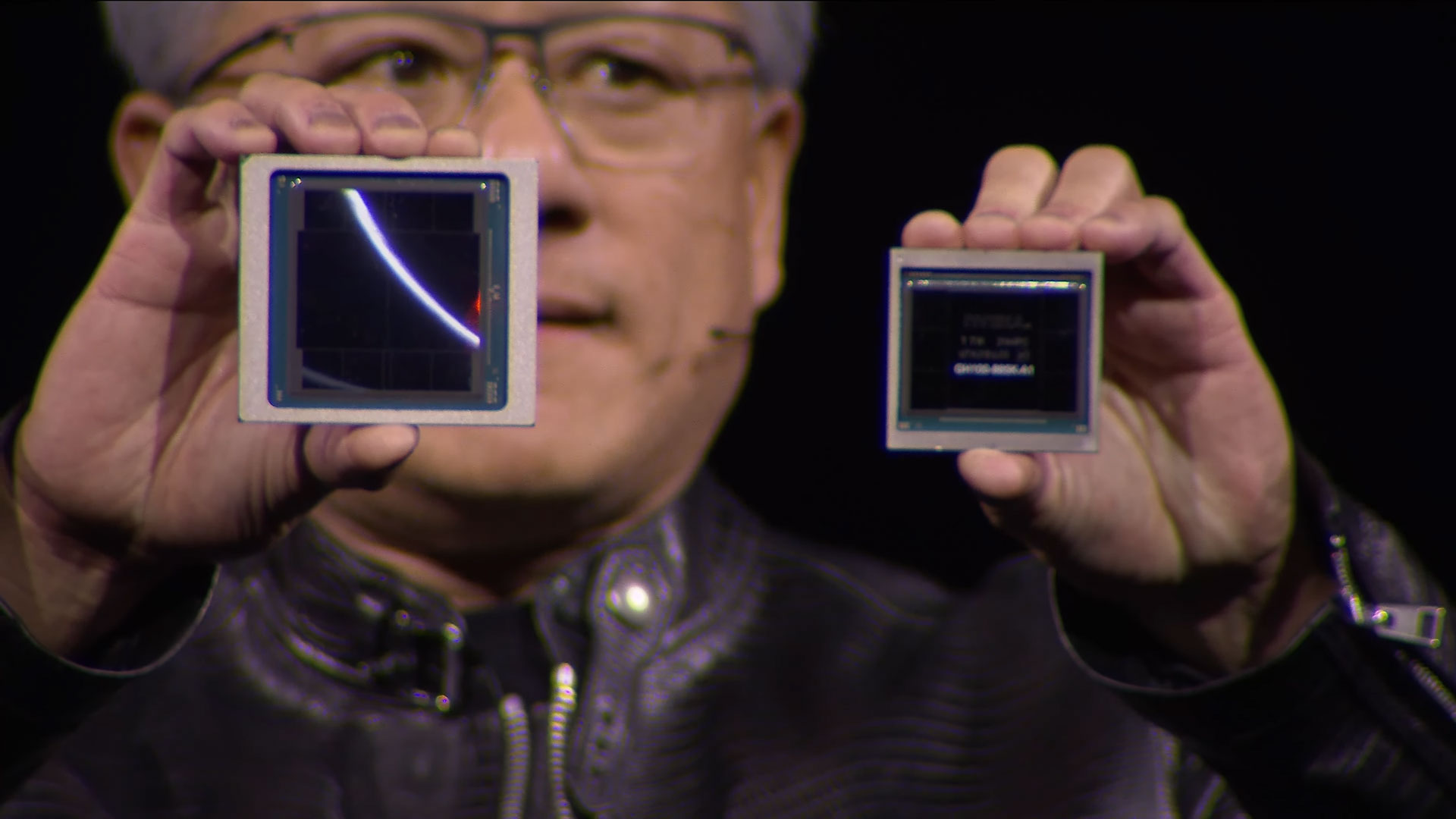

Additionally, if EDA becomes faster and more automated on Nvidia hardware, Nvidia is the first beneficiary. It can build, evaluate, and iterate on silicon faster than rivals bound to traditional CPU clusters unless those rivals make equivalent transitions to accelerated simulation. Cadence has been experimenting with similar GPU-accelerated workflows, but without the scale or direct equity alignment that Synopsys now has with Nvidia.

Influence without ownership

Synopsys is not a neutral supplier in the abstract sense, but it is the closest thing the semiconductor industry has to a common operational layer for design. Its tools are ubiquitous, sitting inside AMD, Intel, and hundreds of other fabless companies. Nvidia taking a stake here isn’t an acquisition, but it still introduces a non-trivial degree of influence.

The announcement insists that the $2 billion investment comes without obligations to purchase Nvidia hardware or divert roadmap priorities, and Synopsys leadership stressed that it will engage with AMD, Intel, and others just as before. Even with these assurances, the market will parse how the partnership evolves. If accelerated EDA features ship first on Nvidia hardware, AMD and Intel could find themselves depending on optimization paths tuned to their largest rival’s platform.

Similarly, if some design teams migrate portions of their flows to GPU-equipped compute clusters for the performance gain, they may need to use Nvidia systems unless alternative vendors can deliver similar acceleration. Cadence has begun to collaborate with Nvidia separately and offers its own AI-based tooling, which acts as a counterweight, but Synopsys controls a broad portfolio. Competitors are unlikely to walk away from it.

Internal data handling could also become a particular area of scrutiny, because EDA vendors are entrusted with proprietary designs. Synopsys and Nvidia will have to demonstrate that joint development does not give either side visibility into sensitive content, especially designs belonging to Nvidia’s GPU and CPU rivals. Synopsys already operates in an environment where strict separation is required, but if nothing else, the equity link changes perceptions.

Accelerated chip timelines?

The wider impact of this partnership depends on how much of the EDA workflow can be moved onto GPUs and how quickly Synopsys and Nvidia deliver production-grade tools. If simulation, verification, and layout generation are materially accelerated, chipmakers could reduce time-to-tape-out. The first commercial deployments are likely to appear in Synopsys’s cloud EDA platform, with on-premise integration following for customers that already run GPU infrastructure for HPC or AI.

This may also influence how aggressively companies prototype new architectures. A design team constrained by CPU-based simulation might only explore a narrow window of configurations. With higher throughput, it could test larger matrices of power-performance-area trade-offs and validate more advanced designs. Exploration on that scale is very expensive under conventional methods, but GPU-accelerated workflows could theoretically reduce costs significantly.

Again, Nvidia benefits from this directly. Faster convergence during design shortens internal roadmaps across its silicon. The company is already associated with rapid architectural cadence, and this gives it another internal advantage before considering external competition. But the broader industry stands to gain if Synopsys can generalize these acceleration paths for all customers. Design complexity is rising with each new node, faster than tools and methodologies are evolving, and many of the most challenging issues of the day relate to physical verification.

A careful balance is required

Nvidia’s $2 billion investment in Synopsys brings chip-design tooling and accelerated compute closer together at a moment when semiconductor development is straining under its own complexity.

The joint plan to execute full-scale EDA workloads on GPUs has the potential to shorten design cycles and expand the scope of what teams can simulate. It also positions Nvidia inside one of the most sensitive parts of the semiconductor stack while competitors evaluate how the arrangement will affect their own design flows.

Ultimately, the duo will have to strike a careful balance with their collaboration. If EDA becomes increasingly reliant on Nvidia hardware, competitors may feel that the design phase itself is moving too close to Nvidia. That could trigger efforts to diversify accelerated workflows, whether through AMD GPUs, dedicated NPUs, or domain-specific accelerators for simulation and verification. The industry has historically been comfortable with Synopsys as a unifying layer because it remained supplier-agnostic, so keeping that perception intact will matter.

Luke James is a freelance writer and journalist. Although his background is in law, he has a personal interest in all things tech, especially hardware and microelectronics, and anything regulatory.