How Much CPU Does the GeForce RTX 3080 Need?

Breaking the bottleneck

We've just posted the GeForce RTX 3080 Founders Edition review, which now reigns (at least until next week's launch of the GeForce RTX 3090) as the best graphics card for gaming and sits at the top of the GPU benchmarks hierarchy. While your graphics card is usually the biggest consideration when it comes to gaming performance, we also wanted to look at some of the best CPUs for gaming to see just how much performance you lose (or gain!) by running it with something other than the Core i9-9900K we use in our current GPU testbed.

For this article, we've pulled out several of the latest AMD and Intel processors: Core i9-10900K, Core i9-9900K, Core i3-10100, Ryzen 5 3600, and Ryzen 9 3900X. Then just for good measure, we've gone old school and dug up an 'ancient' Core i7-4770K Haswell chip. This is the oldest CPU I've currently got hanging around, and it should help answer the question of just how much CPU you need to make good use of the RTX 3080.

We'll be testing at 1080p, 1440p, and 4K at both medium and ultra presets. We said in the RTX 3080 review that it's a card primarily designed for 4K gaming, and that it starts to hit CPU bottlenecks at 1440p. That applies even more at 1080p with medium settings. Generally speaking, you don't buy a top-shelf GPU to play games at 1080p, though there are esports pros who do just that while using a 240Hz or even 360Hz monitor. Most games will struggle to hit 240 fps, and some even have fps caps of around 200 fps. But we're doing this for science!

Because we're using multiple platforms and were under time constraints, not every PC used identical hardware. In the case of the old Haswell PC, it couldn't use most of the modern hardware we install in other testbeds — the sole LGA1150 motherboard I have doesn't have an M.2 slot, and of course, it requires DDR3 memory. I also only have a single kit of DDR3-1600 CL9-9-9 memory, which is fine but certainly not the best possible RAM for such a PC. Cases, power supplies, SSD, and other items also differ, though most of these aren't critical as far as gaming performance is concerned.

The DDR4-capable PCs were all tested with the same DDR4-3600 CL16 memory kit, while our standard GPU benchmarks use DDR4-3200 CL16 memory. We've included both scores for the 9900K as yet another point of reference. Memory speed matters a bit, depending on the game, though it doesn't make a huge difference overall. In fact, at higher settings, the 'slower' DDR4-3200 kit actually came out slightly ahead, probably thanks to slightly better subtimings. Here are the full testbed specs:

| Platform | Z490 | Z390 | X570 | Z97 |
|---|---|---|---|---|
| CPU | Core i9-10900K, Core i3-10100 | Core i9-9900K | Ryzen 9 3900X, Ryzen 5 3600 | Core i7-4770K |
| Cooler | NZXT X63 Kraken | Corsair H150i RGB Pro | NZXT X63 Kraken | Be Quiet! Shadow Rock Slim |
| Motherboard | MSI MEG Z490 Ace | MSI MEG Z390 ACE | MSI MPG X570 Gaming Edge Wifi | Gigabyte Z97X-SOC Force |
| Memory | Corsair 2x16GB Platinum RGB DDR4-3600 CL16-18-18 | Corsair 2x16GB Platinum RGB DDR4-3600 CL16-18-18 | Corsair 2x16GB Platinum RGB DDR4-3600 CL16-18-18 | G.Skill 2x8GB Ripjaws X DDR3-1600 CL9-9-9 |
| Memory (alt. kit) | - | Corsair 2x16GB Vengeance LPX DDR4-3200 CL16-18-18 | - | - |
| Storage | Samsung 970 Evo 1TB | Adata XPG SX8200 Pro 2TB | Corsair MP600 2TB | Samsung 850 Evo 2TB |
| Power Supply | NZXT E850 | Seasonic Focus PX-850 | Thermaltake Grand 1000W | PC Power & Cooling 850W |
| Case | NZXT H510i | Phanteks Enthoo Pro M | XPG Battlecruiser | Some Ultra POS |

All of the systems are running at stock settings but with XMP memory profiles activated. However, the old Z97 board applies an all-core clock of 4.3GHz, even at stock settings. It's a bit of a quirky motherboard, and the BIOS was finicky enough that I just left it alone. Basically, even though it says 4770K, treat these results as being representative of a stock-clocked Core i7-4790K. If you actually have a stock-clocked 4770K, or something like an older i7-2600K, your performance will be even lower than what we're showing here.

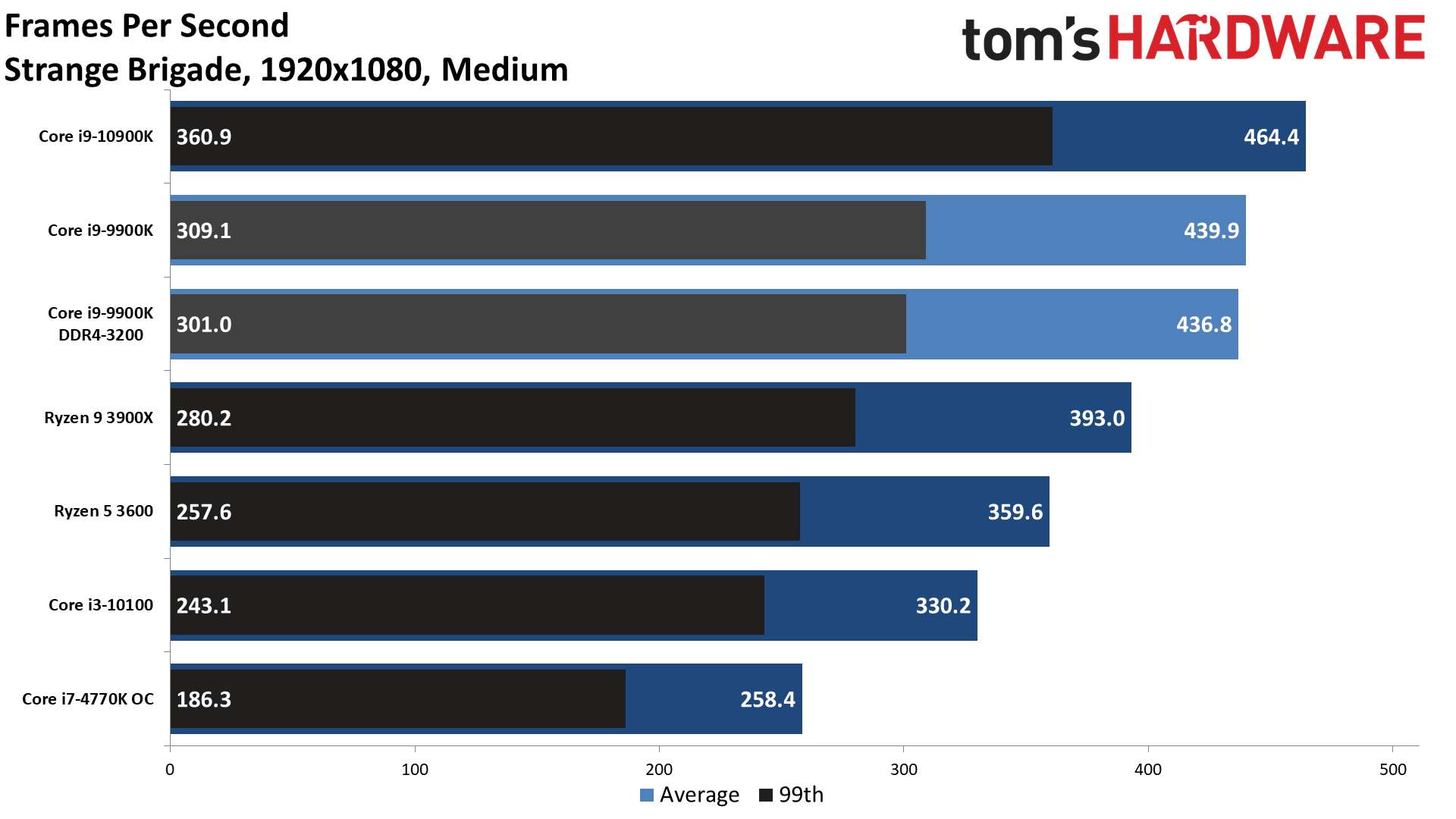

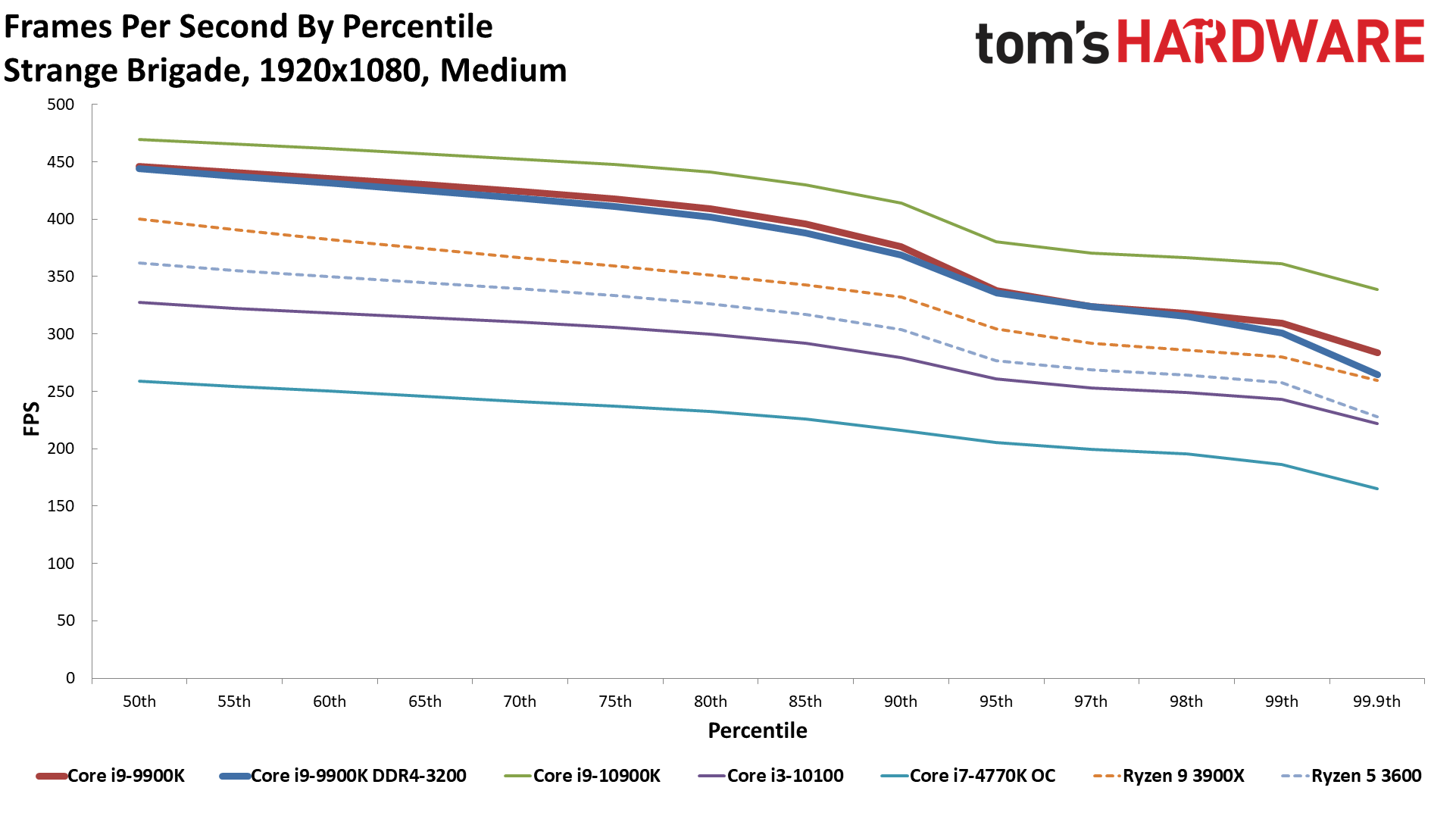

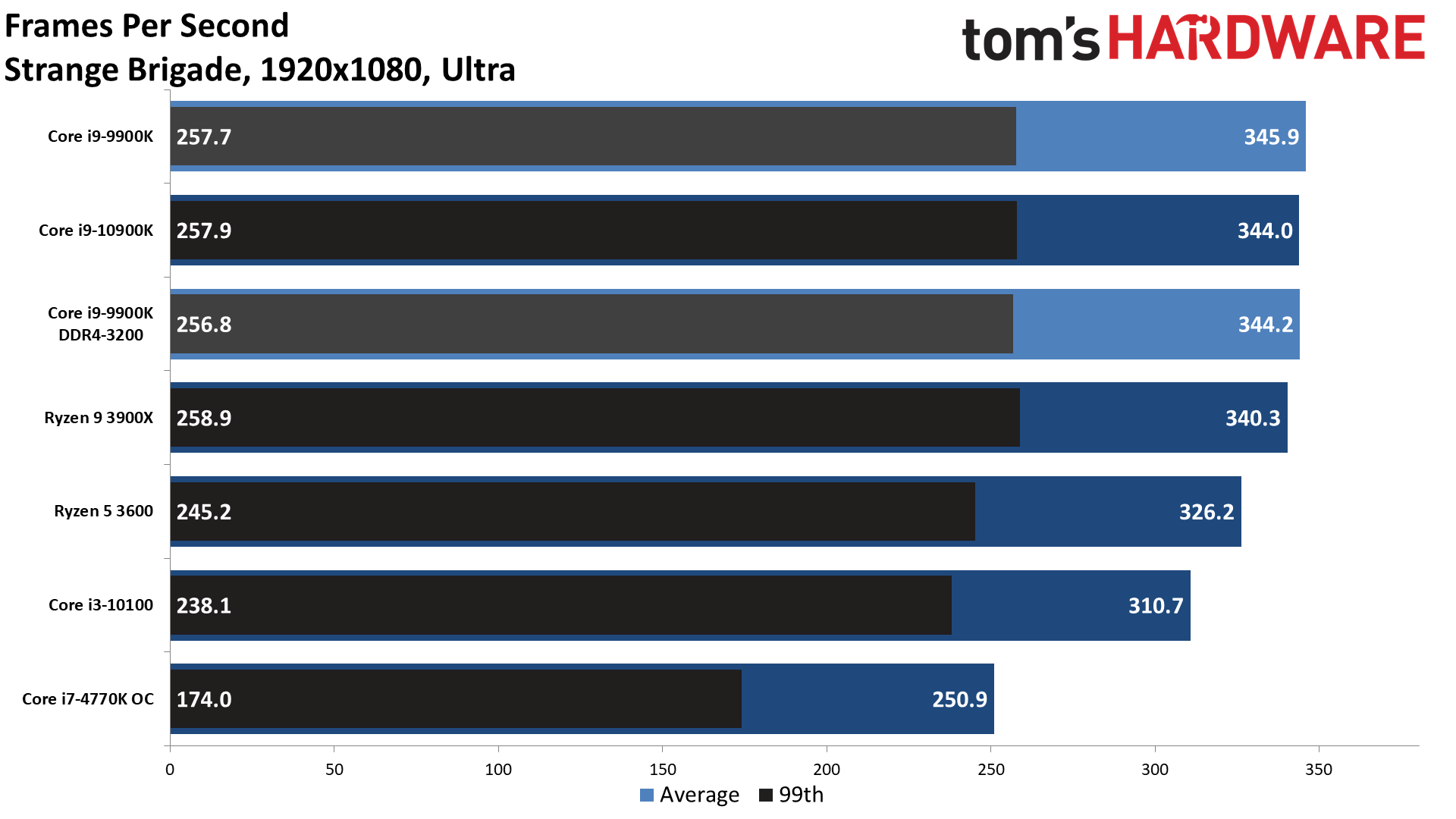

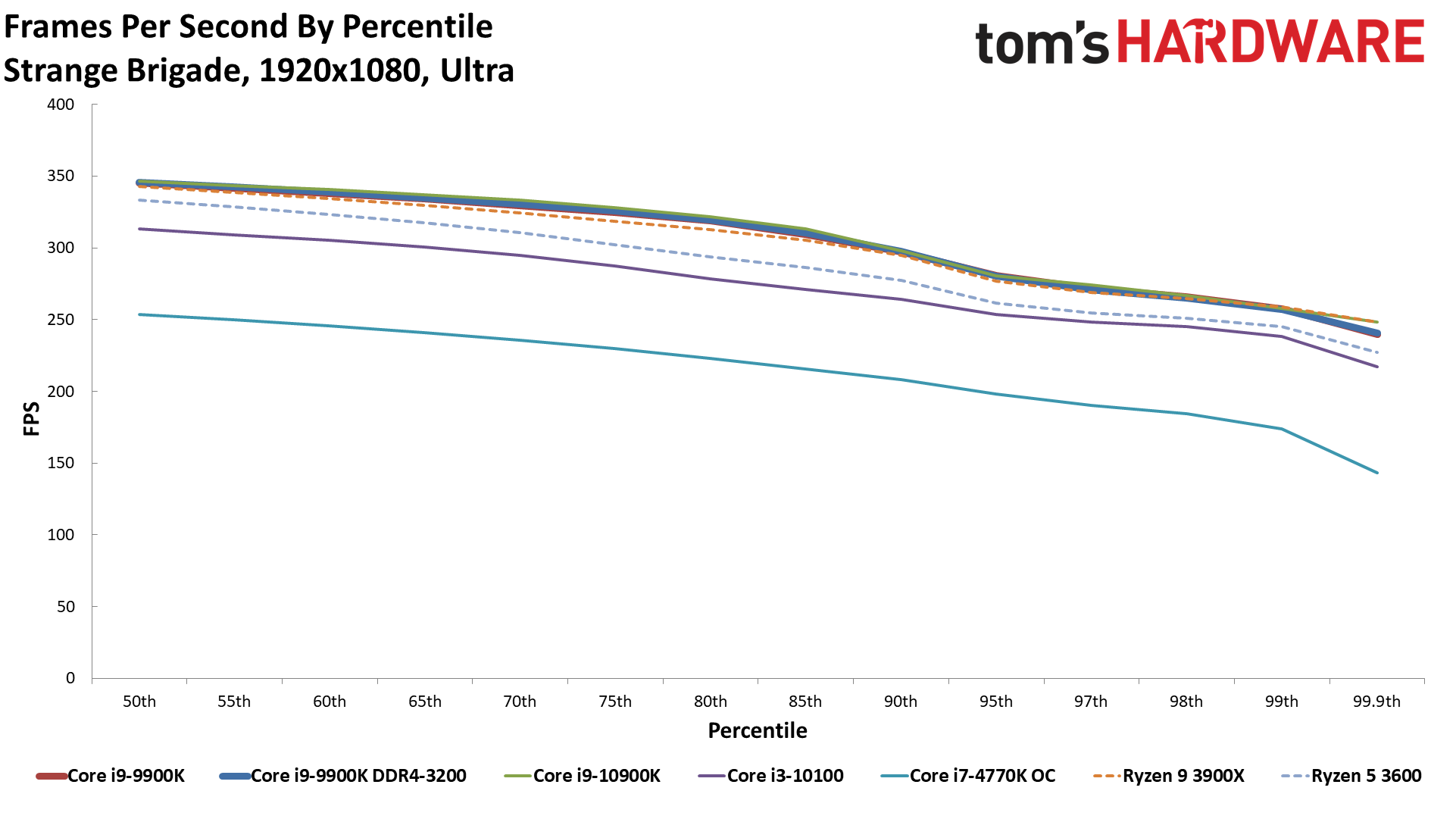

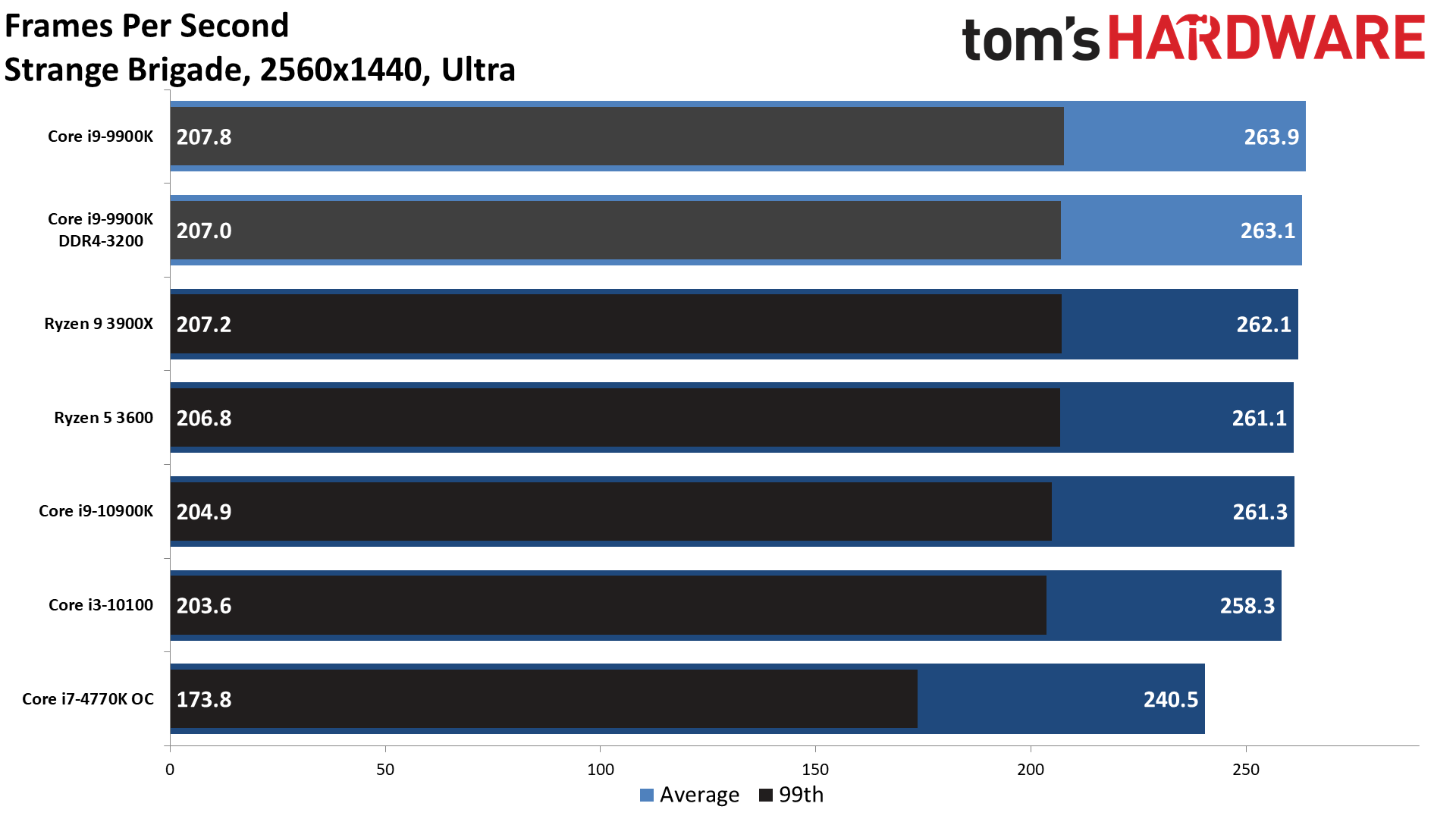

We're going to start out with 1080p testing, which is where the choice of CPU is going to make the biggest difference. None of the games in our test suite are esports titles where extreme fps matters, though we do have at least one game that can break into the >360 fps range (Strange Brigade). We've long maintained that there are greatly diminishing returns going beyond 144Hz or even 120Hz displays, and a big part of that is the fact that many games simply don't scale to the higher framerates necessary to make the most of higher refresh rates. Okay, let's hit the charts:
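To put the diminishing-returns argument in concrete terms, here's a minimal Python sketch that turns refresh rates into per-frame time budgets. It's plain arithmetic (1,000 ms divided by the refresh rate) and doesn't use any of our benchmark data; the list of refresh rates is just a set of common monitor tiers we picked for illustration.

```python
# Per-frame time budget at common refresh rates (1000 ms / Hz).
def frame_time_ms(refresh_hz: float) -> float:
    """Milliseconds available to render one frame at the given refresh rate."""
    return 1000.0 / refresh_hz


# Illustrative monitor tiers, not a list of displays we tested.
rates = [60, 120, 144, 240, 360]
for slower, faster in zip(rates, rates[1:]):
    saved = frame_time_ms(slower) - frame_time_ms(faster)
    print(f"{slower:>3} Hz -> {faster:>3} Hz: "
          f"{frame_time_ms(slower):5.2f} ms -> {frame_time_ms(faster):5.2f} ms "
          f"(saves {saved:.2f} ms per frame)")
```

Going from 60Hz to 120Hz shaves about 8.3 ms off every frame, while going from 240Hz to 360Hz saves only about 1.4 ms, and the CPU and GPU both have to deliver every one of those shorter frames to make use of it.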


GeForce RTX 3080 FE: 1080p CPU Benchmarks

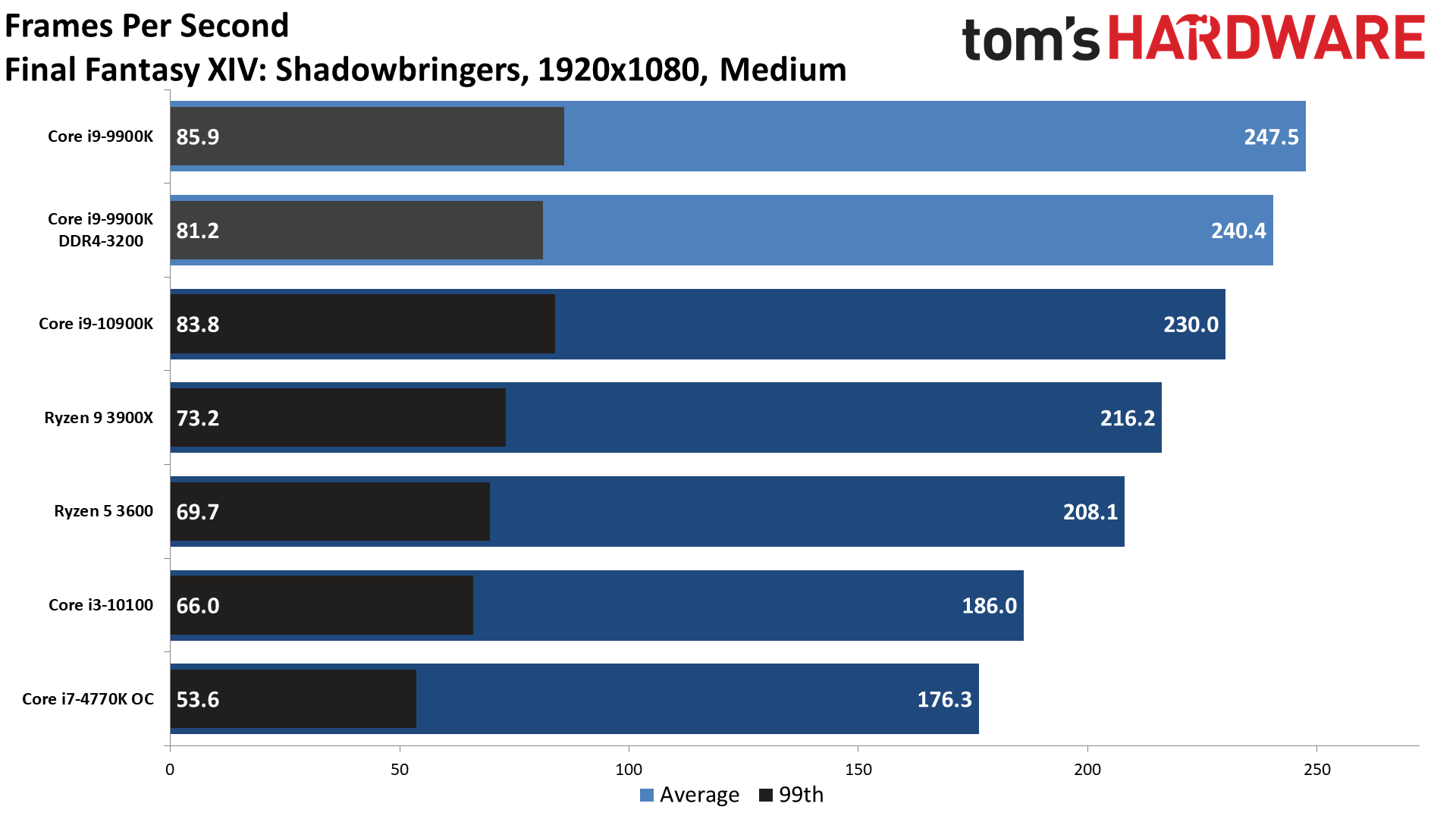

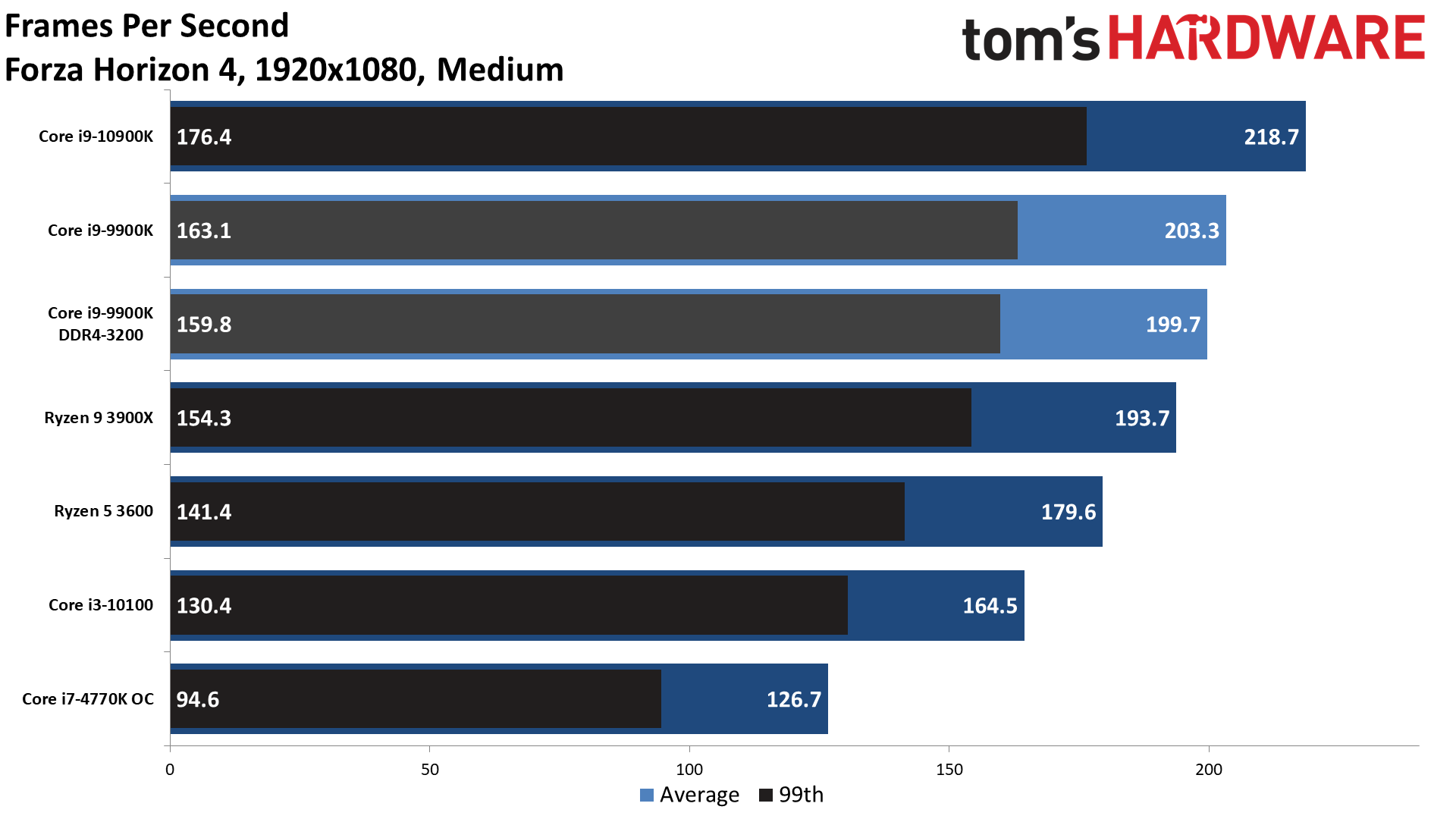

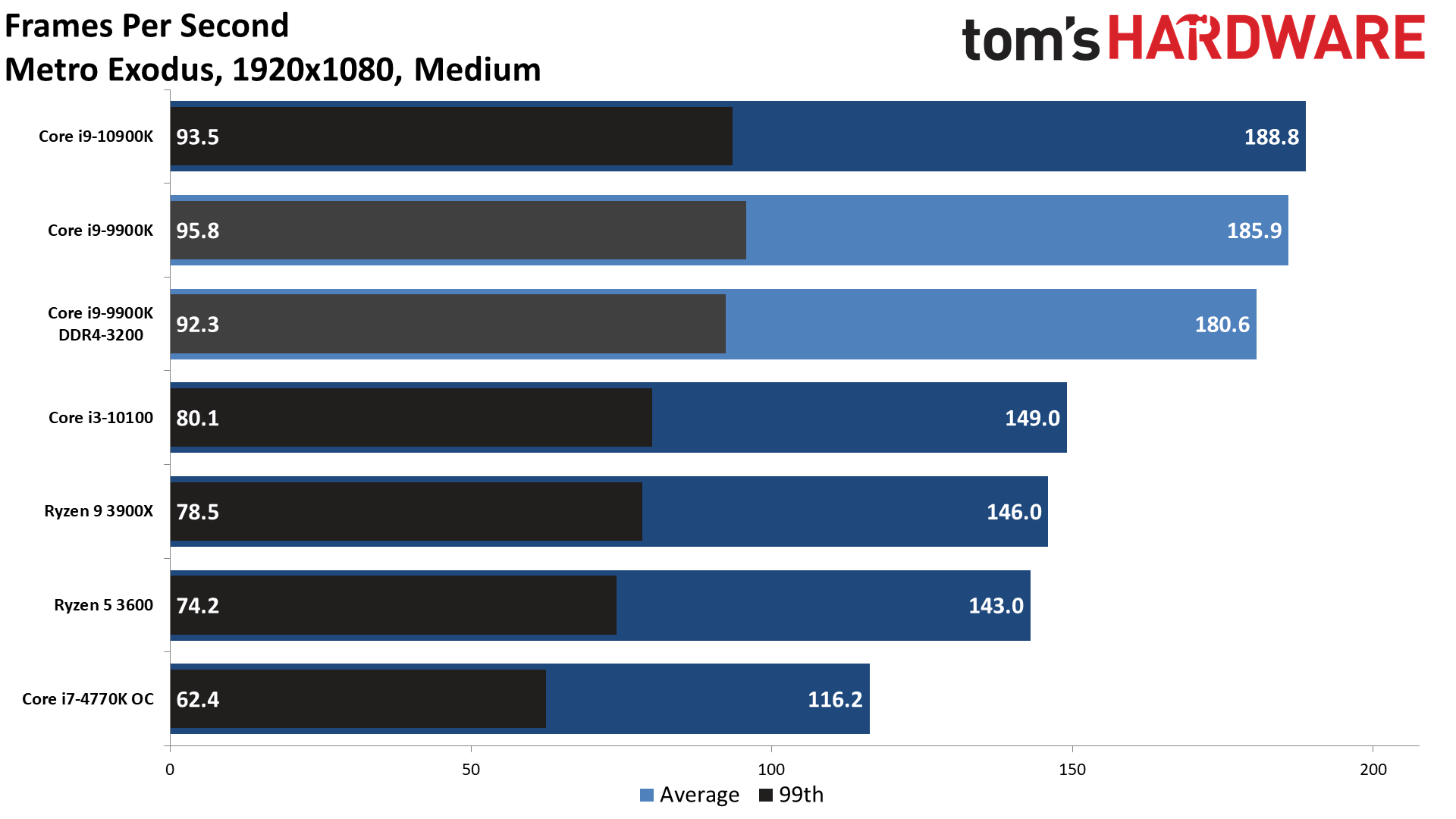

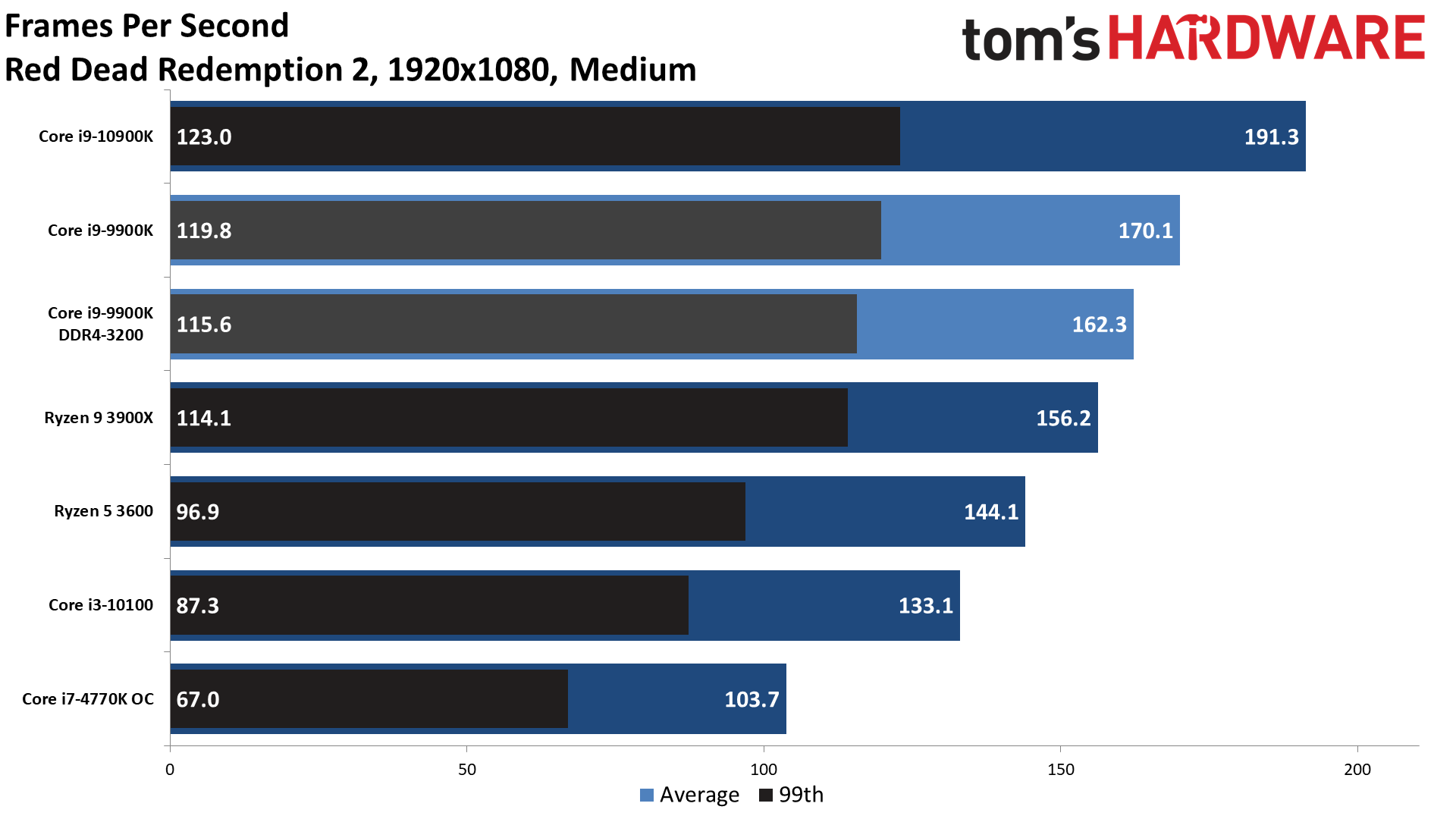

1080p Medium

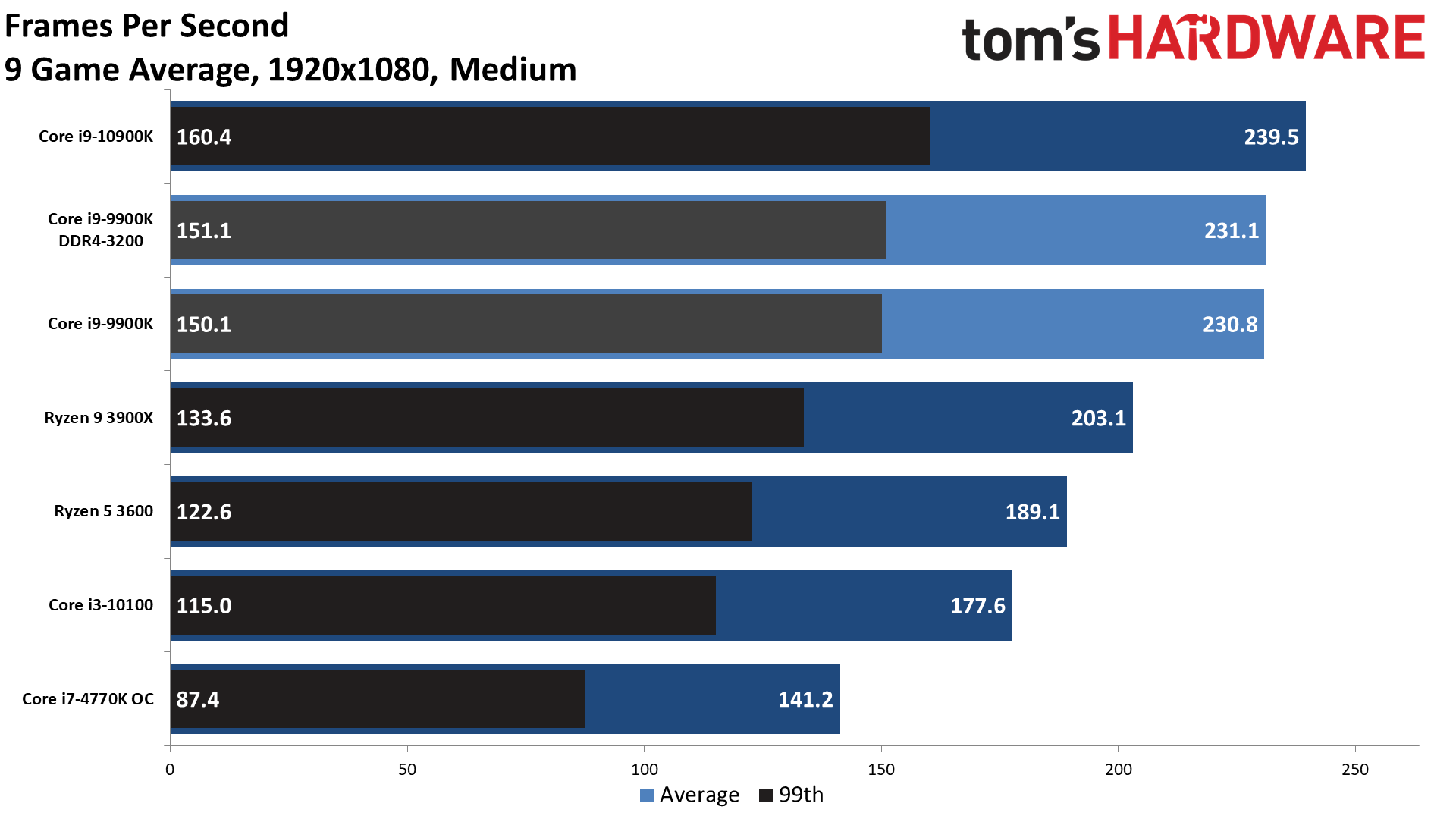

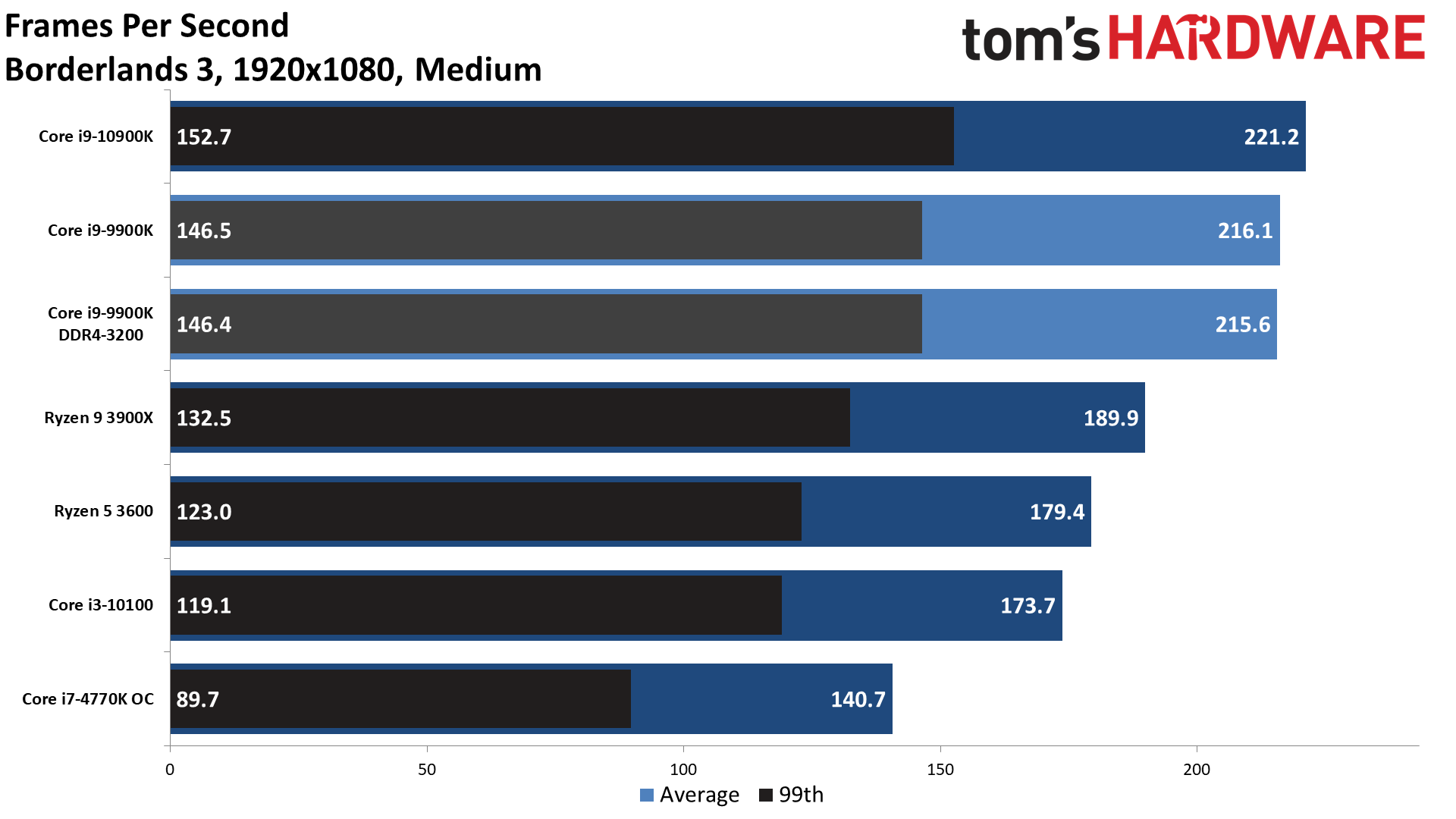

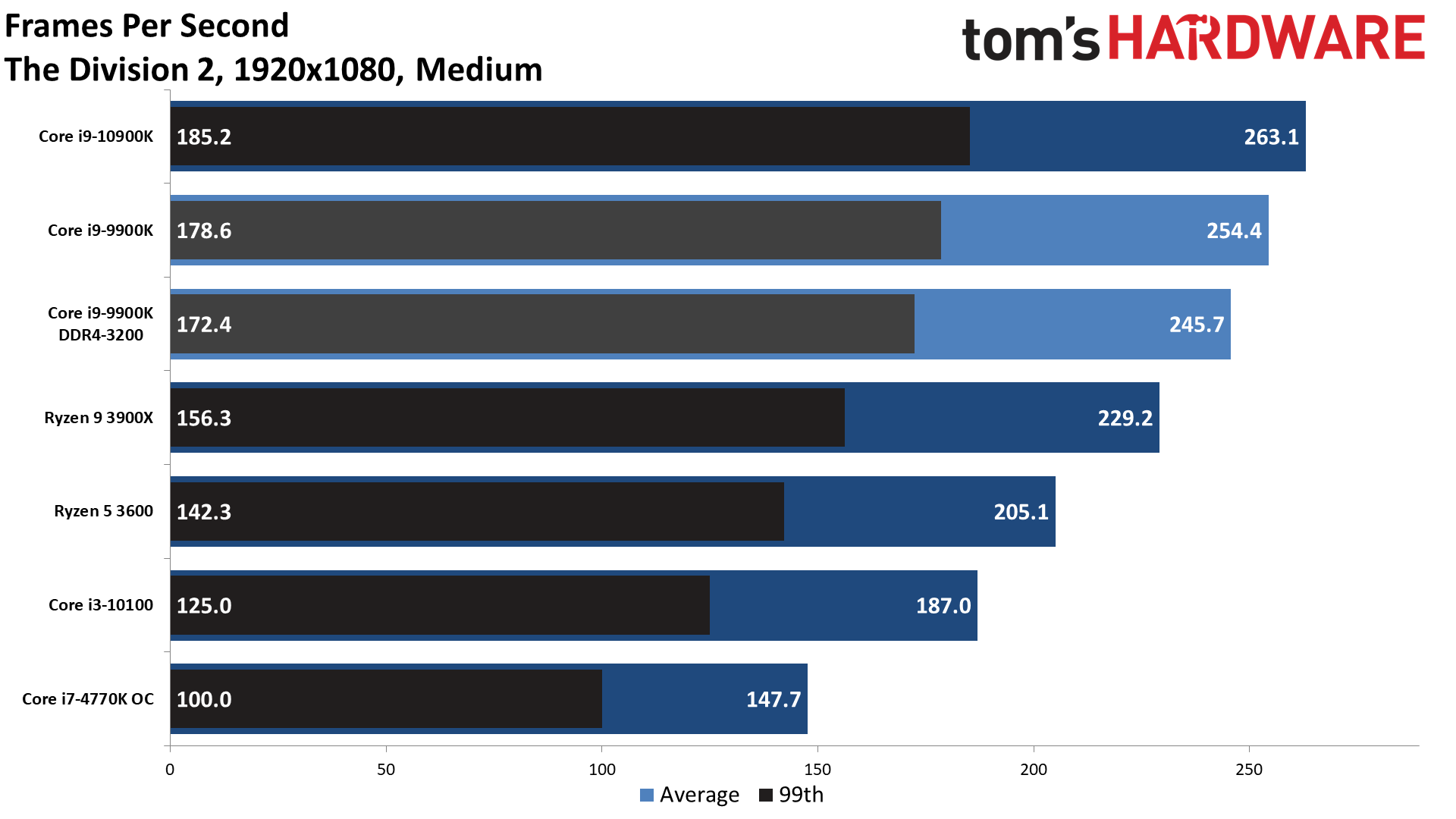

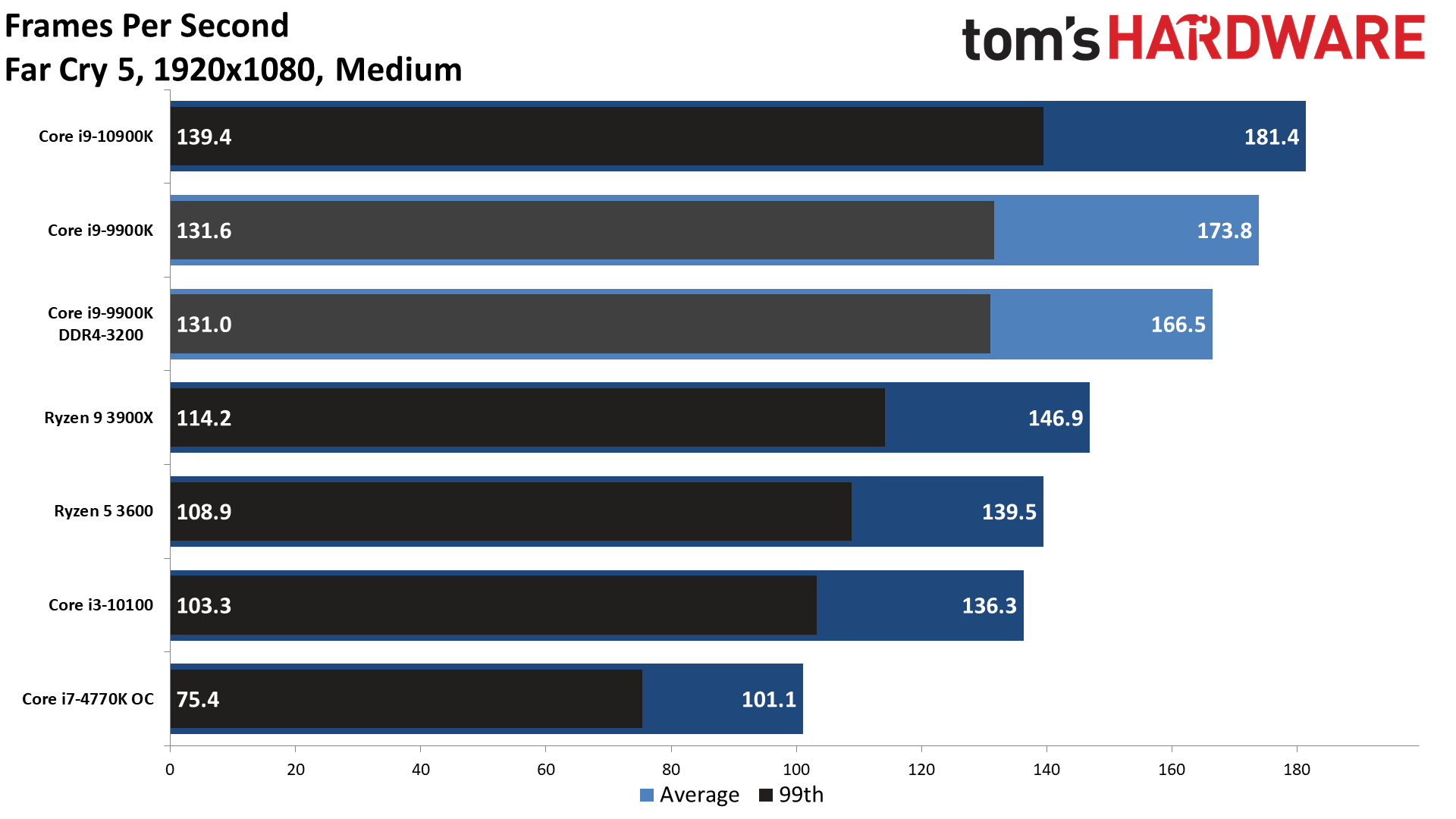

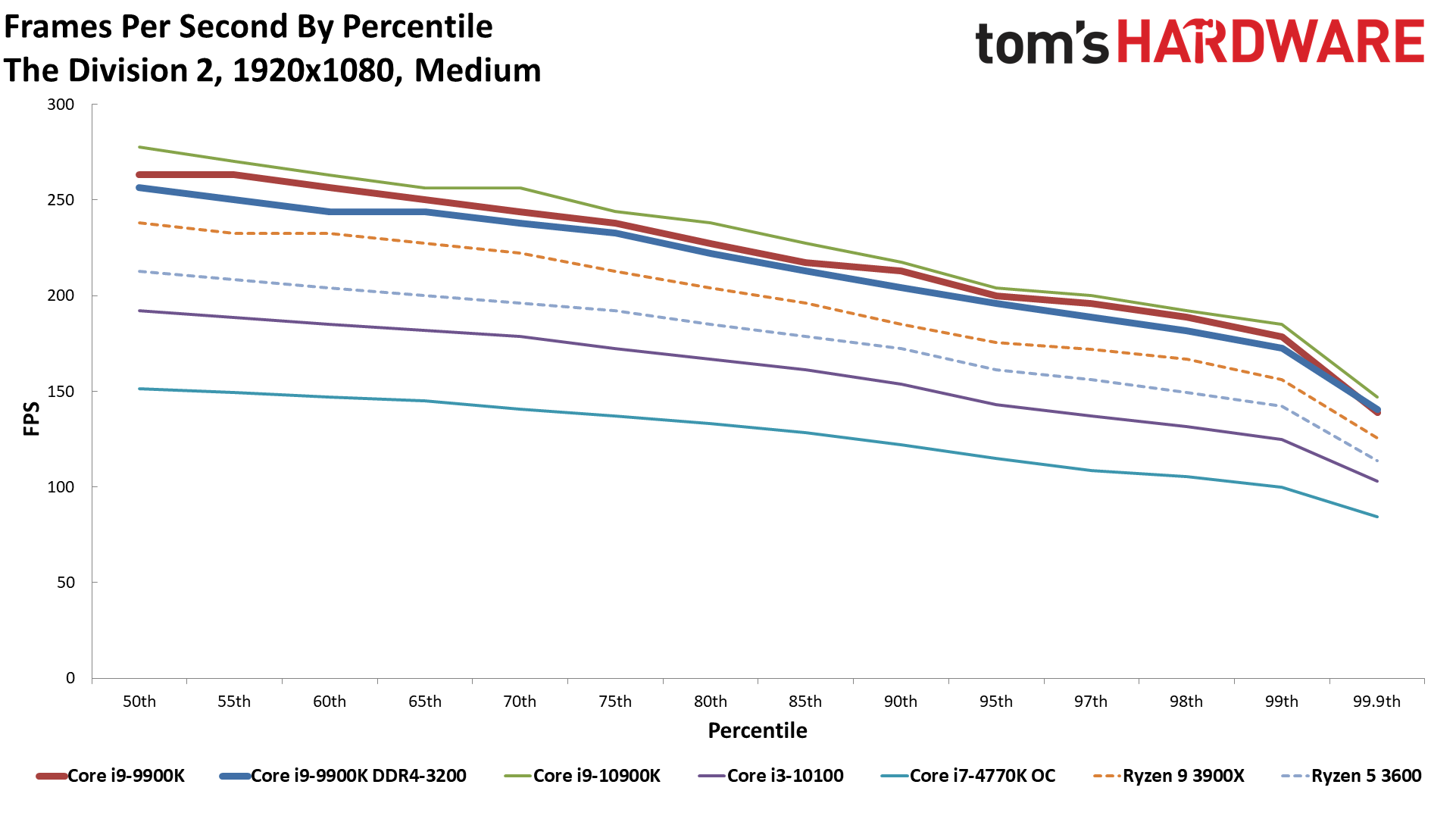

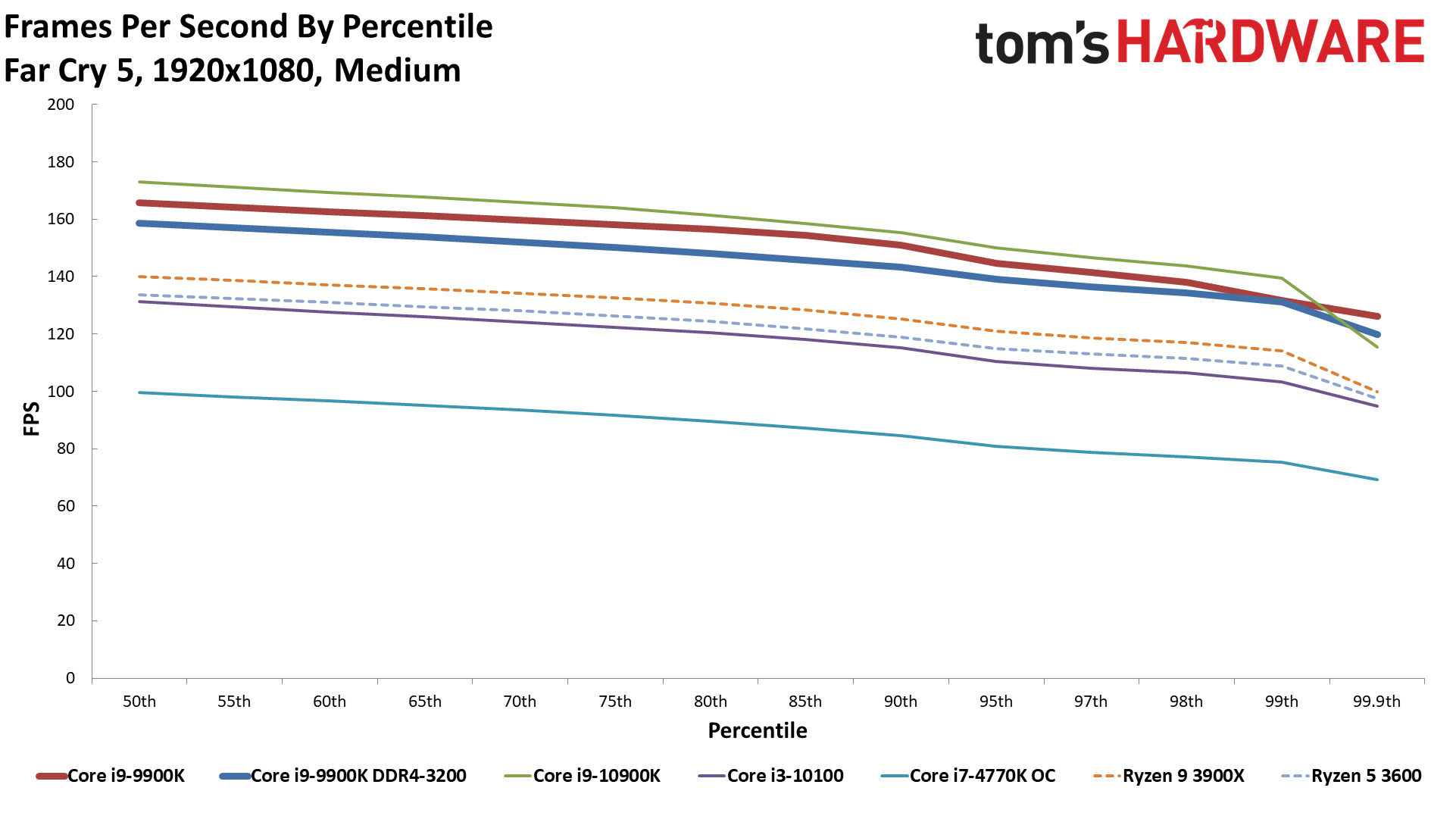

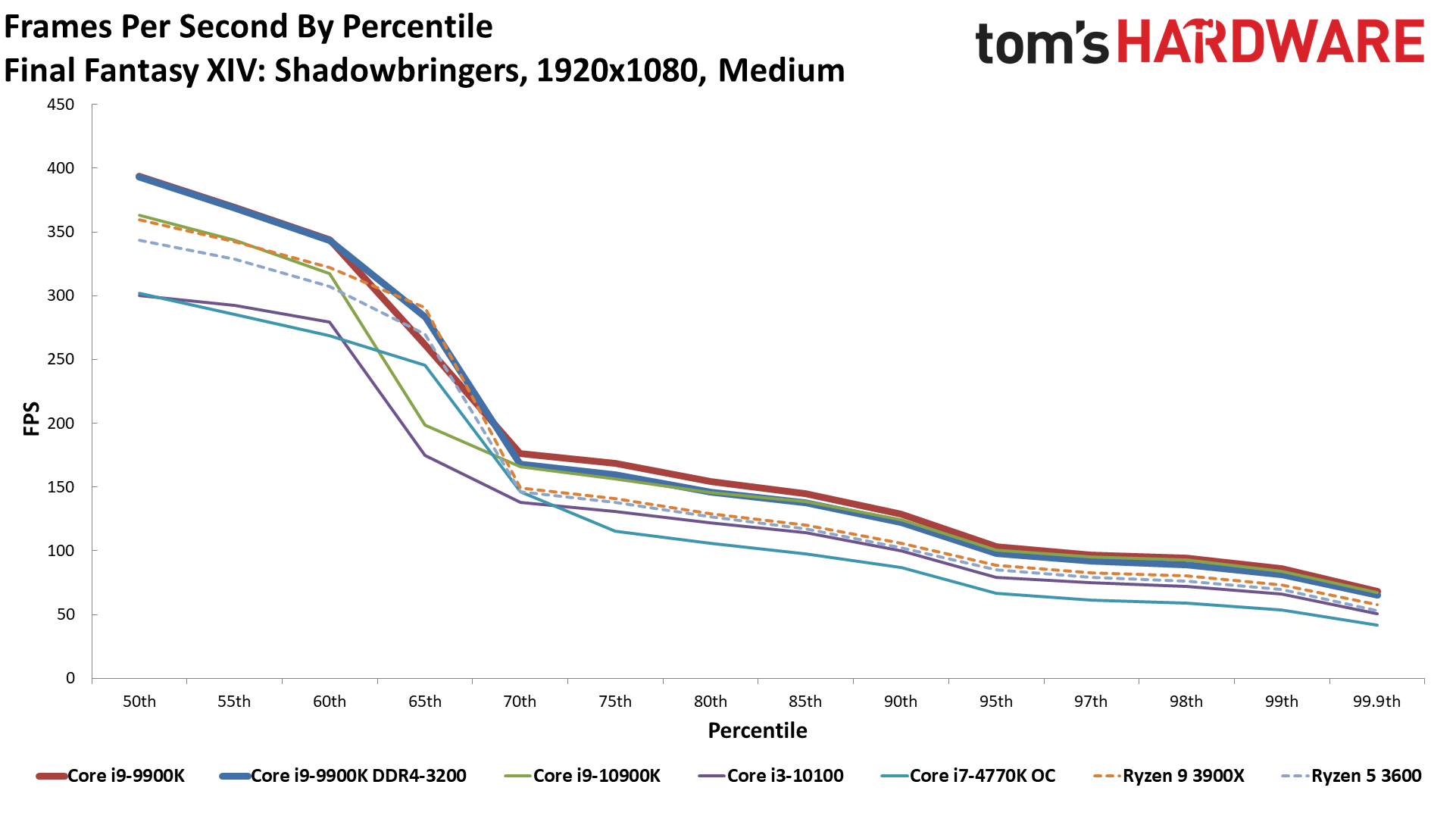

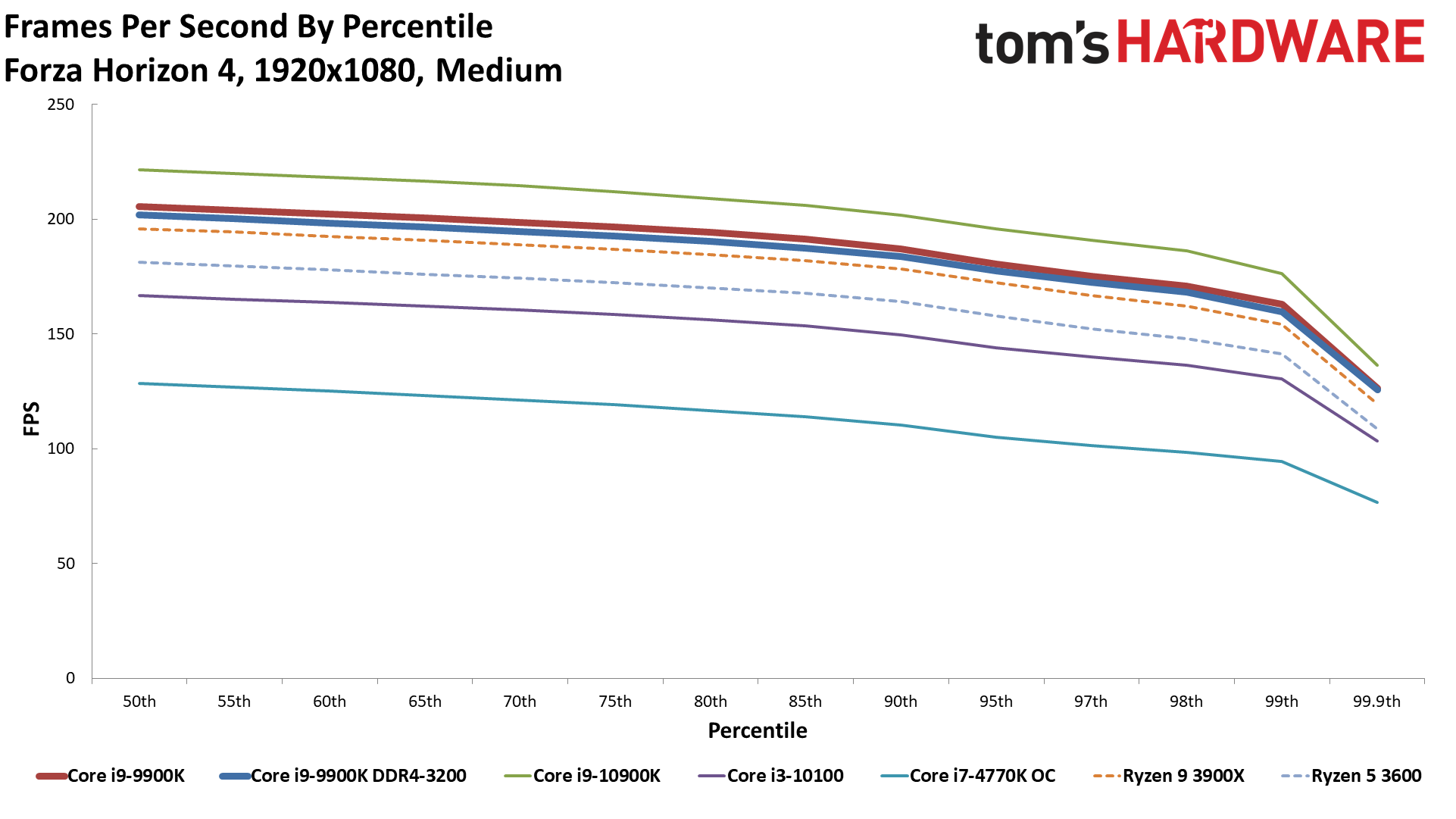

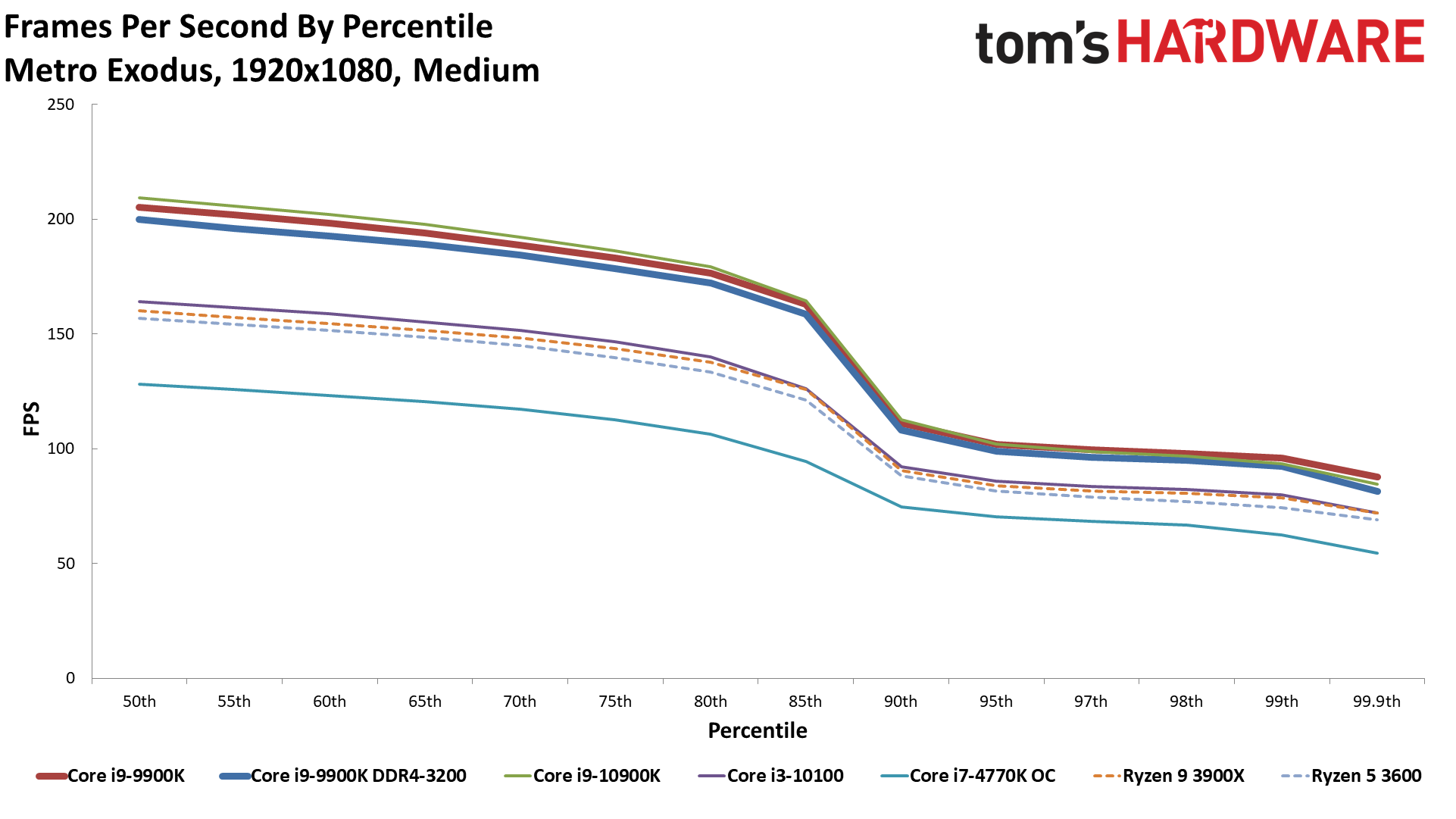

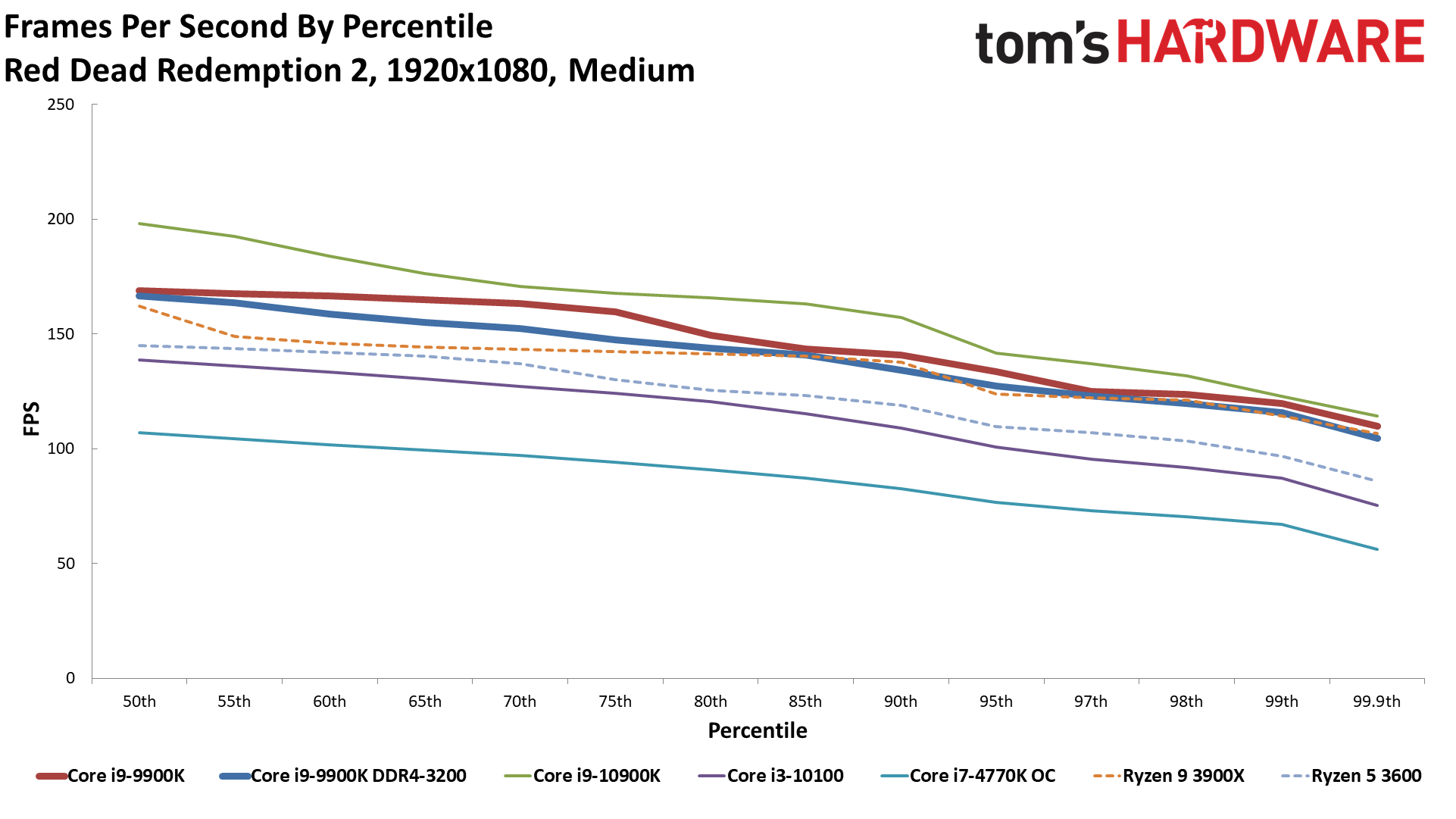

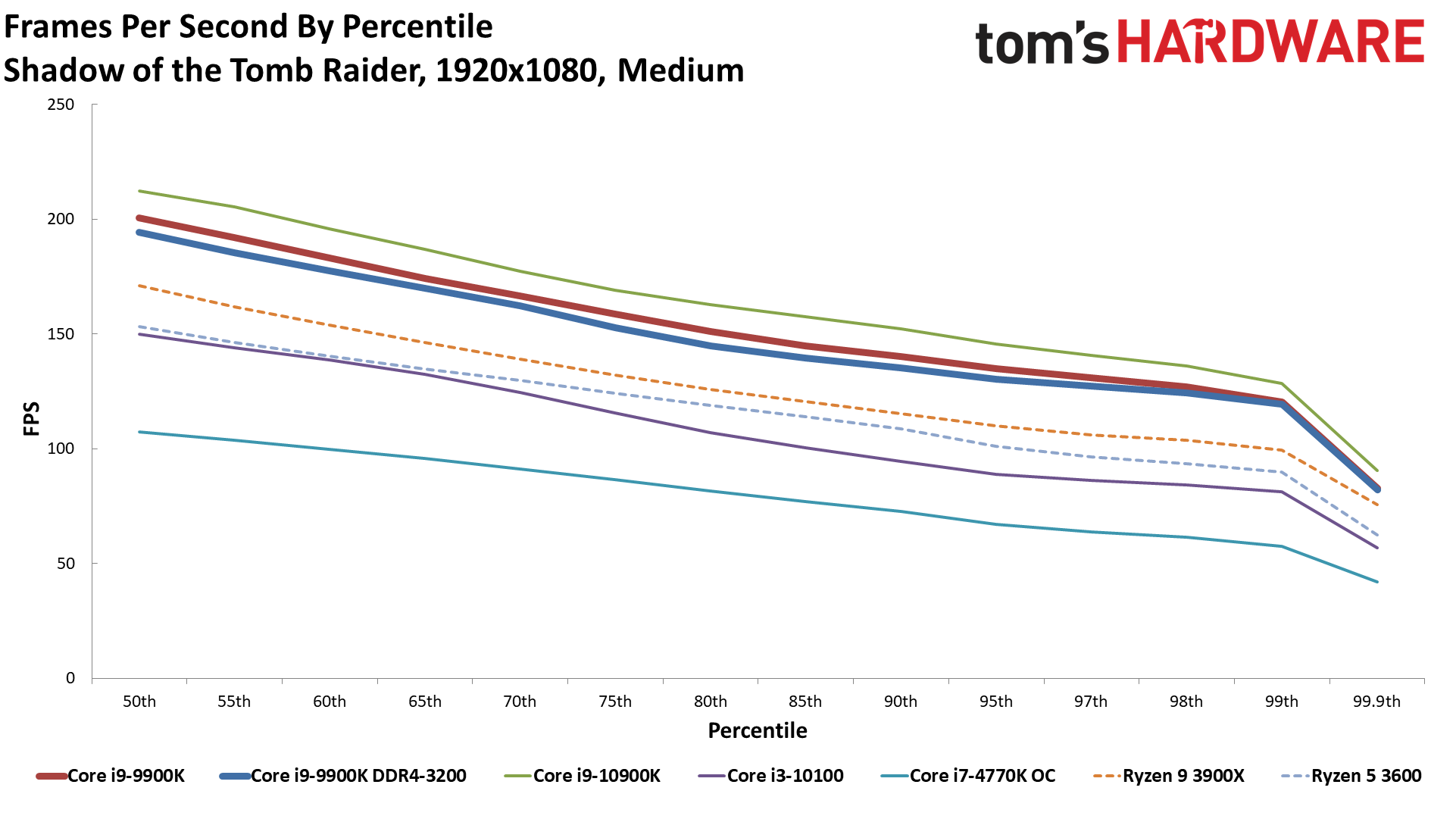

1080p Medium Percentiles

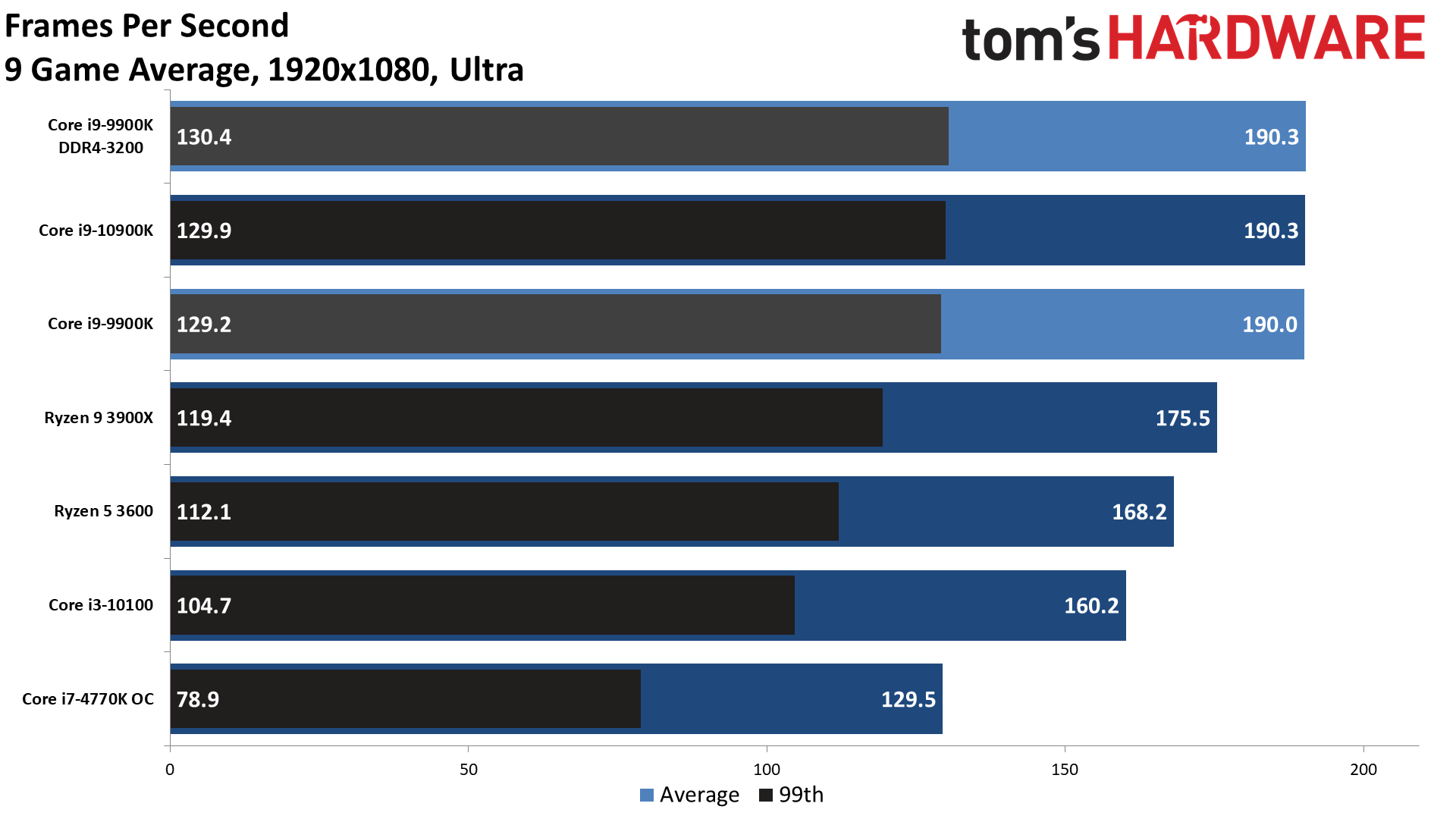

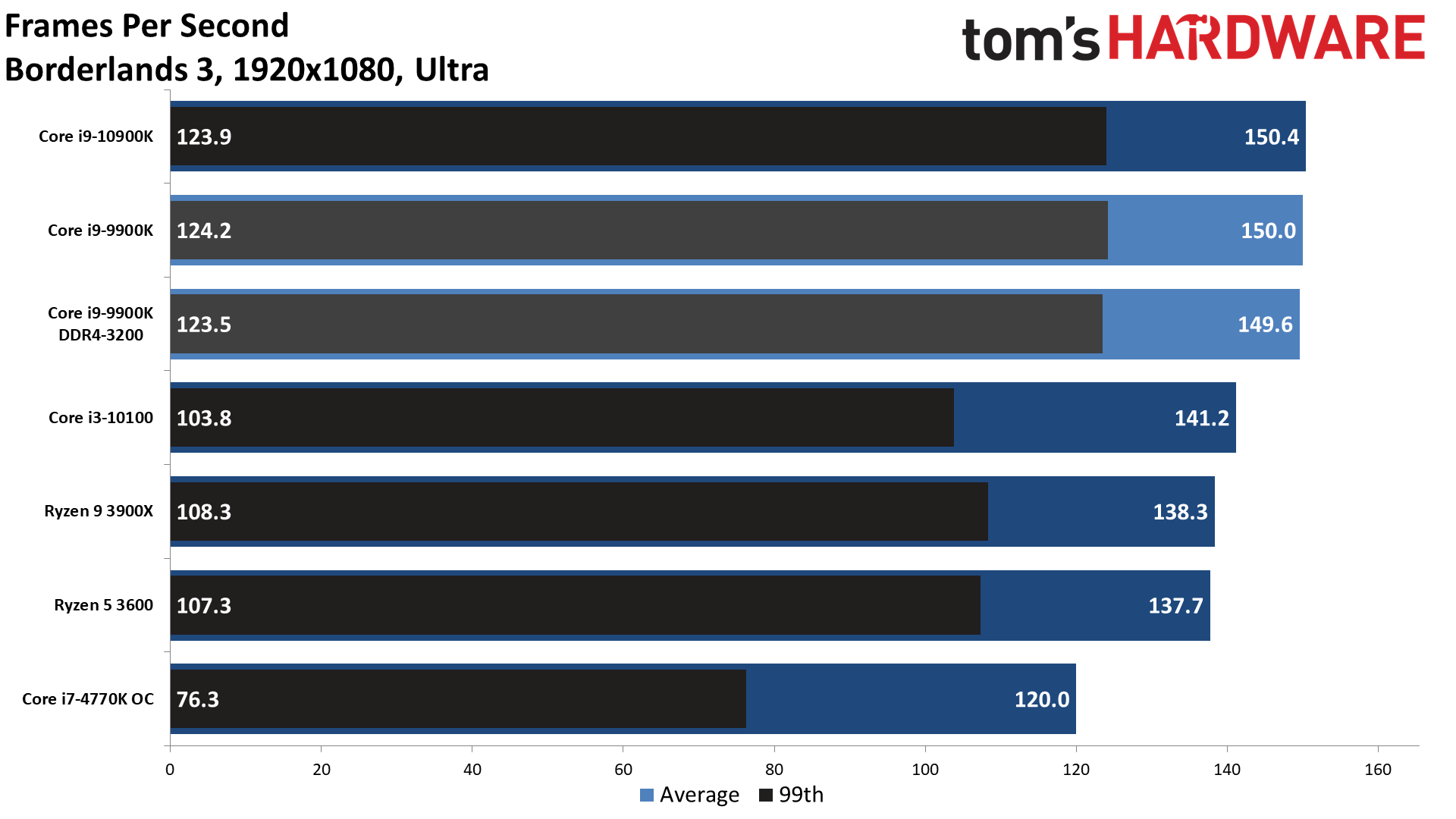

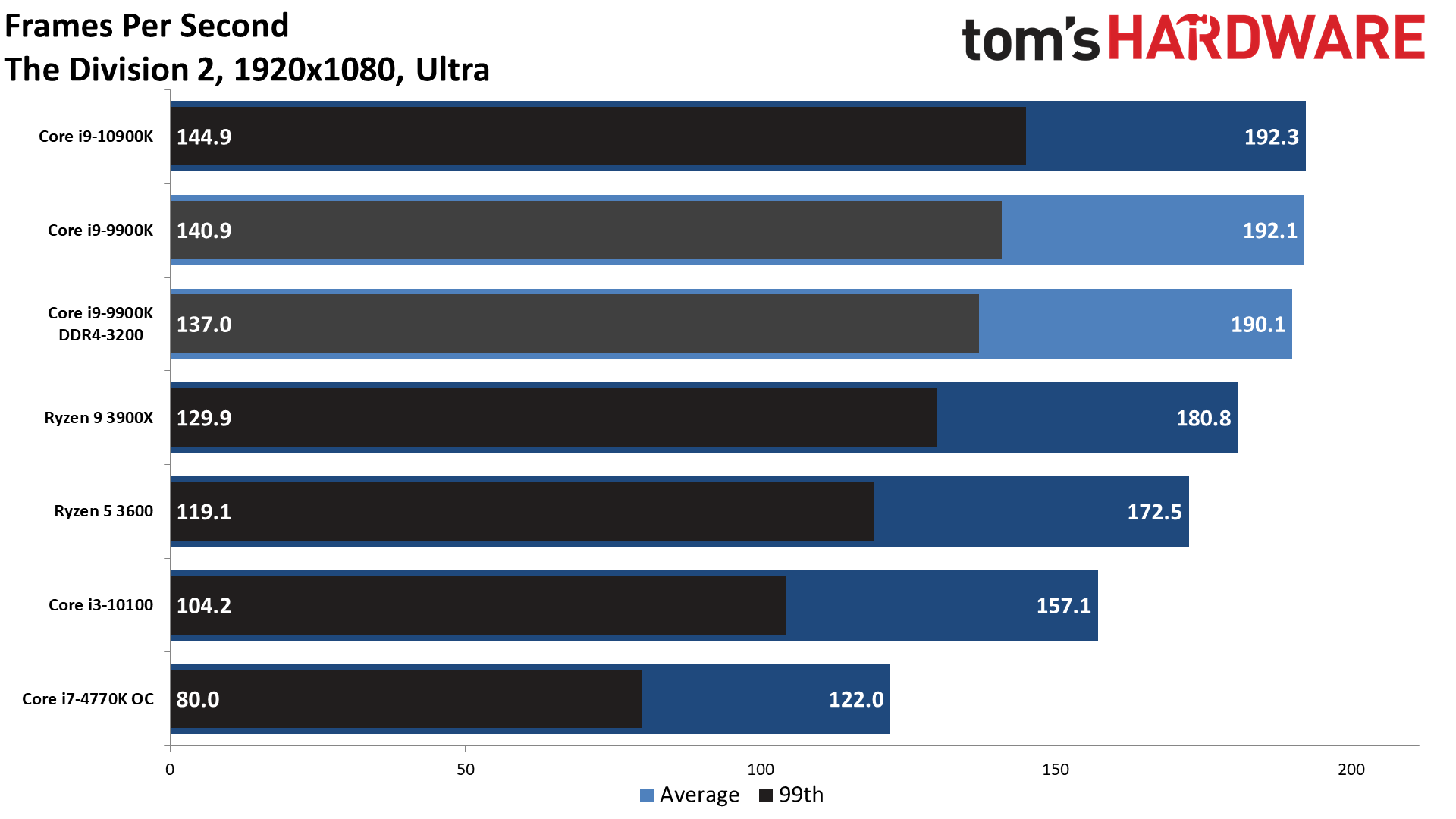

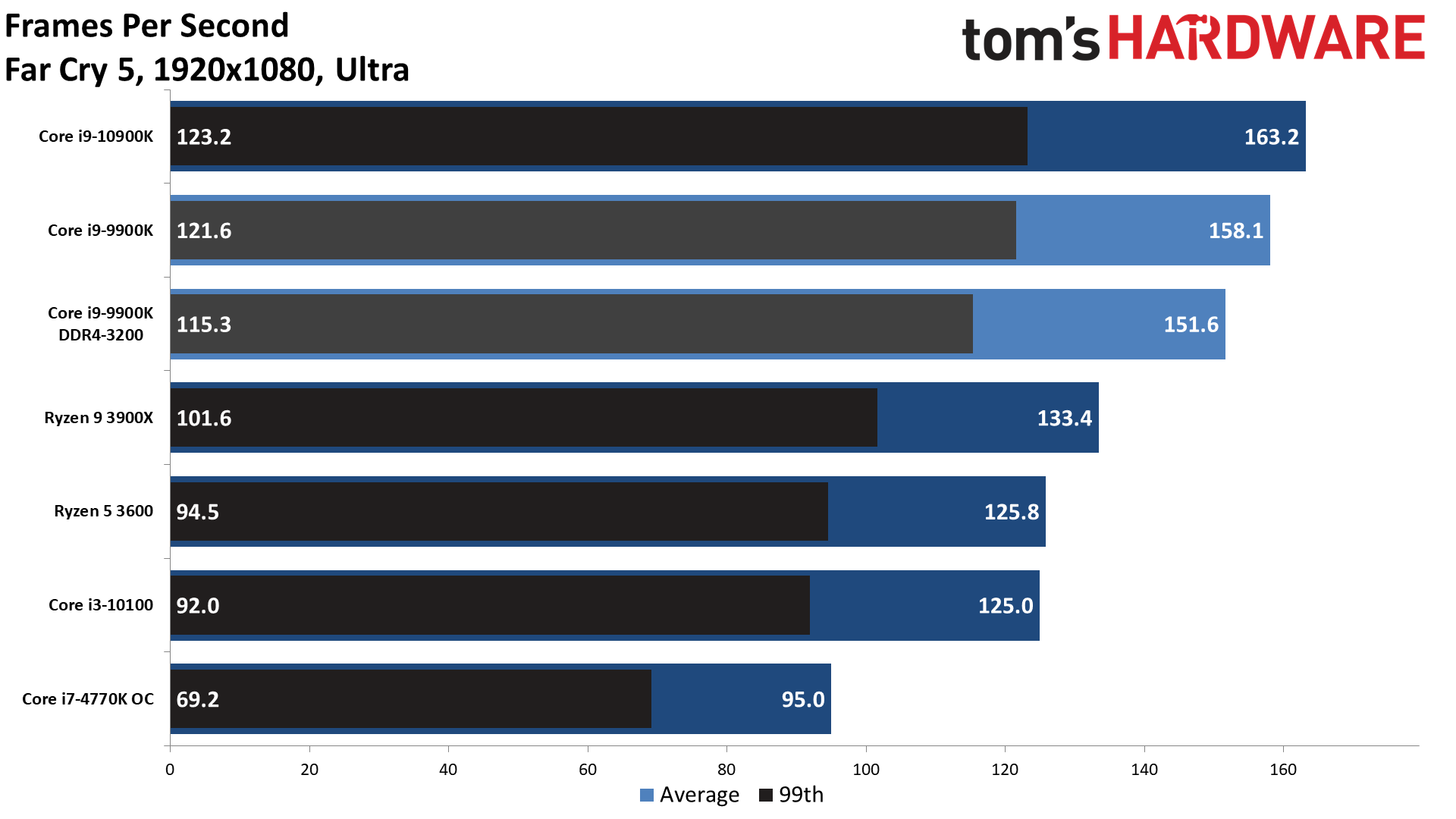

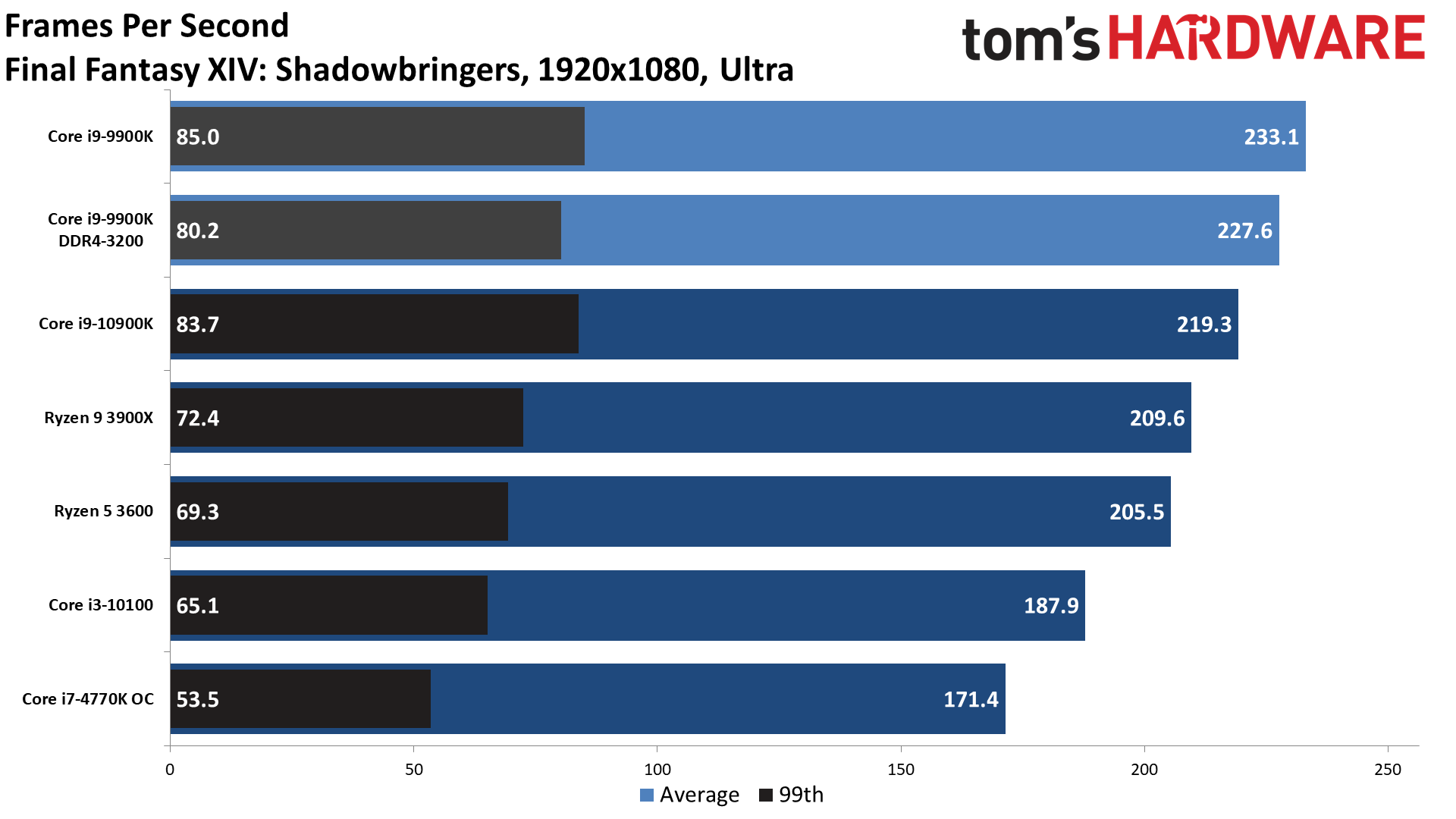

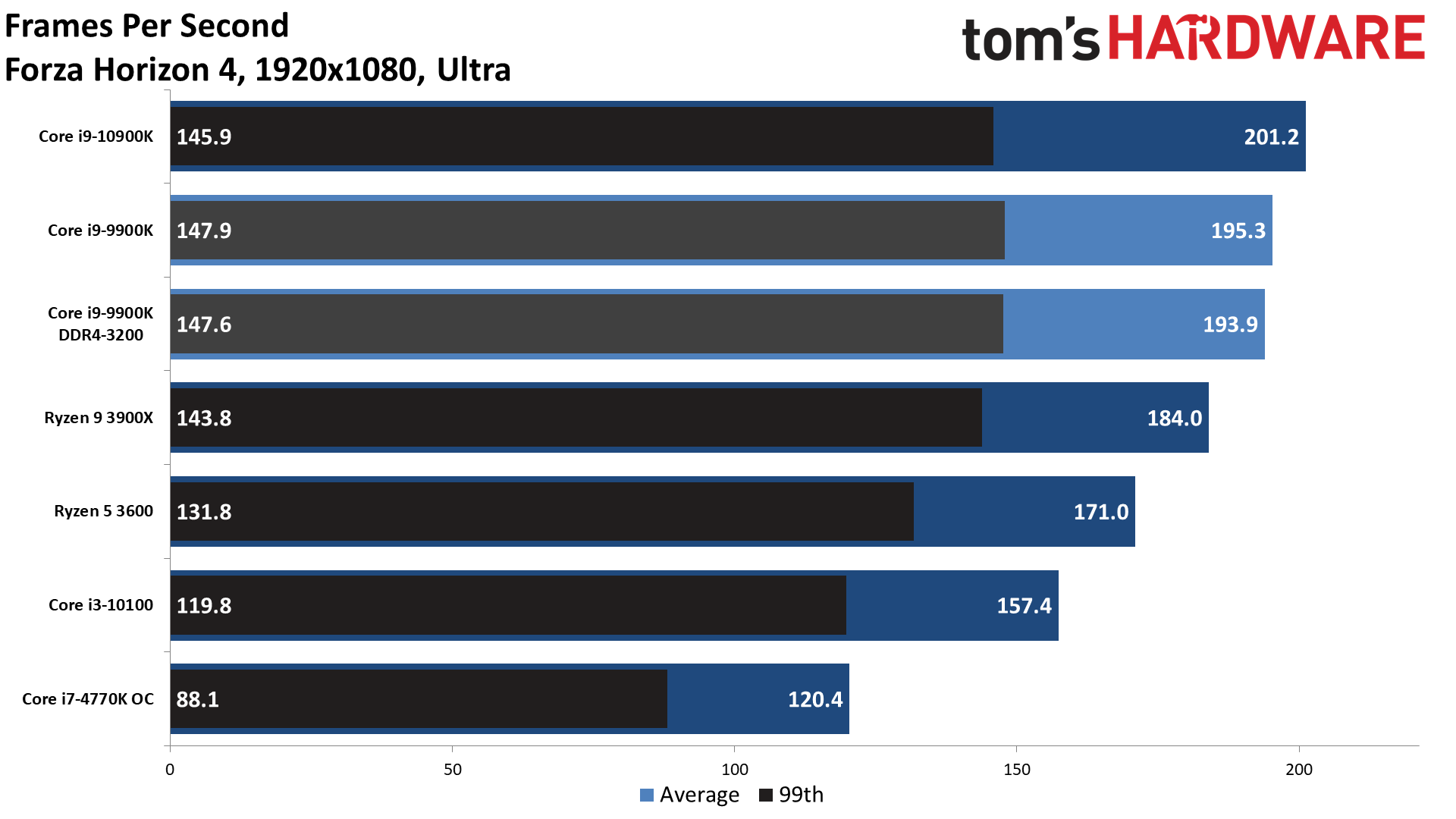

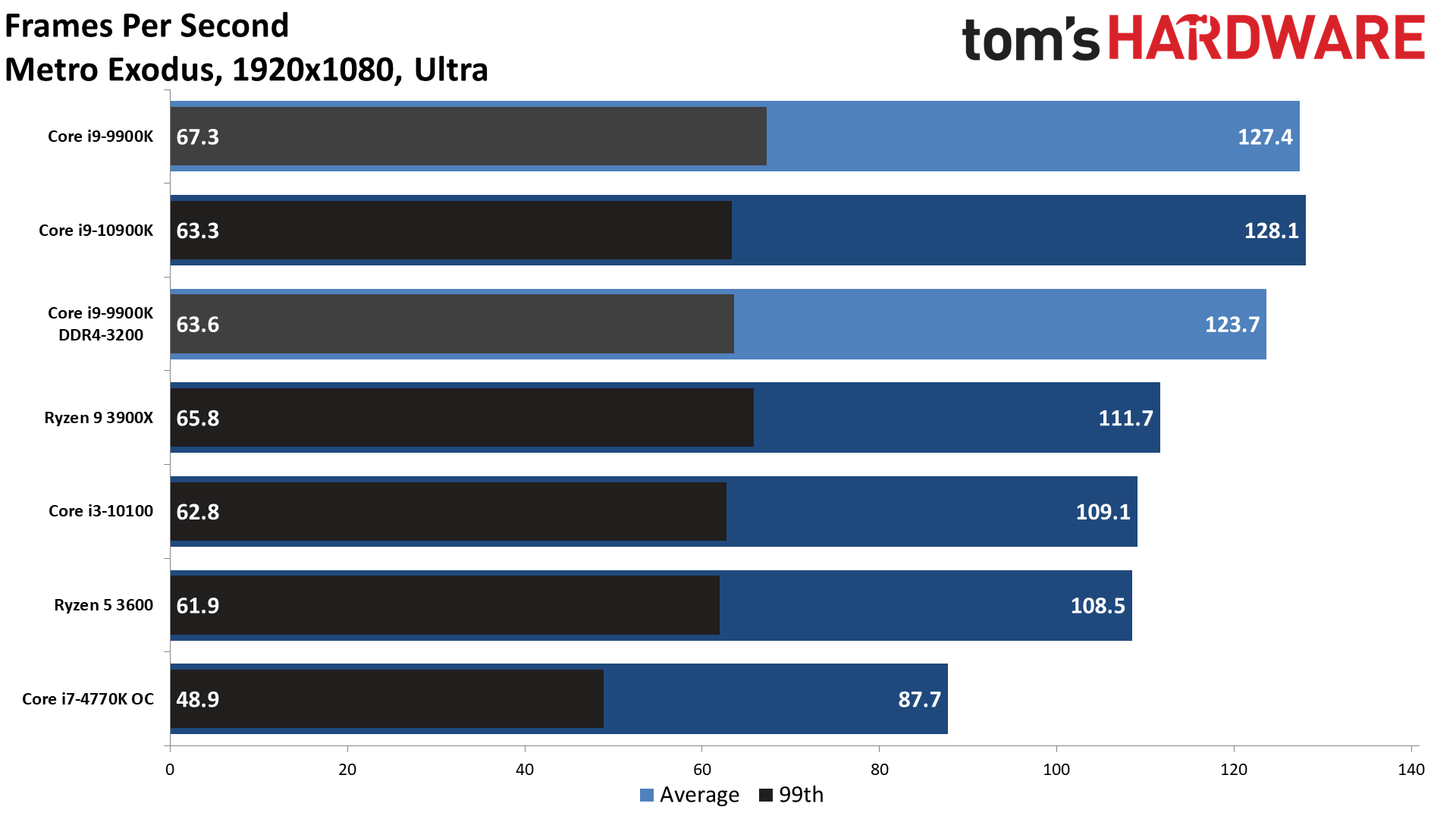

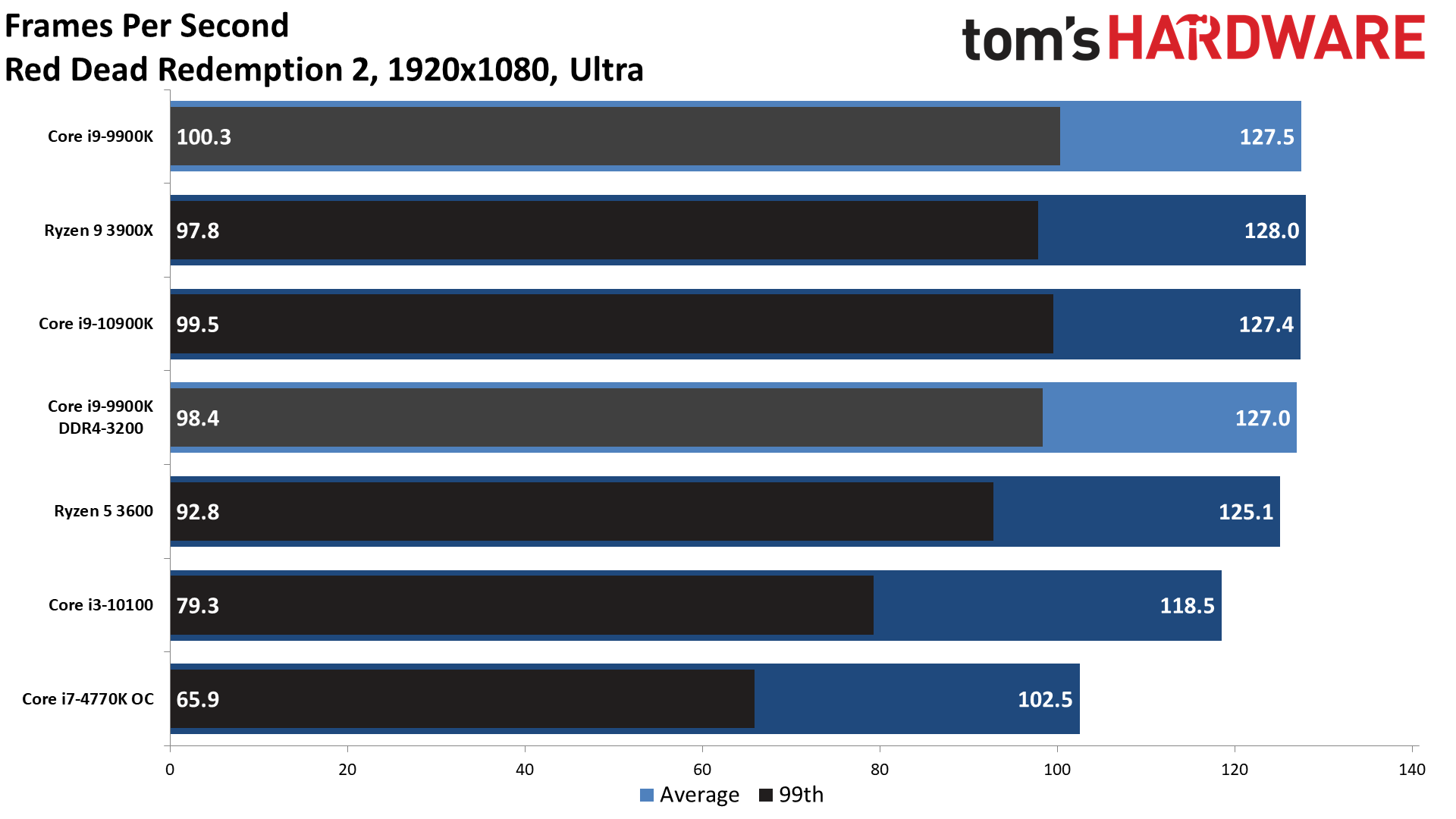

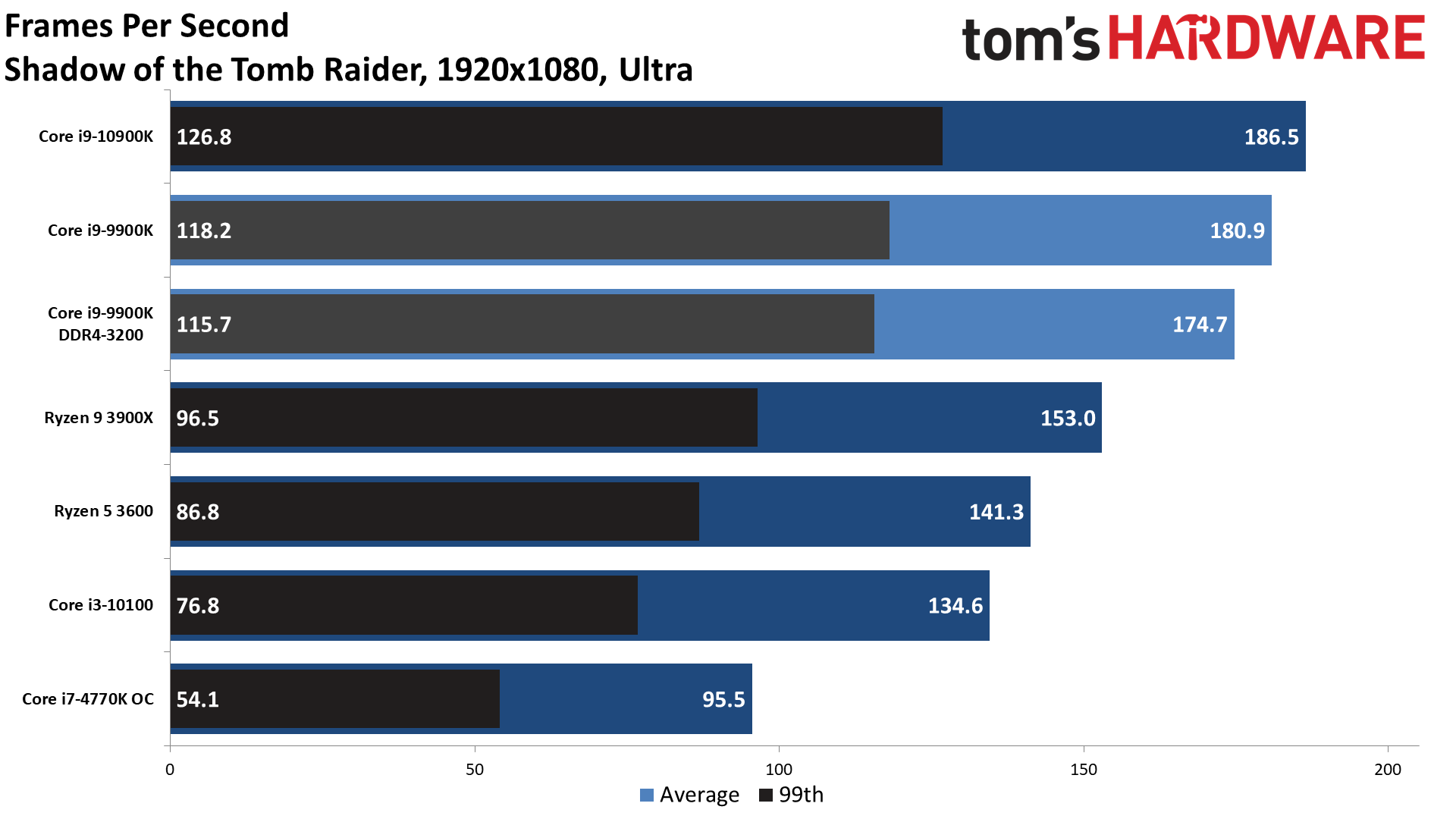

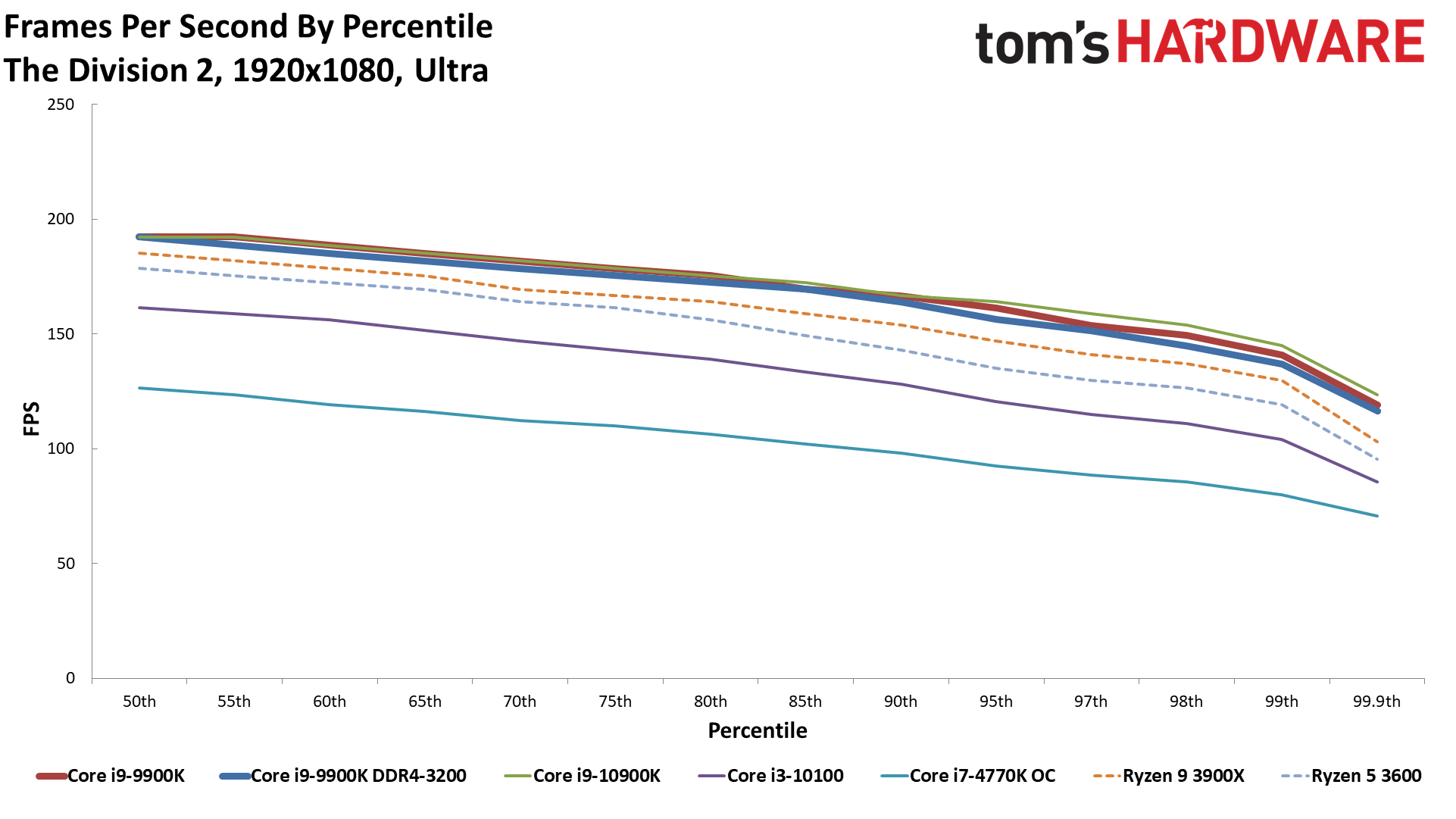

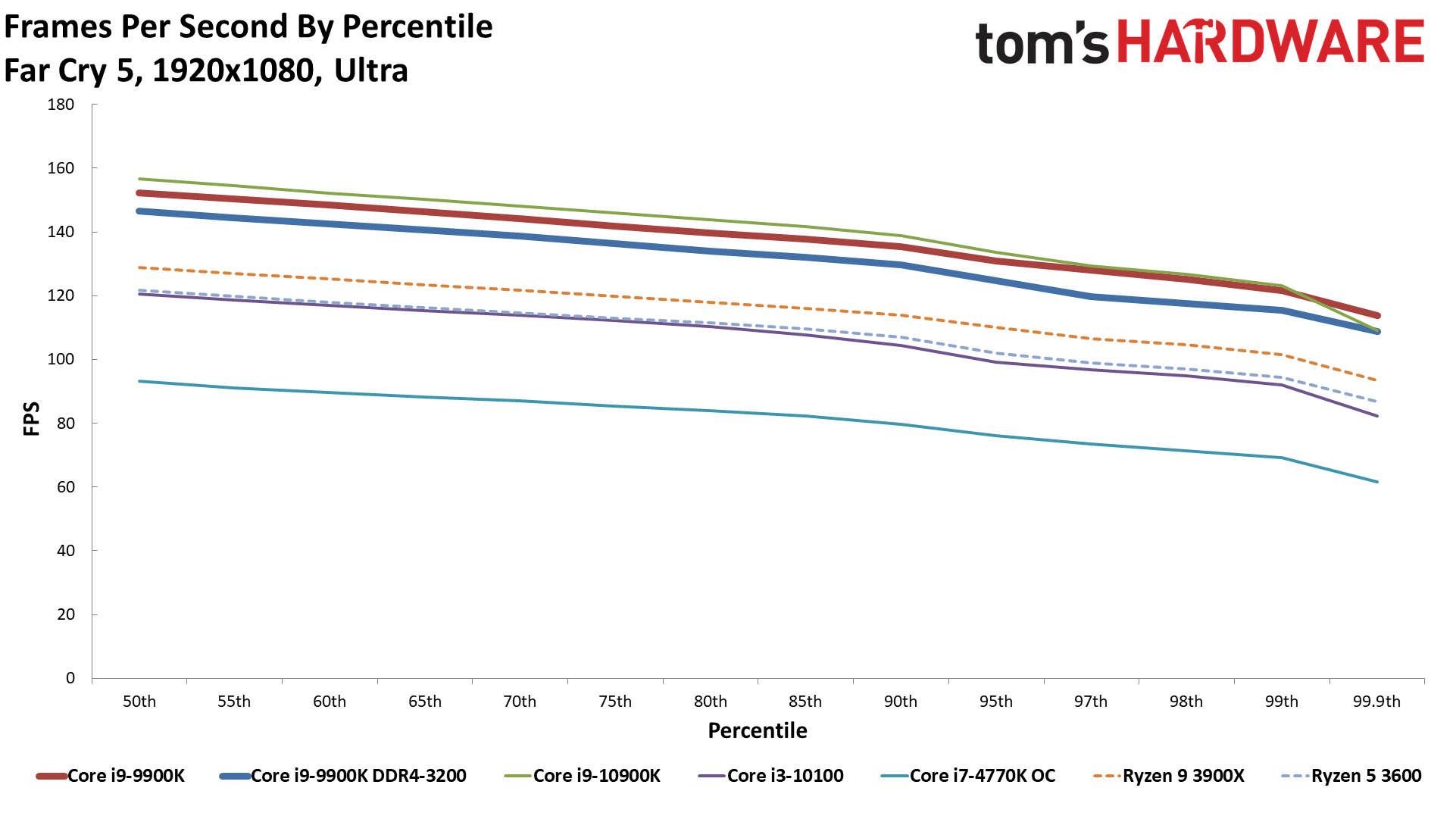

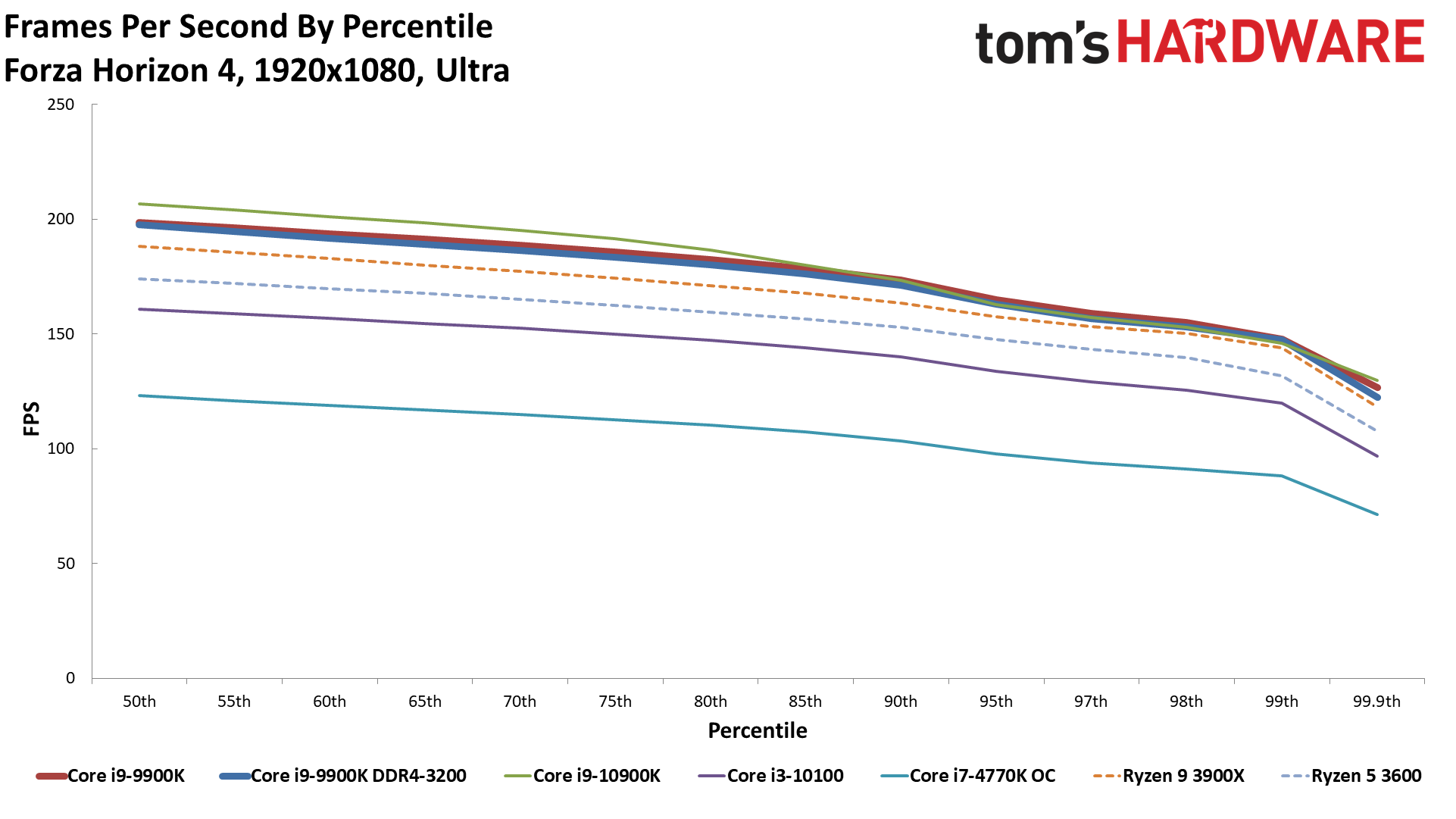

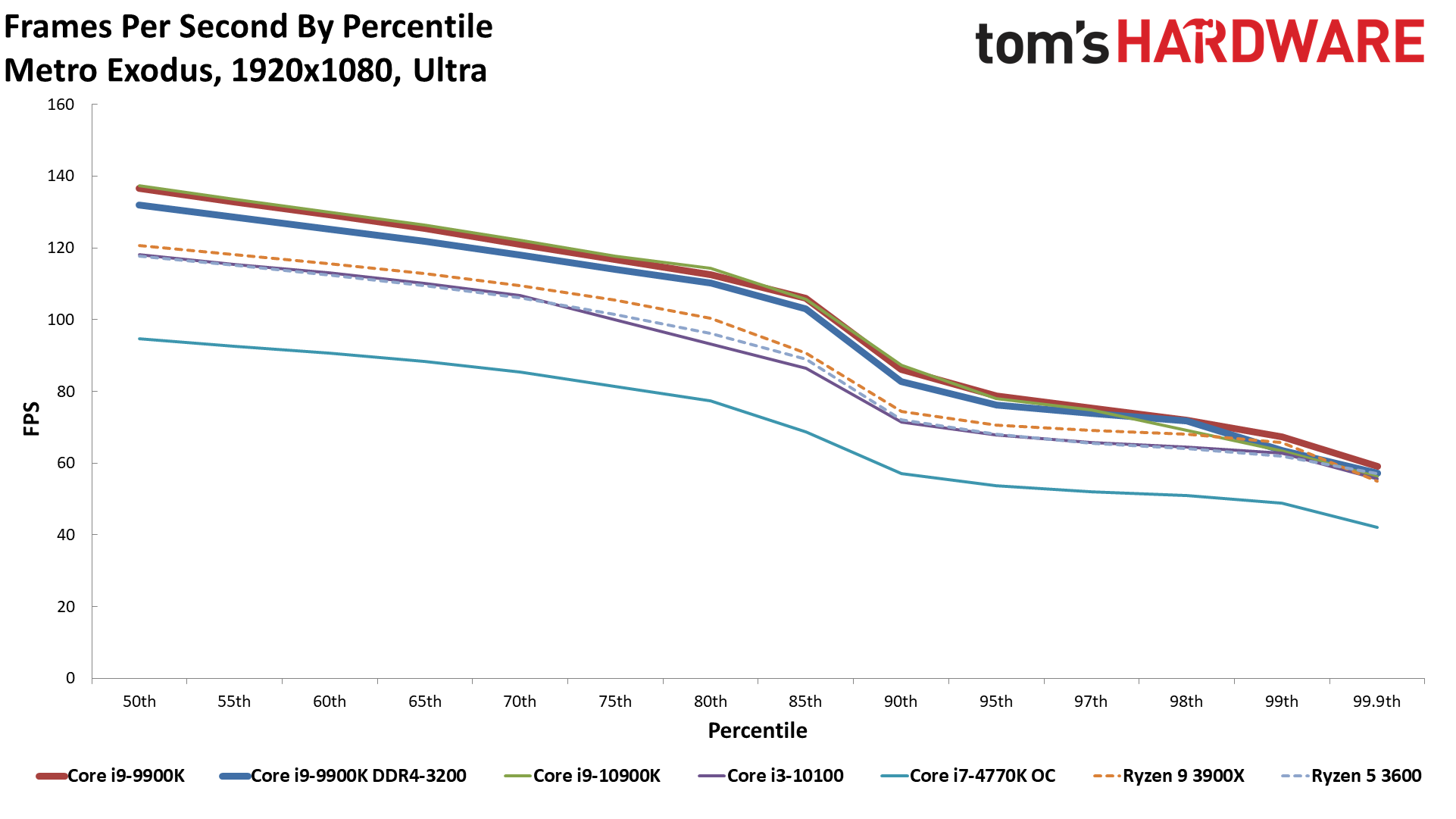

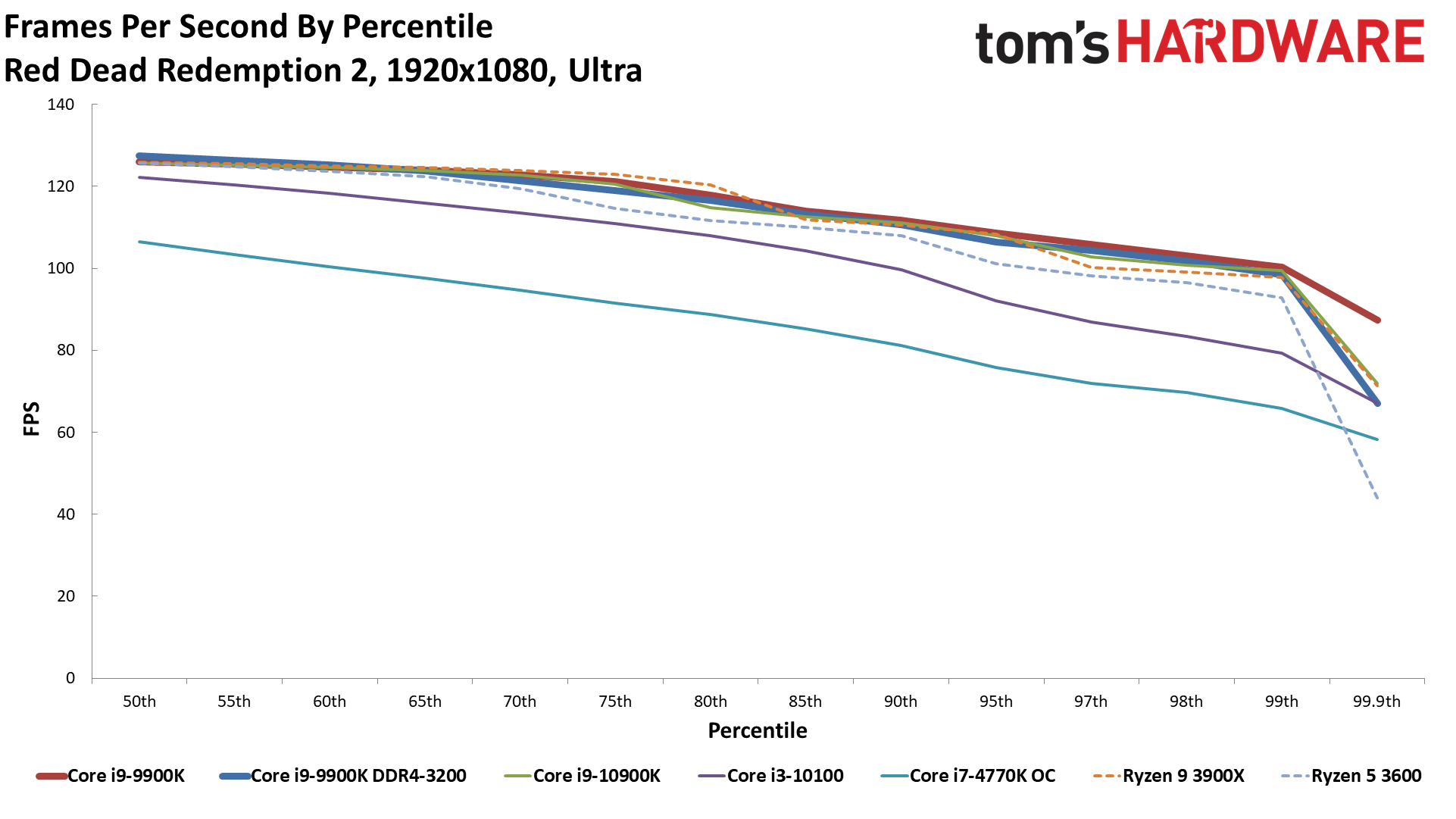

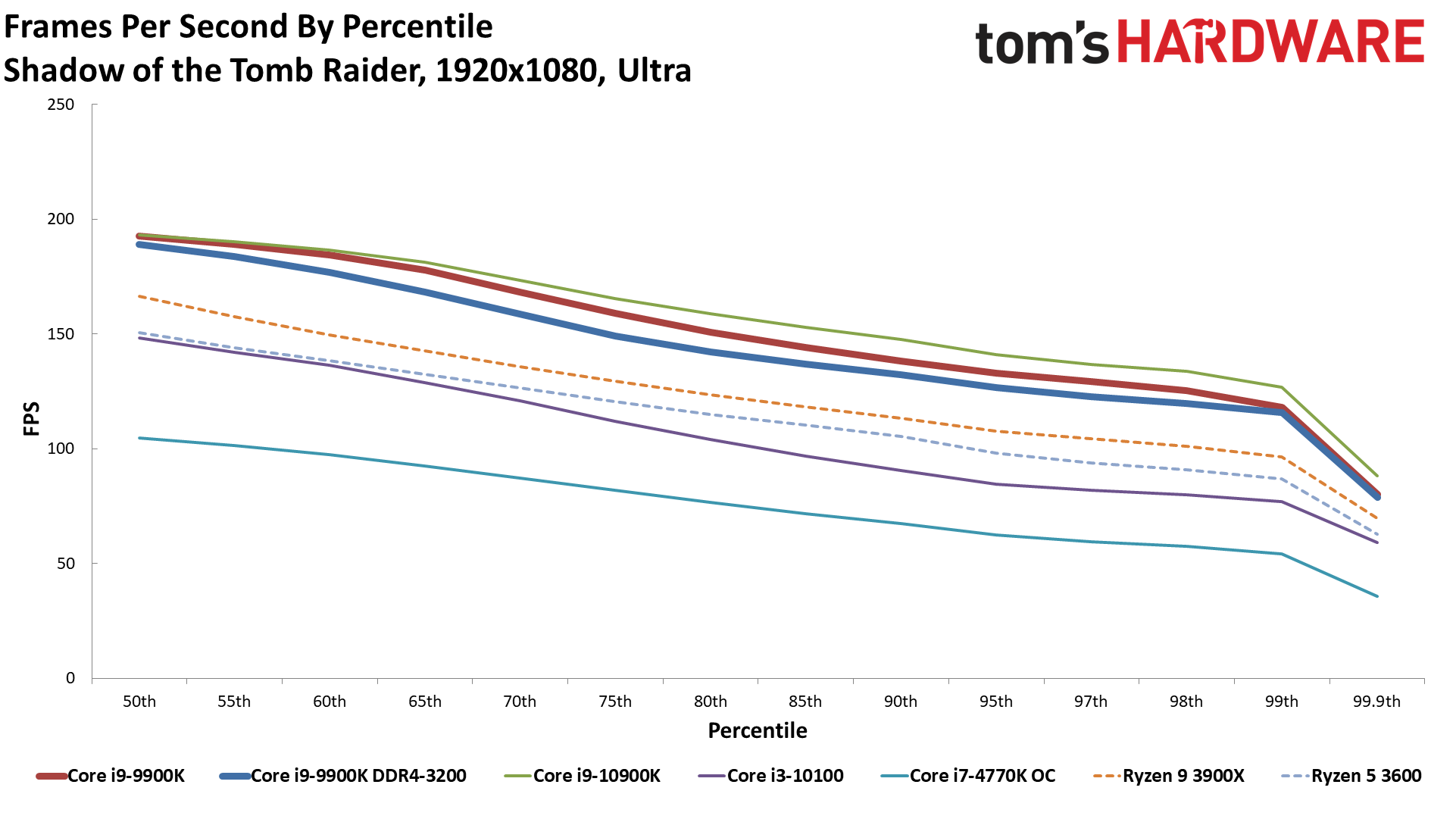

1080p Ultra

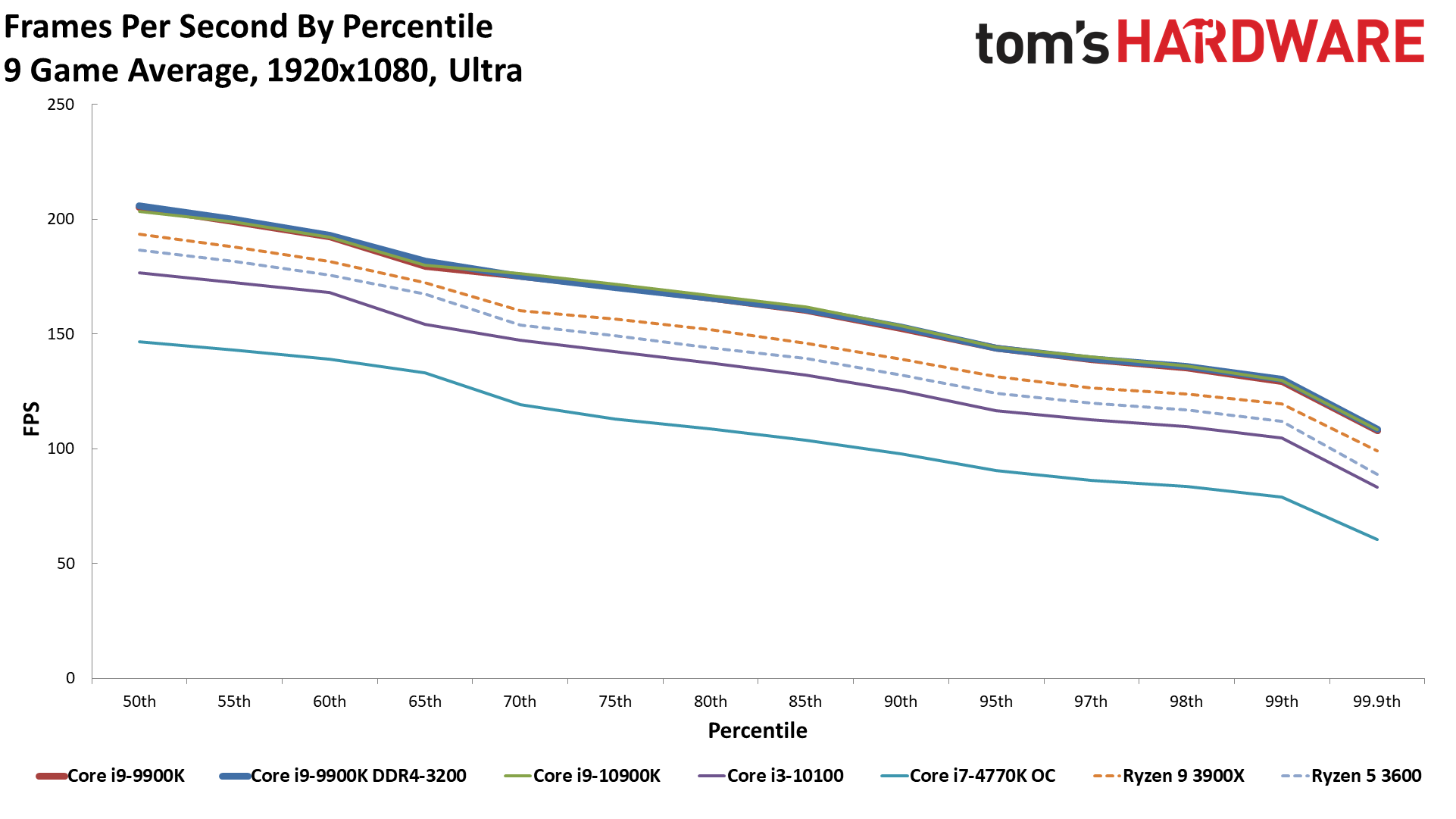

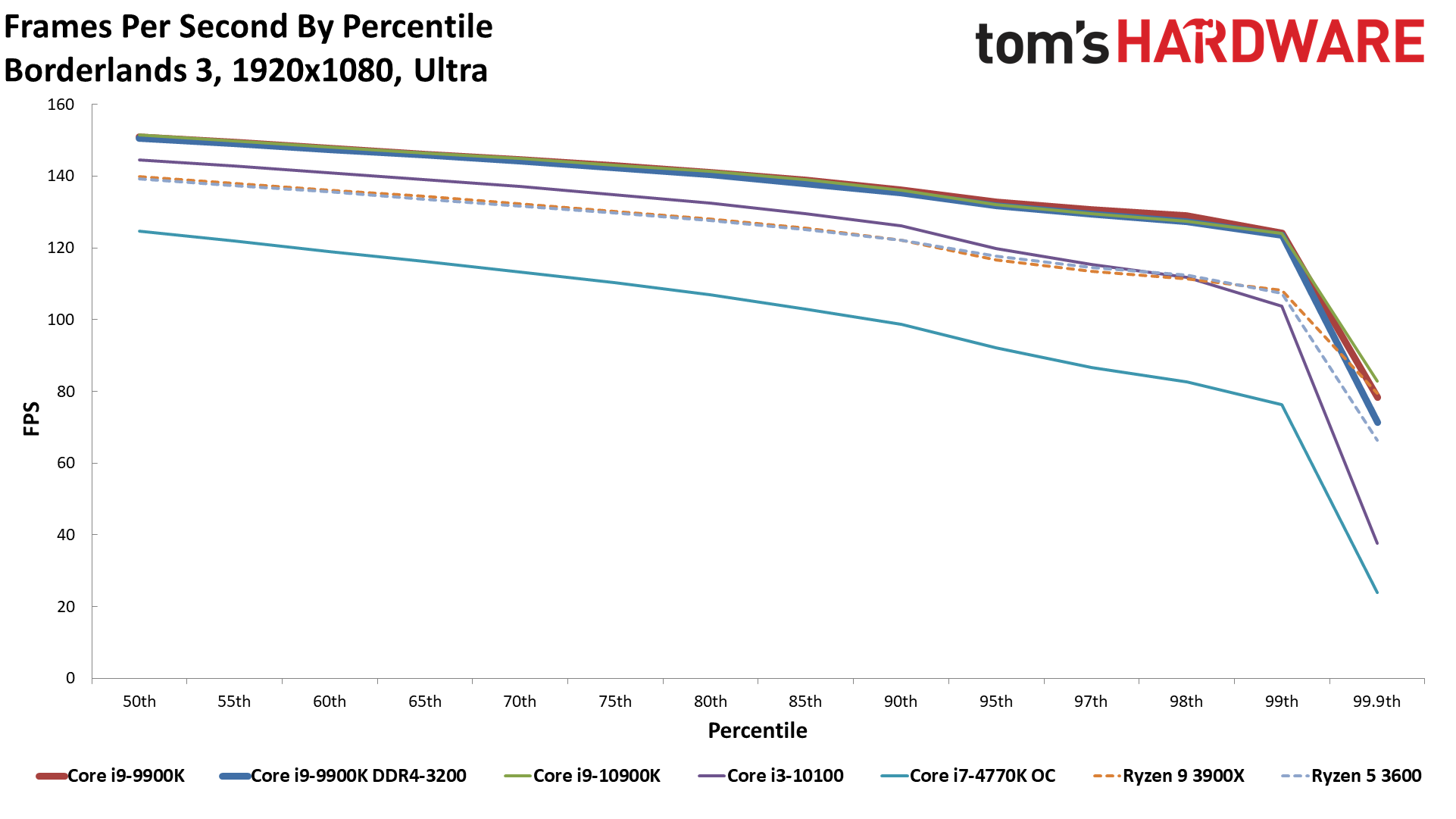

1080p Ultra Percentiles

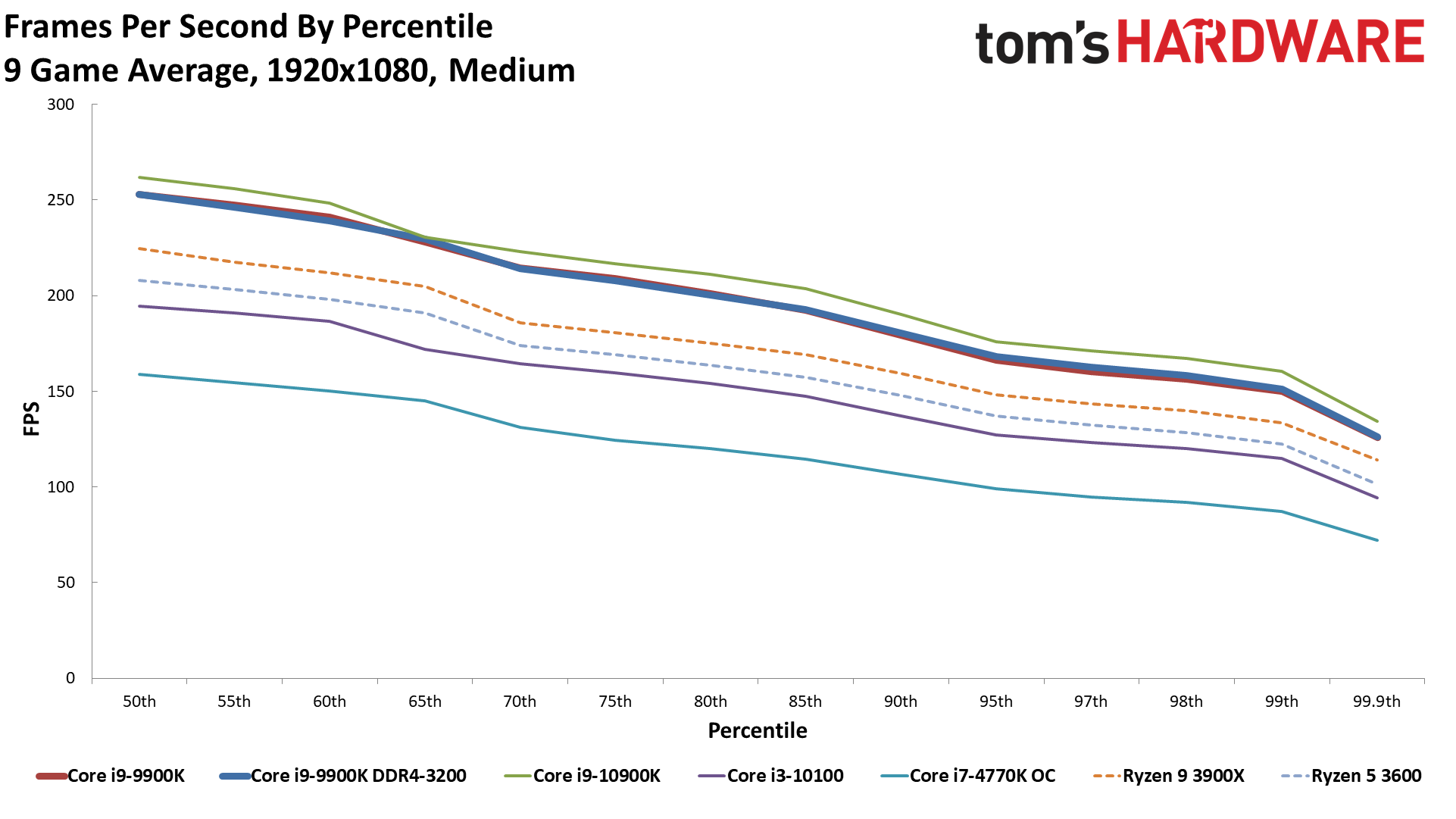

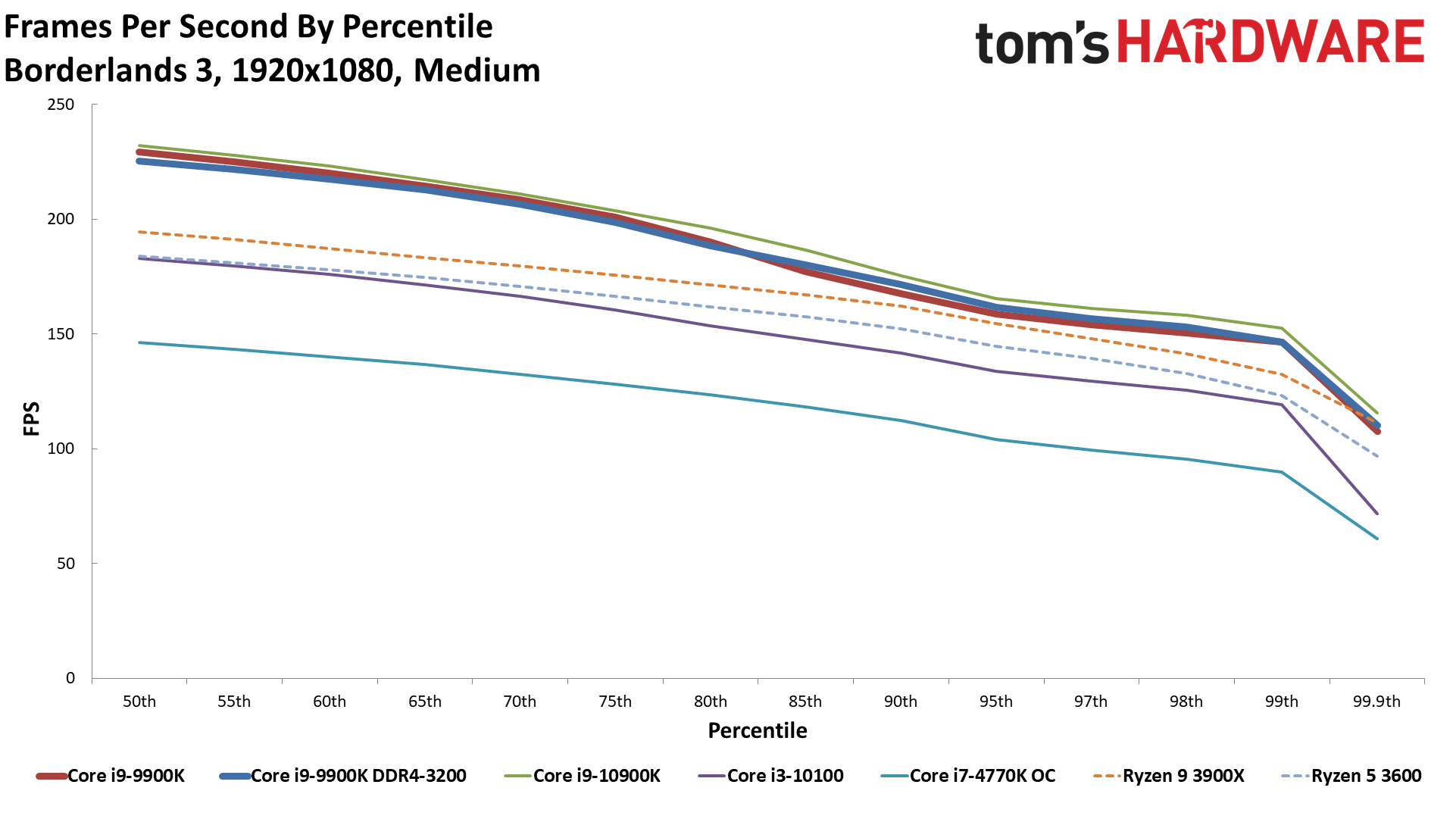

If you were thinking of pairing the new king of the GPUs with an overclocked Core i7-4770K from mid-2013, or maybe even a 3770K or 2600K, the worst-case results at 1080p should at least give you pause. There are plenty of situations where your maximum performance is nearly cut in half. With the RTX 3080, the Core i9-10900K is 70% faster than the overclocked i7-4770K at 1080p medium, though the gap drops to 47% at 1080p ultra.
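"X% faster" and "Y% slower" aren't symmetric, which is why a 70% suite-wide lead and "nearly cut in half" in the worst individual games can both be true. This short Python sketch converts one into the other using only the two suite-wide percentages quoted above; the function name is our own, purely for illustration.

```python
def faster_to_slower(pct_faster: float) -> float:
    """If CPU A is pct_faster% faster than CPU B, return how much slower B is than A (in %)."""
    return (1.0 - 1.0 / (1.0 + pct_faster / 100.0)) * 100.0


# Suite-wide gaps quoted above: Core i9-10900K vs. overclocked Core i7-4770K.
for setting, gap in [("1080p medium", 70.0), ("1080p ultra", 47.0)]:
    print(f"{setting}: 10900K is {gap:.0f}% faster, so the 4770K is "
          f"{faster_to_slower(gap):.1f}% slower")
```

A 70% lead means the 4770K delivers roughly 41% less performance on average, and 47% works out to about 32% less. Performance is only truly "cut in half" where the faster chip leads by 100%.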

Even stepping up to a new Core i3-10100 provides a pretty substantial boost to performance — some of that is from the newer Comet Lake architecture, but a lot of the benefit likely comes from the faster memory. The i3-10100 ends up about 25% faster overall compared to the overclocked i7-4770K.
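For a rough sense of how far apart the memory kits in our testbeds are, here's a Python sketch that computes first-word latency and theoretical peak bandwidth from the rated data rate and CAS latency. It assumes both DIMMs in each kit run in dual-channel mode with a standard 64-bit channel, and it ignores subtimings entirely, so treat it as a back-of-the-envelope comparison rather than a prediction of gaming performance.

```python
def first_word_latency_ns(cas_latency: int, data_rate_mts: int) -> float:
    # DDR transfers twice per clock, so one memory clock lasts 2000 / (MT/s) ns.
    return cas_latency * 2000.0 / data_rate_mts


def peak_bandwidth_gbs(data_rate_mts: int, channels: int = 2, bus_width_bits: int = 64) -> float:
    # Theoretical peak = transfers per second * bytes per transfer * channels.
    return data_rate_mts * 1e6 * (bus_width_bits / 8) * channels / 1e9


# Kits from the testbed table, assuming both DIMMs run in dual-channel mode.
kits = {
    "DDR3-1600 CL9 (Z97)": (9, 1600),
    "DDR4-3200 CL16": (16, 3200),
    "DDR4-3600 CL16": (16, 3600),
}
for name, (cl, rate) in kits.items():
    print(f"{name}: {first_word_latency_ns(cl, rate):.2f} ns first word, "
          f"{peak_bandwidth_gbs(rate):.1f} GB/s peak")
```

On paper, the DDR4-3600 kit offers 2.25 times the peak bandwidth of the DDR3-1600 kit (57.6 vs. 25.6 GB/s) and roughly 21% lower first-word latency (8.89 vs. 11.25 ns). That doesn't separate the memory's contribution from the CPU architecture's, but it shows why faster memory is a plausible part of the i3-10100's advantage.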

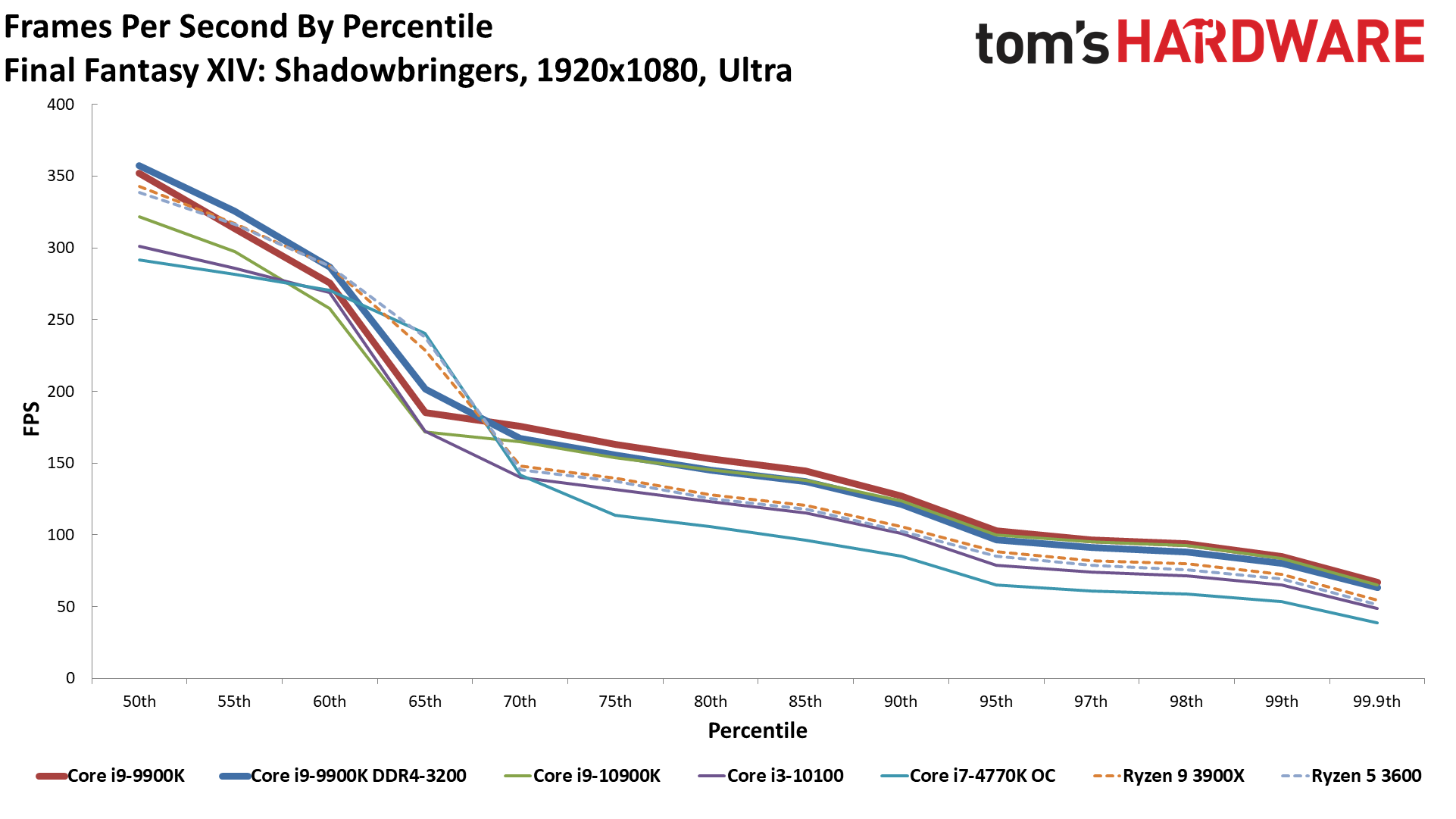

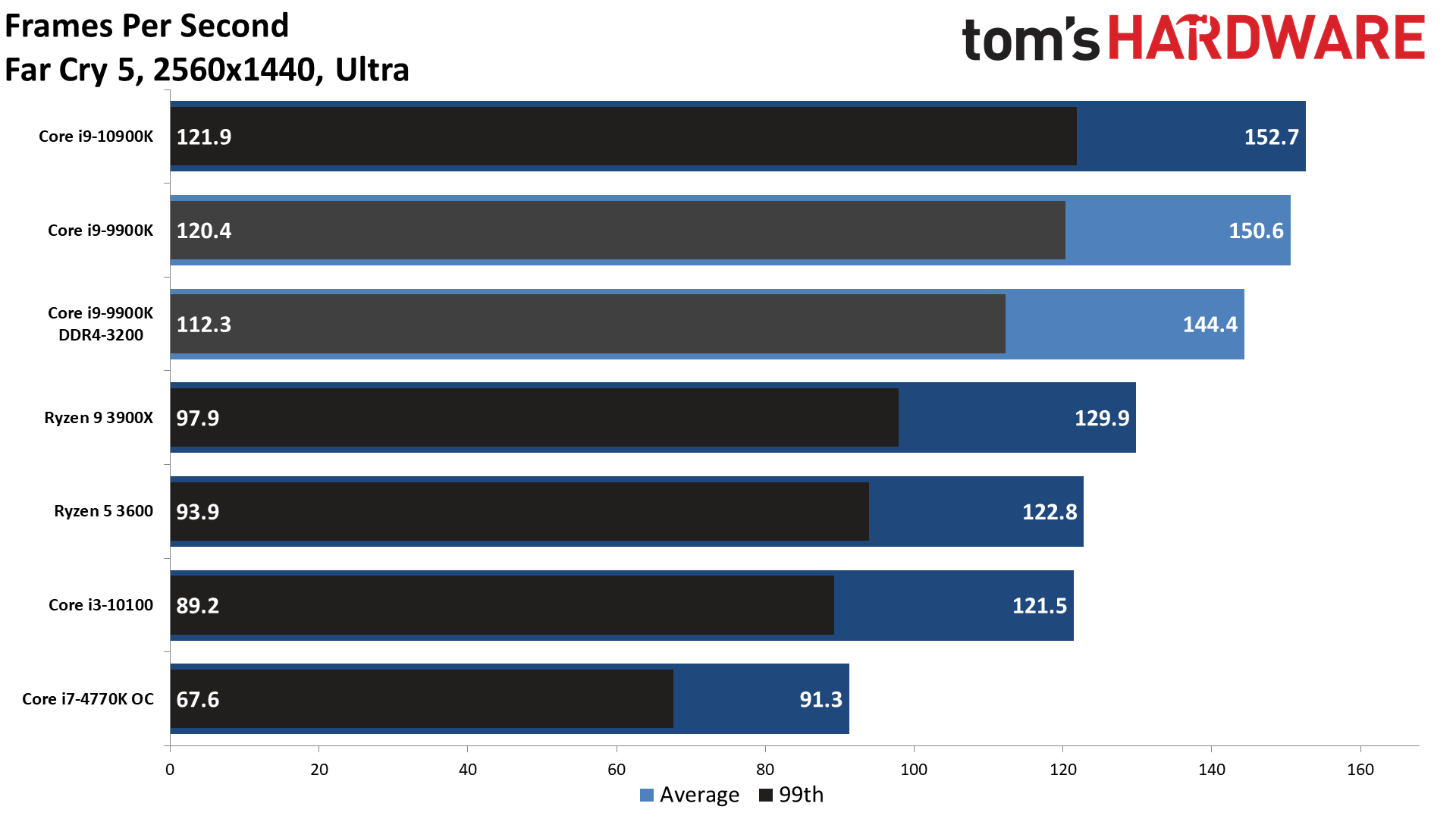

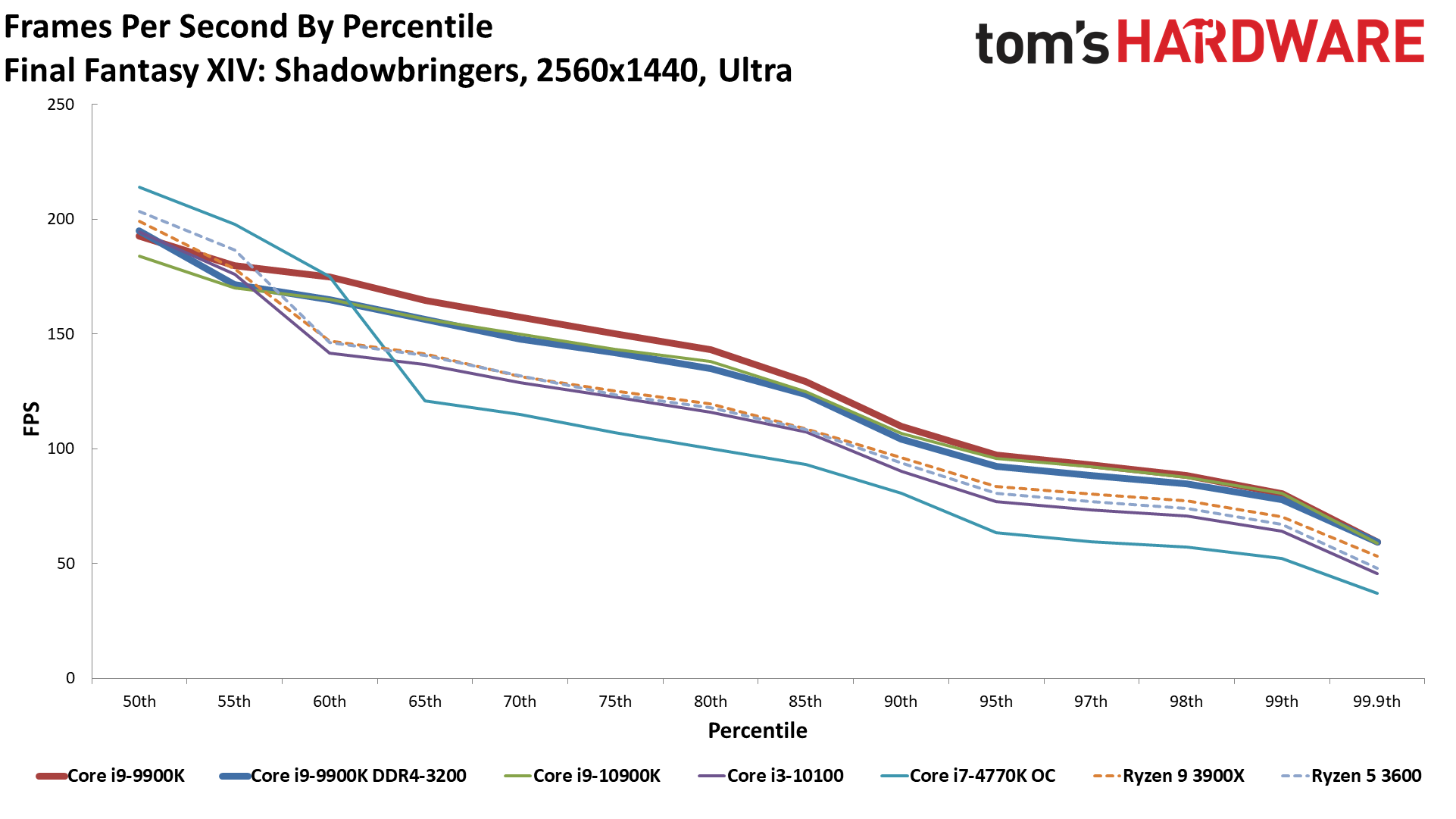

At the other end of the spectrum, the move from Core i9-9900K to Core i9-10900K hardly matters at all. At 1080p medium, the 10900K is 4% faster across our test suite. That's not a lot, and it's not really too surprising. Very few games will leverage more than eight cores, so the majority of the improvement comes from the slightly higher clocks on the 10900K. At 1080p ultra, the two chips are basically tied. Some of this almost certainly comes down to differences in motherboard firmware, however. Even though both testbeds use MSI MEG Ace motherboards (Z390 and Z490), they're not the same. Final Fantasy XIV performs quite a bit worse with the Z490 setup, while everything else sees small to modest gains.
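Suite-wide figures like "4% faster across our test suite" have to combine games that run at very different framerates. We don't detail the aggregation here; one common approach, assumed in this Python sketch, is the geometric mean of per-game fps ratios, which keeps a single high-fps title from dominating the result and lets a game that regresses (like Final Fantasy XIV on the Z490 board) pull the average down proportionally. The per-game numbers below are placeholders, not our results.

```python
import math


def geomean_speedup(fps_a: list[float], fps_b: list[float]) -> float:
    """Geometric mean of per-game fps ratios A/B; 1.04 means A is about 4% faster overall."""
    if len(fps_a) != len(fps_b) or not fps_a:
        raise ValueError("need matching, non-empty per-game results")
    log_sum = sum(math.log(a / b) for a, b in zip(fps_a, fps_b))
    return math.exp(log_sum / len(fps_a))


# Placeholder numbers for three hypothetical games, NOT our benchmark results.
# The third game stands in for a title that runs slower on the faster chip.
cpu_a = [150.0, 120.0, 95.0]
cpu_b = [140.0, 112.0, 100.0]
print(f"CPU A vs. CPU B: {(geomean_speedup(cpu_a, cpu_b) - 1) * 100:+.1f}%")
```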

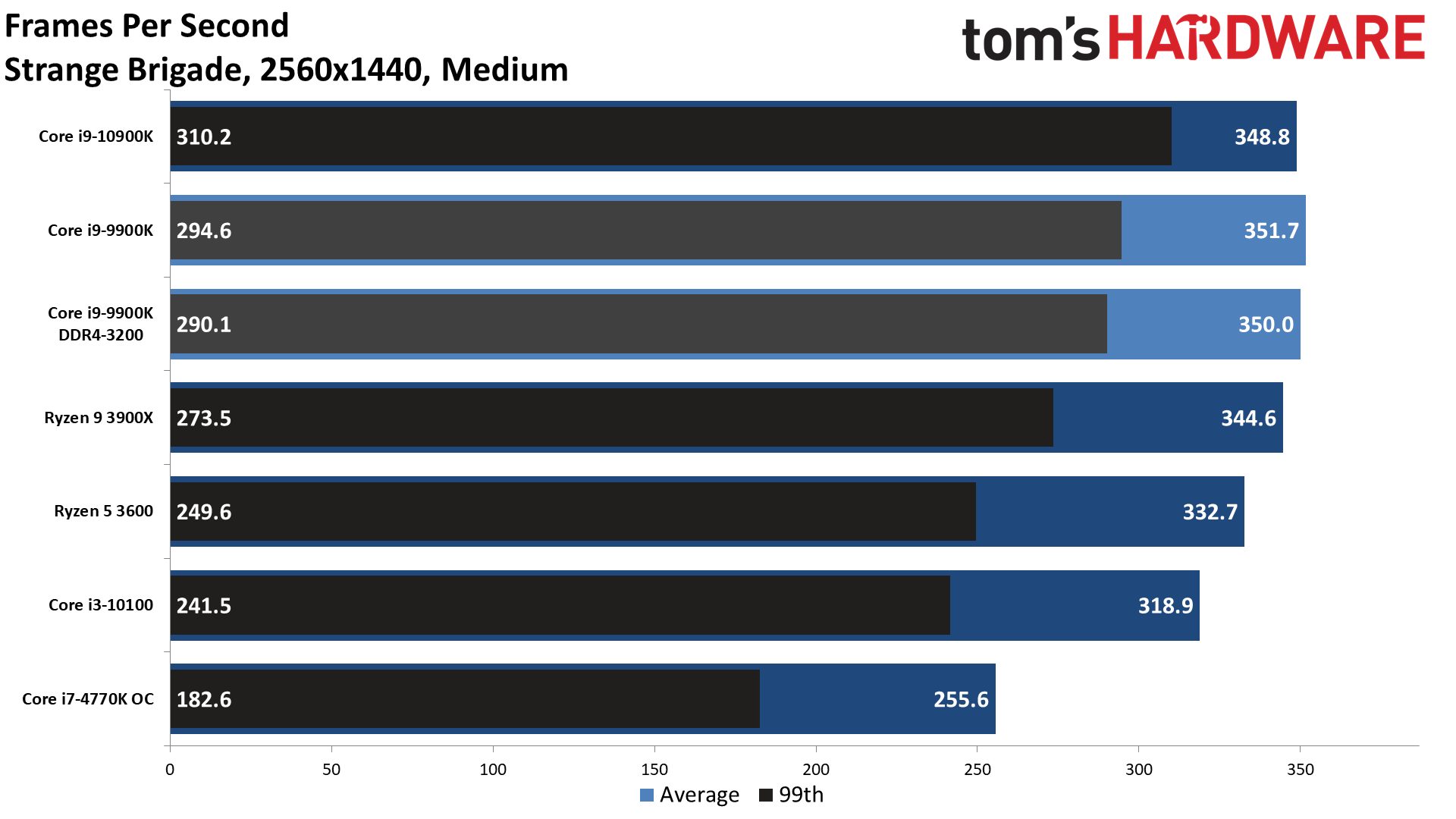

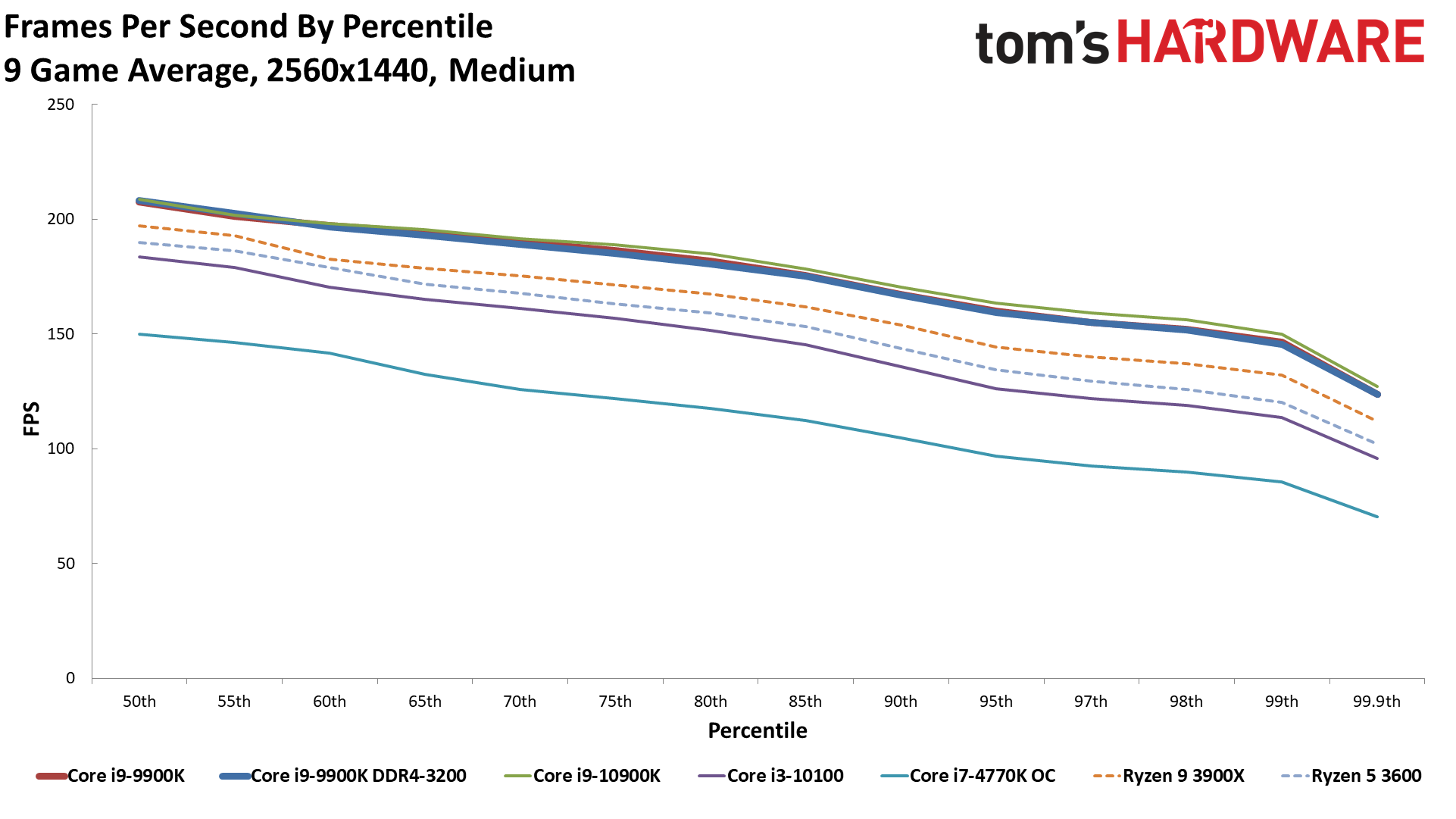

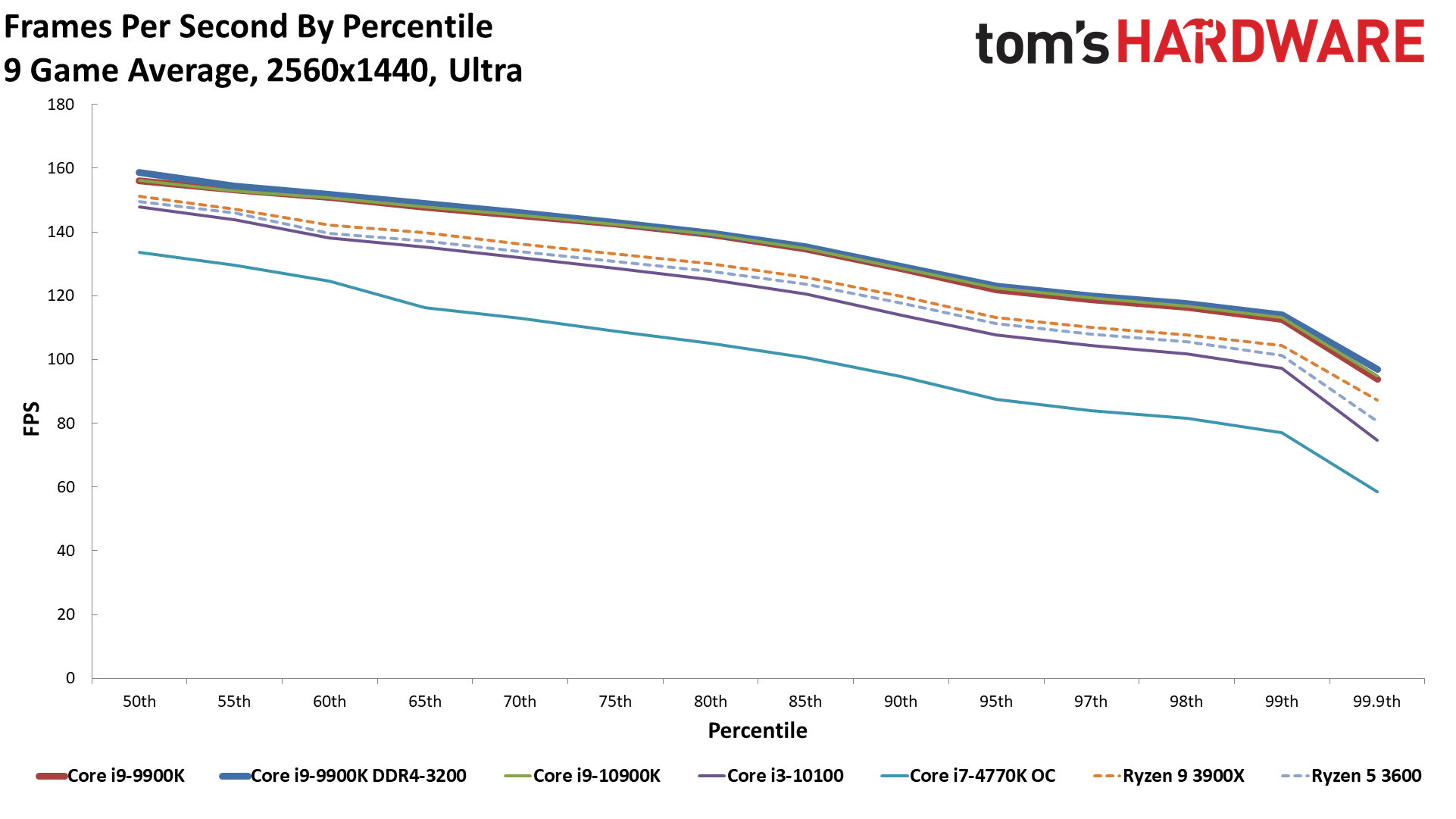

What about AMD's CPUs? First, let's note that the Ryzen 9 3900X only leads the Ryzen 5 3600 by a scant 7% at medium and 4% at ultra. Several games show nearly a 10% lead while others are effectively tied. That means comparisons between Intel and AMD don't change substantially when moving to a different AMD processor — the Zen 2 chips will all be within 10% of each other. And as we'll see momentarily, the AMD CPUs really scrunch together at higher resolutions.

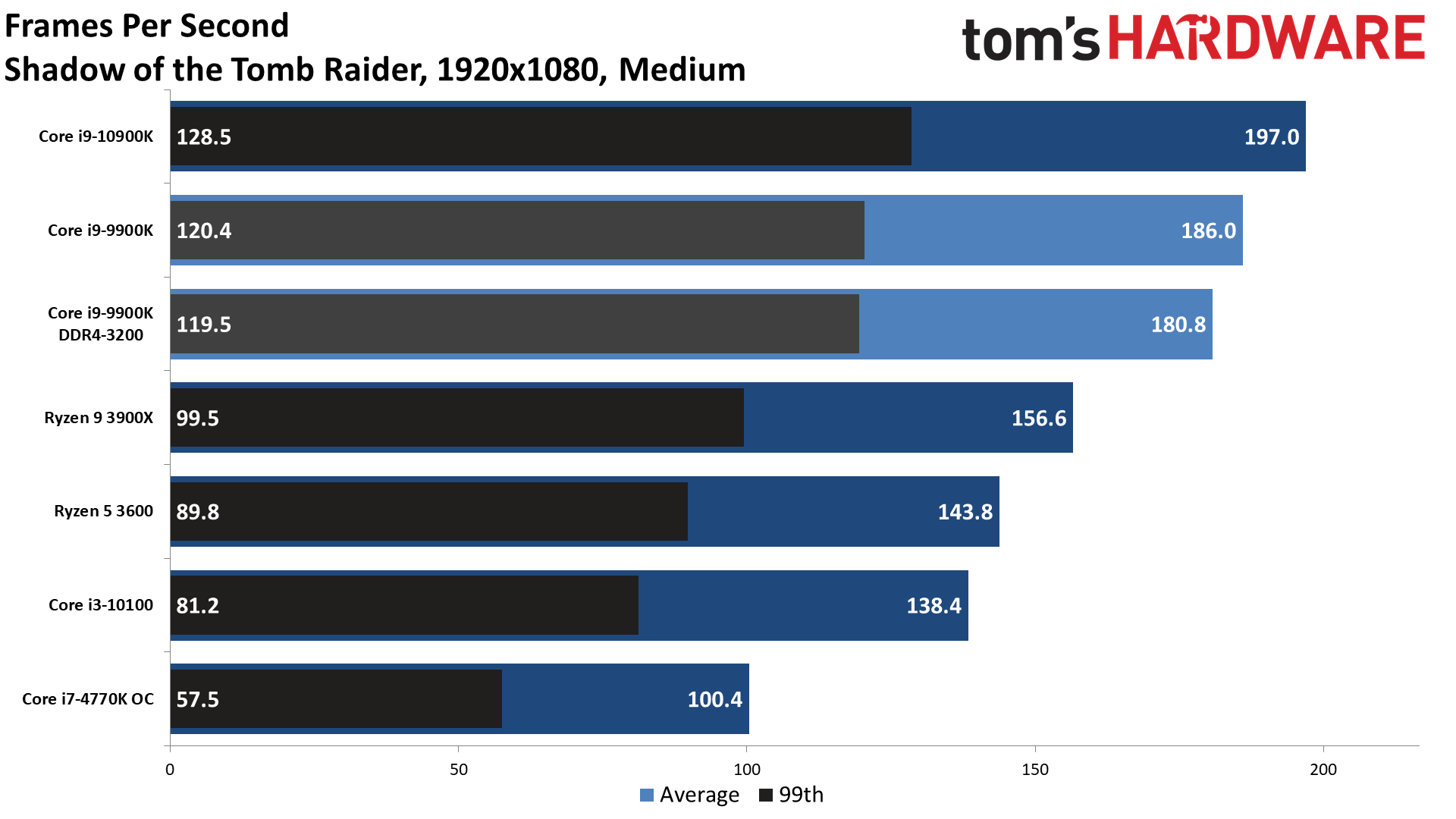

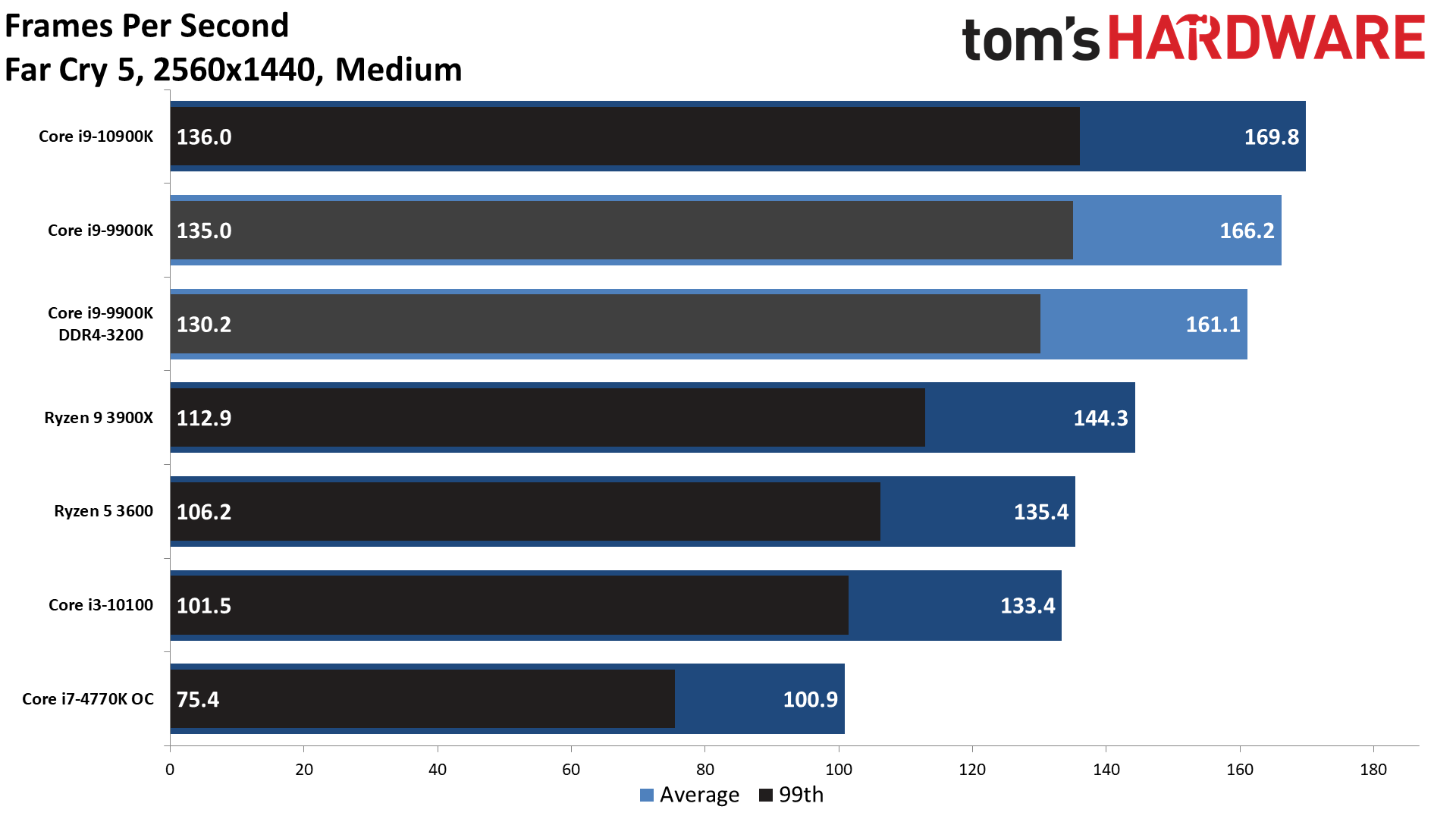

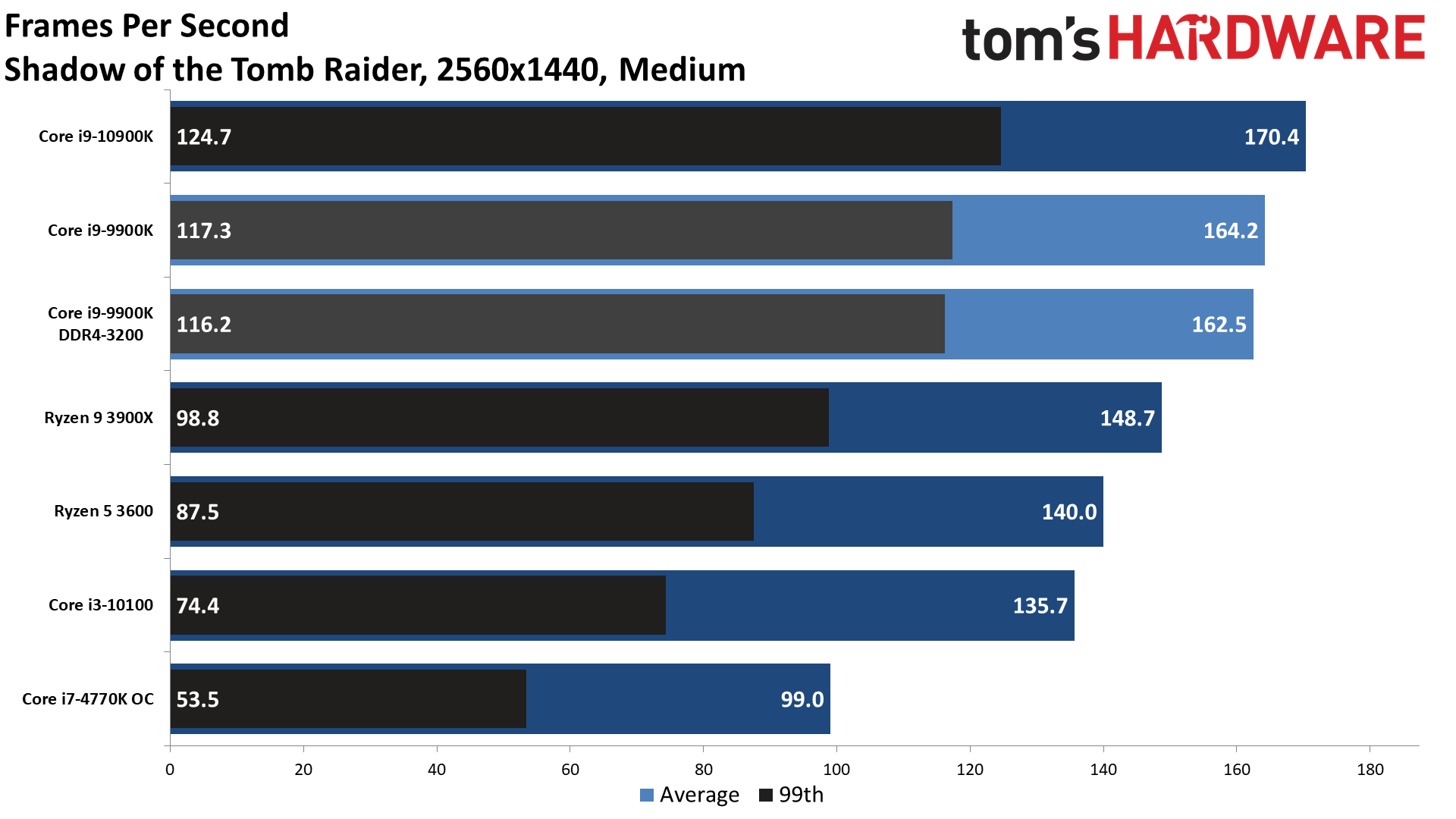

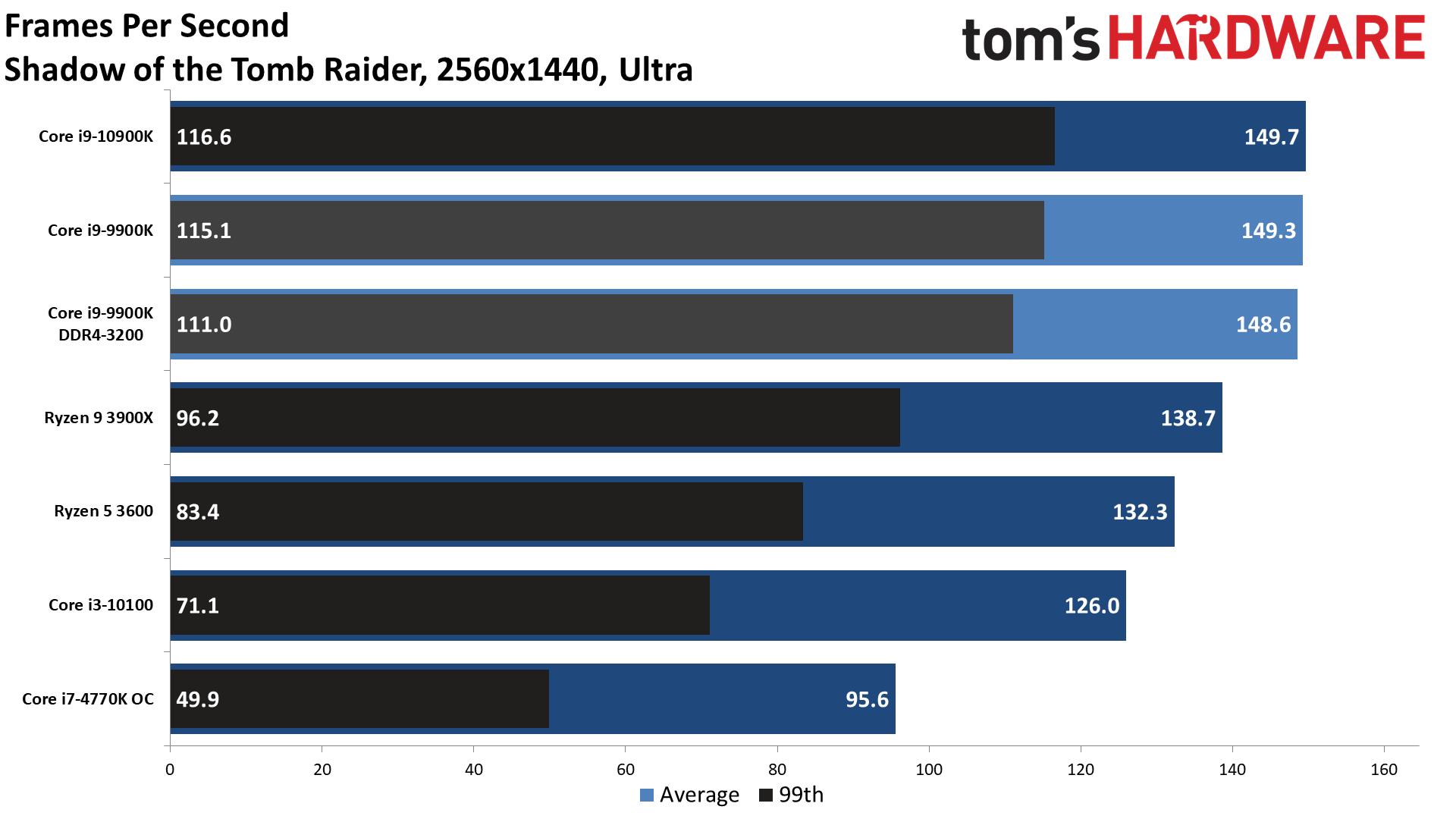

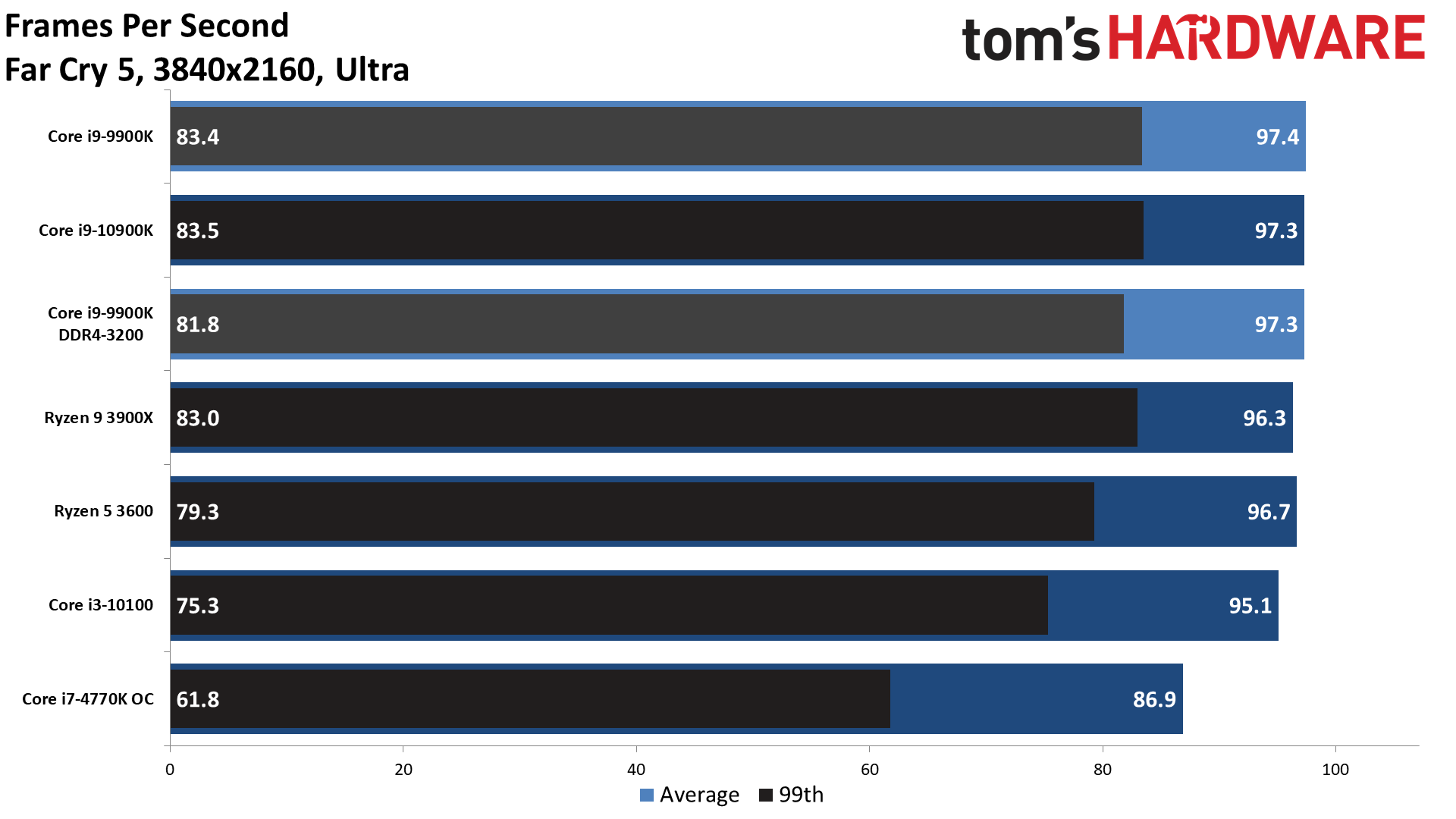

For AMD vs. Intel, the Core i9-10900K unsurprisingly comes out on top, by a noticeable 18% at 1080p medium. Four of the games we tested give Intel more than a 20% lead, with Final Fantasy being the only game where Intel's lead is in the single digits. Again, we don't expect most gamers would ever pair the RTX 3080 with 1080p medium settings, but this is the worst-case scenario for CPU scaling. Shifting up to 1080p ultra, Intel's lead drops to just 8%. Far Cry 5 and Shadow of the Tomb Raider still favor Intel by over 20%, Metro Exodus shows a 15% lead, and everything else is below 10%.

Finally, while we didn't do a full suite of testing with ray tracing and DLSS effects, it's important to remember that the more demanding a game becomes on your GPU — and ray tracing is definitely demanding — the less your CPU matters. Many games will still need DLSS in performance mode to stay above 60 fps with all the ray tracing effects enabled.

GeForce RTX 3080 FE: 1440p CPU Benchmarks

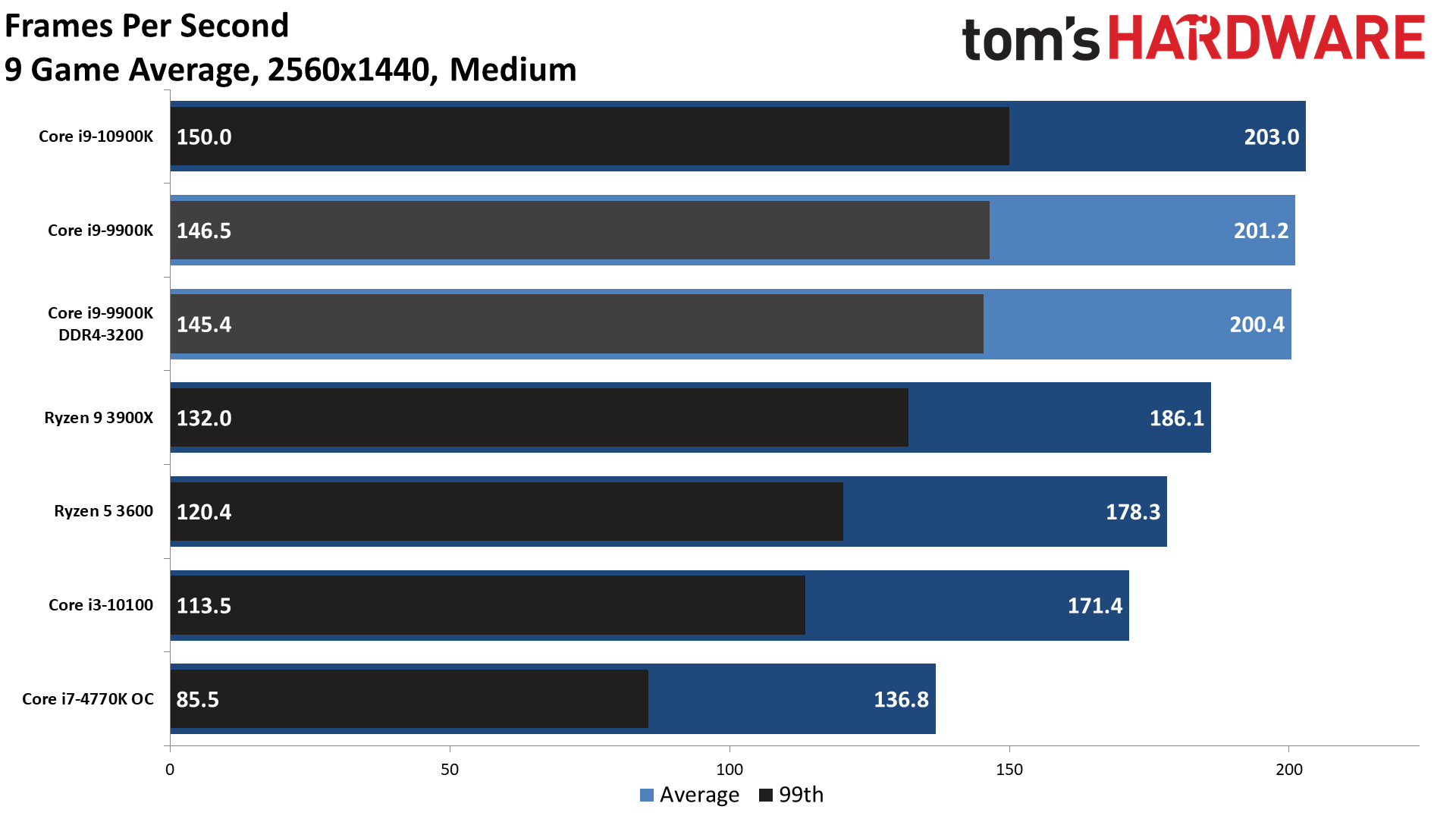

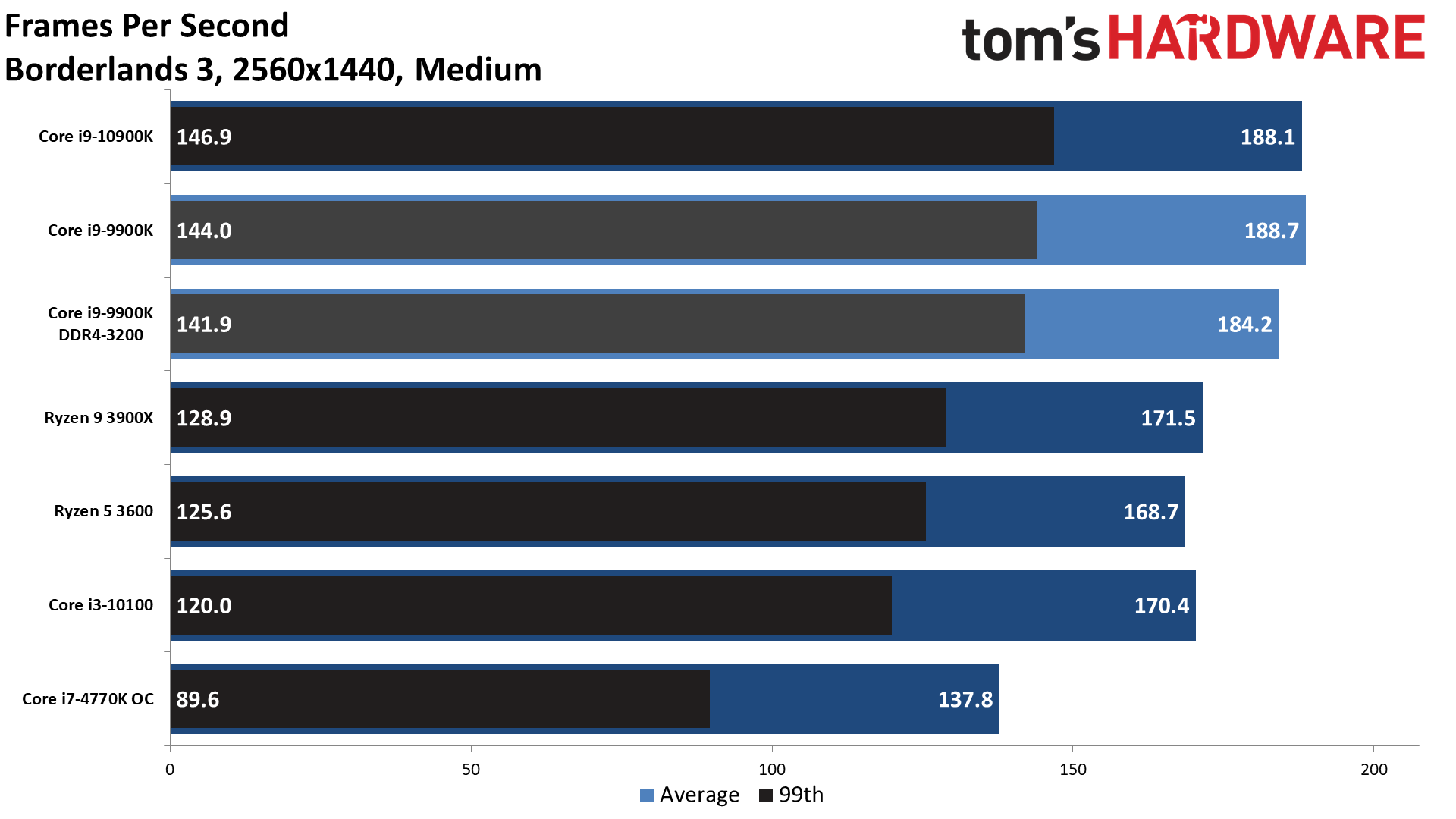

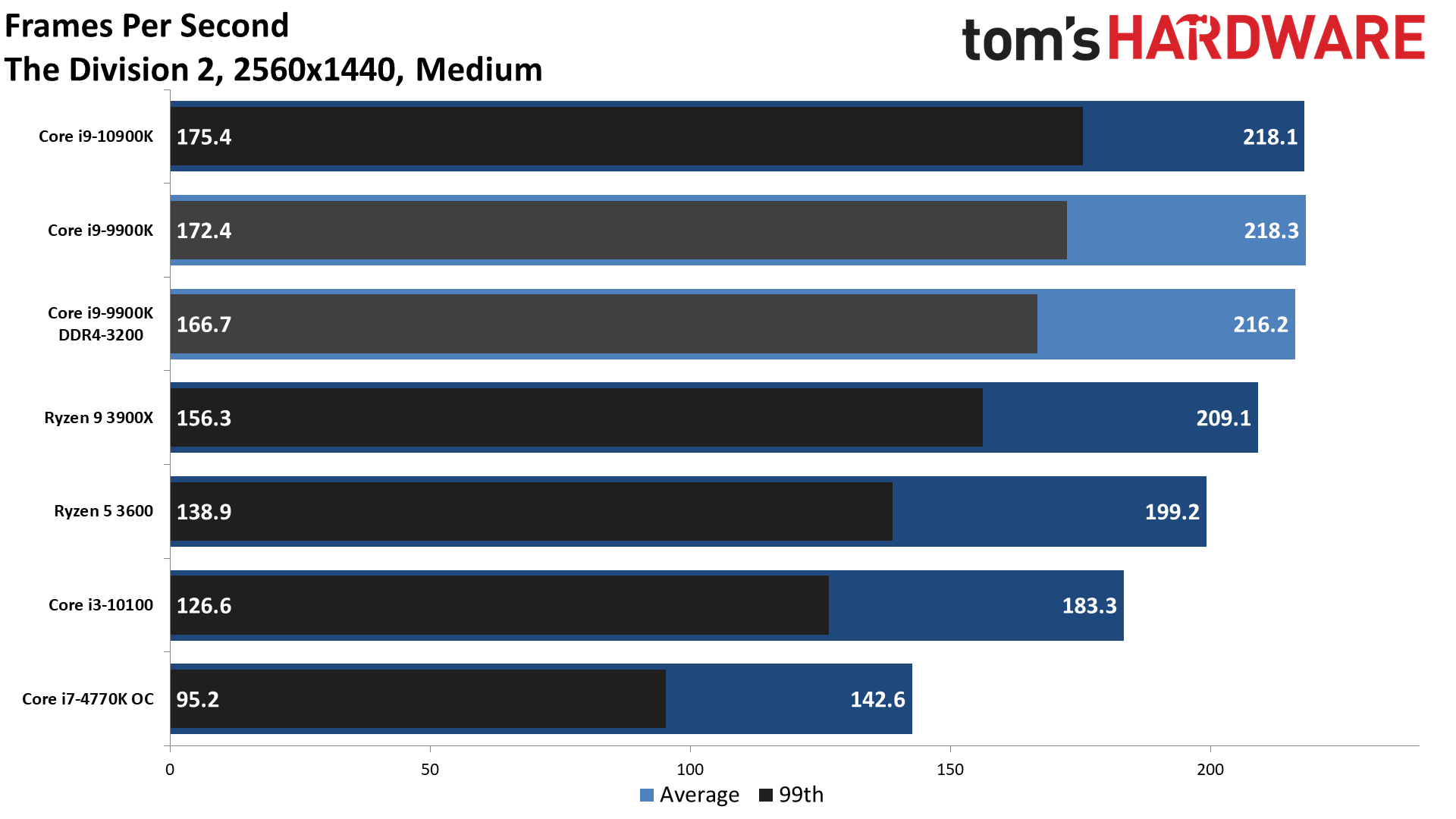

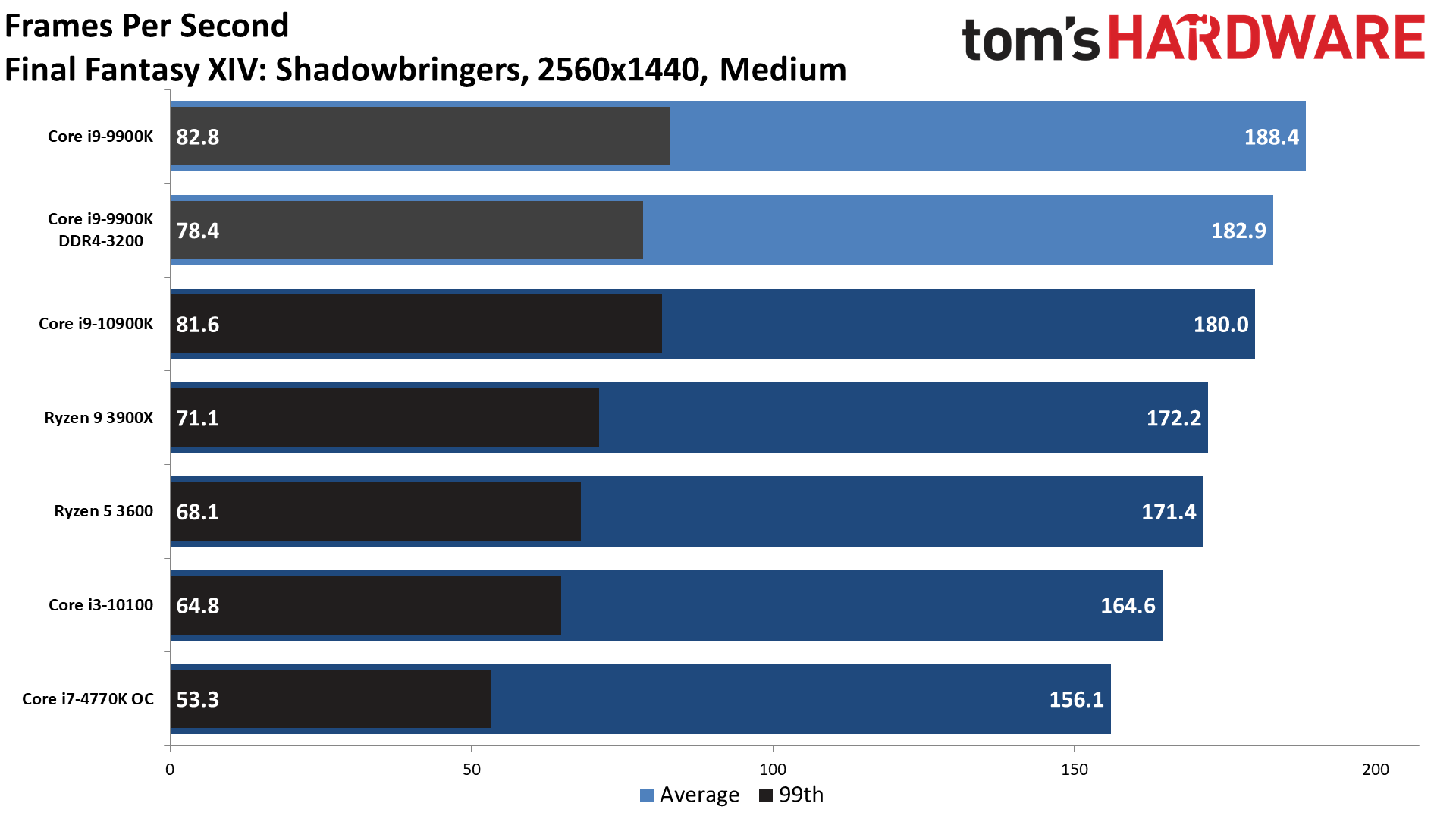

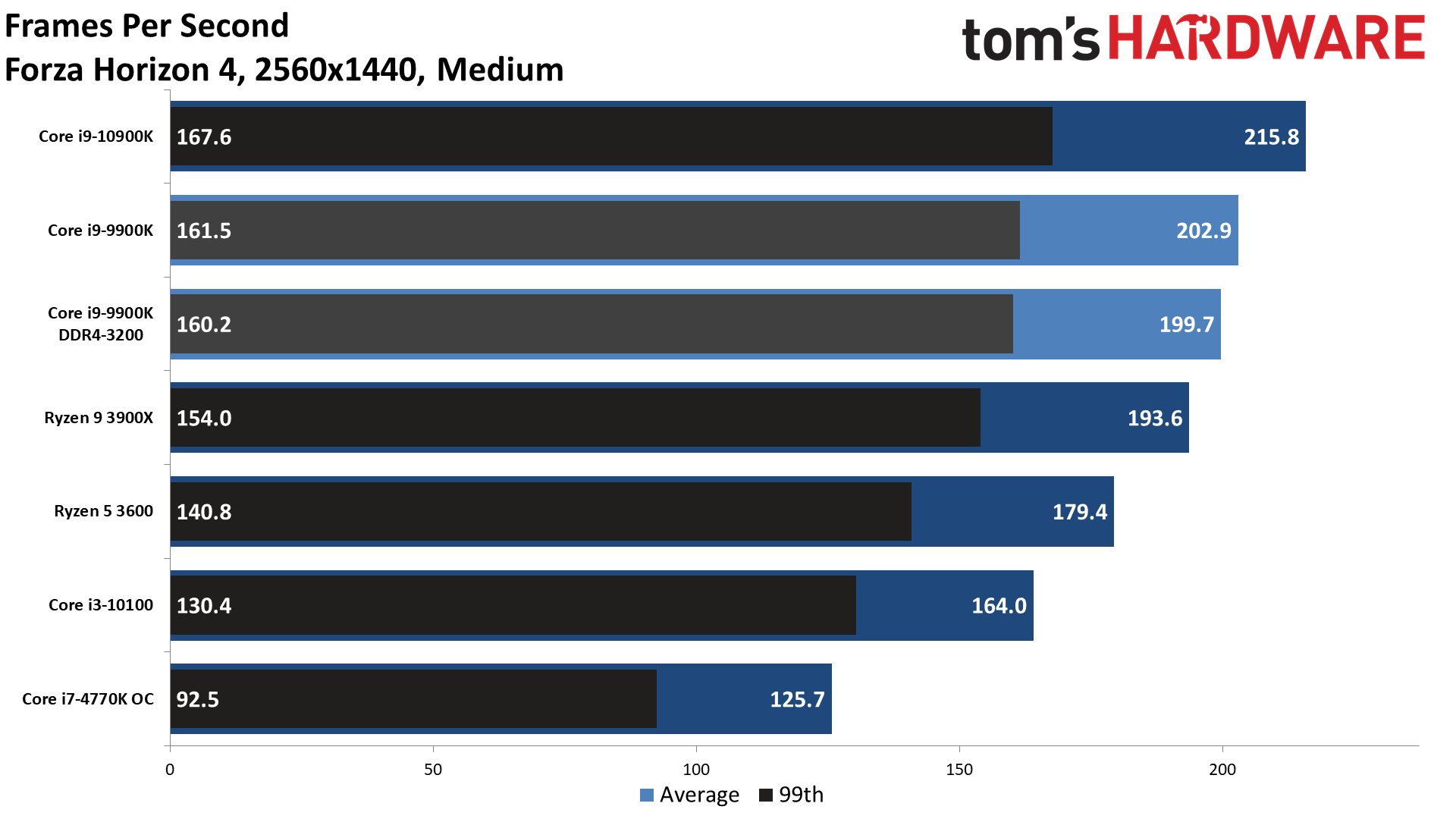

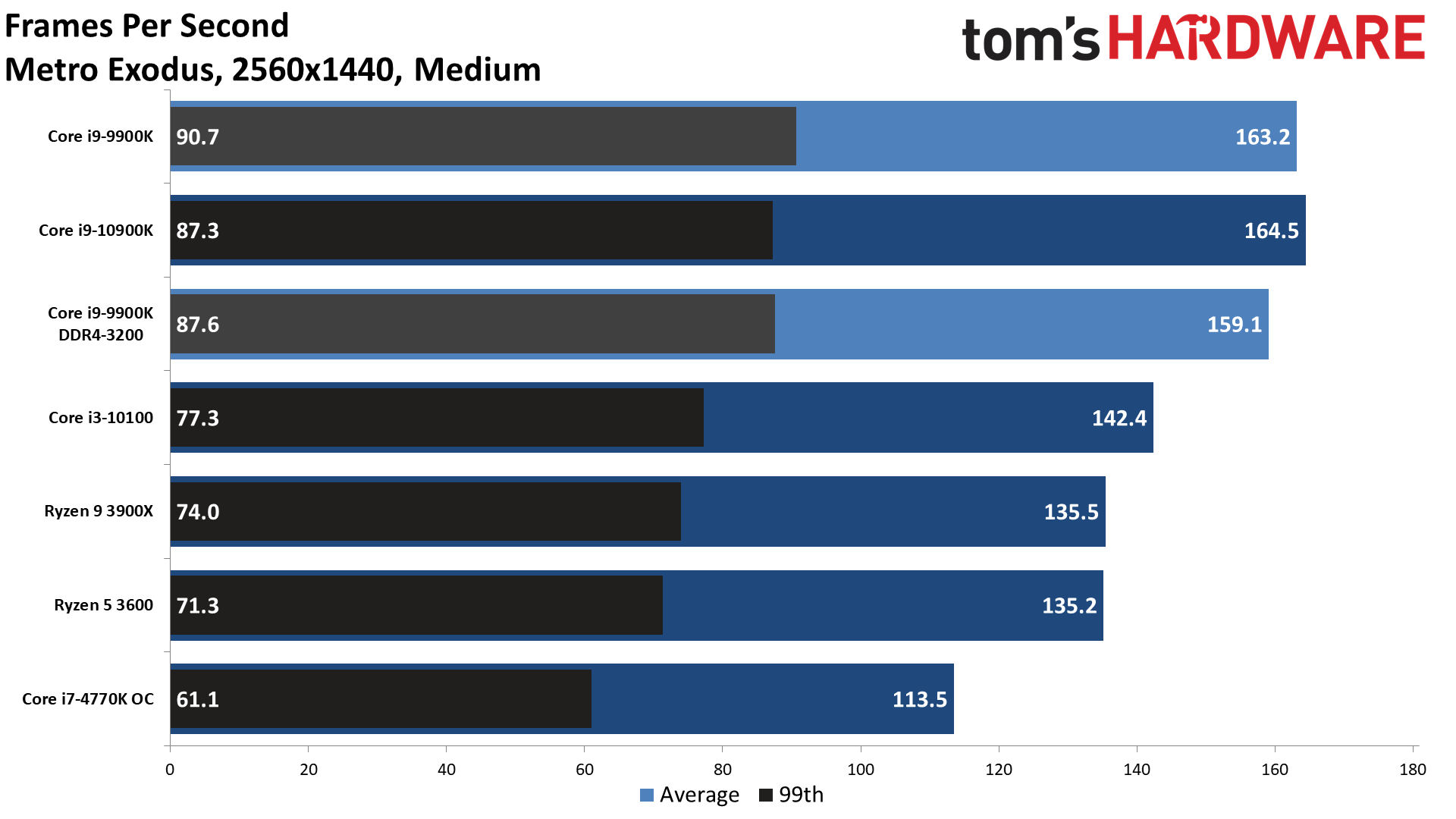

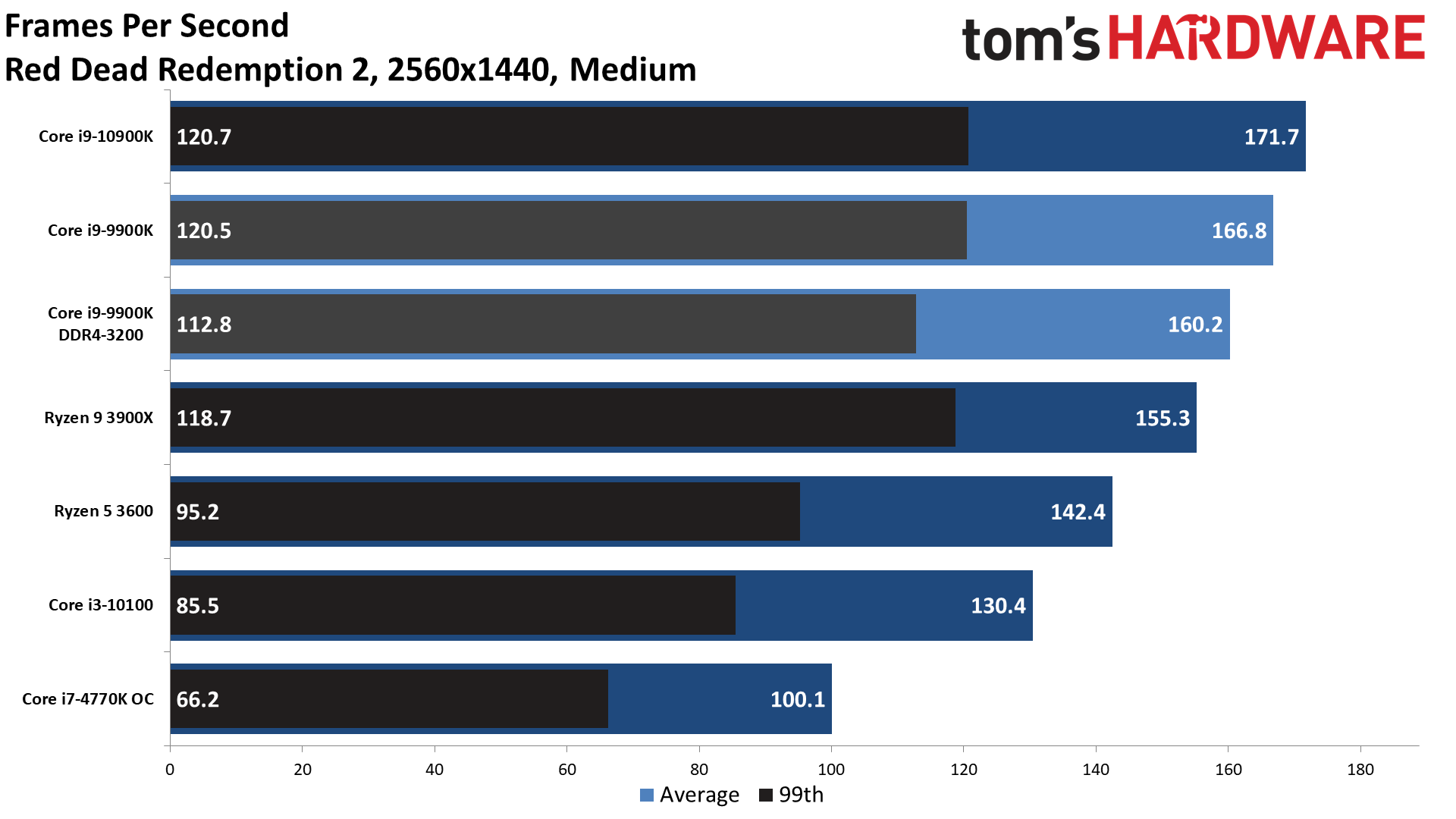

1440p Medium

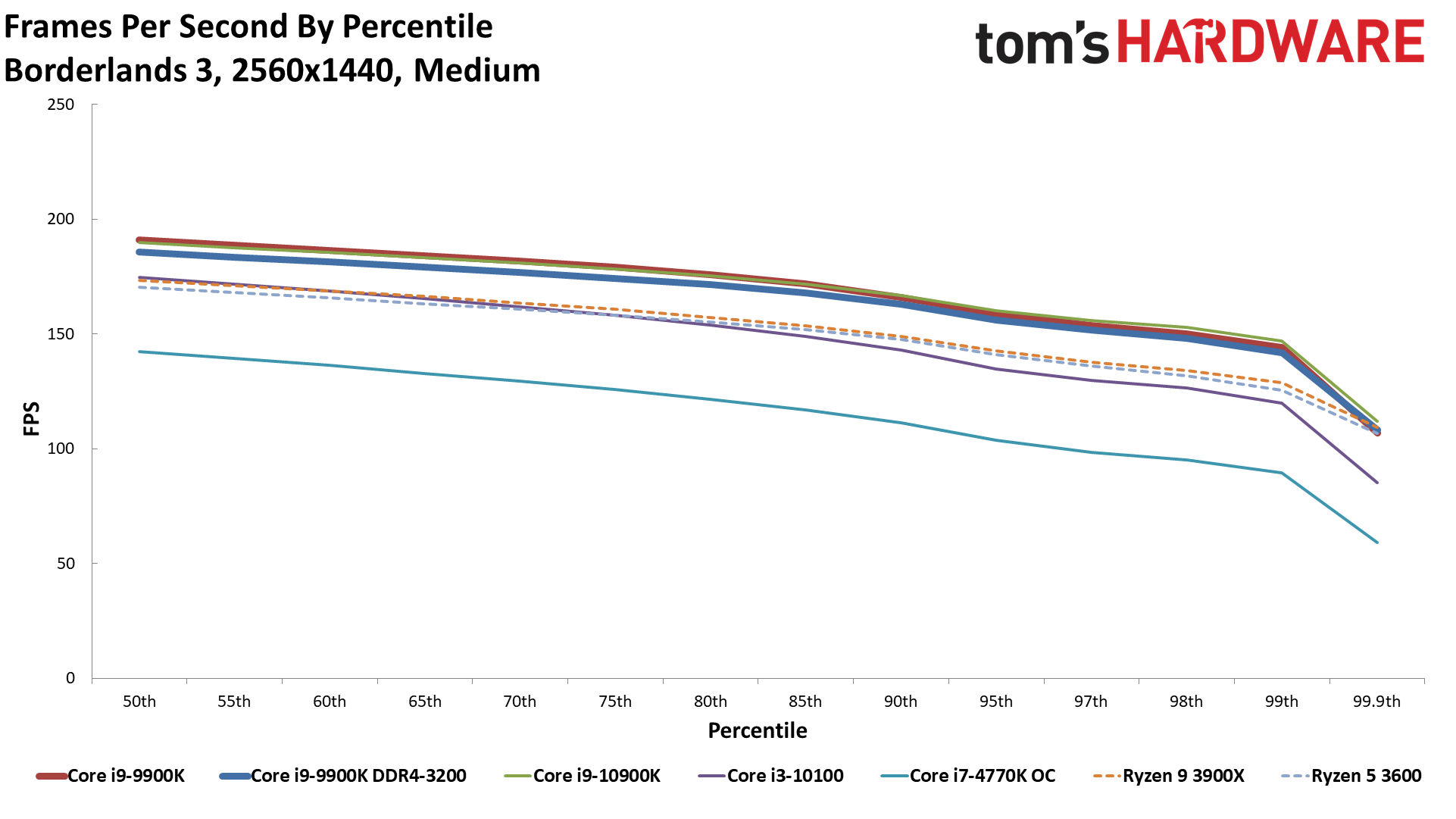

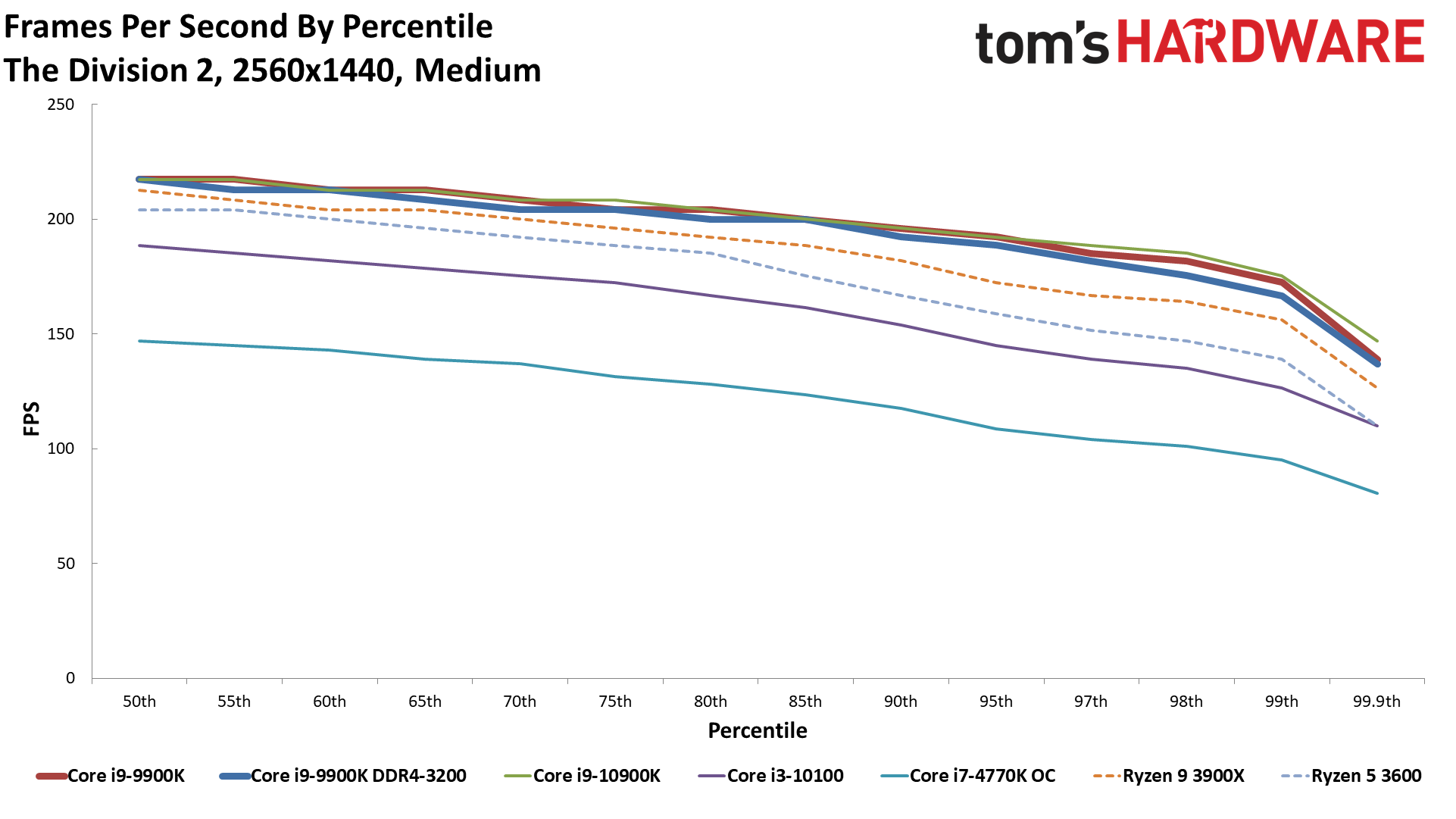

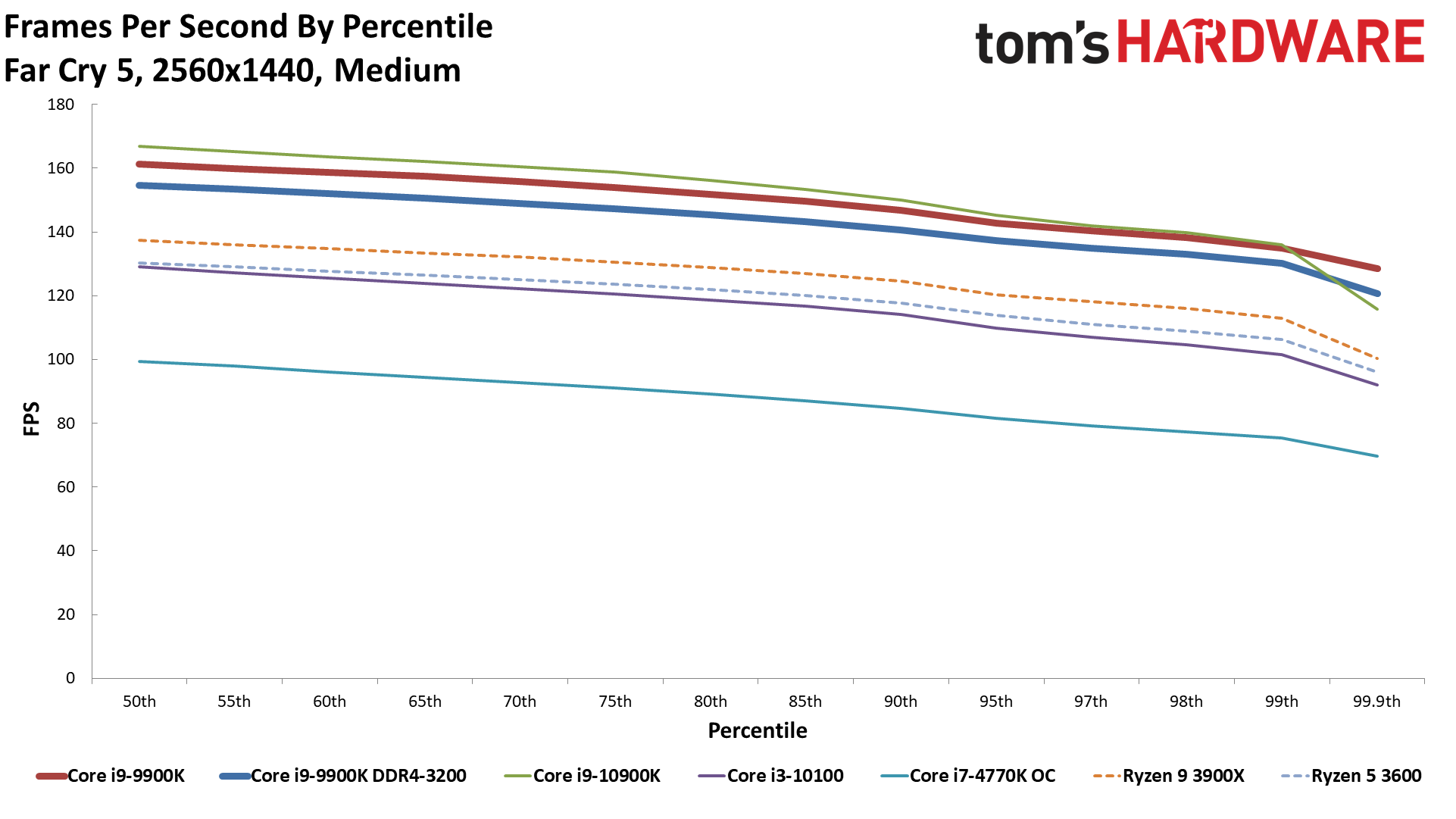

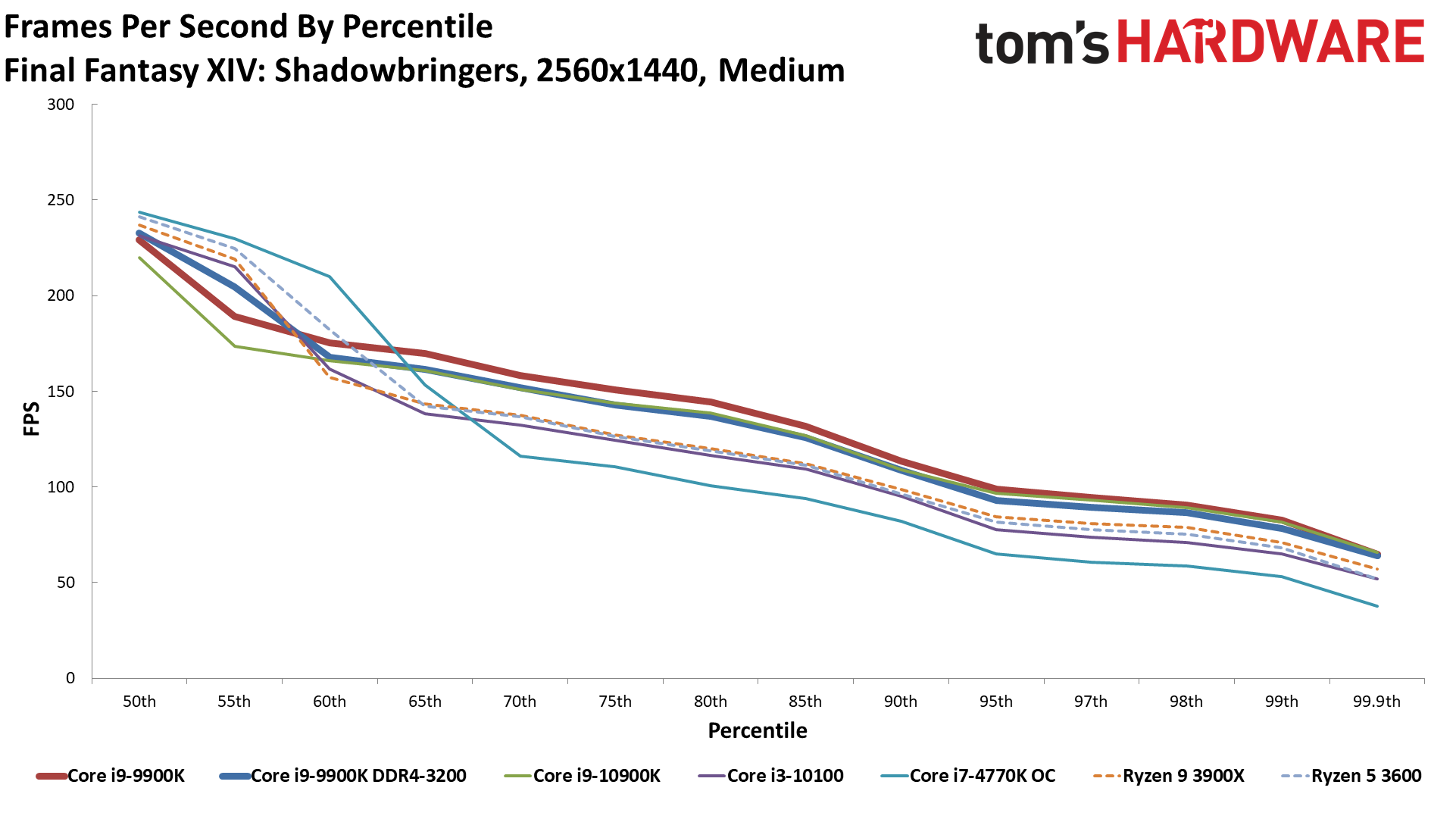

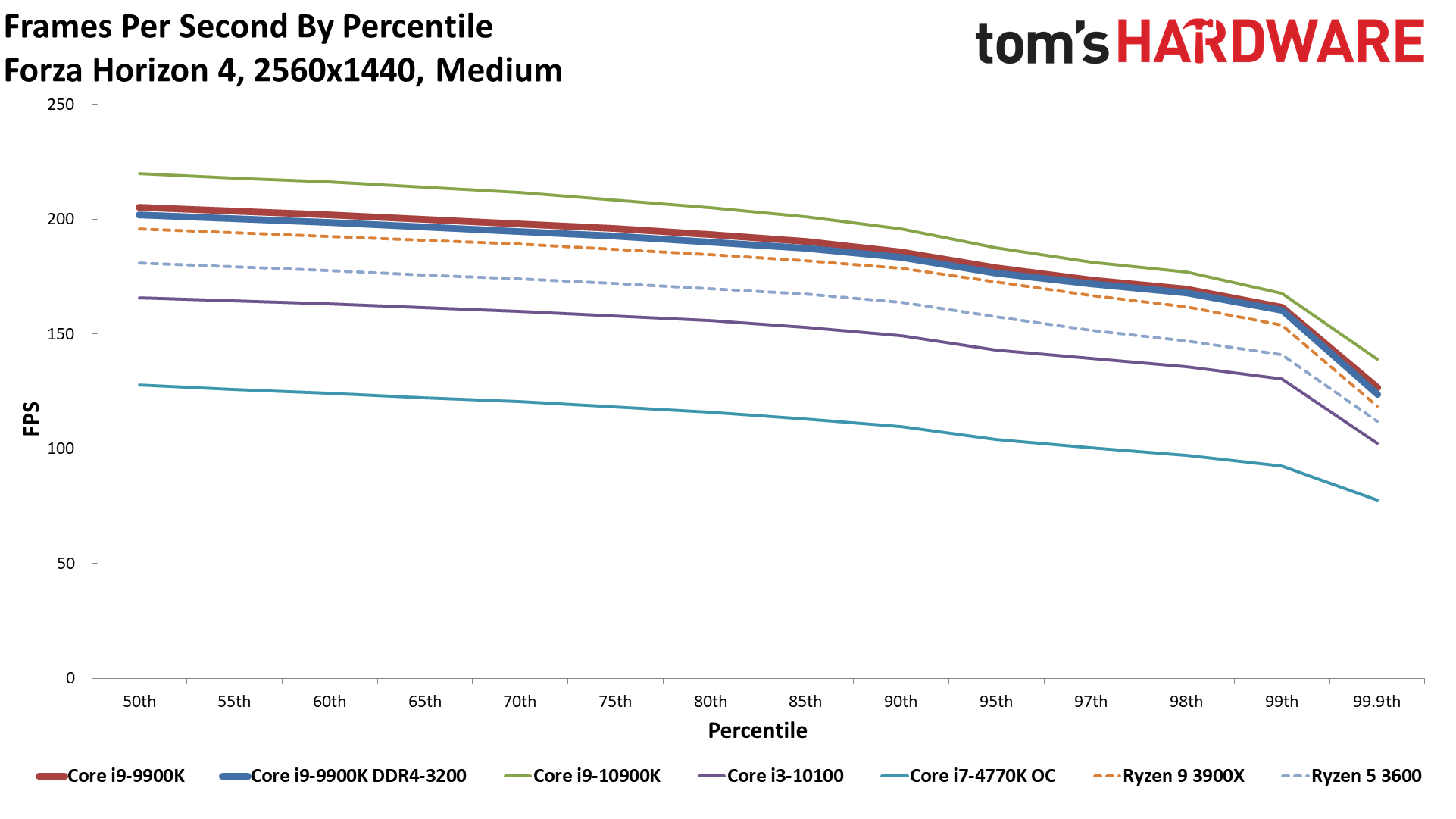

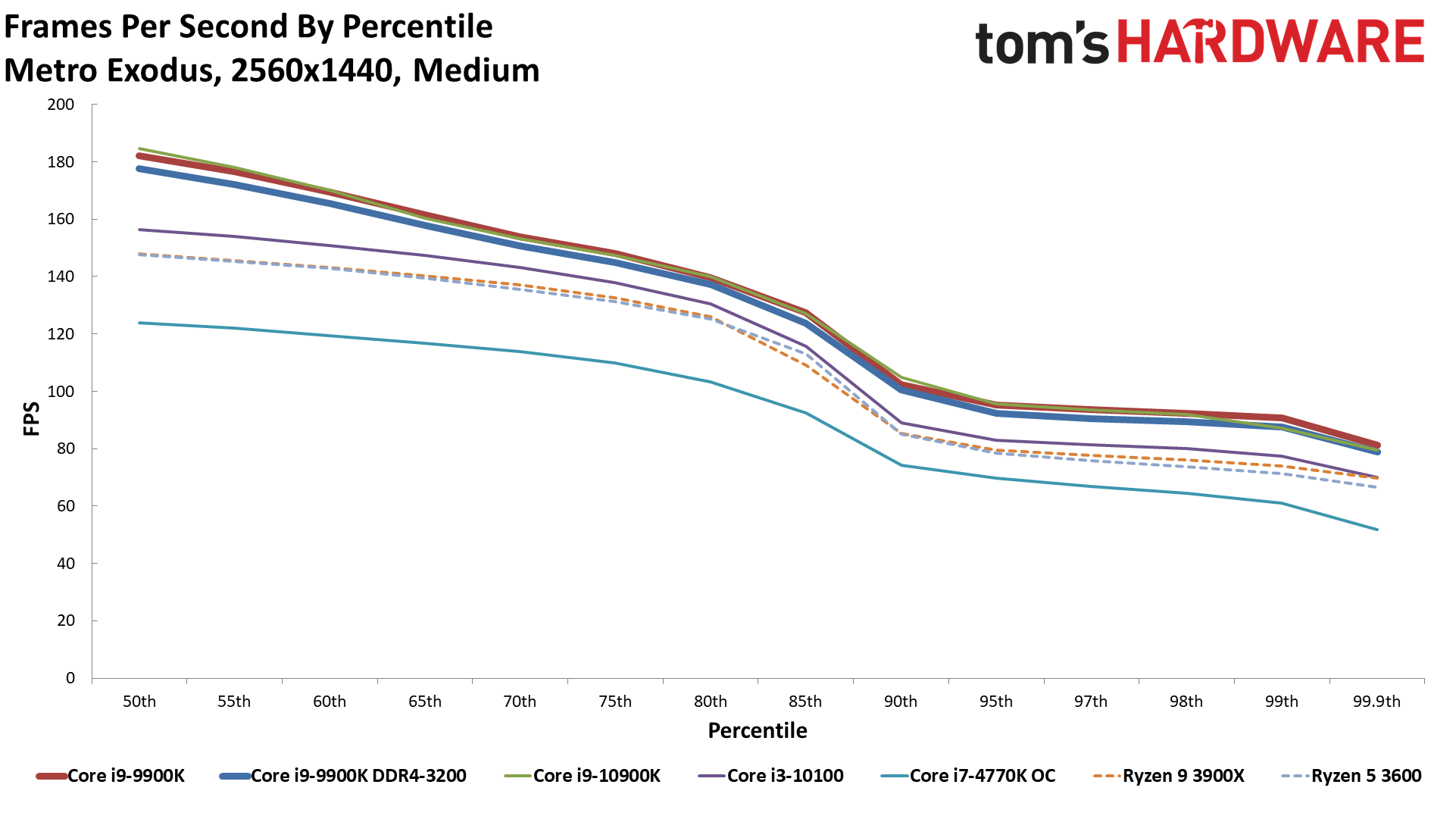

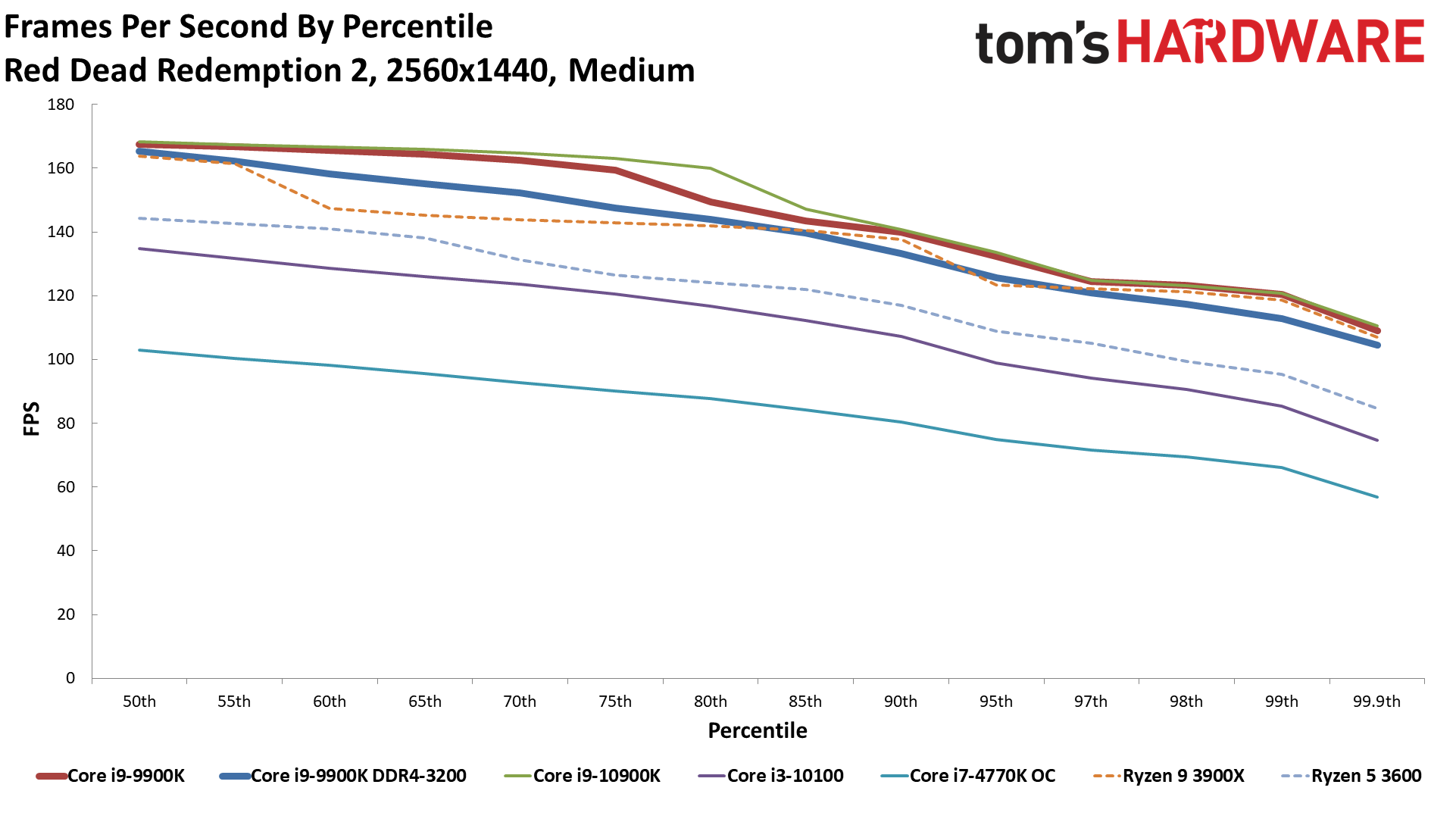

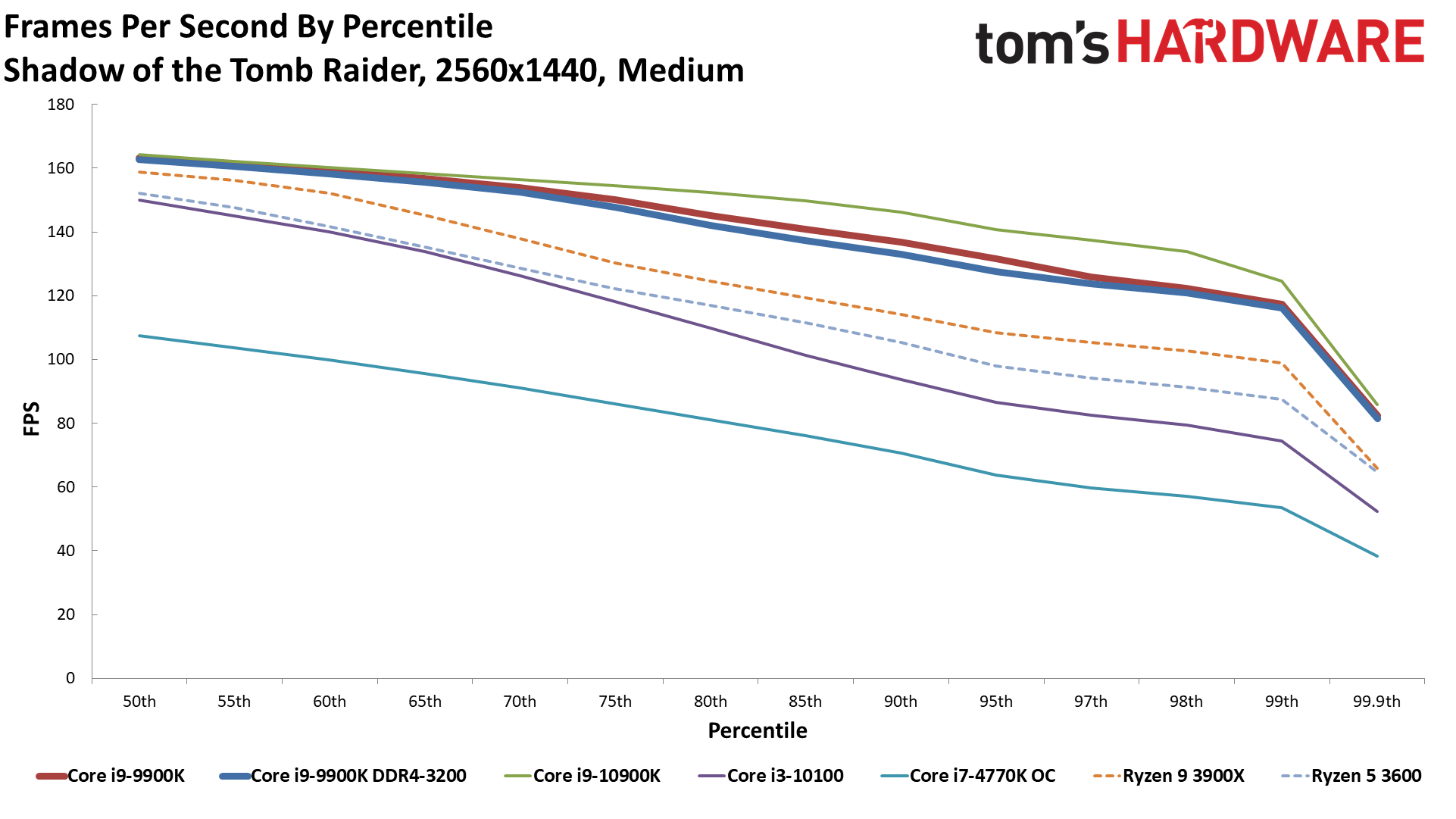

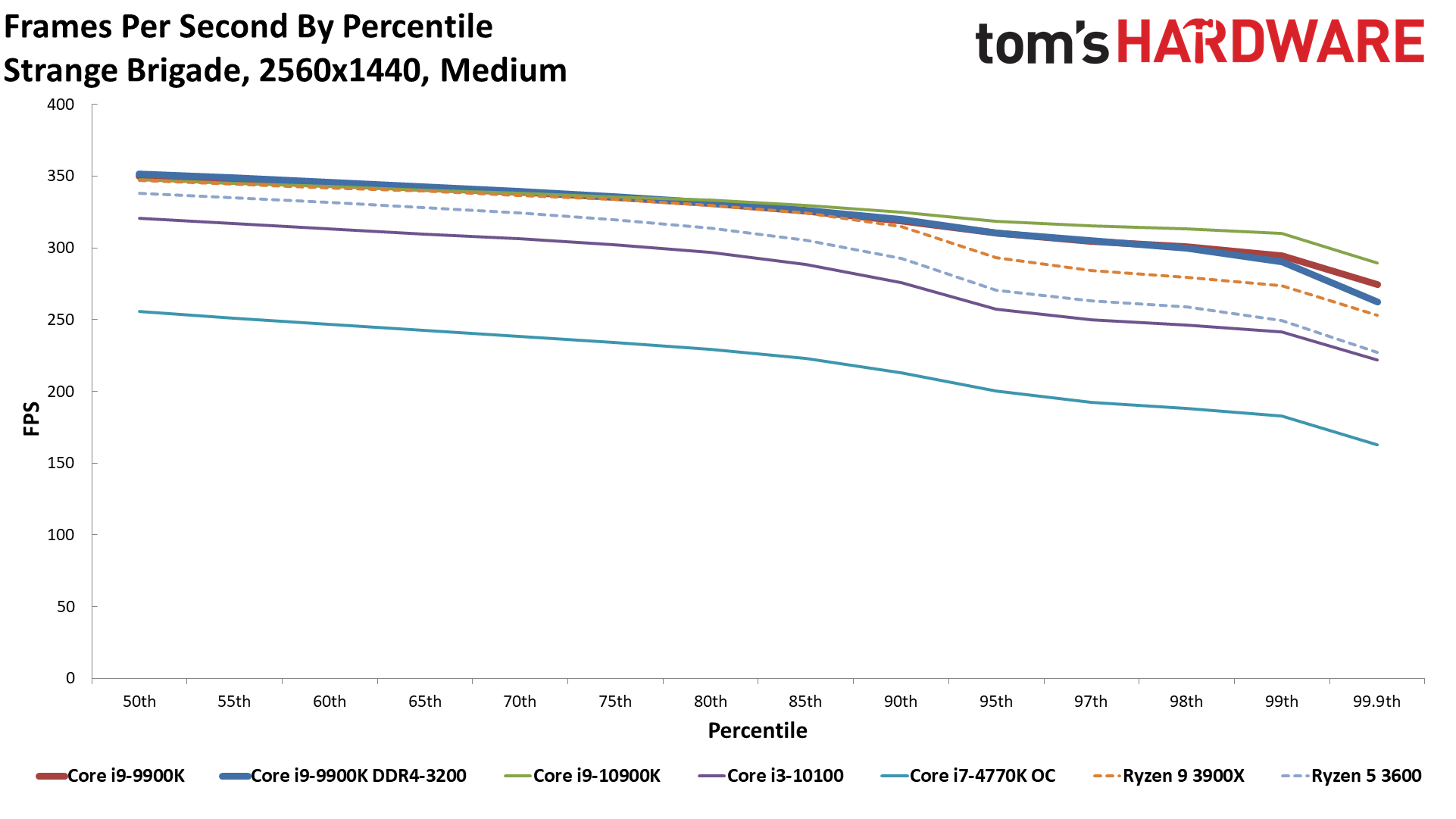

1440p Medium Percentiles

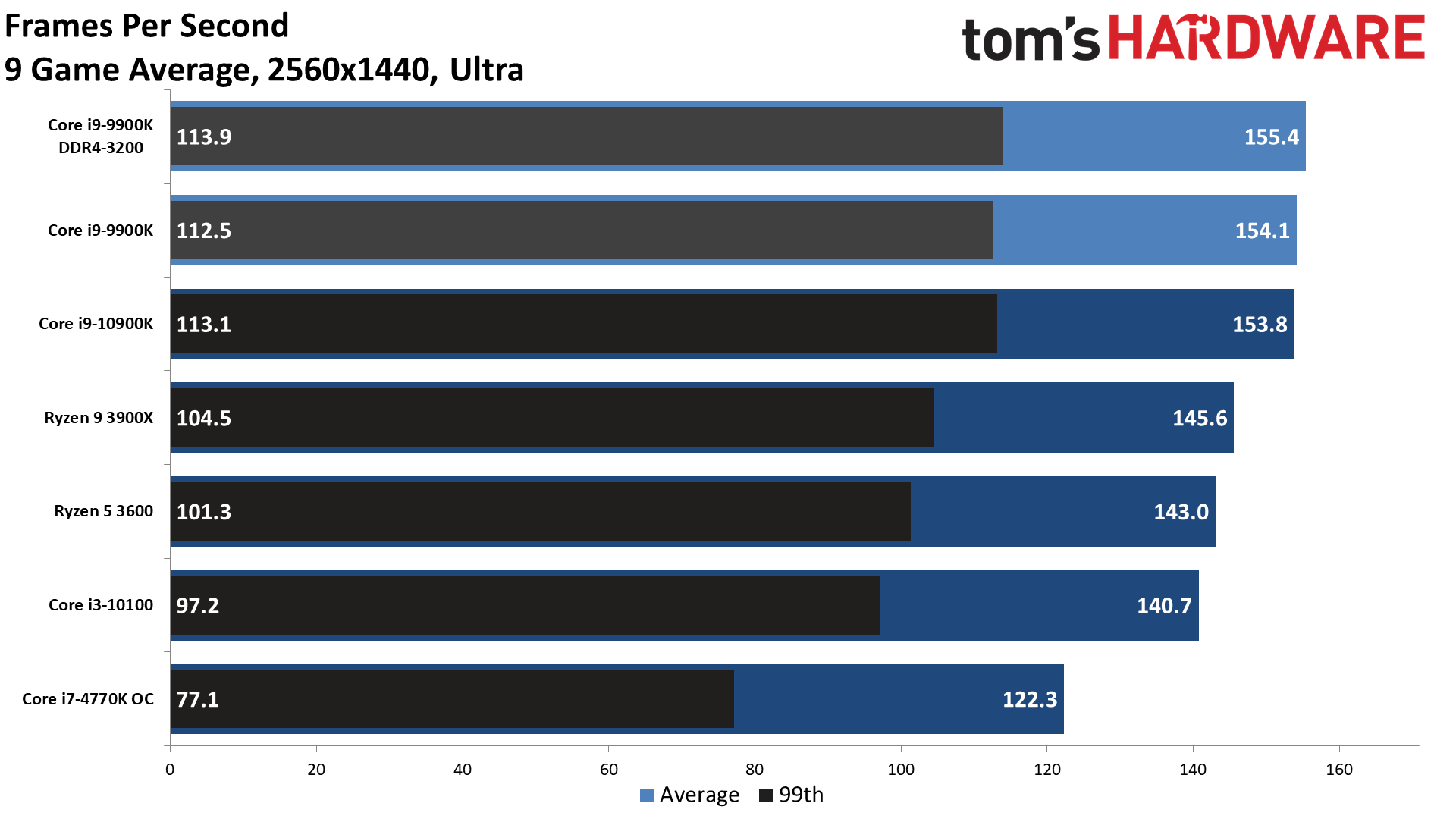

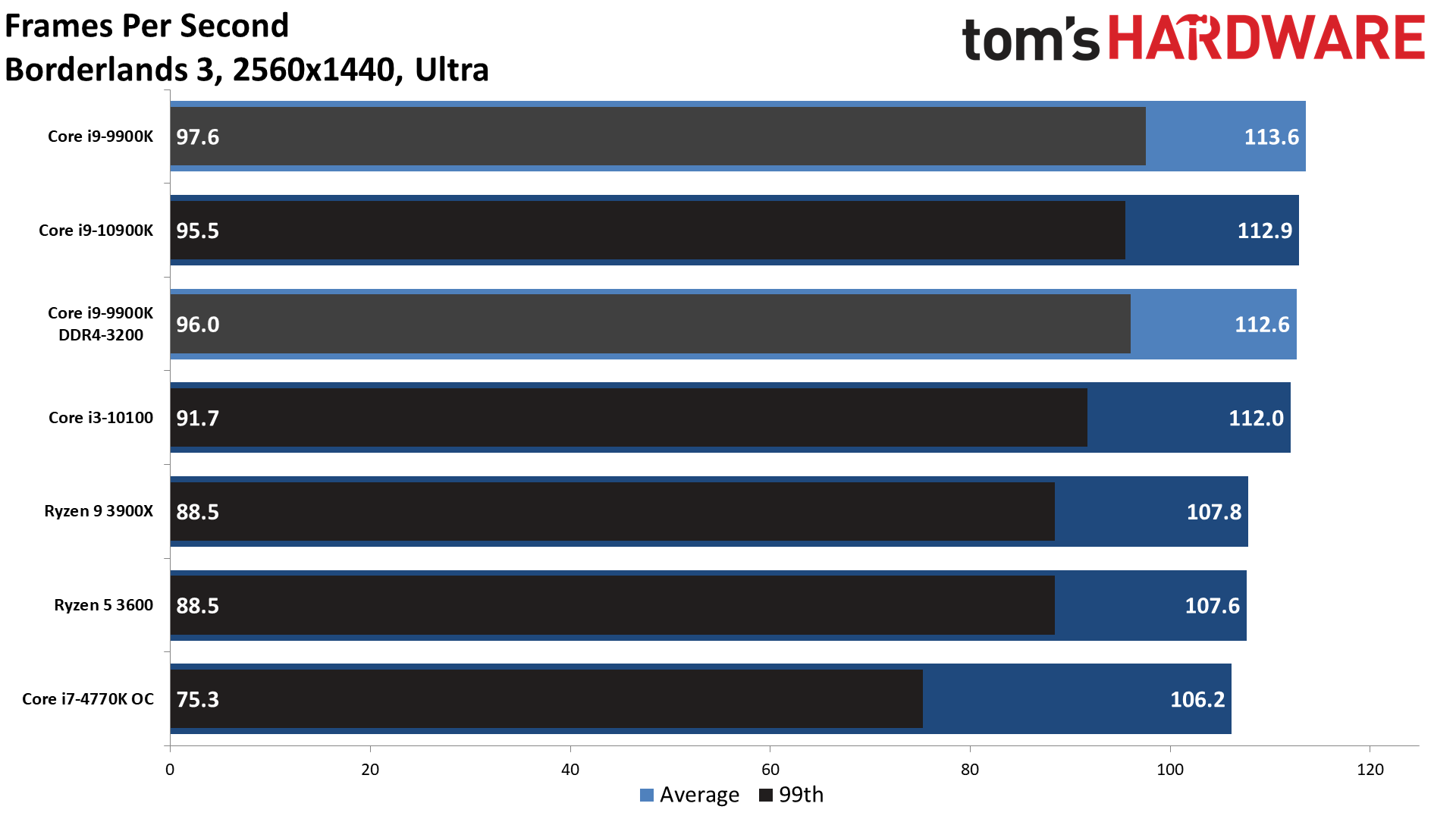

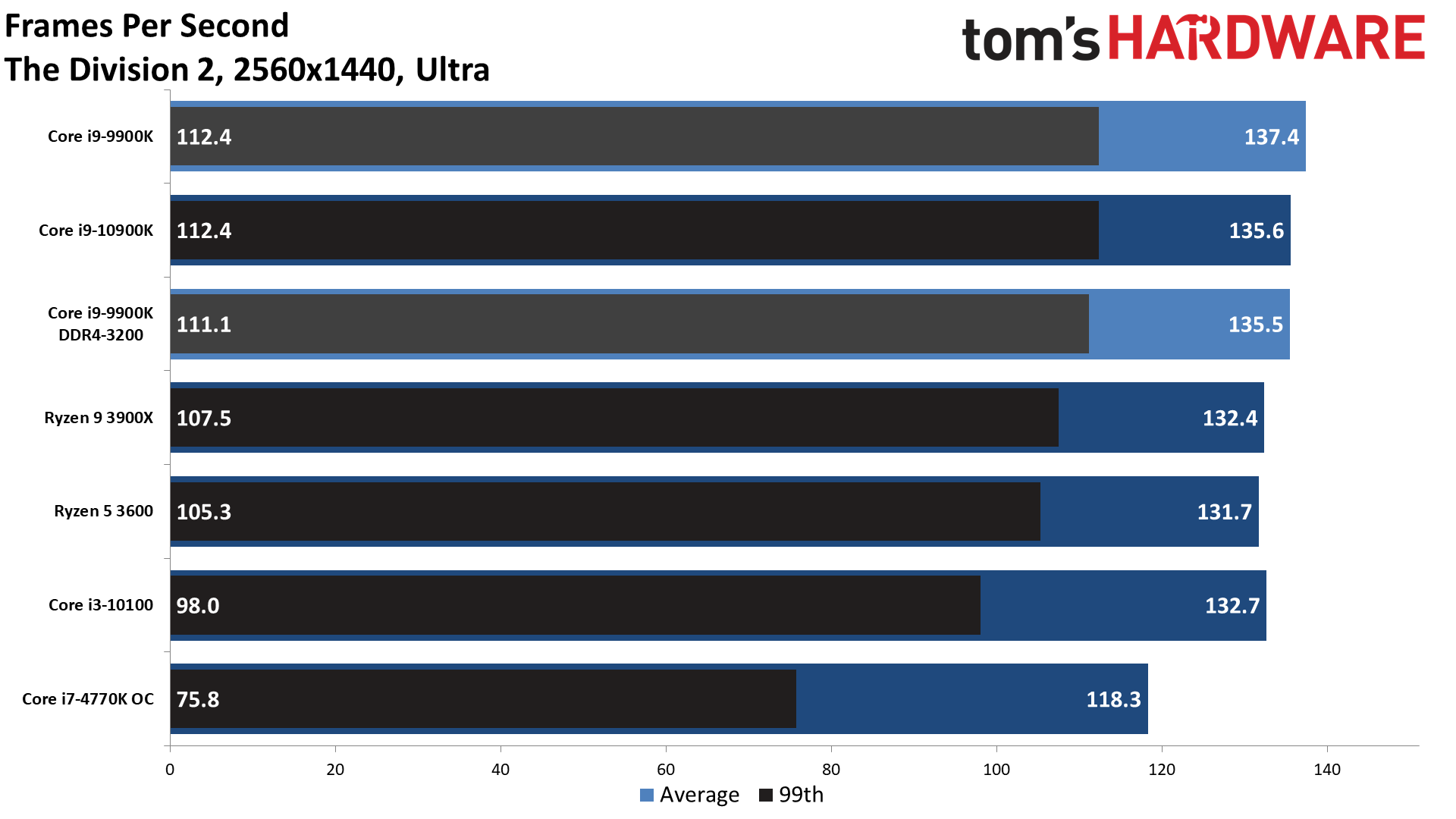

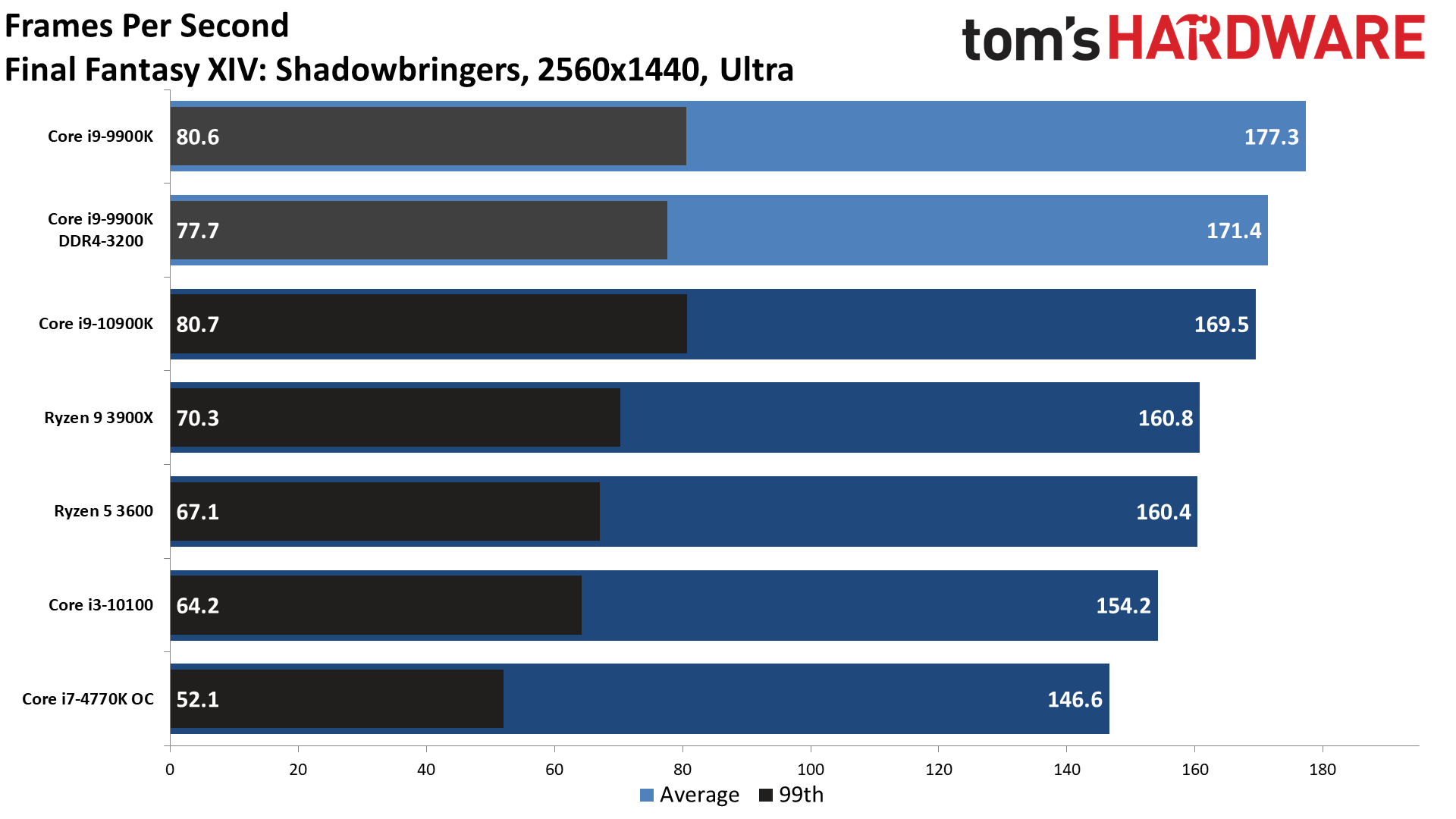

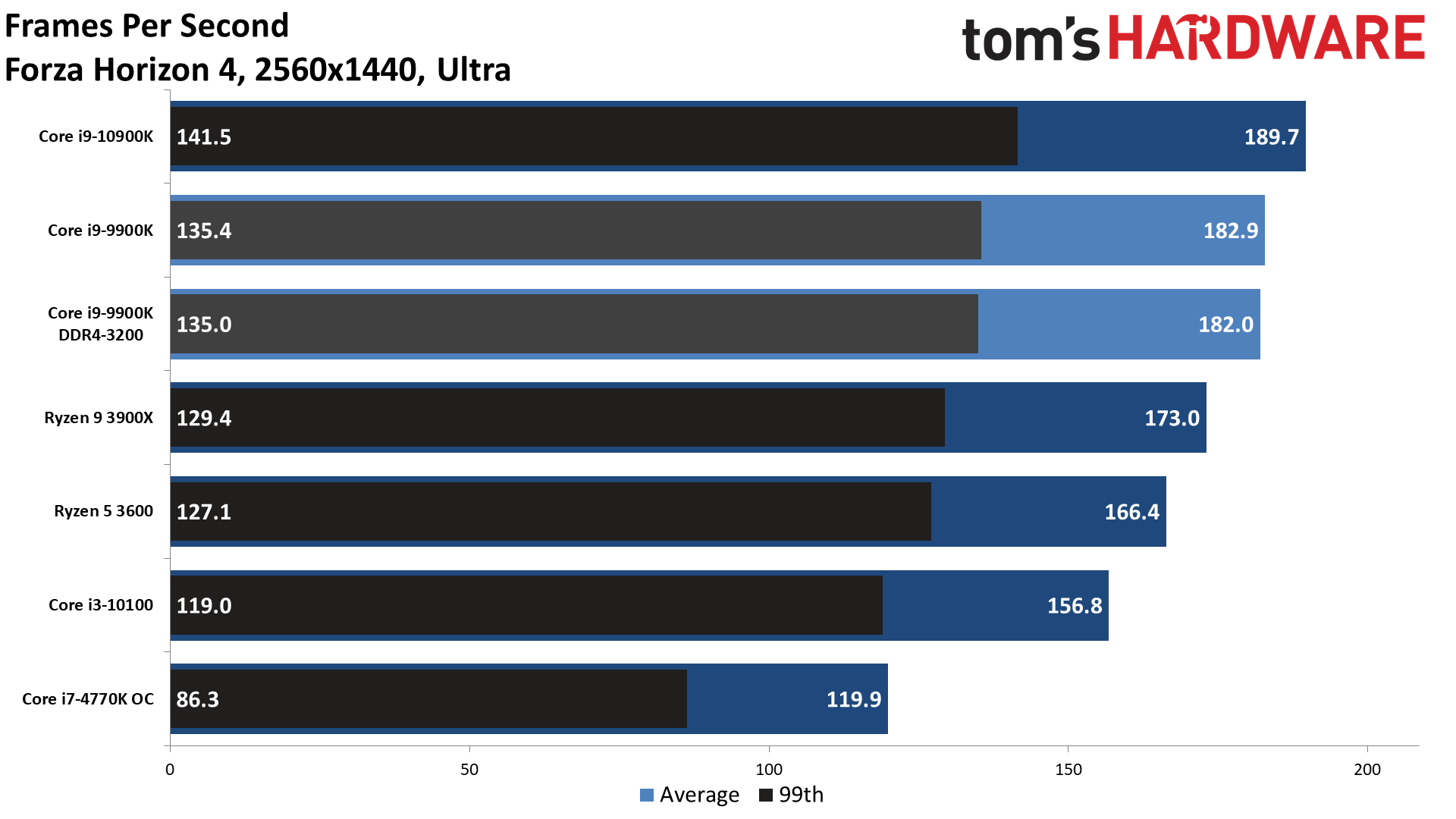

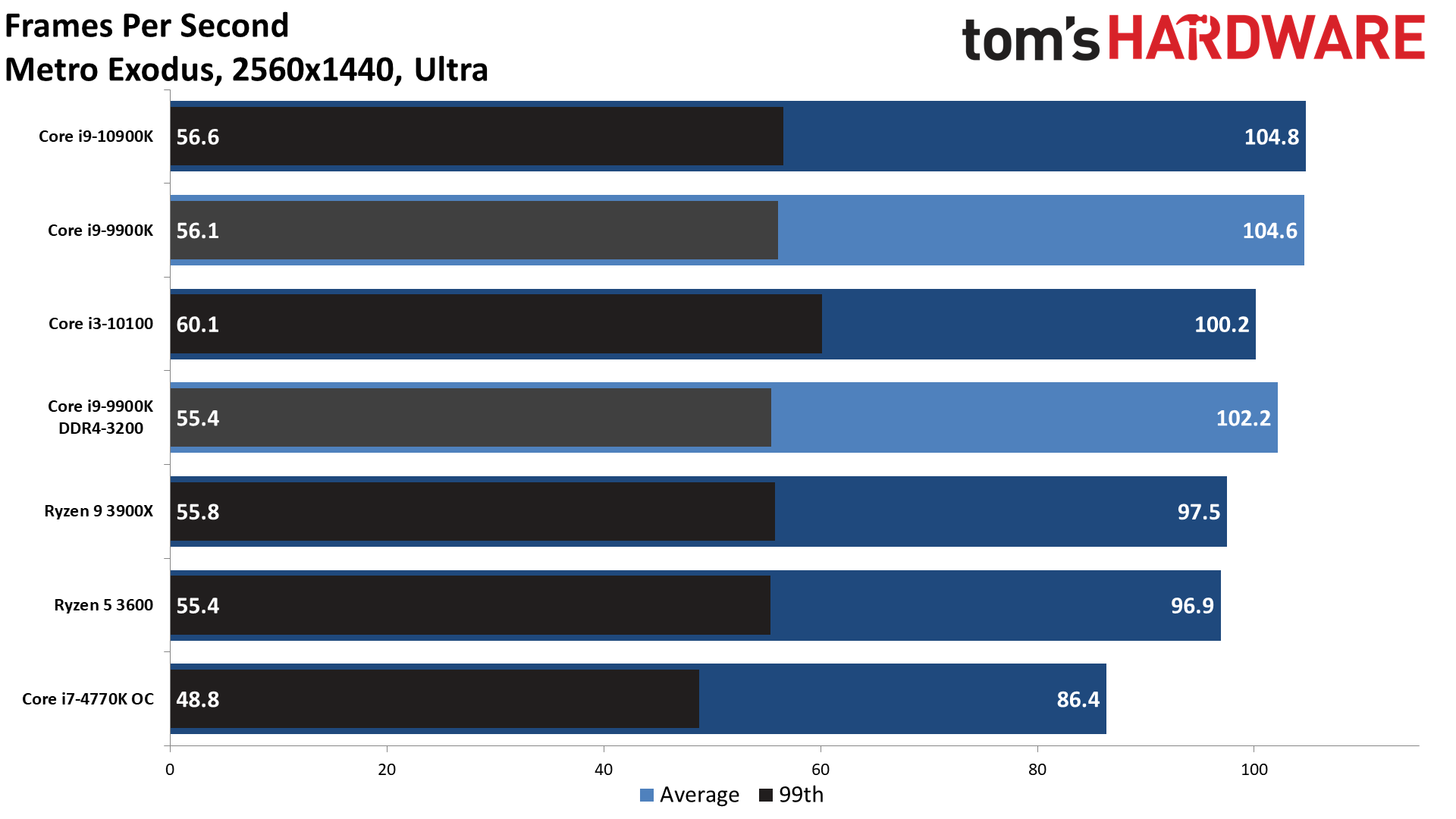

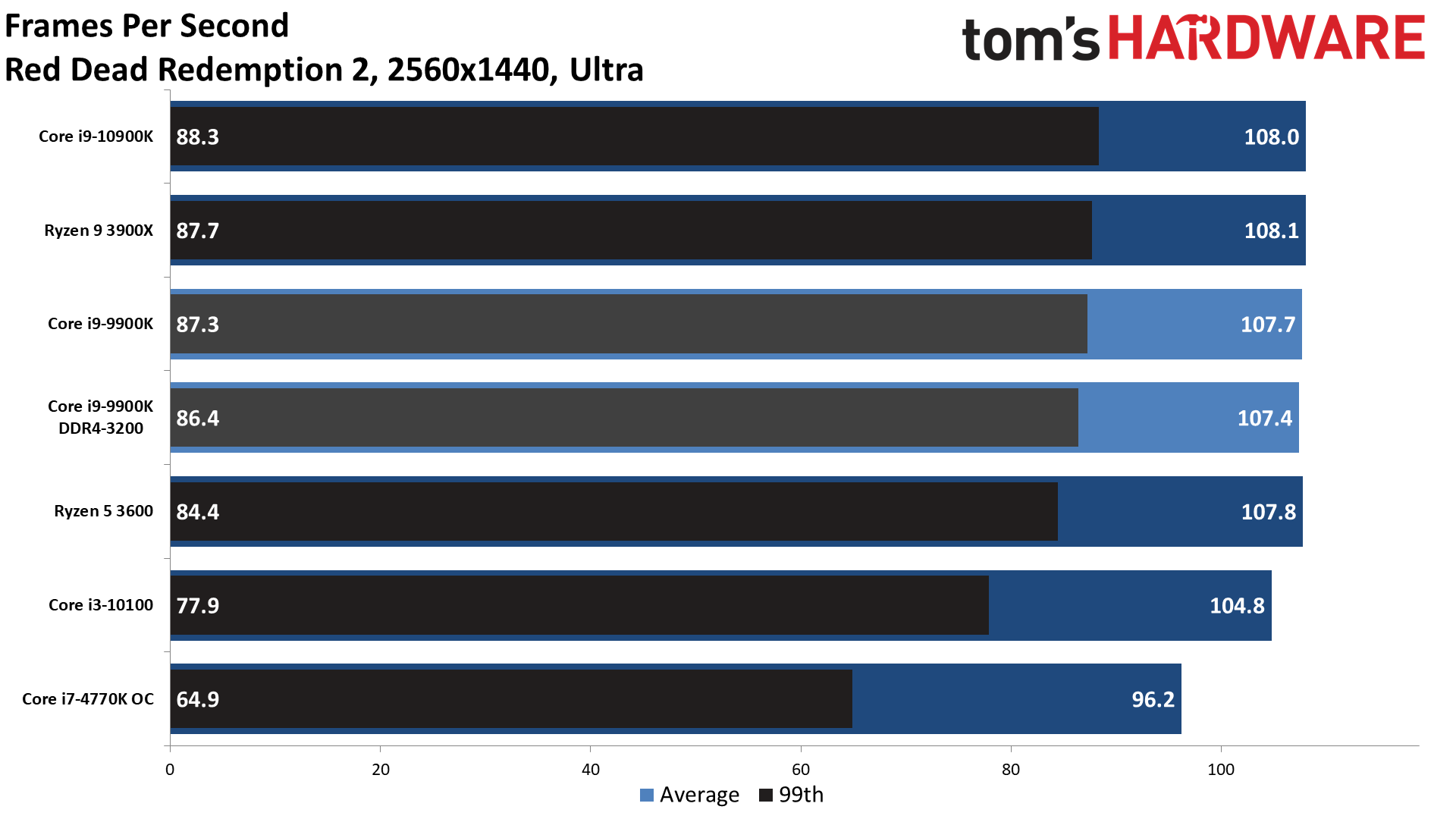

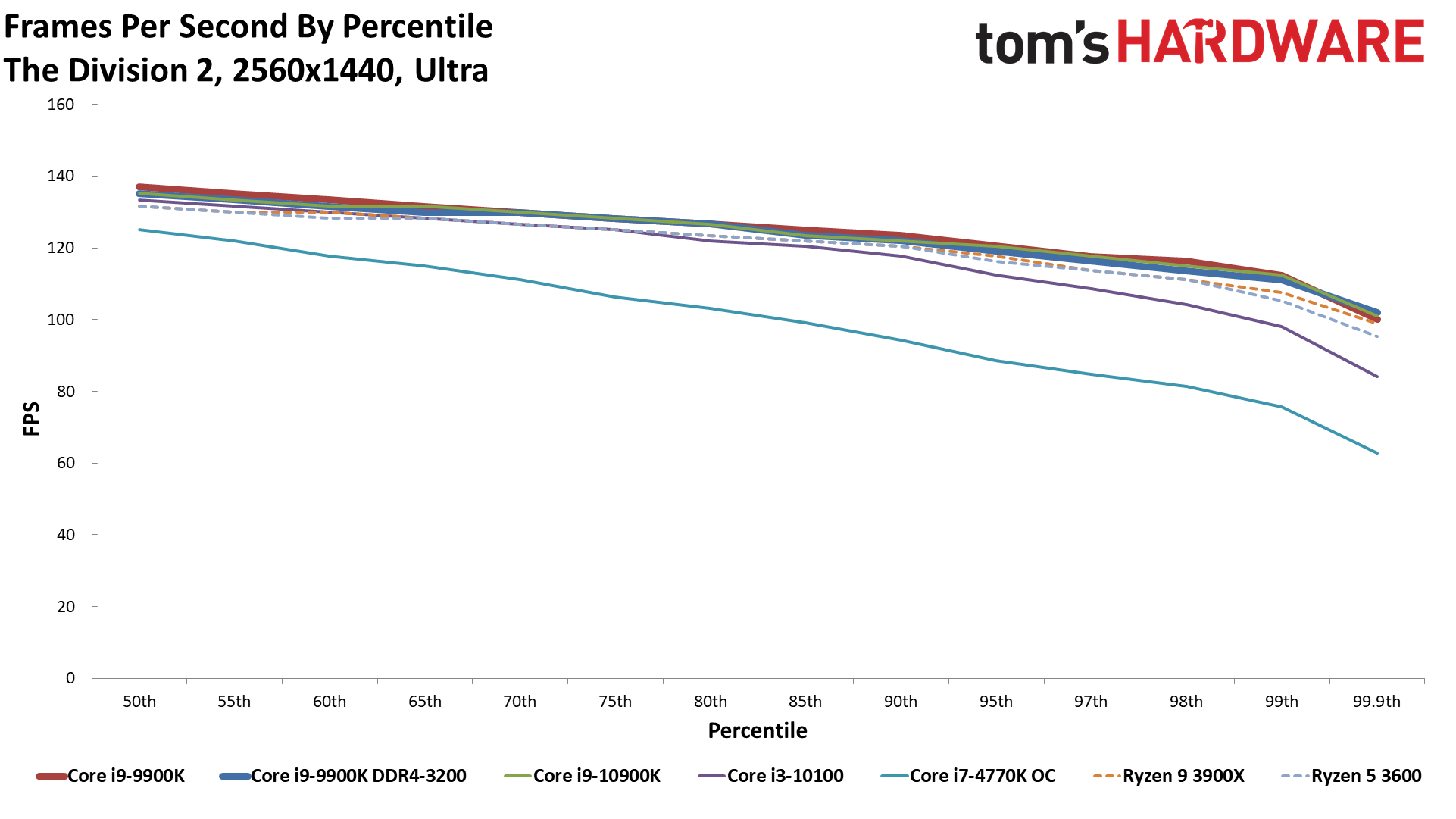

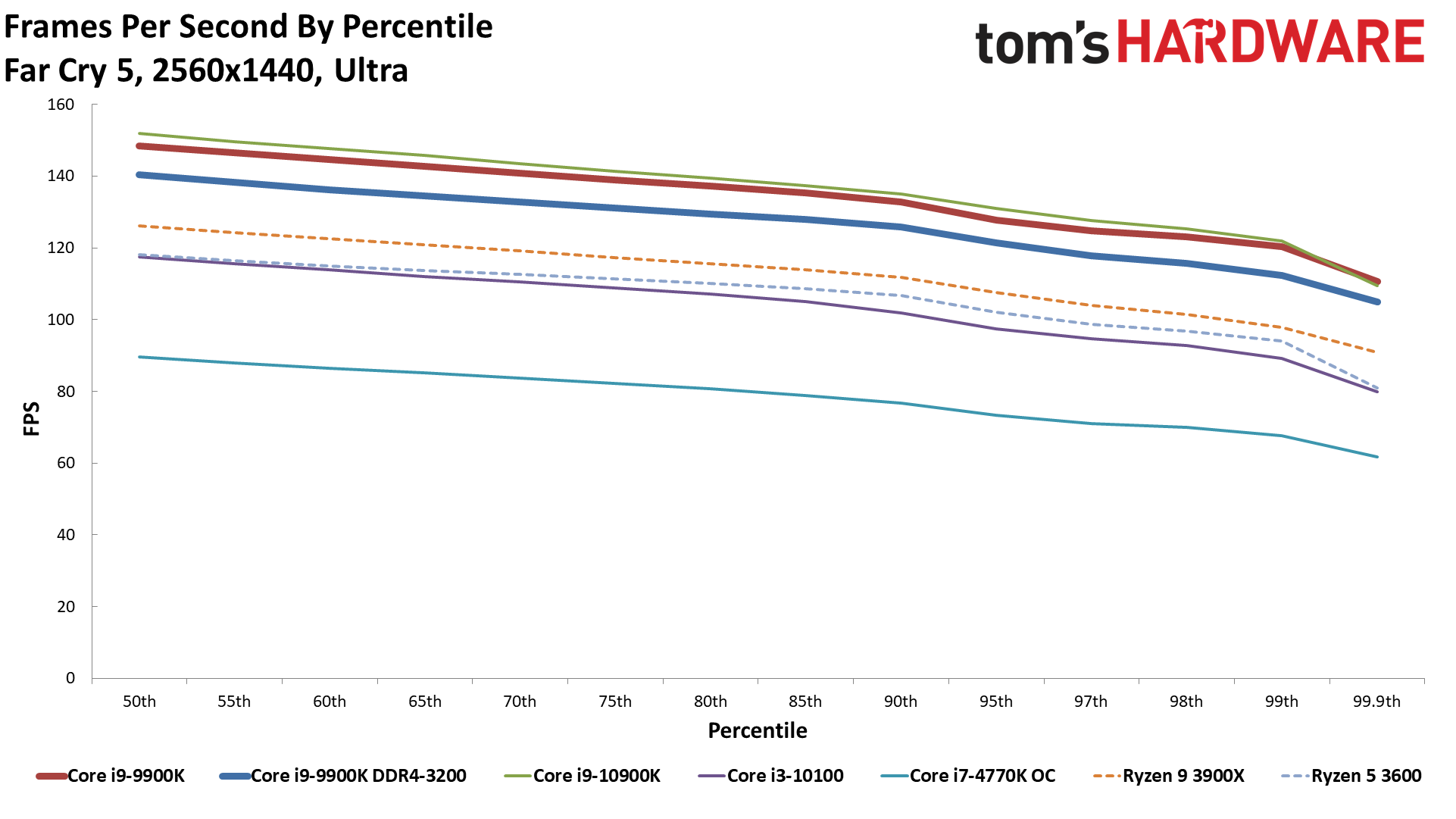

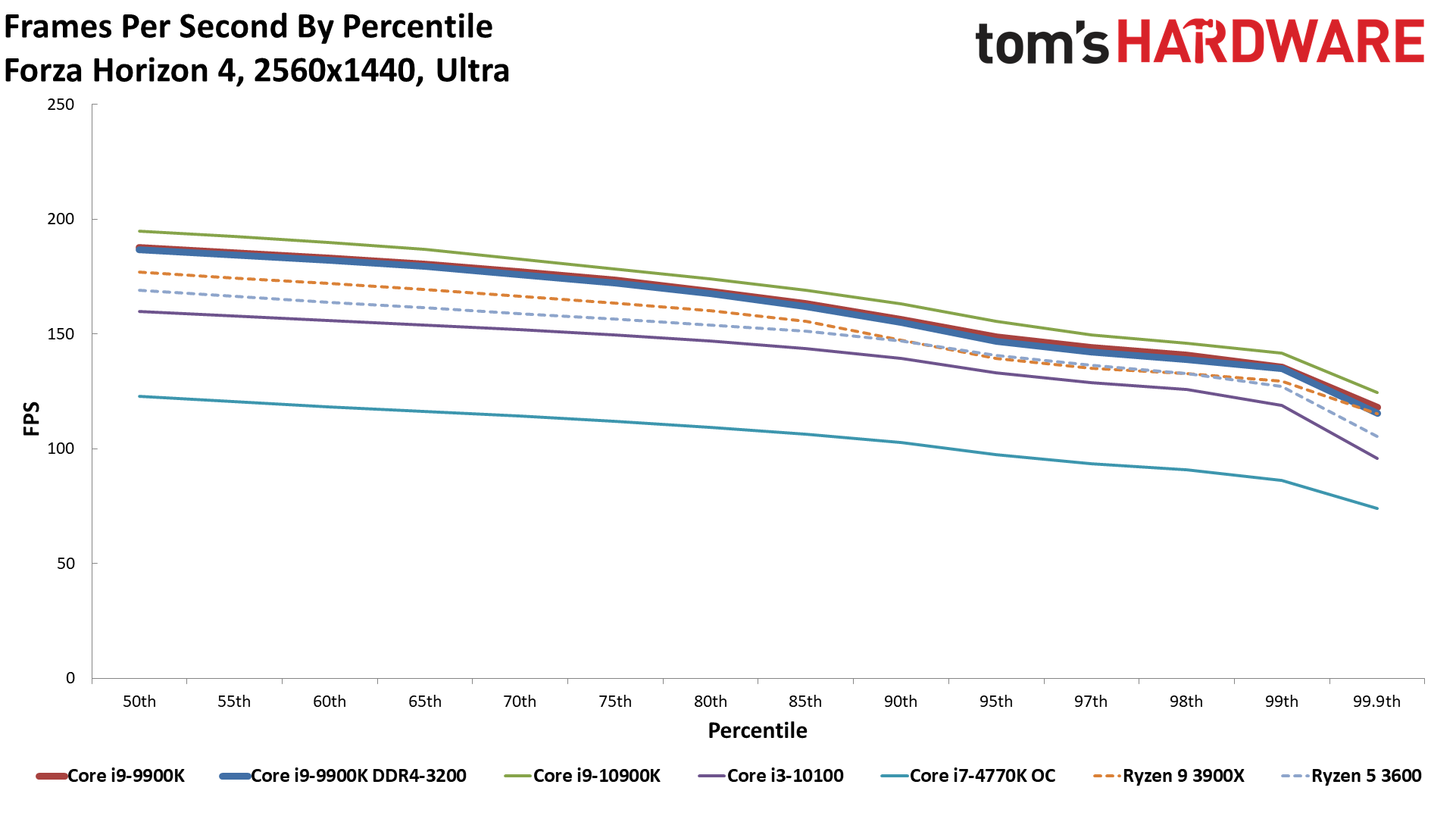

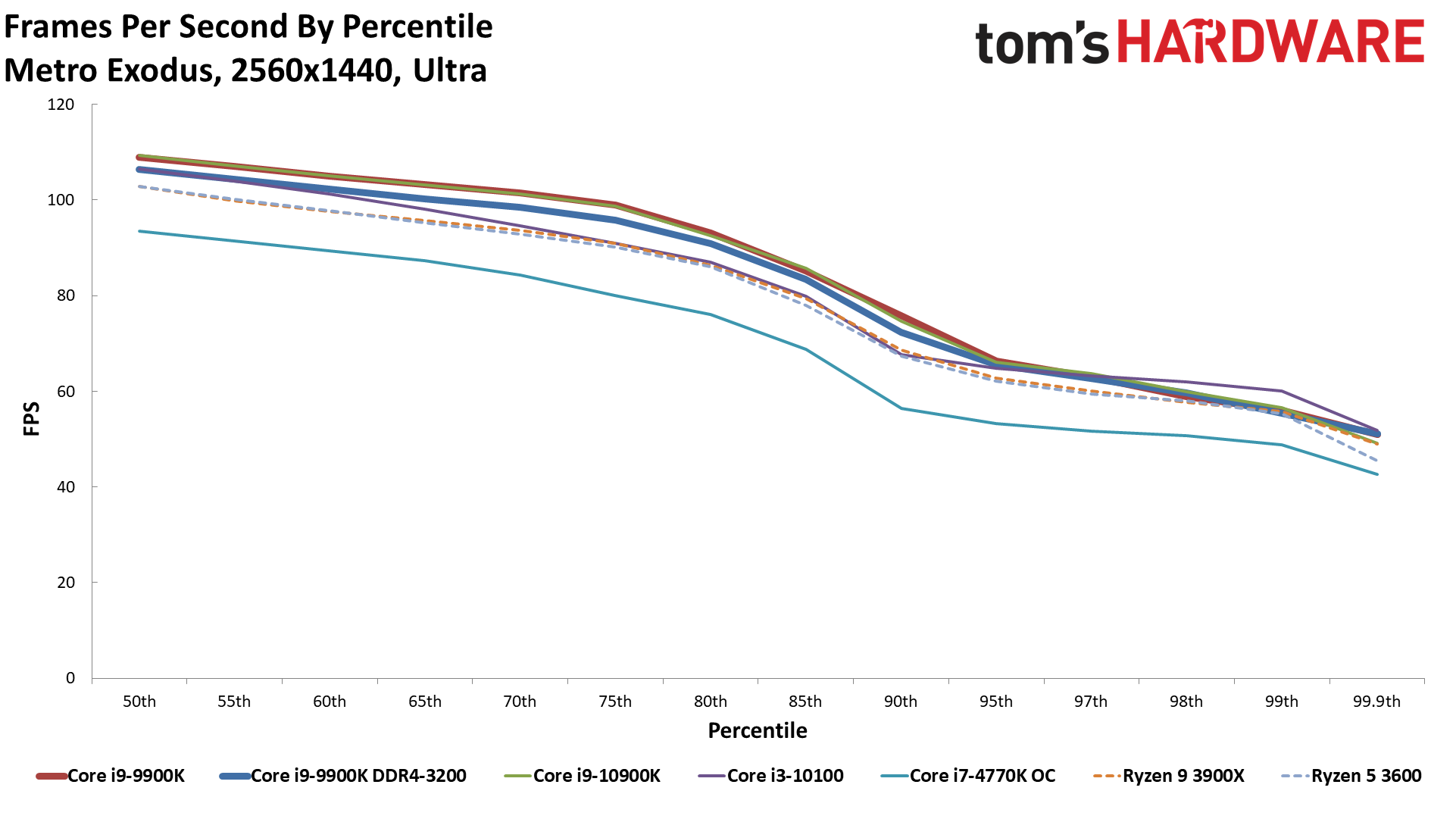

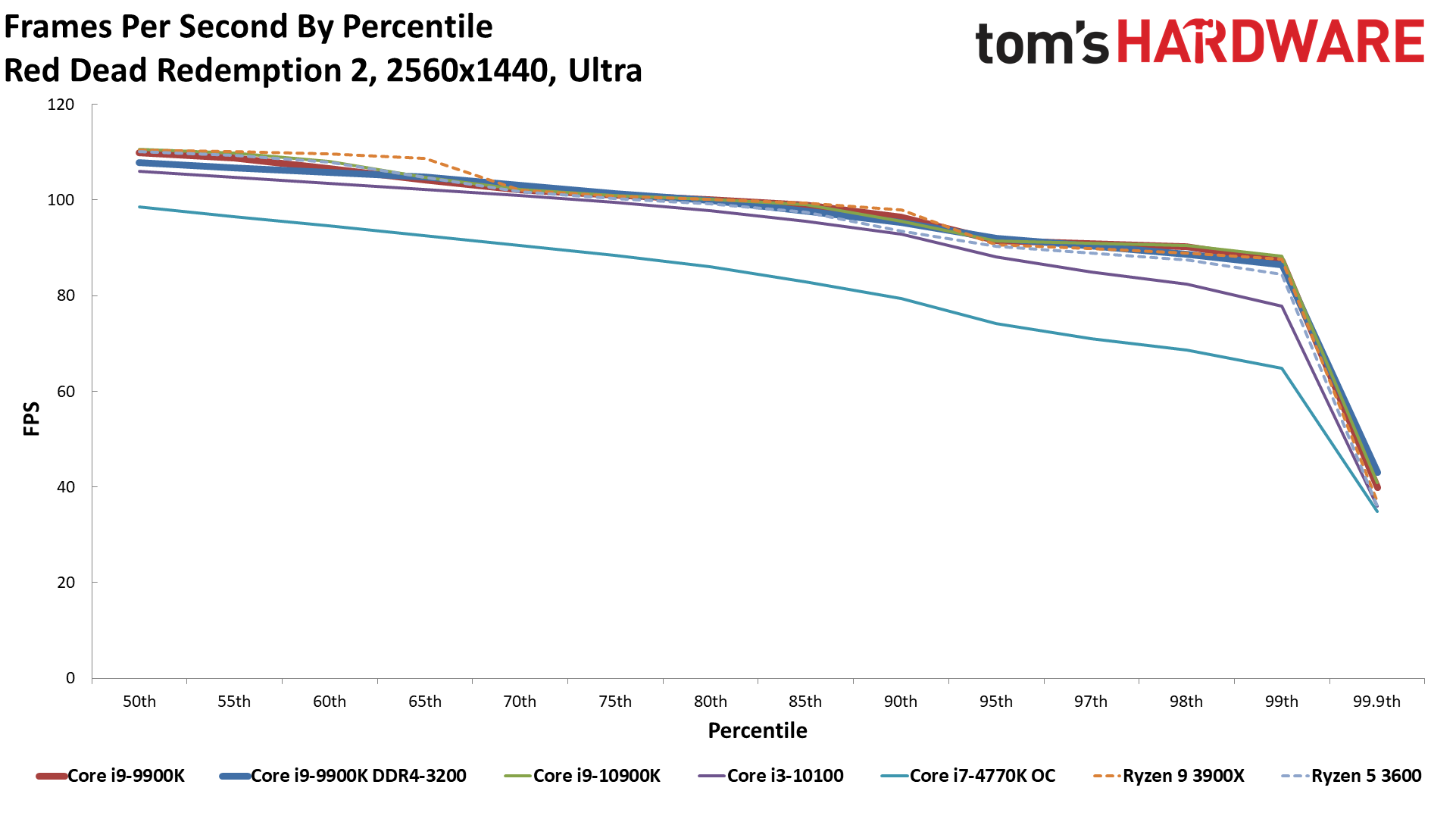

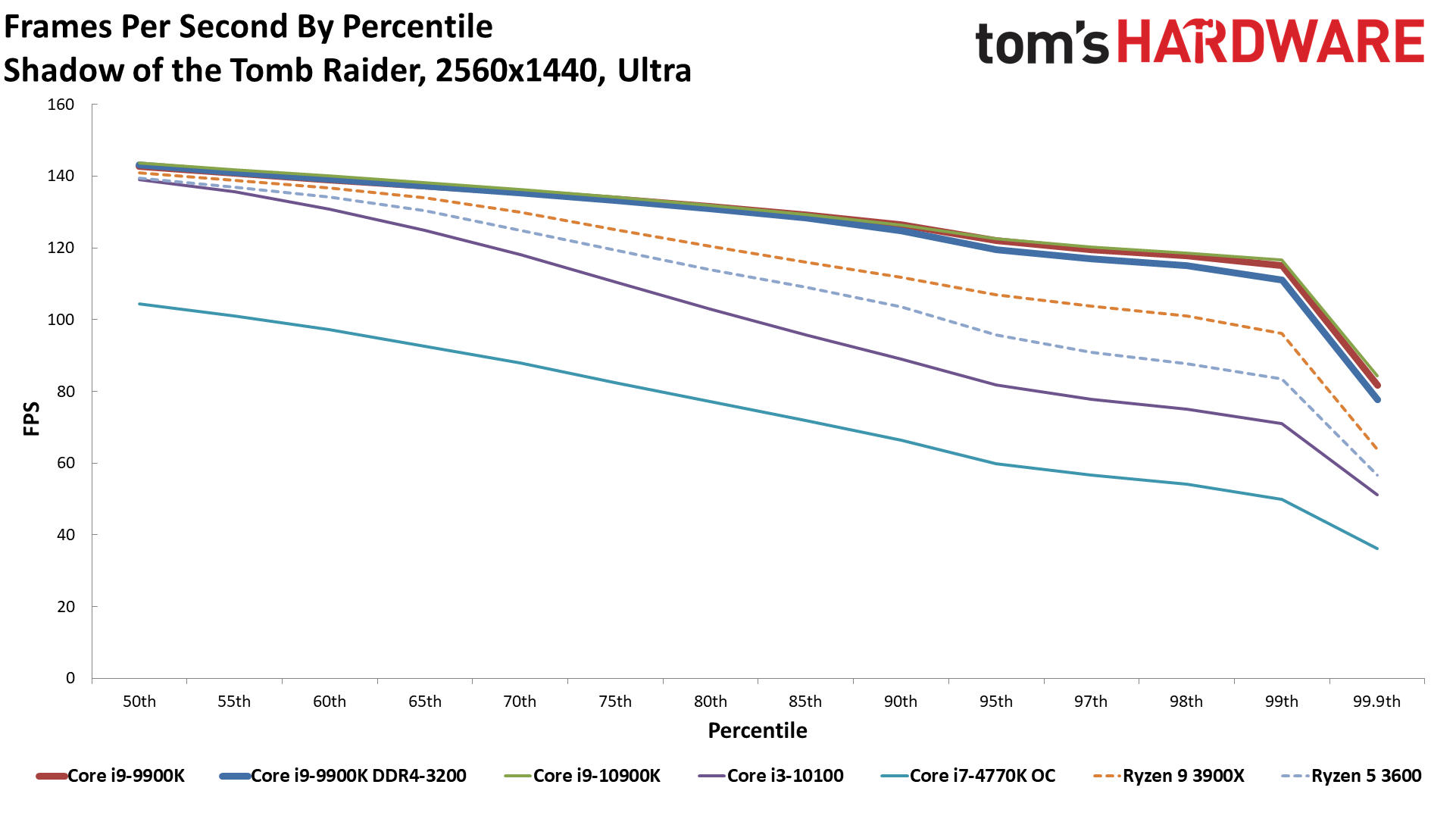

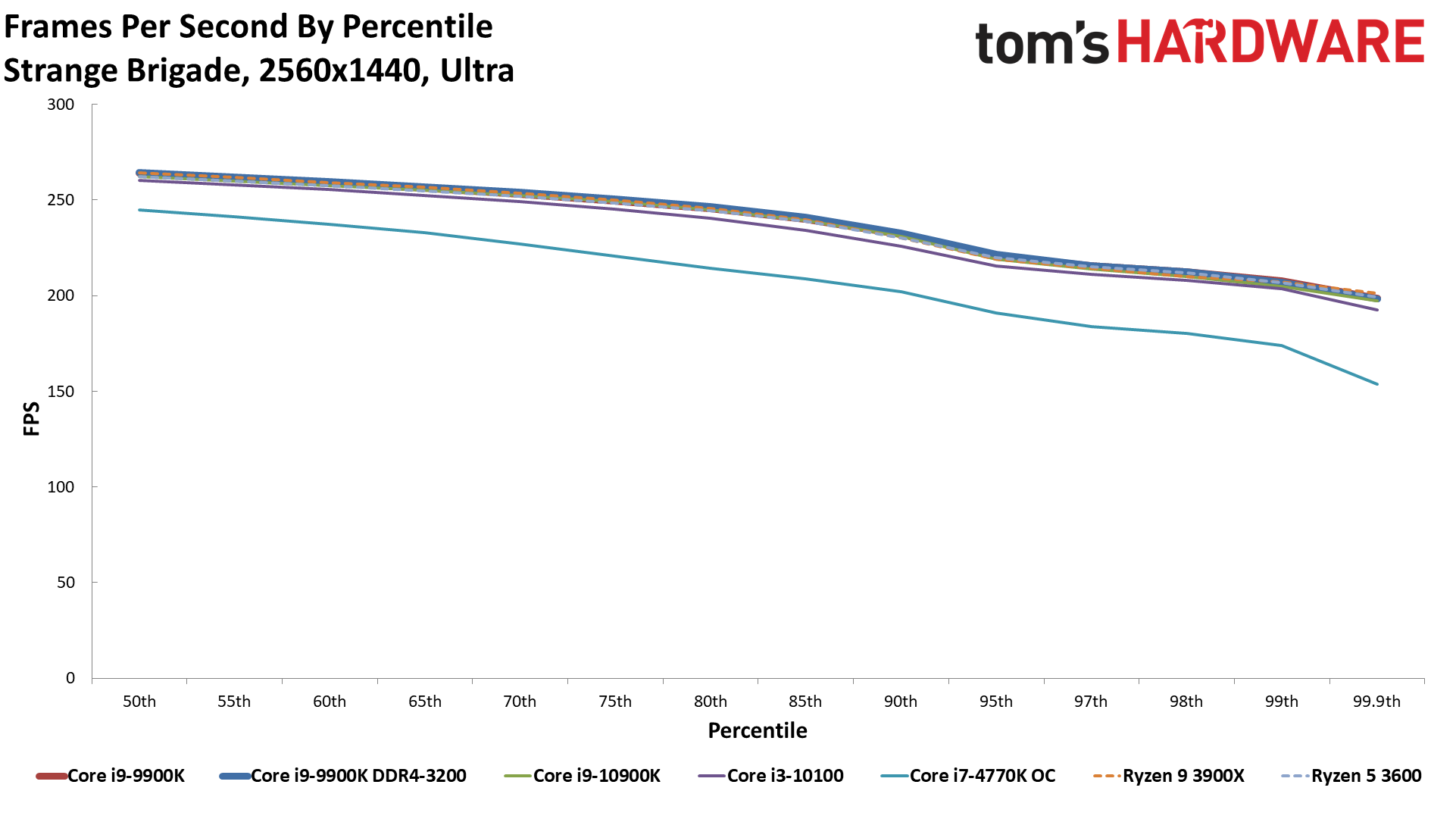

1440p Ultra

1440p Ultra Percentiles

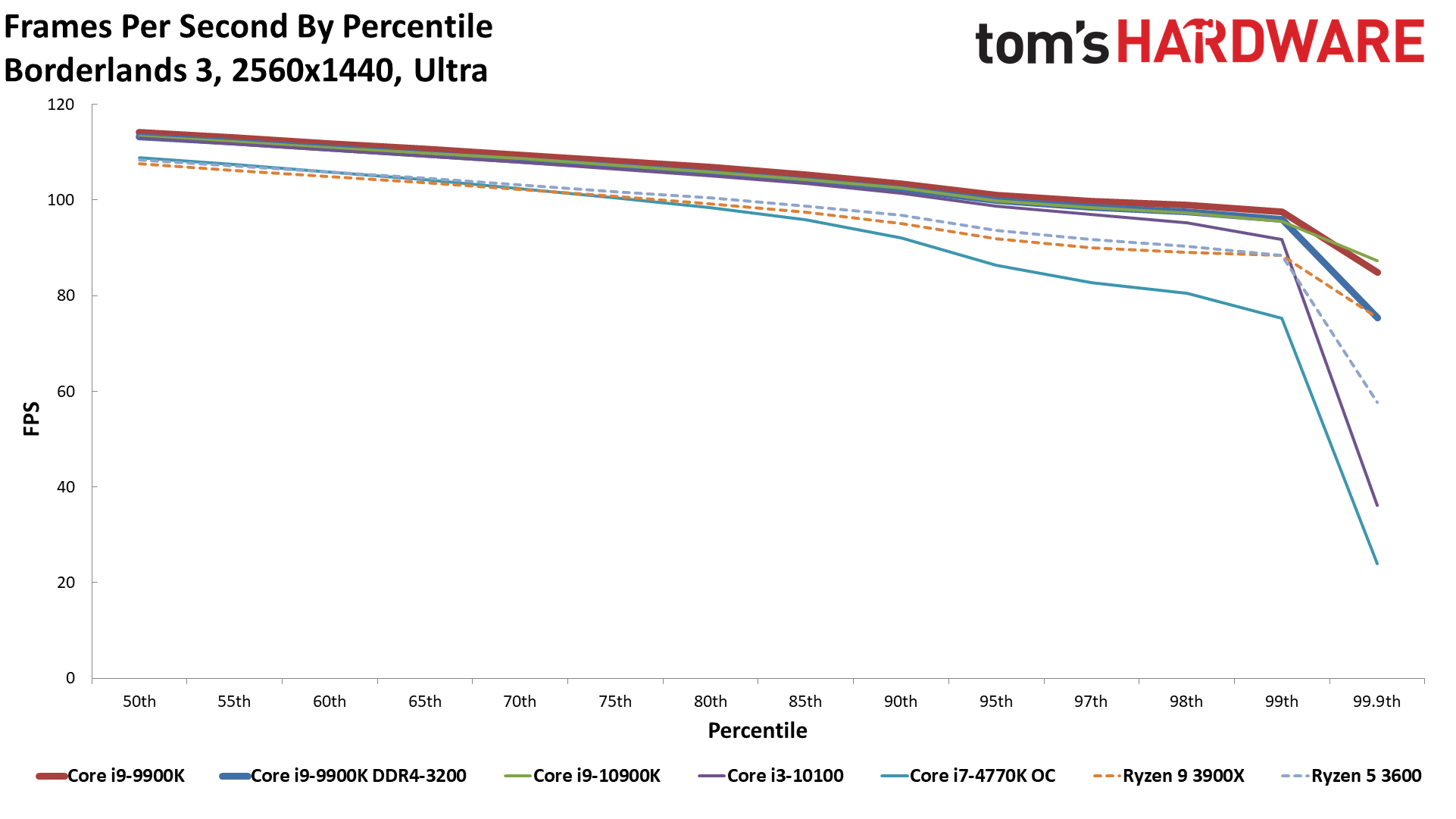

The jump to 1440p represents 78% more pixels, but performance only drops by 15% on average at medium settings, and 20% at ultra settings. In other words, 1080p was very much running into CPU bottlenecks, and shifting to a higher resolution doesn't hurt performance all that much, even with the fastest CPUs. And with a slower CPU, the change in performance is sometimes negligible.
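Here's the pixel math behind that 78% figure, as a quick Python sketch. The "naive drop" column assumes a fully GPU-bound game whose fps falls in direct proportion to pixel count; that's a deliberate simplification (real games don't scale perfectly with resolution), but it shows how far the measured 15-20% drop is from what pure GPU limits would suggest.

```python
def pixels(width: int, height: int) -> int:
    return width * height


base = pixels(1920, 1080)
for name, (w, h) in {"1440p": (2560, 1440), "4K": (3840, 2160)}.items():
    ratio = pixels(w, h) / base
    # Naive model (assumption): a fully GPU-bound game's fps falls in proportion to pixel count.
    naive_drop_pct = (1 - 1 / ratio) * 100
    print(f"{name}: {(ratio - 1) * 100:.0f}% more pixels than 1080p, "
          f"naive GPU-bound fps drop {naive_drop_pct:.0f}%")
```

Under that naive model, 1440p would cost about 44% of the 1080p framerate, and 4K (four times the pixels of 1080p) about 75%. The much smaller drop we measured at 1440p is another sign that 1080p was largely CPU-limited.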

Take the i7-4770K, for example. The 1440p medium results are only 3% lower than 1080p medium, and the 1440p ultra results are 6% lower than 1080p ultra. If you plan on buying an RTX 3080 and running at 1440p ultra with an older generation CPU, you'll lose some performance, but not that much. Well, maybe. It's worth noting that the i9-9900K with a 2080 Super is faster overall at 1440p medium and below compared to the i7-4770K with a 3080, but the 4770K does at least get a small boost to performance. Or with a 2080 Ti, the 9900K even wins at 1440p ultra.

Take just one step up the CPU ladder, like moving to a Ryzen 5 3600, or even a Core i3-10100 (which should be about the same level of performance as a Core i7-6700K), and there's at least some benefit to buying an RTX 3080. Still, if your CPU has fewer than six cores, we'd definitely recommend upgrading it, and perhaps even the rest of your PC, before taking the RTX 3080 plunge at 1440p.

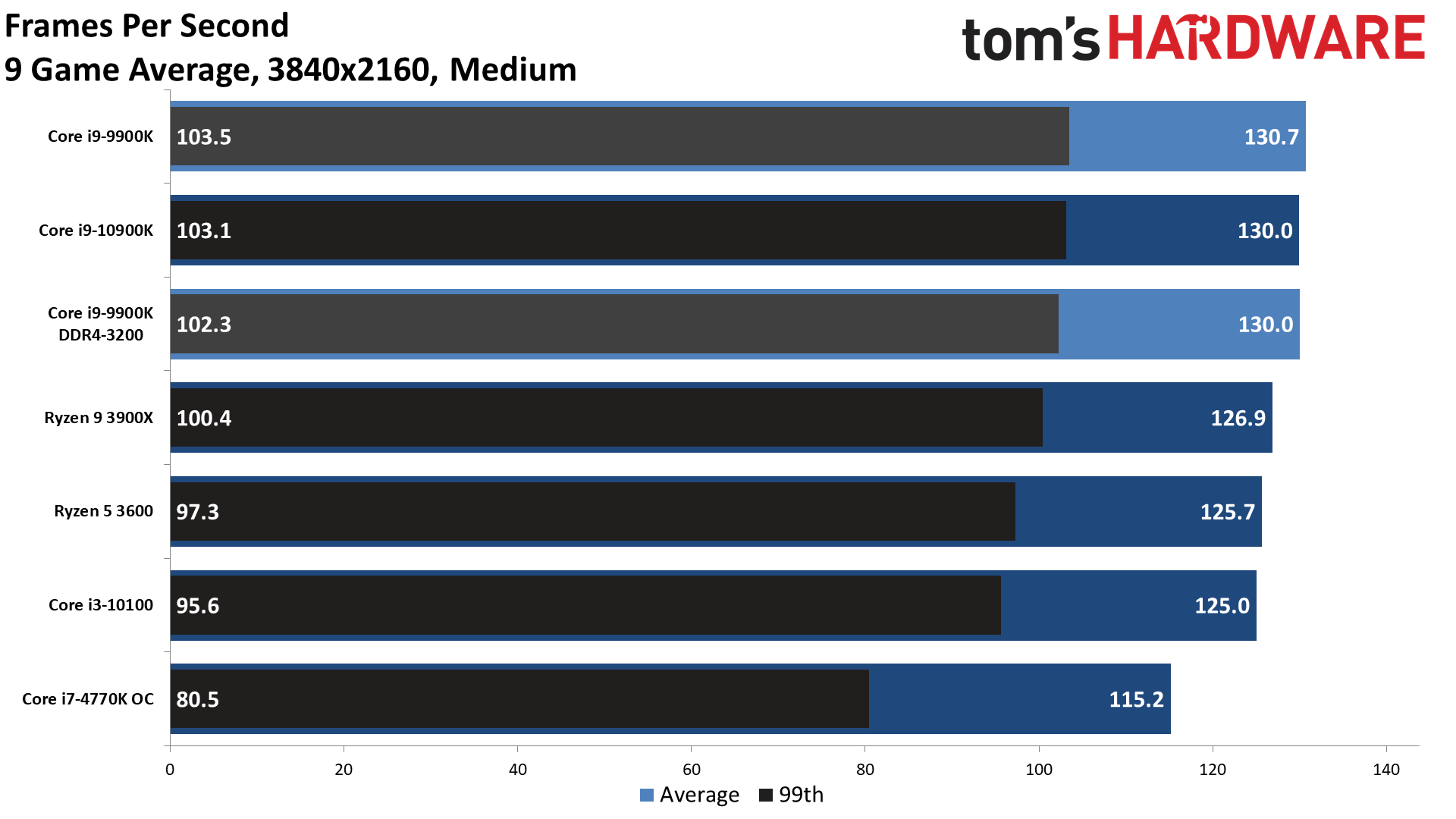

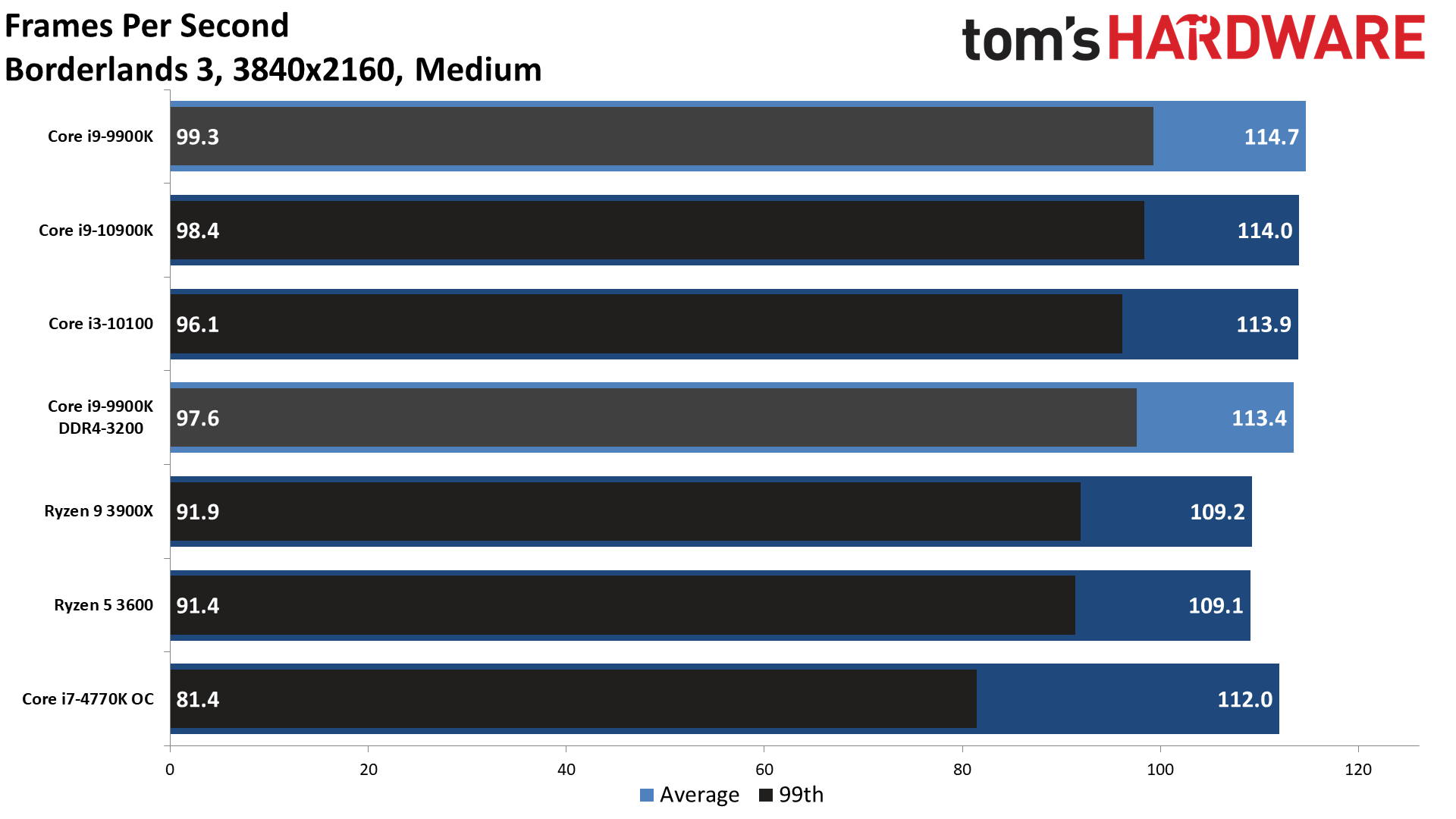

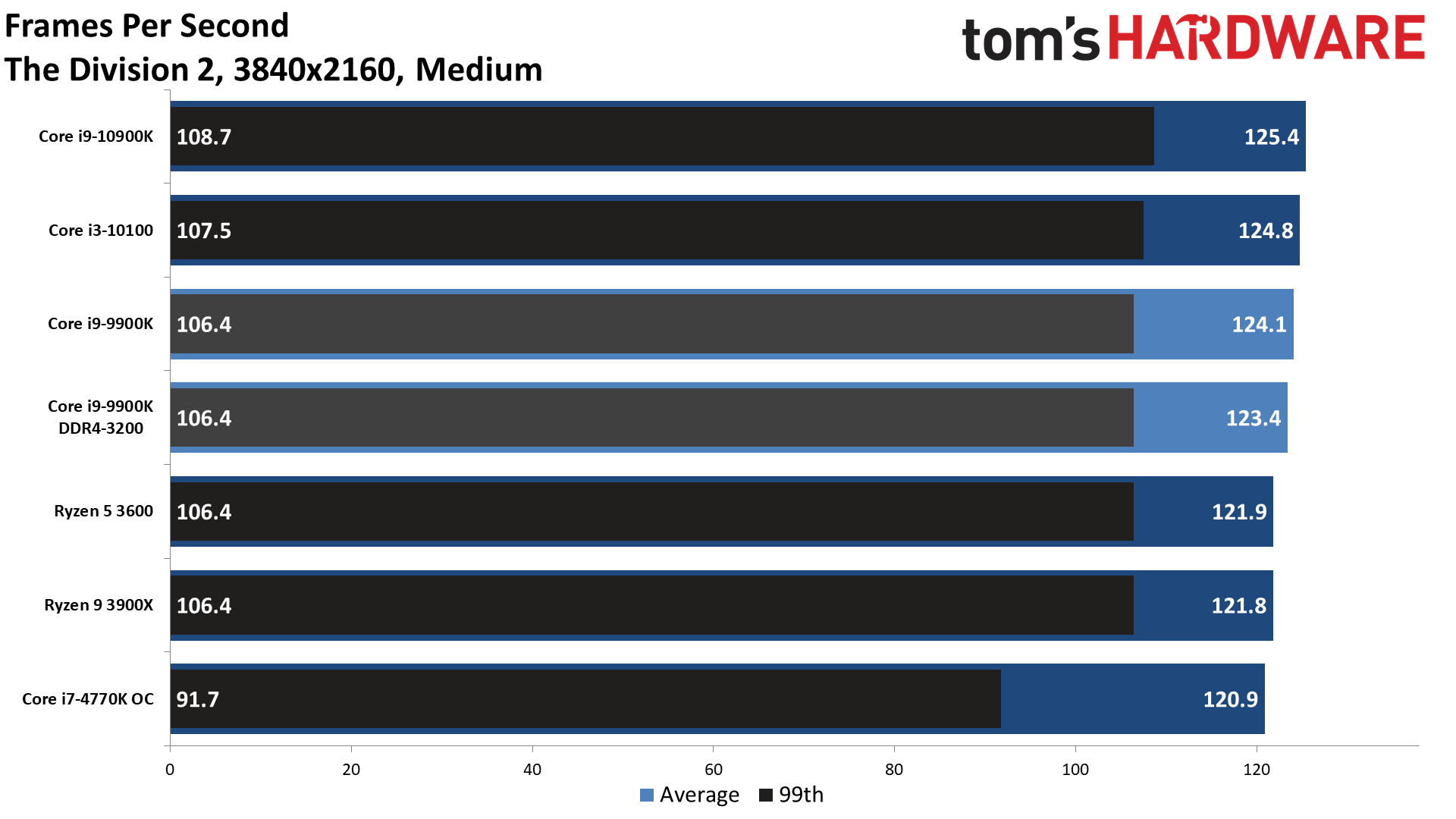

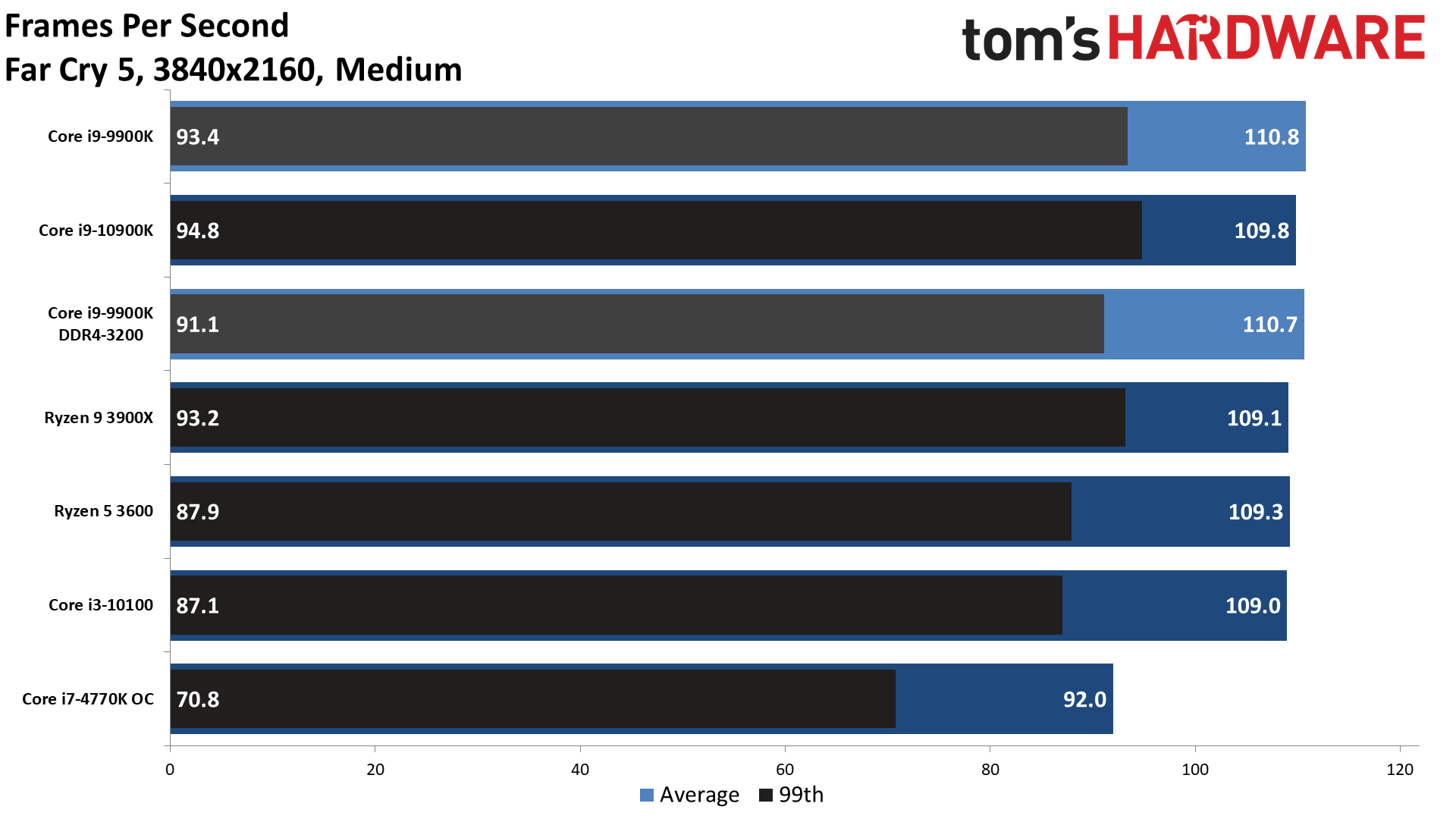

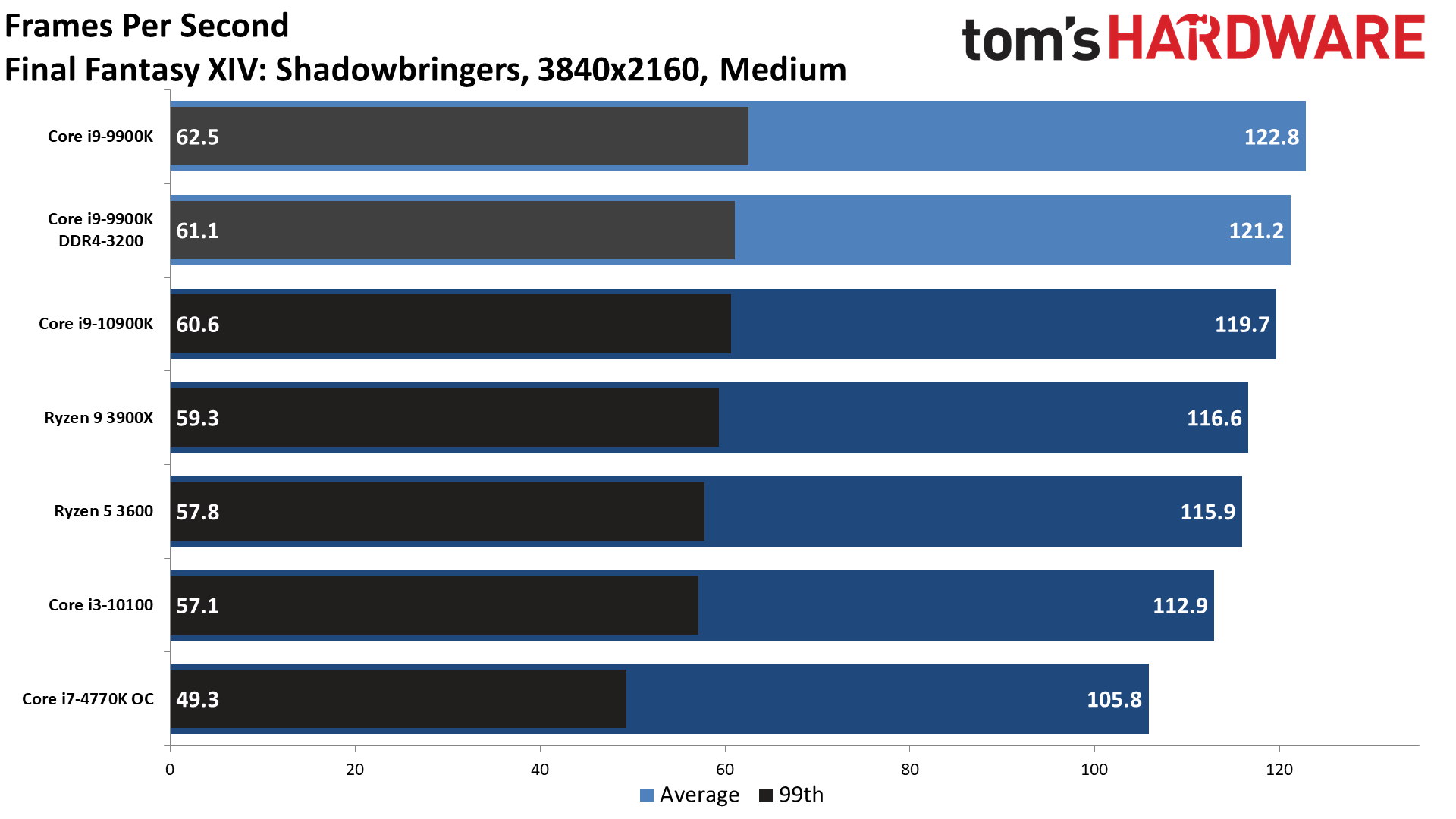

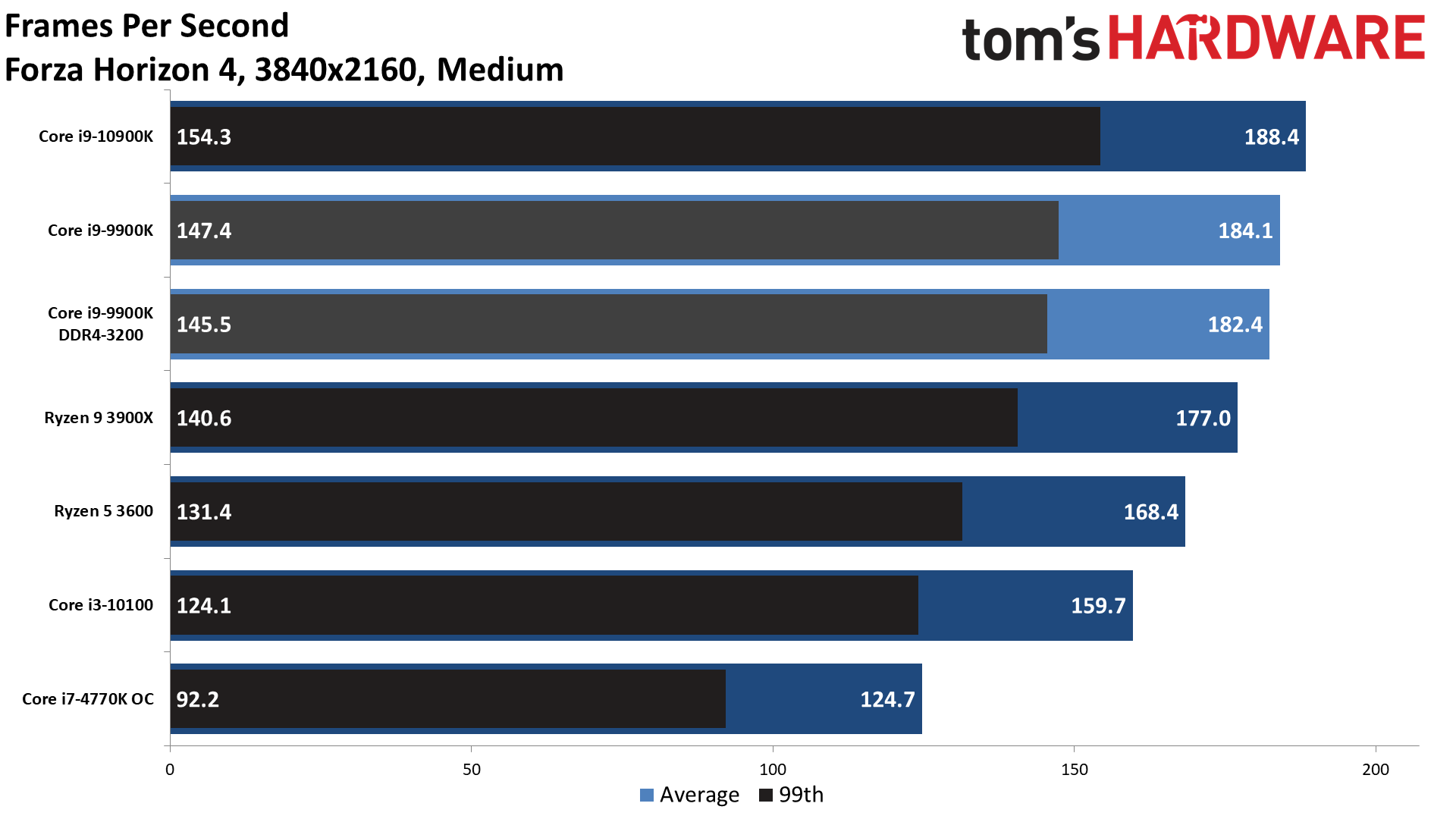

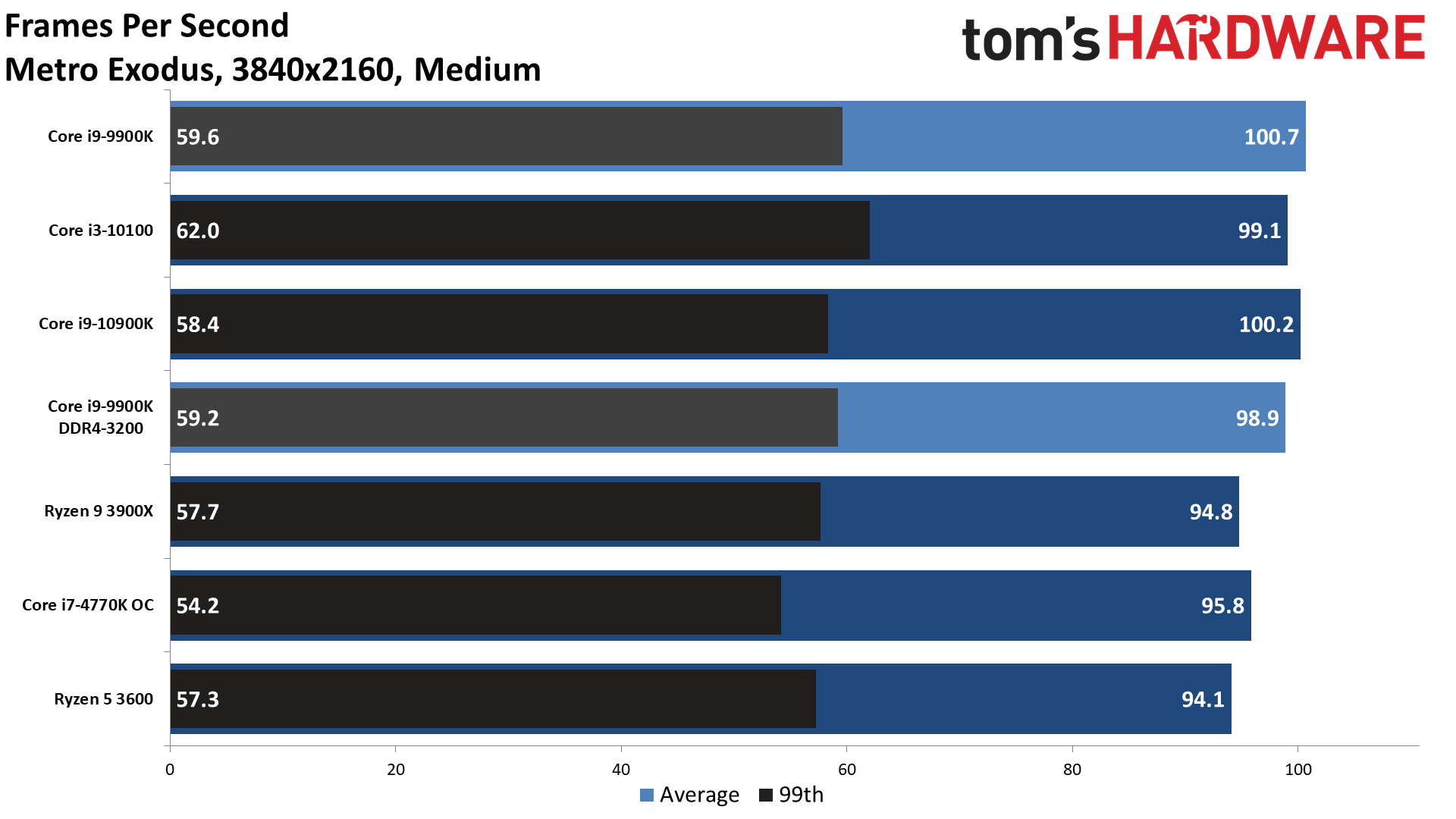

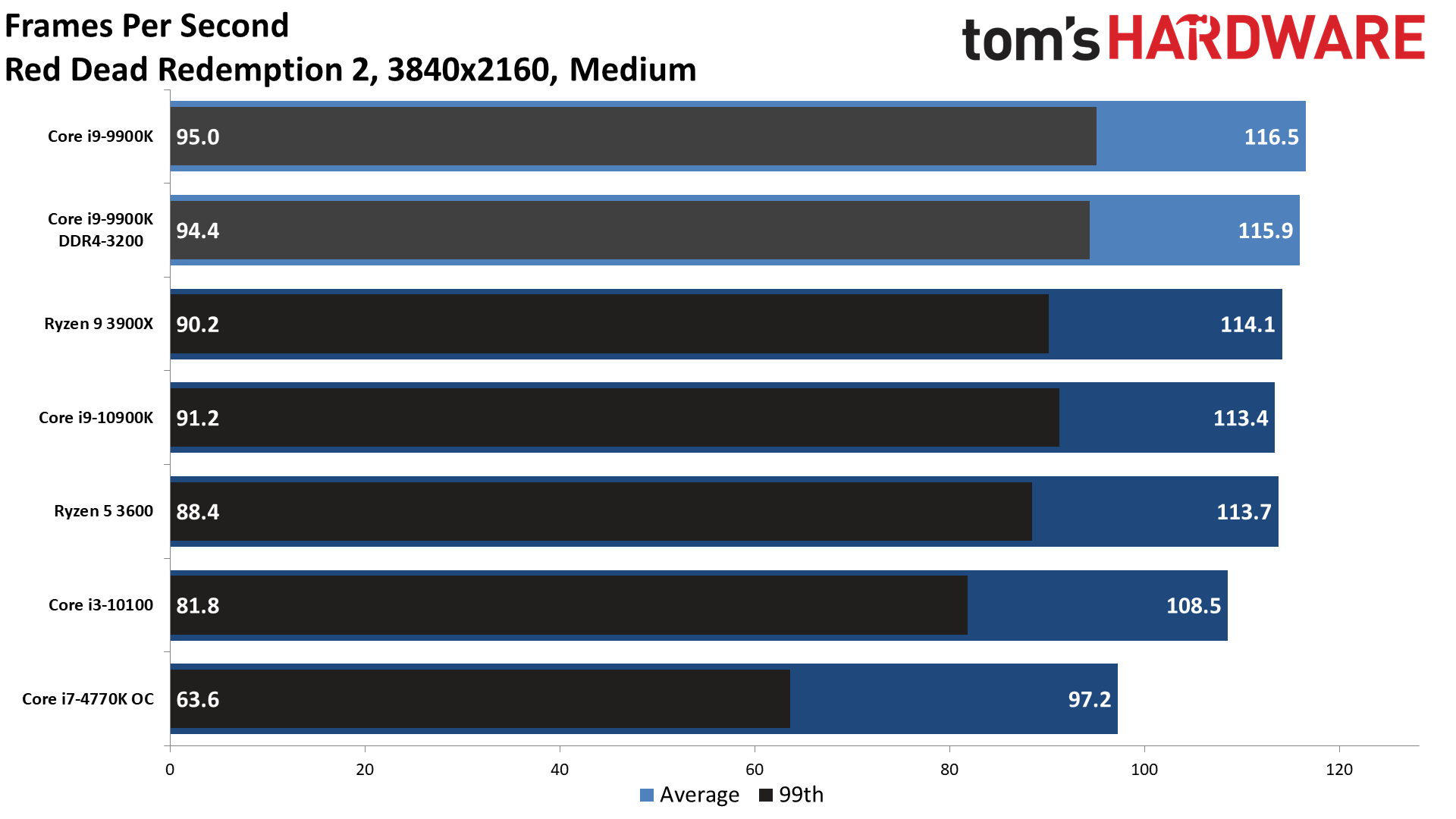

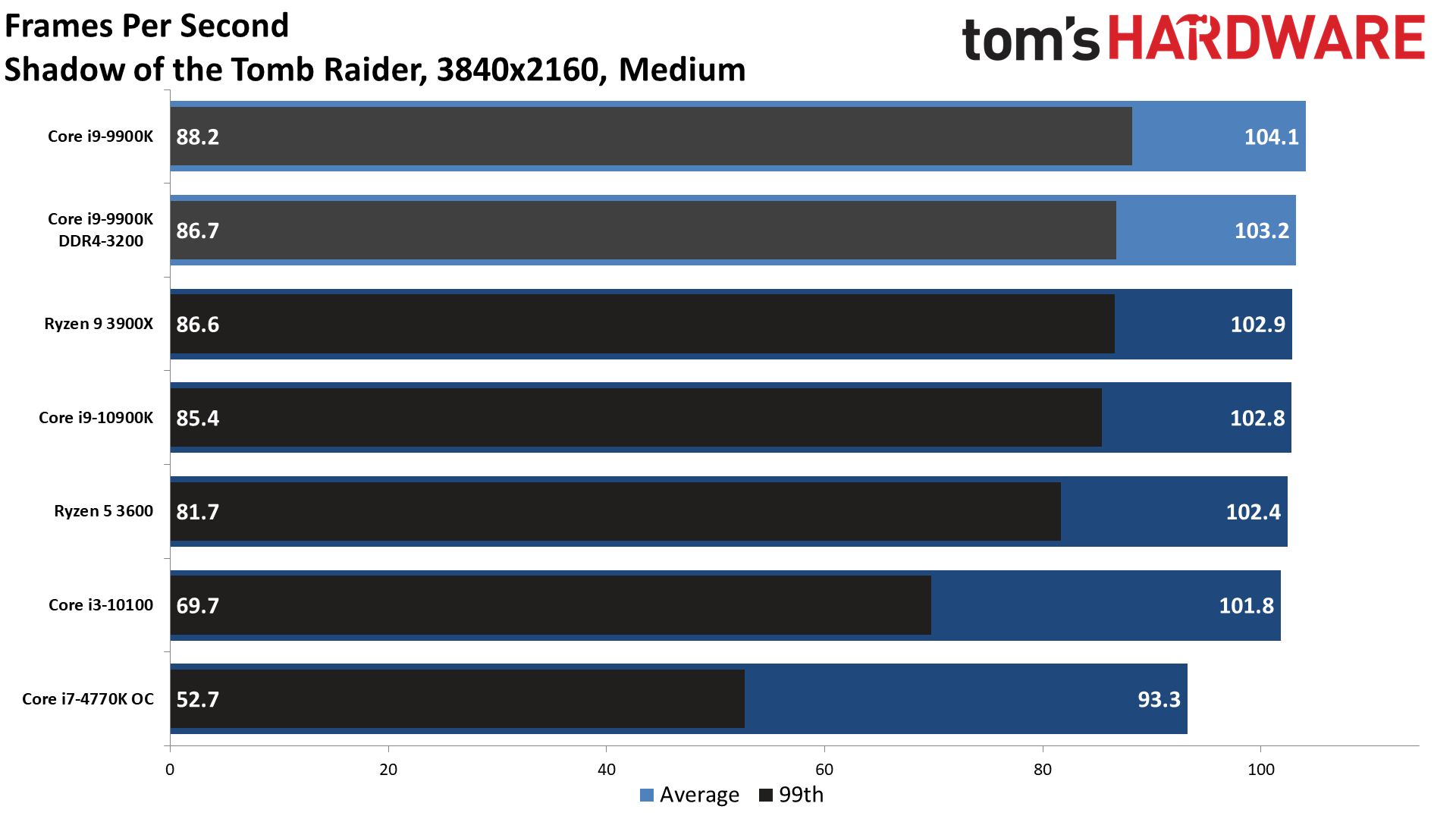

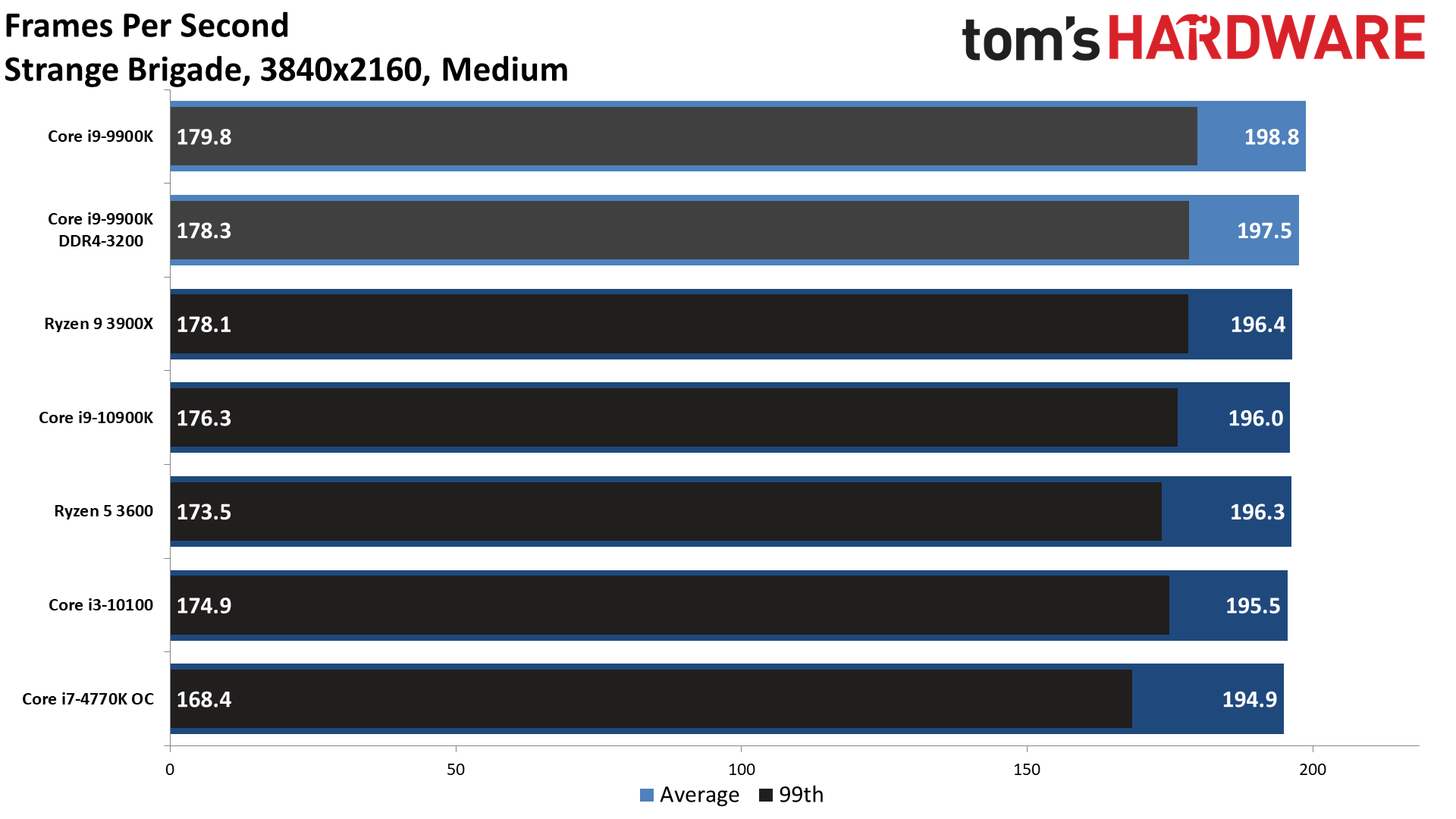

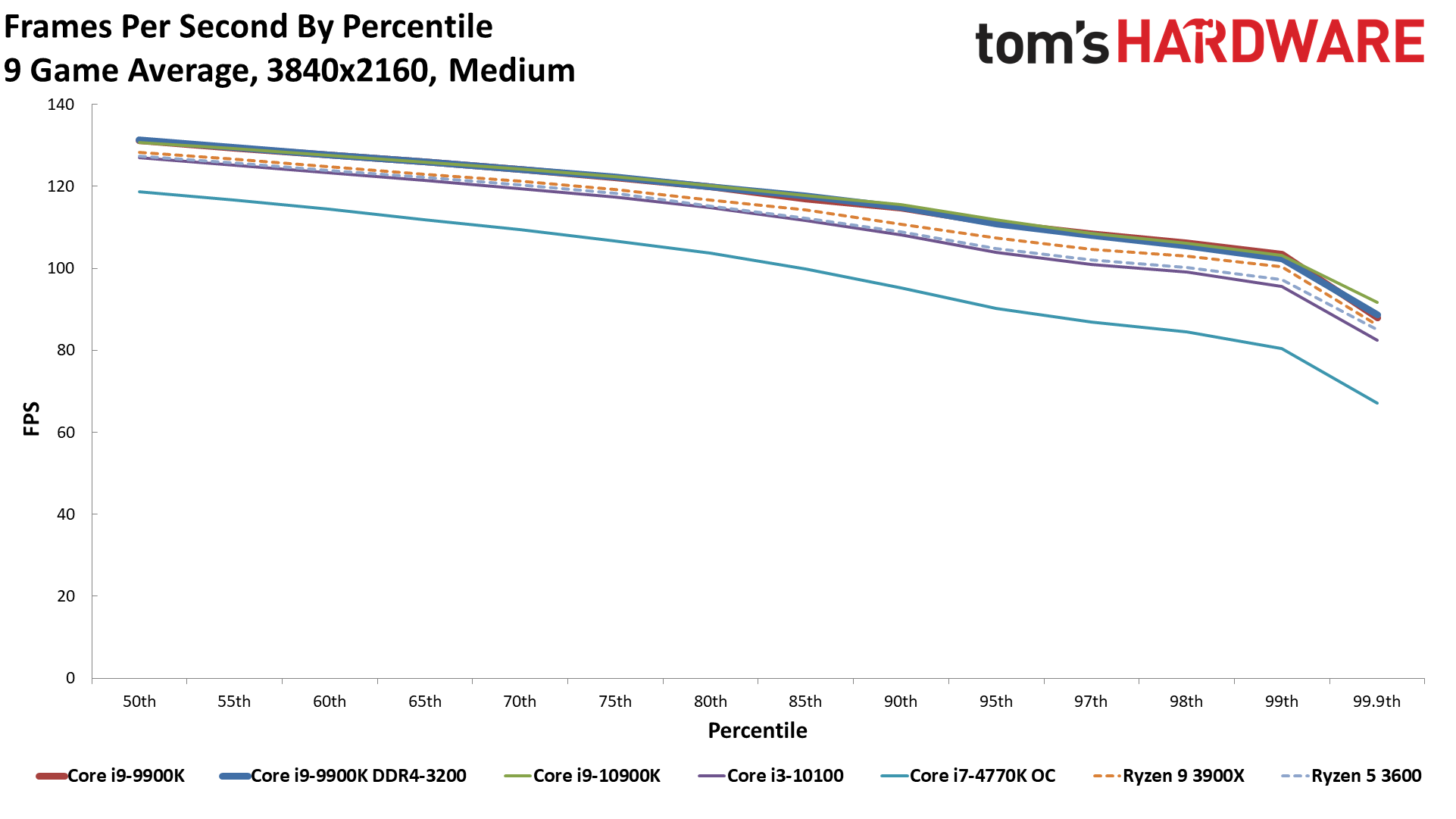

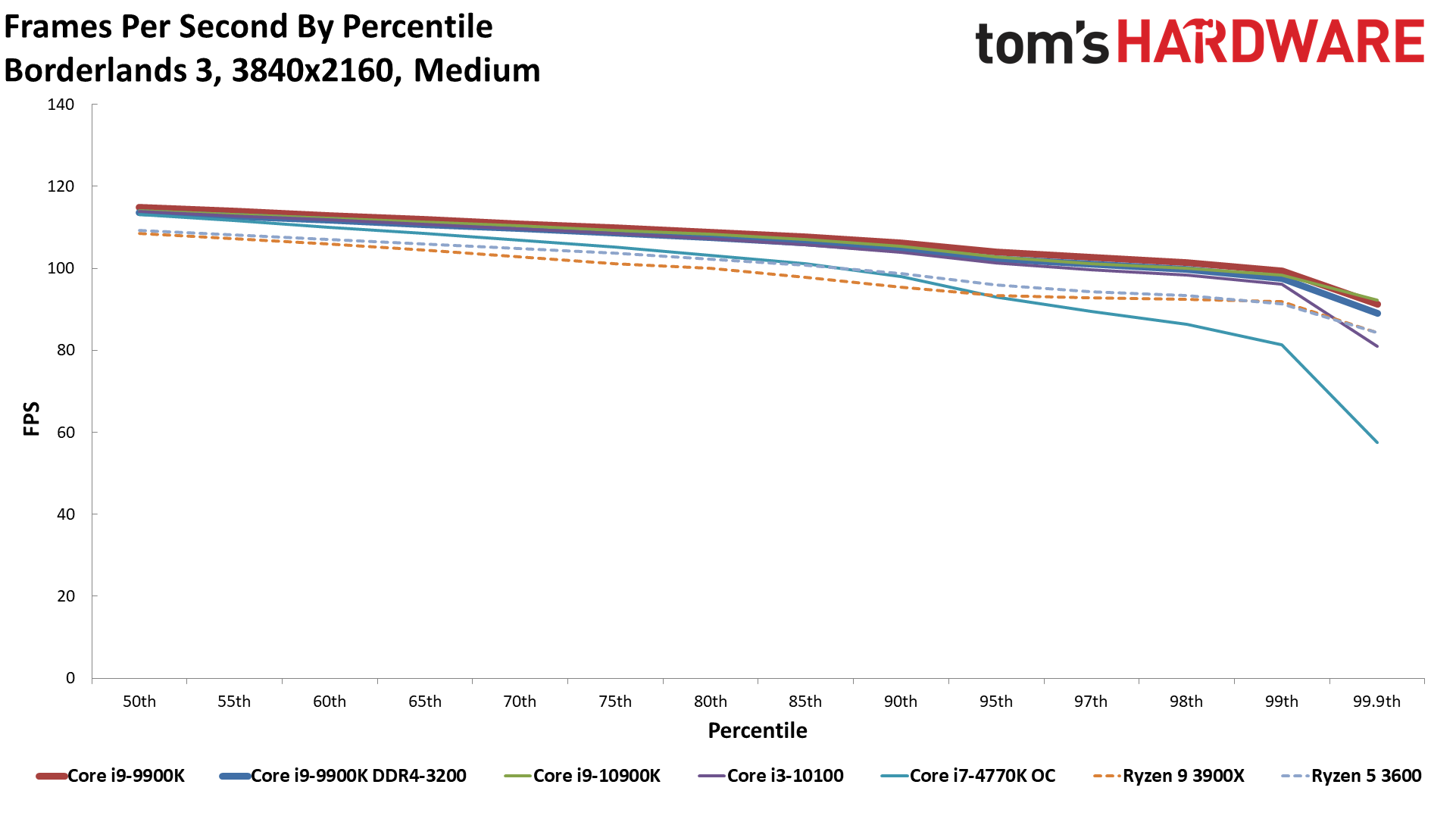

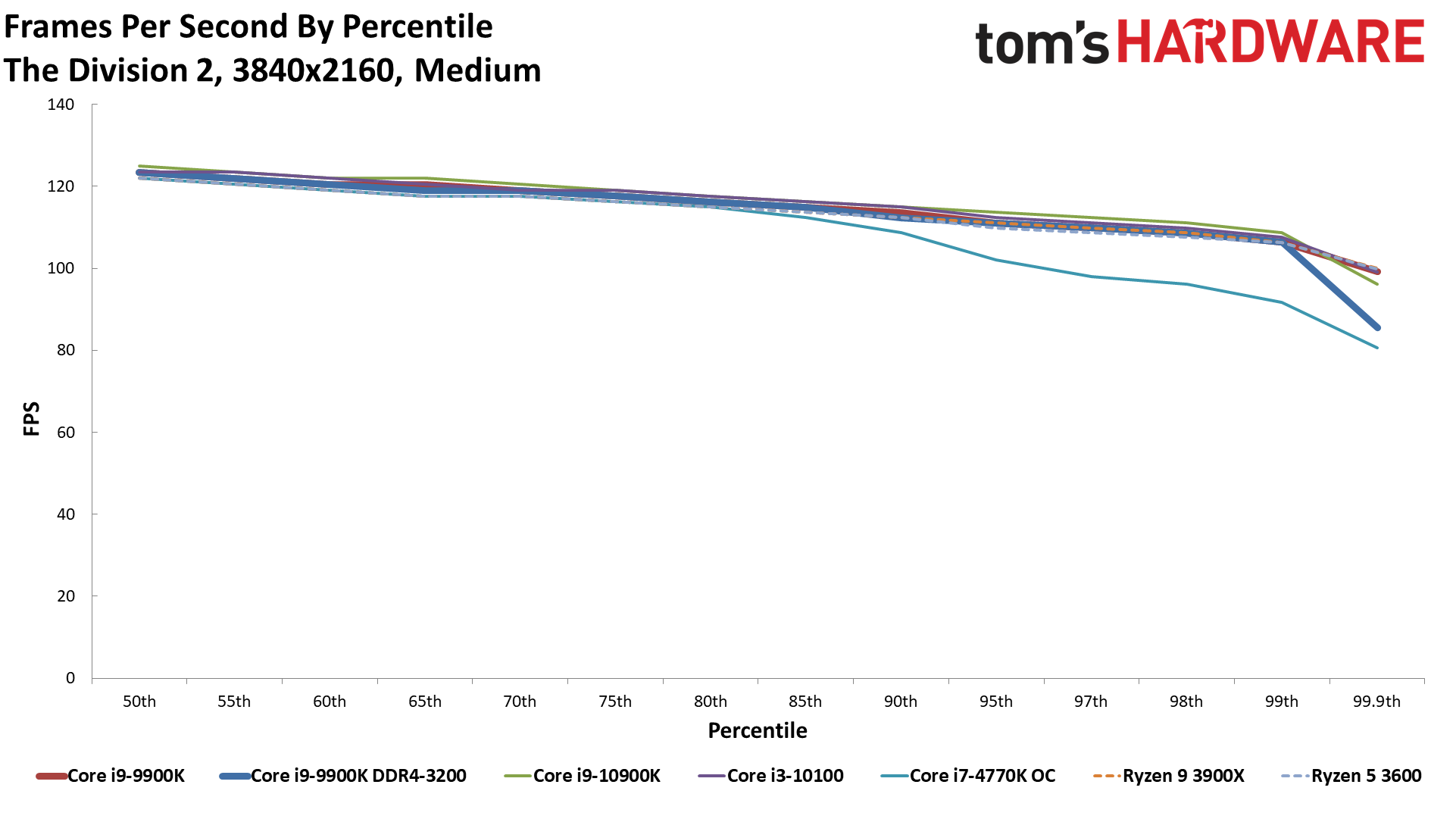

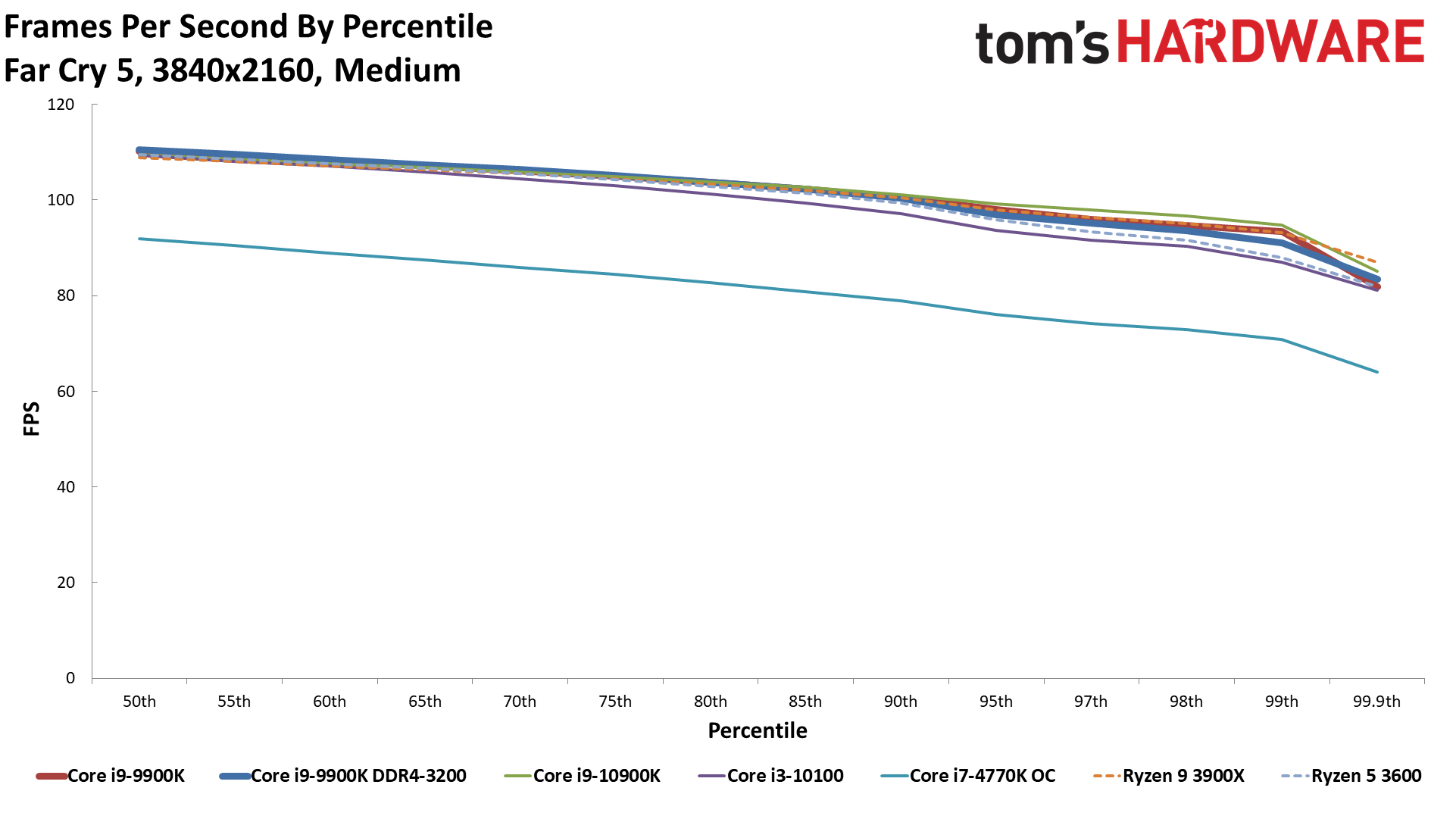

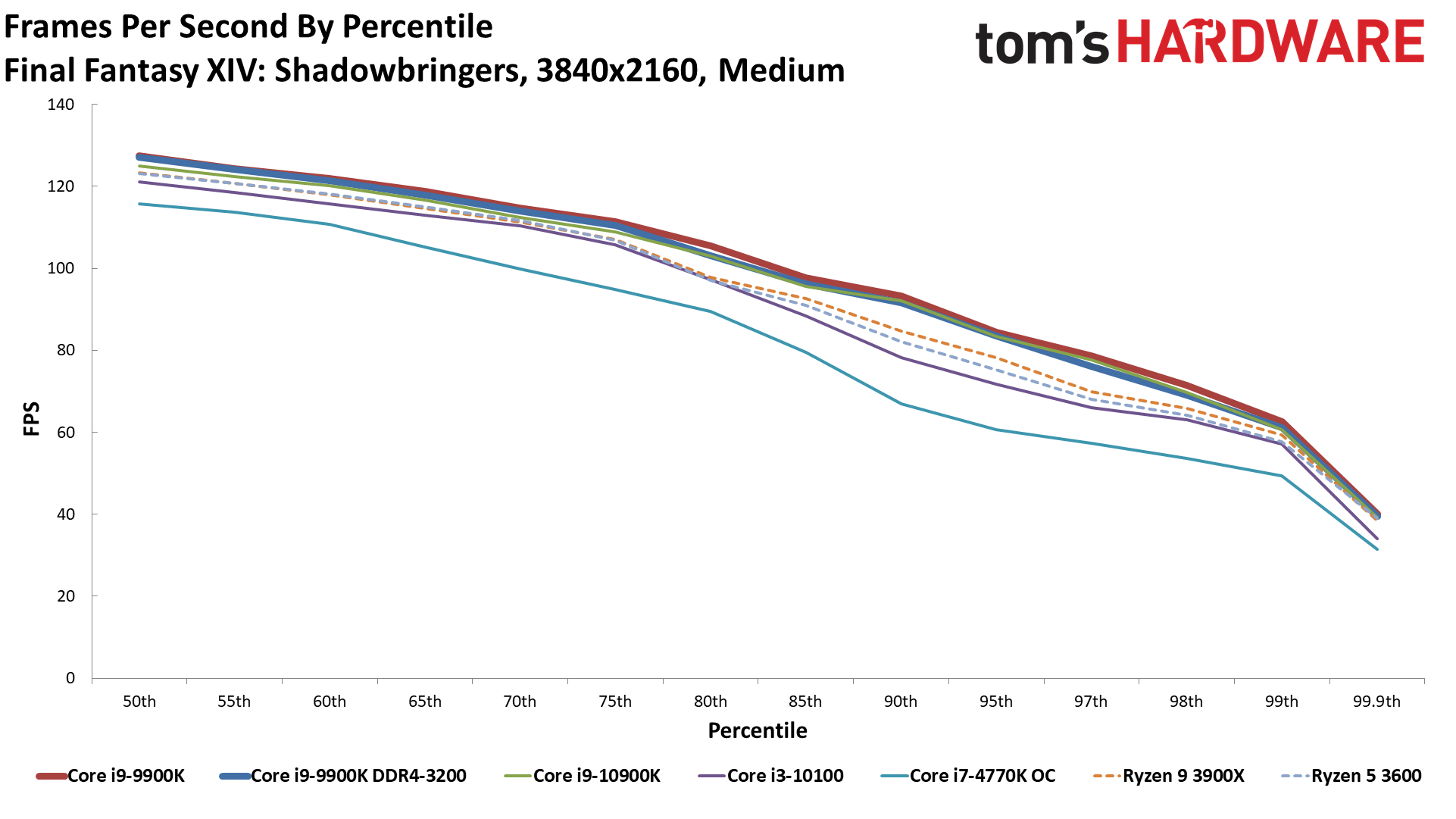

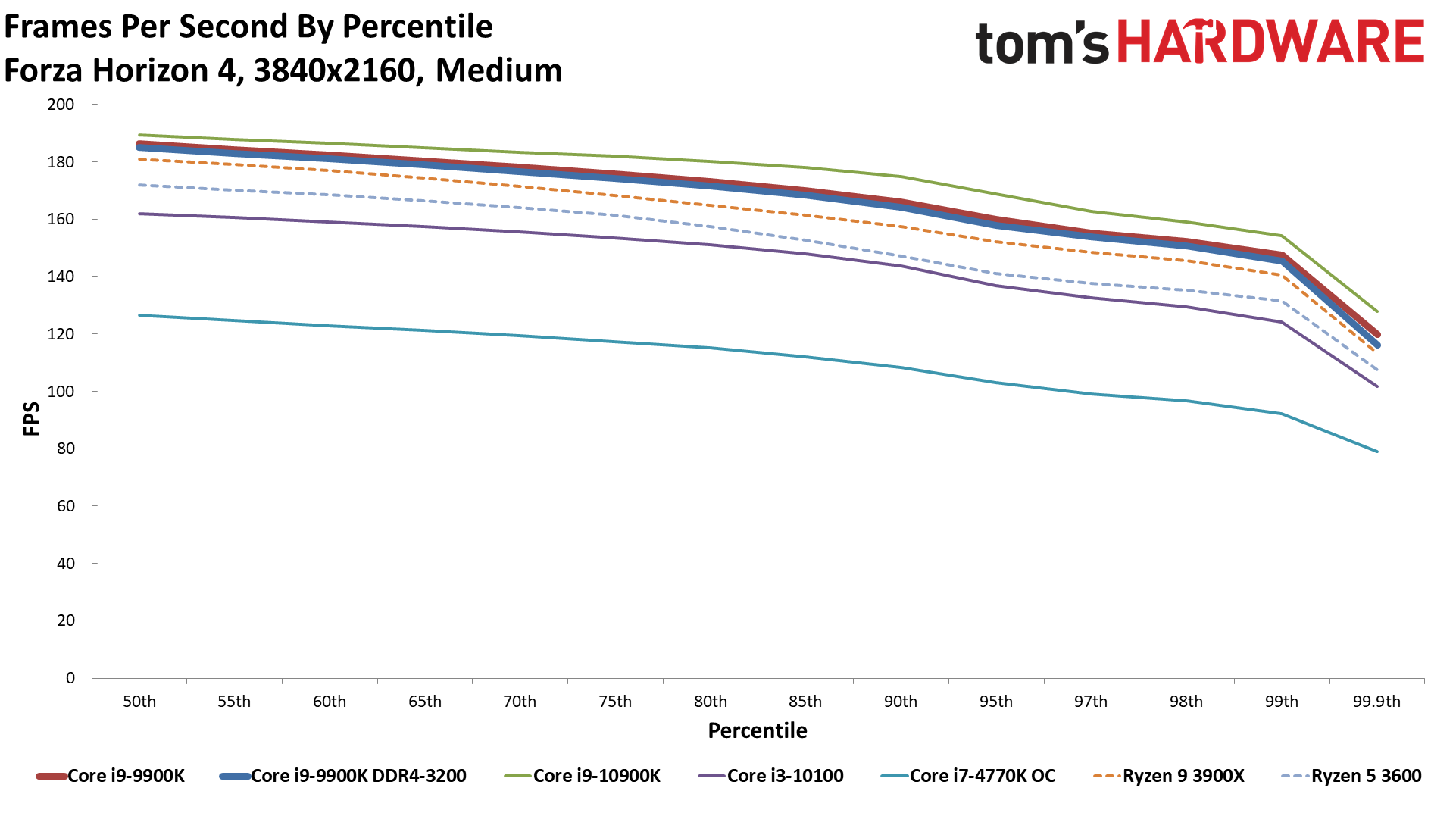

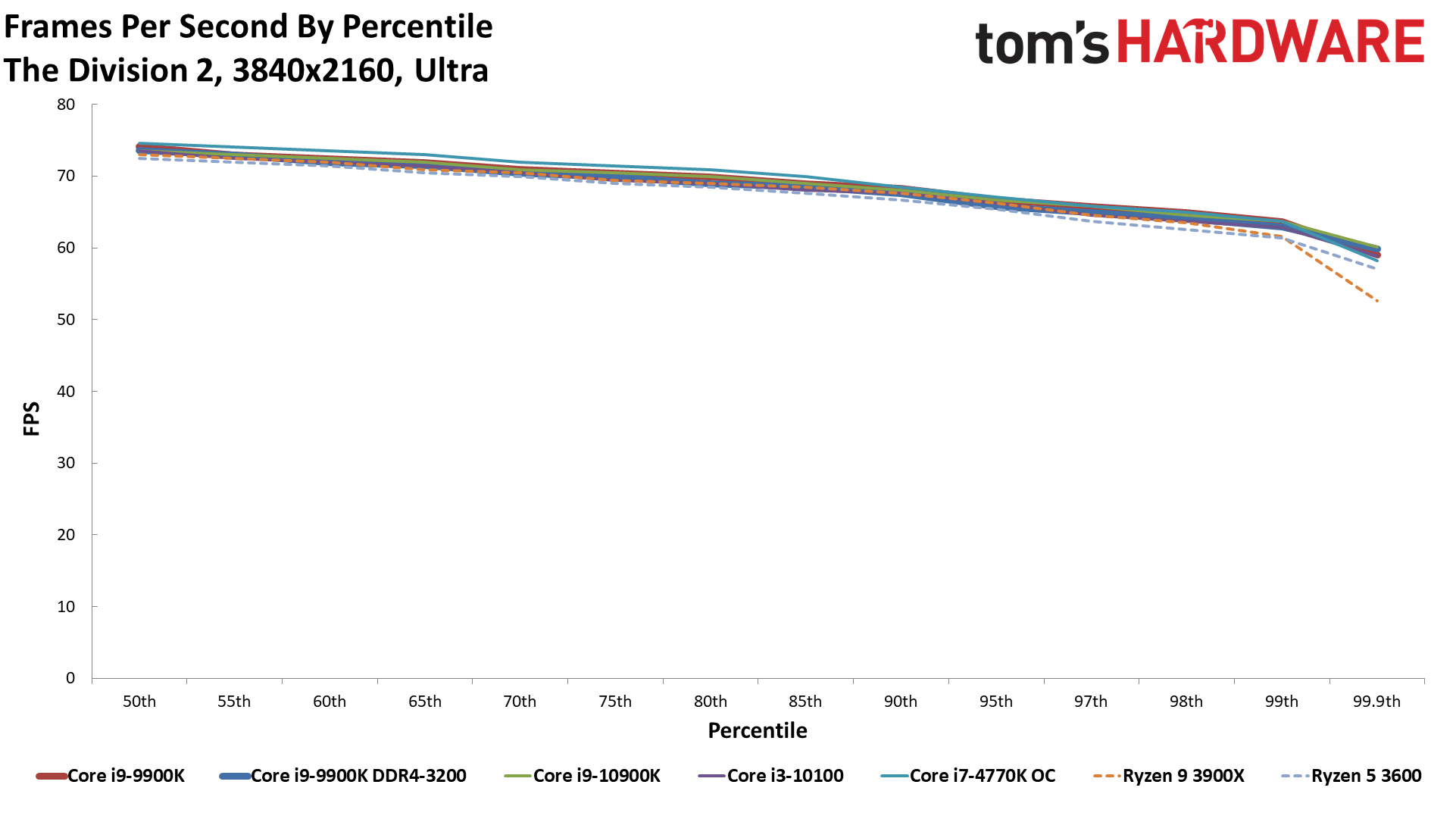

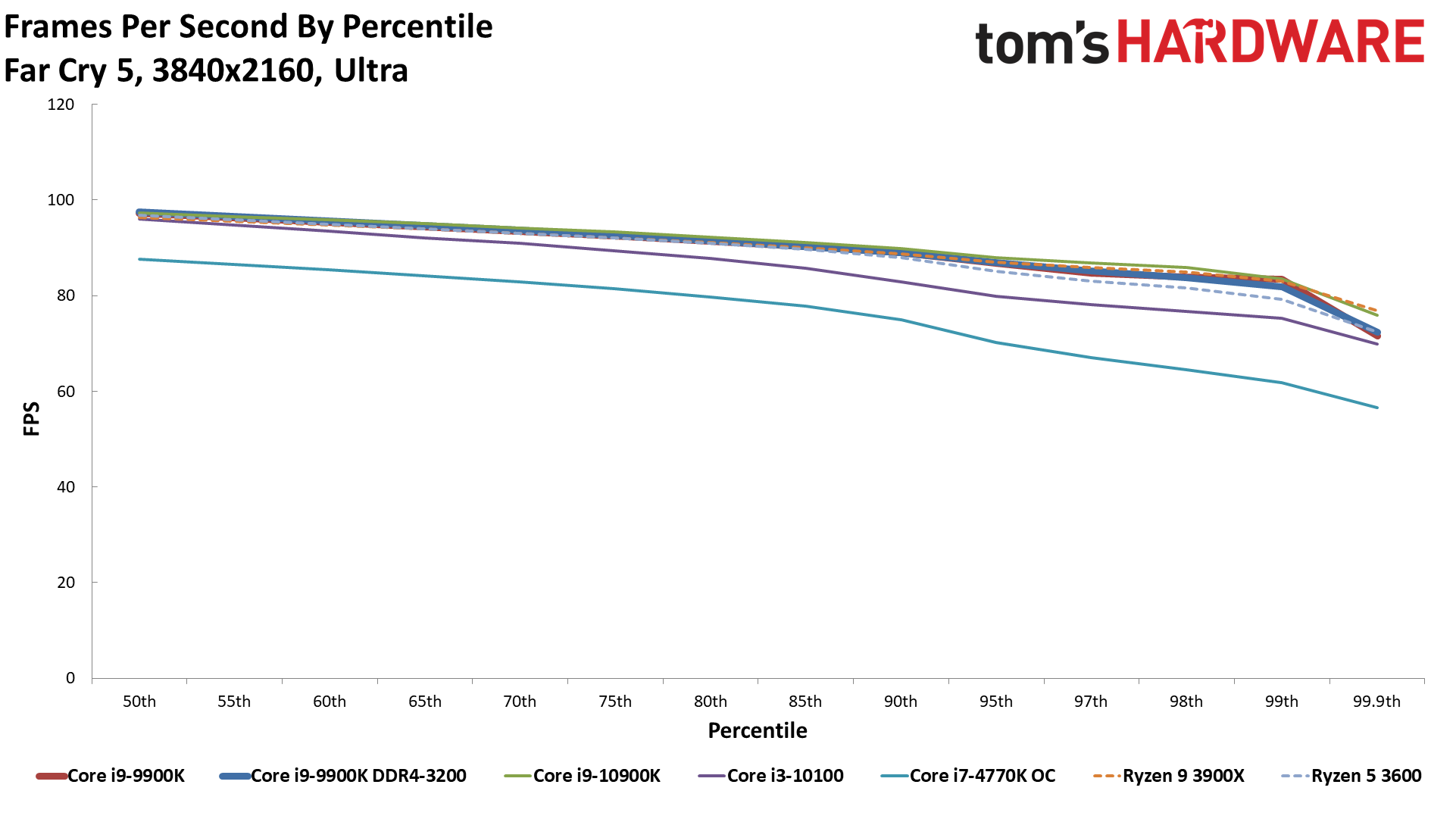

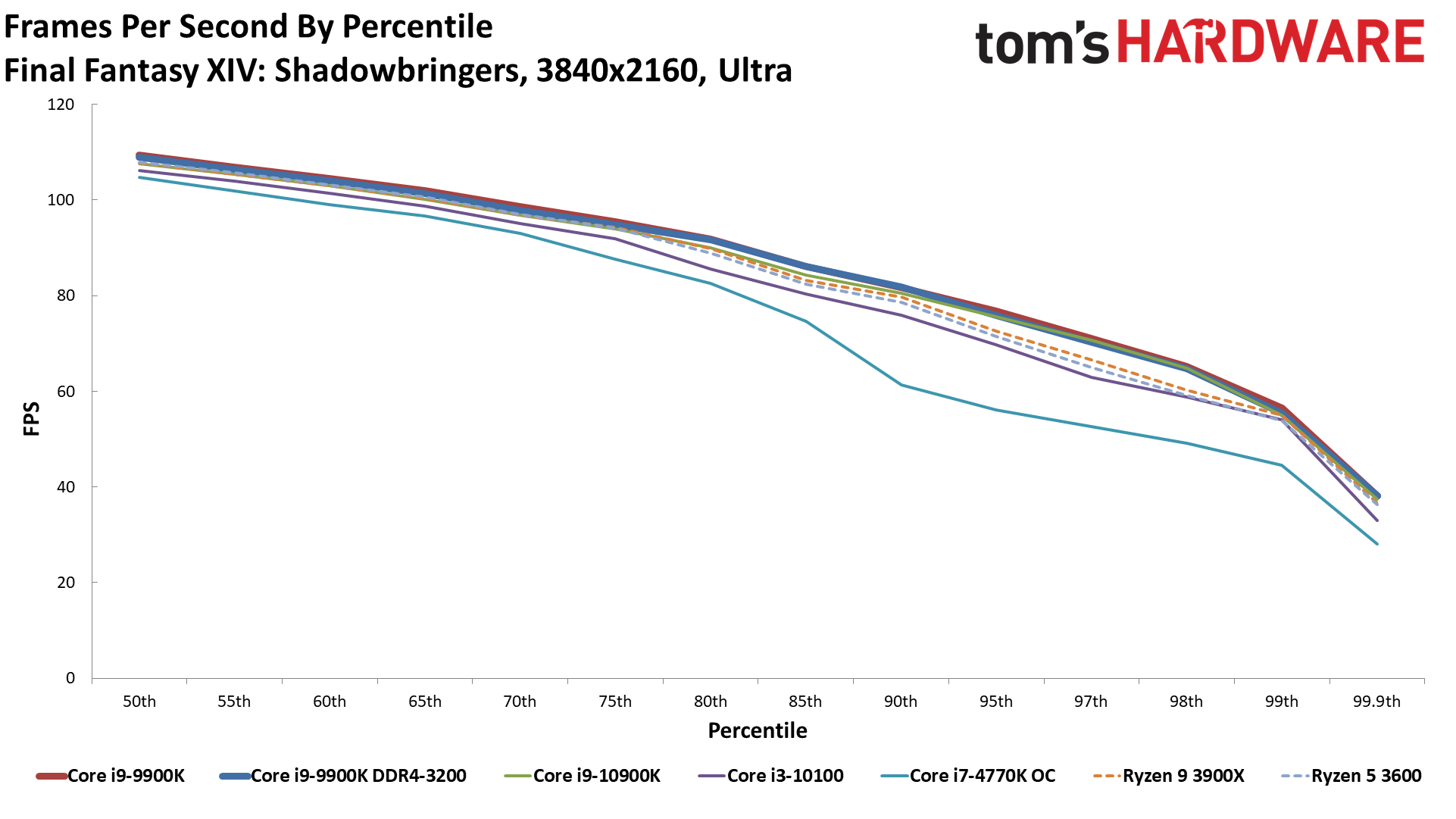

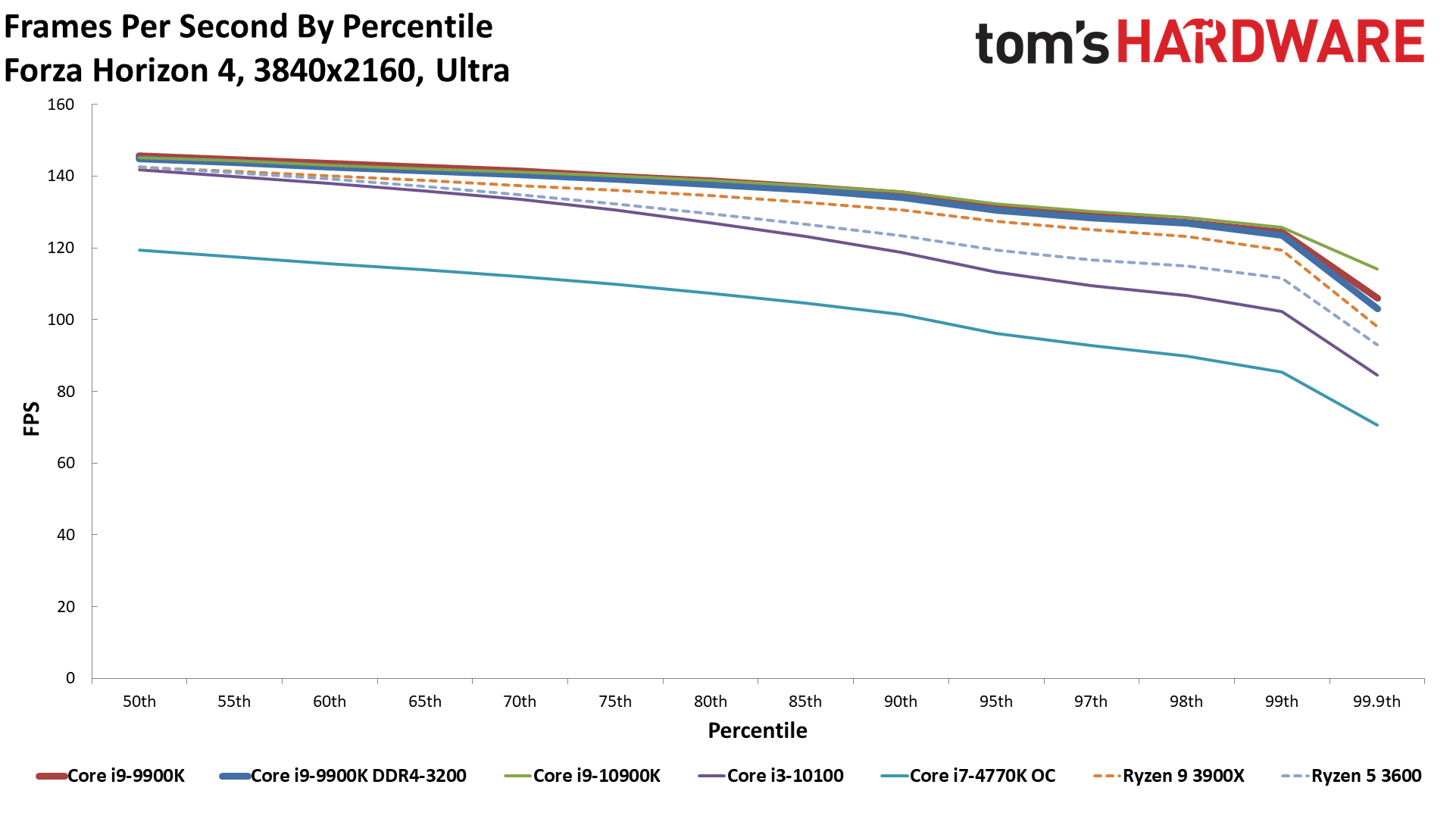

GeForce RTX 3080 FE: 4K CPU Benchmarks

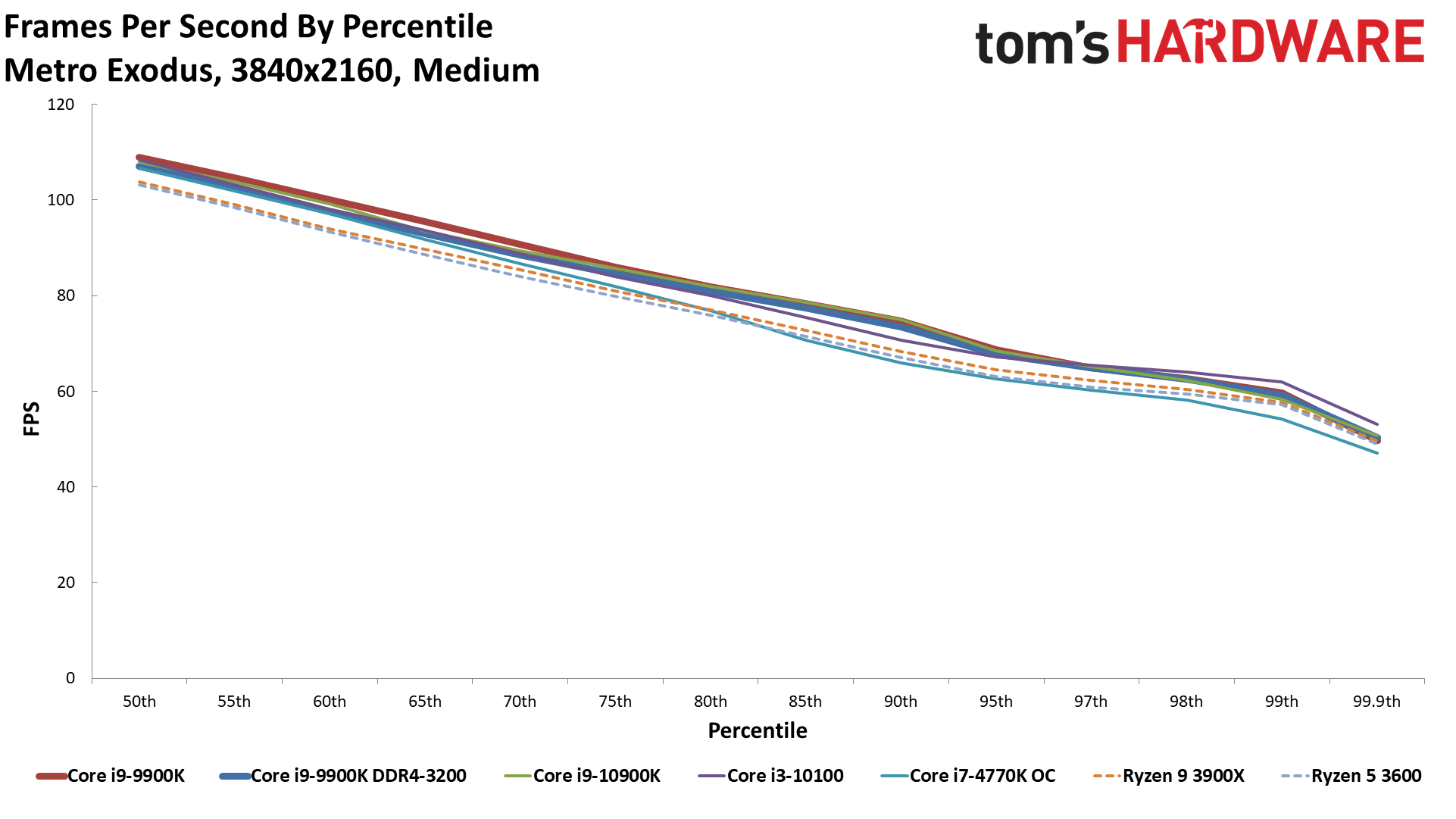

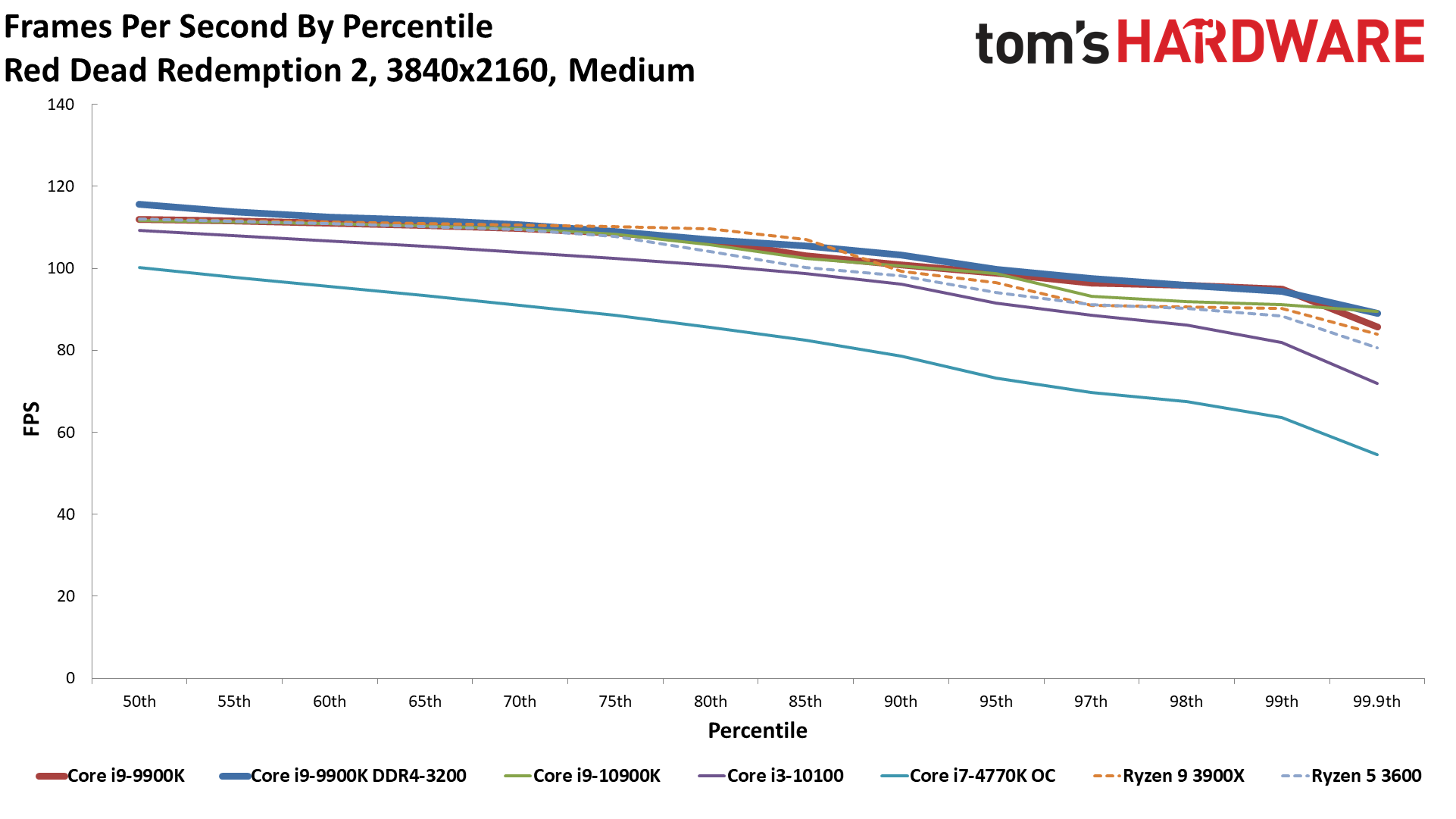

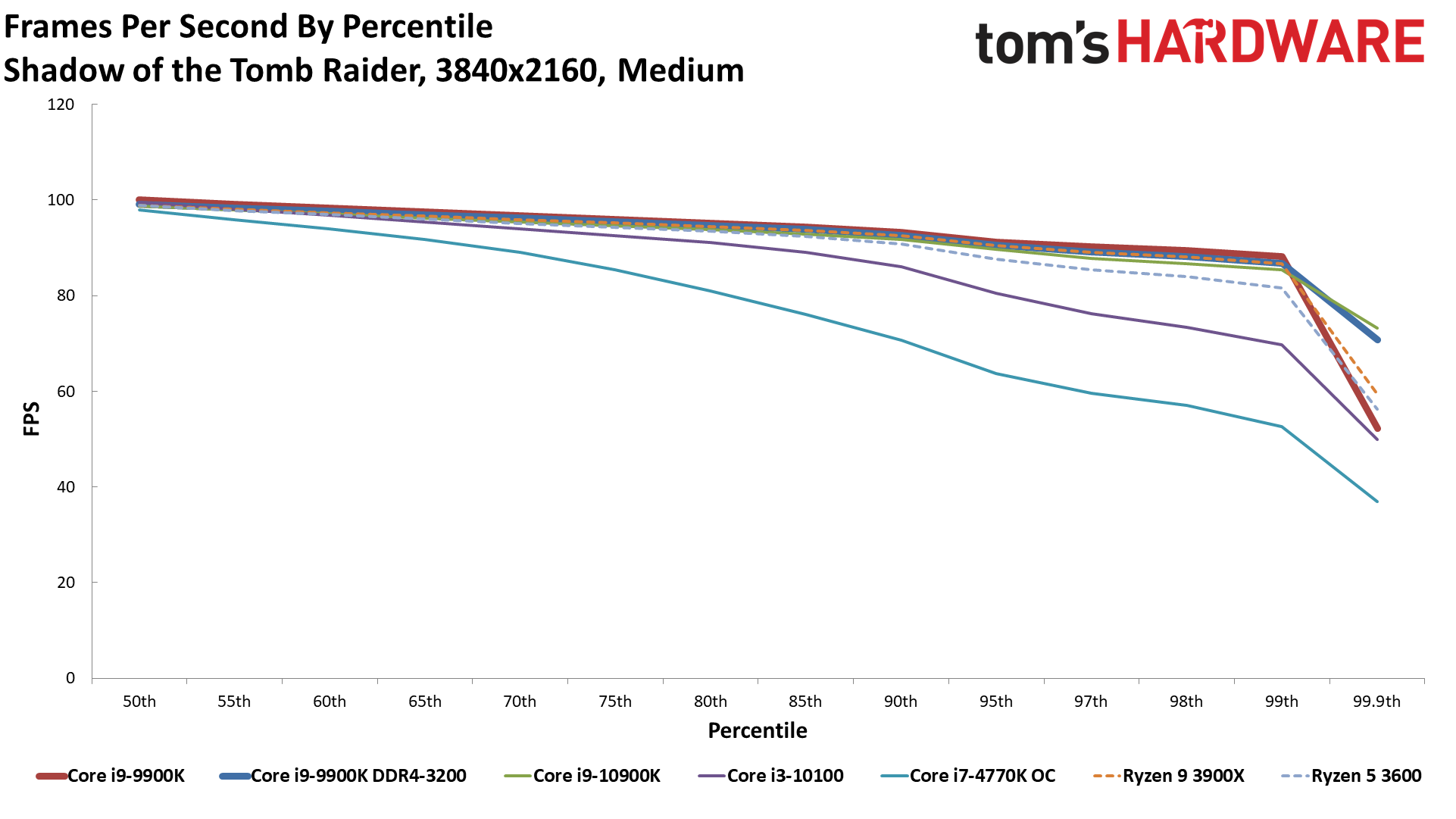

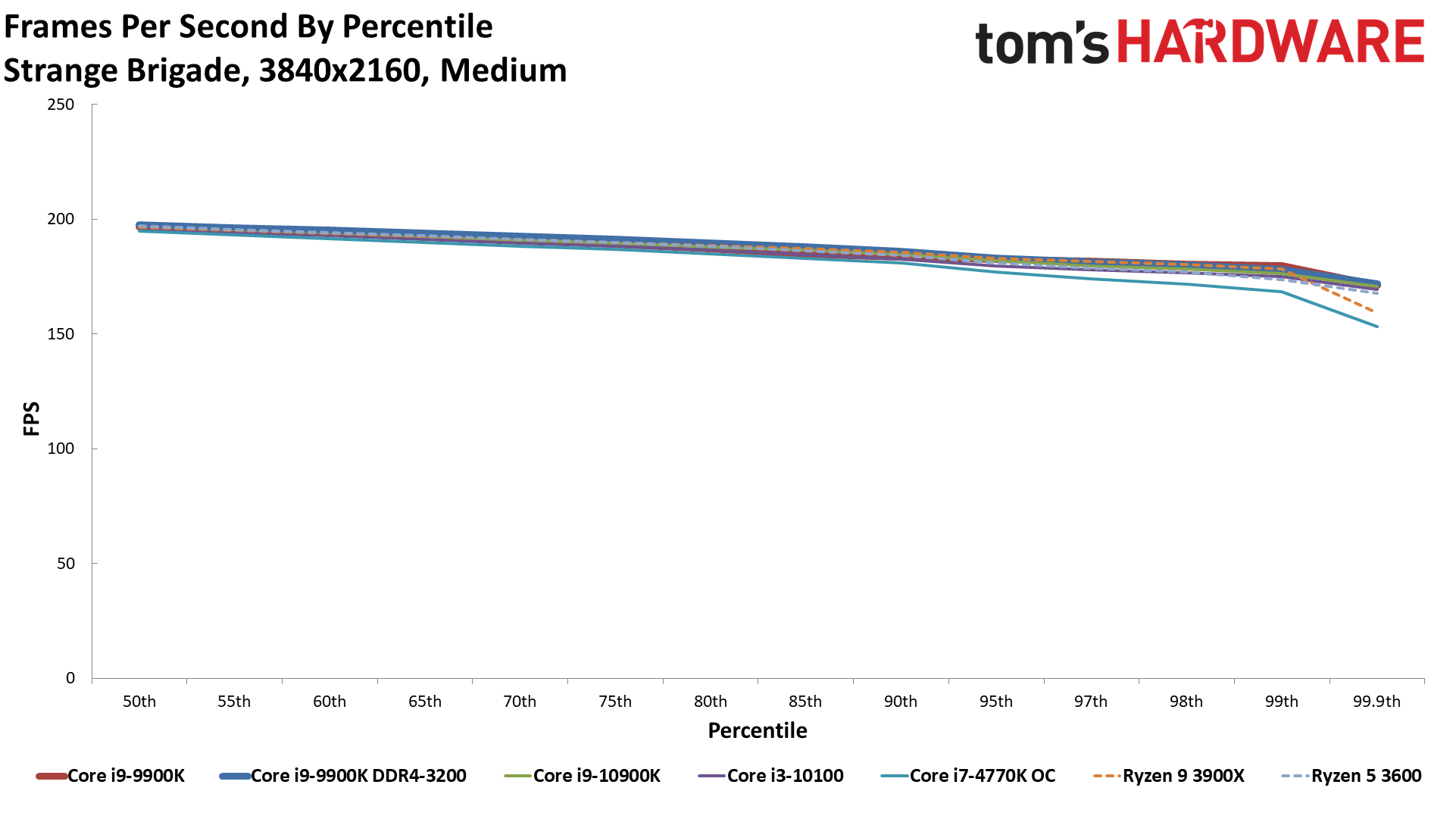

4K Medium

4K Medium Percentiles

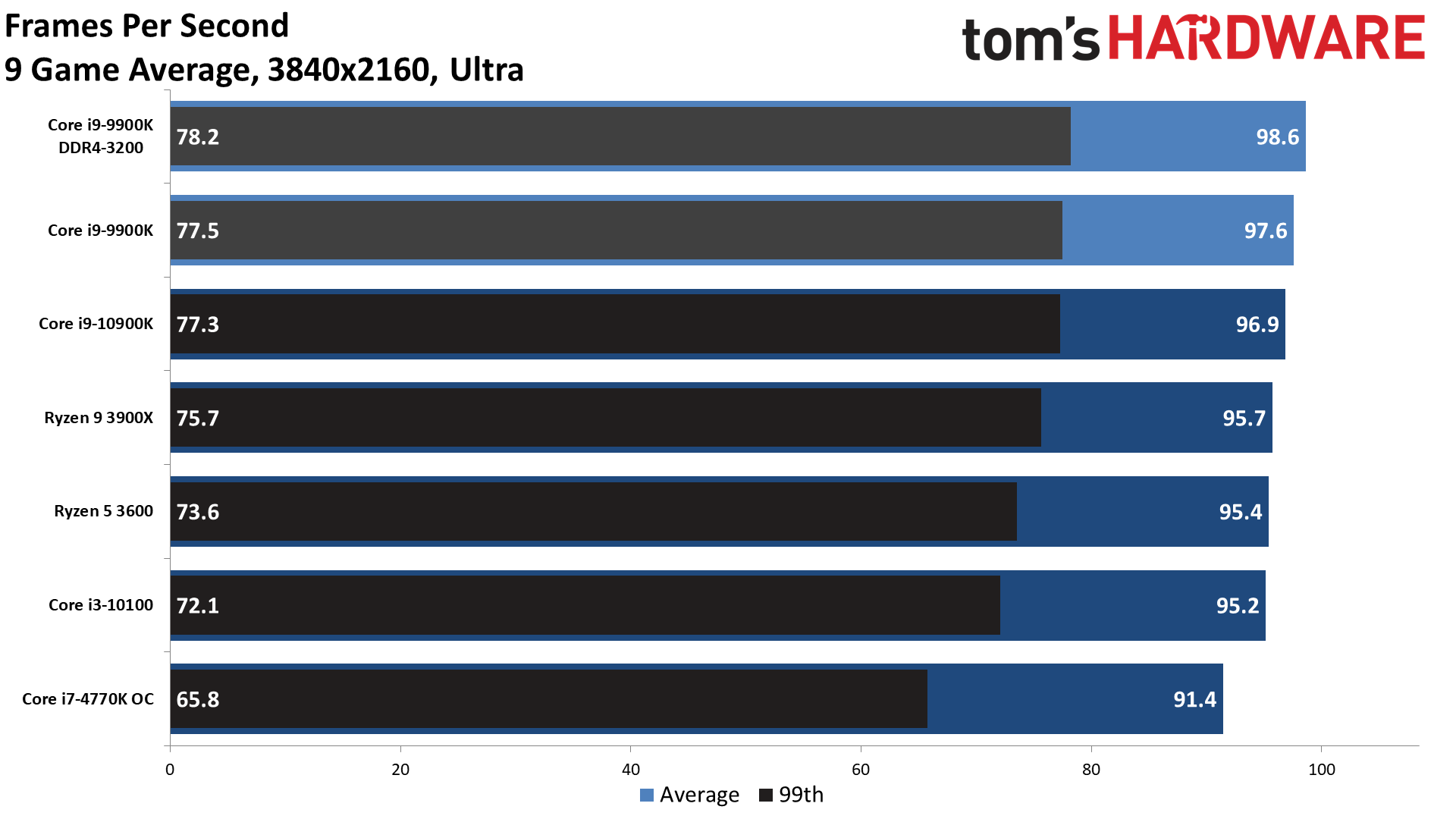

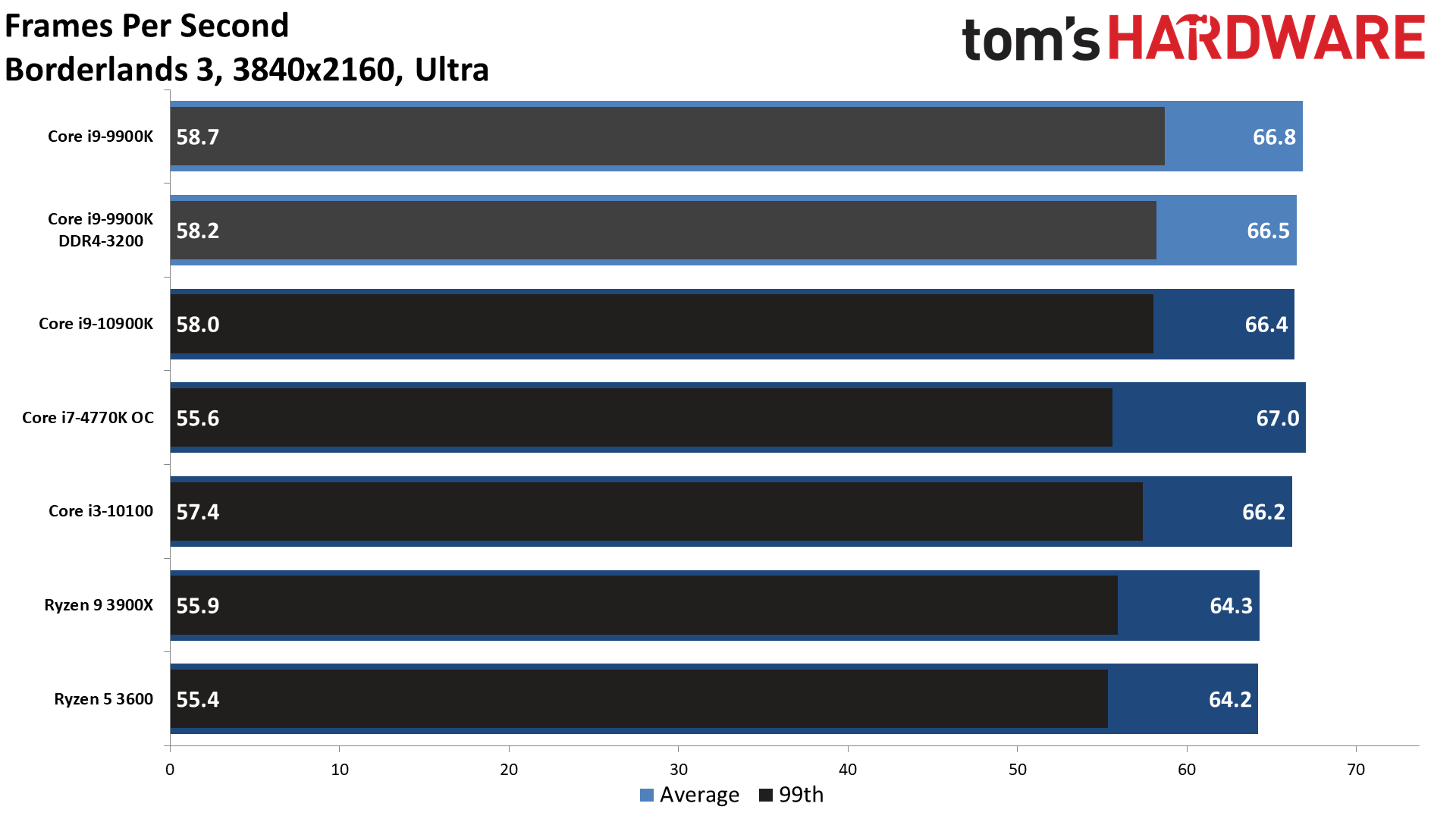

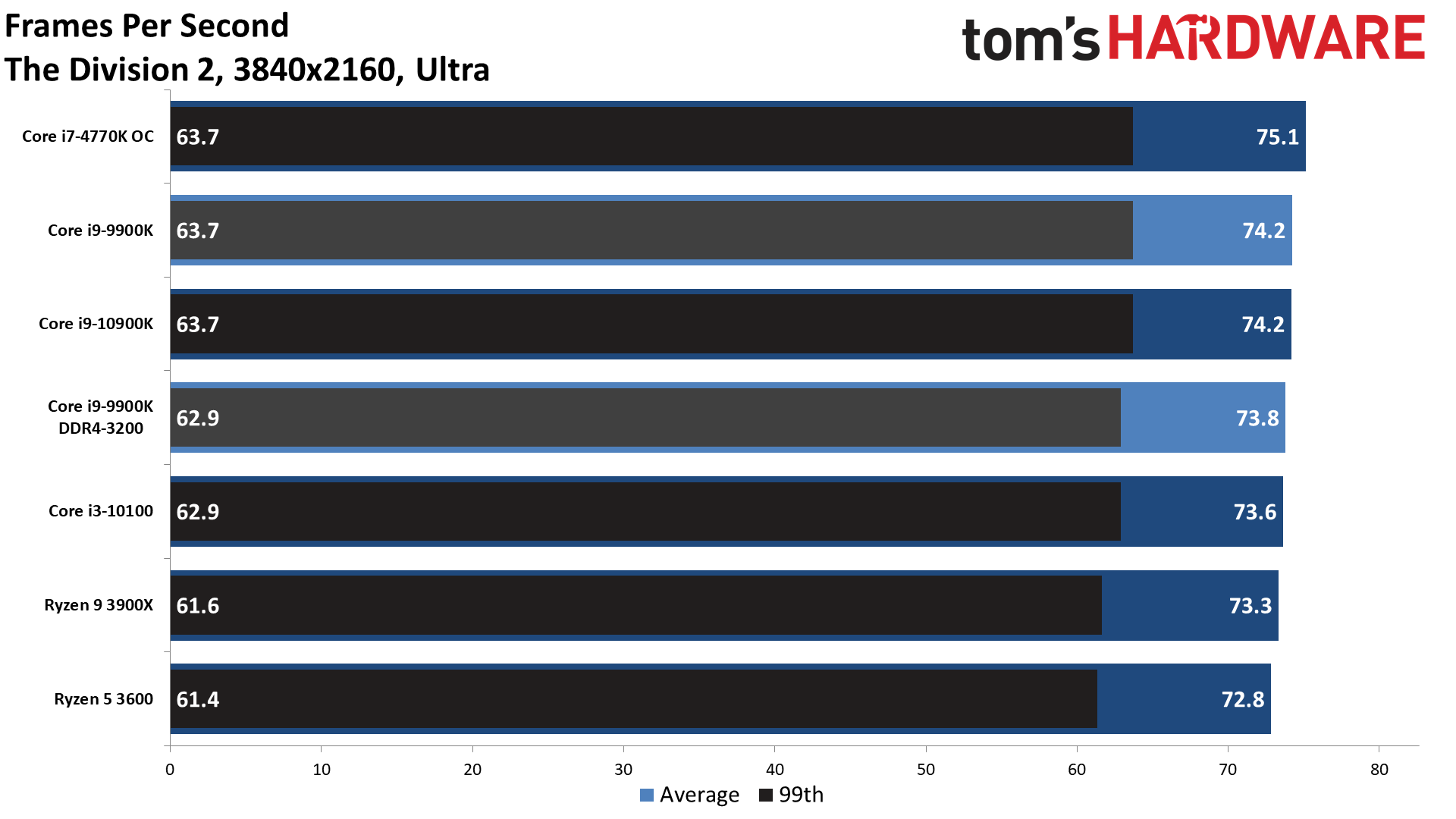

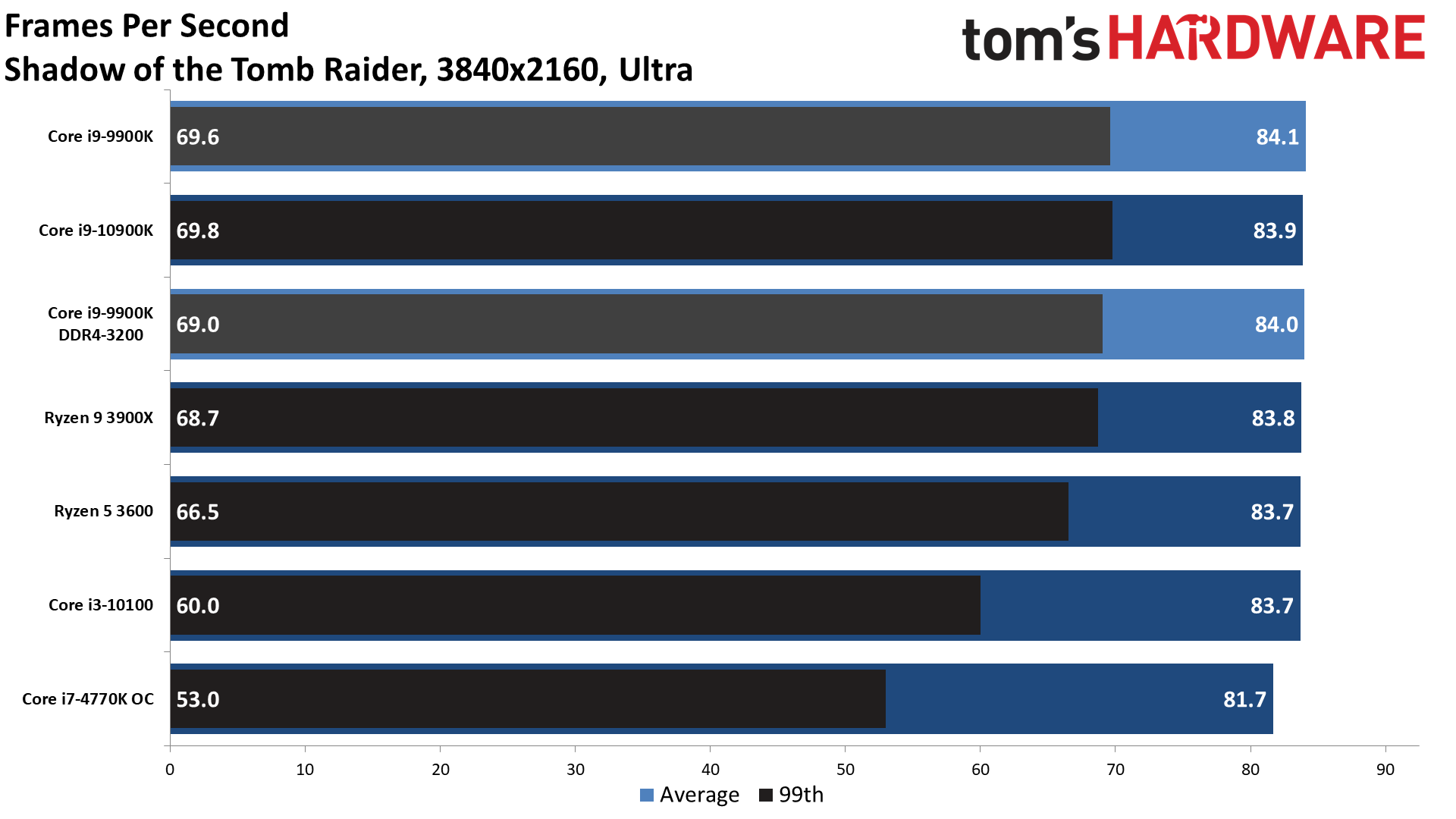

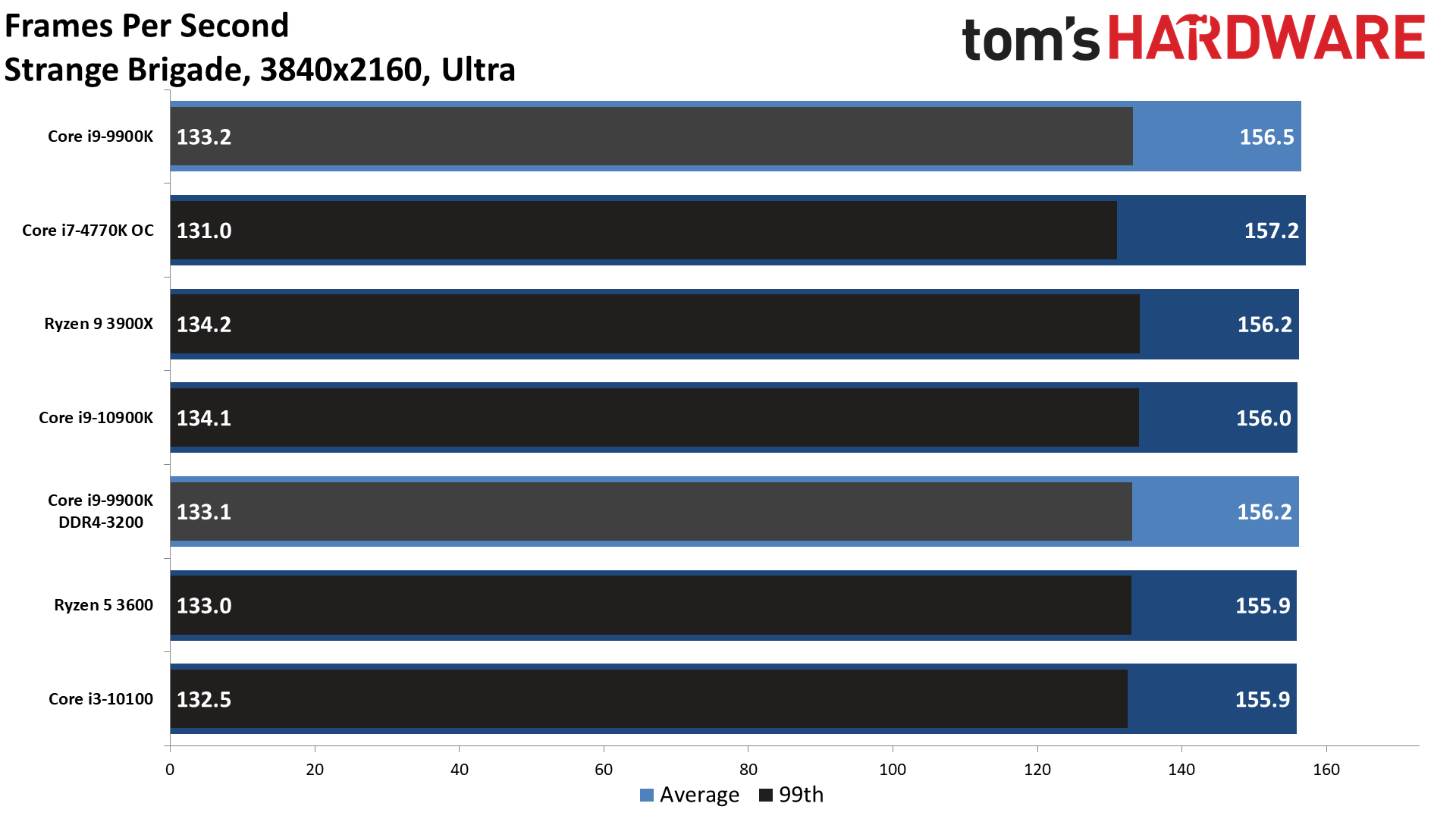

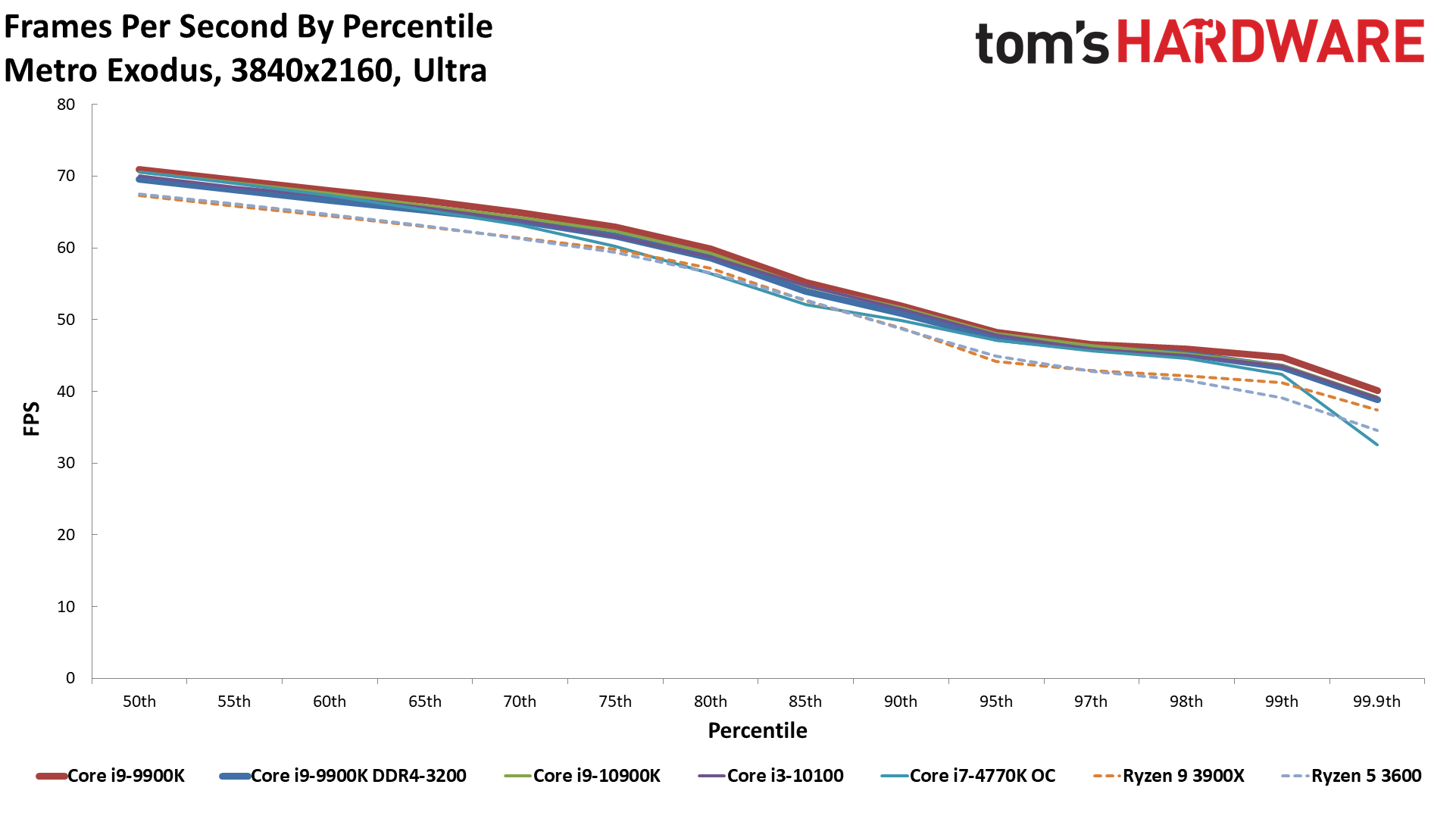

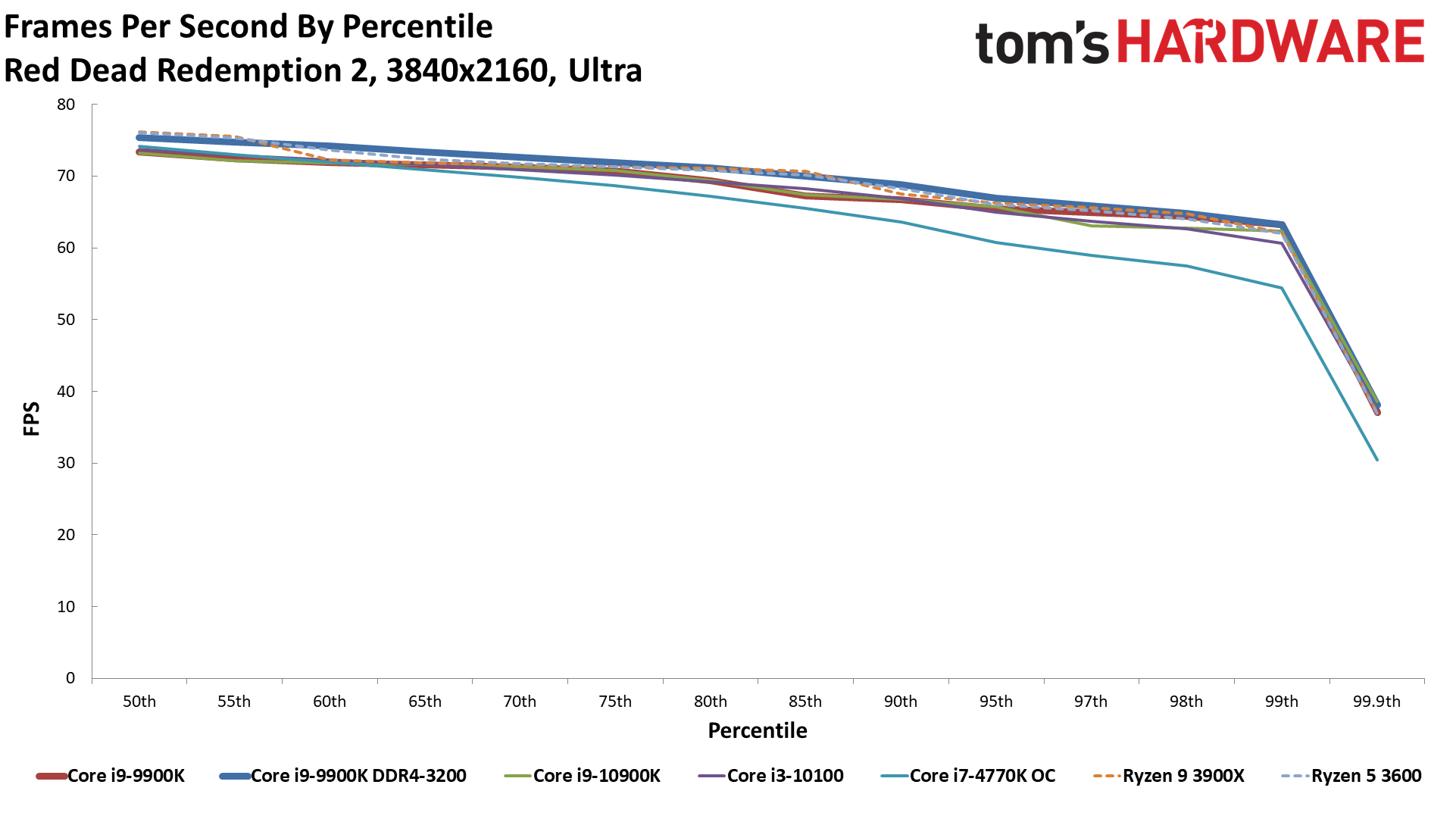

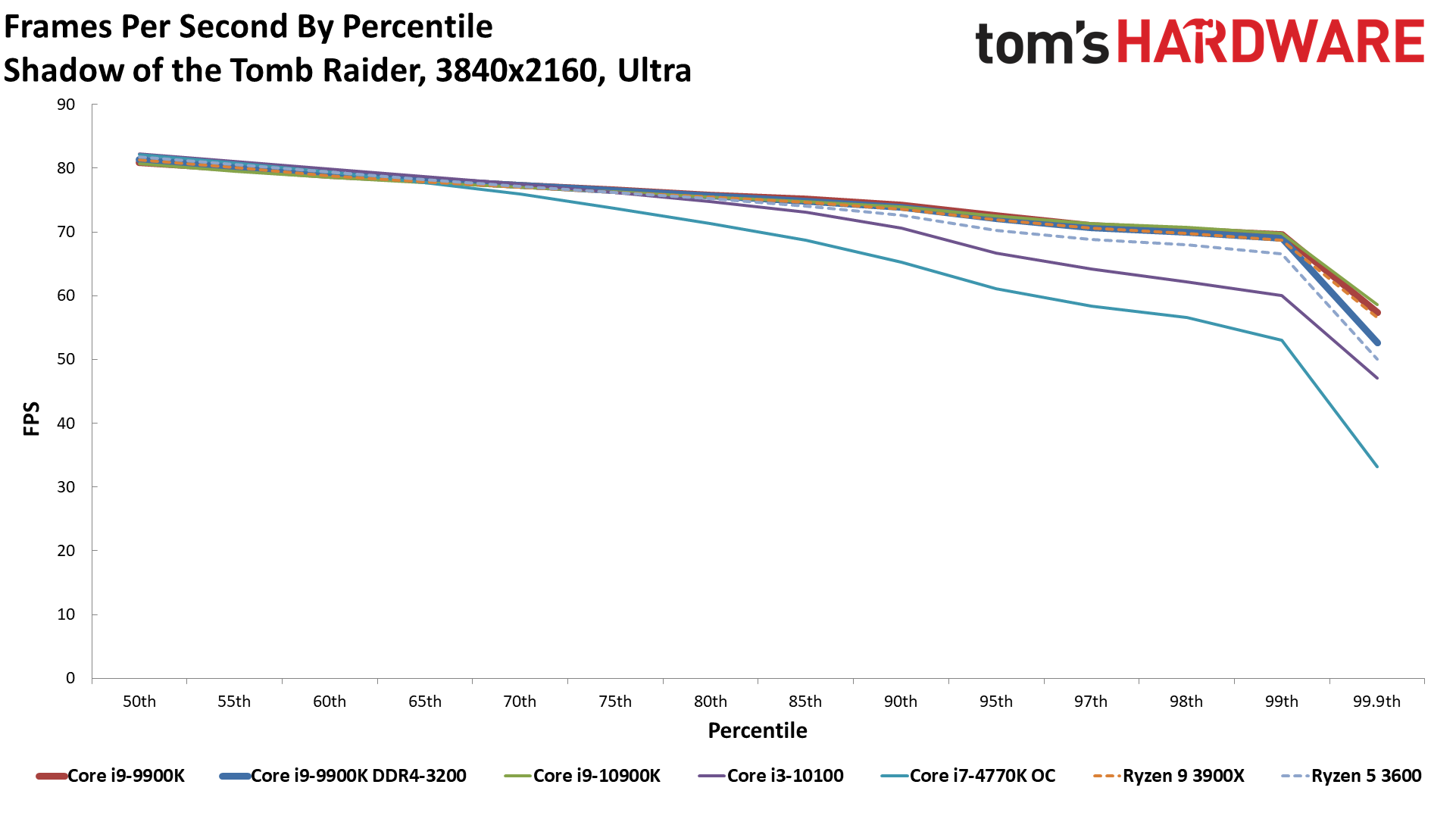

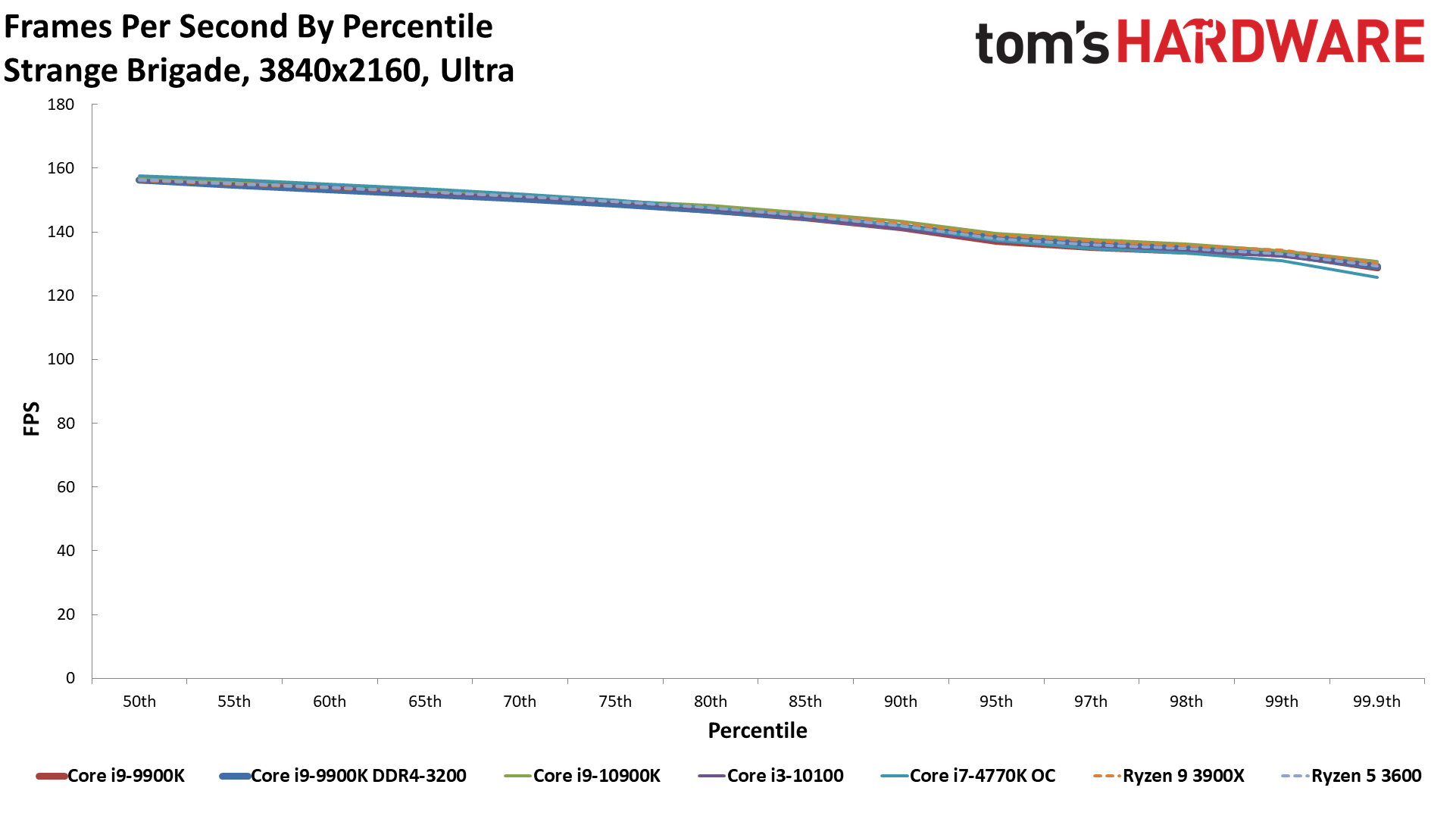

4K Ultra

4K Ultra Percentiles

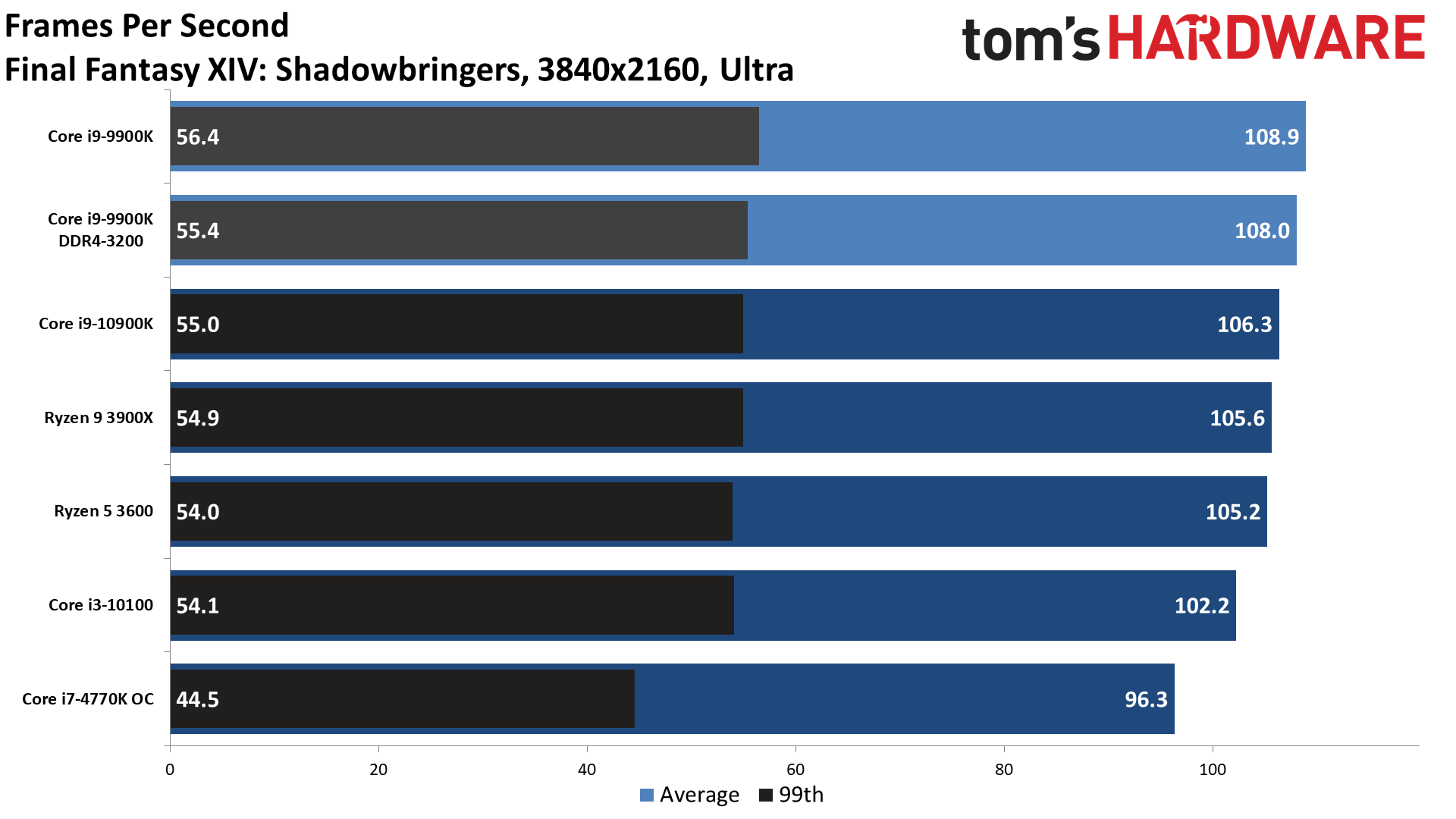

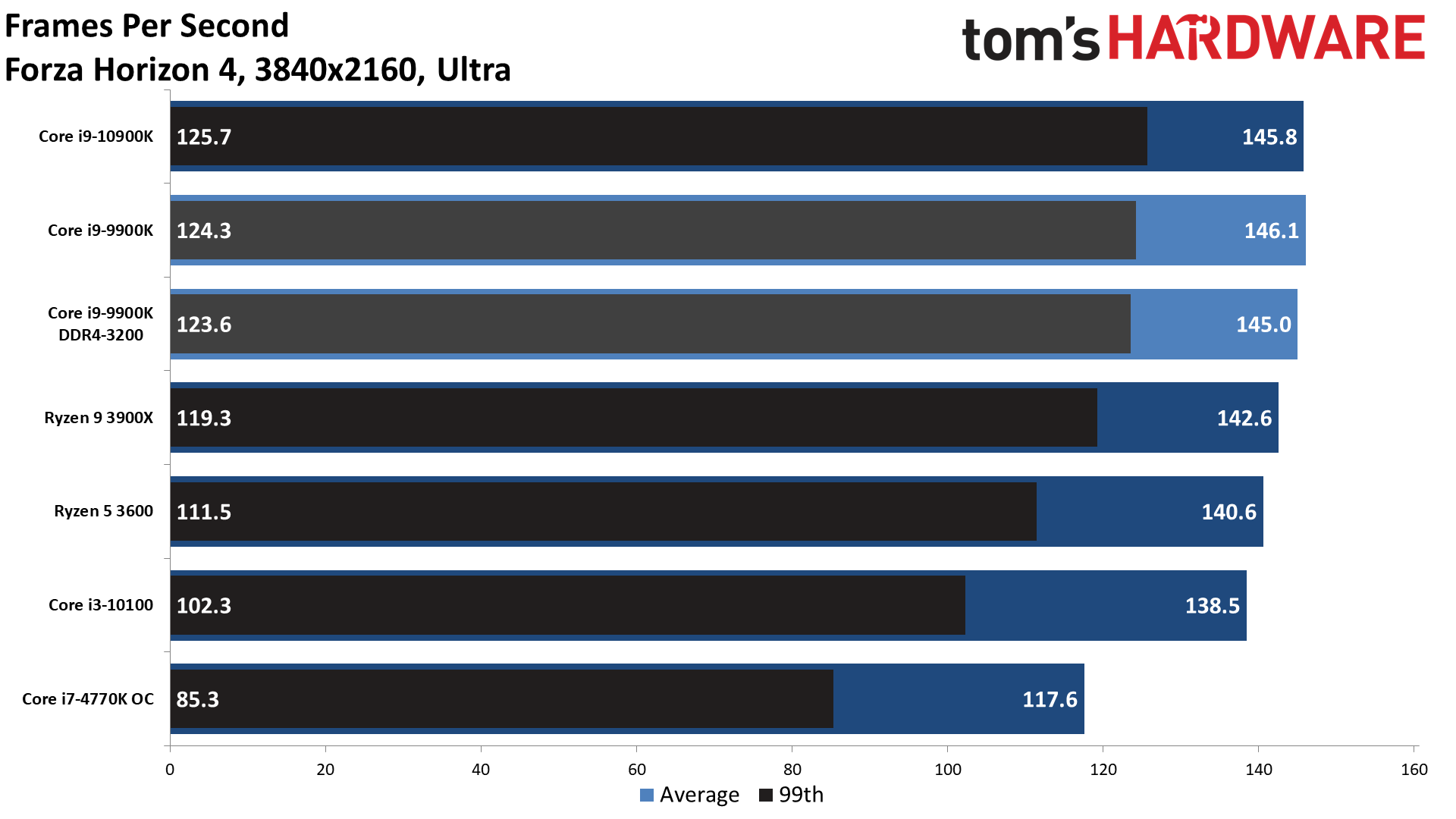

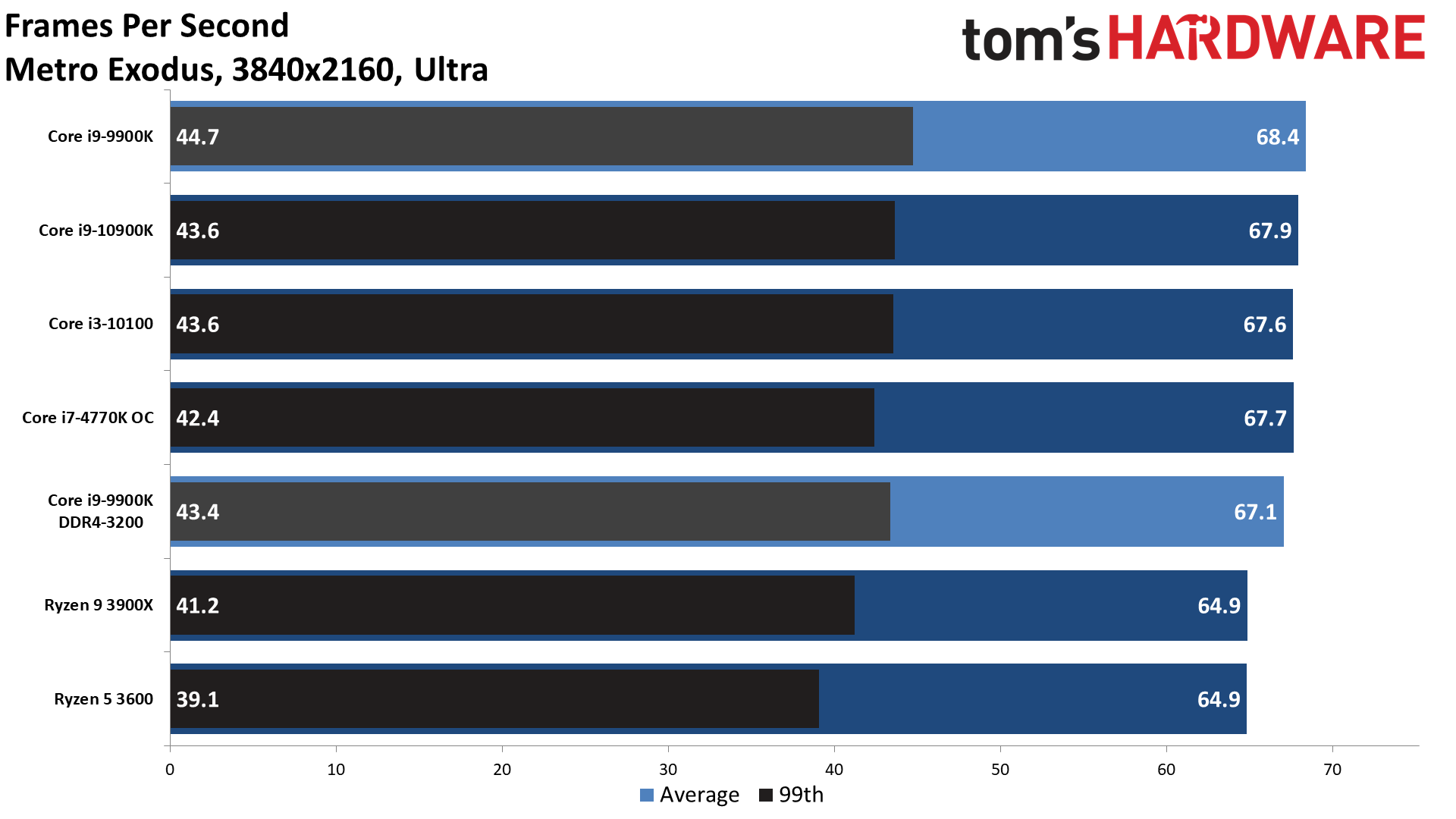

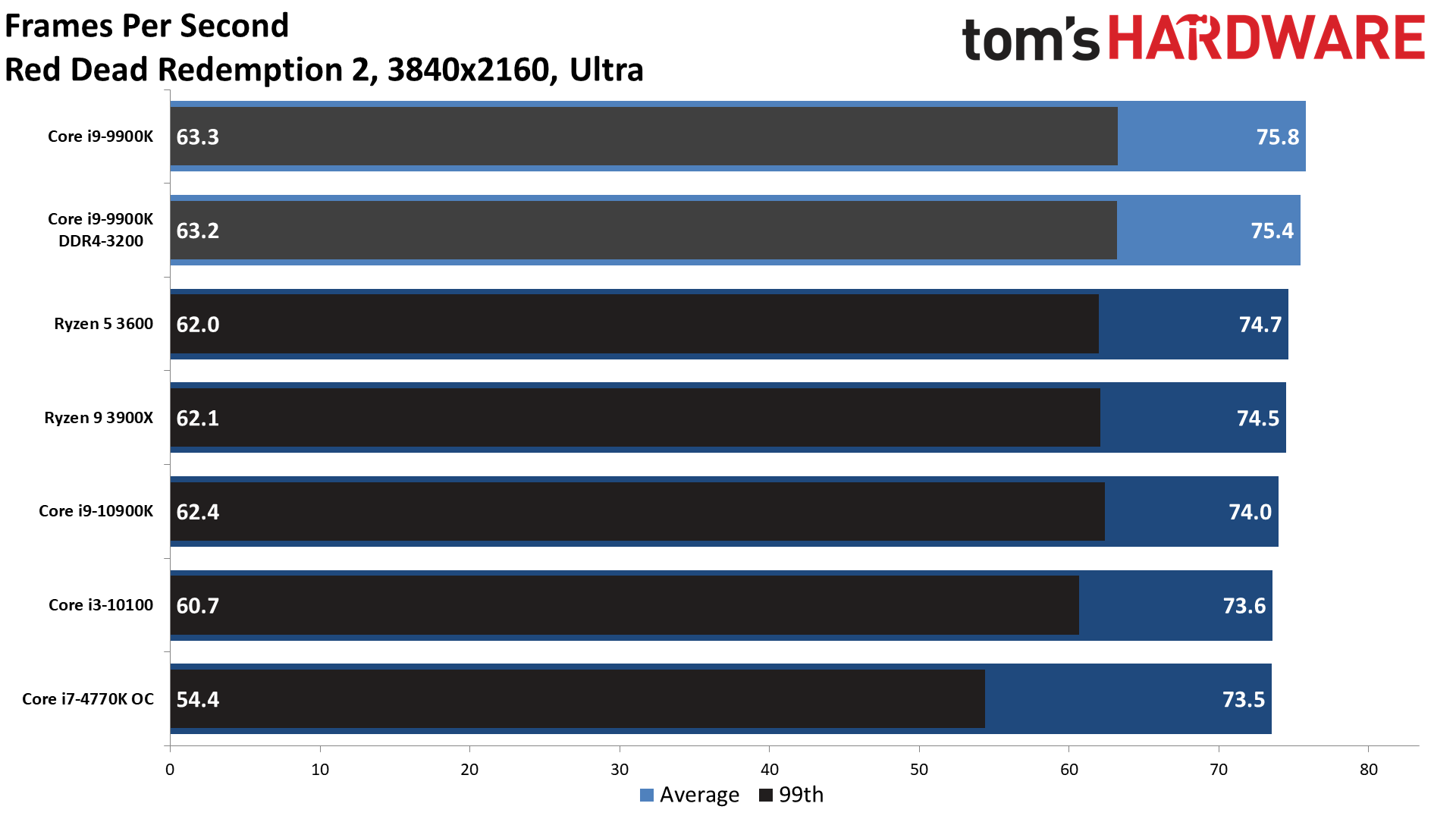

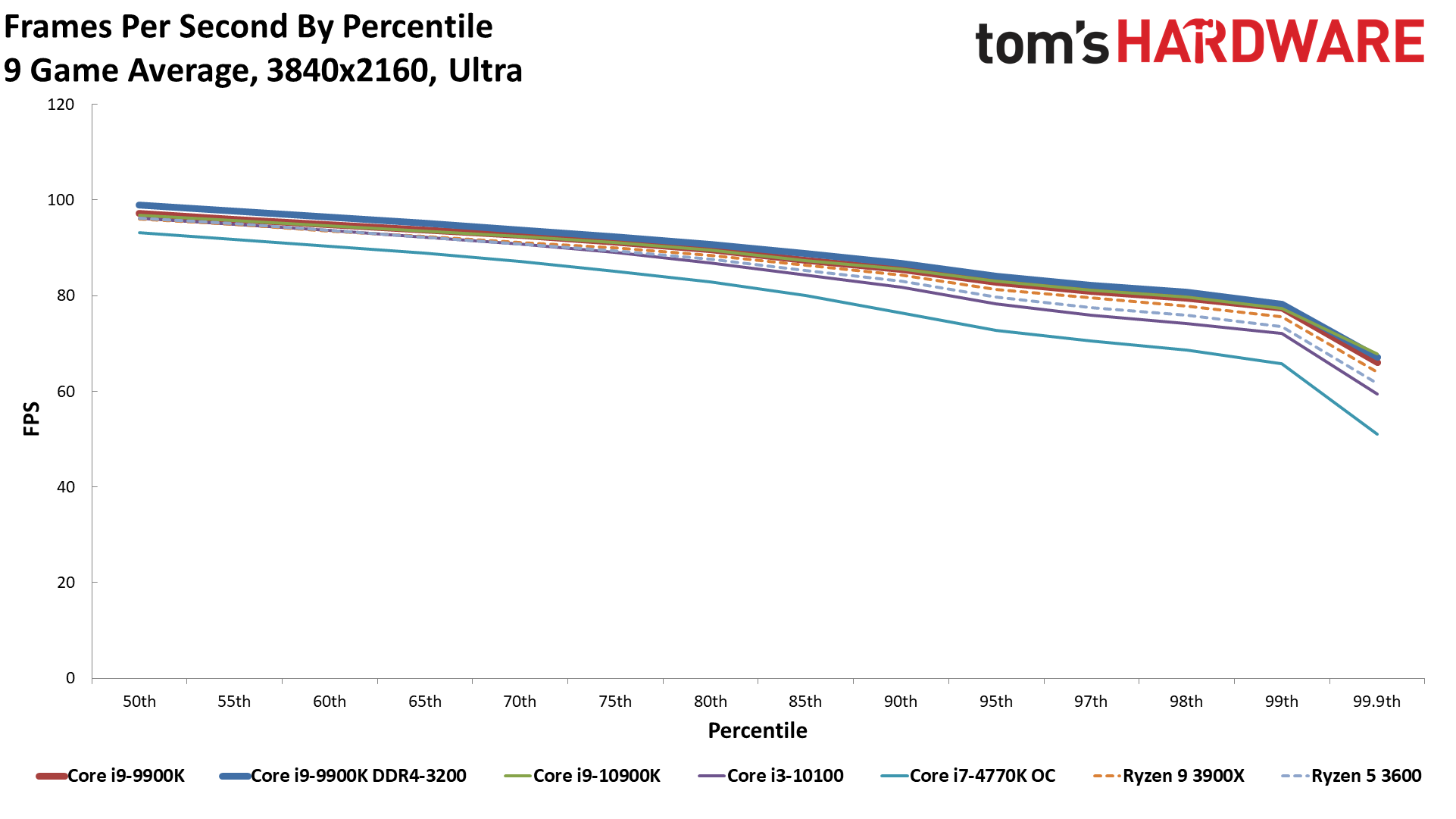

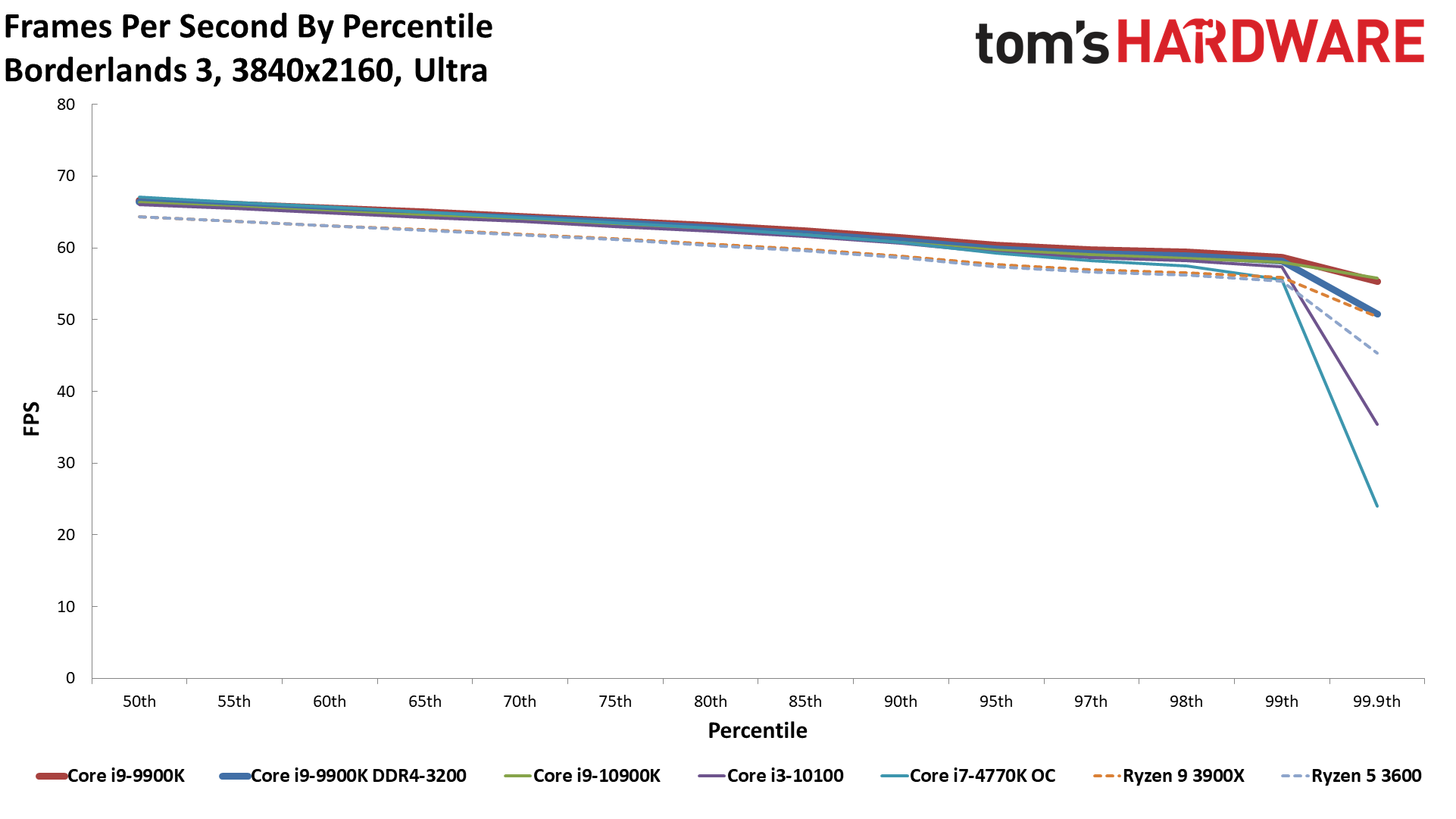

As usual, 4K is the great CPU equalizer. The demands on the GPU are so high that even a seven-year-old Core i7-4770K now only trails the Core i9-10900K by 8% at 4K ultra. It's still a noticeable 13% behind at 4K medium, but hopefully you get the point we're trying to make: The RTX 3080 is very much a graphics card designed to tackle high-resolution gaming. 4K ultra at 60 fps or more is now totally possible, and you can do it with a Core i7-4770K, Ryzen 5 3600, or go all the way and pair it with a Core i9-10900K or Ryzen 9 3900X.

The gap between current AMD and Intel CPUs at 4K is basically nonexistent. In fact, minor differences in board design, firmware, or just 1% run-to-run variability in benchmark results all become factors. The 9900K, for example, now comes out 'ahead' of the 10900K at 4K medium, and the gap is even larger at 4K ultra. That shouldn't happen, except it frequently does when you're looking at different motherboards, even with the exact same memory. Maybe the differences in SSD play a role as well.

The point isn't that the 9900K is the fastest CPU at 4K ultra by 1%. The real point is that the difference between the fastest and slowest CPU we tested is only 8% — and if we drop the 4770K, the gap shrinks to just 3.5%.
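The spread figures here compare the fastest and slowest results, optionally leaving out an outlier like the 4770K. A minimal Python sketch of that calculation follows; it assumes the spread is expressed relative to the slowest chip's score, and the scores below are placeholder values, not our measurements.

```python
def spread_pct(scores: dict[str, float], exclude: tuple[str, ...] = ()) -> float:
    """Gap between the fastest and slowest CPU, as a % of the slowest one's score."""
    kept = [v for k, v in scores.items() if k not in exclude]
    return (max(kept) / min(kept) - 1) * 100


# Placeholder average-fps scores for hypothetical CPUs, NOT our results.
scores = {"CPU A": 100.0, "CPU B": 99.0, "CPU C": 98.0, "Old CPU": 92.0}
print(f"all CPUs: {spread_pct(scores):.1f}%")
print(f"without the old CPU: {spread_pct(scores, exclude=('Old CPU',)):.1f}%")
```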

Note that minimum (99th percentile) framerates do show a larger difference. The spread on minimum fps is 19%, and still 8.5% if we ignore the 4770K. Also, while we don't have exact numbers, some of the games definitely take noticeably longer to load on the slower CPUs. Not minutes longer, but probably 5-10 seconds more.
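Why do minimum framerates separate the CPUs more than averages? A few slow frames barely move the average but dominate the 99th percentile. We don't document our exact capture pipeline here; this Python sketch assumes one common approach, taking the 99th-percentile frame time from a frametime log (nearest-rank method) and converting it to fps. The 100-frame capture is made up for illustration.

```python
def p99_fps(frame_times_ms: list[float]) -> float:
    """fps equivalent of the 99th-percentile frame time (nearest-rank method)."""
    ordered = sorted(frame_times_ms)
    rank = -(-99 * len(ordered) // 100)  # ceil(0.99 * n) using integer math
    return 1000.0 / ordered[rank - 1]


def average_fps(frame_times_ms: list[float]) -> float:
    return 1000.0 * len(frame_times_ms) / sum(frame_times_ms)


# Hypothetical 100-frame capture: mostly 10 ms frames plus two 50 ms hitches.
frames = [10.0] * 98 + [50.0] * 2
print(f"average: {average_fps(frames):.1f} fps, 99th percentile: {p99_fps(frames):.1f} fps")
```

Two hitches drag the average from 100 fps to about 92.6 fps, but the 99th-percentile figure falls to 20 fps. That sensitivity helps explain why slower CPUs, which are more prone to occasional stalls, can look worse on minimums than on averages.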

In short, it's not that the CPU doesn't matter at all at 4K, but it doesn't matter very much if all you're doing is playing a game. Of course, you might be doing other tasks as well, like streaming and encoding your gameplay, listening to music, chatting on Discord, etc. We've isolated gaming performance here as much as possible, with a 'clean' OS and testing environment. If you're running any background tasks, particularly heavier tasks like streaming, having a faster CPU becomes much more important.

GeForce RTX 3080 CPU Scaling: The Bottom Line

We fully expected the launch of the GeForce RTX 3080 to push the bottleneck for gaming PCs very much onto the CPU, particularly at lower settings and resolutions. That does happen to some extent, but not as much as we anticipated. There are other factors to consider as well, like the type of games you play. We didn't include testing of Microsoft Flight Simulator 2020 here, for example, but that game is currently very much CPU (and API) limited, even at ultra settings. Ray tracing effects can also make even 1080p performance far more GPU dependent.

If you're thinking about purchasing the RTX 3080, we still maintain that having a good CPU is important, meaning at least a six-core Intel or AMD Ryzen processor. Of course, it's all relative as well. Maybe you're looking to upgrade your entire PC in the coming months, but you want to wait for Ryzen 5000 to launch. Or maybe you just can't afford to upgrade everything at once. Depending on your current graphics card, making the jump to the 3080 might be warranted even on an older PC, especially if it's only going to be a temporary solution. How you want to upgrade is a personal decision, and it's entirely possible to stuff the RTX 3080 into an old 2nd Gen Core or AMD FX build if you want. We wouldn't recommend doing that, but it's possible.

While the biggest differences in performance occurred at lower resolutions and settings, it's also important to look at the bigger picture. The Core i9-9900K and 10900K might 'only' be 10% faster than a slower CPU, but that's almost a whole step in GPU performance. RTX 2080 Ti was 15-20% faster than the RTX 2080 Super, but it cost 70% more. If you're running an older GPU with an older CPU and you're planning to upgrade your graphics card, it's probably a good idea to wait and see what the GeForce RTX 3070 brings to the table, as well as AMD's Big Navi. You might not miss out on much in terms of performance while saving several hundred dollars, depending on the rest of your PC components.
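To make the value argument concrete, this Python sketch compares performance per dollar using only the percentages in the paragraph above (RTX 2080 Ti 15-20% faster than the RTX 2080 Super for 70% more money). It ignores the cost of the rest of the PC and street-price swings, so it's a rough illustration rather than a buying guide.

```python
def relative_perf_per_dollar(perf_gain_pct: float, price_premium_pct: float) -> float:
    """Performance per dollar of the pricier part relative to the cheaper one (1.0 = same value)."""
    return (1 + perf_gain_pct / 100) / (1 + price_premium_pct / 100)


# RTX 2080 Ti vs. RTX 2080 Super, using the 15-20% and 70% figures above.
for gain in (15, 20):
    value = relative_perf_per_dollar(gain, 70)
    print(f"{gain}% faster for 70% more money: {value:.2f}x the performance per dollar")
```

By that measure, the 2080 Ti delivered roughly 29-32% less performance per dollar than the 2080 Super.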

As always, the sage advice for upgrades is to only make the jump when your current PC doesn't do what you want it to do. If you're pining for 4K ultra at 60 fps, or looking forward to games with even more ray tracing effects, the GeForce RTX 3080 is a big jump in generational performance. It's clearly faster than the previous king of the hill (the RTX 2080 Ti, or even the Titan RTX), at a substantially lower price. Just don't expect to make full use of the 3080 if you pair it up with an old processor.

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.


Crazy Dodo: I'm curious why the i-5 10600k wasn't part of this test. In this article you list it as your top pick for gaming PC's, and I would have liked to see how it compares to the i-9 at high/ultra settings.

IceKickR: I'm also very interested in i5 10600k. It seemed a little strange that i3 was included and such a great CPU as 10600k was not.

SpitfireMk3: Thank you for this great analysis. Obviously you have to consider all aspects before upgrading and I'm glad that I can still use my PC for a while before being forced to upgrade. Seeing the 9 series with other RAM outperform the 10 in some tests, how much difference do you think we'll see when DDR5 hits the scene?

Kiril1512: This reminds me about that meme where an Nvidia engineer explains about RTX 3000 cpu bottleneck xD

JarredWaltonGPU (replying to Crazy Dodo): The biggest reason: I don't have one. Paul has a 10600K, as he's the CPU guy. And I bought the i3-10100 because I was more interested in seeing what happened to a 4-core/8-thread modern Intel CPU (and it was only $130 with tax). I might have an i7-8700K around that I could test, but I've been running a ton of benchmarks for the 3080 launch, and still have lots of things to take care of, so probably it's not happening any time soon.

JarredWaltonGPU (replying to SpitfireMk3): DDR5 will probably be like the DDR3/DDR4 rollout: not much faster at first, but over time the increased bandwidth becomes more important. A lot will depend on the CPU architecture it's paired with of course, and we don't have Zen 4 or Alder Lake yet. :)

TerryLaze: Christ, so even the 3080 is incapable of keeping up with modern CPUs at 1080 ultra.

That's a 25% improvement in FPS for the 9900k going to 1080 medium (daheck?! did you run them at stock with TDP enforced? )

JarredWaltonGPU (replying to TerryLaze): It's 25% faster at 1080p medium because both the CPU and GPU are doing less work overall. There are a few games where the ultra vs. medium stuff still hits similar speeds, but you'd need significantly more CPU performance to avoid at least a moderate drop in performance. There are games where 1080p ultra is almost completely CPU limited (MS Flight Simulator for sure), others where the graphics complexity is high enough that they're only partially CPU limited, and still others where they're basically GPU limited even at 1080p. Enabling ray tracing in most games pushes them into the last category.

As for TDP, the 10900K TDP is very loosely defined and the MSI board almost certainly runs it with PL2 constraints rather than restricting it to 125W. For games, it's not coming close to 250W, but it might be breaking 125W. The same applies to the other CPUs to varying degrees. I don't have proper CPU power testing equipment, however, so at best I could try and do Outlet Power - GPU Power. Which I don't have time to try and get done right now.

greenmrt: For me, looking forward to playing raytraced games in Ultra at 1440p, I'm gleaming from this that my 3700x will work until I upgrade to the next gen CPU. I'm buying a new GPU for the "purdy" :)

Zarax: Another excellent analysis, Jarred.

Any chance to see how Ryzen XT CPUs behave or some Ashes of the Singularity benchmark?

Thanks!