Matrox Launches Single-Slot Intel Arc GPUs

Intel certainly has a way with old-school brands. First it was Sparkle, and now Matrox has jumped on Intel's Arc Alchemist bandwagon. The company has announced its new Luma series of graphics cards, which leverage Intel's Arc A310 and the Arc A380, one of the best graphics cards on the market.

The Arc A3 series uses the ACM-G11 silicon. The Arc A310 differs from the Arc A380 in having two fewer Xe cores and 2GB less GDDR6 memory on a narrower 64-bit memory interface. Neither graphics card launched with much fanfare. For instance, the Arc A380 was initially available only in China, and Intel subsequently launched the Arc A310 as quietly as it could. Matrox is one of the few vendors, if not the first, to release an Arc A310-based card, and it has released not just one but two of them.

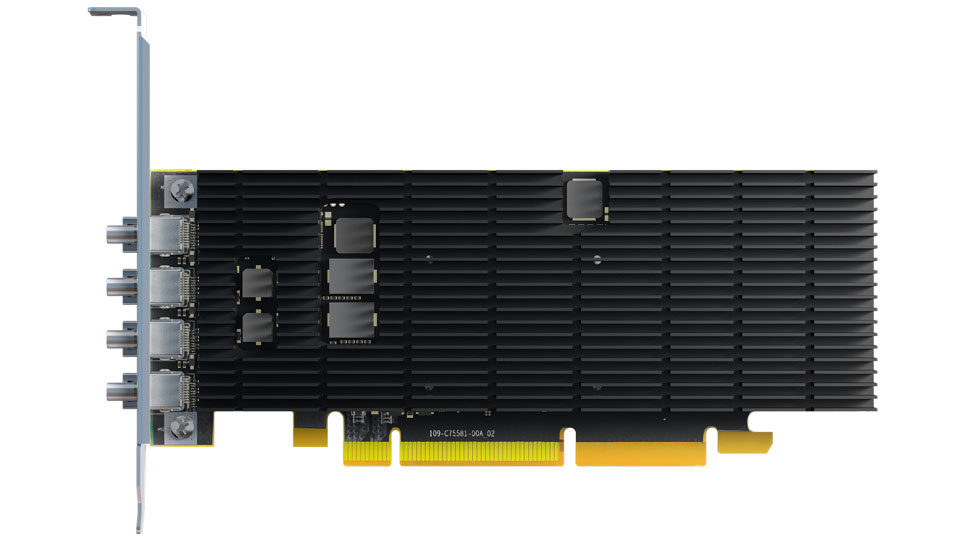

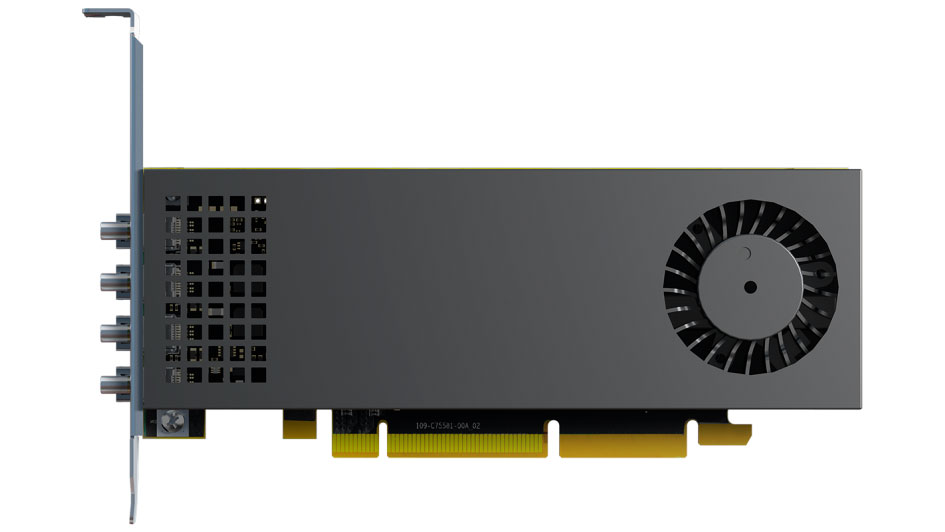

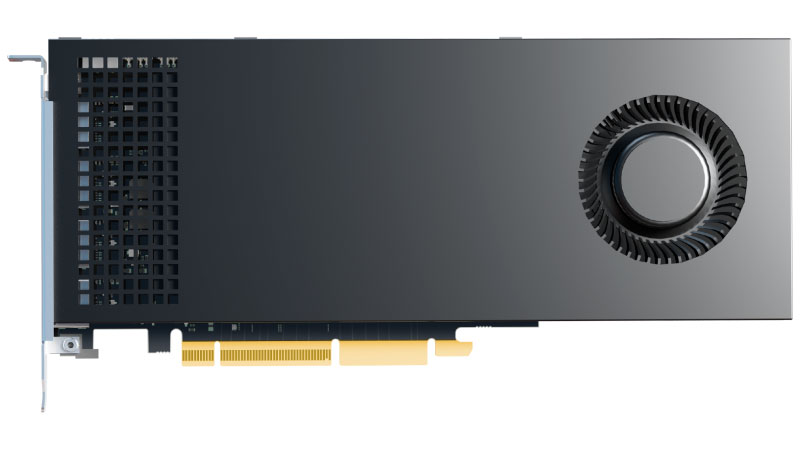

The Luma A310 and Luma A310F are low-profile graphics cards that should easily fit into most small-form-factor (SFF) systems, and Matrox includes low-profile brackets with both SKUs. The two cards use a single-slot design with a length of 16.76cm (6.6 inches). The Luma A310 uses a passive cooler, whereas the Luma A310F relies on a blower-type design with a small cooling fan. The Luma A380 also conforms to a single-slot design. However, it's a full-height graphics card with a length of 25.38cm (9.99 inches), so it's not the best option for SFF systems.
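For readers who want to check the metric-to-imperial figures, here is a small Python sketch that converts the board dimensions Matrox lists in its specifications. Treating the second number in each pair as board height is our assumption, and the `cm_to_in` helper is purely illustrative.

```python
# Convert the Luma board dimensions quoted by Matrox from centimeters to inches.
CM_PER_INCH = 2.54  # exact, by definition of the international inch

# (length, height) in centimeters; reading the second figure as height is an assumption
BOARDS_CM = {
    "Luma A310 / A310F": (16.76, 6.86),
    "Luma A380": (25.38, 12.68),
}

def cm_to_in(cm: float) -> float:
    """Convert centimeters to inches."""
    return cm / CM_PER_INCH

for name, (length_cm, height_cm) in BOARDS_CM.items():
    print(f"{name}: {cm_to_in(length_cm):.2f} x {cm_to_in(height_cm):.2f} in")
```

Running it prints roughly 6.60 x 2.70 inches for the two low-profile cards and 9.99 x 4.99 inches for the Luma A380, matching the rounded figures above.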

Matrox Luma Specifications

| Specification | Luma A310 | Luma A310F | Luma A380 |
|---|---|---|---|
| GPU | Intel Arc A310 | Intel Arc A310 | Intel Arc A380 |
| Memory | 4GB GDDR6 | 4GB GDDR6 | 6GB GDDR6 |
| Interface | PCIe 4.0 x16 (x8 electrical) | PCIe 4.0 x16 (x8 electrical) | PCIe 4.0 x16 (x8 electrical) |
| Cooling | Passive | Active | Active |
| Power Consumption | 30W | 50W | 75W |
| Video Outputs | 4 x Mini DisplayPort | 4 x Mini DisplayPort | 4 x DisplayPort |
| Form Factor | Low Profile, Single Slot | Low Profile, Single Slot | Full Height, Single Slot |
| Dimensions | 16.76 x 6.86cm | 16.76 x 6.86cm | 25.38 x 12.68cm |

All three Luma graphics cards use a physical PCIe 4.0 x16 interface. However, they are wired for only eight lanes electrically, so they can use only half the bandwidth of an x16 expansion slot.
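To put "half the bandwidth" in numbers, here is a rough Python sketch. It assumes PCIe 4.0's 16 GT/s per-lane signaling rate and 128b/130b line encoding, and it ignores packet-level protocol overhead, so real-world throughput will be somewhat lower. The `link_bandwidth_gb_s` function is a hypothetical helper for illustration.

```python
# Approximate one-direction PCIe 4.0 link bandwidth for x8 vs. x16.
# Counts only line-encoding overhead (128b/130b); TLP/DLLP protocol
# overhead would reduce real-world throughput further.
GT_PER_S_PCIE4 = 16e9             # transfers per second per lane (PCIe 4.0)
ENCODING_EFFICIENCY = 128 / 130   # 128b/130b line code used since PCIe 3.0

def link_bandwidth_gb_s(lanes: int) -> float:
    """Return approximate one-direction bandwidth in GB/s (10^9 bytes)."""
    bits_per_s = GT_PER_S_PCIE4 * ENCODING_EFFICIENCY * lanes
    return bits_per_s / 8 / 1e9

x16 = link_bandwidth_gb_s(16)
x8 = link_bandwidth_gb_s(8)
print(f"x16: {x16:.2f} GB/s, x8: {x8:.2f} GB/s, ratio: {x8 / x16:.2f}")
```

Under these assumptions, an x16 link works out to about 31.51 GB/s per direction and an x8 link to about 15.75 GB/s, exactly half.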

The Arc A310 and Arc A380 share the same 75W TDP. In Matrox's case, however, the Luma A310 and Luma A310F are rated at 30W and 50W, respectively, while the Luma A380 adheres to Intel's 75W reference specification. In any event, all three graphics cards draw their power entirely from the expansion slot, so no external PCIe power connectors are needed.
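A standard PCIe x16 graphics slot can supply up to 75W, which is why none of these cards needs an auxiliary connector. A minimal Python sketch of that check follows; treating Matrox's rated figures as worst-case draw is an assumption, and `needs_aux_power` is a hypothetical helper.

```python
# Compare each Luma card's rated power with the PCIe x16 slot budget.
SLOT_POWER_LIMIT_W = 75  # maximum a standard PCIe x16 graphics slot supplies

# Rated power per card from the specification table (assumed worst-case draw)
CARD_POWER_W = {"Luma A310": 30, "Luma A310F": 50, "Luma A380": 75}

def needs_aux_power(card_watts: float, slot_limit: float = SLOT_POWER_LIMIT_W) -> bool:
    """True if the card's rating exceeds what the slot alone can deliver."""
    return card_watts > slot_limit

for card, watts in CARD_POWER_W.items():
    headroom = SLOT_POWER_LIMIT_W - watts
    print(f"{card}: {watts} W, headroom {headroom} W, "
          f"aux connector needed: {needs_aux_power(watts)}")
```

The Luma A380 sits exactly at the slot limit, while the two A310-based cards leave 45W and 25W of headroom.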

Although you can use Matrox's Luma graphics cards for gaming, the products target industrial, digital signage, and medical customers. The Luma A310 and Luma A310F sport four Mini DisplayPort 2.1 (secure) outputs, while the Luma A380 has four standard DisplayPort outputs, so each card can drive up to four displays simultaneously. For users who prefer full-size DisplayPort connectors, Matrox sells a separate Mini DisplayPort to DisplayPort cable (CAB-MDP-DPF) for $29.99.

In a dual-display setup, customers can run two 8K displays at 60 Hz or two 5K displays at 120 Hz. In a quad-display configuration, however, resolution is limited to 5K at 60 Hz with 12-bit HDR support. Matrox also includes its PowerDesk and MuraControl software for managing multi-display setups.
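To compare the three display modes on a common scale, the Python sketch below computes the aggregate active-pixel rate of each. It assumes 8K means 7680 x 4320 and 5K means 5120 x 2880, and it deliberately ignores blanking intervals, bit depth, and Display Stream Compression, so it is not a link-bandwidth calculation. The `pixel_rate` helper is hypothetical.

```python
# Aggregate active-pixel rate for the display configurations Matrox quotes.
# Assumes 8K = 7680x4320 and 5K = 5120x2880; ignores blanking, bit depth, and DSC.
RESOLUTIONS = {"8K": (7680, 4320), "5K": (5120, 2880)}

def pixel_rate(res: str, refresh_hz: int, displays: int) -> int:
    """Active pixels per second summed across all displays."""
    width, height = RESOLUTIONS[res]
    return width * height * refresh_hz * displays

CONFIGS = [("8K", 60, 2), ("5K", 120, 2), ("5K", 60, 4)]
for res, hz, n in CONFIGS:
    print(f"{n} x {res} @ {hz} Hz: {pixel_rate(res, hz, n) / 1e9:.2f} Gpixel/s")
```

Under these assumptions, the dual 5K/120 Hz and quad 5K/60 Hz modes move the same number of pixels per second (about 3.54 Gpixel/s), while dual 8K/60 Hz comes to about 3.98 Gpixel/s.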


Matrox backs Luma products with a three-year warranty, but buyers can extend it for an added cost. The graphics cards have a life cycle of seven years.

Zhiye Liu is a news editor, memory reviewer, and SSD tester at Tom’s Hardware. Although he loves everything that’s hardware, he has a soft spot for CPUs, GPUs, and RAM.


kjfatl This makes a lot of sense. Intel is aiming at the 90% of the market (by volume) that AMD and NVIDIA are ignoring. With this they can sell a few million of these and get the $30 or so profit that these boards bring in. Not bad when you sell 10,000 boards at a time to companies like UPS, Home Depot, Starbucks or Target for internal use.

InvalidError If Matrox decided to pick up the A750, maybe I'd try my luck checking if one of the few people whose names I remember (I'm pathologically bad at remembering names) is still there to ask what the employee shop prices are like :)

Eximo Quad DP 2.1 is pretty snazzy, and that seems to be the main driver behind these. I have the ASRock A380, and it still has HDMI, which is just handy to have, though sadly not 2.1.

I'm actually looking forward to Battlemage and Celestial. If they are anywhere near decently equipped when they come out, I might make the switch.

waltc3 Did anyone here also own a Matrox Millennium GPU, back in the days of yore, pre-3dfx? I owned a couple, IIRC. Best 2D GPU available at the time. Everyone expected Matrox to do really well with its 3D card, the Matrox Mystique (which I also owned), but it was actually very poor. Matrox just sort of faded away in that market, and 3dfx walked off with it. I hear Matrox cards are still good for 2D displays today. Just thought I'd ask! Looks like that is what they are shooting for with the Intel chips and architectures.

King_V I know the gaming market isn't really the target, but I'm actually glad to see Matrox is still around and, especially, doing something like this: single slot, no auxiliary power.

Kudos!

Amdlova Matrox makes some cards for multi-display setups... they use AMD chips, but I think next year's will be Intel.

Quad display, single slot... that's good.

Eximo

waltc3 said: Did anyone here also own a Matrox Millennium GPU, back in the days of yore, pre-3dfx?

My brother bought it, and while the 2D performance wasn't amazing, there were a few game titles that made good use of it for about six months. I got his Voodoo 2 and purchased a whole back catalog of Glide titles over the next few years.

Not like 3dfx lasted much longer than them either. My last 3dfx card was the Voodoo 3 3000. I do now own a Voodoo 5 5500 I got on eBay for when I get the urge to pwn some noobs in classic UT. I think I bought it back in 2008 or 2009. It's the PCI version, so it works with most of my old machines. I don't think I have a working AGP motherboard anymore.

digitalgriffin

waltc3 said: Did anyone here also own a Matrox Millennium GPU, back in the days of yore, pre-3dfx?

I owned the Millennium I and Millennium II (with good old Window RAM, or WRAM), the Mystique, and the m3D, which was the PowerVR chip debut.

The 480 was actually highly competitive for the day, and they were neck and neck with the best cards of the time. After that they just gave up. It takes tremendous investment and talent to keep up, and Matrox didn't want to play that game. They concentrated on the multi-display niche for digital signage. Shortly thereafter, I bought my first ATI card, a 7800. Then I bought the 9800 Pro. Those were the days when you could blow $200 and get top of the line.

HWOC

waltc3 said: Did anyone here also own a Matrox Millennium GPU, back in the days of yore, pre-3dfx?

I was a big fan of Matrox back in the day. I didn't have any of the Matrox 2D cards, but I did own the Matrox Millennium G200 AGP with 8MB SGRAM, then the G400 with 16MB SDRAM, and after that the G400 with 32MB. All of the above were used mostly for gaming. I long for the old days when so many different companies were producing chips and graphics cards.

Dr3ams Back in the late '90s, I had a Matrox Mystique 220 GPU. It had 8MB of SGRAM and ran at 220 MHz. It came with three games, but the only one I can remember is MechWarrior 2.