Chip Fights: Nvidia Takes Issue With Intel's Deep Learning Benchmarks

Intel recently published Xeon Phi benchmarks claiming that its “Many Integrated Core” Phi architecture, which is based on small Atom CPU cores rather than GPUs, is significantly more efficient and faster than GPUs for deep learning. Nvidia has taken issue with this claim and published a post detailing the many reasons why it believes Intel’s results are deeply flawed.

GPUs Vs. Everything Else

Whether or not they are the absolute best tool for the task, there is little debate that GPUs are currently the mainstream way to train deep neural networks. That’s because training neural networks requires low-precision computation (as low as 8-bit) rather than the high-precision computation for which CPUs are generally built. Whether GPUs will one day be replaced by more efficient alternatives for most customers remains to be seen.

Nvidia has not only kept optimizing its GPUs for machine learning over the past few years, but has also invested heavily in the software that makes it easy for developers to train their neural networks. That’s one of the main reasons why researchers usually choose Nvidia over AMD for machine learning. Nvidia said that the performance of its software has improved by an order of magnitude between the Kepler era and the Pascal era.

However, GPUs are not the only game in town when it comes to training deep neural networks. As the field seems to be booming right now, there are all sorts of companies, old and new, trying to take a share of this market for deep learning-optimized chips.

Some companies focus on FPGAs for machine learning, while others, such as Google, CEVA, and Movidius, create custom deep learning chips. Then there’s Intel, which wants to compete against GPUs by instead using dozens of small Atom (Bay Trail-T) cores under the Xeon Phi brand.

Intel’s Claims

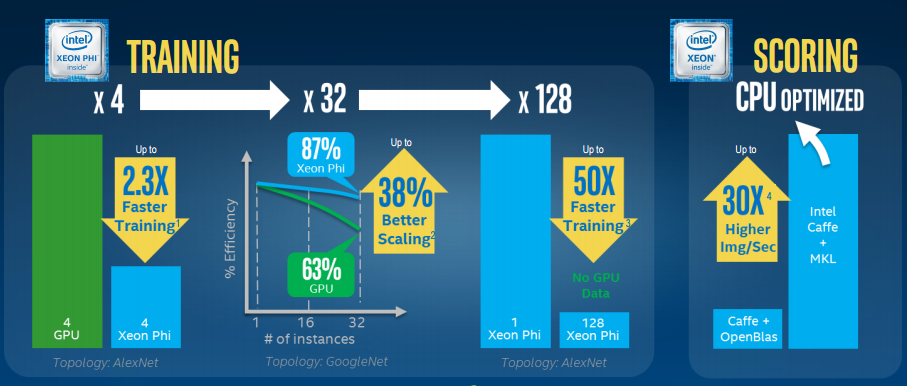

In its paper, Intel claimed that four Knights Landing Xeon Phi chips were 2.3x faster than “four GPUs.” Intel also claimed that Xeon Phi chips could scale 38 percent better across multiple nodes (up to 128, which according to Intel can’t be achieved by GPUs). Intel said systems made out of 128 Xeon Phi servers are 50x faster than single-Xeon Phi servers, implying that Xeon Phi servers scale rather well.
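
As a rough way to read Intel's scaling figure, the standard parallel-efficiency ratio (speedup divided by node count) can be applied to the numbers quoted above. The sketch below is illustrative only: the function name `parallel_efficiency` is made up for this example, and it assumes that "50x faster" means a 50x speedup over a single server on the same workload, which the article does not confirm.

```python
def parallel_efficiency(speedup: float, nodes: int) -> float:
    """Return speedup divided by node count (1.0 would be perfectly linear scaling)."""
    if nodes <= 0:
        raise ValueError("nodes must be positive")
    return speedup / nodes


# Figures quoted from Intel's claim: 128 servers, 50x faster than one server.
efficiency = parallel_efficiency(speedup=50, nodes=128)
print(f"{efficiency:.1%} of linear scaling")
```

Under that assumption, the 128-server system reaches roughly 39 percent of perfectly linear scaling.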

Intel also said in its paper that when using an Intel-optimized version of the Caffe deep learning framework, its Xeon Phi chips are 30x faster compared to the standard Caffe implementation.

Nvidia’s Rebuttals

Nvidia’s main argument seems to be that Intel used outdated data in its benchmarks, which makes the comparison against GPUs misleading, especially because Nvidia’s GPUs saw drastic increases in performance and efficiency once they moved from a 28nm planar process to a 16nm FinFET one. On top of that, Nvidia has spent the past few years optimizing various software frameworks for its GPUs.

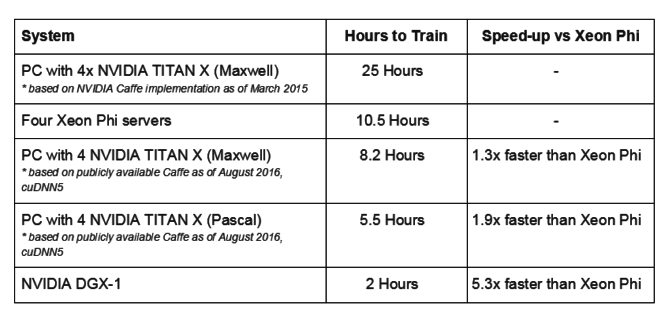

That’s why Nvidia now claims that if Intel had used a more recent implementation of the Caffe AlexNet test, it would have seen that four of Nvidia’s previous-generation Maxwell GPUs were actually 30% faster than four of Intel’s Xeon Phi servers.

With regard to Xeon Phi’s “38% better scaling,” Nvidia also said that Intel’s comparison pits its latest Xeon Phi servers, with the latest interconnect technology, against four-year-old Kepler-based Titan X systems. Nvidia mentioned that Baidu has already shown that speech training workloads, for instance, scale almost linearly across 128 Maxwell GPUs.

Nvidia also believes that, for deep learning, it’s better to have a few strong nodes than many weaker ones anyway. It added that a single one of its latest DGX-1 “supercomputer in a box” is slightly faster than 21 Xeon Phi servers, and 5.3x faster than four Xeon Phi servers.

Considering that the non-profit OpenAI just became the first-ever customer of a DGX-1 system, it’s understandable that Intel couldn’t benchmark its Xeon Phi chips against one. However, Maxwell-based systems are quite old by now, so it’s unclear why Intel decided to test its latest Xeon Phi chips against GPUs from a few generations ago with software from 18 months ago.

AI Chip Competition Heating Up (In A Good Way)

It’s likely that Xeon Phi is still quite behind GPU systems when it comes to deep learning, in both the performance and software support dimensions. However, if Nvidia’s DGX-1 can barely beat 21 Xeon Phi servers, then that also means the Xeon Phi chips are quite competitive price-wise.

A DGX-1 currently costs $129,000, whereas a single Xeon Phi server chip costs anywhere from $2,000 to $6,000. Even when using 21 of Intel’s highest-end Xeon Phi chips, that system still seems to match the Nvidia DGX-1 on price.
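
The price comparison is simple arithmetic on the figures above. Here is a minimal sketch, assuming the quoted prices cover the chips alone. The helper name `cluster_chip_cost` is hypothetical, and the rest of each server (chassis, memory, networking) is not counted because the article gives no figures for it.

```python
DGX1_PRICE_USD = 129_000                   # DGX-1 price quoted in the article
XEON_PHI_PRICE_RANGE_USD = (2_000, 6_000)  # per-chip price range quoted in the article
SERVERS_TO_MATCH_DGX1 = 21                 # from Nvidia's own performance comparison


def cluster_chip_cost(chips: int, price_per_chip: int) -> int:
    """Total cost of the chips only, in US dollars."""
    return chips * price_per_chip


# Cost of 21 chips at the low and high ends of the quoted price range.
low, high = (
    cluster_chip_cost(SERVERS_TO_MATCH_DGX1, price)
    for price in XEON_PHI_PRICE_RANGE_USD
)
print(f"21 Xeon Phi chips: ${low:,} to ${high:,}")
print(f"DGX-1:             ${DGX1_PRICE_USD:,}")
```

At the top of the range, 21 chips come to $126,000, just under the DGX-1's $129,000. At the bottom of the range the chips cost about a third of that.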

Although the fight between Nvidia and Intel is likely to ramp up significantly over the next few years, what’s going to be even more interesting is whether ASIC-like chips such as Google’s TPU can actually be the ones to win the day.

Intel is already using more “general purpose” cores for its Phi coprocessor, and Nvidia still has to think about optimizing its GPUs for gaming. That means the two companies may be unable to follow the more extreme optimization paths of custom deep learning chips. However, software support will also play a big role in the adoption of deep learning chips, and Nvidia arguably has the strongest software support right now.

Lucian Armasu is a Contributing Writer for Tom's Hardware US. He covers software news and the issues surrounding privacy and security.

-

bit_user

Thanks for the article. I appreciate your coverage of Deep Learning, Lucian, and generally find your articles to be both well-written and accessible. A couple minor points, though...

First, please try to clarify which Xeon Phi product you mean. I wish Intel had called the new chip Xeon Theta, or something, but they didn't. Xeon Phi names a product line, which now has 2 generations. The old generation is code named Knights Corner, and the new one is Knights Landing. Intel is referring to the new one, while Nvidia is probably referring to the old one, as Knights Landing isn't yet publicly available.

four-year-old Kepler-based Titan X

Titan X is neither Kepler-based nor four years old.

Intel's paper references a "Titan" supercomputer, containing 32 K20s, which are Kepler-based. The paper also mentions K80 GPUs (also Kepler-based). They don't appear to compare themselves to a Titan-series graphics card at any point (not least because it's a consumer product, and much cheaper than Nvidia's Tesla GPUs).

It added that a single one of its latest DGX-1 “supercomputer in a box” is slightly faster than 21 Xeon Phi

Which generation? It might be the same thing Intel did - comparing their latest against 4-year-old hardware. Given that the new Xeon Phi generation hasn't yet launched, this seems likely.

It’s likely that Xeon Phi is still quite behind GPU systems when it comes to deep learning, in both the performance and software support dimensions.

The new Xeon Phi did take one significant step backward, which is dropping fast fp16 support, something they even added to their Gen9 HD graphics GPU. Knights Corner had it, but it doesn't appear to exist in AVX-512 (which is what Knights Landing uses).

Anyway, one way to see through the smokescreen of each company's PR is to simply look at the specs. Both Knights Landing and the GP100 have 16 GB of HBM2-class memory (although Knights Landing has an additional 6-channel DDR4 interface). The rated floating-point performance is 3/6 TFLOPS for Knights Landing and 4.7/9.3 TFLOPS for the GP100 (double/single-precision). So, I'm expecting something on the order of a 2-3x advantage for Nvidia (partly due to their superior fp16). But maybe Intel can close that gap, if they can harness their strong integer performance on the problem.

One thing is for sure: this beef isn't new, and it's not going away anytime soon. Intel has been comparing Xeon Phi to Tesla GPUs since Knights Corner launched, and Nvidia has been making counter-claims, thereafter.

-

I love it when the mud slinging starts. Means lower prices and higher performance. Ahhh, the beauty of capitalism. Why can't more people see it? Competition is good, folks.

-

ZolaIII

Both of them are just bitching around to sell their bad, overpriced, ineffective products!

Atoms are uncompetitive even in the mobile space, which is why Intel retreated from that market. ARM Cortex A72/A73s are much better, and the A32 is the most efficient Cortex-A ever; ARM even pits it against its latest M-series microcontroller cores on efficiency. They are also available as POP IP, ready-to-print designs. Still, general-purpose cores are not suitable for deep learning because of their design. The only place where Intel's Phis have an architectural advantage over any single on-die computational piece of hardware to date is in executing many tasks simultaneously, simply because there are so many cores. It is possible that Intel used highly optimized math libs for the benchmarking, but it still isn't a game changer.

GPUs also aren't the best architectural match for deep learning, because they are graphics processors in the first place. I could go along with the idea that they could be used as commercial, already-included hardware, but that simply isn't the case, because commercial consumer ones come with purposely crippled computing performance. Although I would like to see how the latest ARM Mali generation performs.

DSPs are, for the time being, the most suitable for the task. New ones from Synopsys, Tensilica, and CEVA (possibly others that I am not aware of) that are redesigned for deep learning calculations are much more efficient (5-8 times), cheaper to produce, available for custom implementations (IP, POP IP blocks), and come with ready-to-use, non-proprietary, open-source visual computing and deep learning software. They are also reprogrammable. ASICs simply cannot fit the purpose, because they are basically hard-printed functions with no flexibility whatsoever; even though they are the most cost/performance effective, they still aren't suited to something like deep learning (because tomorrow you may need to perform other functions or altered algorithms for the same or another purpose).

FPGAs, especially new ones with a couple of general-purpose cores and DSPs (you can throw in a basic GPU if you need it) and huge programmable gate arrays, are best suited as general all-around accelerators, covering visual processing, deep learning, and simultaneous multiprocessing. The problem with them is that they are neither cheap (not many designs, if any, are offered as IP) nor fast/easy to reprogram.

Just a grain of salt from me, without going into details, to make some sense of this hollow, empty discussion about CPUs/GPUs and deep learning.

-

bit_user

18449756 said: May I ask, what is "deep learning"?

Basically, using big neural networks capable of higher-level abstractions. Not human-level consciousness or structured thought, but certainly human-level pattern recognition and classification.

It was facilitated mainly by GPUs and big data. The industry is already starting to move past GPUs, into specialized ASICs and neural processing engines, however. Big data is a significant part of it, given that it takes a huge amount of data to effectively train a big neural network.

-

bit_user

18452103 said: Both of them are just bitching around to sell their bad, overpriced, ineffective products!

They certainly aren't bad, if you need the flexibility. Intel's is a general-purpose x86-64 chip that can run off-the-shelf OS and software (so, yeah, it can run Crysis).

In contrast, Nvidia's GPUs and Tesla accelerators (technically, their GP100 isn't a GPU, since it lacks any graphics-specific engines) must be programmed via CUDA, and are applicable to only a narrower set of tasks.

18452103 said: ARM Cortex A72/A73s are much better

Your error is extrapolating from mobile and old-generation Atom cores to HPC and the new Atom cores. These have 4 threads and two 512-bit vector engines per core. With so many cores, SMT is important for hiding memory access latency, yet A72/A73 have just one thread per core. Regarding vector arithmetic, ARMv8 has only 128-bit vector instructions, and those cores probably have just one vector unit each. Also, I don't know how many issue slots the Knights Landing cores have, but A72 is 3-way, while A73 is only 2-way.

I can't comment much on FPGAs, but they won't have 16 GB of HBM2 memory. Deep learning is very sensitive to memory bandwidth. And if you need full floating-point, I also question whether you can fit as many units as either the GP100 or Knights Landing pack.

-

Gzerble

Deep learning is loosely based on neural networks; it's an iterative method of stochastic optimization. These have achieved some very good results in things computers are traditionally very bad at by finding a "cheap" solution that is close enough to accurate (object recognition being a clear example; the latest software of the Kinect is obviously based on this). This can be abstracted to plenty of single-precision mathematical operations over a decent chunk of memory, a domain in which GPUs excel. Caffe, the software they are using, is the most popular deep learning software out there. It has been optimized to work very well with CUDA, which is why Nvidia cards are so very popular with the deep learning community.

Now, the Xeon Phi has a lot working for it; Intel are about to release a 72 core behemoth, with each core capable of four threads, and AVX512 operations which allow insane parallelization over what will now be extremely high performance memory. Caffe is obviously not optimized for this architecture yet, but it is obvious that Intel have put a lot of thought into this market, and it seems that the Xeon Phi may offer a higher throughput per watt.

As for people thinking that ARM chips are suited to these kinds of workloads: their memory controller is too weak, they don't have the right instruction sets for this kind of work, and you need an entire platform for this kind of work - and ARM's weakness is that each platform is custom built, rather than having a standardized offer which can promise long term support and service. ARM servers are not for these kinds of applications.

-

FUNANDJAM

I like the part where a company that does shady things gets mad at another company for pulling one on them.

-

bit_user

18453185 said: Now, the Xeon Phi has a lot working for it; Intel are about to release a 72 core behemoth, with each core capable of four threads, and AVX512 operations which allow insane parallelization over what will now be extremely high performance memory.

I might be mistaken, but I think Nvidia's GP100 has 224 cores, each with one 512-bit vector engine. Unlike Knights Landing, I think these are in-order cores. Traditionally, GPU threads weren't as closely bound to cores as in CPUs, but I need to refresh myself on Maxwell/Pascal.

18453185 said: Caffe is obviously not optimized for this architecture yet

Well, there is an OpenCL fork of Caffe, but their whitepaper (see article, for link) says they used an internal, development version of Caffe. I'm going to speculate that it's not using the OpenCL backend.

18453185 said: Xeon Phi may offer a higher throughput per watt.

I doubt that. It has the baggage of 6-channel DDR4, it lacks meaningful fp16 support, has lower peak FLOPS throughput, and its cores are more optimized for general-purpose software. I'm not a hater, though. Just being realistic.

Machine learning doesn't play well on the advantages Knights Landing has over a GPU, as it mostly boils down to vast numbers of dot-products. It's quite a good fit for GPUs, really. Even better than bitcoin mining, I'd say.

18453185 said: As for people thinking that ARM chips are suited to these kinds of workloads: their memory controller is too weak, they don't have the right instruction sets for this kind of work, and you need an entire platform for this kind of work - and ARM's weakness is that each platform is custom built, rather than having a standardized offer which can promise long term support and service. ARM servers are not for these kinds of applications.

There are a few chips with comparable numbers of ARM cores, and scaled-up memory subsystems. But I'm not aware of any with HBM2 or HMC. Nor do they have extra-wide vector pipelines (I don't even know if ARM would let you do that, and still call it an ARM core).

I see Knights Landing as a response not only to the threat from GPUs, but also from the upcoming 50-100 core ARM chips. Already, we can say that it won't kill off the GPUs. However, it might grab a big chunk of the market targeted by the many-core ARMs.

18453644 said: I like the part where a company that does shady things gets mad at another company for pulling one on them.

Yeah, definitely amusing. What we need is some good, independent benchmarks. Especially for Capitalism to work as well as Andy Chow would like to believe it does. Not to knock capitalism, but you can't really point to this he-said/she-said flurry of PR-driven misinformation as any kind of exemplar.

-

Zerstorer1

This is all classical computing, which is fruitless for deep learning. You need quantum computing to build these neural nets. Why do you think Google, NASA, NSA, and Apple are using D-Wave quantum computers that scale vastly for real deep learning, which is actually another name for artificial intelligence?