
Our HDR benchmarking uses Portrait Displays’ Calman software. To learn about our HDR testing, see our breakdown of how we test PC monitors.

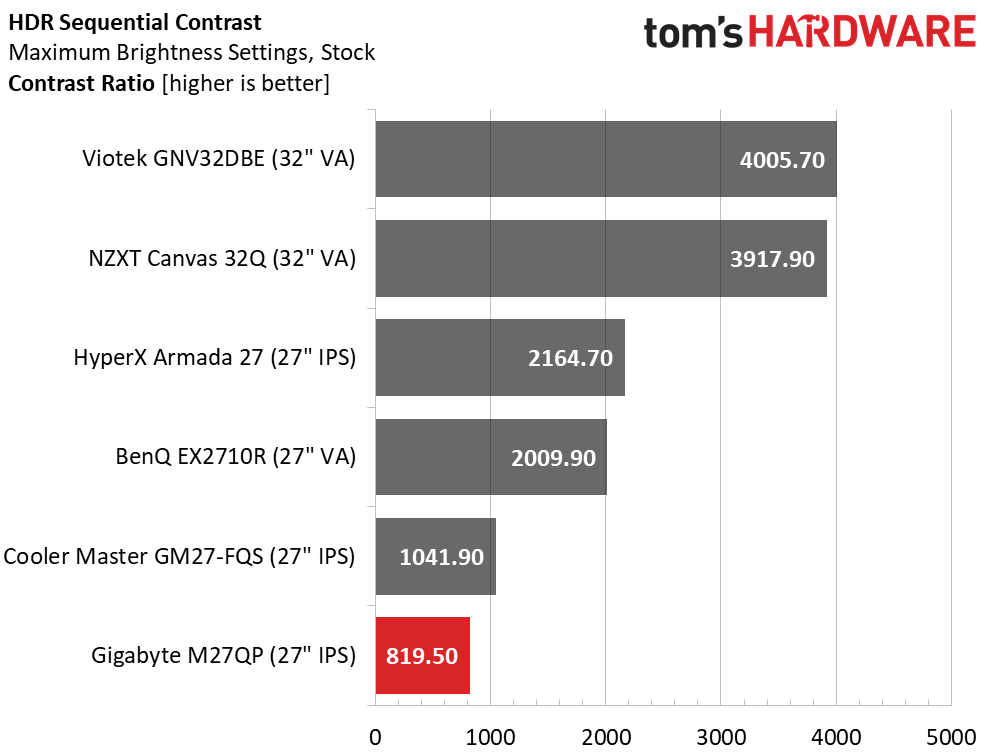

The M27QP carries a VESA DisplayHDR 400 certification, giving it decent, if not extreme, brightness for HDR content. I was disappointed not to see some sort of dynamic contrast option, like an edge-zone or field-dimming backlight.

HDR Brightness and Contrast

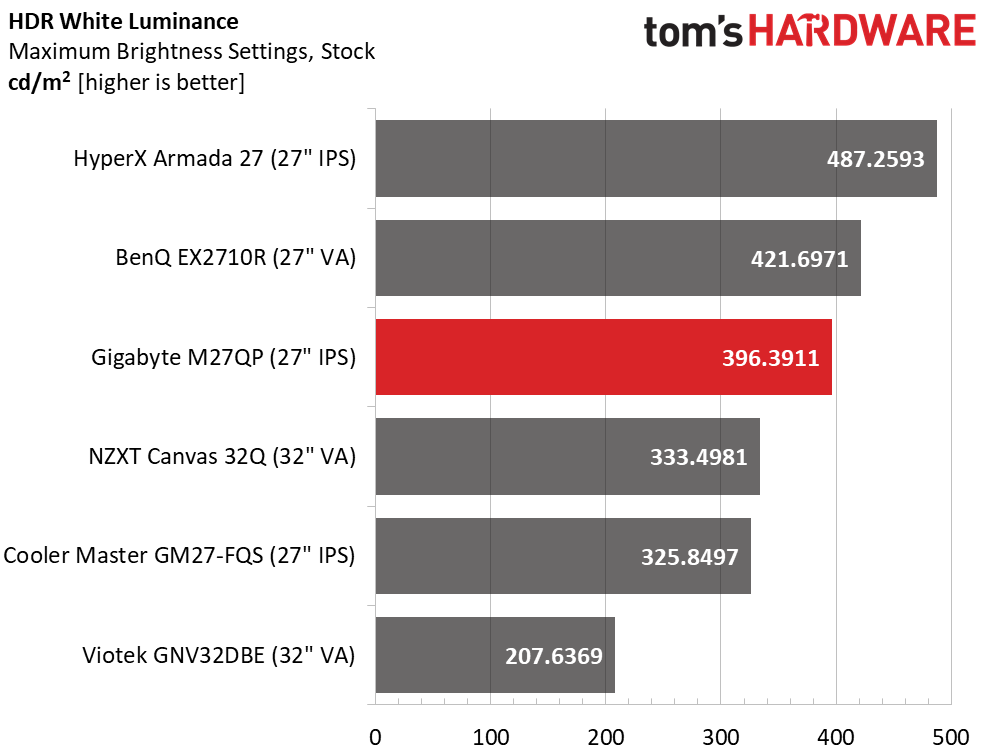

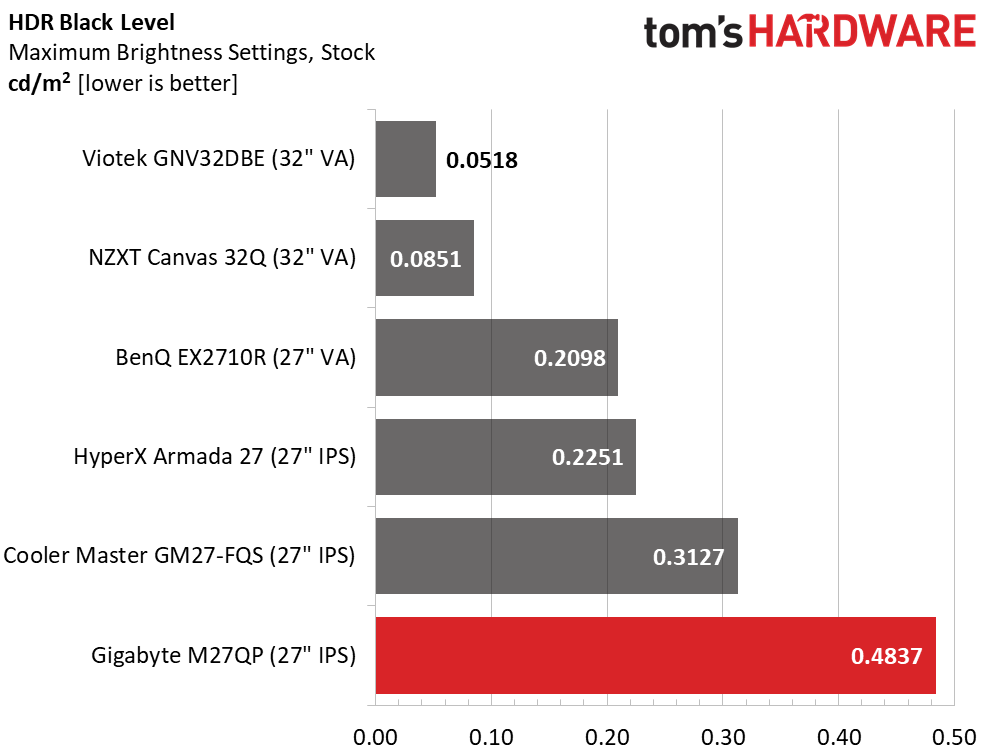

The M27QP comes up a tad short of 400 nits in my test, but no one will be able to spot a 3.6089-nit discrepancy. Black levels are about as high as they are in SDR mode, so contrast is only a tad better at 819.5:1. In practice, HDR content pops a little more because the backlight is locked at its maximum output and color saturation is high. But there is a lot of unused potential; even a field-dimming feature would make a positive impact.
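The arithmetic behind those figures can be sketched in a few lines of Python. This is only an illustration built from the numbers quoted in the paragraph above: it assumes the measured peak is the 400-nit target minus the stated shortfall, and that the contrast ratio is peak white divided by black level. The variable and function names are hypothetical, and the black level it prints is derived, not a measured value from the charts.

```python
# Figures quoted in the review text; names are illustrative only.
SPEC_PEAK_NITS = 400.0    # DisplayHDR 400 target
SHORTFALL_NITS = 3.6089   # stated gap between target and measurement
CONTRAST_RATIO = 819.5    # stated HDR contrast (peak white : black)


def implied_peak(spec: float, shortfall: float) -> float:
    """Peak luminance implied by the target minus the stated shortfall."""
    return spec - shortfall


def implied_black(peak: float, contrast: float) -> float:
    """Black level implied by contrast = peak / black."""
    return peak / contrast


peak = implied_peak(SPEC_PEAK_NITS, SHORTFALL_NITS)
black = implied_black(peak, CONTRAST_RATIO)

print(f"implied peak:  {peak:.4f} nits")
print(f"implied black: {black:.4f} nits")
```

A black level of roughly half a nit is why the paragraph calls for field dimming: lowering the denominator is the only way to raise contrast once the backlight is pinned at maximum.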

Grayscale, EOTF and Color

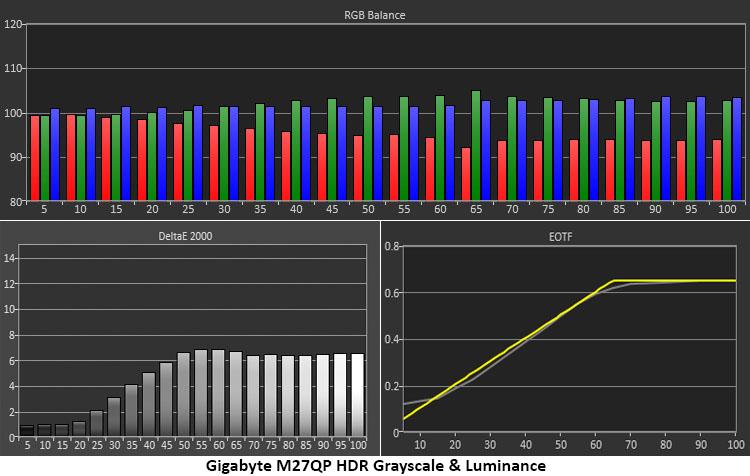

The M27QP’s overall HDR accuracy is good but not great. The settings are fixed, so you can’t calibrate the grayscale, which runs cool from 30 to 100%. Luminance tracking is accurate after the 15% step, with little deviation from the reference and a transition to tone-mapping at 65%. The darkest steps are rendered too brightly, robbing the image of depth. Better black levels would be welcome.
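For readers curious what the luminance reference looks like, here is a minimal sketch. It assumes the HDR signal uses the PQ curve (SMPTE ST 2084), which is the usual case for HDR10 but is not stated in the test description above. The function name `pq_eotf` and the choice of steps are illustrative; this is not the Calman workflow.

```python
# PQ (SMPTE ST 2084) constants, written as the exact fractions from the spec.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def pq_eotf(signal: float) -> float:
    """Map a normalized PQ signal (0.0 to 1.0) to reference luminance in nits."""
    if not 0.0 <= signal <= 1.0:
        raise ValueError("signal must be between 0.0 and 1.0")
    p = signal ** (1 / M2)
    return 10000.0 * (max(p - C1, 0.0) / (C2 - C3 * p)) ** (1 / M1)


# Steps mentioned in the text: 15%, 30%, 65% and 100%.
for step in (0.15, 0.30, 0.65, 1.00):
    print(f"{step:>4.0%} signal -> {pq_eotf(step):10.2f} nits (reference)")
```

Under that assumption, comparing the reference value at the 65% step with the panel's measured peak shows why tone-mapping has to begin around there: the reference keeps climbing toward 10,000 nits, while the display cannot.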

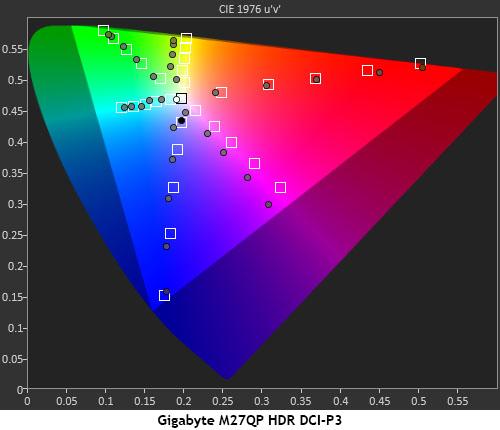

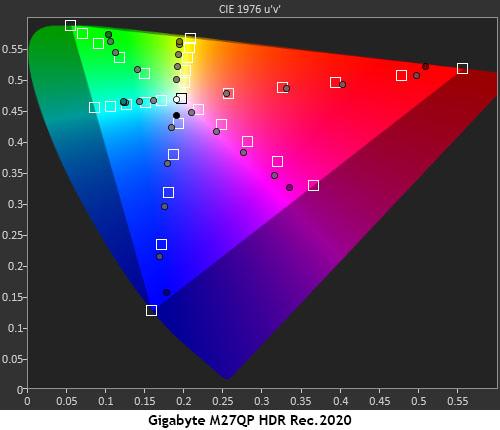

In the color tests, the M27QP does very well. Aside from slight hue errors in magenta and yellow, the measurements are nearly all on-target. Green is only a tad shy of full saturation, which is better than most wide-gamut displays can boast. When measured against Rec.2020, the M27QP tracks the saturation targets correctly until it runs out of color at around 95%. This means all HDR content will have its color accurately rendered, whether mastered to DCI-P3 or Rec.2020.


Christian Eberle is a Contributing Editor for Tom's Hardware US. He's a veteran reviewer of A/V equipment, specializing in monitors. Christian began his obsession with tech when he built his first PC in 1991, a 286 running DOS 3.0 at a blazing 12MHz. In 2006, he undertook training from the Imaging Science Foundation in video calibration and testing and thus started a passion for precise imaging that persists to this day. He is also a professional musician with a degree from the New England Conservatory as a classical bassoonist, which he used to good effect as a performer with the West Point Army Band from 1987 to 2013. He enjoys watching movies and listening to high-end audio in his custom-built home theater and can be seen riding trails near his home on a race-ready ICE VTX recumbent trike. Christian enjoys the endless summer in Florida, where he lives with his wife and Chihuahua and plays with orchestras around the state.


truerock: In 2012 I built a PC with an nVidia GeForce GTX 690 video card.

USB 3.0, PCIe 3.0, SATA III, DDR3 memory, 120GB SSD, etc.

It runs a 27" monitor at 1080p, 8-bits, 60Hz.

I can't believe PCs have advanced so little in 10 and 1/2 years.

I guess when PCs have moved up to USB 4 and PCIe 4, DDR 4... I'll be ready to upgrade.

If it will run a 4k, 10-bits, 120Hz monitor (80 Gb/s).

I guess that will be in 2024? 2025? I hope I don't have to wait until 2026.

SyCoREAPER (replying to truerock):

What on earth are you rambling about? None of what you said has any relevance to this monitor.

Can I call you a cab to take you home?

truerock (replying to SyCoREAPER):

Fair point... I guess it was off topic.

My "ramble" was trying to say, "170Hz QHD... so what". I was trying to put that in context.

SyCoREAPER (replying to truerock):

Makes more sense.

The 170Hz is the big deal. It wasn't until fairly recently that monitors moved above 144Hz, which was a big deal. IIRC there are monitors that go above that, but you are getting into build-multiple-computers-for-the-price territory.

As for resolutions, 4K isn't really that prevalent, at least not at high refresh rates and affordable prices, mainly because most cards until this gen could barely hit triple-digit frame rates at 1440p in AAA titles.

truerock (replying to SyCoREAPER):

I absolutely agree with you.

I'm expressing an emotional impatience with how slowly PC technology has advanced over the last 10 years.

I'm kind of the opposite of a lot of people who want future technology to support old technology standards.

I think Apple is good at getting rid of the old and moving more quickly to new technology.

I occasionally will attach a 4k Samsung TV to my 10-year-old PC just to get a feel of the experience. It is very cool. Unfortunately, on my 10-year-old PC 4k-video runs at 30Hz, 8-bit.

Oh... I just rambled aimlessly again... my bad.

SyCoREAPER (replying to truerock):

Moore's Law is dead, has been for a while unfortunately.

Wimpers (replying to SyCoREAPER):

What did you expect? We can't keep cramming more and more transistors into the same space and/or ramping up the frequency; there are physical constraints on just about everything.

We haven't reached them yet when it comes to storage capacity, and perhaps memory and network or bus speeds. For a lot of other things, parallelisation is the only option, but it isn't possible everywhere, and it sometimes requires a redesign and adds some overhead.

SyCoREAPER (replying to Wimpers):

Congrats?

I know that. I was explaining to OP why he feels that PC components haven't evolved as far as he expected by now.