Early Verdict

Until a formidable x86 competitor emerges, Intel’s Scalable Processor series will remain the lineup to beat. Overall, it delivers in more areas than we expected, and a host of new networking and storage options provides nearly everything on a single platform.

Pros

- Strong multi-threaded and single-threaded performance
- Memory throughput and capacity
- Hardware accelerators

Cons

- Price
- Segmentation


Intel Redefines Xeon

Intel dominates the data center. Although AMD's EPYC is on the horizon, for now Intel primarily competes with itself. It has to give its customers a reason to upgrade, and adding more cores typically helps. Unfortunately, the weight of legacy interconnects has slowed progress on that front. Clearly, architectural changes need to happen, even if there are growing pains to contend with.

That's where we find Intel and its Xeon Scalable Platform Family. Look beyond the obviously re-conceptualized branding for a moment. The real improvements come from a reworked cache topology and, perhaps more important, a new mesh interconnect that increases processor scalability and performance. These alterations, some of which surfaced in the Core i9-7900X, set the stage for a more significant speed-up than we expected from the Skylake architecture.

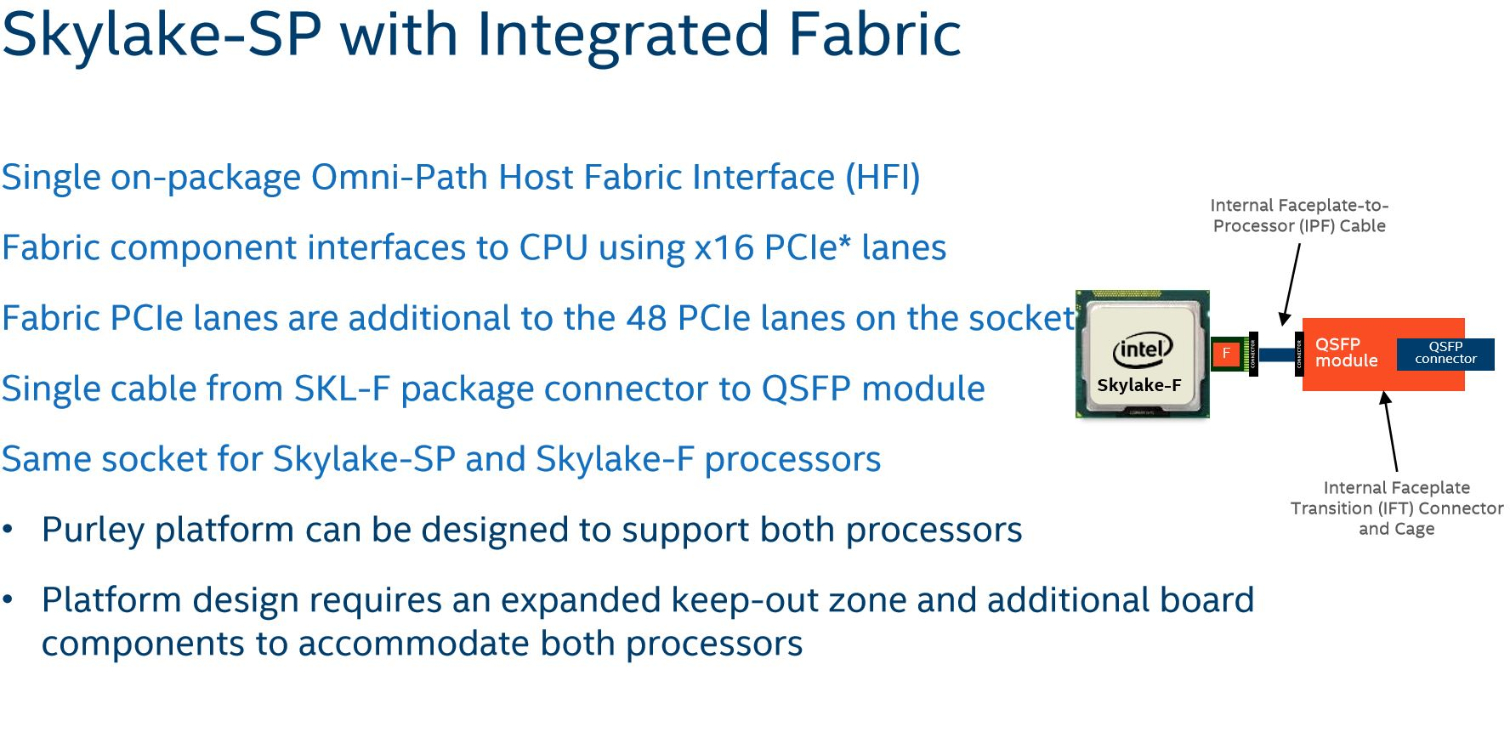

Perhaps you already know about the support for quicker DDR4 memory modules, a lot more PCIe connectivity, and AVX-512 extensions. There are also expanded QuickAssist Technology capabilities. In an effort to create new opportunities, Intel adds other features that go beyond what you'd expect from a typical host processor. It builds a 100 Gb/s Omni-Path networking fabric directly onto the Xeon package, which is complemented by a quad-10Gb Ethernet controller on the platform controller hub. We're even told to expect integrated FPGAs in the future.

All of this functionality leads to an overwhelming product stack of 58 new Xeon processors. If that isn't enough differentiation for you, enjoy choosing between seven PCH options.

The Xeon Platinum 8176 Scalable Processor

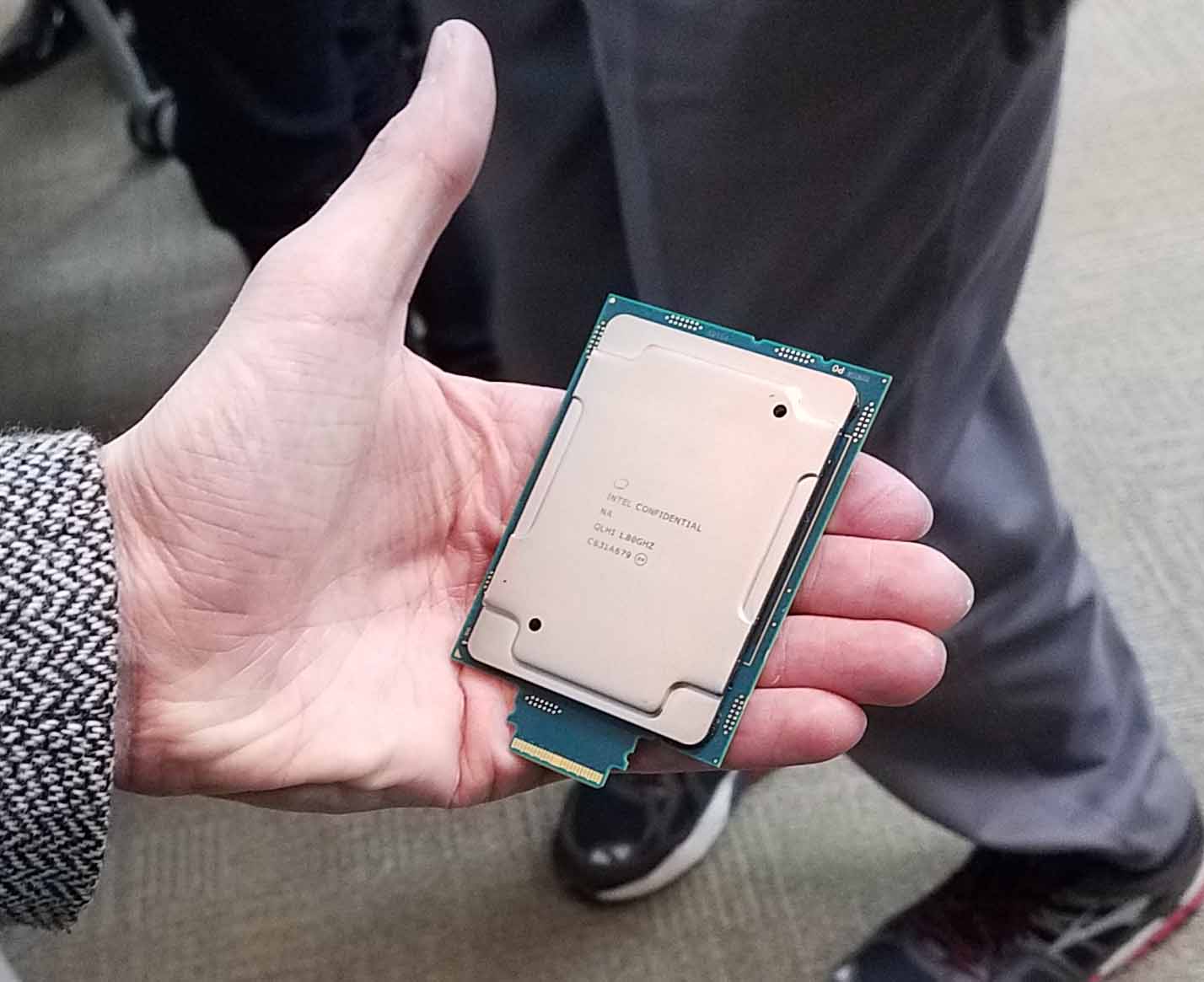

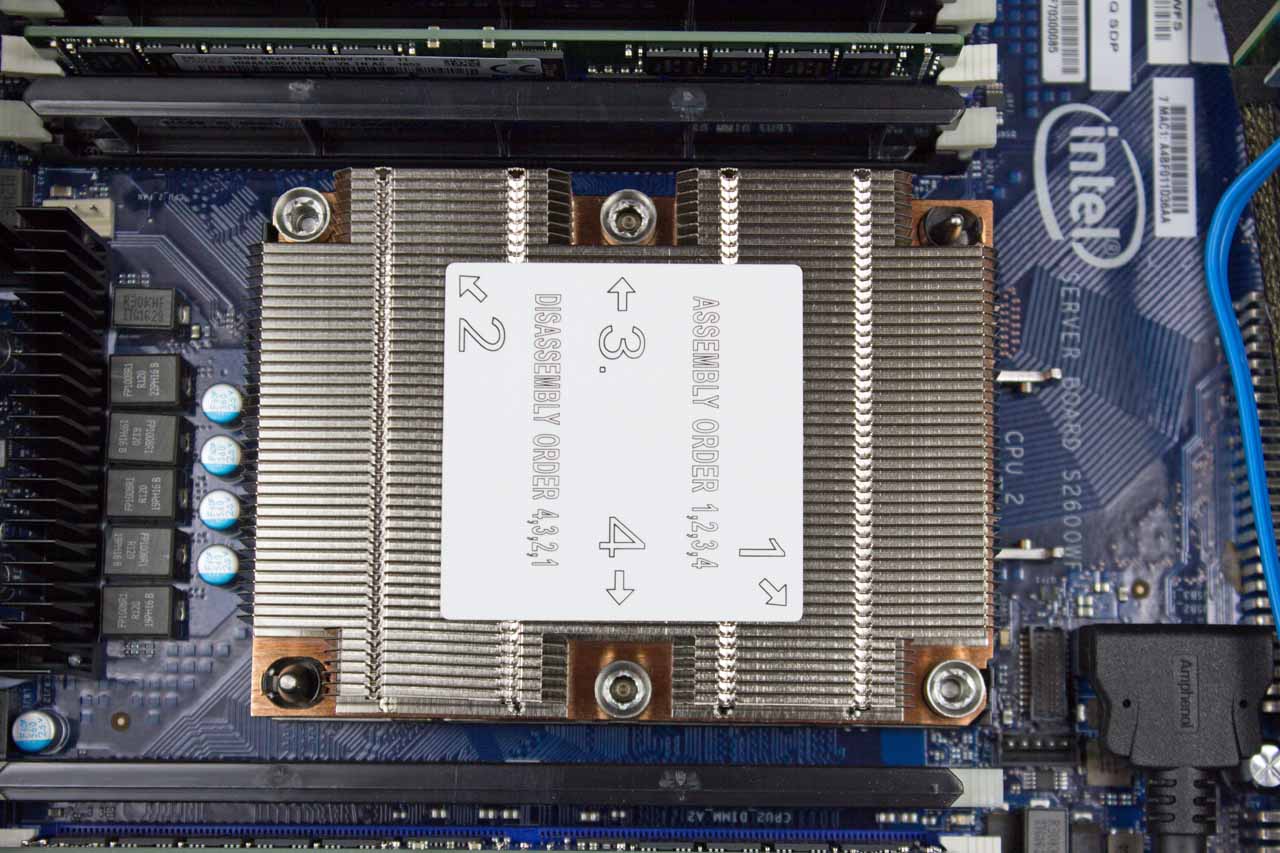

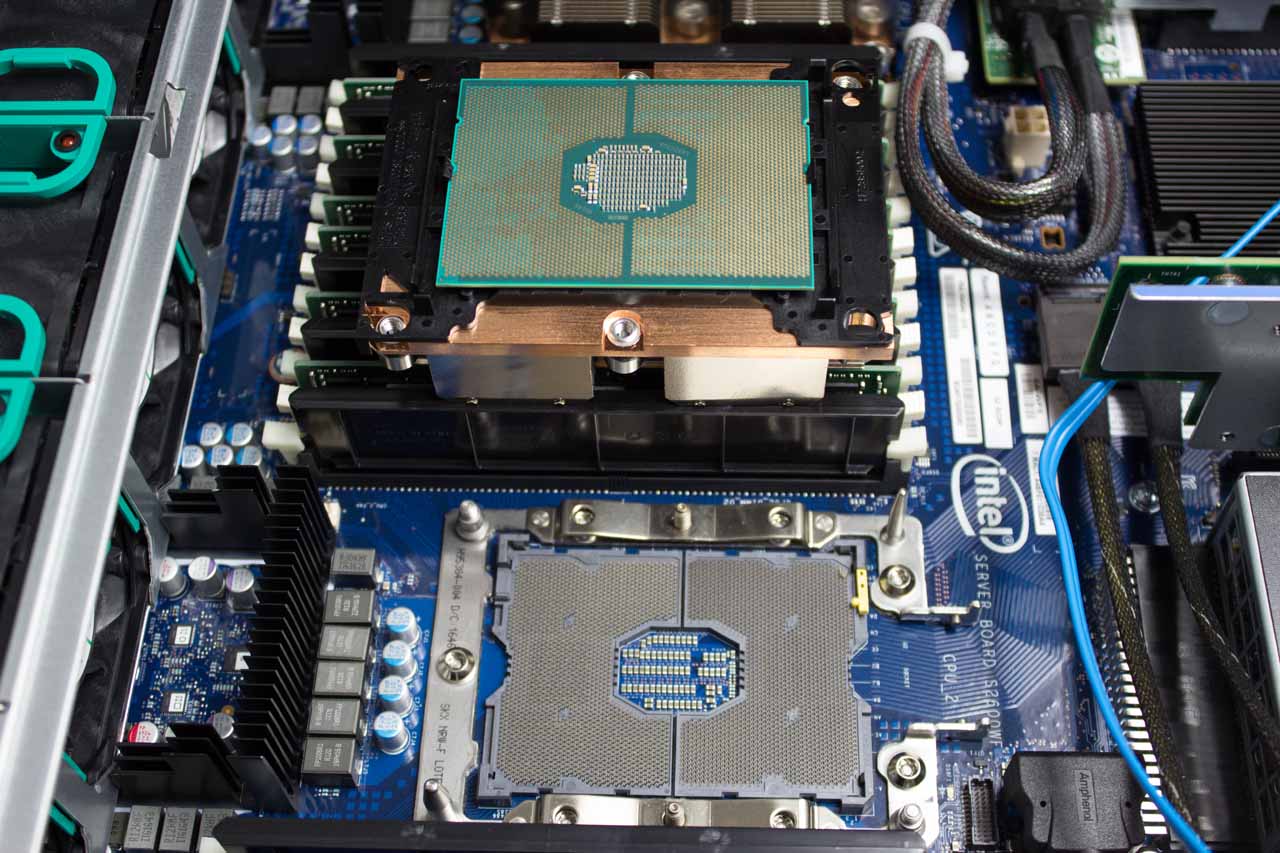

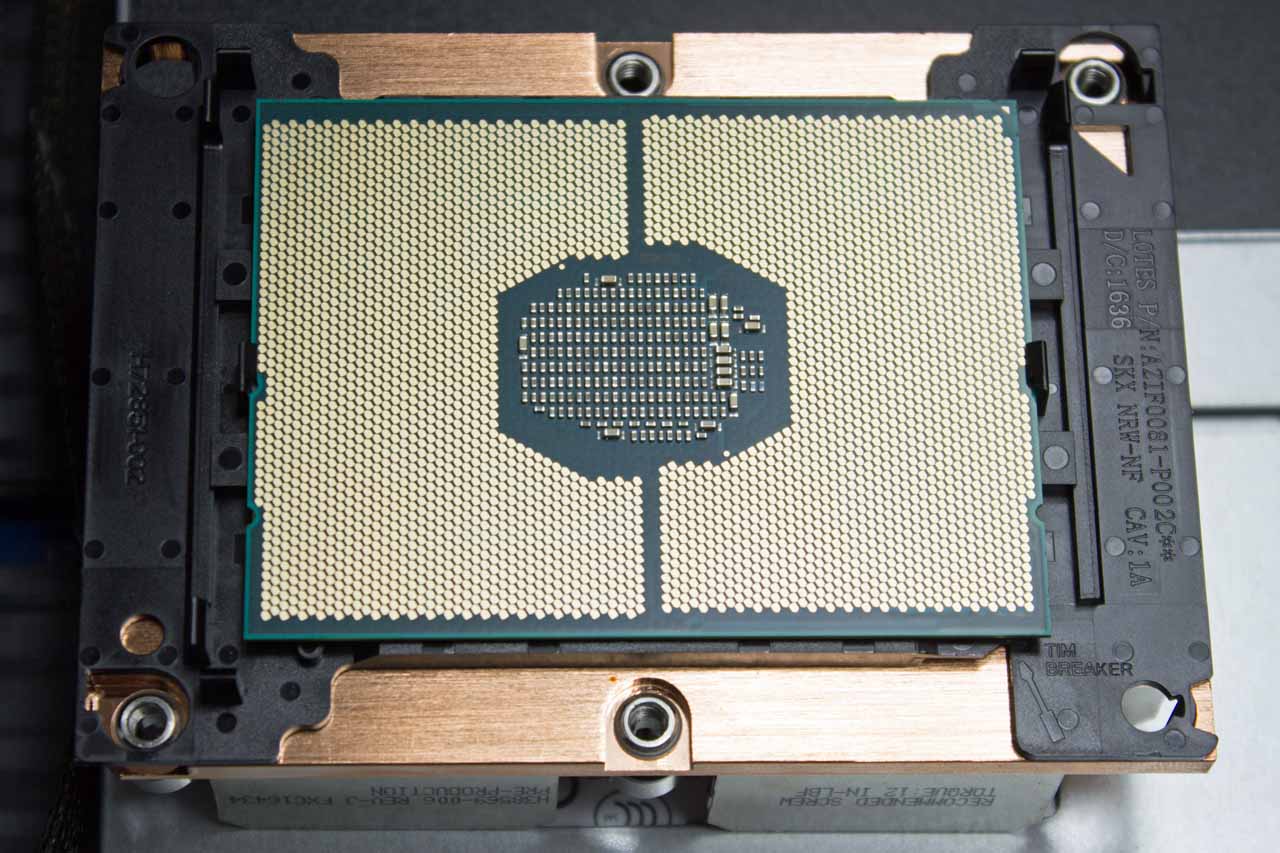

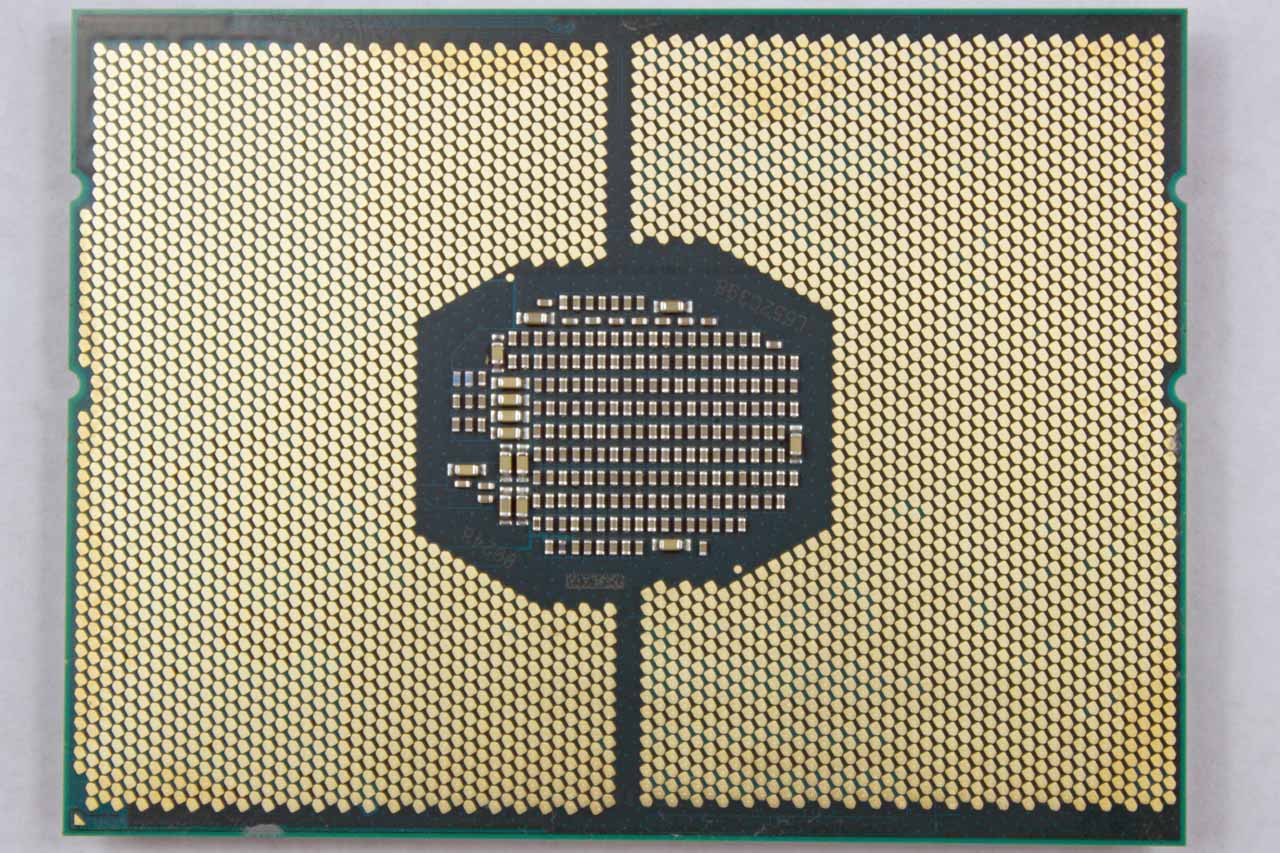

The Platinum 8176 processor is a big step forward. Here, we see it flanked by Intel's desktop Core i7 and HEDT Core i9 CPUs. Although Intel has dropped the socket latching mechanism it previously employed, these chips still use a Land Grid Array (LGA) interface. Interestingly, you first snap the processor into the heat sink, where two plastic clamps hold it tight. A pair of guide pins on the socket ensures correct heat sink and processor placement. Then, once the assembly is installed, you secure the heat sink with four Torx screws. The processors drop into Socket P, otherwise known as LGA 3647, on platforms with Intel's C620-series Lewisburg platform controller hubs.

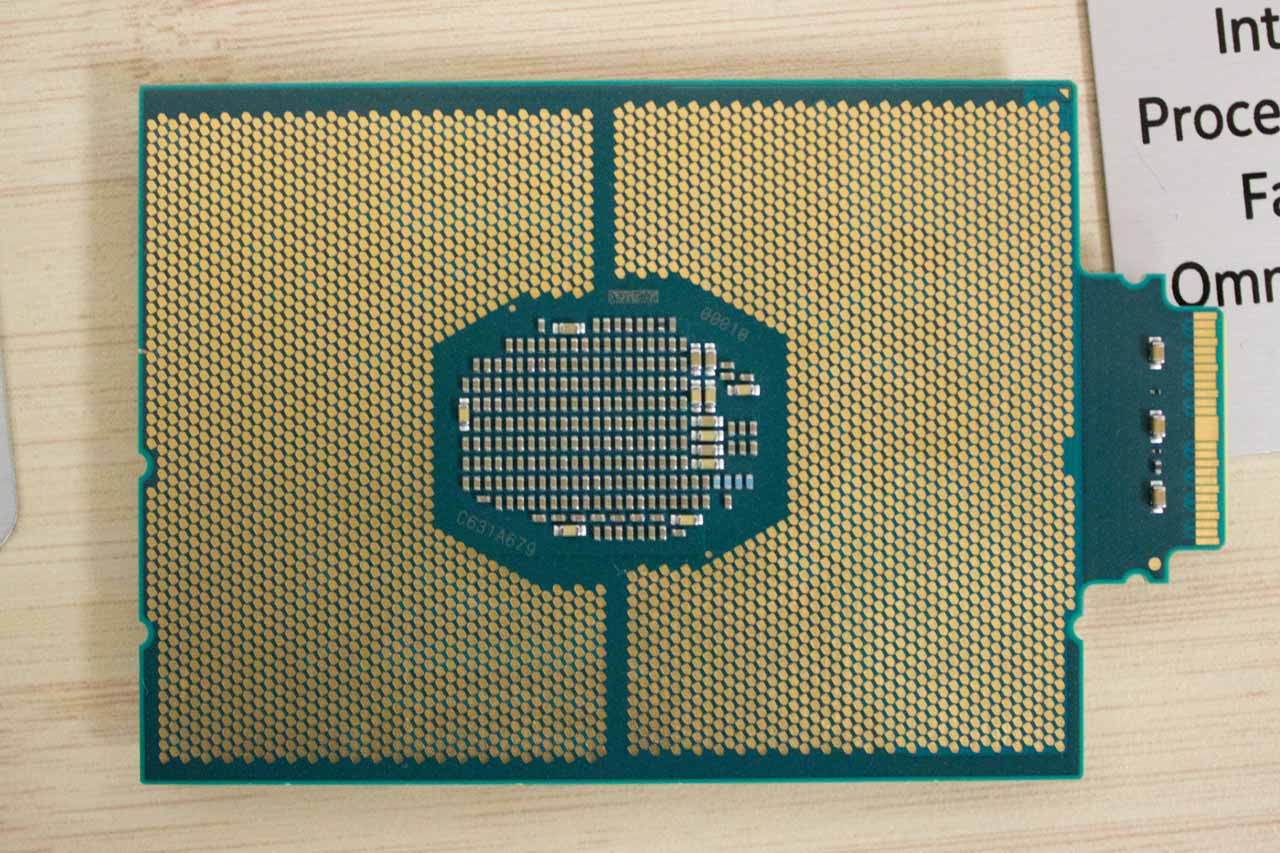

That massive expanse of 3647 pins, representing a huge jump from last gen's 2011-pin arrays, helps enable a host of fresh functionality. The socket mounting mechanism also has a large gap on one end to accommodate an Internal Faceplate-to-Processor cable that plugs into Omni-Path-enabled processors. Plugging networking cables into CPUs? Intel pioneered that with its Xeon Phi processors, which share the same socket design. We've included a picture of a Xeon processor with Omni-Path connectivity, and we can see the PCB extension that houses the Host Fabric Interface (HFI). This enables 100 Gb/s of network throughput through a dedicated on-chip PCIe 3.0 x16 connection. You're going to have to pay dearly for it; we just don't know how much yet.
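As a rough check on that dedicated link, here is a back-of-the-envelope Python sketch of PCIe 3.0 throughput. It assumes the standard PCIe 3.0 rate of 8 GT/s per lane with 128b/130b line encoding and ignores packet and flow-control overhead, so real-world throughput lands somewhat lower; the function name is our own.

```python
# Back-of-the-envelope PCIe 3.0 link throughput, per direction.
# Assumes 8 GT/s per lane and 128b/130b line encoding; ignores
# transaction-layer and flow-control overhead, so treat it as an upper bound.

PCIE3_GT_PER_LANE = 8.0          # GT/s per lane
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line code


def pcie3_gbps(lanes: int) -> float:
    """Return the usable bit rate in Gb/s for a PCIe 3.0 link of the given width."""
    return lanes * PCIE3_GT_PER_LANE * ENCODING_EFFICIENCY


if __name__ == "__main__":
    x16 = pcie3_gbps(16)
    print(f"PCIe 3.0 x16: {x16:.2f} Gb/s per direction")
    print(f"Headroom over 100 Gb/s Omni-Path: {x16 - 100:.2f} Gb/s")
```

An x16 link works out to roughly 126 Gb/s per direction, modest headroom above the fabric's 100 Gb/s; an x8 link, at roughly 63 Gb/s, would fall short.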

Even without Omni-Path, expect many of these Xeons to cost an arm and a leg. The 28C/56T Platinum 8176 sells for no less than $8719, and if you want the maximum per-core performance, get ready to drop $13,011 for each Platinum 8180M. Most of these models will find homes in OEM systems, so there are plenty of deployment options; the platform supports configurations from two to eight or more sockets.
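For a rough sense of scale, the short Python sketch below divides list price by core count. It uses the two list prices above and assumes the Platinum 8180M, like the 8176, has 28 cores (a spec-sheet figure not stated here). It ignores the volume discounts large customers typically negotiate.

```python
# Rough per-core list price; ignores volume discounts and platform costs.
LIST_PRICES = {
    # model: (list price in USD, physical cores); 8180M core count assumed 28
    "Platinum 8176": (8719, 28),
    "Platinum 8180M": (13011, 28),
}


def price_per_core(price_usd: float, cores: int) -> float:
    """Return the list price divided evenly across physical cores."""
    return price_usd / cores


if __name__ == "__main__":
    for model, (price, cores) in LIST_PRICES.items():
        print(f"{model}: ${price_per_core(price, cores):,.2f} per core")
```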


Specifications

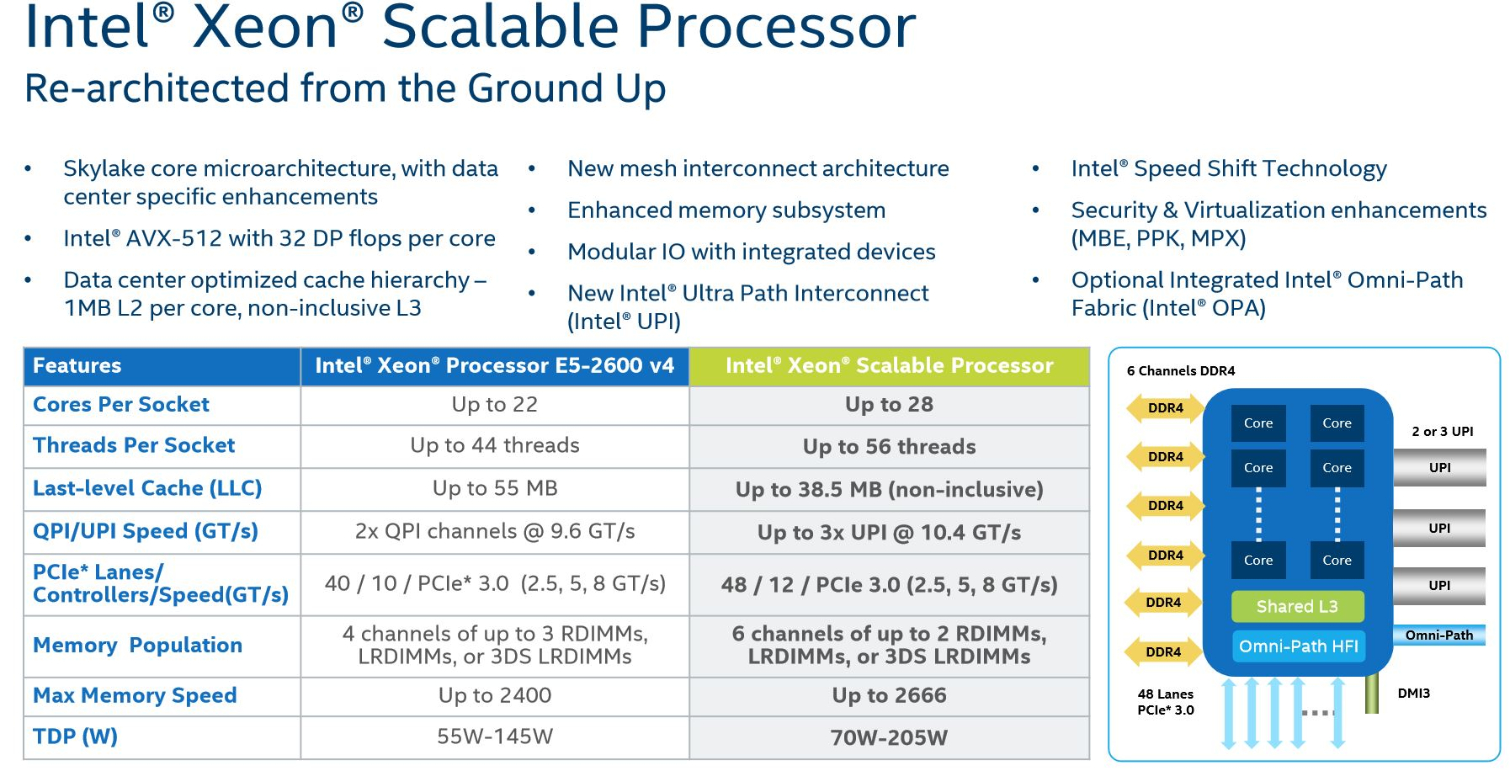

The Platinum family's higher core counts set it apart from the previous-generation Xeon E5-2600 v4 lineup, based on Broadwell-EP. Intel went from a 22-core ceiling to 28, and with Hyper-Threading that means 56 schedulable threads per socket instead of 44. As with the Core i9-7900X, memory support goes from DDR4-2400 to DDR4-2666. More important, and unlike Skylake-X, we're now working with as many as six memory channels rather than four. This facilitates up to 768GB of DDR4 per socket for the standard models and up to 1.5TB for the enhanced "M" models. Combined with the faster data rate, the six-channel design provides a theoretical bandwidth improvement of more than 60%, a necessary upgrade to feed the additional cores.
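The >60% figure falls out of simple peak-bandwidth arithmetic: channels × transfer rate × 8 bytes per 64-bit transfer. The Python sketch below compares four channels of DDR4-2400 with six of DDR4-2666 (treated as 2666 MT/s). It also shows how the capacity ceilings could arise, assuming two DIMMs per channel with 64GB modules for standard SKUs and 128GB modules for "M" SKUs; those module sizes are our assumption. Sustained bandwidth in practice is lower than these theoretical peaks.

```python
# Theoretical peak DRAM bandwidth and capacity per socket.
# Assumptions: 64-bit (8-byte) channels, 2 DIMMs per channel,
# 64GB DIMMs for standard SKUs and 128GB DIMMs for "M" SKUs.

BYTES_PER_TRANSFER = 8  # one 64-bit DDR4 channel


def peak_bandwidth_gbs(channels: int, mega_transfers: int) -> float:
    """Return theoretical peak bandwidth in decimal GB/s."""
    return channels * mega_transfers * BYTES_PER_TRANSFER / 1000


def max_capacity_gb(channels: int, dimms_per_channel: int, dimm_gb: int) -> int:
    """Return the maximum installable memory in GB."""
    return channels * dimms_per_channel * dimm_gb


if __name__ == "__main__":
    old = peak_bandwidth_gbs(4, 2400)  # Xeon E5-2600 v4: 4 x DDR4-2400
    new = peak_bandwidth_gbs(6, 2666)  # Xeon Scalable: 6 x DDR4-2666
    print(f"Old: {old:.1f} GB/s, new: {new:.1f} GB/s, uplift: {new / old - 1:.0%}")
    print(f"Standard: {max_capacity_gb(6, 2, 64)} GB, M: {max_capacity_gb(6, 2, 128)} GB")
```

This gives about 76.8 GB/s versus 128 GB/s, a theoretical uplift of roughly 67%, and 768GB versus 1,536GB (1.5TB) of capacity.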

Intel scales back on last-level cache: the E5-2600 family came with up to 55MB, while the Platinum series tops out at 38.5MB. However, it quadruples the L2 cache, ramping up from 256KB per core to 1MB. Purportedly, that helps in a majority of data center-oriented workloads, which tend to prize locality.
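To put the cache trade-off in per-core terms, the Python sketch below divides each flagship's last-level cache evenly across its cores (22 for the top E5-2600 v4, 28 for the Platinum 8176) and adds the private L2. The even split is a simplification for comparison only; it doesn't model how data actually moves between cache levels.

```python
# Per-core and total L2 + LLC capacity for the top SKU of each generation.
# Assumes the LLC is split evenly across cores (a simplification).
from dataclasses import dataclass


@dataclass
class CacheConfig:
    cores: int
    l2_mb_per_core: float
    llc_mb_total: float

    def llc_mb_per_core(self) -> float:
        return self.llc_mb_total / self.cores

    def l2_plus_llc_per_core(self) -> float:
        return self.l2_mb_per_core + self.llc_mb_per_core()

    def l2_plus_llc_total(self) -> float:
        return self.cores * self.l2_mb_per_core + self.llc_mb_total


BROADWELL_EP = CacheConfig(cores=22, l2_mb_per_core=0.25, llc_mb_total=55.0)
SKYLAKE_SP = CacheConfig(cores=28, l2_mb_per_core=1.0, llc_mb_total=38.5)

if __name__ == "__main__":
    for name, cfg in (("E5-2600 v4", BROADWELL_EP), ("Platinum 8176", SKYLAKE_SP)):
        print(
            f"{name}: {cfg.llc_mb_per_core():.3f} MB LLC/core, "
            f"{cfg.l2_plus_llc_per_core():.3f} MB L2+LLC/core, "
            f"{cfg.l2_plus_llc_total():.1f} MB L2+LLC total"
        )
```

By this arithmetic, each core's share of LLC drops from 2.5MB to 1.375MB, but the chip's combined L2 + LLC pool grows from 60.5MB to 66.5MB, with a much larger share of it sitting in each core's private L2.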

| Base frequency (165W Platinum 8176) | Turbo frequency (GHz) by active cores | 1-2 | 3-4 | 5-8 | 9-12 | 13-16 | 17-20 | 21-24 | 25-28 |
|---|---|---|---|---|---|---|---|---|---|
| 2.1 GHz (non-AVX) | Non-AVX Turbo | 3.8 | 3.6 | 3.5 | 3.5 | 3.4 | 3.1 | 2.9 | 2.8 |
| 1.7 GHz (AVX 2.0) | AVX 2.0 Turbo | 3.6 | 3.4 | 3.3 | 3.3 | 2.9 | 2.7 | 2.5 | 2.4 |
| 1.3 GHz (AVX-512) | AVX-512 Turbo | 3.5 | 3.3 | 3.0 | 2.6 | 2.3 | 2.1 | 2.0 | 1.9 |

Like most Intel processors, the 165W Platinum 8176 has a range of Turbo Boost frequencies dictated by the number of active cores and the composition of the instruction stream. Turbo Boost raises clocks above base frequency when fewer cores are active, and it applies separate, lower bins to "denser" AVX 2.0 and AVX-512 workloads. Each instruction class also has its own base frequency. This matrix gets complex, especially if you want to compare multiple SKUs, so we compiled the 8176's clock rates into the table above as an example.
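One way to read the table: the Turbo bin depends on both the number of active cores and the heaviest instruction class in use. The Python lookup below encodes the 8176 figures from the table. It is a sketch of how to consult the matrix, not a model of Intel's power-management logic, which also weighs power, current, and thermal headroom; the function and constant names are our own.

```python
# Lookup of Platinum 8176 maximum Turbo Boost bins (GHz) from the table above.
# Actual clocks also depend on power, current, and thermal headroom,
# which this sketch does not model.
import bisect

# Upper bound of each active-core bucket: 1-2, 3-4, 5-8, ..., 25-28.
CORE_BUCKET_LIMITS = [2, 4, 8, 12, 16, 20, 24, 28]

TURBO_GHZ = {
    "non-avx": [3.8, 3.6, 3.5, 3.5, 3.4, 3.1, 2.9, 2.8],
    "avx2": [3.6, 3.4, 3.3, 3.3, 2.9, 2.7, 2.5, 2.4],
    "avx512": [3.5, 3.3, 3.0, 2.6, 2.3, 2.1, 2.0, 1.9],
}


def max_turbo_ghz(active_cores: int, isa: str) -> float:
    """Return the table's max Turbo frequency for a core count and instruction class."""
    if not 1 <= active_cores <= CORE_BUCKET_LIMITS[-1]:
        raise ValueError("active_cores must be between 1 and 28")
    # First bucket whose upper bound is >= active_cores.
    bucket = bisect.bisect_left(CORE_BUCKET_LIMITS, active_cores)
    return TURBO_GHZ[isa][bucket]


if __name__ == "__main__":
    print(max_turbo_ghz(10, "avx512"), max_turbo_ghz(10, "non-avx"))
```

For example, `max_turbo_ghz(10, "avx512")` returns 2.6 GHz, versus 3.5 GHz for the same ten cores running non-AVX code.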

In this generation, Intel transitions from the QuickPath Interconnect (QPI), the point-to-point link that allows one CPU to communicate with other CPUs, to its new Ultra Path Interconnect (UPI). UPI raises the transfer rate to 10.4 GT/s per link and uses a more efficient protocol. You'll notice the Platinum 8176 has three UPI links, much like the old Xeon E7s.

Intel also claims AVX-512 support enables up to a 60% increase in compute density. Moreover, Intel says the step up to 48 PCIe 3.0 lanes (from 40 on the E5-2600 v4) comes with up to 50% higher aggregate I/O bandwidth. That increase matters, because the proliferation of NVMe-based storage drives more traffic across PCI Express. Intel also integrates QuickAssist Technology into the chipset to accelerate cryptography and compression/decompression.

Intel's new vROC (Virtual RAID On CPU) technology also surfaces, allowing you to create RAID volumes with up to 24 SSDs. The CPU manages this array instead of the PCH, improving performance and enabling boot support. Counter to most speculation, the technology accommodates any SSD vendor that chooses to support it; you're not confined to Intel SSDs. Simply pay $100 for a RAID 0- and 1-capable key or $250 for RAID 5.


Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

-

the nerd 389: Do these CPUs have the same thermal issues as the i9 series?

I know these aren't going to be overclocked, but the additional CPU temps introduce a number of non-trivial engineering challenges that would result in significant reliability issues if not taken into account.

Specifically, as thermal resistance to the heatsink increases, the thermal resistance to the motherboard drops with the larger socket and more pins. This means more heat will be dumped into the motherboard's traces. That could raise the temperatures of surrounding components to a point that reliability is compromised. This is the case with the Core i9 CPUs.

See the comments here for the numbers:

http://www.tomshardware.com/forum/id-3464475/skylake-mess-explored-thermal-paste-runaway-power.html

Snipergod87, quoting the nerd 389: "Do these CPUs have the same thermal issues as the i9 series?"

I wouldn't be surprised if they did, but I also wouldn't be surprised if Intel used solder on these. It's also important to note that servers have much more airflow than your standard desktop, enabling better cooling all around, from the CPU to the VRMs. Server boards are designed for cooling, not aesthetics and stylish heat sink designs.

InvalidError, quoting the nerd 389: "the thermal resistance to the motherboard drops with the larger socket and more pins. This means more heat will be dumped into the motherboard's traces."

That heat has to go from the die, through solder balls, the multi-layer CPU carrier substrate, those tiny contact fingers and, finally, solder joints on the PCB. The thermal resistance from die to motherboard will still be over an order of magnitude worse than from die to heatsink, so the heat reaching the board this way is less than what the VRM phases are sinking into the motherboard's power and ground planes. I wouldn't worry about it.


bit_user, quoting the article: "The 28C/56T Platinum 8176 sells for no less than $8719"

Actually, the big customers don't pay that much, but still... For that, it had better be made of platinum!

That's $311.39 per core!

The otherwise identical CPU jumps to a whopping $11722, if you want to equip it with up to 1.5 TB of RAM instead of only 768 GB.

Source: http://ark.intel.com/products/120508/Intel-Xeon-Platinum-8176-Processor-38_5M-Cache-2_10-GHz

Kennyy Evony, quoting jowen3400: "Can this run Crysis?"

Jowen, did you just come up to a Ferrari and ask if it has a hitch for your grandma's trailer?

bit_user, quoting another reader: "W8 on ebay\aliexpress for $100"

I wouldn't trust an $8k server CPU I got for $100. I guess if they're legit pulls from upgrades, you could afford to go through a few at that price to find one that works. Maybe they'd be so cheap because somebody already cherry-picked the good ones.

Still, has anyone had any luck with such heavily discounted server CPUs? Let's limit it to Sandy Bridge or newer.

JamesSneed, quoting bit_user:

That is still dirt cheap for a high end server. An Oracle EE database license is going to be 200K+ on a server like this one. This is nothing in the grand scheme of things.


bit_user, quoting JamesSneed: "An Oracle EE database license is going to be 200K+ on a server like this one. This is nothing in the grand scheme of things."

A lot of people don't have such high software costs. In many cases, the software is mostly home-grown and open source (or like 100%, if you're Google).