GeForce RTX 3080 20GB GPUs Emerge For Around $575

A cool but pointless SKU in the face of the GeForce RTX 3080 12GB — for gaming, at least

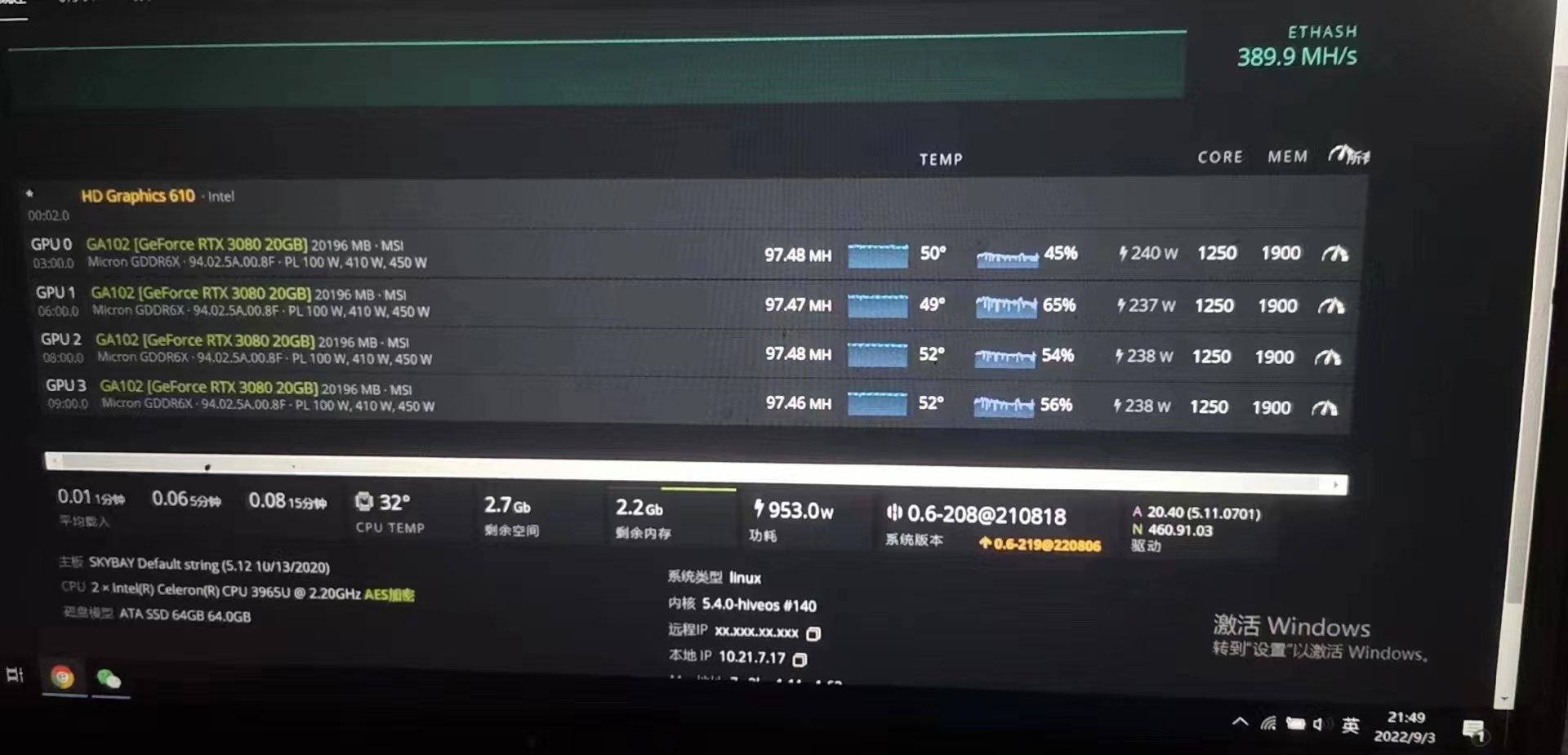

A Twitter user named @hongxing2020 recently shared photos of a mysterious MSI GeForce RTX 3080 Ventus 3X 20G OC, packing an impressive but unusual memory capacity of 20GB. The user claims that up to 100 units are available for between $432 and $576, and suggests that these could be leftover cards from mining operations. Core specifications are unknown, but the graphics card is likely the long-rumored 20GB variant of the vanilla RTX 3080, modified to support Micron memory chips with double the capacity.

It wouldn't be the first time Nvidia has reportedly experimented with doubling VRAM capacities on its high-end GPUs. Almost every generation of Nvidia graphics cards has featured some rumor or suspicion pointing to a variant with 2x the capacity, well after the GPU's initial release.

In the past, Nvidia has sold different memory capacity variants of the same GPU model, with one variant featuring 2x the memory capacity of the other. This strategy was prevalent during the Kepler generation and earlier, where cards like the GTX 780 3GB and GTX 770 2GB had additional 6GB and 4GB models, respectively.

Making a new GPU with 2x the memory capacity isn't hard; it's one of the easiest ways to add VRAM to a graphics card. All Nvidia has to do is swap out the memory ICs for ones with twice the capacity. Any other step in capacity, lower or higher than 2x, gets much more complicated. That would involve modifying the memory bus width and memory configuration, as memory manufacturers almost always make memory ICs in 512MB, 1GB, or 2GB capacities with nothing in between.
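The arithmetic behind this can be sketched in a few lines of Python. The sketch assumes that each GDDR6/GDDR6X chip occupies a 32-bit slice of the memory bus and that one chip sits on each slice (no double-sided "clamshell" layout). The function name `vram_options_gb` and the density list are illustrative only, and not every density is actually produced for every memory type.

```python
# Assumption: each memory chip uses a 32-bit slice of the bus.
BITS_PER_CHIP = 32
# Chip densities (in GB) mentioned in the article: 512MB, 1GB, 2GB.
CHIP_DENSITIES_GB = (0.5, 1, 2)


def vram_options_gb(bus_width_bits, chips_per_channel=1):
    """Return the VRAM capacities (GB) reachable on a given bus width."""
    if bus_width_bits % BITS_PER_CHIP != 0:
        raise ValueError("bus width must be a multiple of 32 bits")
    channels = bus_width_bits // BITS_PER_CHIP
    return [channels * chips_per_channel * d for d in CHIP_DENSITIES_GB]


if __name__ == "__main__":
    # Bus widths of the cards discussed in this article.
    for bus in (192, 256, 320, 384):
        print(f"{bus}-bit bus: {vram_options_gb(bus)} GB")
```

Under these assumptions, a 320-bit bus can only land on 5GB, 10GB, or 20GB, which is why a doubled RTX 3080 ends up at 20GB, while 12GB needs a 384-bit (or 192-bit) bus.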

More often than not, higher memory configurations come on higher-tier products, like the RTX 3090 and RTX 3090 Ti with 24GB of VRAM. However, the RTX 3060 comes with 12GB of memory, even though the faster RTX 3060 Ti, RTX 3070, and RTX 3070 Ti have 8GB. That's perhaps because Nvidia deemed 6GB too little for a modern RTX 3060, unlike the previous-generation RTX 2060. The 3060 also has a narrower 192-bit bus, whereas the 3060 Ti, 3070, and 3070 Ti have a 256-bit bus, so its practical choices were 6GB or 12GB.

If Nvidia was thinking about an RTX 3080 20GB, it likely didn't make sense. The RTX 3080 Ti has 12GB, and later we got the RTX 3080 12GB. Nvidia seems to have gone with more memory bandwidth rather than more memory capacity for the current RTX 3080 series.

The RTX 3080 12GB wasn't a straight memory upgrade, either. To go along with the extra 2GB, Nvidia enabled additional memory controllers and a few more CUDA cores (two SMs). While the core configuration is only 3% faster on paper than the RTX 3080 10GB, the additional 20% memory bandwidth proved very beneficial, potentially matching the RTX 3080 Ti (depending on core clocks).
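Those percentages can be checked with a quick calculation. The inputs below (320-bit vs. 384-bit bus, 19 Gbps GDDR6X, 8,704 vs. 8,960 CUDA cores) come from Nvidia's public spec sheets rather than from this article, so treat them as assumptions; `bandwidth_gbs` is a hypothetical helper name.

```python
def bandwidth_gbs(bus_width_bits, data_rate_gbps):
    """Peak memory bandwidth in GB/s: bus width (bits) x per-pin rate (Gbps) / 8."""
    return bus_width_bits * data_rate_gbps / 8


# Assumed specs: both RTX 3080 models use 19 Gbps GDDR6X.
bw_10gb = bandwidth_gbs(320, 19)  # RTX 3080 10GB, 320-bit bus
bw_12gb = bandwidth_gbs(384, 19)  # RTX 3080 12GB, 384-bit bus
# A hypothetical 20GB card on the same 320-bit bus, assuming the same memory speed.
bw_20gb = bandwidth_gbs(320, 19)

bw_gain = bw_12gb / bw_10gb - 1  # relative bandwidth increase
core_gain = 8960 / 8704 - 1      # relative CUDA core increase (assumed core counts)

print(f"10GB: {bw_10gb:.0f} GB/s, 12GB: {bw_12gb:.0f} GB/s, 20GB: {bw_20gb:.0f} GB/s")
print(f"Bandwidth gain: {bw_gain:.0%}, core gain: {core_gain:.1%}")
```

With these inputs, the 12GB card gains about 20% bandwidth and roughly 3% more cores, while a 20GB card on the same 320-bit bus would gain capacity but no bandwidth.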

An RTX 3080 20GB likely would have been a downgrade for gamers relative to the 12GB model, as it would have kept the 320-bit bus. Another issue with a 20GB model is memory utilization in games. Only a select few titles running at very high resolutions with maxed-out texture packs can break 12GB of VRAM. As a result, the RTX 3080 20GB's additional 8GB of VRAM would rarely be helpful for most gamers.

A 20GB SKU might be a good upgrade over a 10GB model, but with a 12GB variant already existing, a 20GB model for gamers makes no sense. The only place an RTX 3080 20GB would make sense is on the prosumer side of the market, where gobs of memory capacity are beneficial for non-gaming tasks such as 3D rendering and high-resolution video timelines with tons of different visual effects. But Nvidia already has the RTX 3090 and RTX 3090 Ti to fill this gap, making the RTX 3080 20GB even more pointless.


Aaron Klotz is a contributing writer for Tom’s Hardware, covering news related to computer hardware such as CPUs and graphics cards.


Amdlova

Sounds like damage control. Nvidia can't give 20GB to gamers and prosumers, but gives that amount to the miners. I am ready to pay whatever sum Intel wants for new graphics, or move to Mac. Nvidia and AMD scalped the market.

renz496

In reply to Amdlova:

Miners only need 6GB. That's the reason why Nvidia canceled the plan for the 3080 20GB and 3070 16GB. The 20GB cards that ended up in the miners' hands are prototype units that the AIBs couldn't sell to the retail market.

Squirrel Lee

Posted this on Graphically Challenged YouTube about this article:

What gets me is the article on Tom's Hardware about the configuration requiring a core change for the 12GB models and other component changes over the 10GB model. What? My specialty is definitely not computer architecture or chip design; however, I have played with the idea of increasing video RAM by adding an additional module on the PCB that was left vacant. Surprise, surprise: I wasn't the only one to think of it. It was done by a modder who had previously performed the hack/mod on a previous-generation GPU. On a 3080, it required some BIOS hacking IIRC, but that was it. The writer and most of the community is steaming. Whatever; from what I recall, the 3090s had RAM mounted on both sides of the PCB because of the lack of 2GB modules. Many, many woes were cleaned up in the release of the 3090 Ti, which I believe carries over to the 4000 series. I post this tidbit because these 20GB models, according to availability, had to have been released much later during the shortage and scalping. This ignorant rhetoric has gone too far, folks. That author posted hogwash about what an extra 2GB required. Common sense says it was already in the works. YouTube gaming influencers for the most part are rumor mill folk, with some if not many being competent testers and reviewers. How many have programmed in VHDL, taken computer architecture at an engineering graduate level, or even designed a basic adder or understand a scheduler or even a NAND implementation? Leave out the fill and stick to the rumors, leaving design speculation and its implementation to those with the background to do so. Even then, it could be a crap shoot, because you were not part of the engineering or design of such a complex development.

JarredWaltonGPU

In reply to Squirrel Lee:

Sorry, the main staff was off yesterday for Labor Day, so this slipped through. I've edited the text to remove any suggestion that CUDA core counts are somehow tied to VRAM capacity or memory interfaces.

At the same time, take a chill pill. It was a minor error by one of our freelancers. The main point is that adding more cores or memory capacity without increasing the memory interface width won't improve performance in many situations, especially gaming. The RTX 3080 10GB already runs into bandwidth limitations at higher resolutions, which is why the 12GB model performs so much better (with its 20% boost to bandwidth and capacity). 100% more capacity with the same bandwidth wouldn't do much.

bigdragon

Are these legit cards or something modified by miners? I don't recall MSI cards ever making their way to stores during the crypto boom.

I agree that the 20 GB doesn't make a lot of sense as an official SKU unless it was cheaper for Nvidia than making modifications to support 16 GB. What a weird card. I'd be worried about support over the next several years. Sometimes low quantity products get dropped or sent inappropriate updates by mistake.

JarredWaltonGPU

In reply to bigdragon:

I sort of wonder if MSI did a prototype run and then when Nvidia canned the 20GB SKU, it just sold the cards to miners. Probably at a price of $1500+ each. All indications are that MSI and Gigabyte were particularly bad about selling huge quantities of GPUs direct to miners.

SSGBryan

I'd love to have one of these. I do 3D art, and the 20GB would be great for rendering larger scenes.

JarredWaltonGPU

thisisaname said: Yet the price of the 3080 in another story dropped to $740...

That's retail pricing, not buying some used, potentially prototype stuff from a questionable source in China or Japan or whatever.

ikernelpro4

Remember when NVIDIA cut the 3090? Model VRAM by half in order to have more supply during the supply-chain issues?

Interesting that they now increase the amount unnecessarily... If I had a conspiracy theory, I would say they want to create an artificial supply-chain issue again.