How to Choose the Best HDR Monitor: Make Your Upgrade Worth It

Understand HDR displays and how to find the best one for you

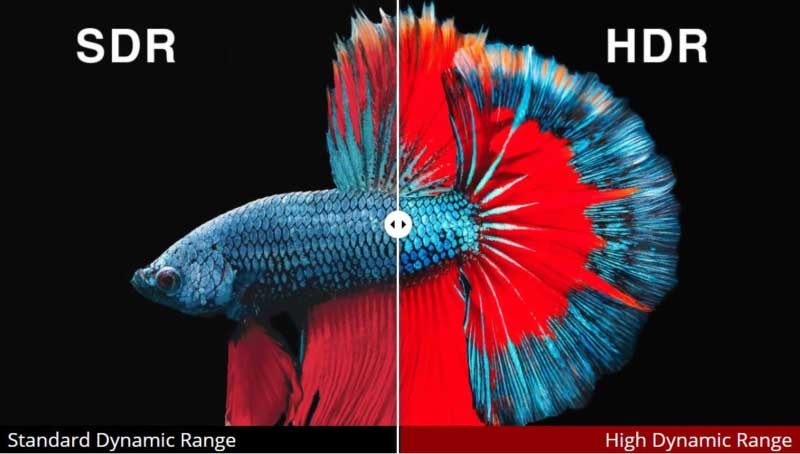

For gamers, movie-watchers or creative professionals, monitors that support high dynamic range (HDR) content are the next step toward a more lifelike display. When you play a familiar game or movie in HDR for the first time, you’ll likely notice more colors and detail, such as brighter highlights and deeper shadows, than what you were able to see on your regular standard dynamic range (SDR) display.

But there are many misconceptions around HDR, its benefits, who needs an HDR display and what makes a good HDR display for PCs. After you read this guide, you’ll be able to decide whether you want an HDR monitor and how to pick the best one for your rig so you’ll actually notice the difference between SDR and HDR.

Quick Tips

When picking an HDR monitor, these should be your top considerations:

- Brighter is better. HDR monitors can get much brighter than SDR ones. If you’re a general user, opt for a monitor that’s VESA-certified for at least DisplayHDR 500 (a minimum max brightness of 500 nits with HDR media), while gamers will probably want DisplayHDR 600 or greater. Creative professionals like video editors should get at least DisplayHDR 1000.

- Backlight dimming type is crucial. FALD > edge-lit dimming > global dimming. If you go for DisplayHDR 500 or higher, you’ll know you have at least edge-lit dimming. And when it comes to FALD (full-array local dimming) or edge-lit dimming, more zones are better.

- The more DCI-P3 coverage, the better. Shoot for at least 85%. But you’ll also want to check our reviews for color accuracy.

- As usual, higher contrast ratios are best. High contrast is an area where HDR displays shine over their SDR counterparts.

- HDR10 is the only HDR format Windows users need unless they plan to hook their display up to something like a Blu-ray player.

- Gamers should still prioritize things like refresh rate, response time and the Nvidia G-Sync or AMD FreeSync range.

Best HDR Monitors

If you're already prepared to buy an HDR monitor, we’ve highlighted our top recommendations below.

Acer Predator X35

If you want something bigger than those two 27-inchers above, the Acer Predator X35 also uses a FALD backlight that boasts 512 zones. Our Acer Predator X35 review found the 35-inch monitor to deliver impeccable HDR with DisplayHDR 1000 certification and contrast levels only bested by pricey OLED and mini-LED displays. It’s one of the best gaming monitors currently available and offers G-Sync and a 180 Hz refresh rate that’s overclockable to 200 Hz.

Alienware AW5520QF

OLED HDR displays are great at fighting the halo effect, according to VESA, and the 55-inch Alienware AW5520QF effectively has over 8 million dimming 'zones.' It can only hit 400 nits brightness with HDR, making it best in darker rooms, but its theoretically infinite contrast meant HDR content looked stunning in our Alienware AW5520QF review. This is a display for gamers, boasting 4K resolution at a 120 Hz refresh rate and AMD FreeSync with a lower-than-low 0.5ms response time.

Asus ProArt PA32UCX

The Asus ProArt PA32UCX is one of the best HDR monitors for creative professionals. With a mini-LED panel, it’s able to pack an impressive 1,152 FALD zones in its 32-inch, 4K screen. It also includes DisplayHDR 1000 certification, plus support for HLG and Dolby Vision, a rarity in PC monitors. In our Asus ProArt PA32UCX review, we praised its color accuracy, which makes it a reference display fit for studio production.

Apple Pro Display XDR

The Apple Pro Display XDR is the best HDR monitor for Mac users. According to VESA, it’s one of the best HDR monitors it’s tested for avoiding the halo effect (along with the Asus ROG Swift PG27UQ mentioned above). It also uses a FALD backlight, this time packing 576 zones. The 32-inch monitor features a mind-blowing 6K resolution (6016 x 3384) and is also specced to hit up to 1,600 nits max brightness, making it a great fit for video editing. Bonuses include support for Dolby Vision and HLG in addition to HDR10. Just don’t forget the stand will cost you an extra $999.

What Does HDR Actually Mean?

We’ve already done an in-depth breakdown of the meaning of HDR, but here’s a quick rundown.

HDR content looks different from SDR content when viewed on an HDR display, and a large part of that is due to how much brighter HDR displays can get. While the best SDR monitors typically max out at around 300 nits brightness, HDR monitors can get much brighter, which means what you see is closer to the massive dynamic range (a cloud can appear to be 10,000 nits, for example) that we see in real life. In fact, how bright an HDR monitor can get and how it gets there should be your first priority when buying an HDR monitor (more on that later).

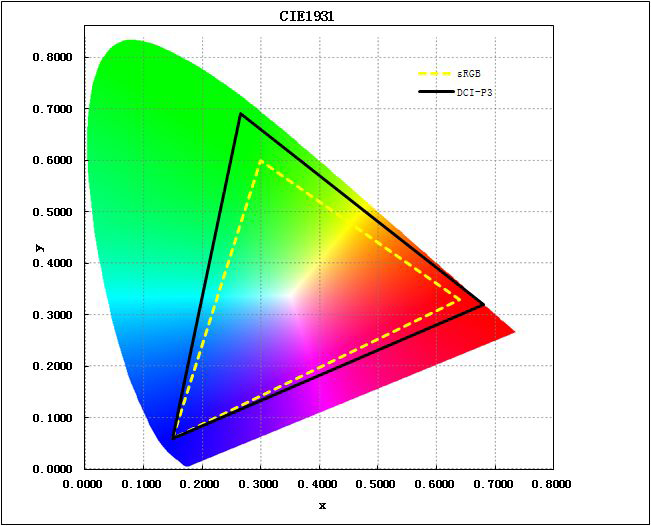

That’s not all. HDR monitors also have a wider range of colors available. A display’s color gamut tells you the range of colors it can produce. Ideally, this range would cover every shade the human eye is able to see, but we’re not there yet. SDR displays use the sRGB color space, which covers approximately one-third of the colors we can see in real life, according to VESA, a non-profit group with membership from hundreds of corporations (like Dell, LG, Sony, Intel and AMD) that creates open standards for displays. HDR displays use the DCI-P3 color space, which covers closer to 50% of the colors we can see.

The DCI-P3 color space, defined by Digital Cinema Initiatives (DCI), is used by the U.S. film industry, so you can expect many movies viewed in HDR on an HDR display to look closer to how their creators intended and more colorful than they would on a regular display.

HDR vs. 4K

Comparing HDR to 4K is like comparing apples to oranges. As just described, the primary benefits of HDR are greater brightness and color capabilities. 4K (also known as UHD), on the other hand, is a resolution, so it tells you how many pixels a display has in width x height format. The more pixels you have, the sharper the image; a 4K monitor has a resolution of 3840 x 2160 pixels and will look sharper than a 2K / QHD / 1440p display (2560 x 1440). But that has zero implications regarding brightness or colors.

That said, the sharpness of a 4K display and the abilities of HDR make a powerful combination, and you’ll find many a Blu-ray offering both 4K and HDR.

What Do I Need to Enjoy HDR?

If you’re a creative professional, like a video or photo editor, your need for HDR is clear. But general users and gamers should make sure they have a use for HDR and everything required to enjoy it.

To get the image quality gains HDR offers, you must play HDR content using an HDR display and hardware, such as a PC, that supports HDR. There are PC games available in HDR and even PlayStation 4 games and Xbox One games. Some streaming services, like Netflix, let you stream content in HDR. And, of course, there are plenty of HDR Blu-rays.

You can connect an HDR display to an HDR Blu-ray player, gaming console or streaming box, or you can play all this content off a PC. For Windows, you need a PC with an Intel 7th Generation Core CPU or later with HD 620 integrated graphics. Nvidia’s GeForce GTX 10-series and newer also support HDR, as do AMD’s Radeon RX Vega integrated graphics and Radeon RX 5000-series and newer graphics cards.

You’ll also need to connect the monitor via either:

- USB-C port with DisplayPort Alt Mode

- Thunderbolt 3 or 4 USB-C port

- HDMI 2.0a or 2.1 port

- DisplayPort 1.4 or later

For help selecting which port to use for connecting your HDR display, check out our DisplayPort vs. HDMI analysis.

Meanwhile, Apple macOS users require the latest version of their respective OS and an Apple product that supports HDR. You can find a list of Mac models supporting HDR here. These systems can play HDR either on their integrated screen or via a Thunderbolt 3 USB-C port.

HDR Monitor Brightness

The max brightness of a monitor and how its backlight works should be your top priority when considering an HDR display -- the brighter the better. Remember: the focus here is mimicking real life as closely as possible, and the range of brightness humans encounter is enormous. The sun, for example, can exceed 1,000,000 nits (depending on atmospheric conditions), according to Roland Wooster, chairman of VESA's DisplayHDR task group and an Intel principal engineer and display and platform technologist in the company's Client Architecture and Innovation Group.

For HDR monitor shoppers, the key work VESA (which also makes standards for DisplayPort and monitor mounts) does is its DisplayHDR certifications. Many HDR monitors list these certifications, which revolve around the monitor’s minimum maximum brightness level with HDR content, in their spec sheets. We recommend opting for an HDR monitor with one of these certifications -- and beware of monitors that claim an “HDR400” spec, for example, instead of “DisplayHDR 400,” as VESA hasn’t tested these.

| Spec Name | Minimum Max Brightness | Range of Color Gamut | Minimum Color Gamut | Typical Backlight Dimming Technology |
|---|---|---|---|---|
| DisplayHDR 400 | 400 nits | sRGB | 95% sRGB | Screen-level |
| DisplayHDR 500 | 500 nits | Wide color gamut | 99% sRGB and 90% DCI-P3 | Zone-level |
| DisplayHDR 600 | 600 nits | Wide color gamut | 99% sRGB and 90% DCI-P3 | Zone-level |
| DisplayHDR 1000 | 1,000 nits | Wide color gamut | 99% sRGB and 90% DCI-P3 | Zone-level |
| DisplayHDR 1400 | 1,400 nits | Wide color gamut | 99% sRGB and 95% DCI-P3 | Zone-level |

If you can afford it, DisplayHDR 1000 or greater is definitely the way to go, and if you’re a video editor, this is pretty much a hard requirement, Wooster says.
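To make the tier recommendations concrete, here is a minimal Python sketch that encodes the DisplayHDR tiers from the table above alongside this guide's minimum recommendations for each type of user. The user-type labels (`general`, `gamer`, `creative`) and the function names are hypothetical, chosen only for illustration; they aren't part of any VESA specification.

```python
# DisplayHDR tiers from the table above, mapped to their typical dimming type.
DISPLAYHDR_TIERS = {
    400: "screen-level",
    500: "zone-level",
    600: "zone-level",
    1000: "zone-level",
    1400: "zone-level",
}

# Minimum tier per user type, taken from this guide's quick tips.
# The keys are hypothetical labels used only in this sketch.
MIN_TIER_BY_USER = {"general": 500, "gamer": 600, "creative": 1000}


def meets_recommendation(user_type: str, certified_tier: int) -> bool:
    """Return True if a monitor's DisplayHDR tier meets the guide's minimum."""
    if certified_tier not in DISPLAYHDR_TIERS:
        raise ValueError(f"Unknown DisplayHDR tier: {certified_tier}")
    return certified_tier >= MIN_TIER_BY_USER[user_type]


def suitable_tiers(user_type: str) -> list[int]:
    """List every tier at or above the recommended minimum for a user type."""
    return [t for t in sorted(DISPLAYHDR_TIERS) if t >= MIN_TIER_BY_USER[user_type]]


print(suitable_tiers("gamer"))               # [600, 1000, 1400]
print(meets_recommendation("creative", 600)) # False
```

A lookup like this only captures brightness tiers; it says nothing about zone count, color accuracy or refresh rate, which still need to be checked separately.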

For more on HDR, check out our interview with Wooster on The Tom's Hardware Show.

But what about the lower levels of DisplayHDR? For general users and gamers, a monitor that’s DisplayHDR 600 or 500-certified is still capable of providing a noticeable difference over an SDR monitor, and that’s because VESA’s DisplayHDR specs also provide information about another crucial characteristic of an HDR monitor: backlight dimming technology.

HDR Backlight Dimming

Backlight dimming technology, which is how a monitor adjusts its brightness, has a great impact on your monitor’s contrast ratio and, thus, your HDR experience. In our HDR monitor reviews, we always tell you what type of backlight dimming technology the unit uses in the first line of the specs table.

For an LCD monitor, ideally, you want a type of local dimming -- FALD or edge-lit (also called edge array) -- over global dimming. These panels work with individually controllable ‘zones’ that let the display simultaneously output different levels of brightness on different parts of the screen.

But why would you want this? Because there are situations where you want your monitor to be its brightest in some parts of the image and not others.

“Let’s say you’re playing a game, and there’s a gun fired and you see the explosion coming out of the barrel of the gun. That’s not something that fills the screen; that’s a small patch of a screen,” VESA’s Wooster explained. “If you have a display that can’t offer to you full luminance unless it's full-screen, you're not going to be able to render that bright area in just a smaller part of the screen.”

Sure, 1,000 nits brightness is great, but without any local dimming capabilities, your black levels are also high (the lower, the better with black levels). Blacks will look light gray, and the actual dynamic contrast ratio of the monitor will be no better than before, according to Wooster.

“By having local dimming, you can reduce the backlight power and you can have this wonderful dynamic range of super bright patches in some areas, then very dark blacks in other parts of the image,” he said.
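The effect of local dimming on contrast can be illustrated with simple arithmetic: contrast ratio is peak luminance divided by black-level luminance. The sketch below uses hypothetical black levels (1.0 nit with the backlight at full power everywhere, 0.05 nits behind a dimmed zone) purely for illustration; they aren't measurements of any monitor.

```python
def contrast_ratio(peak_nits: float, black_nits: float) -> float:
    """Contrast ratio = brightest white divided by darkest black."""
    return peak_nits / black_nits


# Hypothetical values for illustration only, not measured data.
peak = 1000.0        # bright highlight, in nits
black_global = 1.0   # black level when the whole backlight stays at full power
black_local = 0.05   # black level where a local dimming zone is turned down

print(f"No local dimming:   {contrast_ratio(peak, black_global):,.0f}:1")  # 1,000:1
print(f"With local dimming: {contrast_ratio(peak, black_local):,.0f}:1")   # 20,000:1
```

The peak brightness is identical in both cases; only the black level changes, which is why a bright panel without local dimming gains little dynamic range.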

Full-array Local Dimming (FALD)

Full-array local dimming (FALD) is the best backlight dimming technology. These monitors typically have 384, 512 or 1,152 zones (the last as in the mini-LED Asus ProArt PA32UCX). The image above shows a FALD panel with hundreds of zones, allowing for a varying brightness across hundreds of different areas of the panel simultaneously.

But FALD HDR monitors are the most expensive type, and, generally speaking, the more zones the pricier. For example, the Asus ROG Swift PG27UQ (384 zones, $1,270 at the time of writing) and Acer Predator X35 (512 zones, $2,000) are both HDR displays with FALD backlights (and two of the best gaming monitors too).

Edge-lit Dimming

If that’s too rich for your blood, you can opt for edge-lit or edge array local dimming, which has its zones on one edge of the panel. These monitors will have fewer zones than FALD ones, typically 8, 16 or 32. As you can see in the picture above, this panel’s ability to display different brightness levels is more limited than a FALD one.

Global Dimming

The least impactful type of HDR backlight dimming is global dimming. Here, the panel only has one dimming zone. According to VESA, this type of dimming is suitable for scenes with a limited dynamic range, but the contrast ratio typically never surpasses 1,000:1, which is comparable to some good SDR monitors.

OLED

Unlike LCD monitors, OLED panels, which are also quite expensive, don’t use a backlight. Instead, each pixel makes its own light and is, therefore, like a zone in its own regard. The downside to using an OLED monitor with HDR is that these displays can’t get as bright as their LCD counterparts, often maxing out at 500 nits. The Alienware AW5520QF OLED gaming monitor, for example, only goes up to 400 nits.
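One way to compare these dimming approaches is to count how many pixels share a single dimming zone. The following sketch assumes a 4K (3840 x 2160) panel for every case, which is a simplification since not every monitor mentioned in this guide is 4K, and uses the zone counts cited above.

```python
# Assumption: every panel is 4K, so the zone counts are directly comparable.
WIDTH, HEIGHT = 3840, 2160
TOTAL_PIXELS = WIDTH * HEIGHT  # 8,294,400, i.e. "over 8 million"

# Zone counts cited in this guide; OLED is treated as one zone per pixel.
ZONE_COUNTS = {
    "Global dimming": 1,
    "Edge-lit (32 zones)": 32,
    "FALD (384 zones)": 384,
    "FALD (1,152 zones)": 1152,
    "OLED (per-pixel)": TOTAL_PIXELS,
}


def pixels_per_zone(zones: int) -> float:
    """Average number of pixels that must share one dimming zone."""
    return TOTAL_PIXELS / zones


for name, zones in ZONE_COUNTS.items():
    print(f"{name:<22} {pixels_per_zone(zones):>12,.0f} pixels per zone")
```

Under these assumptions, a 384-zone FALD backlight still lights 21,600 pixels per zone, while an OLED controls each pixel on its own, which is why OLED avoids the halo effect entirely.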

But OLED is also known for providing extraordinary contrast. You also won’t have to worry about the halo effect (more on that in the dedicated section below) with OLED. Gamers should weigh the pros and cons of OLED and LED, and your choice may depend on the environment you typically game in (if you usually play in dark rooms, less luminance may not be a big deal). If you’re a professional picking between the two, Wooster generally recommends OLED for photo editing and an LCD HDR display for video editing, where you should aim for 1,000 nits.

Is DisplayHDR 400, 500 or 600 Worth It?

We’ve heard a lot of tech enthusiasts argue that an HDR monitor is virtually useless unless it can hit 1,000 nits brightness. And we agree that 1,000 nits is ideal (and crucial if you’re doing video editing), but general users and gamers can get by with a DisplayHDR 500 or 600-certified monitor, because those specs also include that all-important local (FALD or edge-lit) backlight dimming we discussed above.

According to VESA’s Wooster, the DisplayHDR 500 and 600 specs, while not as amazing a jump from SDR as DisplayHDR 1000, can still yield a noticeable improvement over SDR because those specs require local dimming to meet the specs’ contrast requirements. He said that DisplayHDR 500 or 600 monitors will still have a “dramatically and very visibly better” result than an SDR display; however, he recommended that gamers go for DisplayHDR 600 or greater.

“If you don’t have the budget for a 1,000-nit display but you do for DisplayHDR 600, it will give you a good experience,” Wooster said. “DisplayHDR 600 still has local dimming and the same color characteristics as the DisplayHDR 1000 spec, and it’s going to give you a result dramatically different than what you’re used to on an SDR display.”

The story gets less appealing with DisplayHDR 400, however, since that spec does not require local dimming. Wooster said the amount of improvement you’d see from an SDR monitor to a DisplayHDR 400-certified one will depend on how good of an SDR monitor you’re used to.

“If you took an average mainstream monitor that might only be 270 nits, fairly deficient in color, may not have a particularly high-contrast panel, you could see that when you go [to] the DisplayHDR 400 tier that you may have 20% more color, 50% more contrast and also active dimming,” he said, referring to the ability to dim based on real-time analysis of the content instead of just when metadata changes. “So in scenarios where all of the screen goes dark in a movie, for example, you will gain active dimming HDR benefits as well.”

But Wooster noted that the difference between HDR and SDR “isn’t going to be nearly as big” with DisplayHDR 400 compared to if you went up to DisplayHDR 500.

What About the Halo Effect?

The halo effect is something encountered with HDR monitors using a local dimming system, such as FALD or edge-array, and happens when one zone is brighter than the adjacent one and causes a glow bleeding into that adjacent segment.

Unfortunately, there isn’t a spec right now that’ll help you determine whether an HDR monitor you’re thinking of buying suffers from the halo effect.

If you want to avoid this, you can opt for an expensive OLED monitor (which doesn’t use any backlight). Wooster also recommended the Asus ROG Swift PG27UQ and Apple Pro Display XDR, which “have a unique and special film in the polarizer stack and achieve nearly identical levels of halo reduction.”

HDR Color

After brightness and backlight type, color accuracy is the next most important factor for choosing an HDR display. As discussed, with DCI-P3 as their native color gamut, HDR displays are able to produce a wider range of colors than SDR’s sRGB color space. But you need more than just a wide-color space; you also want content to look the way its creators intended, and for that, you need a color-accurate HDR monitor. Our monitor reviews (HDR or otherwise) always feature extensive testing around color accuracy.

Long story short: Windows for a long time used the sRGB color space, but since HDR came onto the scene, Microsoft switched the color model to BT.2020, which is almost twice the size of sRGB, according to Wooster.

“Everything we’ve done for the last 25 years or so [was] limited to that and by moving to HDR and adopting the BT.2020 color space, we’re now able to ultimately get displays up to approximately 70% of the color range we can see,” he said.

While 100% sRGB coverage is good for Windows apps, you’ll want more colors for HDR content. The key color space here is DCI-P3, a subset of BT.2020 (targeting the central triangle) used in the film industry.

“Although all of the data from Windows and movies and all HDR content is communicated in a BT.2020 format, the vast majority of applications and content doesn’t use the full color spectrum yet but rather uses a subset encapsulated within DCI-P3,” Wooster explained.

When perusing a display’s spec sheet, look for as close to 100% DCI-P3 color coverage as possible. Still, Wooster said that for games where all of the colors are artificial this may not matter as much. But if you want your colors to pack a punch, you’ll want high DCI-P3 coverage and accuracy, and both become critical if you’re a content creator.

Resolution

The next thing to keep in mind is your monitor’s resolution, or how many pixels it has in width x height format. Naturally, a 4K (3840 x 2160) monitor will generally cost more than a similarly specced monitor at 1440p (aka QHD) or 1080p (FHD) resolution. The higher the resolution, the sharper the image (assuming you have content in that resolution as well).

| Resolution Name | Pixels (Width x Height) |
|---|---|
| 4K / UHD | 3840 x 2160 |
| 1440p / QHD | 2560 x 1440 |
| 1080p / FHD | 1920 x 1080 |

But outside of budget, there are other things to consider before picking a resolution.

Gamers should think of the graphics card prowess they’ll need to push frames at anything over 1080p. Taking advantage of a 4K gaming display (check out our Best 4K Gaming Monitors list for recommendations) at a speedy refresh rate of 144 Hz will require a more powerful graphics card than running a game at 60 frames per second (fps) at 1080p, for example.
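As a rough sense of scale, you can compare how many pixels per second the graphics card has to produce in each scenario. This is a back-of-the-envelope sketch only: it assumes rendering cost scales with raw pixel output, which real games don't follow exactly, and the function name is hypothetical.

```python
def pixels_per_second(width: int, height: int, fps: int) -> int:
    """Raw pixel output the GPU must sustain at a given resolution and frame rate."""
    return width * height * fps


uhd_144 = pixels_per_second(3840, 2160, 144)  # 4K at 144 fps
fhd_60 = pixels_per_second(1920, 1080, 60)    # 1080p at 60 fps

print(f"4K @ 144 fps:   {uhd_144:,} pixels/s")   # 1,194,393,600
print(f"1080p @ 60 fps: {fhd_60:,} pixels/s")    # 124,416,000
print(f"Ratio: {uhd_144 / fhd_60:.1f}x")         # 9.6x
```

Four times the pixels at 2.4 times the frame rate works out to roughly 9.6 times the raw pixel output, which is why a 4K, 144 Hz display calls for a far stronger graphics card.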

You should also consider how close you’ll be sitting to your HDR monitor and how good your eyesight is. If you plan on sitting pretty close to your monitor and want one that’s 27 inches or larger, you may want to go up to 4K. If you go smaller than 27 inches, the visible benefit of going up to 4K becomes more debatable.
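Pixel density, measured in pixels per inch (PPI), is one way to reason about whether extra resolution will be visible at a given screen size. The sketch below computes PPI from resolution and diagonal size; the 27-inch size comes from the paragraph above, while whether a given density is actually visible to you depends on viewing distance and eyesight, which this calculation doesn't model.

```python
import math


def ppi(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixels per inch: diagonal length in pixels divided by diagonal in inches."""
    return math.hypot(width_px, height_px) / diagonal_in


for name, (w, h) in {
    "4K / UHD": (3840, 2160),
    "1440p / QHD": (2560, 1440),
    "1080p / FHD": (1920, 1080),
}.items():
    print(f"27-inch {name:<12} {ppi(w, h, 27):6.1f} PPI")
```

At 27 inches, this works out to roughly 163 PPI for 4K, 109 PPI for 1440p and 82 PPI for 1080p.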

“There may be a better use for your budget in getting higher brightness HDR, a higher quality screen or greater color gamut than potentially chasing a resolution that you may not be able to see,” Wooster said in relation to these smaller monitors.

HDR10 vs. HDR10+ vs. Dolby Vision vs. Hybrid Log Gamma

For an HDR display to work with a Windows PC, it must support the HDR10 protocol. There are three other HDR protocols -- HDR10+, Dolby Vision and Hybrid Log Gamma (HLG) -- but HDR10 is the only one Windows PCs support.

HDR10+ is a proprietary protocol from Samsung promising “even better and brighter colors” than HDR10. You can find this HDR10+ content on Amazon Prime Video, but if you use a Windows PC to access it, your PC will just convert it to regular HDR10 anyway.

Dolby Vision is another HDR protocol you’ll find mostly on TVs. The Asus ProArt PA32UCX, Asus ProArt PQ22UC portable monitor and Apple Pro Display XDR are currently the only PC monitors with Dolby Vision. It’s different from HDR10 in its approach to tone mapping. Instead of applying a generic map, it tone-maps to each individual display, and this information is in the content’s metadata. The result is use of the display’s full dynamic range. HDR10, by contrast, is mastered to a fixed peak level and won’t look the same on every monitor. When we watched a movie in HDR10 and then in Dolby Vision, the latter experience showed noticeably more detail, such as dark objects against bright, white backgrounds and textures. Colors also seemed more powerful. However, it’s harder to find Dolby Vision content.

Finally, HLG comes from the BBC and Japanese broadcaster NHK and “was specially developed for television,” so if you plan on streaming BBC content you may want to look for a TV or one of the rare PC monitors with HLG support (such as Asus’ ProArt PA32UCX). But, again, if you stream this off a browser in Windows, your PC will just convert it to HDR10.

The only HDR formats available for Apple macOS users are HDR10 and Dolby Vision (see supporting devices in the What Do I Need to Enjoy HDR section).

Bottom Line

HDR support can greatly improve the experience of a PC monitor if you have the right type of content and buy a display with the proper specs and features that will lead to a marked improvement in image quality over an SDR monitor. That's how you make graduating to HDR worth your investment.

But there’s also the debate of monitors versus TVs. HDR TVs tend to be more affordable than HDR monitors. If you go that route, you'd still be looking for the same capabilities as you would with an HDR monitor. However, keep in mind that just like when buying an SDR TV as a display for your system, you’ll have to deal with cons like lower refresh rates, greater input lag and, likely, fewer port options, such as a lack of DisplayPort.

Scharon Harding has over a decade of experience reporting on technology with a special affinity for gaming peripherals (especially monitors), laptops, and virtual reality. Previously, she covered business technology, including hardware, software, cyber security, cloud, and other IT happenings, at Channelnomics, with bylines at CRN UK.

rigo007

Thanks for the article. Really useful.

Could you please advise me which is the best monitor for PS4 Pro gaming, combined with the possibility of connecting my laptop, which I will use for generic work (Outlook, MS Office)?

I recall that the PS4 Pro has 60Hz output, and I am not interested in Nvidia or AMD graphics options, but I am interested in the following characteristics:

- 4K resolution @ 3,840 x 2,160

- HDR

- 2 HDMI 2.0 ports

- 60Hz

- Curved type

- <5 ms response/input lag

- IPS

- USB port

Thanks,

Rigo007

mkosto

“If you want something bigger than those two 27-inchers above...”

And if not? Where are those 2 27-inchers they talk about?

klavs

rigo007 said: I am interested in the following characteristics:

- 60Hz

- Curved type

- <5 ms response/input lag

But with a 60Hz display you are going to get 17ms between frames:

1s/60 = 0.016666666s = 17ms

klavs

I would want a monitor that lasts for a long time.

For how long is the initial quality of the display of the PG27AQDM OLED expected to last? One or two years? More?