New Chinese GPU arrives to challenge Nvidia's AI dominance but falls woefully short - Loongson unveils LG200 GPGPU, up to 1 TFLOPS of performance per node

AI comes to the fore.

Hot on the heels of releasing surprisingly competitive new CPUs, Loongson also announced that it is developing a new GPGPU, but its performance specs fall far below those of competing GPUs from Nvidia.

With demand for artificial intelligence (AI) and high-performance computing (HPC) accelerators on the rise as US sanctions severely impact China's access to the fastest GPUs, multiple domestic companies are trying to address the market with specialized processors. Loongson, a prominent CPU developer from China, recently introduced its LG200 accelerator that can process AI, HPC, and graphics workloads, reports El Chapuzas Informático.

At a high level, the Loongson LG200 is a highly parallel processor akin to AI and HPC GPUs by AMD and Nvidia. Loongson's LG200 supports the OpenCL 3.0 application programming interface (API) for compute, which is good enough for high-performance workloads including AI and HPC. OpenCL support on its own isn't enough to run an operating system, but the chip's OpenGL 4.0 support should be good enough for some games.

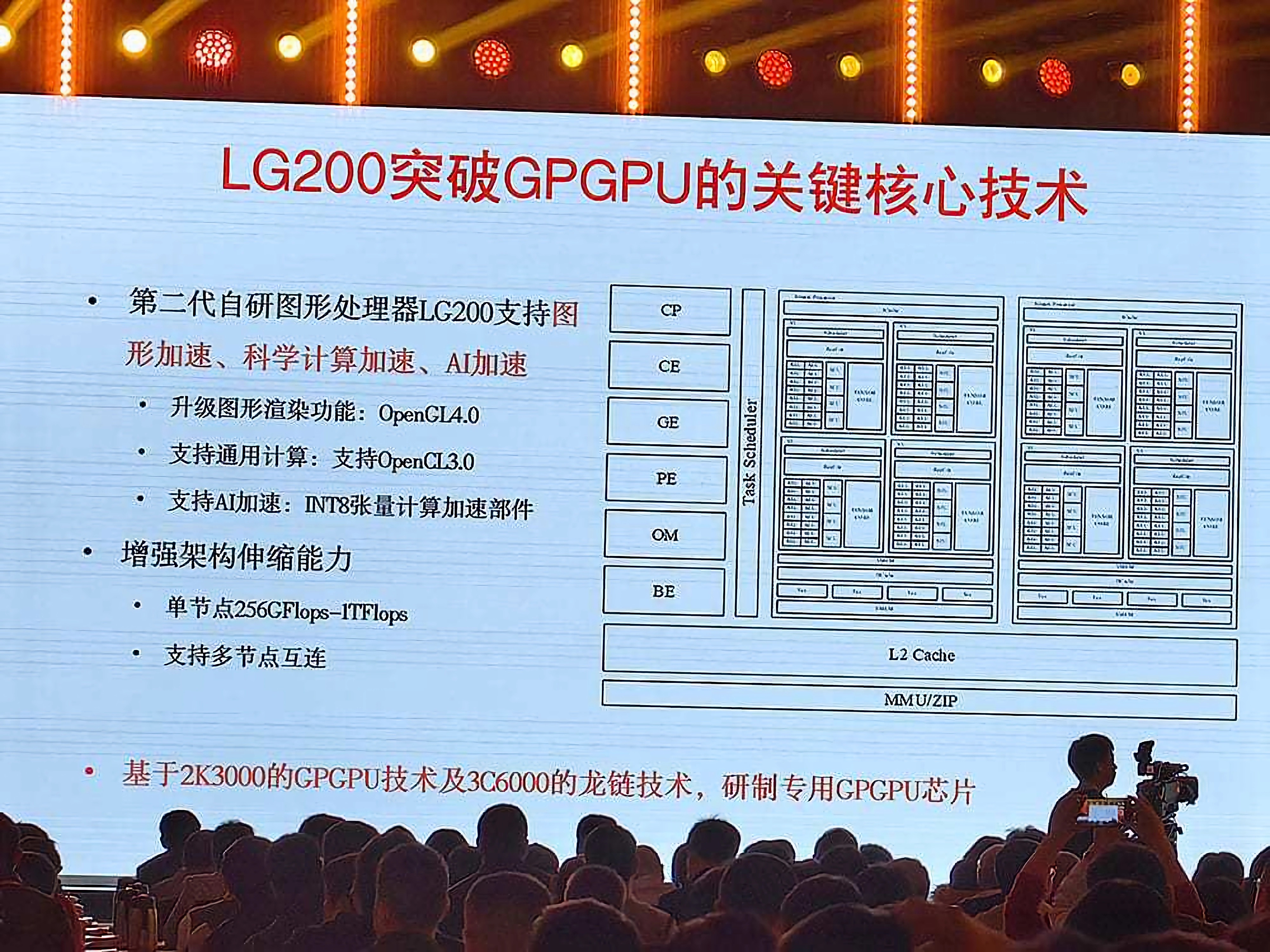

The block diagram of Loongson's LG200 depicts a processor organized in four clusters, each featuring 16 small ALUs, four bigger ALUs, and one huge ALU or a special-purpose unit. Unfortunately, we cannot draw any conclusions from analyzing the diagram, as it is light on actual technical detail.

Loongson has yet to disclose the full specifications of its LG200 processor. We know it supports the INT8 data format for AI workloads, and probably FP32 and FP64 for graphics and HPC workloads, respectively. Loongson also claims that the LG200's compute performance ranges from 256 GFLOPS to 1 TFLOPS per node, though it didn't disclose the precision it used for the metric.

Even if the company used FP64 for its performance claims, the processor is dramatically slower than modern GPUs. For instance, Nvidia's H100 delivers 67 FP64 TFLOPS. That means this GPU could be targeted more at lower-power inference applications.
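For a rough sense of scale, the Python sketch below takes Loongson's claimed 256 GFLOPS to 1 TFLOPS per node and the 67 TFLOPS H100 figure quoted above at face value, even though the precision behind each number is uncertain. It also assumes perfectly linear scaling across nodes, which no real interconnect delivers, so the node counts are best read as a floor. The constant and function names are illustrative only.

```python
import math

# Loongson's claimed per-node range for LG200, in TFLOPS (precision undisclosed).
LG200_TFLOPS_LOW = 0.256
LG200_TFLOPS_HIGH = 1.0

# H100 figure as quoted in the article, in TFLOPS.
H100_TFLOPS = 67.0


def nodes_to_match(target_tflops: float, per_node_tflops: float) -> int:
    """Smallest node count whose summed peak reaches the target.

    Assumes perfectly linear scaling, i.e. zero communication overhead.
    """
    if per_node_tflops <= 0:
        raise ValueError("per_node_tflops must be positive")
    return math.ceil(target_tflops / per_node_tflops)


for per_node in (LG200_TFLOPS_HIGH, LG200_TFLOPS_LOW):
    ratio = H100_TFLOPS / per_node
    print(f"{per_node:.3f} TFLOPS per node: H100 is ~{ratio:.0f}x faster; "
          f"{nodes_to_match(H100_TFLOPS, per_node)} nodes needed to match it")
```

Even at the optimistic end of Loongson's range, it would take dozens of LG200 nodes to equal the on-paper peak of a single H100.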

Loongson calls its LG200 accelerator a "GPGPU," which certainly implies that the part not only supports AI, HPC, and graphics workloads but can also perform general-purpose computing. But we can only wonder what the company means by general-purpose computing. We are unsure if the processor can run an operating system, though OpenCL can be used for certain general-purpose workloads.
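What "general-purpose" means in practice for an OpenCL device is easiest to see in the programming model: you write a small kernel that runs once per work-item, and each work-item finds its element through its global ID (`get_global_id(0)` in OpenCL C). The Python below only emulates that model sequentially on the CPU to show the shape of a GPGPU-friendly workload; `saxpy_kernel` and `launch` are hypothetical helpers, not part of OpenCL or any binding for it.

```python
from typing import Callable, List


def saxpy_kernel(gid: int, a: float, x: List[float], y: List[float]) -> None:
    """One work-item: computes y[gid] = a * x[gid] + y[gid].

    Each work-item touches only its own element, so every invocation is
    independent of the others; that independence is what lets a GPU run
    thousands of them in parallel.
    """
    y[gid] = a * x[gid] + y[gid]


def launch(kernel: Callable[..., None], global_size: int, *args) -> None:
    """Sequential stand-in for a 1-D NDRange launch.

    A real OpenCL runtime would execute these invocations concurrently and
    in no guaranteed order, so the kernel must not rely on ordering.
    """
    for gid in range(global_size):
        kernel(gid, *args)


x = [1.0, 2.0, 3.0, 4.0]
y = [10.0, 20.0, 30.0, 40.0]
launch(saxpy_kernel, len(x), 2.0, x, y)
print(y)  # [12.0, 24.0, 36.0, 48.0]
```

Workloads that decompose this cleanly, such as linear algebra, image filters, and neural-network layers, map well to a GPGPU; branch-heavy, pointer-chasing code like an operating system does not.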


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

-

bit_user

I just tried uploading that slide image to Google Translate, which seems to work surprisingly well. It revealed basically the same information included in the article, so maybe the author already did exactly that. Something funny: it seems they refer to their multi-node link as "Dragon Chain technology".

The article does contain a glaring error:

Nvidia's H100 delivers 67 FP64 TFLOPS.

No, that's the fp32 rate. Its fp64 throughput is exactly half of that, at 33.5 TFLOPS. It is a lot, and a huge leap from even the previous generation's A100 (which managed only 9.7). I'm guessing they got embarrassed by AMD leap-frogging them on this metric, in the previous generation.

https://en.wikipedia.org/wiki/List_of_Nvidia_graphics_processing_units#Data_Center_GPUs

Also:

Loongson calls its LG200 accelerator a "GPGPU," which certainly implies that the part not only supports AI, HPC, and graphics workloads but can also perform general-purpose computing. But we can only wonder what the company means by general-purpose computing.

No, "GPGPU" just means it's more general than graphics. That extends as far as HPC and AI, but usually not much beyond. Anything GPU-like is still practically limited to workloads that are number-crunching, highly parallel, and SIMD-friendly.

GPGPU is an old term, going back at least 20 years. It just referred to using a GPU for computations other than graphics.

Sorry to bother you, but I'm sure @JarredWaltonGPU can set Anton straight on these points.

-

collin3000

It should be noted that teraflops aren't everything. But assuming the 1 teraflops at the top of that 256 gigaflops to 1 teraflops range is the FP32 rate (although I'd guess it's INT8), that puts this card's compute power on par with the Nvidia Quadro 6000, which was released back in 2010.

I love competition in markets with few suppliers, but they've got a long, long way to go to be competitive, especially when the RTX 4080, which can still be shipped into China, has an FP32 rate of 48.74 teraflops, and even a GTX 1060 can pull 4.38 teraflops. So even if all GPU shipments into China were banned, the government would be better off commandeering every single gamer's graphics card, however old, than using this chip.

-

ivan_vy

Think about this as a proof of concept: it can be done, and boy, they will do it. Loongson CPUs are improving at a surprising rate, and GPUs from Moore Threads are great on the hardware side (awful on software), so the low-end and mid-range segments will be served. High-end users can still buy and import from third parties, but they will soon be fed too. How? With huge R&D budgets paid for by huge low-end and mid-range consumer markets held captive by the Chinese government and US sanctions.

-

bit_user

collin3000 said: assuming that the 256 giga flops to 1 Teraflops has the Teraflops as the fp32 rate (although I'd guess it's int8) that puts this card's compute power on par with the Nvidia Quadro 6000 that was released back in 2010.

Given that int8 performance is called out separately, I think they mean floating-point when they say FLOPS. That is what it stands for, after all!

Typically, there's a 2:1 ratio between fp32 and fp64. Likewise, there's usually a 2:1 ratio between fp16 and fp32. So that would neatly cover the spread of 256 GFLOPS to 1 TFLOPS, where the low end is fp64 and the high end is fp16.
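The 2:1 reasoning above is easy to check. This short Python sketch assumes, as the comment does, that the 256 GFLOPS floor is the FP64 rate and that each halving of precision doubles throughput; Loongson has confirmed neither, and `precision_ladder` is just an illustrative helper.

```python
def precision_ladder(fp64_gflops: float) -> dict:
    """Scale an FP64 rate by the typical 2:1 steps: FP32 = 2x FP64, FP16 = 2x FP32."""
    fp32_gflops = 2 * fp64_gflops
    fp16_gflops = 2 * fp32_gflops
    return {"FP64": fp64_gflops, "FP32": fp32_gflops, "FP16": fp16_gflops}


for fmt, gflops in precision_ladder(256.0).items():
    print(f"{fmt}: {gflops:g} GFLOPS")
# FP64: 256 GFLOPS
# FP32: 512 GFLOPS
# FP16: 1024 GFLOPS
```

The top of the ladder lands at 1,024 GFLOPS, which rounds to the 1 TFLOPS in Loongson's claim.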

collin3000 said: the RTX 4080 which can still be shipped into China has a FP32 of 48.74 Teraflops and even a GTX 1060 can pull 4.38 Teraflops. So even if all GPU shipments into China were banned, the government will be better off commandeering every single gamers graphics card even if it was old. Compared to currently using this chip.

They do talk about multi-node scalability, although that's going to be more for AI than most other workloads.

Anyway, this is a start. It's probably a good sign to see them put forth some plausible-sounding claims, rather than way over-promising and under-delivering the way Moore Threads did. Even if they delivered far more competitive hardware today, it would take a while for the software to catch up. If they deliver something modest now, it can at least be used as a development vehicle to build up the software stack and ecosystem.

-
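Why multi-node scaling pays off for some workloads more than others can be illustrated with Amdahl's law, a standard model that neither Loongson nor the comment above cites. The parallel fractions in this Python sketch are made-up illustrative values, not measurements of LG200 or its "Dragon Chain" link, and the model ignores interconnect overhead entirely, so real scaling would likely be worse.

```python
def amdahl_speedup(parallel_fraction: float, nodes: int) -> float:
    """Amdahl's law: speedup = 1 / ((1 - p) + p / n).

    p is the fraction of the work that parallelizes perfectly; the rest
    runs serially no matter how many nodes are added.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if nodes < 1:
        raise ValueError("nodes must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / nodes)


# Hypothetical workloads: one almost fully parallel, one with a 10% serial part.
for p in (0.99, 0.90):
    speedups = [round(amdahl_speedup(p, n), 1) for n in (1, 8, 64)]
    print(f"p = {p}: speedup at 1, 8, 64 nodes = {speedups}")
```

With 64 nodes, the 99%-parallel case reaches roughly 39x, while the 90% case stalls below 9x, which is why aggregating many slow nodes only makes sense for workloads that are almost entirely parallel.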

bit_user

ivan_vy said: GPUs from MooreThreads are great on HW side (awful on SW)

Show me evidence the hardware is actually good. I've seen their claims about its specs, but not a single benchmark even remotely close to proving them.

I suspect the MTT S80 hardware is probably full of bugs and bottlenecks that the software has to work around, and that's one reason it's taken them so long to get the drivers in half-decent shape. Even with the best drivers, those GPUs probably won't perform anything like their specs suggest.

ivan_vy said: huge R&D budgets paid by a captive (by chinese government and US sanctions) huge medium and low consumer markets.

Didn't they just have a round of layoffs? I'm having some trouble finding the article, but it was within the last month.

As for consumers propping up the low end, that only works if the cards are cost-competitive with options from Intel, AMD, and Nvidia, which the S80 isn't (even after all the markdowns).

-

ivan_vy

bit_user said: Show me evidence the hardware is actually good.

"The GPU's clock speed is set at 1.8 GHz, and maximum compute performance has been measured at 14.2 TFLOPS. A 256-bit memory bus grants a bandwidth transfer rate of 448 GB/s. PC Watch notes that the card's support for PCIe Gen 5 x 16 (offering up to 128 GB/s bandwidth) is quite surprising, given the early nature of this connection standard."

https://www.techpowerup.com/310130/moore-threads-mtt-s80-gpu-benchmarked-by-pc-watch-japan

Quite a beast on paper... not so good in real-world scenarios, but the drivers are improving.
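The quoted bandwidth figures are easy to sanity-check, and the check also shows why they are only ceilings. Peak GDDR bandwidth is the bus width in bytes times the per-pin data rate, so the helpers below (hypothetical names) back out the per-pin rate implied by a 256-bit bus at 448 GB/s. For PCIe 5.0, each lane runs at 32 GT/s with 128b/130b encoding, so the "up to 128 GB/s" for x16 is the raw bidirectional figure; usable bandwidth is roughly 63 GB/s in each direction before protocol overhead. None of this says how close the S80 gets to those limits in practice.

```python
def gddr_bandwidth_gbs(bus_width_bits: int, gbps_per_pin: float) -> float:
    """Peak memory bandwidth in GB/s: (bus width in bytes) x (per-pin rate in Gb/s)."""
    return bus_width_bits / 8 * gbps_per_pin


def implied_pin_rate_gbps(bus_width_bits: int, bandwidth_gbs: float) -> float:
    """Invert the formula above to recover the per-pin data rate in Gb/s."""
    return bandwidth_gbs / (bus_width_bits / 8)


def pcie5_gbs_per_direction(lanes: int) -> float:
    """PCIe 5.0: 32 GT/s per lane, 128b/130b encoding, 8 bits per byte."""
    return 32 * (128 / 130) / 8 * lanes


print(implied_pin_rate_gbps(256, 448))         # 14.0 (Gb/s per pin)
print(gddr_bandwidth_gbs(256, 14.0))           # 448.0 (GB/s)
print(round(pcie5_gbs_per_direction(16), 1))   # 63.0 (GB/s each way)
```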

https://www.tomshardware.com/news/chinese-gpus-made-by-moore-threads-get-a-big-performance-boost-from-latest-driver?utm_source=Newswav&utm_medium=Website

Remember Intel's Arc? Up to a 750% boost. Why is that relevant? It was Intel's first discrete gaming GPU in decades.

https://www.pcworld.com/article/2124163/intel-claims-up-to-750-improvement-with-latest-arc-drivers.html

MTT was founded by Zhang Jianzhong, the former global vice president of Nvidia and general manager of Nvidia China. The team is not a bunch of guys in a garage, or the likes of Ouya.

About the layoffs, 1% doesn't seem so dramatic:

"Moore Threads Intelligent Technology Beijing Co. plans to cut a single-digit percentage of its roughly 1,000 employees,"

https://finance.yahoo.com/news/china-ai-chipmaker-moore-threads-053729469.html?.tsrc=fin-srch

That's for a company valued at 3.4 billion:

https://finance.yahoo.com/news/chinas-moore-threads-valued-3-104700851.html

No need to be too competitive when the only GPUs sold in China will be domestic cards (a race spearheaded by MTT), and the sanctions are setting up exactly this scenario.

-

bit_user

ivan_vy said: "The GPU's clock speed is set at 1.8 GHz, and maximum compute performance has been measured at 14.2 TFLOPS. A 256-bit memory bus grants a bandwidth transfer rate of 448 GB/s. PC Watch notes that the card's support for PCIe Gen 5 x 16 (offering up to 128 GB/s bandwidth) is quite surprising, given the early nature of this connection standard."

That's not evidence. You're just quoting specs. I'm looking for proof the hardware isn't hopelessly borked. Are there any synthetic tests that show it nearing even a single one of its theoretical performance limits?

ivan_vy said: drivers are improving

And yet the card still performs like hot garbage.

ivan_vy said: remember ARC by Intel?

These guys aren't Intel; they haven't earned any benefit of the doubt. Intel had over a decade of building iGPUs, so there's a fair chance that even if Intel messed up a few things about Alchemist, they at least got most of the important stuff right. And yet, the A770 underperforms its specs by about 2x.

ivan_vy said: MTT founded by Zhang Jianzhong the former global vice president of NVIDIA and general manager of Nvidia China, the team is not a bunch of guys in a garage or the likes from Ouya.

Right now, they look like a bunch of absolute clowns, especially when you go back and look at the promises and hype they created leading up to its launch.

ivan_vy said: No need to be too competitive when the only GPU sold in China will be domestic cards

That doesn't look set to happen any time soon.

-

ivan_vy

bit_user said: Are there any synthetic tests that show it nearing even a single one of its theoretical performance limits?

"In some very specific synthetic benchmarks, the MTT S80 GPU does better than an RTX 3060."

https://www.guru3d.com/story/the-mtt-s80-chinese-gpu-has-the-performance-of-a-geforce-gtx-1060/

Again, drivers are the biggest problem.

bit_user said: they look like a bunch of absolute clowns,

This is more of an opinion. MTT poached AMD and Nvidia engineers; you don't look for the worst when you want to attract talent.

No need to be too competitive when the only GPU sold in China will be domestic cards

bit_user said: That doesn't look set to happen any time soon.

Looks like it will get worse before it gets better (if it ever does).

https://www.tomshardware.com/news/us-govt-warns-sanctions-swerving-gpus-will-fall-under-their-control

Yes, I know she is talking about AI, but GPUs are so entrenched in AI that it will inevitably limit availability in China, as is already happening.

-

bit_user

ivan_vy said: again, drivers are the biggest problems.

How do you actually know that?

ivan_vy said: this is more an opinion. MTT poached AMD and Nvidia engineers, you don't look for the worse if you want to attract talent.

Intel poached Raja Koduri from AMD. Doesn't mean anything.

Unless you've worked in these organizations, you don't know which engineers actually know their stuff and which are full of hot air.

-

ivan_vy

bit_user said: How do you actually know that?

Simply because hardware can't improve once it's produced, so the gains have to be coming from the drivers.

bit_user said: Raja Koduri

As much as we make fun of Raja, he has been with S3, Apple, AMD, and Intel, and at AMD he landed the winning GPU designs for the PS5 and Xbox. I don't think that many people were wrong about him.

I understand your point. Hardware blueprints can be copied (stolen?), and anyone can read a bunch of whitepapers; the success is in the software. Look at CUDA, how the PS3's weird architecture shone at the end of its lifecycle, how AMD ages like fine wine, and so on. These fast-moving times are unrelenting, and competition is hard.

The hardware race is tight and China will eventually catch up; software will be the deciding factor in the years to come.