SK hynix expands U.S. presence with new Seattle-area office in Bellevue to get closer to its largest customers: location near Nvidia, Amazon, and Microsoft highlights co-designed HBM efforts

New Bellevue office places the memory supplier closer to its largest AI customers as HBM demand reshapes the semiconductor industry.

SK hynix is expanding its U.S. presence with a new office in the Seattle metropolitan area, placing the world’s leading HBM supplier within minutes of Nvidia, Amazon, and Microsoft.

According to industry sources cited by DigiTimes, the South Korean company has leased approximately 5,500 square feet at City Center Bellevue, just east of Seattle. While modest in size, the location and timing make the expansion far more consequential than a routine regional office opening.

SK hynix sits squarely in the middle of the ongoing AI hardware boom, supplying the majority of the HBM used in Nvidia’s data center accelerators and increasingly serving hyperscale customers that are building their own AI silicon. Establishing a physical foothold in the Pacific Northwest puts the company closer to the customers driving its fastest-growing and most important business.

Moving closer to the center of AI development

SK hynix has spent the past two years transforming itself from a cyclical commodity DRAM vendor into a leading supplier for AI infrastructure. That shift is most visible in High Bandwidth Memory (HBM), where SK hynix was first to mass-produce HBM3 and has remained ahead in yield and volume as customers transitioned from HBM2E. In August 2025, the company overtook Samsung in global DRAM revenue for the first time, a change largely attributed to HBM shipments for Nvidia’s H100 and H200 AI accelerators.

Seattle and its surrounding suburbs have become one of the densest concentrations of the AI industry ecosystem outside of Silicon Valley. Nvidia maintains a significant engineering presence in the region, Amazon’s AWS Skills Center is based nearby, and Microsoft’s Azure silicon and systems groups are spread across Redmond and Bellevue.

HBM is not a plug-and-play component. Memory stacks are co-designed with GPUs and AI accelerators, and performance, power, and reliability targets are refined through repeated cycles of joint validation. Physical proximity should allow faster iteration when issues arise, whether they relate to signal integrity, thermals, or packaging tolerances.

Amazon’s recent launch of its Trainium3 AI accelerator, which integrates 144GB of HBM3E, shows how quickly hyperscalers are increasing their reliance on stacked memory. Microsoft and Google are following similar paths with custom accelerators. Each of those programs depends on close coordination between the silicon designer and the memory supplier; a Seattle-area office gives SK hynix a seat at that table.

Broader U.S. localization

The Bellevue office also fits into a wider U.S. expansion that extends beyond customer support. In 2024, SK hynix announced plans for a $4 billion advanced packaging facility in Indiana, its first manufacturing investment in the United States. That site is intended to handle advanced HBM packaging and testing, with production targeted for 2028. While the Indiana project focuses on manufacturing, it appears the Seattle office will be oriented toward R&D, applications engineering, and customer engagement.

Taken together, these moves suggest SK hynix is deliberately localizing more of its AI-related operations in the U.S., where the bulk of demand is being generated. Advanced packaging has become as important as wafer fabrication for AI accelerators, and the ability to package memory near customers reduces both logistical complexity and geopolitical risk. It also aligns with U.S. industrial policy aimed at securing domestic supply chains for critical technologies.

There has been periodic speculation that SK hynix could eventually build a full DRAM fab in North America, though the company has not committed publicly to such a plan. Even without a fab, expanding engineering and packaging capabilities in the U.S. strengthens SK hynix’s position with American customers at a time when memory supply is extremely tight and long-term capacity planning has become a competitive differentiator.

The race to HBM4

SK hynix’s Seattle expansion also reflects intensifying competition in the HBM market. Samsung remains a formidable rival with deep manufacturing resources and its own U.S. investments, including advanced packaging capacity tied to its Texas operations. Micron, the sole U.S.-based DRAM manufacturer, is pursuing high-end server and automotive markets and sampling its own HBM4 designs, though its near-term capacity expansion is more constrained.

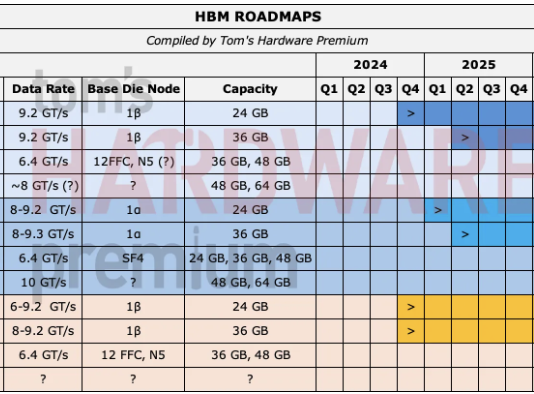

Meanwhile, SK hynix has already completed development of HBM4 and is understood to have delivered samples to Nvidia. Early engagement is particularly important with HBM4 because the transition involves higher stack counts, tighter power budgets, and more complex thermal challenges. Winning those designs early could easily lock in multi-year supply relationships.

Ultimately, with its Seattle expansion, SK hynix is telling Samsung and Micron that it intends to be embedded in the AI ecosystems of its largest customers rather than remain a distant component supplier. Being physically close to Nvidia’s and Amazon’s engineering teams during the transition to HBM4 should improve the company’s odds of maintaining its lead, while also supporting adjacent efforts such as joint work with Nvidia on AI-optimized SSDs.

Luke James is a freelance writer and journalist. Although his background is in law, he has a personal interest in all things tech, especially hardware and microelectronics, and anything regulatory.