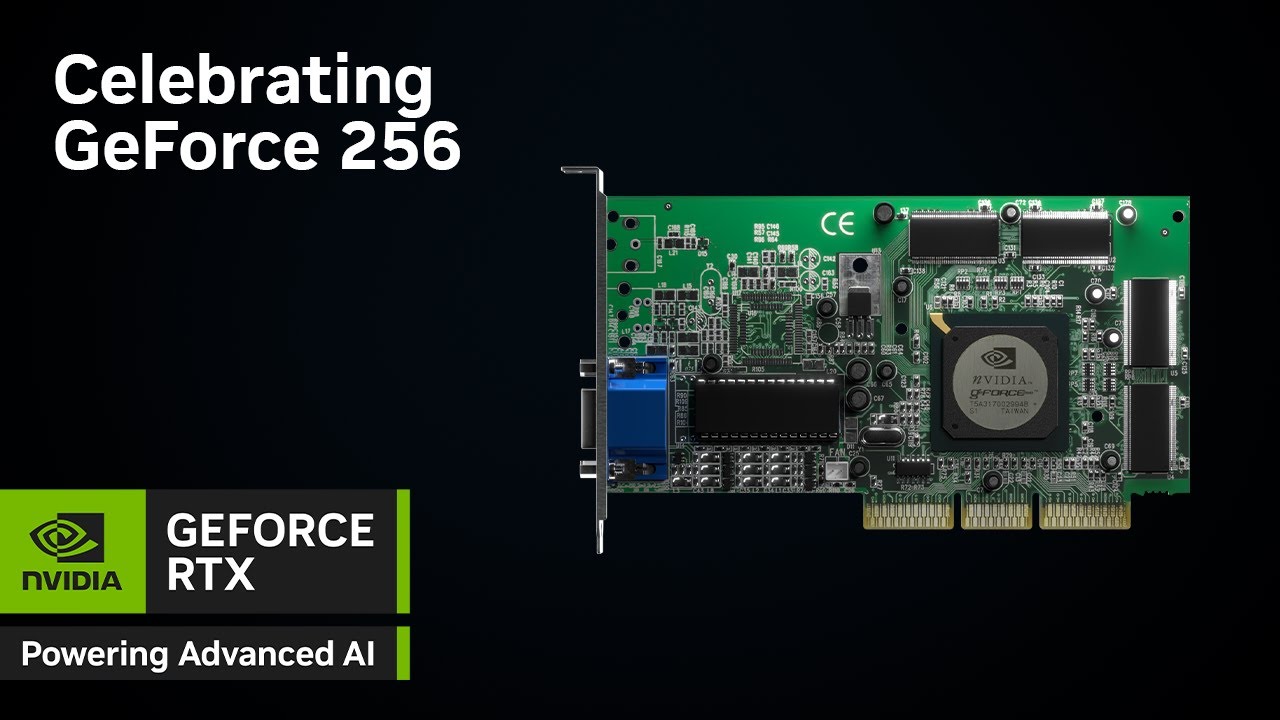

Nvidia GeForce 256 celebrates its 25th birthday — company talks about a quarter century of GPU progression

Nvidia has a blog, YouTube videos, and green popcorn to celebrate the anniversary.

25 years ago today, Nvidia released its first-ever GeForce GPU, the Nvidia GeForce 256. Other video cards already existed at the time, but this was the first desktop card to be advertised as a "GPU," or graphics processing unit. The label didn't fundamentally change what video cards did (the chip did add a few new DirectX 7 features), but the marketing move certainly caught on. Back then, a lot of different companies made video card hardware, but many have since been bought out (3dfx by Nvidia, ATI by AMD) or have left the consumer graphics business altogether (R.I.P. S3, Matrox, Number Nine, Rendition, Trident...). Now, Nvidia is celebrating the GeForce anniversary with several tie-ins, including a promotional YouTube video, a blog post, and even Nvidia-green popcorn.

What makes this particular chip so historic, besides being the first GeForce graphics card? It wasn't the first graphics card. Rather, Nvidia drew a distinction by calling its first GeForce chip a GPU. The term referred to its single-chip design, which integrated transform, lighting, triangle setup, and rendering on one graphics processor. It was as much a marketing choice as anything, calling attention to the fact that the chip was like a CPU (central processing unit), only focused on graphics.

The GeForce 256 was one of the first video cards to support the then-nascent hardware transform and lighting (T&L) technology, a new addition to Microsoft's DirectX API. Hardware T&L reduced the amount of work the CPU had to do for games that used the feature, providing both a visual upgrade and better performance. Modern GPUs have all built on that legacy, going from a fixed-function T&L engine to programmable vertex and pixel shaders, to unified shaders, and now on to features like mesh shaders, ray tracing, and AI calculations. All modern graphics cards owe a lot to the original GeForce, though that's not really why consumers fondly remember the GeForce 256.

Nvidia now presents the GeForce 256 as the first step on the long road toward AI PCs. It claims that the release "[set] the stage for advancements in both gaming and computing," though headers like "From Gaming to AI: The GPU's Next Frontier" are sure to raise some eyebrows. The ever-rising prices and decreasing availability of Nvidia GPUs, particularly at the high end, reflect a major shift over time in Nvidia's target audience. Not all fans appreciate that shift, especially compared with the original GeForce 256. That card launched at just $199 in 1999, which works out to only around $373 today after adjusting for inflation.

But Nvidia has also pushed the frontiers of graphics over the past 25 years. The original GeForce 256 used a 139 mm² chip packing 17 million transistors, fabricated on TSMC's 220nm process node. A contemporary 1999 CPU like the Pentium III 450 used Intel's 250nm node and packed 9.5 million transistors into a 128 mm² chip, with a launch price of $496. Yikes! For those bad at math, the GeForce 256 was less than 10% larger than that CPU and had roughly 80% more transistors, yet cost less than half as much.
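The 1999 comparison is easy to check with a few lines of Python. This minimal sketch plugs in only the die sizes, transistor counts, and prices quoted in this article. The dictionary layout and the `compare` helper are illustrative names and do not come from Nvidia or Intel.

```python
# Figures as quoted in the article for the 1999 comparison.
geforce_256 = {"die_mm2": 139, "transistors_m": 17.0, "price_usd": 199}
pentium_iii_450 = {"die_mm2": 128, "transistors_m": 9.5, "price_usd": 496}


def compare(gpu: dict, cpu: dict) -> tuple[float, float, float]:
    """Return (% larger die, % more transistors, price ratio) of gpu vs. cpu."""
    die_pct = (gpu["die_mm2"] / cpu["die_mm2"] - 1) * 100
    xtor_pct = (gpu["transistors_m"] / cpu["transistors_m"] - 1) * 100
    price_ratio = gpu["price_usd"] / cpu["price_usd"]
    return die_pct, xtor_pct, price_ratio


die_pct, xtor_pct, price_ratio = compare(geforce_256, pentium_iii_450)
print(f"Die: {die_pct:.1f}% larger")            # Die: 8.6% larger
print(f"Transistors: {xtor_pct:.1f}% more")     # Transistors: 78.9% more
print(f"Price: {price_ratio:.2f}x the CPU's")   # Price: 0.40x the CPU's
```

The transistor gap works out to just under 79%, which rounds to the "roughly 80%" figure.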

Today? A high-end CPU can still be found for far less than $500: Intel's Core i7-14700K costs $354, for example. It has a die size of 257 mm² and around 12 billion transistors, made using the Intel 7 process. Nvidia's RTX 4090, meanwhile, packs an AD102 chip with 76 billion transistors in a 608 mm² die, manufactured on TSMC's 4N node. And it currently starts at around $1,800. My, how things have changed in 25 years!
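Running the same arithmetic on today's pair shows how far the relationship has flipped. This is another minimal sketch that uses only the figures quoted here, and the 14700K transistor count is the article's own approximation. The `ratio` helper is a hypothetical name used for illustration.

```python
# Figures as quoted in the article; the i7-14700K transistor count is approximate.
rtx_4090 = {"die_mm2": 608, "transistors_bn": 76.0, "price_usd": 1800}
core_i7_14700k = {"die_mm2": 257, "transistors_bn": 12.0, "price_usd": 354}


def ratio(key: str) -> float:
    """GPU-to-CPU ratio for a single metric."""
    return rtx_4090[key] / core_i7_14700k[key]


die_x = ratio("die_mm2")                 # about 2.37x the die area
transistor_x = ratio("transistors_bn")   # about 6.33x the transistors
price_x = ratio("price_usd")             # about 5.08x the price

print(f"Die {die_x:.2f}x, transistors {transistor_x:.2f}x, price {price_x:.2f}x")
# Die 2.37x, transistors 6.33x, price 5.08x
```

In 1999, the GPU cost about 0.4x as much as the CPU it was compared against ($199 vs. $496). With these figures, the RTX 4090 costs roughly 5x as much as the Core i7-14700K.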

The GeForce 256 marked the beginning of the GPU's rise, and of graphics cards in general, as the most important part of a gaming PC. It had full DirectX 7 and OpenGL support, and graphics demos like Dagoth Moor showed the potential of the hardware. Once DirectX 8 added programmable shaders, the GPU truly began to look like a different kind of compute engine. Today, GPUs offer up to petaflops of computational performance, and that performance is powering the current AI boom.


Christopher Harper has been a successful freelance tech writer specializing in PC hardware and gaming since 2015, and ghostwrote for various B2B clients in high school before that. Outside of work, Christopher is best known to friends and rivals as an active competitive player in various eSports (particularly fighting games and arena shooters) and a purveyor of music ranging from Jimi Hendrix to Killer Mike to the Sonic Adventure 2 soundtrack.


bourgeoisdude My first graphics card (that wasn't S3 in a pre-built PC) was the ATI All-In-Wonder Pro 128, which competed with the GeForce 256. It was my brother's and my first PC build, using a 1.2GHz AMD Thunderbird CPU in an MSI mobo. We picked that card for its connectivity, having no idea about graphics speeds or anything. It had 32MB of VRAM, so how could it be any worse than the more expensive GeForce with the same memory, right? :)

Of course, now I know the GeForce was quite a bit better at the time, and I've learned a bit more about GPUs.

Kamen Rider Blade If the GeForce 256 were an actual person, it would be old enough to rent a car!

DS426 My first graphics card wasn't a GPU: it was an ATI Radeon 7000 64MB SDR PCI. I finally got a "real GPU" years later, a Radeon 9600. I think a GeForce 6600 GT (actually twins for SLI) came after that, and so on. Back then, upgrades were huge gen-on-gen and only took 12-18 months. Yes, top-of-the-line cards were much more affordable back then than they have been in recent years, but cards have also grown in die size and shader count (using that term generically), and therefore in PCB and cooler size. Single-slot was the standard for some time, then 1.5-slot came, then dual-slot was wild, and so on. I'm sure AMD and nVidia have much larger development headcounts today.

Those were good days, back when nVidia and ATI were trading blows.

epobirs That brings back memories. Instead of the huge press events Nvidia holds today, this launch was tucked into a little room at the Intel Developer Forum in Palm Springs, CA. There were perhaps three dozen people in the room. They served cake, and Jensen went into his presentation about why this wasn't just a new graphics card. It was quite engaging, and yet I had no idea this was an inflection point for the field. They gave us long-sleeve jerseys with a slogan about "today the world has changed..." I still have mine stored away in a bin somewhere in the house.

First build was a 1.53GHz Athlon XP 1800+ with a GeForce3 Ti 200 in 2001. Upgraded to the GeForce4 Ti 4600 the following year.

Still have both the Chameleon demo and the Wolfman demo on my current build for nostalgia.

jamieharding71 My first Nvidia GPU was a Creative Labs 256 Blaster, or something similar. Before that, I had cards from ATI, Matrox, S3 (a ViRGE), and 3dfx. I loved the competition back then, but even more, I enjoyed going to my local hardware store and checking out the graphics card boxes.

My first GeForce was a 6800, back in 2005. I was a university student at the time, and I bought it for the sole purpose of maxing out the graphics in Splinter Cell: Chaos Theory. Those were the days, man!


snapdragon-x My first Nvidia card was the RIVA 128, replacing the ATI 3D RAGE with its buggy OpenGL driver. I was planning to replace it with the Rendition Vérité V1000, but the actual cards had too many months of delays. The RIVA 128 was pretty good and could actually run Softimage|3D.