ASRock Phantom Gaming X Radeon RX580 8G OC Review: A Solid Rookie Effort


Power Consumption

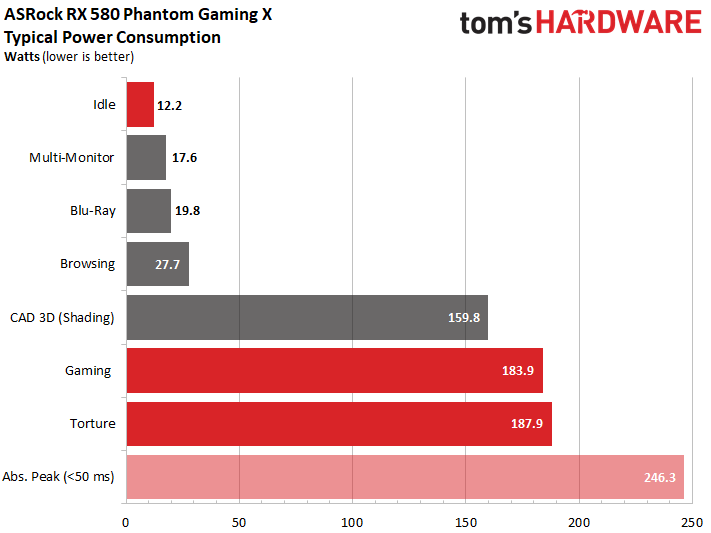

Our exploration of power consumption begins with a look at the card's draw across different tasks. Consumption has improved significantly since AMD launched its Radeon RX 580, particularly in our multi-monitor and hardware-accelerated video playback workloads. Refined drivers are no doubt responsible.

Even at idle, ASRock's card impresses with a mere 12.2W of power consumption.

But we also see that the higher clock rate of ASRock's Radeon RX 580 imposes elevated power use under load. Our gaming benchmark averages roughly 184W, while the stress test pushes 188W. This is naturally a consequence of the increased voltage needed to sustain more aggressive frequencies.
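Why voltage matters so much can be illustrated with the standard first-order CMOS switching-power model, P ≈ αCV²f, where α is the activity factor and C the switched capacitance. The sketch below assumes that model only: it ignores leakage, and the 5% ratios in it are hypothetical, not ASRock's or AMD's actual voltage and clock settings.

```python
def relative_dynamic_power(voltage_ratio: float, frequency_ratio: float) -> float:
    """Return P_new / P_old under the first-order model P ~ alpha * C * V^2 * f.

    Assumes the activity factor (alpha) and switched capacitance (C) stay
    constant and ignores leakage current, so this is only a rough estimate.
    """
    if voltage_ratio <= 0 or frequency_ratio <= 0:
        raise ValueError("ratios must be positive")
    # Dynamic power scales with the square of voltage and linearly with frequency.
    return voltage_ratio ** 2 * frequency_ratio


if __name__ == "__main__":
    # Hypothetical example: 5% more voltage paired with 5% more frequency.
    ratio = relative_dynamic_power(1.05, 1.05)
    print(f"Estimated dynamic power change: {ratio - 1:+.1%}")
```

Under those hypothetical bumps, the model predicts roughly 16% more dynamic power, with the squared voltage term contributing more than the frequency term.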

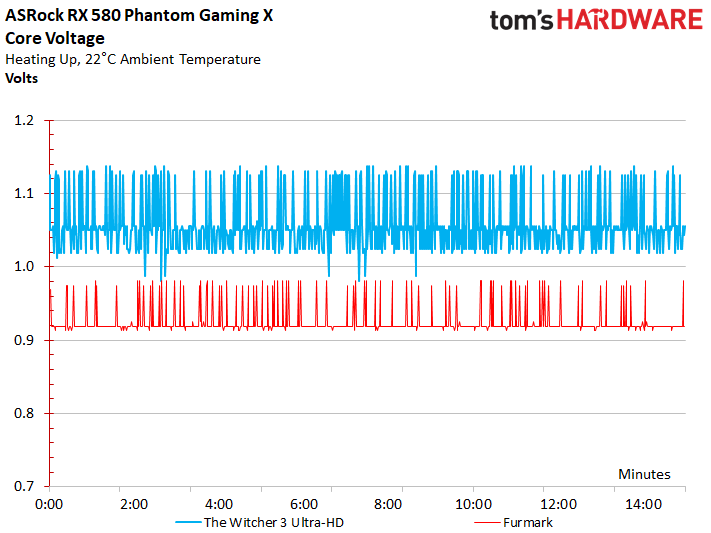

At a maximum of 1.14V and an average of about 1.06V, there's nothing alarming to report. ASRock goes the conservative route with its Phantom Gaming X Radeon RX580 8G OC. We think enthusiasts will favor that approach over flogging the mainstream GPU with more voltage, heat, and, ultimately, noise. The only drawback is a lightweight cooling solution, which won't allow the GPU to maintain its peak clock rate over time.

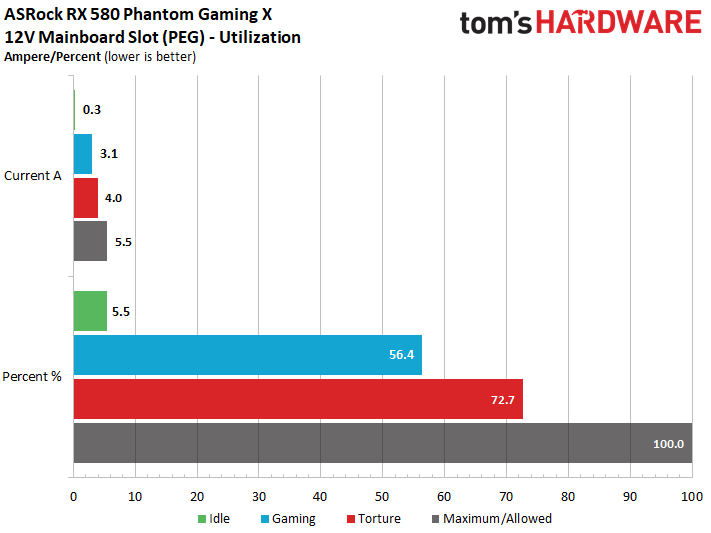

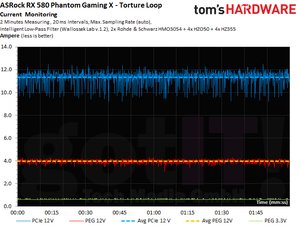

Load On The Motherboard Slot

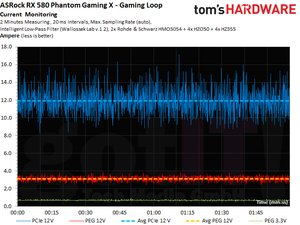

At a peak of 4A during our stress test, ASRock's Phantom Gaming X Radeon RX580 8G OC falls well under the 5.5A ceiling defined by the PCI-SIG for a motherboard's 12V rail. A mere 3.1A during the gaming loop is even more conservative.

If we convert those loads into a percentage utilization of current available on the PCIe slot, it becomes even clearer that ASRock's implementation complies with the specification we've seen other AMD cards run afoul of.
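The conversion is simple arithmetic on the two current readings. This minimal sketch divides each by the 5.5A ceiling and also estimates the corresponding slot power with P = V × I, assuming a nominal 12.0V rail (real rail voltage drifts slightly under load).

```python
PCIE_SLOT_12V_LIMIT_A = 5.5  # PCI-SIG ceiling for the slot's 12V rail, as cited in the text
NOMINAL_RAIL_V = 12.0        # assumption: nominal rail voltage, not a measured value


def slot_utilization(current_a: float, limit_a: float = PCIE_SLOT_12V_LIMIT_A) -> float:
    """Return measured 12V slot current as a percentage of the allowed maximum."""
    return 100.0 * current_a / limit_a


def slot_power_w(current_a: float, voltage_v: float = NOMINAL_RAIL_V) -> float:
    """Approximate power delivered through the slot's 12V rail (P = V * I)."""
    return voltage_v * current_a


if __name__ == "__main__":
    # Current figures reported in this review.
    for label, amps in (("Stress test peak", 4.0), ("Gaming loop", 3.1)):
        print(
            f"{label}: {amps:.1f} A = {slot_utilization(amps):.1f}% of limit, "
            f"~{slot_power_w(amps):.0f} W"
        )
```

Those figures work out to about 73% and 56% of the 5.5A ceiling, or roughly 48W and 37W at a nominal 12V.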

Power Consumption In Detail

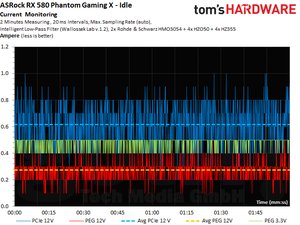

At idle, where the card registers a mere 12W, the corresponding graph of current over time stays under 1A on every rail.
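Graphs like this plot current per rail; total board power at any instant is the sum of voltage times current across every rail the card draws from. A minimal sketch of that bookkeeping, assuming simultaneous voltage and current readings per rail; the rail names and sample values here are hypothetical placeholders, not readings from our logs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RailReading:
    name: str         # hypothetical rail label, e.g. "PCIe slot 12V"
    voltage_v: float  # measured rail voltage
    current_a: float  # measured current at the same instant


def total_power_w(readings: list[RailReading]) -> float:
    """Sum P = V * I over every rail sampled at one point in time."""
    return sum(r.voltage_v * r.current_a for r in readings)


if __name__ == "__main__":
    # Hypothetical idle-like snapshot in which every rail stays under 1 A.
    snapshot = [
        RailReading("PCIe slot 12V", 12.0, 0.5),
        RailReading("PCIe slot 3.3V", 3.3, 0.2),
        RailReading("Auxiliary PCIe 12V", 12.0, 0.4),
    ]
    print(f"Total: {total_power_w(snapshot):.1f} W")
```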


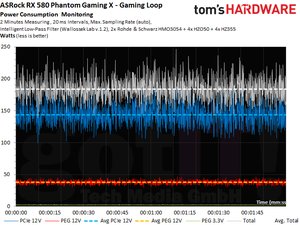

Our gaming loop shows how AMD's PowerTune technology keeps a lid on consumption. Although many of those spikes sit well above the average power use we're reporting, a good power supply should have no trouble handling them.

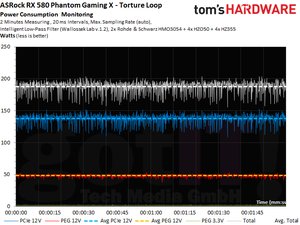

It may seem counterintuitive, but the stress test reflects much smaller peaks and valleys. Its load is more consistent, and PowerTune has less room for optimization. In short, the card is pegged against its upper limit.
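The difference between the two traces comes down to spread around the mean. One way to quantify it is to compare each log's average, peak, peak-to-average ratio, and standard deviation. This sketch assumes power samples taken at a fixed interval; the two short traces are synthetic stand-ins that mimic a spiky and a flat load with the same mean, not data from this card.

```python
import statistics


def summarize_trace(samples_w: list[float]) -> dict[str, float]:
    """Summarize a power log sampled at a fixed interval (values in watts)."""
    if not samples_w:
        raise ValueError("need at least one sample")
    mean = statistics.fmean(samples_w)
    peak = max(samples_w)
    return {
        "mean_w": mean,
        "peak_w": peak,
        "peak_to_average": peak / mean,
        # Population standard deviation: how far samples swing around the mean.
        "stdev_w": statistics.pstdev(samples_w),
    }


if __name__ == "__main__":
    # Synthetic traces with identical means: one spiky, one pegged near a limit.
    spiky = [150.0, 230.0, 160.0, 220.0, 140.0, 200.0]
    flat = [183.0, 184.0, 183.0, 184.0, 183.0, 183.0]
    for name, trace in (("spiky", spiky), ("flat", flat)):
        s = summarize_trace(trace)
        print(
            f"{name}: mean {s['mean_w']:.0f} W, peak {s['peak_w']:.0f} W, "
            f"peak/avg {s['peak_to_average']:.2f}, stdev {s['stdev_w']:.1f} W"
        )
```

Both traces report the same average, but only the spiky one shows large excursions, which is why an average figure alone says little about the transient peaks a power supply must absorb.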


Igor Wallossek wrote a wide variety of hardware articles for Tom's Hardware, with a strong focus on technical analysis and in-depth reviews. His contributions have spanned a broad spectrum of PC components, including GPUs, CPUs, workstations, and PC builds. His insightful articles provide readers with detailed knowledge to make informed decisions in the ever-evolving tech landscape.


Ulikedat: At least it's a looker, and the competition just got a bit more heated (no pun intended).

alextheblue: Since ASRock was aiming for a cut-down budget design, I have to wonder: why not an RX570? An entry-level RX580 with halfway decent cooling can be had new for $270. The cheapest 570 I've seen is a reference model for $250. Push a budget aftermarket RX570 closer to $200 and undercut everyone else. Clocks and TDP would be lower (especially if they stuck close to reference), which would further reduce board and cooler costs.

Or perhaps yields are so good at this point that there just aren't many cut-down Ellesmere chips getting pushed out the door?

BulkZerker: @alextheblue They seem to be pushing bang for the buck at every point. It makes me wonder whether some "cheap" thermal paste (like AS5) would help. An aftermarket heatsink would certainly help the temps.

TJ Hooker: If this card is like most 580s, it will respond quite well to lowering the core voltage. With some undervolting (and maybe a slight underclock), you could likely improve power, thermals and noise noticeably with no (or little) impact on performance.

AnimeMania: What's going on with the GTX 1060 3GB numbers in certain games like Hitman and DOOM? They can't really be that bad, can they?

Sleepy_Hollowed: This is quite a nice card, especially for those looking to switch to FreeSync and the open drivers that AMD provides (for accelerating stuff like data compression or video encoding).

Like all cards, I just wish it were available. The crypto craziness is on the downslope for now, but you never know when cards are going to disappear from the shelves for months.

Olle P:

21064926 said: I have to wonder, since aiming for a cut-down budget design, why not an RX570? ...

Around here there's almost no price difference between a 570 (4GB) and 580 (8GB). The latter is significantly better at the all-important 1080p, so that's where to make profit.

21065443 said: If this card is like most 580s, it will respond quite well to lowering the core voltage. ...

One can only hope. The 180W drawn is a bit steep, IMO.

21065545 said: What's going on with the numbers for GTX 1060 3GB with certain games like Hitman and DOOM, they can't really be that bad, can they?

Seems off topic... The 3GB is a cut-down version of the 6GB, with fewer ROPs and less memory bandwidth. 3GB VRAM is also insufficient to run newer games efficiently.

AnimeMania:

21066401 said: Seems off topic... The 3GB is a cut down version of the 6GB, with fewer ROPs and less memory bandwidth. 3GB VRAM is also insufficient to run newer games efficiently.

According to another review on Tom's Hardware, the GTX 1060 3GB achieved 68.1 FPS in Hitman at Ultra settings:

https://www.tomshardware.com/reviews/nvidia-geforce-gtx-1060-graphics-card-roundup,4724-2.html

The GTX 1060 3GB in this article achieved 21.2 FPS in Hitman at Very High settings. I don't think it's off topic to question the reliability of the benchmarking process used here. I was just wondering why the results seem to fluctuate so wildly. One value is more than three times the other.

alextheblue:

21066401 said: Around here there's almost no price difference between a 570 (4GB) and 580 (8GB). The latter is significantly better at the all important 1080p so that's where to make profit.

That was my point. There's less competition for cheap RX 570s. Their 580 design cuts down on costs across the board (pun intended). They could cut down even further with the lower-TDP (and presumably cheaper) RX 570 and undercut the entire field substantially. An RX 570 at ~$200 would be enticing for budget builds.

When they first came out, there was often a substantial price difference between full Ellesmere and cut-down Ellesmere. That's why I'm speculating that there just isn't enough supply of 570 chips to make this possible.