System Builder Marathon, Sept. 2011: System Value Compared

Power And Heat

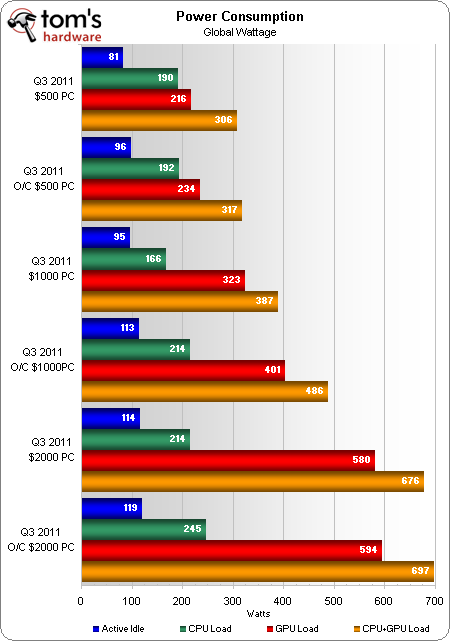

The GeForce GTX 580 GPU consumes so much energy that Nvidia implemented a feature that throttles the GPU in unrealistic workloads like FurMark. You might have heard that AMD did the same thing with its Radeon HD 6970, but this editor’s tests have shown that AMD's throttling can be worked around as long as the card doesn't run into a thermal issue.

For the first time, we're having to use 3DMark to measure power use on our $2000 machine rather than FurMark. With so much going on with the GeForce GTX 580’s voltage regulator, the peak power numbers from the other machines are more reliable.

A pair of GeForce GTX 580s consumes monster power, even at this questionably lighter load. Idle power is part of the mix, however, since we believe most users don't use 100% of their system’s performance every possible minute of its operation.
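To illustrate how idle power can be folded into a single figure, here is a minimal sketch that blends idle and load readings into one average. The function name `average_power`, the equal default weighting, and the wattages are all assumptions made for illustration; they are not this article's measurements or necessarily the exact formula used for the charts.

```python
def average_power(idle_watts: float, load_watts: float,
                  load_fraction: float = 0.5) -> float:
    """Weighted mean of idle and load power draw, in watts.

    load_fraction is the assumed share of time spent at full load;
    the remainder is treated as idle time.
    """
    if not 0.0 <= load_fraction <= 1.0:
        raise ValueError("load_fraction must be between 0 and 1")
    return load_fraction * load_watts + (1.0 - load_fraction) * idle_watts


# Hypothetical readings (not taken from the charts on this page).
print(average_power(100.0, 500.0))        # equal time at idle and load
print(average_power(100.0, 500.0, 0.25))  # a machine that mostly idles
```

Lowering `load_fraction` pulls the result toward the idle reading, which is why a system with a very high peak draw can still look reasonable for users who spend most of their time on the desktop.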

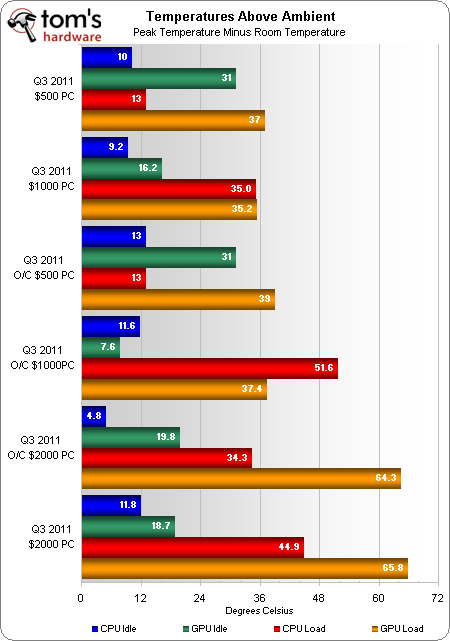

Anyone questioning the $2000 PC’s use of a mediocre CPU cooler as a potential reason for its poor overclock can stop here, as we’ve reached far higher overclocks with Sandy Bridge-based processors running over 30° warmer.

The $1000 machine’s CPU temperature goes up significantly with overclocking due to the added stress on its small cooler, while the $2000 PC gets cooler when overclocked because its smart fan function was disabled. The overclocked $500 machine's temperature results are less surprising for those who remember that it was overclocked without a voltage increase.



revjacob Actually what we need now are more affordable 2560x1600 monitors for these enthusiast PCs.

compton I think the next quarter SBM should utilize an SSD at all segments. It's just about time when no one should seriously think of not including an SSD in a build. There are great values out there and even the budget system deserves some love. If a small increase in price segments is necessary, so be it. Going from a HDD to an SSD is like going from IGP to discrete class graphics.

Also, as a result, more emphasis should be placed on the storage subsystem. I know these are gaming configurations, but I'd give up my GPU in a nanosecond if it meant I could keep my SSDs. Fortunately, I don't have to choose, but I would if I had to, and I'm not alone out there. Budget systems don't feel so budget-y with even a modest SSD.

chumly Maybe the value of the $1000 PC would go up if you weren't wasting money on unnecessary or poorly chosen parts. You could add another 4 GB of ram, and swap out the twin stuttering 460's for 6870's (and still have enough money to add a better, modular PSU).

Here:

http://i.imgur.com/g22Bq.jpg

jprahman (quoting compton): "I think the next quarter SBM should utilize an SSD at all segments. It's just about time when no one should seriously think of not including an SSD in a build."

Yeah, good luck fitting an SSD into a $500 gaming build.


Kamab (quoting jprahman): "Yeah, good luck fitting an SSD into a $500 gaming build."

There have been 64GB Vertex Crucial drives on sale for < 79$. Which isn't bad.

compton (quoting jprahman): "Yeah, good luck fitting an SSD into a $500 gaming build."

That's why I think the $500 system should be closer to $600, maybe like $550. 30GB Agility drives were going for $40 yesterday at the Egg, so it's not like you have to spend $300 to get a tangible benefit. That one addition would have contributed a significant performance benefit and the budget category used to be $650 anyway.

nd22 I would have stuck to 1 gpu in the $1000 build. Instead of 2 gf 460/radeon 6850 I would have used 1 radeon 6970/geforce gtx570 - from personal experience 1 gpu = less problems!

mayankleoboy1 i think quicksync should be included in the final score as video conversion is something that everyone of us do. and if we buy a SB cpu, then we would surely use quicksync.

maybe also include windows boot time.