Gigabyte GeForce GTX 950 Xtreme Gaming Review

Nvidia's GeForce GTX 950 offers great value, performing nearly as well as the 960 for less money. Can Gigabyte's aggressively overclocked GeForce GTX 950 Xtreme Gaming outperform the more expensive GPU?


Overclocking, Noise, Temperature And Power

When it comes to overclocking, nothing beyond the factory frequencies is guaranteed. Picking a card like Gigabyte's GeForce GTX 950 Xtreme Gaming, which sports a cherry-picked GPU, certainly helps. But it still comes down to the silicon lottery.

OC Guru II

My go-to utility for overclocking graphics cards is MSI's Afterburner, which was designed to support almost any GPU. But when companies go through the effort of creating their own tools, I like to give them a shot.

Gigabyte provides OC Guru II with its GeForce GTX 950 Xtreme Gaming card. This software lets you monitor temperatures, clock rates, voltage levels and fan speeds. OC Guru II also enables GPU clock, memory clock, core voltage and fan speed adjustments. Plus, it gives you control over the Windforce logo's LEDs.

Much like Afterburner, OC Guru II lets you adjust the GPU and memory frequencies in granular steps. Every click on the arrow buttons moves the clock rate by 1MHz, and voltage adjustments are applied in 0.0125V increments.

Unlike in most GPU overclocking tools, the temperature and power targets are not linked by default. You can raise the power target by up to eight percent and the temperature target by 15 degrees Celsius, to a ceiling of 95 degrees. Either option can be maxed out without affecting the other. OC Guru II can also be configured to prioritize the GPU temperature over the power limit.

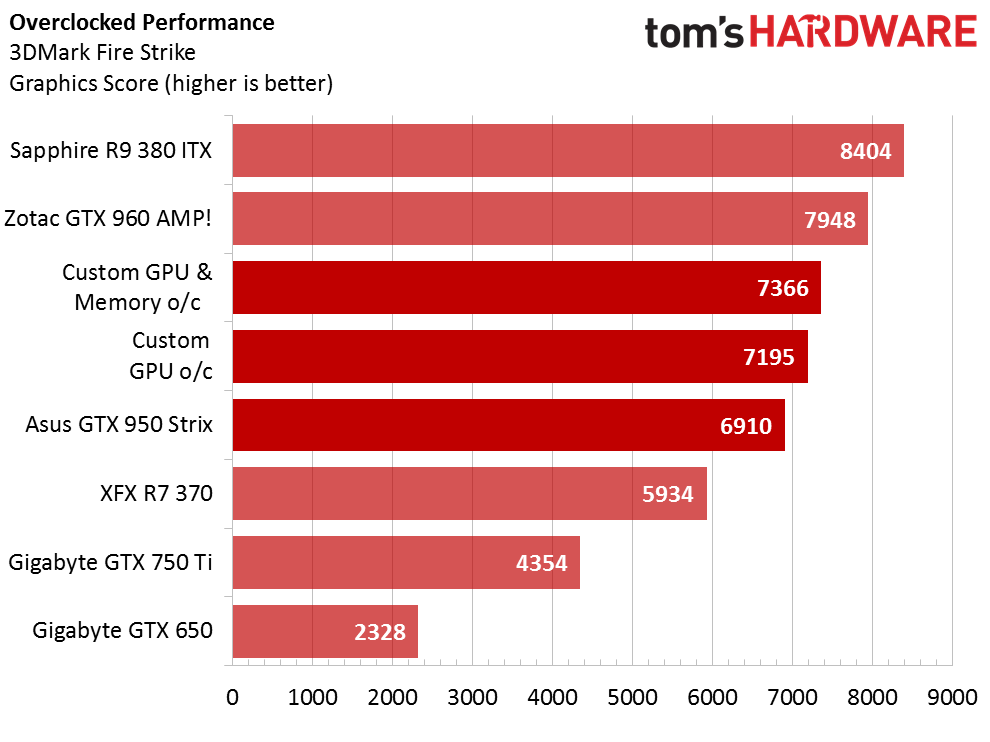

Overclocking

To overclock Gigabyte's GeForce GTX 950 Xtreme Gaming, we first adjusted the power limit. We didn't bother with the temperature limit because the GPU wasn't even approaching 80 degrees under extreme load. From there, we played with the core clock in 5MHz increments until the card crashed, after which point we used single-MHz tweaks. Ultimately, we saw stability at 1273MHz, or 70MHz higher than Gigabyte's shipping frequency. Increasing the voltage a few notches proved to be no help in achieving higher clock speeds.
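The coarse-then-fine approach described above can be sketched in a few lines of Python. This is only an illustration of the search pattern, not a tool we used: the `is_stable` callback is a hypothetical stand-in for manually running a stress test at a given core clock offset, and the 300MHz ceiling is an arbitrary safety cap we made up for the example. The shipping clock is simply inferred from the figures above (1273MHz minus 70MHz).

```python
from typing import Callable


def find_max_stable_offset(
    is_stable: Callable[[int], bool],  # hypothetical: True if the card survives a stress run
    coarse_step: int = 5,              # MHz per step in the first pass
    fine_step: int = 1,                # MHz per step after the first failure
    max_offset: int = 300,             # assumed safety cap, not a figure from the review
) -> int:
    """Return the largest core clock offset (in MHz) that passed is_stable."""
    best = 0
    offset = coarse_step

    # Coarse pass: raise the clock in 5MHz steps until a step fails.
    while offset <= max_offset and is_stable(offset):
        best = offset
        offset += coarse_step

    # Fine pass: probe single-MHz steps between the last good offset
    # and the coarse step that failed (or the cap, if nothing failed).
    upper = min(offset, max_offset + 1)
    probe = best + fine_step
    while probe < upper and is_stable(probe):
        best = probe
        probe += fine_step

    return best


if __name__ == "__main__":
    SHIPPING_CLOCK_MHZ = 1273 - 70  # inferred from the numbers in the text
    simulated_limit = 70            # pretend this sample fails above +70MHz
    offset = find_max_stable_offset(lambda mhz: mhz <= simulated_limit)
    print(f"+{offset}MHz -> {SHIPPING_CLOCK_MHZ + offset}MHz")  # +70MHz -> 1273MHz
```

Real stability is not a clean pass/fail threshold, so in practice each probe would be a lengthy stress run, and a result that passes once may still deserve a longer soak test.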

Gigabyte includes the same memory found in Nvidia's GeForce GTX 960 specification. With the GDDR5 already running at its peak rate, overclocking was bound to be a struggle. In the end, we achieved an additional 20MHz.


The overclocked settings were stable across our tests, with one oddity in Shadow of Mordor. For some reason, that title penalizes our tuned configuration with lower performance than the factory setup. There were no crashes or artifacts, just a lower frame rate.

Noise, Power and Temperature

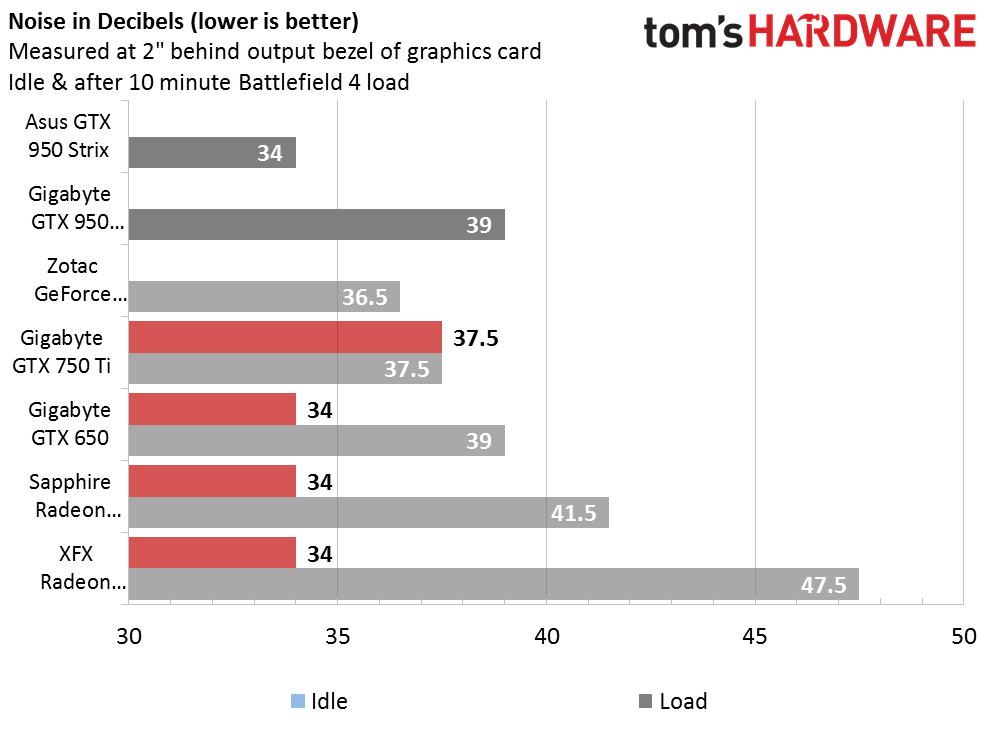

Noise

Gigabyte's GTX 950 may have outperformed Asus' card in the gaming tests, but if you want the quietest card available, Gigabyte falls short. A reading of 39 decibels is certainly not loud, and you probably won't notice it. However, the measurement is still higher than that of Asus' card.

Interestingly, before the driver was installed, the card's fans were spinning particularly quickly, causing a slight "tinging" sound. There were no indications of a loose component or bad bearing, so I suspect it was caused by vibrations from wind turbulence. During normal operation, this behavior was not observed.

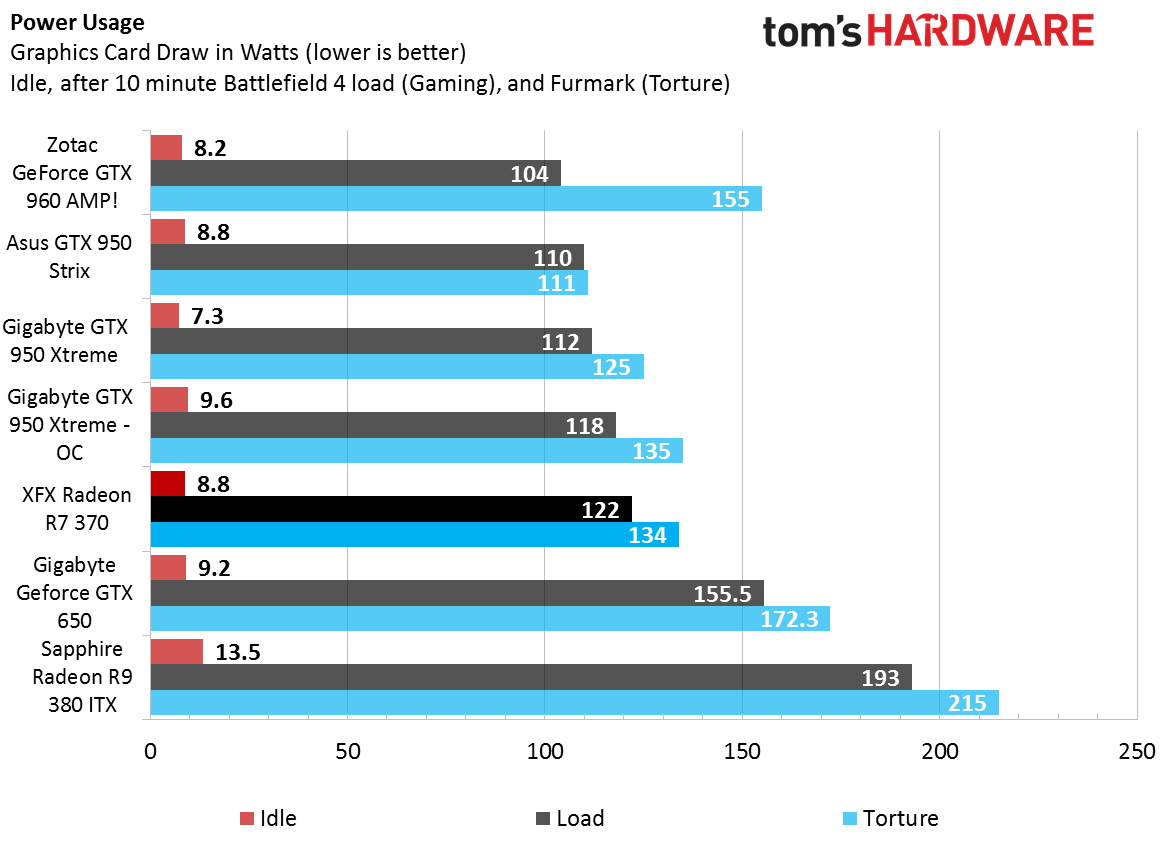

Power

The power consumption numbers were a little surprising. The increase from overclocking was expected, but seeing the lowest idle consumption figure at stock speeds was not. Under load, Gigabyte's card uses quite a bit of power. At idle, though, it registers 1.5W less than Asus' GTX 950 Strix.

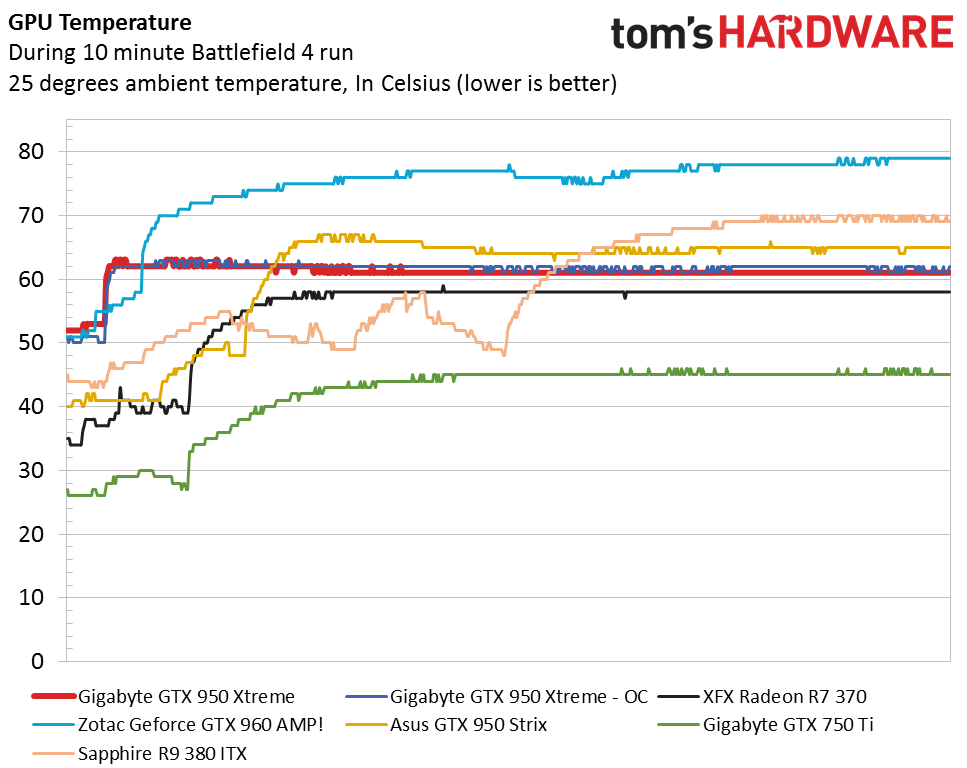

Temperature

Gigabyte's Windforce cooling solution does a great job keeping the GPU temperature under control. During a 10-minute run of Battlefield 4, the processor barely crept up over the 60-degree mark. Curiously, the GPU on Gigabyte's card heated up rapidly and then stabilized once the fan kicked on. In contrast, the GTX 950 Strix took a couple of minutes to heat up, after which it maintained a higher peak temperature.

Even after we applied our overclocked settings, the GeForce GTX 950 Xtreme Gaming managed to hold essentially the same temperature pattern through the test.



Kevin Carbotte is a contributing writer for Tom's Hardware who primarily covers VR and AR hardware. He has been writing for us for more than four years.

-

chaosmassive for future benchmark, please set to 1366x768 instead of 720p as bare minimum

because 720p panel pretty rare nowadays, game with resolution 720p scaled up for bigger screen, its really blur or small (no scaled up)

-

kcarbotte

chaosmassive said: for future benchmark, please set to 1366x768 instead of 720p as bare minimum

because 720p panel pretty rare nowadays, game with resolution 720p scaled up for bigger screen, its really blur or small (no scaled up)

All of the tests were done at 1366x768.

Where do you see 720p?

-

rush21hit I have been comparing test result for 950 from many sites now and that leaves me to a solid decision; GTX 750Ti. I'm having the aging HD6670 right now.

Even the bare bone version still needed 6pin power and still rated 90Watt, let alone the overbuilt. As someone who uses a mere Seasonic's 350Watt PSU, I find the 950 a hard sell for me. Add in CPU OC factor and my 3 HDD, I believe my PSU is constrained enough and only have a little bit more headroom to give for GPU.

If only it doesn't require any additional power pin and a bit lower TDP.

Welp, that's it. Ordering the 750Ti now...whoa! it's $100 now? yaayyy

-

ozicom I decided to buy a 750Ti past but my needs have changed. I'm not a gamer but i want to buy a 40" UHD TV and use it as screen but when i dig about this i saw that i have to use a graphics card with HDMI 2.0 or i have to buy a TV with DP port which is very rare. So this need took me to search for a budget GTX 950 - actually i'm not an Nvidia fan but AMD think to add HDMI 2.0 to it's products in 2016. When we move from CRT to LCD TV's most of the new gen LCD TV had DVI port but now they create different ports which can't be converted and it makes us think again and again to decide what to buy.

-

InvalidError

17165047 said: now they create different ports which can't be converted

There are adapters between HDMI, DP and DVI. HDMI to/from DVI is just a passive dongle either way.

-

Larry Litmanen Obviously these companies know their buying base far better than i do, but to me the appeal of 750TI was that you did not need to upgrade your PSU. So if you have a regular HP or Dell you can upgrade and game better.

I guess these companies feel like most people who buy a dedicated GPU probably have a good PSU.

-

TechyInAZ Looks great! Right off the bat it was my favorite GTX 950 card since Gigabyte put some excellent aesthetics into the card, but I will still go with EVGA.

-

matthoward85 Anyone know what the SLI equivalent would be comparable to? greater or less than a gtx 980?