Tom's Hardware Verdict

Intel's Second Generation Xeon Scalable processors build on the success of its first-gen models and add support for the innovative Optane DC Persistent Memory DIMMs. The line-up does offer significant price-to-performance improvements, though less expensive processors from AMD are worth considering for some applications.

Pros

- Strong performance in a broad range of workloads
- Higher Turbo Boost frequencies than previous gen
- Higher memory throughput than previous gen

Cons

- High power consumption
- Over-segmented CPU portfolio
- High price compared to the competition


Second Generation Intel Xeon Scalable

The Cascade Lake-based Xeons, officially referred to as Second Generation Xeon Scalable processors, arrive at a critical time for the company. They promise more cores and more performance than many of the first-generation mainstream models at similar price points.

Intel's Xeon powers an estimated 96% of the world's servers. However, AMD's first-gen EPYC processors are starting to nibble away at that market share. Big businesses tend to wait for architectures to mature before adopting them, which is why the second-gen EPYC Rome models pose a real threat to Intel's dominance. They'll use a 7nm process that is denser than Intel's 14nm node and purportedly offers better power efficiency. That smaller manufacturing process will enable up to 64 cores and 128 threads in a single package, besting Intel's finest.

Those CPUs are expected to surface later this year, leaving Intel with a big gap to plug as it awaits the arrival of its 14nm Cooper Lake processors, and then the repeatedly delayed 10nm Ice Lake Xeon chips in 2020.

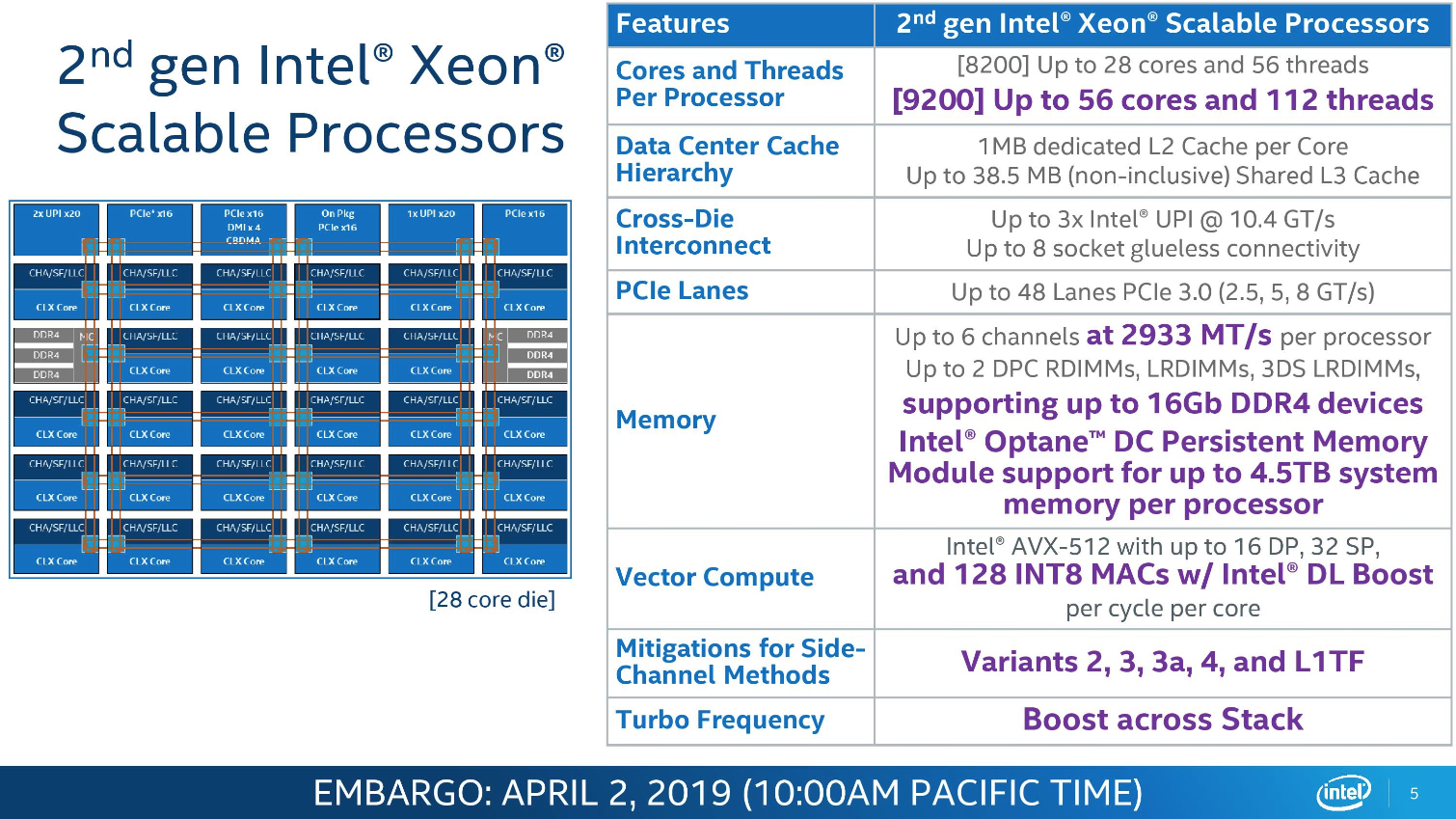

Faced with EPYC Rome's market-topping 64 cores and 128 threads, Intel also introduced its new Xeon Platinum 9000-series, armed with as many as 56 cores, 112 threads, and 12 memory channels crammed into a package that dissipates up to 400W. These new behemoths, which are essentially two Skylake-SP CPUs in a single socket, only come in OEM servers. They aren't available on their own.

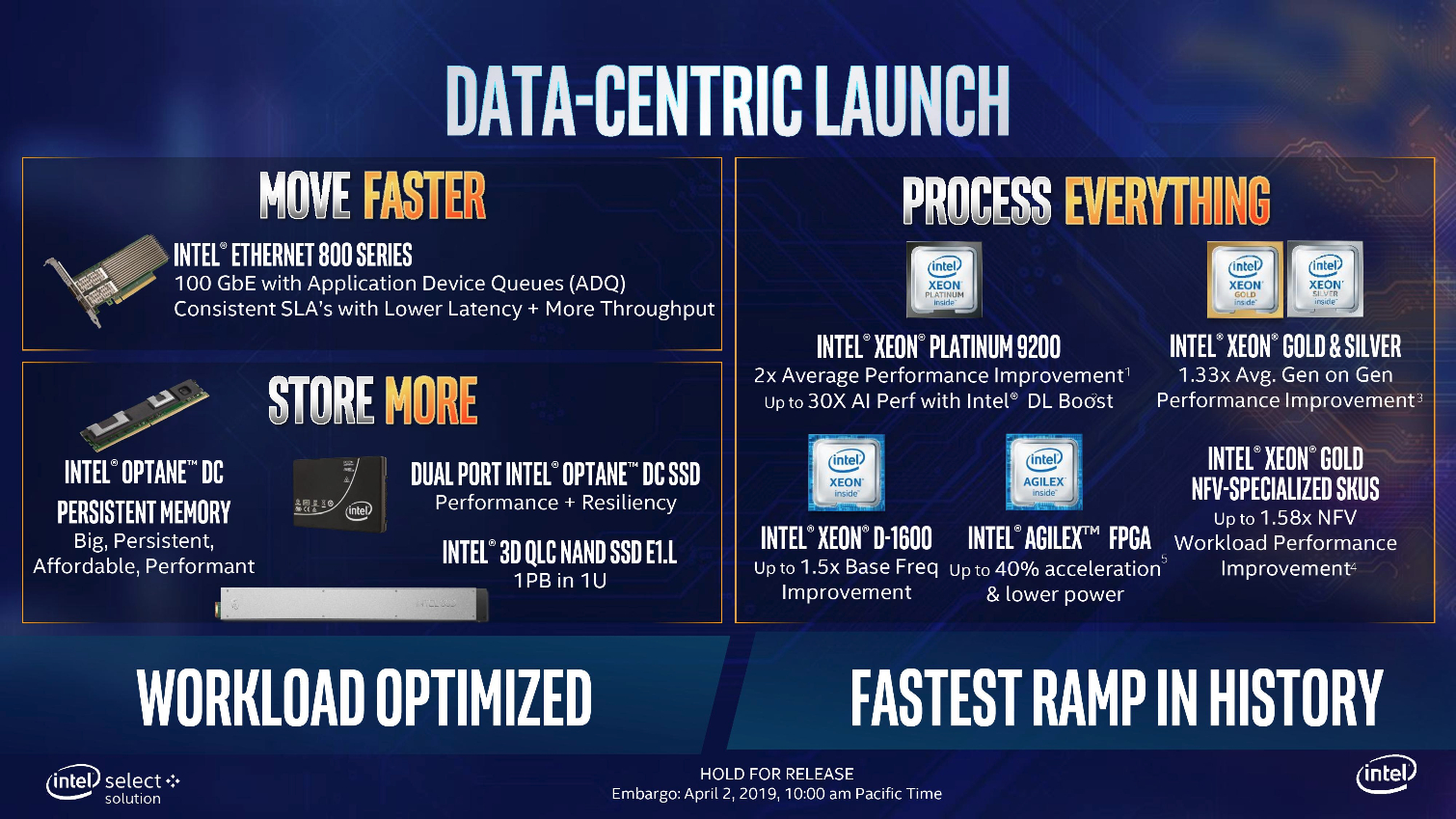

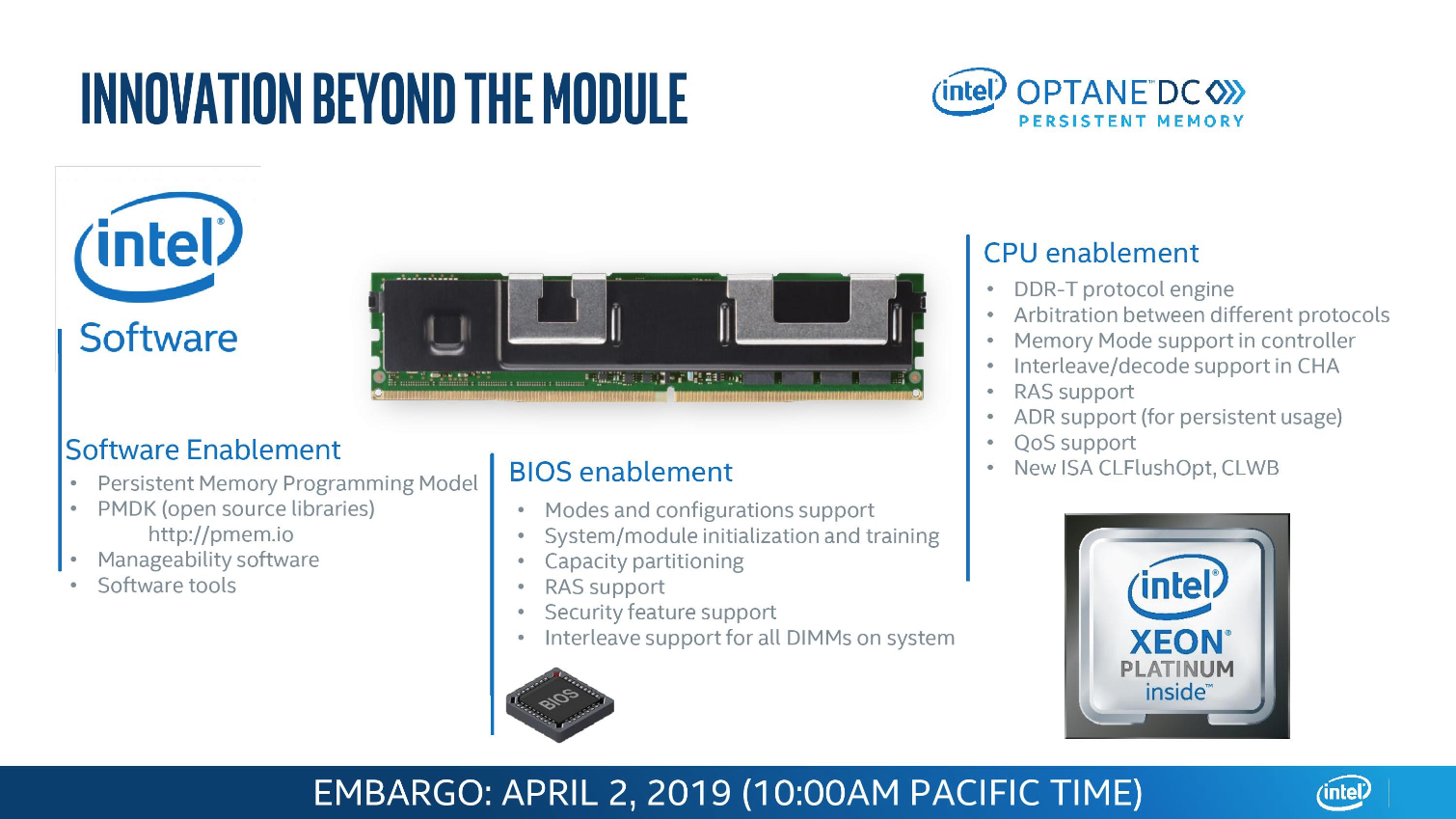

Despite the impressive arsenal of Cascade Lake chips we're being introduced to, this launch is most recognizable as another evolutionary step forward for Intel's ambitions to become a platform company rather than just a peddler of data center chips. The addition of Optane DC Persistent Memory DIMMs opens up new avenues for Intel in the memory market. Moreover, the company is expanding upon complementary businesses with new SSDs, in both NAND and Optane flavors, along with 100G Columbiaville networking solutions.

Even with Intel's obvious goal of becoming a full solutions provider, at the end of the day, its success depends on the ability to deliver compelling processors. Let's take a look at the latest CPUs in our lab.

Intel Cascade Lake Xeon Platinum 8280, Platinum 8268, and Gold 6230

Cascade Lake Xeons employ the same Skylake-SP microarchitecture as their predecessors, meaning we won't see performance improvements attributable to underlying design changes. Intel does offer a few enhancements to woo new customers, such as support for faster DRAM, support for up to 4.5TB of Optane DC Persistent Memory DIMMs on the Platinum and Gold models, higher maximum memory capacity, more L3 cache on many mid-range models, the 14nm++ process that Intel says improves frequencies and power consumption, and support for new instructions tailored for AI workloads.


Intel also uses the same die configurations (XCC, HCC, LCC) with the mesh interconnect for its mainstream Platinum, Gold, Bronze, and Silver models. As a result, core counts still top out at 28, trailing AMD's EPYC Naples line-up that comes with up to 32 cores and 64 threads.

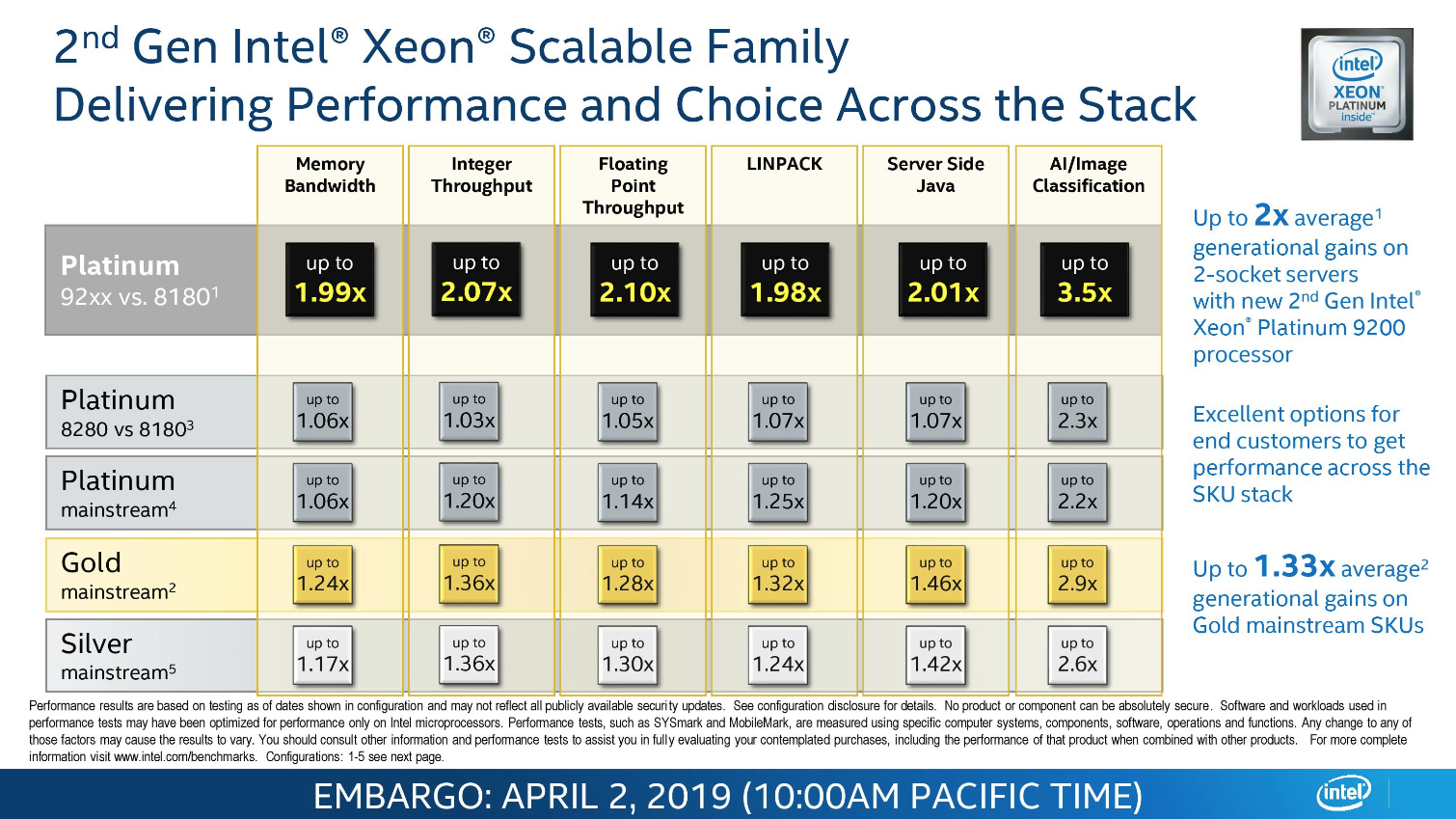

The key message this time around is that you get more performance at every price point. We can see that delivered across the stack in the form of an extra 200 MHz of base/Turbo Boost frequency over the Skylake-SP models, along with the step up to six-channel DDR4-2933 (instead of DDR4-2666). Memory capacity is now up to 1TB per chip, with more expensive models supporting either 2TB or 4.5TB. It's noteworthy, though, that the base models' 1TB of memory support per socket still trails the EPYC's 2TB.
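To put the DDR4-2933 upgrade in perspective, peak theoretical bandwidth per socket is the channel count multiplied by the transfer rate and the 8 bytes moved per transfer on a 64-bit DDR4 channel. The Python sketch below applies that formula to the configurations discussed here; the function name is our own, and the results are theoretical ceilings, not measurements.

```python
# Peak theoretical DRAM bandwidth per socket:
#   channels x transfer rate (MT/s) x 8 bytes per transfer (64-bit DDR4 channel)
# Real-world throughput is lower because of refresh, bus turnarounds, etc.

BYTES_PER_TRANSFER = 8  # a standard DDR4 channel is 64 bits wide


def peak_bandwidth_gbs(channels: int, mts: int) -> float:
    """Return peak theoretical bandwidth in decimal GB/s (10^9 bytes/s)."""
    return channels * mts * BYTES_PER_TRANSFER / 1000


configs = {
    "Cascade Lake, 6 x DDR4-2933": (6, 2933),
    "Skylake-SP, 6 x DDR4-2666": (6, 2666),
    "EPYC 7601, 8 x DDR4-2666": (8, 2666),
}

for name, (channels, mts) in configs.items():
    print(f"{name}: {peak_bandwidth_gbs(channels, mts):.1f} GB/s")
```

That works out to roughly 10% more peak bandwidth for Cascade Lake over Skylake-SP (about 140.8 GB/s versus 128.0 GB/s), though EPYC's two extra channels still give it the higher theoretical ceiling at about 170.6 GB/s.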

Overall, Intel claims that its new chips offer a 30% gen-on-gen performance increase. The Gold 6230 is representative of many of the company's improvements. It comes with higher Turbo Boost frequencies, four additional Hyper-Threaded cores, and more L3 cache, all at the same $1,894 price point as its predecessor.

| | Cascade Lake Platinum 8280 | Skylake-SP Platinum 8180 | AMD EPYC 7601 | Cascade Lake Platinum 8268 | Skylake-SP Platinum 8168 | Cascade Lake Gold 6230 | Skylake-SP Gold 6130 |
|---|---|---|---|---|---|---|---|
| Price (RCP) | $10,009 | $10,009 | $4,500 | $6,302 | $5,890 | $1,894 | $1,894 |
| Socket | LGA 3647 | LGA 3647 | SP4 | LGA 3647 | LGA 3647 | LGA 3647 | LGA 3647 |
| Cores/Threads | 28 / 56 | 28 / 56 | 32 / 64 | 24 / 48 | 24 / 48 | 20 / 40 | 16 / 32 |
| TDP | 205W | 205W | 180W | 205W | 205W | 125W | 125W |
| Base Freq. | 2.7 GHz | 2.5 GHz | 2.2 GHz | 2.9 GHz | 2.7 GHz | 2.1 GHz | 2.1 GHz |
| Turbo Freq. | 4.0 GHz | 3.8 GHz | 3.2 GHz | 3.9 GHz | 3.7 GHz | 3.9 GHz | 3.7 GHz |
| L3 Cache | 38.5MB | 38.5MB | 64MB | 35.75MB | 33MB | 28MB | 22MB |
| Memory Support | 6-Channel DDR4-2933 | 6-Channel DDR4-2666 | 8-Channel DDR4-2666 | 6-Channel DDR4-2933 | 6-Channel DDR4-2666 | 6-Channel DDR4-2933 | 6-Channel DDR4-2666 |
| PCIe Lanes | 48 | 48 | 128 | 48 | 48 | 48 | 48 |
| Scalability (up to) | 8-Socket | 8-Socket | 2-Socket | 8-Socket | 8-Socket | 4-Socket | 4-Socket |
| Memory Capacity | 1TB | 768GB | 2TB | 1TB | 768GB | 1TB | 768GB |
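A crude way to read the pricing row is list price per physical core. The sketch below uses only the RCP and core counts from the table; it ignores clock speeds, cache, platform costs, and actual performance, so treat it as a rough illustration rather than a value verdict. The helper name is our own.

```python
# List prices (RCP, USD) and physical core counts, copied from the spec table.
chips = {
    "Platinum 8280": (10_009, 28),
    "Platinum 8180": (10_009, 28),
    "EPYC 7601": (4_500, 32),
    "Platinum 8268": (6_302, 24),
    "Platinum 8168": (5_890, 24),
    "Gold 6230": (1_894, 20),
    "Gold 6130": (1_894, 16),
}


def price_per_core(price: int, cores: int) -> float:
    """Dollars of list price per physical core (ignores clocks and cache)."""
    return price / cores


# Print cheapest-per-core first.
for name, (price, cores) in sorted(chips.items(), key=lambda kv: price_per_core(*kv[1])):
    print(f"{name:>14}: ${price_per_core(price, cores):,.2f} per core")
```

By this metric, the Gold 6230's four extra cores at an unchanged price cut its cost per core by 20% versus the Gold 6130, while the EPYC 7601 comes in at well under half the per-core price of the 28-core Platinum 8280.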

Like the previous-gen Xeon Scalable processors, Intel's Cascade Lake models drop into an LGA 3647 interface (Socket P) on platforms with C620-series (Lewisburg) platform controller hubs, and the processors are compatible with existing server boards. Intel's OEM partners have also released a wave of new platforms that support the latest technologies, including Optane DC Persistent Memory.

Because they share the same underlying design, Intel's Cascade Lake processors still offer 48 lanes of PCIe 3.0. AMD's EPYC processors come with 128 lanes, a big advantage for dense NVMe storage servers and the multi-GPU setups used for deep learning. Intel is instead working to chip away at AMD's lead in other areas. A new DL Boost suite adds support for multiple features that the company says deliver up to a 14x speed-up in AI inference workloads.
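The lane gap is easier to grasp as a device count. The back-of-the-envelope sketch below assumes every CPU lane is wired to devices (nothing reserved for NICs or boot drives), x4 links per NVMe SSD, x16 links per GPU, and no PCIe switches; real server designs will land lower. The constant and function names are our own.

```python
# Rough device budget from CPU PCIe lanes alone.
# Assumptions: all lanes go to devices, x4 per NVMe SSD, x16 per GPU, no switches.
LANES_PER_NVME = 4
LANES_PER_GPU = 16


def device_budget(lanes: int) -> dict:
    """How many x4 SSDs or (alternatively) x16 GPUs the lanes could feed."""
    return {
        "nvme_ssds": lanes // LANES_PER_NVME,
        "gpus_x16": lanes // LANES_PER_GPU,
    }


for name, lanes in {"Cascade Lake Xeon": 48, "EPYC 7601": 128}.items():
    budget = device_budget(lanes)
    print(f"{name}: {lanes} lanes -> {budget['nvme_ssds']} NVMe SSDs "
          f"or {budget['gpus_x16']} x16 GPUs")
```

Under those assumptions a Cascade Lake Xeon tops out at 12 directly attached NVMe SSDs or three x16 GPUs, versus 32 SSDs or eight GPUs for an EPYC processor.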

At the heart of DL Boost is support for VNNI (Vector Neural Network Instructions), which accelerate operations on the smaller data types commonly used in machine learning tasks. VNNI fuses three instructions into one to boost int8 (VPDPBUSD) performance, or a pair of instructions into one to boost int16 (VPDPWSSD) throughput. These operations still conform to the familiar AVX-512 voltage/frequency curve.
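The int8 fusion is easiest to see in a scalar model of a single 32-bit lane. The Python sketch below follows the documented semantics of the legacy AVX-512 sequence (VPMADDUBSW, then VPMADDWD against a vector of ones, then VPADDD) and of VNNI's VPDPBUSD; the helper names are our own and hypothetical, and the model says nothing about how Intel implements the instructions in hardware. Besides needing one instruction instead of three, VPDPBUSD also skips the legacy path's intermediate 16-bit saturation.

```python
# Scalar model of ONE 32-bit lane of an int8 dot product (u8 x s8 -> int32).
# Helper names are hypothetical; semantics follow the documented instructions.


def wrap_i32(x: int) -> int:
    """Wrap to a signed 32-bit integer (two's complement), like VPADDD."""
    x &= 0xFFFFFFFF
    return x - (1 << 32) if x & 0x80000000 else x


def sat_i16(x: int) -> int:
    """Clamp to the signed 16-bit range, like VPMADDUBSW's saturating add."""
    return max(-32768, min(32767, x))


def legacy_int8_dot(acc: int, a_u8: list, b_s8: list) -> int:
    """Pre-VNNI sequence: three instructions per lane."""
    # 1) VPMADDUBSW: u8 x s8 products, adjacent pairs summed with int16 saturation
    words = [sat_i16(a_u8[0] * b_s8[0] + a_u8[1] * b_s8[1]),
             sat_i16(a_u8[2] * b_s8[2] + a_u8[3] * b_s8[3])]
    # 2) VPMADDWD against ones: widen both int16 values and sum them to int32
    dword = words[0] * 1 + words[1] * 1
    # 3) VPADDD: add into the int32 accumulator
    return wrap_i32(acc + dword)


def vpdpbusd(acc: int, a_u8: list, b_s8: list) -> int:
    """VNNI VPDPBUSD: four u8 x s8 products summed into int32 in one step."""
    return wrap_i32(acc + sum(a * b for a, b in zip(a_u8, b_s8)))


a, b = [1, 2, 3, 4], [5, -6, 7, -8]
print(legacy_int8_dot(10, a, b), vpdpbusd(10, a, b))  # -8 -8

a, b = [255] * 4, [127] * 4
print(legacy_int8_dot(0, a, b), vpdpbusd(0, a, b))  # 65534 129540
```

With small inputs both paths agree; with large ones the legacy path clips each pair at 32,767 before widening, while VPDPBUSD keeps the exact sum.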

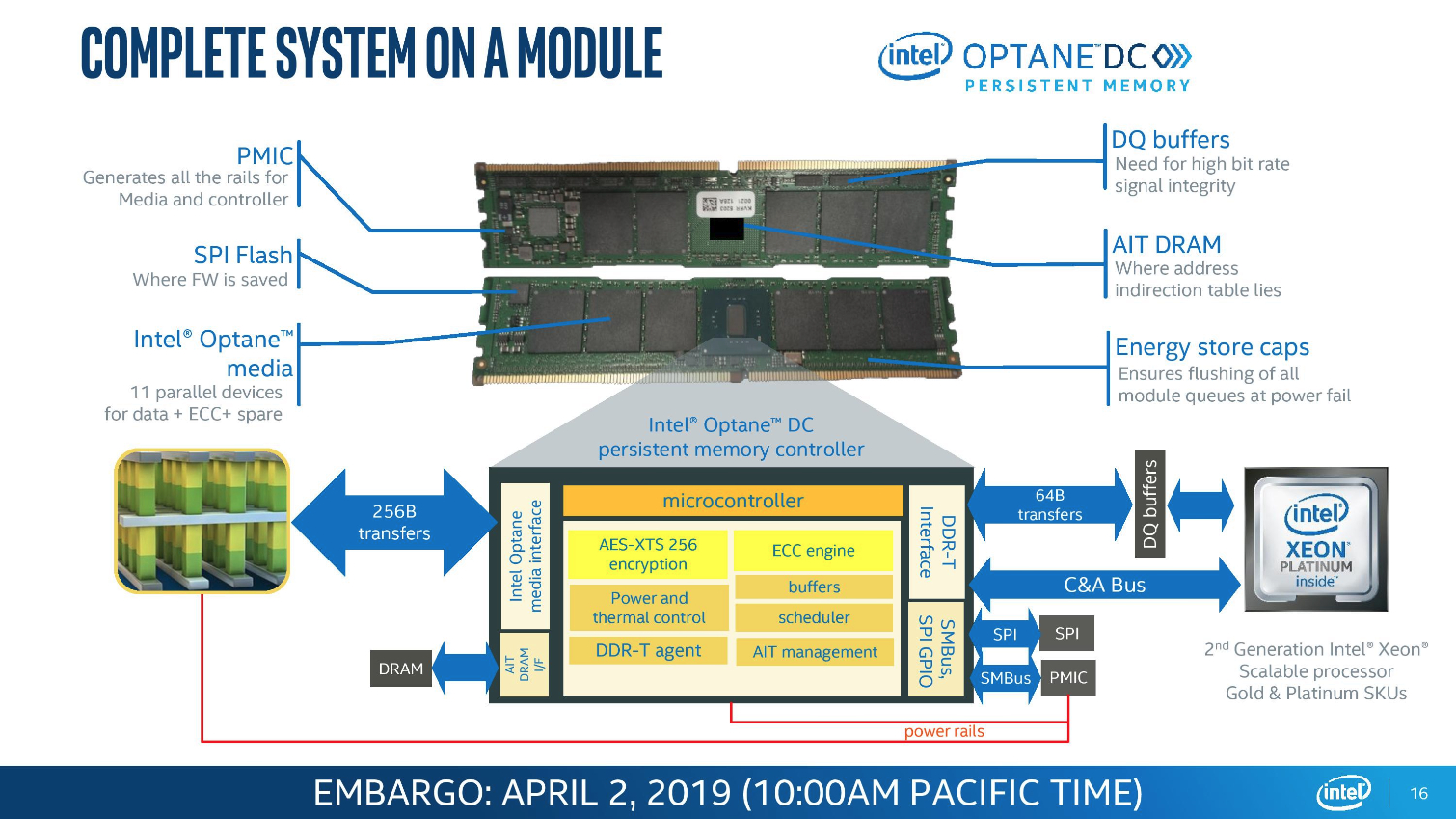

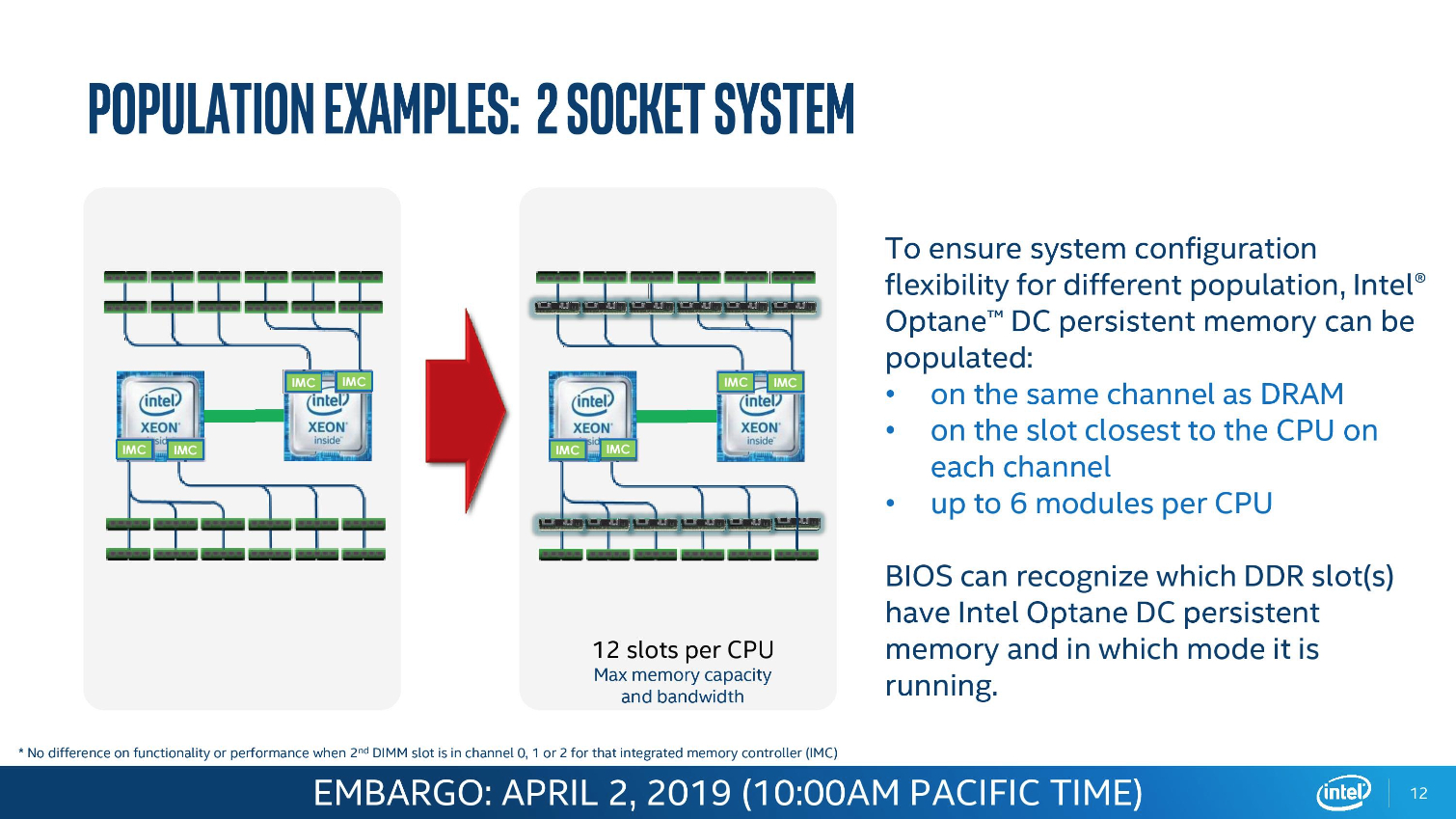

Intel's Optane DC Persistent Memory also makes its debut with the Cascade Lake platform. These new DIMMs slot into the DRAM interface, just like a normal memory module. They are available in 128, 256, and 512GB capacities, and can be used as either memory or storage. Unlike DRAM, 3D XPoint retains data after power is removed, thus enabling radical new use cases. The goal is to bridge the gap between storage and memory, potentially boosting capacity up to 6.5TB in a dual-socket server at a much friendlier price point than DRAM.

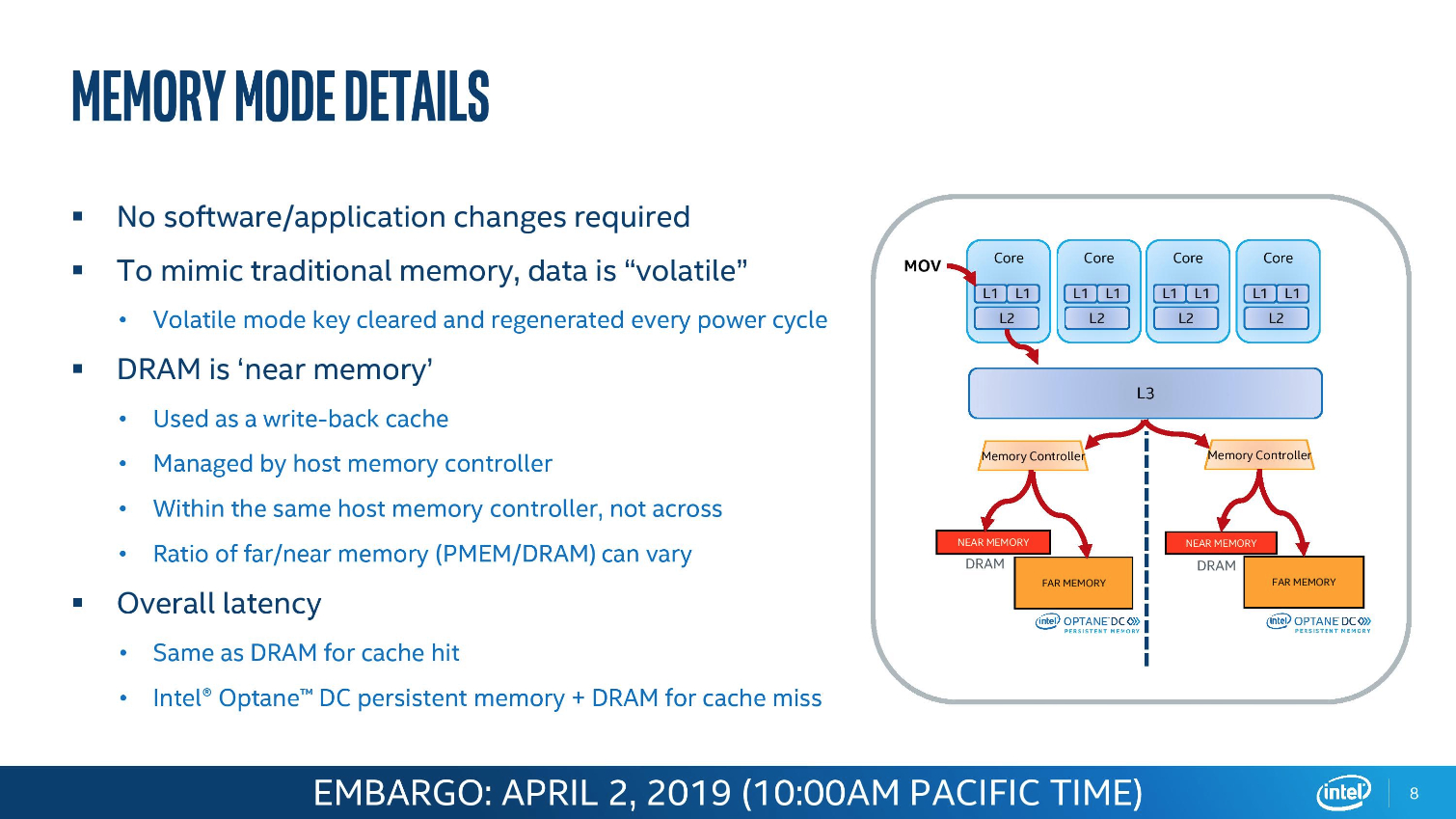

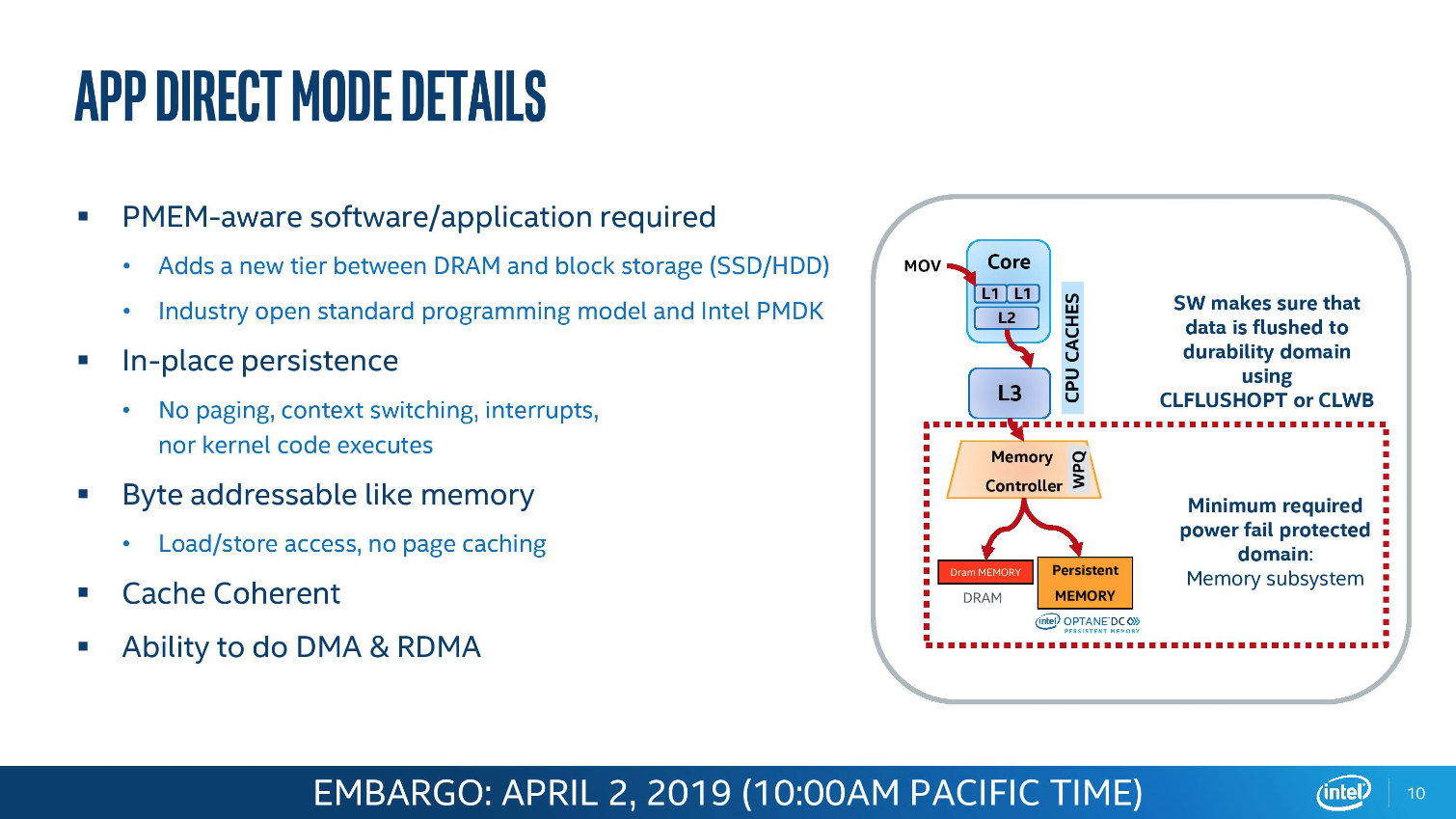

The DIMMs are addressable in either App Direct or Memory Mode. The former exposes the DIMMs as a storage device, while the latter allows applications to use Optane DIMMs as a slower tier of memory. In Memory Mode, the Optane DIMMs hold "cold" data that would typically sit in main memory, while frequently accessed data is held in DRAM, which essentially serves as a cache for the Optane DIMMs. Applications can also directly control data placement into the DRAM and Optane tiers, though they have to be tuned for maximum benefit.
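Intel isn't disclosing how its memory controller manages the DRAM cache in Memory Mode, so the following is only a toy model of the concept: a small, fast tier standing in front of a large, slow one. The direct-mapped organization, 64-byte line size, class name, and scaled-down capacities are all our own illustrative assumptions, not Intel's design.

```python
# Toy model of Optane "Memory Mode": DRAM acts as a cache in front of Optane.
# Direct-mapped layout, 64-byte lines, and sizes are illustrative assumptions.

LINE_BYTES = 64


class NearMemoryCache:
    """Hypothetical direct-mapped DRAM cache fronting a larger Optane tier."""

    def __init__(self, near_bytes: int):
        self.num_slots = near_bytes // LINE_BYTES
        self.slots = [None] * self.num_slots  # far-memory line held in each slot
        self.hits = 0
        self.misses = 0

    def access(self, address: int) -> bool:
        """Return True on a DRAM hit, False when the line comes from Optane."""
        line = address // LINE_BYTES
        slot = line % self.num_slots
        if self.slots[slot] == line:
            self.hits += 1
            return True
        self.misses += 1
        self.slots[slot] = line  # fill from Optane, evicting whatever was there
        return False

    def hit_rate(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


# A 16KB "hot" working set that fits in a scaled-down 64KB DRAM cache.
hot = NearMemoryCache(near_bytes=64 * 1024)
for _ in range(10):
    for address in range(0, 16 * 1024, LINE_BYTES):
        hot.access(address)
print(f"hot working set: {hot.hit_rate():.0%} DRAM hits")  # 90%

# A one-pass scan over 4x the DRAM capacity never reuses a line.
cold = NearMemoryCache(near_bytes=64 * 1024)
for address in range(0, 4 * 64 * 1024, LINE_BYTES):
    cold.access(address)
print(f"cold streaming scan: {cold.hit_rate():.0%} DRAM hits")  # 0%
```

After the first pass, the hot set is served entirely from DRAM, while the streaming scan misses every time and falls through to the slower Optane tier, which is why access patterns matter so much in Memory Mode.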

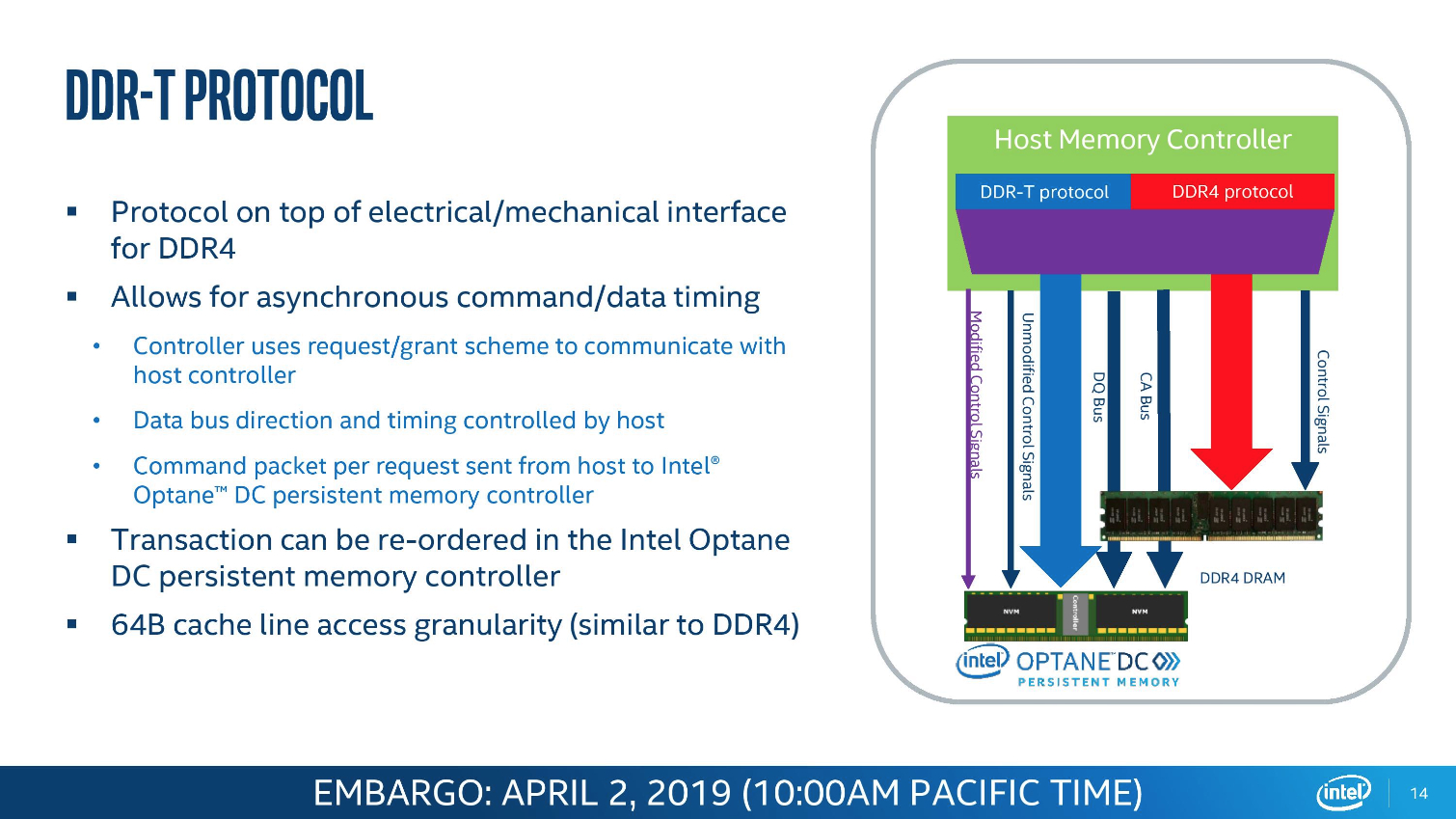

Intel also designed a new memory controller to support the DIMMs and assist in identifying data for caching. However, it isn't sharing the finer architectural details. We know that the DIMMs are physically and electrically compatible with the JEDEC standard DIMM slot, but use an Intel-proprietary DDR-T protocol to deal with the uneven latency that stems from writing data to persistent memory. Optane DIMMs share a memory channel with normal DIMM slots, and they are only compatible with Intel's Cascade Lake (and newer) Xeons. Of the caveats to be aware of, the most important is that Optane DIMMs only run at DDR4-2666, which limits all memory in the system to the same speed.



Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.


Murissokah: On the first page there's a paragraph stating "Like the previous-gen Xeon Scalable processors, Intel's Cascade Lake models drop into an LGA 4637 interface (Socket P) on platforms with C610 (Lewisburg) platform controller hubs, and the processors are compatible with existing server boards."

Wouldn't that be LGA 3647? And shouldn't it read C620-family chipset for Lewisburg?

JamesSneed: It just hit me how big of a deal EPYC will be. AMD is already pulling lower power numbers with the 32-core 7601, and AMD will have a 64-core version with Zen 2 that it has stated will draw about the same power. If the power draw truly is the same, AMD's 64-core Zen 2 parts will be pulling less power than Intel's 28-core 8280. That is rather insane.

Amdlova: lol, 16x16GB 2666 for AMD

8x32GB Intel 2400

12x32GB Intel 2933

How to increase power consumption to another level: add another 30W of memory to the AMD server and it's done.

Mpablo87: The promise or reality?

Cascade Lake Xeons employ the same microarchitecture as their predecessors, but we can hope it is promising!

The Doodle: A slight problem this author fails to tell you in this article is that OEMs can only buy the Platinum 9000 processor pre-mounted on Intel's motherboards. That's correct. None of the OEMs are likely to ship a solution based on this beast because of this fact. So at best you will be able to buy it from an Intel reseller. So forget seeing it from Dell, HPE, Lenovo, Supermicro, and others; you're in white-box territory.

Besides, why would they? A 64C 225W AMD Rome will run rings around this space heater and cost a whole lot less.