Intel's ATX v3.0 PSU Standard Has More Power for GPUs

Intel's new PSU standard brings major changes for power supplies and the graphics cards they feed.

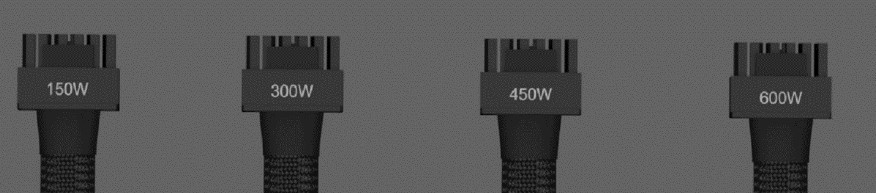

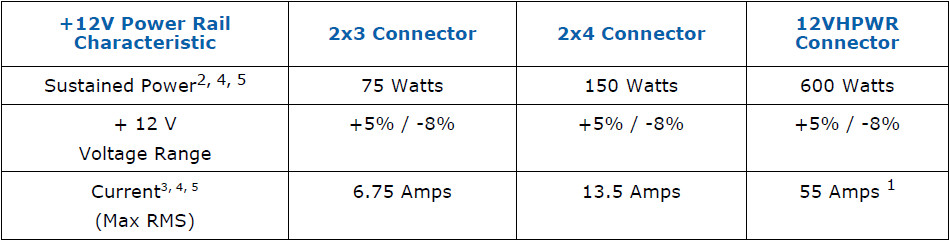

Intel's new ATX12V spec, version 3.0, brings some significant changes. After many years, we finally have a new 12+4 pin connector, also called 12VHPWR. This connector and its power rating bring major changes to PSUs from now on. First of all, it is mandatory for all PSUs rated above 450W, so most PSUs will have one.

Because the 12VHPWR connector can deliver up to 600W (in reality, even more), the majority of existing PSU platforms will need changes to increase their tolerance to power spikes.

The Nvidia RTX 3000 series has had several compatibility issues, even with powerful PSUs, because of increased power spikes. It seems the new generation of GPUs will be even worse, so Intel took the PCIe CEM (Card Electromechanical) specification into account and came up with new requirements. Meeting them will mainly require modifications to older platforms.

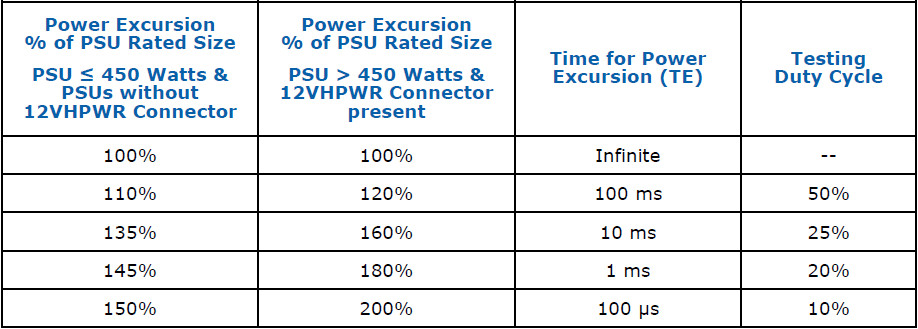

The most important change is that PSUs with over 450W capacity, which should have a 12VHPWR connector installed, must withstand several levels of power spikes, the highest being 200% of their max-rated capacity for 100μs at a 10% testing duty cycle. There are three more levels, at 180%, 160%, and 120%, with the spike duration increasing to 1ms, 10ms, and 100ms and the testing duty cycle increasing to 20%, 25%, and 50%, respectively.
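To make these levels concrete, here is a minimal Python sketch that turns the percentages quoted above into watts for a given PSU rating. The names `EXCURSIONS` and `excursion_table` are illustrative, and the repetition period assumes that "testing duty cycle" means spike duration divided by the period between spikes; the values come from this article, not from the spec text itself.

```python
# Excursion levels as quoted in the article:
# (percent of rated power, spike duration in seconds, testing duty cycle).
EXCURSIONS = [
    (200, 100e-6, 0.10),
    (180, 1e-3, 0.20),
    (160, 10e-3, 0.25),
    (120, 100e-3, 0.50),
]


def excursion_table(rated_watts):
    """Return (percent, peak watts, spike seconds, period seconds) per level."""
    rows = []
    for percent, duration_s, duty in EXCURSIONS:
        peak_watts = rated_watts * percent / 100
        # Assumption: duty cycle = spike duration / repetition period.
        period_s = duration_s / duty
        rows.append((percent, peak_watts, duration_s, period_s))
    return rows


if __name__ == "__main__":
    # Hypothetical 850W unit, chosen only as an example rating.
    for percent, peak, duration, period in excursion_table(850):
        print(f"{percent}%: {peak:.0f} W for {duration * 1e3:g} ms "
              f"every {period * 1e3:g} ms")
```

Under these assumptions, a unit with a 1000W rating would have to ride through 2000W spikes at the top level.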

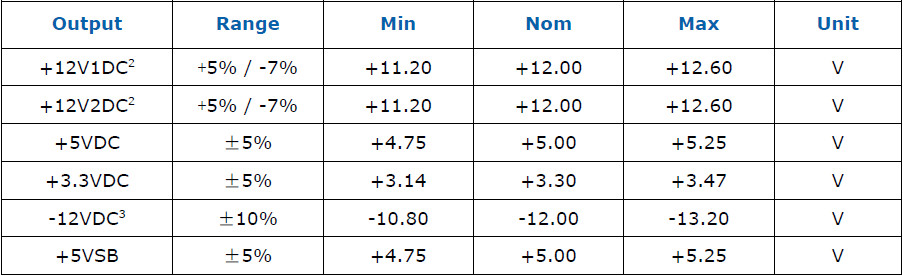

There is a change in the load regulation at +12V, which now allows for -8% on the PCIe connectors, to cope with the increased transient load levels, and -7% on the other connectors. Moreover, this is the first time that the ATX spec allows a nominal voltage higher than 12V, up to 12.2V.
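As a quick worked example, the lower limits can be expressed in volts. This sketch assumes the percentages are taken relative to a 12.0V reference; the function name `min_rail_voltage` is illustrative.

```python
NOMINAL_12V = 12.0  # assumed reference for the percentage limits


def min_rail_voltage(drop_percent, nominal=NOMINAL_12V):
    """Lowest allowed rail voltage for a given negative tolerance."""
    return nominal * (1 - drop_percent / 100)


pcie_min = min_rail_voltage(8)   # PCIe connectors: -8%
other_min = min_rail_voltage(7)  # other connectors: -7%

print(f"PCIe connectors:  {pcie_min:.2f} V minimum")
print(f"Other connectors: {other_min:.2f} V minimum")
```

With a 12.0V reference, that works out to roughly 11.04V on the PCIe connectors and 11.16V elsewhere.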

Another significant change affecting all manufacturers is that they have to include in the PSU's power spec label extra information regarding the T1 and T3 timings and also label the 12VHPWR connectors, according to the max power that they can deliver.

Finally, from now on, GPU power limits will be adjusted based on the power supply's capabilities to avoid compatibility issues. Multi-GPU systems can run into issues if the optional CARD_CBL_PRES# signal, which tells the PSU to adjust the sense wires on its 12VHPWR connectors accordingly, is not present. In any case, you will need 1800-2000W PSUs to exploit 600W graphics cards fully.


Disclaimer: Aris Mpitziopoulos is Tom's Hardware's PSU reviewer. He is also the Chief Testing Engineer of Cybenetics and developed the Cybenetics certification methodologies apart from his role on Tom's Hardware. Neither Tom's Hardware nor its parent company, Future PLC, are financially involved with Cybenetics. Aris does not perform the actual certifications for Cybenetics.

Aris Mpitziopoulos is a contributing editor at Tom's Hardware, covering PSUs.

-

Elisis: It seems the link to Page 6 of the article is bugged, as it just redirects you to the 1st.

2Be_or_Not2Be: Were there any estimates on when we might see these new PSUs hit the market, and/or info on the GPUs that require them?

digitalgriffin: Good article. But the ICs to catch the power spikes and duty cycle time will require a lot of re-engineering. I expect a significant premium on these new models.

jeremyj_83, replying to 2Be_or_Not2Be ("Were there any estimates on when we might see these new PSUs hit the market, and/or info on the GPUs that require them?"):

My guess is that the PSU manufacturers have had the final specs for a good 6 months now to be able to make their new products. Same with AMD/Nvidia for their GPUs. I suspect that we will see these PSUs come out with the next GPU and CPU releases with PCIe 5.0 support.

I do like that the new spec includes the CPU continuous power for HEDT CPUs. Makes the decision on a PSU much easier for people who need those CPUs. Also helps Intel as their CPUs are quite power hungry during boosting.

digitalgriffin, replying to jeremyj_83:

The over power safety IC logic is a lot more complicated and has to be a lot more accurate. 200% power for 100us 10% duty is a nebulous one as I don't think there's anything that fast out there. But also what happens when you start getting mixed spikes like 150% mixed with 200% and 110%?

Power = V*V/R. So you cut the resistance in half and your amps shoot through the roof. The cables in the spec will heat up QUICKLY. As the current grows, the power lost to heat grows with its square (P = I²R). So you are dealing with a quadratically growing heat problem with mixed amperages and duty cycles. It will require a total waste heat power table that tracks over time.
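As a rough check on the cable-heating concern, resistive loss in a wire follows I²R, so it scales with the square of the current. The sketch below uses hypothetical numbers: six +12V wires sharing the load equally and 10 mΩ per wire, both assumptions chosen for illustration rather than taken from the spec, and it ignores the ground return wires and connector contact resistance.

```python
def cable_loss_watts(load_watts, volts=12.0, wires=6, ohms_per_wire=0.01):
    """Heat dissipated in the supply-side wires (illustrative values only)."""
    amps_per_wire = load_watts / volts / wires
    # I^2 * R per wire, summed over all wires carrying the load.
    return wires * amps_per_wire ** 2 * ohms_per_wire


steady = cable_loss_watts(600)   # nominal 600W load
spike = cable_loss_watts(1200)   # hypothetical 200% excursion

print(f"600 W load:  {steady:.2f} W lost in the wires")
print(f"1200 W load: {spike:.2f} W lost in the wires")
print(f"ratio: {spike / steady:.1f}x")
```

Doubling the load doubles the current and quadruples the instantaneous loss. Since the spikes are brief, the average heating also depends on the duty cycle.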

2Be_or_Not2Be, replying to digitalgriffin:

Yeah, maybe since I don't see any announced in conjunction with the spec finalization, it's probably a bigger task for the PSU OEMs along with little need for it now. That, combined with parts shortages, probably means we won't see these new PSUs until late 2022 or even 2023.

Co BIY: The binary specification of the "Sense" pins seems over-simplified to me. Allowing these to carry a "bit" more data would make more "sense".

wavewrangler: Hello, a few thoughts on this. Mostly personal rambles.

I wish we could get a proper write-up on this like igorslab did (igorslab insight into atx 3.0). I am really... doubly so, shocked... that this is being championed, like more electricity for seemingly no real good reason is a good thing (along with 600-watt cards), not to mention increased costs, increased complexity, complete redesigns, more faulty DOA components, list goes on. Those tables Intel put out are far out.

At the very least, I would have liked to have seen some questioning as to why this is a necessity and what some of the ramifications are going to be at the consumer level. Some of these things I have no idea how manufacturers will implement, and how they do it is the only thing I look forward to seeing. I mean, 200%. Why would I need 200% power for any amount of time, regardless of boost?? How do the components handle that? That's cutting amps in half. I feel like this is pretty much just starting over, not building upon.

Moore's Law has now become a doubling of power every 2 years. We'll call it More's Law. This is kind of what happens after you break physics, it collapses in on itself and goes inverse... Intel will soon get into the nuclear energy business to complement their CPUs' needs. There are some things I like, but... what I would have liked more is some transparency and communication as to why the world needs this. Nvidia, Intel, AMD, et al., always could have created 600-watt CPUs/GPUs. Actually, Pentium comes to mind... The real story here, I'm guessing, is that microarchitecture design and innovation have plateaued, and they all know more power will soon be needed to achieve any meaningful performance gain. Meanwhile, look at what DLSS and co. and a little (a lot) of hardware-software complemented innovation did for performance. Christopher Walken would approve, at least. Moar. Moar Pow-uh!

Never thought I'd need more than 1KW, what with the whole "efficiency" thing. Why not just make SLI, CrossFire, SMP work better at this point?! This is wardsback.

Peace! Thanks for readin'.

-

digitalgriffin, replying to wavewrangler:

Power gating is a technique that powers down part of the GPU to save power. This allows it to run cooler. But re-initializing those stages of circuits often creates a massive power inrush. A GPU hardware scheduler can see certain circuits are needed and flip the switch to power up those sections well before the instruction is dispatched. But a high inrush current is needed to make sure said circuit is initialized in time.

Second, some MIMD matrix operations / tensor ops are massive power hogs.

These two things together result in a current influx. When you have a massive inrush of current, the effective load resistance drops toward zero. V = IR. That means the voltage sags toward zero, which is why you have all these capacitors that store up energy to reduce voltage swings. Voltage swings are what create instability and, in rare cases, damage.
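To put a rough number on why the capacitors matter, a first-order estimate of voltage droop is ΔV = I·Δt / C: the extra current drawn, times how long it lasts, divided by the capacitance supplying it. The sketch below assumes the capacitor bank alone covers the extra current before the regulator responds and ignores ESR and inductance; all component values are hypothetical.

```python
def droop_volts(extra_amps, duration_s, capacitance_f):
    """First-order voltage sag when a capacitor alone supplies extra current."""
    return extra_amps * duration_s / capacitance_f


# Hypothetical case: 50 A of extra current for 100 microseconds.
small_bank = droop_volts(50, 100e-6, 3300e-6)   # 3300 uF of bulk capacitance
large_bank = droop_volts(50, 100e-6, 10000e-6)  # 10000 uF of bulk capacitance

print(f"3300 uF:  {small_bank:.2f} V sag")
print(f"10000 uF: {large_bank:.2f} V sag")
```

More capacitance means a smaller sag for the same burst, which is the smoothing effect described above.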

Now, these huge currents are nothing new. They have been around for a decade. However, the suddenness of the bursts and the sensitivity to them are new.

As circuits get smaller, the transistor threshold for operating voltage gets smaller. Also, the number of transistors firing goes up exponentially. Thus you get a lower, more sensitive voltage but more current.