Best GPUs for Crypto Mining

Test Results and Bottom Line

How We Tested: Ethereum

Because Bitcoin is mined almost exclusively on ASICs (application-specific integrated circuits built solely for that purpose), it's of no interest to anyone mining cryptocurrency on a PC. So, for our tests, we compared mining performance on Ethereum (ETH), the number-two cryptocurrency.

We used a variety of AMD and Nvidia cards with at least 4GB of memory. We chose that amount because the Directed Acyclic Graph (DAG) file, which you need for mining, is larger than 2GB right now and could soon get bigger than 3GB. To account for future growth of the file, we ran our benchmarks at a DAG epoch of 190, which is fine for cards with 4GB or more of onboard memory. If you want to take a look at the current DAG size, visit this page.
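The DAG sizes above follow from the public Ethash specification, where the dataset starts at roughly 1 GiB and grows by about 8 MiB per epoch (one epoch is 30,000 blocks). The following is a minimal Python sketch of that size rule. The constants come from the spec, while the function names are our own illustrative choices.

```python
# Sketch of the Ethash dataset (DAG) size rule. Constants follow the public
# Ethash specification; the function names are illustrative.
DATASET_BYTES_INIT = 2**30    # nominal dataset size at epoch 0
DATASET_BYTES_GROWTH = 2**23  # nominal growth per epoch
MIX_BYTES = 128               # final size is a prime multiple of this width


def is_prime(n: int) -> bool:
    """Trial division; fast enough for the values used here (around 2e7)."""
    if n < 2:
        return False
    if n % 2 == 0:
        return n == 2
    i = 3
    while i * i <= n:
        if n % i == 0:
            return False
        i += 2
    return True


def dag_size_bytes(epoch: int) -> int:
    """Return the full dataset size in bytes for a given epoch."""
    size = DATASET_BYTES_INIT + DATASET_BYTES_GROWTH * epoch - MIX_BYTES
    # Step down until size / MIX_BYTES is prime, as the spec requires.
    while not is_prime(size // MIX_BYTES):
        size -= 2 * MIX_BYTES
    return size


if __name__ == "__main__":
    for epoch in (0, 190):
        print(f"epoch {epoch}: {dag_size_bytes(epoch) / 2**30:.2f} GiB")
```

At epoch 190 the result lands just under 2.5 GiB, which is why a 3GB card can't hold the file once driver and miner overhead are counted, while 4GB cards still have headroom.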

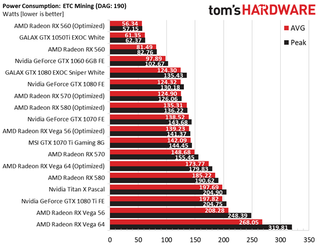

Power Consumption

Power consumption means everything to a graphics card, since it affects performance, noise output, and reliability (which is directly tied to operating temperature). To keep themselves from being damaged, all modern GPUs will throttle back their frequency and voltage when they pass a manufacturer-determined heat threshold.

To see how much power our test cards draw, we logged wattage with Powenetics and GPU-Z while we mined Ethereum. We tested some cards with both stock and optimized settings.

The Radeon RX 560 with optimized settings achieved the lowest power consumption, with the GeForce GTX 1050 Ti right behind. The GeForce GTX 1060 6GB exhibited relatively low power use, needing less than 100W during our mining workload. In Nvidia's line-up, the GeForce GTX 1080 Ti Founders Edition registered the highest consumption in every test, even exceeding the higher-end Titan Xp.

AMD's high-end cards are even more power-hungry at their stock settings. But our measurements dropped once we cranked up the memory frequency, dialed back core clock rates, and decreased voltage to the GPU. The Radeon RX 570 and 580 show us why they're such popular choices with miners, while Radeon RX Vega 64 uses almost 100W less after we tune it for mining.
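To put the Vega 64's roughly 100W saving in perspective, the short sketch below converts a constant power reduction into energy and cost over time. The electricity price is a placeholder assumption, not a figure from our testing, and the function name is purely illustrative.

```python
def energy_savings(watts_saved: float, hours: float, price_per_kwh: float):
    """Return (kWh saved, cost saved) for a constant power reduction."""
    kwh = watts_saved / 1000.0 * hours
    return kwh, kwh * price_per_kwh


if __name__ == "__main__":
    # ~100 W is the Vega 64 reduction noted above; $0.12/kWh is an assumed price.
    kwh, cost = energy_savings(watts_saved=100, hours=24 * 30, price_per_kwh=0.12)
    print(f"{kwh:.0f} kWh and ${cost:.2f} saved over 30 days")
```

For a rig that mines around the clock, a 100W reduction works out to about 72 kWh per 30 days per card, so the saving scales quickly with the number of cards and with local electricity rates.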

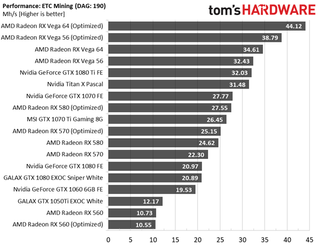

Raw Performance

Professional miners consider "hash rate," measured in MH/s (million hashes per second), to be the definitive performance metric. In our tests, we used Claymore's Dual Ethereum AMD+Nvidia GPU Miner in "-benchmark" mode, specifying a DAG epoch of 190.

High-end AMD cards play at another performance level, leaving the corresponding Nvidia models behind even at stock settings. Once the clock rates and voltages get dialed in, the gap grows even larger.

In the case of Radeon RX Vega 64, hash rate increased by more than 27% after we tweaked the settings (and we just saw the card's optimized power drop 35%). But not every optimization is performance-focused. AMD's Radeon RX 560 slows down a bit when optimized, but cuts its power consumption by 31% to save on electricity costs.

At stock settings, Nvidia's performance king is the GeForce GTX 1080 Ti, with the company's much-more-expensive Titan Xp following closely. The GTX 1070 Founders Edition takes the lead from MSI's 1070 Ti, while both GeForce GTX 1080s that we tested are left way behind due to their GDDR5X memory and its inferior timings. Finally, the GTX 1060 with 6GB of memory (the 3GB wouldn't run under DAG epoch 190) fares pretty well, given its price. We have not yet tested Nvidia cards with optimized settings.

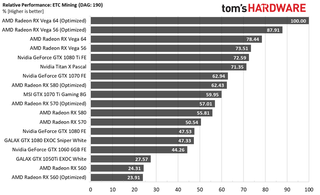

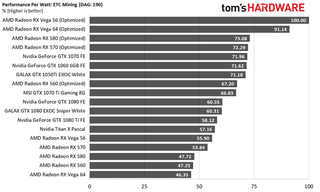

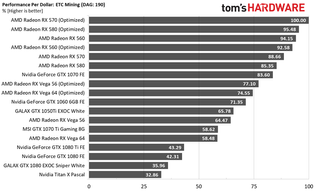

Performance Per Watt And Dollar

Once we fold performance and power into the same chart, we get a clearer picture of efficiency. That's expressed as hash rate divided by power draw (MH/s per watt).

The finishing order changes completely when we start looking at efficiency rather than raw performance. Now, it's the Radeon RX Vega 56 with optimized settings that takes the lead. Third and fourth place go to the Radeon RX 580 and 570 models with optimized settings.

Out of the box, the RX Vega 64 serves up strong performance at such high power consumption that efficiency appears downright awful. But by dialing back its core voltage by 200mV, pulling the GPU's clock rate down to 852 MHz, and nudging the HBM2 to 1150 MHz, we can propel the Vega 64 into second place behind Radeon RX Vega 56.
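The size of that jump follows from the two Vega 64 figures quoted earlier: hash rate up by more than 27% and power down by 35%. Treating those as exact values (an assumption, since the measured hash rate gain is slightly higher), a short sketch gives the resulting change in hash rate per watt. The function name is illustrative.

```python
def efficiency_gain(hash_rate_change: float, power_change: float) -> float:
    """Relative change in hash rate per watt from two fractional changes.

    Both arguments are signed fractions, e.g. +0.27 for +27%, -0.35 for -35%.
    """
    return (1 + hash_rate_change) / (1 + power_change) - 1


if __name__ == "__main__":
    gain = efficiency_gain(hash_rate_change=0.27, power_change=-0.35)
    print(f"hash rate per watt changes by {gain:+.0%}")
```

With those inputs, the tuned card delivers close to twice the hash rate per watt of the stock card, which is enough to move it from the bottom of the efficiency chart to near the top.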

Among the Nvidia models, GeForce GTX 1070 Founders Edition shines the brightest, with the GeForce GTX 1060 6GB Founders Edition following. The 1050 Ti fares surprisingly well too, thanks to its low power consumption.

The prices of graphics cards are all over the place these days, making it hard to pin down good figures for our performance-per-dollar metric. As a result, we stuck with AMD's and Nvidia's MSRPs in the hopes that the twin forces of rising difficulty and receding valuations of Ether knock miners out of the market, allowing some return to pricing normalcy.

AMD models take the lead again, with only two Nvidia cards landing in the top 10 spots (GeForce GTX 1070 and 1060). Given their high value scores, it's only natural that the Radeon RX 570 and 580 were previously so hard to find, and are still marked up significantly.
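Both metrics in this section are simple ratios. The sketch below ranks cards by MH/s per watt and by MH/s per MSRP dollar. The card names and every number in it are hypothetical placeholders chosen only to show the calculation, not our measured results, and the `Card` type and `rank` helper are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Card:
    name: str
    hash_rate_mhs: float  # hash rate in MH/s
    power_w: float        # power draw while mining, in watts
    msrp_usd: float       # manufacturer's suggested retail price

    @property
    def mhs_per_watt(self) -> float:
        return self.hash_rate_mhs / self.power_w

    @property
    def mhs_per_dollar(self) -> float:
        return self.hash_rate_mhs / self.msrp_usd


def rank(cards, key):
    """Return cards sorted from best to worst by the named metric."""
    return sorted(cards, key=lambda c: getattr(c, key), reverse=True)


if __name__ == "__main__":
    # Placeholder values for illustration only.
    cards = [
        Card("Card A", hash_rate_mhs=30.0, power_w=150.0, msrp_usd=200.0),
        Card("Card B", hash_rate_mhs=20.0, power_w=80.0, msrp_usd=250.0),
    ]
    for metric in ("mhs_per_watt", "mhs_per_dollar"):
        print(metric, [c.name for c in rank(cards, metric)])
```

The two rankings can disagree, as they do for the placeholder cards here: a slower card that sips power can win on efficiency while losing on value.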

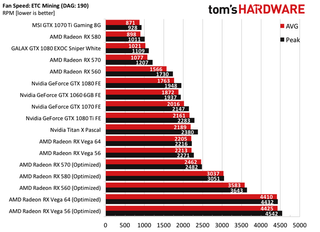

Fan Speeds

Mining rigs typically aren't built for silent running, so more noise is often a side-effect of a graphics card tuned for performance. Still, fan speed gives us a good indicator of just how loud you can expect your rig to get.

Thanks to its superb cooling solution, MSI's GTX 1070 Ti Gaming registers the lowest fan speeds of all the cards we tested. Subjectively, it's also among the quietest. The stock Radeon RX 580 follows, with the Galax GTX 1080 EXOC in third place. However, that card's performance is weak as a result of GDDR5X memory timing issues faced by all GeForce GTX 1080 cards.

The optimized AMD cards come in last here because their fans are configured for maximum cooling and the best possible performance. So, if you can either live with the noise or put your mining rig somewhere that you can't hear it, you'll appreciate the efficiency.

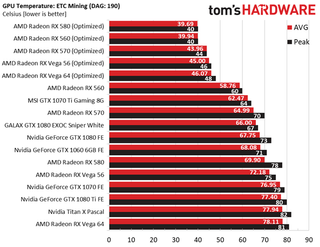

GPU Temperatures

You don't want a card that gets too hot, because it will lower its own performance and possibly even get damaged over time.

The optimized AMD cards achieved the lowest GPU temperatures in our tests, since we cranked their fans up for improved cooling. On the Nvidia side, MSI's GeForce GTX 1070 Ti Gaming not only demonstrates the lowest fan speeds, but also achieves the lowest operating temperatures. It might not take any of the top places in our performance-per-watt metric, but the card is good for stressful applications like mining because of MSI's highly capable thermal solution.

Bottom Line

Graphics card prices remain stratospheric, and availability is spotty, but for now, the optimized AMD Radeon RX 580/570/560 have the best mining performance per dollar. Nvidia's top Ethereum mining card looks to be the GeForce GTX 1070 Founders Edition.



abryant Archived comments are found here: http://www.tomshardware.com/forum/id-3675386/gpus-ethereum-mining-tested-compared.html

Stevemeister We should be reporting that the worst cards for mining are high-end graphics cards... maybe then demand will drop and prices will come back to earth. I've seen GTX 970s selling used for more than I paid new a few years ago... something is wrong.

Myrmidonas Any article about cryptocurrency that helps miners further works against gamers, since it promotes cryptocurrency, pushes prices higher, and reduces the availability of GPUs.

As a gamer, I do not like that.

salgado18 These articles are a disservice to the gamer community.

On the other hand, it's their business to speak about hardware in every area, not just games.

As sad as it makes us, that's just Tom's doing their job. We shouldn't pick on them, we should pick on miners and maybe manufacturers (who don't make specialized hardware and let gaming cards take the price hit).

yoncenmild Gamers complaining about crypto mining need to reevaluate their perspective. You can either complain that the situation is unfair and demand that someone else do something about it. Or you can accept the situation as reality and figure out a way to use it to your advantage.

I've never been able to justify spending too much on the GPU for my personal rig. I only play an hour or so a week and mostly older RTS games.

I've been able to upgrade my GPU for free several times just by mining crypto overnight. I went from an R9 290X to an RX 580 4GB to dual 580 8GB for free. I purchased the 290X used for $213 before the mining craze really took off so I basically got my 580s for $111.5 each and I'll be upgrading to a pair of Vega 64s before the end of the year.

Buy an "overpriced" GPU, mine with it overnight, and it will eventually pay for itself. Or just complain about how unfair it is, your choice.

justin.m.beauvais Just as an experiment, I'd like to see how the Titan V mines. The thing is a monster, and very power efficient.

theyeti87 In reply to yoncenmild's comment above:

Not everyone is in your situation, though. To say "I only play 1 hour of RTS so I don't need a fancy GPU" is your personal perspective. "Mine overnight, things will pay for itself": I seriously doubt your claims here.