PCI Express 3.0: On Motherboards By This Time Next Year?

After an unfortunate series of untimely delays, the folks behind PCI Express 3.0 believe they've worked out the kinks that have kept next-generation connectivity from achieving backwards compatibility with PCIe 2.0. We take a look at the tech to come.

PCI Express 3.0: Built For Speed

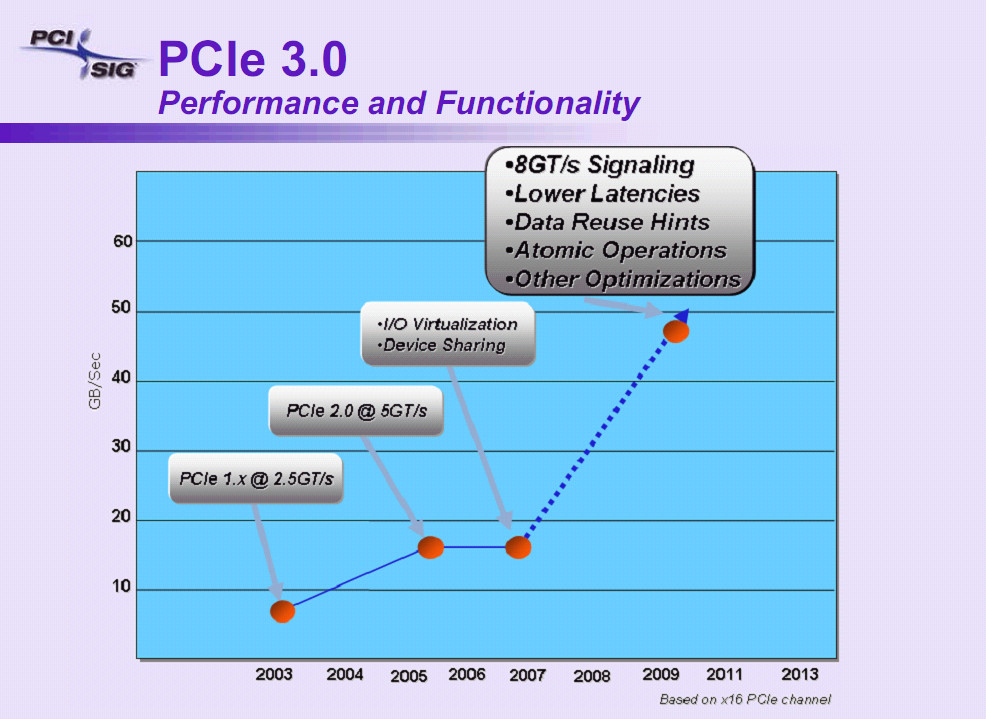

The primary difference for end users between PCI Express 2.0 and PCI Express 3.0 will be a marked increase in potential maximum throughput. PCI Express 2.0 employs 5 GT/s signaling, enabling a bandwidth capacity of 500 MB/s in each direction for each “lane” of data traffic. Thus, a PCI Express 2.0 primary graphics slot, which typically uses 16 lanes, offers up to 8 GB/s of bandwidth in each direction.

PCI Express 3.0 will double those numbers. PCI Express 3.0 uses an 8 GT/s bit rate, enabling a bandwidth capacity of roughly 1 GB/s per lane. Accordingly, a 16-lane graphics card slot will have a bandwidth capacity of up to about 16 GB/s in each direction.

On the surface, the increase from 5 GT/s to 8 GT/s doesn’t quite sound like a doubling of speed. However, PCI Express 2.0 uses an 8b/10b encoding scheme, where 8 bits of data are mapped to 10-bit symbols to achieve DC balance. The result is 20% overhead, which cuts the effective data rate of a 5 GT/s lane to 4 Gb/s, or 500 MB/s.

PCI Express 3.0 moves to a much more efficient 128b/130b encoding scheme, which cuts the encoding overhead from 20% to roughly 1.5%. So, the 8 GT/s won’t be a “theoretical” speed; it will be very close to the actual data rate, comparable in performance to 10 GT/s signaling with 8b/10b.
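As a rough check on the arithmetic above, the following Python sketch derives per-lane and 16-lane bandwidth from the signaling rate and the encoding ratio. The `LinkGeneration` class, its field names, and the `equivalent_rate_gt_s` helper are illustrative names chosen for this example, not anything defined by PCI-SIG, and the calculation ignores packet and protocol overhead.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LinkGeneration:
    """Hypothetical container for the figures quoted in the article."""
    name: str
    transfer_rate_gt_s: float  # raw signaling rate per lane, in GT/s
    payload_bits: int          # data bits carried per encoded block
    encoded_bits: int          # bits sent on the wire per encoded block

    @property
    def efficiency(self) -> float:
        # Fraction of transmitted bits that carry data
        return self.payload_bits / self.encoded_bits

    def lane_bandwidth_gb_s(self) -> float:
        # One transfer moves one bit per lane; divide by 8 for bytes
        return self.transfer_rate_gt_s * self.efficiency / 8

    def slot_bandwidth_gb_s(self, lanes: int = 16) -> float:
        # Per-direction bandwidth of a slot with the given lane count
        return self.lane_bandwidth_gb_s() * lanes


def equivalent_rate_gt_s(gen: LinkGeneration, reference: LinkGeneration) -> float:
    # Raw rate the reference encoding would need to match gen's data rate
    return gen.transfer_rate_gt_s * gen.efficiency / reference.efficiency


PCIE_2 = LinkGeneration("PCIe 2.0", 5.0, 8, 10)      # 8b/10b
PCIE_3 = LinkGeneration("PCIe 3.0", 8.0, 128, 130)   # 128b/130b

if __name__ == "__main__":
    for gen in (PCIE_2, PCIE_3):
        print(f"{gen.name}: {gen.lane_bandwidth_gb_s():.3f} GB/s per lane, "
              f"{gen.slot_bandwidth_gb_s(16):.2f} GB/s for x16")
    print(f"8b/10b rate needed to match PCIe 3.0: "
          f"{equivalent_rate_gt_s(PCIE_3, PCIE_2):.2f} GT/s")
```

Under these assumptions, PCI Express 2.0 works out to 0.5 GB/s per lane and 8 GB/s for 16 lanes, while PCI Express 3.0 comes to about 0.985 GB/s per lane and about 15.75 GB/s for 16 lanes. Matching that with 8b/10b encoding would take a raw rate of about 9.85 GT/s, which is why 8 GT/s with 128b/130b is described as comparable to 10 GT/s.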

PCI-SIG states that it chose the route of eliminating overhead instead of increasing to 10 GT/s because “8 GT/s represents the most optimal tradeoff between manufacturability, cost, power, and compatibility.” The group further states that bumping the speed to 10 GT/s creates “prohibitive penalties” including “design complexity and increased silicon die size and power.” PCI-SIG’s Al Yanes added, “The magic is in the electrical stuff. These guys have really come through for us.”

I asked Yanes what devices he anticipates will require the increase in speed. He replied that these will include “PLX switches, 40 Gb Ethernet, InfiniBand, solid state devices, which are becoming very popular, and of course, graphics.” He added, “We have not exhausted innovation, it’s not static, it’s a continuous stream,” leaving the door open to even more enhancements in future versions of the PCI Express interface.
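To put one of those examples in numbers, a 40 Gb Ethernet port running at line rate moves 5 GB/s in one direction. The sketch below estimates the narrowest link that could carry that load using the article's rounded per-lane figures. The `min_link_width` function and the list of link widths are assumptions made for illustration, and the estimate ignores packet and protocol overhead, so real designs would need some headroom.

```python
from typing import Optional

# Rounded per-lane, per-direction bandwidth in GB/s, as quoted in the article
LANE_GB_S = {"PCIe 2.0": 0.5, "PCIe 3.0": 1.0}

# Commonly seen link widths (an assumption for this example)
COMMON_WIDTHS = (1, 2, 4, 8, 16)


def min_link_width(required_gb_s: float, lane_gb_s: float) -> Optional[int]:
    """Return the narrowest common link width that meets the requirement,
    or None if even the widest one falls short."""
    for width in COMMON_WIDTHS:
        if width * lane_gb_s >= required_gb_s:
            return width
    return None


if __name__ == "__main__":
    ethernet_40g_gb_s = 40 / 8  # 40 Gb/s expressed in GB/s
    for name, lane_gb_s in LANE_GB_S.items():
        width = min_link_width(ethernet_40g_gb_s, lane_gb_s)
        print(f"{name}: x{width} link needed for {ethernet_40g_gb_s:.0f} GB/s")
```

By this estimate, the adapter would need a 16-lane PCI Express 2.0 link but only an 8-lane PCI Express 3.0 link.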


cmcghee358 Good article with some nice teases. Seems us regular users of high end machines won't see a worth until 2012. Just in time for my next build!

tony singh What the..... pcie3 already developed & most games graphics are still of geforce 7 level thnk u consoles..

iqvl Good news to people like me who haven't spent any money on PCIE 2.0 DX11 card due to nVidia's delay in shipping GTX460.

Can't wait to see PCIE 3.0, native USB3/SATA3, DDR4, quad channel and faster&cheaper SSD next year.

In addition, I hate unreasonably priced buggy HDMI and would also like to see the Ethernet cable(cheap, fast and exceptional) based monitors as soon as possible.

http://www.tomshardware.com/news/ethernet-cable-hdmi-displayport-hdbaset,10784.html

One more tech that I can't wait to see: http://www.tomshardware.com/news/silicon-photonics-laser-light-beams,10961.html

WOW, so much new techs to be expected next year!

ytoledano Processor speed *is* increasing exponentially! Even a 5% year-on-year increase is exponential.

Casper42 I haven't read this entire article but on a related note I was told that within the Sandy Bridge family, at least on the server side, the higher end products will get PCIe 3.0.

And if you think the Core i3/5/7 desktop naming is confusing now, wait till Intel starts releasing all their Sandy Bridge Server chips. It's going to be even worse I think.

And while we're talking about futures, 32GB DIMMs will be out for the server market most likely before the end of this year. If 3D Stacking and Load Reducing DIMMs remain on track, we could see 128GB on a single DIMM around 2013, which is when DDR4 is slated to come out as well.

JonnyDough “After an unfortunate series of untimely delays, the folks behind PCI Express 3.0 believe they've worked out the kinks that have kept next-generation connectivity from achieving backwards compatibility with PCIe 2.0. We take a look at the tech to come.”

It's nice to see the backwards compatibility and cost be key factors in the decision making. Especially considering that devices won't be able to saturate it for many years to come.

rohitbaran “Nothing in the world of graphics is getting smaller. Displays are getting larger, high definition is replacing standard definition, the textures used in games are becoming even more detailed and intricate.”

Even the graphics cards are getting bigger! :lol:

iqvl “rohitbaran: Even the graphics cards are getting bigger!” I believe that he meant gfx size per performance. :)

Tamz_msc “We do not feel that the need exists today for the latest and greatest graphics cards to sport 16-lane PCI Express 3.0 interfaces.”

Glad you said today, since when Crysis 3 comes along its all back to the drawing board, again.