Intel's Next IGP Slated to Run Sims 3

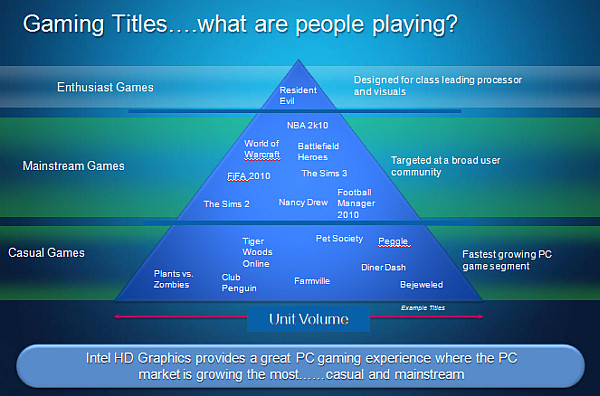

Intel's next IGP is geared towards the mainstream and casual gamer.

Is Intel now focusing on the PC gamer? That's what a recent slide posted on Donanimhaber.com suggests. According to the image, Intel's next-generation integrated graphics processor (IGP) will focus on the larger mainstream and casual gaming markets. Referred to simply as Intel HD within the slide, the next-generation IGP will be capable of playing The Sims 3, World of Warcraft, Battlefield Heroes, and even a Nancy Drew title.

While it may sound like we're dripping with sarcasm, we're really not. Previous helpings of Intel-based IGPs haven't been real winners, especially when it comes to PC gaming. If Intel has any hope of gaining some kind of foothold in the gaming market, it will need to crank out an IGP capable of 1080p video and DirectX 11 graphics.

As it stands now, the upcoming Intel HD Graphics IGP will accompany Intel's Westmere CPUs. The "enthusiast" gamer may be left in the dark, however, and will still have to choose between ATI and Nvidia hardware. As for cost, the slideshow didn't specify, but if the IGP is slated for the mainstream and casual gamer, it should be relatively cheap.

We're betting more info will appear next month at CES 2010.


Kevin Parrish has over a decade of experience as a writer, editor, and product tester. His work focused on computer hardware, networking equipment, smartphones, tablets, gaming consoles, and other internet-connected devices. His work has appeared in Tom's Hardware, Tom's Guide, Maximum PC, Digital Trends, Android Authority, How-To Geek, Lifewire, and others.

-

jincongz Even if you're not dripping with sarcasm, you should be.

The Mac version of Sims 3's minimum requirements:

ATI X1600 or NVIDIA 7300 GT with 128 MB RAM or Intel Integrated GMA X3100

WoW on PC:

32 MB 3D video card with Hardware T&L or better

Battlefield Heroes:

DirectX compatible 64 MB graphics card with Pixel Shader 2.0 or better

As far as I'm concerned, their X3100 already hits all of their targets. Congratz.

-

makotech222 My 4500MHD can't even run RuneScape, Europa Universalis 3, and most basic games without serious lag. I hate it and regret going integrated.

-

What does Intel mean by "run"? So far their definition of "run" seems to encompass a slideshow with minimal graphics quality. I mean, sure, they can "run" a game, but who wants to play at 10 fps and watch their character bounce randomly around the screen, or watch a Blu-ray movie with half the frames dropped?

-

kravmaga Sounds like an invitation for jeers, but Intel's actually doing good work here.

Integrating better minimalist GPUs into mainstream machines will ensure a level entry-level standard for PC gaming. Comparing these things with Nvidia or AMD GPUs is like comparing a stock Camry engine with an aftermarket custom-ordered BMW supertech engine.

There's a huge population of people who go for the cheap computer using an IGP and would never think of buying separate parts just for gaming. An improvement in the performance of those machines would create a wider userbase for a ton of titles that are conservative on requirements... PC gaming would benefit way more from a moderate bump in GPU performance for 90% of the low-end beaters out there than from an extra bit of performance on the 1% very high-end GPUs. Think of upcoming titles like StarCraft 2 or Diablo 3 where the fun isn't just in the eye candy; if these titles run correctly on these chips, I'm sold.

-

JustinHD81 At the moment, for the most part, you can only get Intel integrated graphics with Intel CPUs, and you might get a choice with AMD CPUs, as HyperTransport is a little more open than Intel's FSB/QPI. So, what Intel is really trying to do is up their performance so that even the low-end Nvidia and ATI GPUs don't have a market anymore. Still, if you're into gaming and want integrated, you're better off with AMD; at least you get some choice.

-

anonymousdude I wonder how Intel defines the word "run". I define "run" as you can play it on your PC whether it is smooth or not. I define "playable" as at least 30 FPS. Also, what resolution are they using? Even my laptop using an AMD IGP can play Crysis at 800x600 on low settings, but it is still choppy in parts.

-

ta152h You'll always have the kiddies that don't really understand games, and throw around gay terms like "eye candy" so they sound cute, but the reality is fancy graphics don't make a fun game. If you are a complete moron, sure. If you're a simpleton, you bet. If you're completely superficial ...

That stuff is relatively easy. But, actually making a mentally stimulating game is quite hard. It's not about resolution, it's about thought. There were old games that were completely text based that were fun. Ms. Pacman was a Hell of a lot more popular than any modern title, although I never fancied it. Defender and Gauntlet were atrociously addicting arcade games, that by today's standards would be ancient. Defender would even slow down the game at certain points, that became one of the charms of the games.

One thing is clear though. Power hungry, noisy, ovens that run in computers are never desirable. They are expensive to run, and are unpleasant to be around, and cost a lot of money. For some people, they're worth it, but for the vast majority of people, Intel solutions are more than they need. You can play a lot of really fun games, without paying massive amounts of money for your computer, or electrical bills, and not having a noisy oven in your office.

Also keep in mind, Intel IGPs of today are just as fast as old discrete cards that played games you thought were really fun years ago. Did those games suddenly become less fun? They didn't change, and human nature doesn't change so fast, so they're still plenty fun.

I still like playing games from the 1980s.

So, if Intel can boost performance without boosting cost, power use, and noise, it's a really good thing for way more people than ATI producing a $500 card that runs like a raped ape. Both are good, of course, it's just that the Intel solution will affect more people. It's not a trivial improvement. Counter-intuitively, the barn burners from ATI and NVIDIA are, since they affect relatively so few people.

-

liquidsnake718 Larrabee is dead... another flimsy integrated GPU.... Well, if they could work with Nvidia for hybrid SLI that would be great.... hybrid SLI that can actually change between GPGPU processors without having to reboot.. if they could do this in an efficient manner without having to tax the processor or the GPU while switching then this would be great. I don't think PCIe 2.0 is capable of that though...

-

warezme Good gawd ta152h, you won't get any sympathy around here with your pathetic tirade of excuses for your slow, antiquated excuse for modern hardware. You don't like to play games from the '80s, you ARE from the '80s! Put away your Flock of Seagulls CDs and DeLorean posters and step into the modern world.

-

brockh (quoting ta152h's comment above)

I understand what you're saying, but I think it's a bit unnecessary to call everyone who likes new games simpletons while trying to preach the importance of a game's actual content over its superficial qualities. There are horrible-looking, horrible-playing games as well. Everyone has their things.