AMD Boasts About RDNA 3 Efficiency as RTX 40-Series Looms

AMD says big bets on design and efficiency have paid off

AMD's latest blog discusses the performance per watt gains across the past several Radeon GPU generations. AMD's Sam Naffziger, who spoke with us about RDNA 3's chiplet design and efficiency gains in June, says AMD made some ‘big bets’ on GPU design and efficiency, and they are now paying off in very meaningful ways. He asserts that current and future AMD GPUs are meeting the needs of demanding games but doing so without sky-high power budgets. This is a timely reminder, if you need it, that energy prices are rocketing and Nvidia is expected to launch some very hefty graphics cards tomorrow at its GeForce Beyond event.

With PC gamers demanding better, faster visuals at ever higher resolutions, it's no surprise that GPUs have started to push up and beyond 400W, writes Sam Naffziger, an SVP, Corporate Fellow, and Product Technology Architect at AMD. Naffziger says AMD has tuned its new RDNA architectures to break away from the cycle of ever-increasing power consumption using “thoughtful and innovative engineering and refinement.” Increased energy efficiency means lower-power graphics cards should run cooler and quieter, and be more readily available in smaller or mobile form factors.

AMD provided some evidence of the progress of its RDNA GPU architecture, which will enter its third generation later this year. For example, the transition from GCN to RDNA “delivered an average 50 percent performance-per-watt improvement,” according to Naffziger’s stats. Moving from the Radeon RX 5000 (RDNA) to the Radeon RX 6000 (RDNA 2) generation, AMD claims that it provided “a whopping 65 percent better performance per watt.”

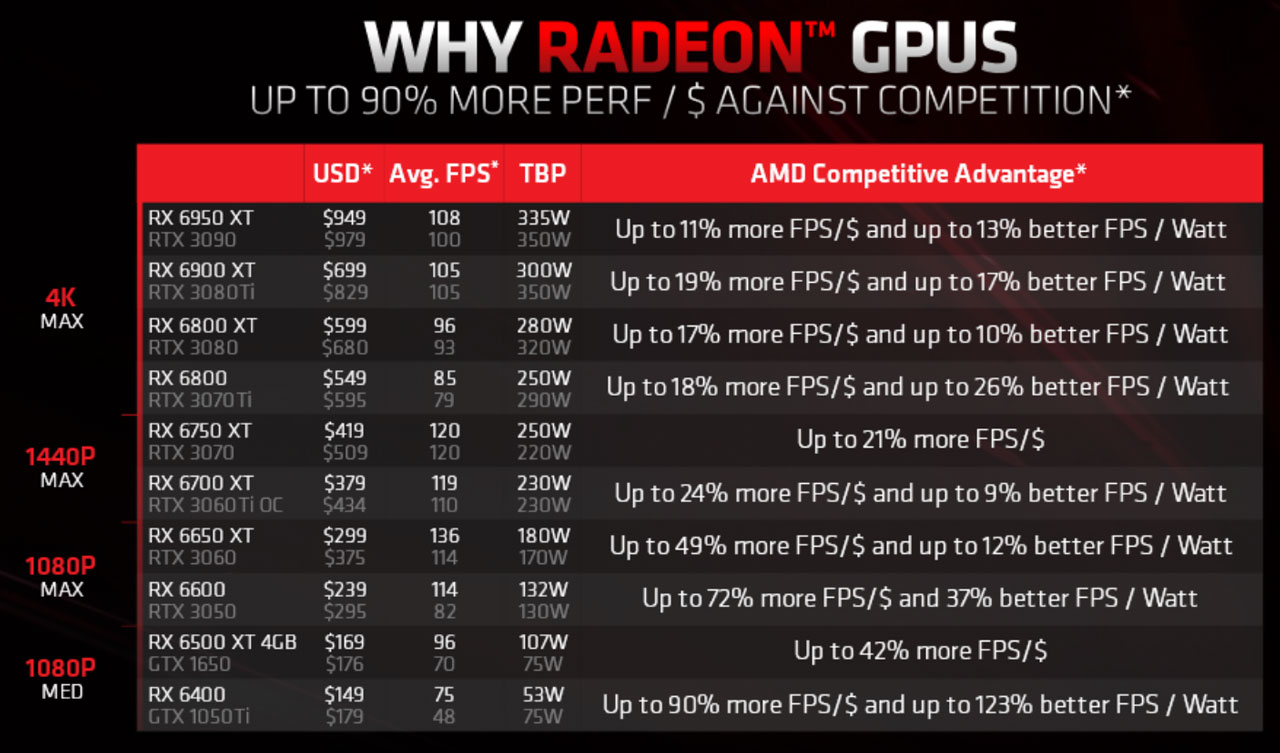

To spice up the comparisons, AMD provided an interesting chart illustrating its RDNA 2 vs Ampere “competitive advantage.” Obviously, these figures are cherry-picked to make AMD look good, and note the "up to" language. Check our GPU benchmarks hierarchy and our list of the best graphics cards for an independent look at how things stack up.

This slide is arguably misleading at best. If you ignore the table and just look at the headline, it sounds like AMD delivers a massive increase in value: up to 90% more FPS per dollar! Except that comparison uses the recent Radeon RX 6400 and pits it against the six-year-old GTX 1050 Ti, which also happens to cost more than the newer GTX 1650. Hmmm. At least the GPU prices AMD lists look reasonably accurate (other than the inclusion of the GTX 1050 Ti), and if you omit ray tracing games, the performance isn't too far off our own results either.

For example, the RX 6950 XT averaged 115.4 fps at 1440p ultra in our tests, while the RTX 3090 averaged 106.5 fps. That matches the 8% performance difference AMD shows in the slide — though we tested a reference RTX 3090 Founders Edition against an overclocked Sapphire RX 6950 XT Nitro+ Pure. Another point of contention would be showing RX 6600 versus RTX 3050, even though as we pointed out recently the RTX 2060 is Nvidia's best value option — it's faster and cheaper than the RTX 3050. But let's move on.
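As a quick sanity check on that 8% figure, the relative lead can be worked out directly from the two averages quoted above. This is only a sketch: the function name is ours, chosen for illustration, and nothing here comes from AMD's slide.

```python
def percent_faster(fps_a: float, fps_b: float) -> float:
    """Return how much faster card A is than card B, in percent."""
    return (fps_a / fps_b - 1.0) * 100.0


# Average frame rates from our 1440p ultra tests, as quoted in the text.
rx_6950_xt = 115.4
rtx_3090 = 106.5

lead = percent_faster(rx_6950_xt, rtx_3090)
print(f"RX 6950 XT lead: {lead:.1f}%")  # prints "RX 6950 XT lead: 8.4%"
```

The result lands close to the 8% AMD shows, with the caveat about the overclocked card still applying.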

AMD’s blog explains that RDNA 2’s progress over RDNA was largely due to optimized switching, a high-frequency design, and smarter power management. Then it talks about how it will continue to push forward with RDNA 3. Key technology refinements and introductions in RDNA 3 include the 5nm process, chiplet packaging tech, adaptive power management, and a next generation Infinity Cache.

AMD claims RDNA 3 will offer an “estimated >50 percent better performance per watt than AMD RDNA 2 architecture.” As we've noted before, that's likely a best-case scenario rather than a universal 50% improvement that you would see when comparing any two GPUs.
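To put the generational claims in context, here's a rough sketch of how they would stack up if they simply multiplied together. That is an assumption on our part: AMD's numbers are averages or estimates taken from different products and workloads, so treat the result as an illustration rather than a measurement. The names in the code are ours.

```python
from math import prod

# AMD's claimed performance-per-watt gains per generation, as fractions.
claimed_gains = {
    "GCN -> RDNA": 0.50,
    "RDNA -> RDNA 2": 0.65,
    "RDNA 2 -> RDNA 3": 0.50,  # AMD says ">50 percent"; 0.50 is used as a floor
}


def cumulative_gain(gains) -> float:
    """Multiply the per-generation gains into one overall factor."""
    return prod(1.0 + g for g in gains)


total = cumulative_gain(claimed_gains.values())
print(f"Claimed cumulative performance per watt vs. GCN: {total:.2f}x")
```

Taken at face value, the three claims compound to roughly 3.7 times the performance per watt of GCN, which shows how quickly best-case figures add up across generations.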

If much of this sounds familiar, that's not too surprising. We discussed our interview with Sam Naffziger in the above video, over two months ago. It also retreads a lot of the ground covered in our regularly updated AMD Radeon RX 7000-Series and RDNA 3 GPUs: Everything We Know feature.

AMD has repeatedly stated it will launch RDNA 3 GPUs before the end of the year. Our best guess right now is that we'll likely see the first arrivals in November. The rumored specifications are more than a little enticing, and we're very much looking forward to seeing how the two GPU behemoths match up in the coming months.

Mark Tyson is a news editor at Tom's Hardware. He enjoys covering the full breadth of PC tech, from business and semiconductor design to products approaching the edge of reason.

aalkjsdflkj: I hope the hype from AMD is somewhat close to accurate. With rising energy costs for both running my gaming PC and A/C in summer, efficiency is my #1 consideration after price/performance. I have to turn down a lot of knobs to get my 1070 to hit 60 fps in new games at 3440x1440 UWQHD. I'd love to stay near that 150/170W range with either an NVIDIA 4xxx or RDNA3 GPU but with a lot more eye candy on than I can currently run.

renz496:

aalkjsdflkj said: "I hope the hype from AMD is somewhat close to accurate. With rising energy costs for both running my gaming PC and A/C in summer, efficiency is my #1 consideration after price/performance. I have to turn down a lot of knobs to get my 1070 to hit 60 fps in new games at 3440x1440 UWQHD. I'd love to stay near that 150/170W range with either an NVIDIA 4xxx or RDNA3 GPU but with a lot more eye candy on than I can currently run."

Just go back to 1080p 60hz and use more power efficient gpu.

hotaru251:

renz496 said: "Just go back to 1080p 60hz and use more power efficient gpu."

depending on monitor not an option w/o killing visuals.

renz496:

hotaru251 said: "depending on monitor not an option w/o killing visuals."

6600XT /6600 should be good for 1080p 60hz. Also no need to go ultra on everything.

TheOtherOne:

aalkjsdflkj said: "I'd love to stay near that 150/170W range with either an NVIDIA 4xxx or RDNA3 GPU but with a lot more eye candy on than I can currently run."

I would like to have some of that whatever you are having plz! o_O

hotaru251:

renz496 said: "Also no need to go ultra on everything."

not even the issue.

if you have a larger 1440/4k monitor and try to play at 1080.....its look god awful.

aalkjsdflkj:

TheOtherOne said: "I would like to have some of that whatever you are having plz! o_O"

I'm honestly curious about your comment. What about hoping for better performance at 150/170W in the upcoming generations is unreasonable? I had been looking at the 6600XT (160W TBP) and 3060 (170W) for a 50-70% increase in framerates at 1440. Both of those cards would meet my needs but have been priced too high. Do you think the 4xxx series and RDNA 3 will be worse than those cards? People are undervolting 3070s to get in that range as well.

Efficiency (more eye candy or FPS per watt) increases each generation. I'm just looking to stay on the lower-powered part of the range.

digitalgriffin:

algibertt said: "seems AMD will never realize that it's not hardware but software make them fall way behind NVIDIA...."

Since when? AMD has made huge strides over the years. I've had 3 AMD GPU's since the 7970, RX580, 5700XT. If you include the APU's I've had a 2400G and 3400G. I have had zero driver/hardware issues. ZERO.

That's an old statement from 10 years ago about buggy drivers. While they aren't perfect, with isolated side cases, I honestly believe them to be no better nor worse than NVIDIA.

renz496:

hotaru251 said: "not even the issue. if you have a larger 1440/4k monitor and try to play at 1080.....its look god awful."

i mean just go back to the regular 1080p 60hz monitor. if 27 inch 1080p look awful then just get 24 or 21 inch one.

aalkjsdflkj:

renz496 said: "i mean just go back to the regular 1080p 60hz monitor. if 27 inch 1080p look awful then just get 24 or 21 inch one."

I "need" an ultrawide (upgraded from dual-head 1080p) for work. Most of my work involves having detailed engineering drawings on the left half of the screen with documents on the right. A large UWQHD is perfect for my workflow. And most of the games I play benefit more from more real-estate than FPS.