Intel Patent Confirms Work On Multi-Chip-Module GPUs

Borrowing pages from AMD's playbook

A recent patent published by Intel (via Underfox) may be the keystone for its future graphics accelerator designs - and it uses the Multi-Chip Module (MCM) approach. Intel describes a series of graphics processors working in tandem to deliver a single frame. The design points toward a hierarchy in workloads, with a primary graphics processor coordinating the entire workload. The company frames the MCM approach as a whole as a necessary step to steer silicon designers away from the manufacturability, scalability, and power-delivery problems that arise from ever-larger dies in the eternal search for performance.

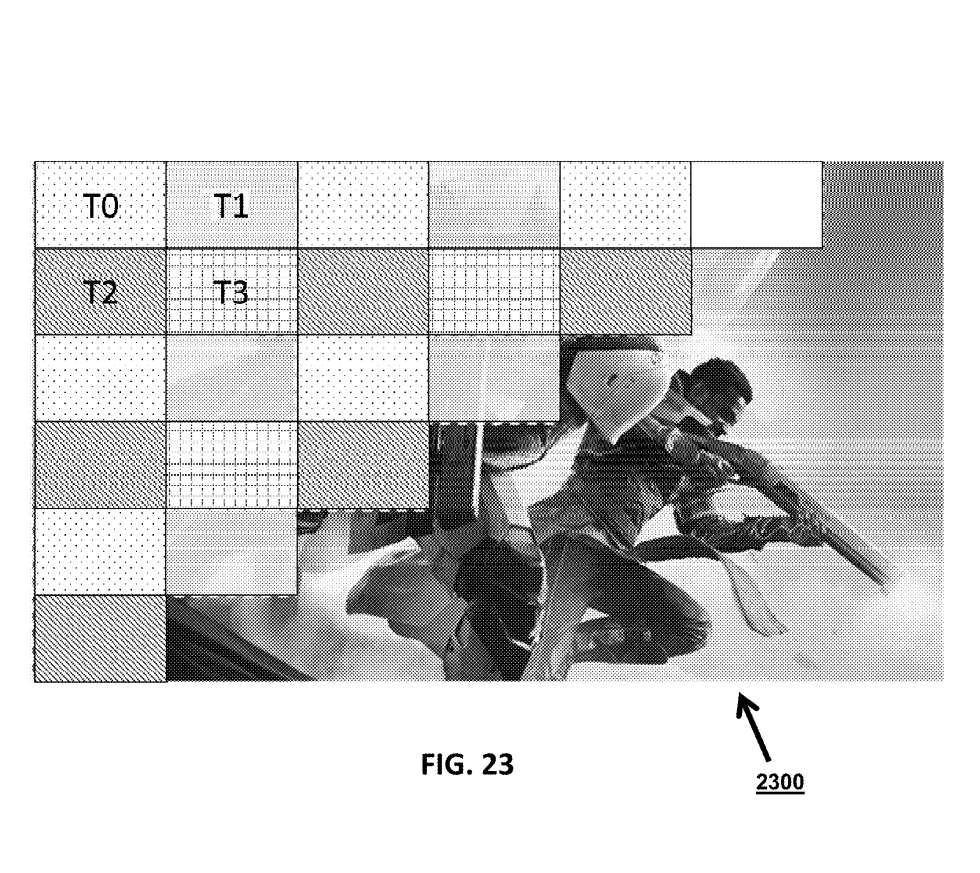

According to Intel's patent, graphics draw calls (instructions) are sent to "a plurality" of graphics processors. The first graphics processor then runs an initial pass over the entire scene. At this point, it is merely generating visibility (and occlusion) data: it decides what needs to be rendered, which is a fast operation on modern graphics processors. A number of the tiles generated during this first pass are then handed to the other available graphics processors. Each of them is responsible for rendering the portion of the scene that corresponds to its tiles. It is guided by the visibility data from the initial pass, which indicates which primitives fall within each tile, or shows that a tile has nothing to render.
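The two phases can be made concrete with a minimal Python sketch under stated assumptions. It is not Intel's implementation, and the patent as described does not specify tile sizes or an assignment policy.

The hypothetical `bin_primitives` function plays the role of the first GPU's visibility pass. It uses a conservative bounding-box test to record which screen-space triangles touch each 32-pixel tile (the tile size is an assumption). The hypothetical `distribute_tiles` function then hands every non-empty tile to one of the secondary GPUs, alternating by tile coordinates.

```python
from collections import defaultdict

TILE = 32  # hypothetical tile size in pixels


def bin_primitives(triangles, width, height, tile=TILE):
    """Visibility pass (sketch): map each screen tile to the primitives touching it.

    Uses a conservative bounding-box test, so a triangle may be listed in a tile
    it only grazes; real hardware would use exact coverage and depth tests.
    """
    tiles_x = (width + tile - 1) // tile
    tiles_y = (height + tile - 1) // tile
    bins = defaultdict(list)
    for prim_id, verts in enumerate(triangles):
        xs = [x for x, _ in verts]
        ys = [y for _, y in verts]
        # Clamp the bounding box to the screen and convert it to tile indices.
        x0 = max(0, int(min(xs)) // tile)
        x1 = min(tiles_x - 1, int(max(xs)) // tile)
        y0 = max(0, int(min(ys)) // tile)
        y1 = min(tiles_y - 1, int(max(ys)) // tile)
        for ty in range(y0, y1 + 1):
            for tx in range(x0, x1 + 1):
                bins[(tx, ty)].append(prim_id)
    return dict(bins)


def distribute_tiles(bins, num_gpus):
    """Assign every non-empty tile to a secondary GPU (ids 1..num_gpus-1).

    GPU 0 is assumed to be the primary that ran the visibility pass. Tiles
    absent from `bins` have nothing to render and are never scheduled.
    """
    if num_gpus < 2:
        raise ValueError("need a primary GPU plus at least one secondary GPU")
    secondaries = num_gpus - 1
    work = {gpu: [] for gpu in range(1, num_gpus)}
    for tx, ty in sorted(bins):
        # Alternate by (tx + ty): with two or more secondaries, horizontally
        # or vertically adjacent tiles land on different dies.
        gpu = 1 + (tx + ty) % secondaries
        work[gpu].append(((tx, ty), bins[(tx, ty)]))
    return work


# Usage: two triangles on a 128x64 screen, one primary and two secondary GPUs.
triangles = [
    [(5, 5), (20, 5), (5, 20)],      # inside tile (0, 0)
    [(40, 40), (90, 40), (40, 60)],  # spans tiles (1, 1) and (2, 1)
]
bins = bin_primitives(triangles, width=128, height=64)
work = distribute_tiles(bins, num_gpus=3)
print(work)
```

Skipping empty tiles mirrors the idea that the visibility data also shows where there is nothing to render. A real design would presumably balance tiles by estimated cost rather than by position alone.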

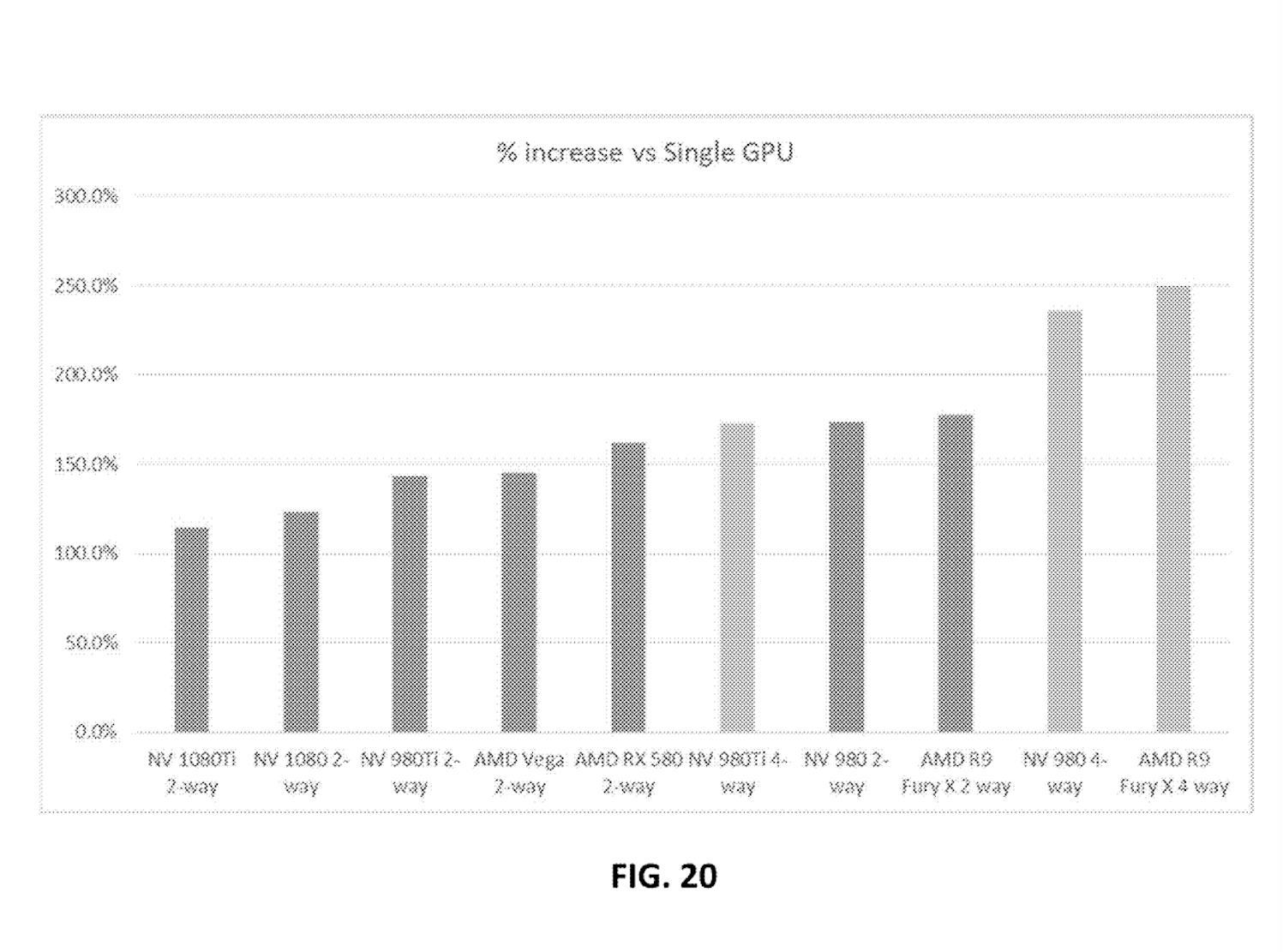

It thus seems that Intel is looking at integrating tile-based checkerboard rendering (a feature used in today's GPUs) with distributed vertex position calculation (out of the initial frame pass). Finally, once every graphics processor has rendered its piece of the puzzle that is a single frame (including shading, lighting, and ray tracing), their contributions are stitched together to present the final image on-screen. Ideally, this process would take place 60, 120, or even 500 times per second. Intel's hopes for multi-die performance scaling are thus laid bare. Intel then cites performance results from AMD and Nvidia graphics cards running in CrossFire or SLI modes to illustrate the potential performance gains of classical multi-GPU configurations. The scaling of such classical setups, however, should be lower than what a true MCM design could achieve.
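Another small, hypothetical Python sketch can illustrate the stitching step and the time budget it has to fit into. It assumes that each die returns finished pixel values for the tiles it owns and that the results are simply copied into one framebuffer. How Intel's design actually composites the output is not described here.

The helper `frame_budget_ms` converts the 60, 120, and 500 frames-per-second targets mentioned above into per-frame budgets of roughly 16.7 ms, 8.3 ms, and 2 ms.

```python
def frame_budget_ms(fps):
    """Milliseconds available to render and stitch one frame at `fps`."""
    return 1000.0 / fps


def stitch(width, height, tile, rendered_tiles, clear=0):
    """Copy per-die tile outputs into one framebuffer (a list of pixel rows).

    `rendered_tiles` maps (tx, ty) to a 2D list of pixel values for that tile.
    Tiles missing from the map (nothing to render) keep the `clear` value, and
    pixels falling outside the frame are ignored.
    """
    frame = [[clear] * width for _ in range(height)]
    for (tx, ty), pixels in rendered_tiles.items():
        for row, line in enumerate(pixels):
            y = ty * tile + row
            if y >= height:
                break
            for col, value in enumerate(line):
                x = tx * tile + col
                if x >= width:
                    break
                frame[y][x] = value
    return frame


# Usage: hypothetical outputs from two dies for a 4x2-pixel frame of 2x2 tiles.
die_a = {(0, 0): [[1, 1], [1, 1]]}
die_b = {(1, 0): [[2, 2], [2, 2]]}
frame = stitch(4, 2, 2, {**die_a, **die_b})
budgets = {fps: round(frame_budget_ms(fps), 2) for fps in (60, 120, 500)}
print(frame)
print(budgets)
```

Every die's rendering, plus the compositing step, has to finish inside that per-frame budget. This suggests why the speed of the links between dies matters so much for a multi-die GPU.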

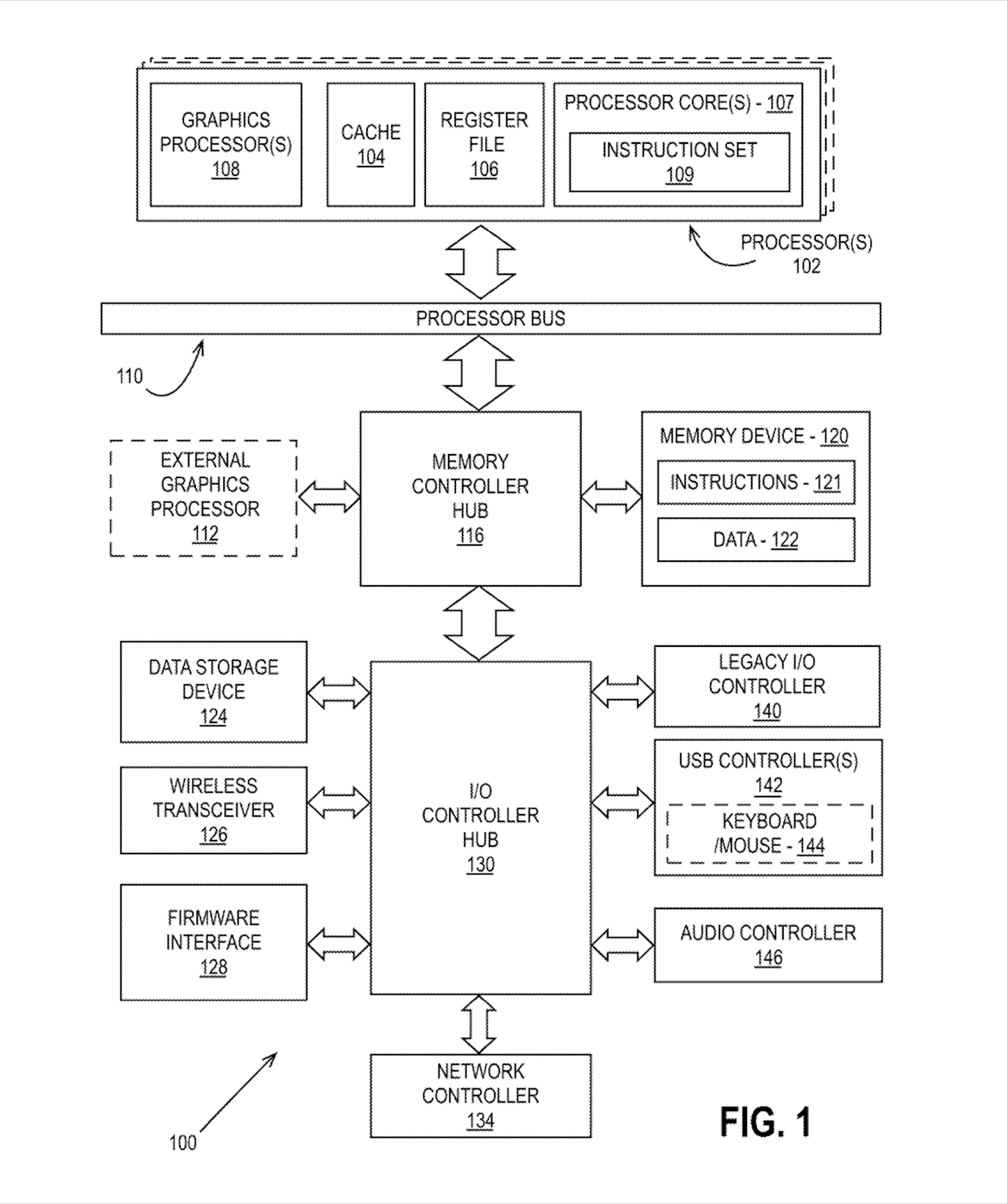

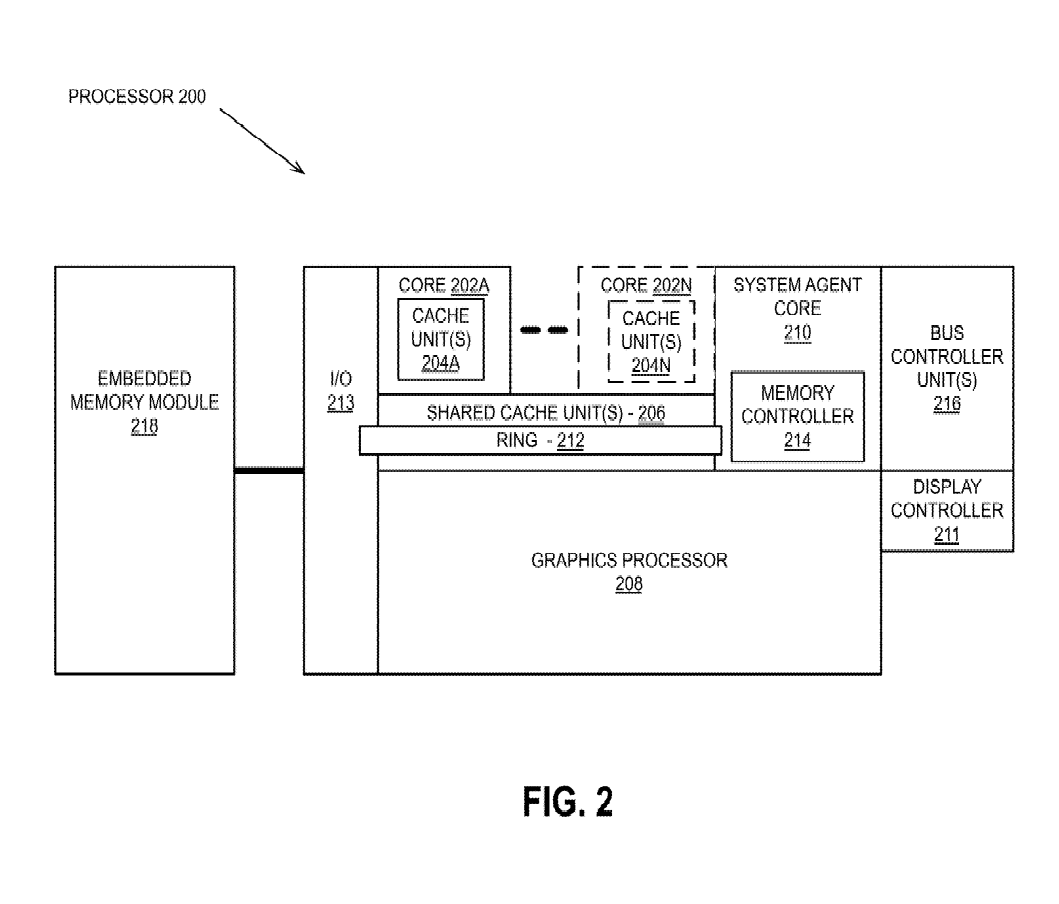

Intel's patent is vague on architectural details, however, and covers as much ground as it possibly can - which is usual in this space. For example, it allows for designs with multiple complete graphics processors working in tandem, or with just sections of graphics processors. The method applies to "a single processor desktop system, a multiprocessor workstation system, a server system," as well as to system-on-chip (SoC) designs for mobile. These graphics processors, or "embodiments" as Intel calls them, are even described as accepting RISC, CISC, or VLIW instructions. But Intel does seem to be taking a page straight out of AMD's playbook, explaining that its MCM design could take a "hub" form, with a single die aggregating the memory and I/O controllers.

As semiconductor miniaturization slows (and continues to slow), companies have to find ways to scale performance while maintaining good yields. At the same time as they have to innovate on architecture, semiconductor manufacturing processes are becoming ever more complex and exotic. They require more manufacturing steps and more masks, and, finally, extreme ultraviolet (EUV) lithography. We've been riding the diminishing-returns part of the curve for a while now: it's getting harder and harder to increase transistor density, and growing die areas further would hurt wafer yields. The only solution is to pair several smaller dies together: it's easier to get two working 400 mm² dies than one fully working 800 mm² die.
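A common first-order way to see why smaller dies help is the Poisson yield model, Y = e^(-A·D0), where A is the die area and D0 is the defect density. The sketch below uses two illustrative assumptions, not TSMC or Intel figures:

- a D0 of 0.1 defects per cm²;
- a standard gross-dies-per-wafer approximation for a 300 mm wafer.

Under this model, the odds that two specific 400 mm² dies are both good equal the odds that one 800 mm² die is good. The advantage comes from discarding each defective small die on its own and pairing up the good ones, so more of the wafer ends up in sellable products.

```python
import math

WAFER_DIAMETER_MM = 300.0
DEFECT_DENSITY_PER_CM2 = 0.1  # assumed value, for illustration only


def poisson_yield(die_area_mm2, d0_per_cm2=DEFECT_DENSITY_PER_CM2):
    """Fraction of defect-free dies under the Poisson model Y = exp(-A * D0)."""
    area_cm2 = die_area_mm2 / 100.0
    return math.exp(-area_cm2 * d0_per_cm2)


def gross_dies_per_wafer(die_area_mm2, wafer_d=WAFER_DIAMETER_MM):
    """Common approximation: wafer area / die area, minus an edge-loss term."""
    return int(math.pi * wafer_d ** 2 / (4 * die_area_mm2)
               - math.pi * wafer_d / math.sqrt(2 * die_area_mm2))


def good_dies(die_area_mm2):
    """Expected number of defect-free dies per wafer, rounded down."""
    return int(gross_dies_per_wafer(die_area_mm2) * poisson_yield(die_area_mm2))


# Two 400 mm^2 dies per product versus one monolithic 800 mm^2 die.
small_products = good_dies(400) // 2
big_products = good_dies(800)
print(f"400 mm^2 yield: {poisson_yield(400):.1%}, two-die products per wafer: {small_products}")
print(f"800 mm^2 yield: {poisson_yield(800):.1%}, monolithic products per wafer: {big_products}")
```

With these assumed numbers, the two-die approach yields 47 products per wafer versus 28 monolithic ones. The real gap depends on actual defect densities, packaging costs, and inter-die overheads.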

AMD, for one, has found great success with its MCM-based Ryzen CPUs ever since their first generation. The red company has yet to deliver MCM-based consumer GPUs, but its next-gen Navi 31 and Navi 32 may feature the technology. And we know Nvidia, too, is actively exploring MCM designs for its future graphics products, following its new Composable On Package GPU (COPA) design approach. The race has been on for a long time, since even before AMD came out with Zen. The first company to deploy an MCM GPU design should have an advantage over its competitors, with higher yields enabling higher profits - or lower market pricing. And with AMD, Intel, and Nvidia all contracting the same TSMC manufacturing nodes for the foreseeable future, even a slight advantage could have an outsized market impact.


Francisco Pires is a freelance news writer for Tom's Hardware with a soft side for quantum computing.

-

rluker5

"Borrowing pages from AMD's playbook"?

Pentium D in 2005 was MCM. Saying that is like claiming Native Americans are borrowing pages from the European settlers' playbook by living in the U.S.

Also Intel seems to be following the proper method for gaming gpu mcm with a master chiplet and slave chiplets. Software can't act as hardware and just tossing chiplets next to each other on a substrate won't do the job for games, just compute. There has to be a chip in charge of the output.

Which company will be first to implement this remains to be seen.

VforV

"The first company to deploy an MCM GPU design should have an advantage over its competitors"

Yes and we already know which will be 1st, AMD with RDNA3, so why the question?

It's not like intel and nvidia can change their plans and press the Super Advance Tech Progress button to launch an MCM GPU before RDNA3 launches before the end of the year... :rolleyes:

rluker5

In reply to VforV: IF AMD does MCM it doesn't appear to be any more effective for games than previous MCM like 295x2, 7990, etc. The rumors out there portray a normal gpu next to a normal gpu. With compute the cpu is the master chip in charge of the singular output and this can work. With games the cpu can only be the master through fickle software tricks that are currently the purview of the game devs and MCM can't work.

This patent by Intel makes it look like it is already the gaming industry leader in MCM and AMD can't press the super advance tech progress button to fix their rumored misguided failure of MCM RDNA3.

hotaru251

ah yes....the same exact thing (mcm) that intel jabbed at amd back when epyc was new and called it "glued together".

not 1st time intel's tried it. likely won't be last.

VforV

In reply to rluker5: Are you living in fantasy land? Intel simp fantasy land? Looks that way...

Meanwhile the rest of the world knows how good Zen CPUs are already, and some of us that follow the leaks also know about the at least 2x performance, if not more, that RDNA3 with MCM (which follows in the footsteps of Zen tech, but adjusted for GPUs) will have over RDNA2.

Watch how AMD retakes the gaming crown next gen at the end of 2022, you and intel and nvidia fanbois are all invited. My popcorn is ready.

In the meantime intel still needs to prove 1) they can actually launch Arc and 2) that Arc is not horrible and at least decent.

You can keep your supremacy wishes and dreams of intel in GPU space inside your head for at least 2 years, the battle is between nvidia and AMD until maybe 2nd or 3rd gen intel GPUs. So let's see how they do with the 1st gen, first. Pffft.

rluker5

In reply to VforV: Have you seen rumors of AMD MCM gpus where it isn't just 2 gpu dies next to each other? If so, please post the link and educate me.

If that is all that they are doing then it is driver and/or game directed MCM aka CFX.

I've used that, you probably have as well, and it isn't the same as cpu driven MCM compute, or the hardware driven, game agnostic MCM you are hoping AMD will implement while going only by rumors of the rebirth of CFX. AMD mgpu is likely for compute. In 2022 CFX sucks.

This Intel patent is detailing a vague, yet likely viable approach to that MCM you are hoping for. Hopefully one of the three builds it.

saltweaver

In reply to VforV: Most likely AMD shall launch Zen4 while Nvidia shall launch next-gen RTX 4000 cards built on the 5nm process node. The RDNA 3 series is hitting the 2023 window. Suddenly it's getting crowded.

InvalidError

In reply to VforV: While AMD may be first with a consumer MCM GPU, Intel has its 40+-tile Ponte Vecchio compute monster for the Aurora supercomputer coming up this year, taking MCM integration to a whole new, massive scale.

VforV

In reply to rluker5: I remember MLiD saying in one of his 2021 leaks about future AMD GPUs that they have more than 2 chiplets, like they have for CPUs, and that they are also working on tiles at the same time.

I really don't think AMD is doing just one thing and will fall behind, when now they are actually ahead...

In reply to saltweaver: Anything is possible, but most likely it will not be like you say. Dr. Lisa Su herself said a few days ago that their planned AMD products, Zen4 and RDNA3, are both coming in 2022. It was in the news on all sites, here too.

In reply to InvalidError: Sure, but in 2021 it was AMD with the 1st exascale supercomputer, Frontier. So you see, this is how tight competition works, so who will take 2023 then?

jp7189

In reply to rluker5: I agree that a CFX-like implementation would be a huge letdown at this point in time, but AMD's work with Infinity Cache in RDNA2 gives us a hint that multiple GPUs are likely to share the same memory pool. It could be extrapolated that other MCM quirks could be hidden behind a similar caching mechanism. We shall see. Keep an eye on frametime consistency metrics. I think consumer gaming will be more sensitive to consistent, real-time output.