Nvidia Ampere Graphics Cards May Use New 12-Pin PCIe Power Connector

A rumor has been spreading in hardware circles that Nvidia's upcoming Ampere graphics cards might use a 12-pin PCIe power connector. Some news outlets are reporting the rumor as fact, while others are treating it as a sham. Our own insider source has confirmed that the connector is indeed real and has been submitted to the PCI-SIG standards body. However, it remains to be seen whether it will pass approval.

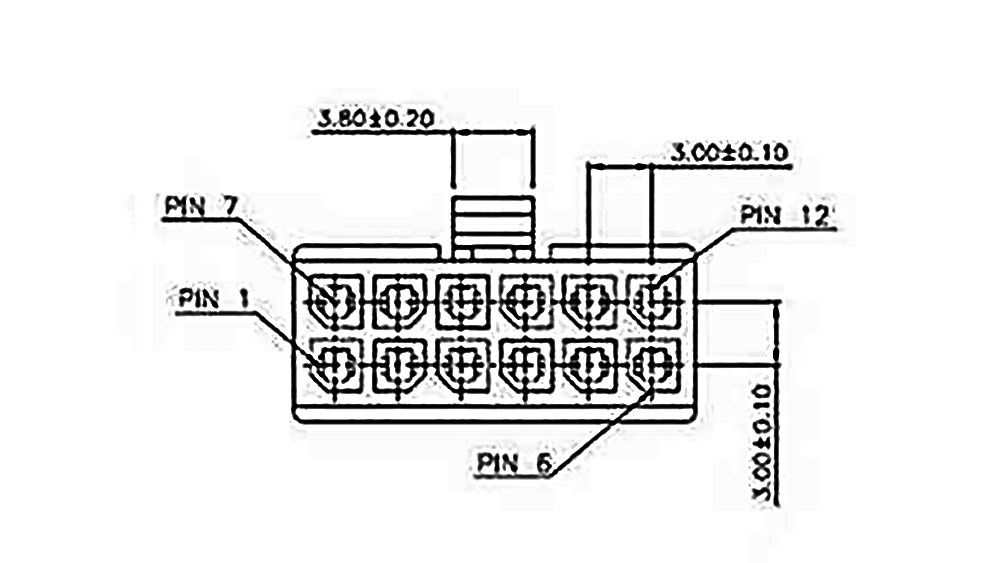

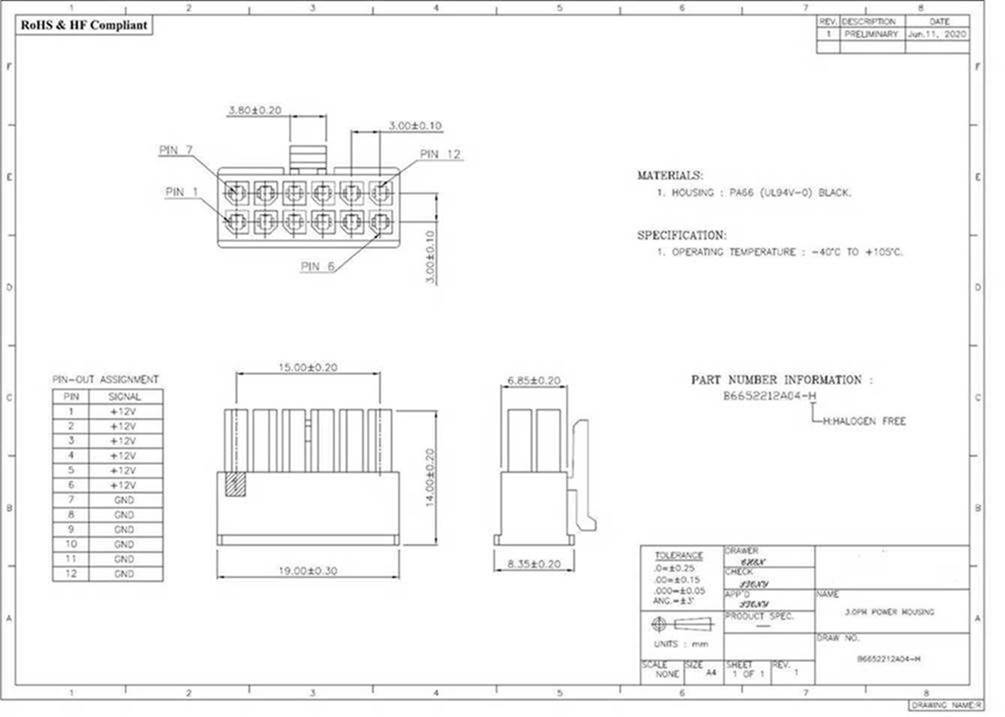

The 12-pin PCIe power connector measures 19 x 14 x 8.35mm, give or take 0.30mm. To get an idea of its size, the 12-pin connector is roughly equivalent to two normal 6-pin PCIe power connectors placed side by side.

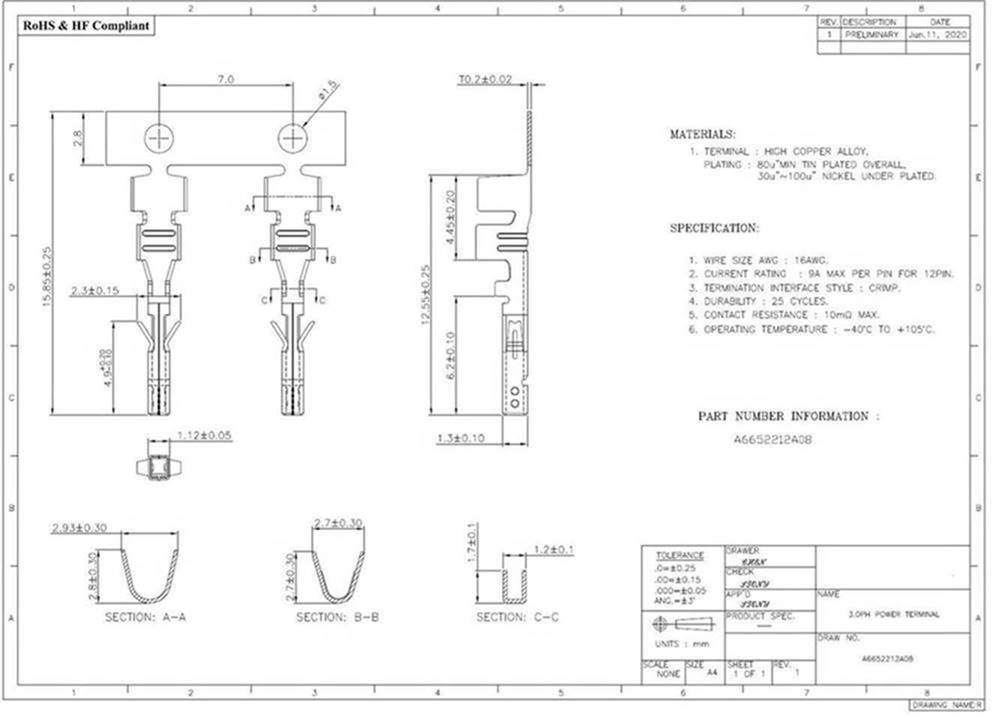

According to the submitted technical illustrations, the 12-pin PCIe power connector would come equipped with six 12V pins and six ground pins. Each 12V pin is rated for a maximum of 9A. Therefore, a single 12-pin PCIe power connector can theoretically deliver up to 648W (6 pins x 12V x 9A), which blows every existing PCIe power connector out of the water.

The 12-pin PCIe power connector is essentially a combination of two 6-pin PCIe power connectors, albeit with a different pin layout. As a result, power supply vendors can get away with just swapping the cable and its connector for one with the new 12-pin PCIe layout. This would allow them to save money since it wouldn't involve touching the power supply's PCB.

The wire gauge will play a significant role in power delivery. While the pins on the connector support 648W, you'll need 16AWG cables to get there. This shouldn't be a problem since we don't expect Ampere to draw that much power. The majority of modern power supplies employ 18AWG cables, which have a maximum ampacity of 7A. Even with 18AWG cables, the 12-pin PCIe power connector can supply up to 504W of power, which should be more than enough for any graphics card.
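For readers who want to check the arithmetic, here is a minimal sketch of how the 648W and 504W figures fall out of the numbers above. It assumes the per-pin current (9A for the pin rating with 16AWG cables, 7A for 18AWG) is the only limiting factor; the function and constant names are illustrative and not part of any specification.

```python
# Back-of-the-envelope connector power: live pins x rail voltage x amps per pin.
# Figures come from the article; names here are illustrative assumptions.

RAIL_VOLTAGE = 12.0  # volts on each live pin
LIVE_PINS = 6        # six 12V pins (the other six pins are ground)


def connector_watts(amps_per_pin, live_pins=LIVE_PINS, volts=RAIL_VOLTAGE):
    """Theoretical ceiling for the connector at a given per-pin current."""
    return live_pins * volts * amps_per_pin


# Per-pin current limits quoted in the article (assumed to be the bottleneck).
limits = {"16AWG (9A pin rating)": 9.0, "18AWG (7A ampacity)": 7.0}

for label, amps in limits.items():
    print(f"{label}: {connector_watts(amps):.0f}W")
```

Only the six 12V pins count toward the total, since the ground pins simply carry the return current.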

Ampere graphics cards are rumored to pull up to 350W, which could explain Nvidia's insistence on a new PCIe power connector. Even with the current PCIe power connectors, it's possible to feed a graphics card more than 350W. The PCIe slot provides 75W and a single 8-pin PCIe power connector delivers up to 150W. So a combination of the PCIe slot with two 8-pin PCIe power connectors is good for 375W. If required, you could also add another 6-pin or 8-pin PCIe power connector to the mix. High-end graphics cards, such as the MSI GeForce RTX 2080 Ti Lightning Z or EVGA GeForce RTX 2080 Ti K|NGP|N, depend on three 8-pin PCIe power connectors, so it's not uncommon to find graphics cards with three power connectors.
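The power budget described above can be tallied with a short sketch. It uses the rated figures of 75W for the slot and 150W for an 8-pin connector from the text, plus the 75W rating of a 6-pin connector; the helper name and dictionary are hypothetical, and real cards may draw differently from each source.

```python
# Rated power budget for a graphics card: PCIe slot plus auxiliary connectors.
# Ratings are nominal figures; names here are illustrative assumptions.

SLOT_WATTS = 75
AUX_WATTS = {"6-pin": 75, "8-pin": 150}


def board_power_budget(aux_connectors):
    """Sum the slot rating and the rating of each auxiliary connector."""
    return SLOT_WATTS + sum(AUX_WATTS[name] for name in aux_connectors)


print(board_power_budget(["8-pin", "8-pin"]))           # slot + two 8-pin
print(board_power_budget(["8-pin", "8-pin", "8-pin"]))  # slot + three 8-pin
```

Two 8-pin connectors give the 375W mentioned above, and a three-connector card like the ones named has a rated budget of 525W, both comfortably above the rumored 350W.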

Perhaps it isn't a matter of whether current offerings can provide enough power for Ampere; it more likely comes down to marketing. Having multiple PCIe power connectors can give a bad first impression that the graphics card is a power-hungry one. To the untrained eye, a graphics card with a single PCIe power connector gives an illusion of power efficiency.

At the moment, it's uncertain whether the 12-pin PCIe power connector will be exclusive to Nvidia's Founders Edition models or will also appear on aftermarket models. At any rate, we shouldn't despair since the PCI-SIG group might not even approve the 12-pin PCIe power connector.

Zhiye Liu is a news editor, memory reviewer, and SSD tester at Tom’s Hardware. Although he loves everything that’s hardware, he has a soft spot for CPUs, GPUs, and RAM.

nofanneeded The problem is not in the number of pins in GPU connectors, it is in its SIZE and the huge wires used ...

The wires used for the connectors are huge. If you calculate it right, you can use thinner wires, and this is visible in all compact desktops that use non-standard power supplies and non-standard cables and still house high-end GPUs...

Pat Flynn If I'm not mistaken, doesn't DC current flow from negative to positive? If that's the case, would this connector (like all other 12v connectors) thus have a maximum of 9A per pin, for half the pins? Aka, ~320W instead of the 648W?

awolfe63

Pat Flynn said: If I'm not mistaken, doesn't DC current flow from negative to positive? If that's the case, would this connector (like all other 12v connectors) thus have a maximum of 9A per pin, for half the pins? Aka, ~320W instead of the 648W?

6 pins (half) * 12V * 9A = 648W.

Gillerer First: While the PCIe slot may supply a total of 75W, only 5.5A may be drawn from 12V, so that maxes out at 66W. (I suppose you could use the 3.3V for things like RGB...)

*

Second: The PCIe power connectors have a level of redundancy built in, so you can't assume you can just add multiple ones together:

6-pin has 2x +12V and 2x ground, but only needs one of each to supply the rated 75W. This means one wire/connector of each can be damaged and it will still meet any safety guidelines.

8-pin has 3x +12V and 3x ground; one extra of each. The number of "needed" wires doubled from the 6-pin, so the power rating also doubles.

*

If the 12-pin were to have the same 1 extra wire (for both +12V and ground), that leaves 10 pins. It's likely there will be a couple of sense pins, so you'd be left with 4 pairs for power delivery (5 pairs connected of which 1 for redundancy) and you'd have 4x the capacity of the 6-pin, or 300W.

jasonf2 I have been watching this blow up with headlines like the "ampere cards will require you to replace most of your computer". What a joke. Even if you have to replace a psu to support the new header you are out somewhere between $250-$450 (ultra premium psu) for a video card upgrade that will more than likely run north of $1500 in the first place. While I am sure that there are some people out there that are moving from the 1080ti - 2080ti - 3080ti sequentially the cost of doing so in today's graphics card market is cost prohibitive for most. For me that means that my higher end graphics card purchase is typically on a new build and at that point I am buying a psu anyway. Other than cost replacing a psu takes like 10 minutes and a screwdriver and certainly isn't replacing most of my computer.

The idea that this will move people to AMD is just clickbait. People that are cycle upgrading like this are going after the performance crown. If AMD comes out with something that actually competes with the 3080(ti) they will sell cards to this group. If not they are going to be where they are today in the graphics card arena regardless of a power connector.

Personally my bet is that these cards are going to ship with an adapter to hook into the header especially after all of this hype. While the spec itself may need the ability to feed 600 watts the cards won't need it and the adapter can be made to the card spec. I would bet on a splitter that will combine a number of existing psu cables.

Going forward developing a standardized high wattage GPU power connector makes sense for the industry anyways. The new lightweight psu standard coming out (12 volt only) is going to make a mess for those trying to upgrade factory built pc's. Requiring a dedicated discrete gpu plug would at least cleanup the upgrade path going forward for those purposes and at those wattage requirements should suit the gpu purposes for the foreseeable future.

Diceman_2037 Considering it's the ATX standards body that would yay or nay the introduction of a new socket and connector, this fake news is debunked.

Droidfreak

jasonf2 said: I have been watching this blow up with headlines like the "ampere cards will require you to replace most of your computer". What a joke. Even if you have to replace a psu to support the new header you are out somewhere between $250-$450 (ultra premium psu) for a video card upgrade that will more than likely run north of $1500 in the first place.

I actually did a new build in Q4 2019/Q1 2020 and spent almost 2K € without the GPU. ROG Strix Helios case, i9 9900KF, Maximus XI Hero Wifi, ROG Strix RGB 360 AIO, 1TB 970 Pro, 32 GB TridentZ Royal... Now the fun fact: I'm still running a GTX 970, lol. And yes, I did buy a premium PSU (ROG Thor 850P). Cmon, my CPU draws up to 340 Watts (!) during a P95 stress run at 5.1 GHz, maybe ASUS et al. need to introduce a new CPU PWR connector?

So if I was forced to replace the PSU for an Ampere card to work I would seriously consider AMD. Because guess what? It would still be waay faster than my GTX 970 😁

spongiemaster

Gillerer said: I'm sure there will be adapters for 2 x 8-pin --> 12-pin, or something.

If this rumor is true, the adapter will come in the box of every video card that needs one. Nvidia is not going to be stupid enough to ship a card that either requires a new power supply that they make no money from the sales of or requires an adapter that may or may not be readily available from third party vendors on launch day.