ViewSonic Upgrades LG’s 1ms IPS Panel With Mouse Bungees, Headset Hook, G-Sync

When shopping among different monitor panel types, gamers seeking speed will typically opt for TN. In June, though, LG shook up this conversation by introducing the first IPS gaming monitor with a 1ms response time, along with the image quality and superior viewing angles expected of IPS technology. Now, ViewSonic is taking this same groundbreaking panel and enhancing its package with thoughtful gaming perks, like a mouse bungee and true G-Sync, rather than G-Sync Compatibility.

The 27-inch model in the LG UltraGear Nano IPS gaming monitor lineup has QHD resolution and a 144 Hz refresh rate. It’s G-Sync Compatible, meaning it’s been certified to successfully run Nvidia G-Sync despite lacking Nvidia's proprietary module. A ViewSonic exec told me that the gaming community is asking for G-Sync because of features like overdrive and ultra low motion blur that you can't get with G-Sync Compatibility, plus the ability to run adaptive sync at lower refresh rates.

The ViewSonic Elite XG270QG, which I got to check out in person (though not connected to a PC), instead uses true G-Sync with the same LG panel. It’s also QHD but can hit a 165 Hz refresh rate when overclocked. With an identical “IPS Nano Color” LG panel, both monitors cover 98% of the DCI-P3 color gamut. LG’s Nano Color technology means each particle is treated with an extra phosphorous layer, allowing it to absorb more light and colors for greater color gamut coverage.

In addition to true G-Sync, the Elite XG270QG stands out from the LG with convenience features, namely two mouse bungees (for right- and left-handed users) that keep your gaming mouse’s cable from snagging during aggressive gaming, and a hook for stashing your gaming headset. All of this points to a cleaner desk that lets you focus on what matters: gaming.

Like the LG UltraGear 27GL850-B, the Elite XG270QG comes with RGB lighting. There’s an RGB hexagon on the back of the aluminum build, but the ViewSonic exec we spoke with admitted that it may not be bright enough to make a huge impact on your wall. So ViewSonic also incorporated an RGB strip at the bottom of the panel, which cast a nice light on our office’s conference room table, even with the room lights at max brightness. You can easily turn off either (or both) RGB effects with a joystick on the bottom of the monitor should those lights distract you.

In that same vein, ViewSonic told us that it wanted to keep things more subtle than the aggressive, colorful and angular looks of other gaming monitors, so the Elite XG270QG could be versatile, perhaps even fitting into an office setting or appealing to older users. In fact, ViewSonic’s more monotone approach will likely be the Elite’s look for the foreseeable future. The stand was also redesigned, with a hook handle up top and a central loop you can run wires through.

Additionally, the lighting will be controllable via the Elite RGB Controller on-screen display (OSD) software, which will be the first app for ViewSonic’s Elite line when it debuts in November. It lets you pick colors and browse through different lighting modes.


The XG270QG will debut worldwide in November for an estimated $599.99, about $100 more than the LG UltraGear 27GL850-B with the same panel.

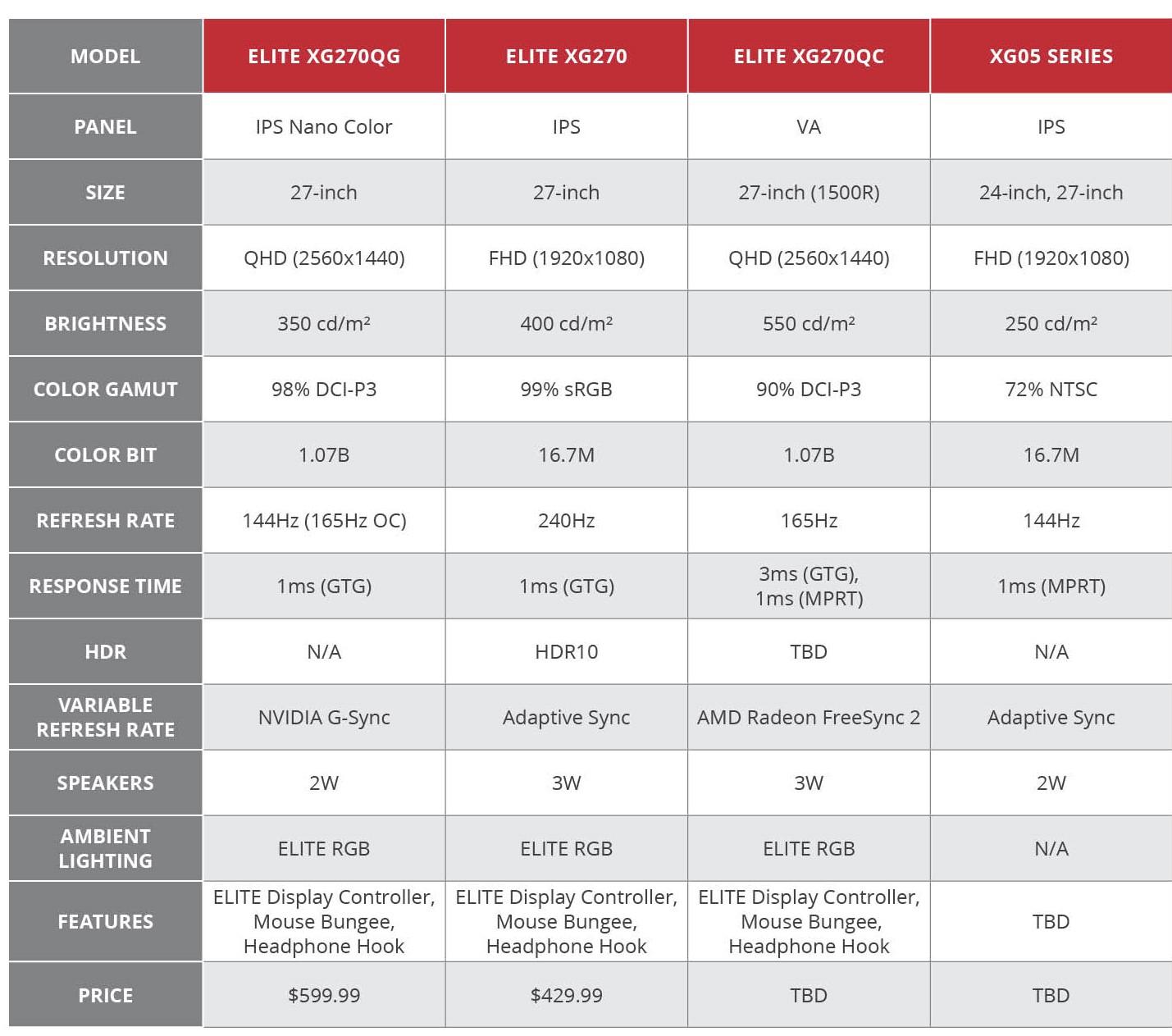

ViewSonic is also releasing two other Elite monitors with the Elite Display Controller, mouse bungee and headphone hook (the XG270 in November and the XG270QX in Q1 2020), plus its XG05 series (in North America in Q1 2020). You can see full specs for all the upcoming Elite monitors below.

ViewSonic Elite Monitors Specs

An Elite Ally

ViewSonic is also sharing details on a new touchscreen peripheral called the Elite Ally. According to the vendor, gamers will be able to use the device for easy access to OSD settings, like game modes, FreeSync or G-Sync, RGB lighting, brightness, HDR and contrast. The accessory will only work with the Elite line of monitors and will come out in November. The price wasn’t disclosed.

Scharon Harding has over a decade of experience reporting on technology with a special affinity for gaming peripherals (especially monitors), laptops, and virtual reality. Previously, she covered business technology, including hardware, software, cyber security, cloud, and other IT happenings, at Channelnomics, with bylines at CRN UK.

-

Giroro: Does anyone actually know when the release date is for LG's UltraGear 27GL850-B?

In the past couple weeks B&H has pushed back availability from early October to November, and dropped Best Buy from their "Where to Buy" section. All the early reporting for this monitor said it would come this summer.... which is over.

I'm similarly frustrated that TCL's new 55R625 Series 6 TVs were also supposed to come out "this summer" and still haven't surfaced.

bit_user:

Giroro said: Does anyone actually know when the release date is for LG's UltraGear 27GL850-B?

Allegedly, it's already shipping. You can find reviews of it and some people claim to have them, even though availability is spotty.

bit_user: I'm disappointed the table indicates no HDR. I'd also like to know whether this monitor includes FreeSync/FreeSync 2 support.

What's attracting me to LG's monitor is the combination of high refresh rate + HDR. For me, 2560x1440, 27", and non-curved are also requirements. For a larger display, I'd go with curved, but I'd also want 4k and I don't care to spend on a graphics card that can drive it well. So, if it doesn't have quality issues (and I'm reading some people are experiencing bleed), I think LG's is the monitor for me.

BTW, I'm skeptical of array backlighting and I don't even want a monitor so bright that it'd hurt my eyes. Two more points in favor of this panel, vs. some of the more "premium" options.

cryoburner:

"...and true G-Sync, rather than G-Sync Compatibility."

I would rather have a "G-Sync Compatible" (FreeSync) display than one with "True G-Sync". With FreeSync over DisplayPort, you get a screen that should support adaptive sync with cards from all the major vendors, including Nvidia, AMD and likely Intel once they enter the graphics card market, along with some titles for the Xbox One for screens that support the feature over HDMI, and I would bet that the upcoming generation of consoles from Microsoft and Sony will both offer wider support for it. With "True G-Sync" you get what amounts to the same thing, only locked to Nvidia graphics cards and nothing else, and there's no telling who will be providing the best options for graphics hardware a few years down the line.

bit_user said: What's attracting me to LG's monitor is the combination of high refresh rate + HDR...

...BTW, I'm skeptical of array backlighting and I don't even want a monitor so bright that it'd hurt my eyes.

Yeah, I'm not sure I'd be all that fond of super-bright full-array backlighting on an HDR screen, at least on a desktop, where it's filling one's field of view in what may be a relatively dimly lit room.

However, full array backlighting would pretty much be a necessity to get an HDR experience out of a panel like this. Judging by the TechSpot/HardwareUnboxed review for the LG 27GL850, that screen's panel has a relatively weak native contrast ratio at under 800:1, placing its contrast even below most of the recent TN panels they tested, despite being IPS, which are usually a bit better...

https://www.youtube.com/watch?v=T5Loh7vOcVM

https://www.techspot.com/review/1908-lg-27gl850

Even if you don't want parts of a scene in HDR content to be uncomfortably bright, one of the primary points of HDR is to offer an increased range of brightness levels, and you are unlikely to see any benefit from that without either making the brightest parts of a scene brighter, or the dimmest parts dimmer. A panel like this isn't going to provide decent HDR output using a standard backlight, since it doesn't have enough contrast on its own. A VA panel provides around 3 to 4 times the native contrast, and would almost certainly be a better starting point for HDR without array backlighting.

The screen's backlight apparently doesn't even meet the requirements for HDR400 certification, only offering up to 350 nits of peak brightness, so while it technically may "support" an HDR input, it would be a stretch to consider the output "HDR".

The main draw of that LG screen (and this one that apparently uses the same panel) is that they offer some of the fastest response times for IPS. Again, it's probably a stretch to consider it a "1ms" response time, since that apparently requires setting overdrive to a level that causes substantial inverse ghosting, but even at normal overdrive levels, they perform pixel transitions faster than other IPS displays. Of course, again, that results in below-normal contrast for an IPS screen, so there's a tradeoff there in terms of image quality.

bit_user: Thanks for the wealth of info.

cryoburner said: Even if you don't want parts of a scene in HDR content to be uncomfortably bright, one of the primary points of HDR is to offer an increased range of brightness levels, and you are unlikely to see any benefit from that without either making the brightest parts of a scene brighter, or the dimmest parts dimmer. A panel like this isn't going to provide decent HDR output using a standard backlight, since it doesn't have enough contrast on its own. A VA panel provides around 3 to 4 times the native contrast, and would almost certainly be a better starting point for HDR without array backlighting.

The screen's backlight apparently doesn't even meet the requirements for HDR400 certification, only offering up to 350 nits of peak brightness, so while it technically may "support" an HDR input, it would be a stretch to consider the output "HDR".

What I really care about is reducing banding. That's why I want a 10-bit panel, even if it's 8-bit + FRC (the artifacts of which I feel should be diminished with a higher refresh rate).

To the extent that I care about HDR, it might be just to dabble with it from a programming perspective. And for that, as long as I can see a discernible difference on the monitor, I don't really care if it's subtle. I still don't know a whole lot about HDR, however (i.e. getting into the nitty gritty of how to actually use it).

It seems like HDR has been coming for more than a decade - I can remember back when the PS3 rolled in firmware updates that enabled deep color (up to 16-bit per channel!) and it seemed like that + xvYCC was gonna be the next big thing. And when AMD and Nvidia's pro cards started adding support for 10-bit and 12-bit. At my office, we even have monitors from 7-8 years ago that are 8-bit + 2-bit FRC - so old, I'm pretty sure they still have fluorescent backlights!

SirGalahad:

cryoburner said: I would rather have a "G-Sync Compatible" (FreeSync) display than one with "True G-Sync". With FreeSync over DisplayPort, you get a screen that should support adaptive sync with cards from all the major vendors, including Nvidia, AMD and likely Intel once they enter the graphics card market, along with some titles for the Xbox One for screens that support the feature over HDMI, and I would bet that the upcoming generation of consoles from Microsoft and Sony will both offer wider support for it. With "True G-Sync" you get what amounts to the same thing, only locked to Nvidia graphics cards and nothing else, and there's no telling who will be providing the best options for graphics hardware a few years down the line.

I much prefer true G-Sync, as it’s far superior to FreeSync and FreeSync 2. There is a lot of misinformation out there, with people thinking that FreeSync and G-Sync are the same thing, mostly because Nvidia has done a terrible job of explaining what its technology does and why it’s better. G-Sync Compatible and FreeSync are mostly the same, but actual G-Sync is different.

G-Sync works from 1 Hz to the max refresh rate of the monitor (say 144 Hz or 240 Hz), whereas FreeSync works within a window, such as 60-144 Hz. If you go over or under that window, it introduces tearing, stutter, and latency.

The majority of FreeSync monitors are below 75 Hz, with the next step being 76-144 Hz and only a small share above 144 Hz, whereas G-Sync monitors are almost all 144-240 Hz.

G-Sync goes through a rigorous certification process that FreeSync doesn’t. Some FreeSync 2 monitors go through more of a process, but still not to the same standard as G-Sync.

a. This includes 300-plus image tests (there are a ton of FreeSync monitors that suffer from image artifacts, flickering and the like; they also struggle a ton with ghosting).

b. variable overdrive (1hz - max refresh rate) not available on freesync.

c. factory color calibrated (you’d be shocked how bad color is for the majority of monitors and what you are missing due to that).

d. Handles frametime variance, frame time spikes, and ghosting a lot better for way more consistent and smoother results.

e. Has increased scanout speed (for example, there is an NES game that runs at 60.1 Hz, which G-Sync can handle). It also decreases input lag from 16.9 ms to 6.9 ms.

f. There is no latency increase when setup correctly.

Yes, they both provide a tear-free and stutter-free experience. But that should come with an asterisk, as FreeSync isn't as consistent or smooth as G-Sync, even under ideal conditions. You can take a G-Sync monitor in the poorest of conditions and it will perform better than FreeSync in the best of conditions, since G-Sync has so many more things happening behind the scenes with its chip talking to the graphics card.

cryoburner:

SirGalahad said: There is a lot of misinformation out there...

Misinformation, indeed. :D

SirGalahad said: Gsync works from 1 hz to the max refresh rate of the monitor (say 144 hz or 240 hz) whereas freesync is within a window. such as 60 - 144hz. If you go over or under that it introduces tearing, stutter, and latency.

That's not exactly accurate. Both AMD's FreeSync and Nvidia's "G-Sync Compatible" implementation support Low Framerate Compensation (LFC), so long as the screen's maximum refresh frequency is at least 2.4x its minimum, which applies to nearly all 144+Hz FreeSync displays. In the event that the framerate drops below the minimum refresh rate window supported by the hardware, the refresh rate is simply doubled to stay within the supported range, effectively removing that lower limit.
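The frame-repetition behavior behind LFC can be sketched as a simplified model. This is a hypothetical illustration, not AMD's or Nvidia's actual driver logic. The function names are made up, and the 2.4x window ratio is the figure from the comment. Choosing the smallest whole-number frame multiplier that lands the refresh rate back inside the window is an assumption.

```python
import math
from typing import Tuple

# Window ratio quoted in the comment; real driver thresholds may differ.
LFC_MIN_RATIO = 2.4


def supports_lfc(min_hz: float, max_hz: float, ratio: float = LFC_MIN_RATIO) -> bool:
    """True if the variable refresh window is wide enough for frame repetition."""
    return max_hz / min_hz >= ratio


def lfc_refresh(fps: float, min_hz: float, max_hz: float) -> Tuple[int, float]:
    """Return (times each frame is shown, resulting panel refresh rate in Hz).

    Hypothetical model: inside the window the panel follows the frame rate;
    below it, each frame is repeated the smallest whole number of times that
    brings the refresh rate back to at least min_hz.
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    if fps >= max_hz:
        # Above the window the panel simply runs at its maximum refresh rate.
        return 1, float(max_hz)
    if fps >= min_hz:
        # Inside the window: one refresh per rendered frame.
        return 1, float(fps)
    if not supports_lfc(min_hz, max_hz):
        # Window too narrow: variable refresh cannot cover this frame rate.
        raise ValueError("frame rate is below the VRR window and LFC is unavailable")
    multiplier = math.ceil(min_hz / fps)
    # With a window ratio of at least 2, fps * multiplier stays below max_hz.
    return multiplier, float(fps * multiplier)


print(lfc_refresh(30, 60, 144))   # (2, 60.0)
print(supports_lfc(48, 75))       # False
```

Under this model, a 60-144 Hz window (exactly 2.4x) shows a 30 fps game at 60 Hz, with each frame displayed twice. A 48-75 Hz window is too narrow for LFC.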

SirGalahad said: The majority of freesync monitors are less than 75 hz. With the next step being 76 - 144 with only a small margin being over 144hz. Whereas gsync is almost all 144 - 240 hz.

This is not meaningful or particularly accurate either. Sure, there are plenty of FreeSync displays with standard refresh rates available, but those are in addition to a wide selection of high refresh rate displays. There are simply far more FreeSync options available, ranging all the way from budget $100 75Hz screens up to $1000+ premium high refresh rate screens. In fact, even just looking at high refresh rate displays, there are significantly more available with FreeSync than with G-Sync. Let's see what's currently available for FreeSync and G-Sync screens in Newegg's Gaming Monitor section (limited to new hardware, in stock and sold by Newegg)...

(True) G-Sync:

(3) 200-240Hz models

(8) 144-165Hz models

(4) 100-120Hz models

(1) 60 Hz model

FreeSync:

(10) 200-240Hz models

(44) 144-165Hz models

(5) 100-120Hz models

(20) 60-75Hz models

So sure, there's a bunch of standard refresh rate screens that support FreeSync (actually Newegg has more than what's shown here, since a number of them are not listed in their Gaming Monitor section), but those are in addition to a wider selection of high refresh rate displays. In fact, Newegg stocks around four times as many 100-240Hz FreeSync displays as G-Sync ones, before we even get into the lower refresh rate options. And while those low refresh rate options might not provide an ideal adaptive sync range, that's still better than the range offered by true G-Sync hardware anywhere near their price points, which is nothing at all. G-Sync displays tend to be priced well outside what the vast majority of people are willing to pay for a monitor, so they are not particularly relevant to most people.
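As a quick check of the "around four times" figure, the Newegg counts listed above can be totaled. The dictionary names are illustrative. The numbers are the ones from the comment, and only the 100-240Hz buckets are summed.

```python
# Newegg "Gaming Monitor" counts as listed in the comment
# (new, in stock, sold by Newegg, at the time of posting).
gsync = {"200-240Hz": 3, "144-165Hz": 8, "100-120Hz": 4, "60Hz": 1}
freesync = {"200-240Hz": 10, "144-165Hz": 44, "100-120Hz": 5, "60-75Hz": 20}

# Only the high refresh rate buckets enter the "four times" comparison.
HIGH_REFRESH = ("200-240Hz", "144-165Hz", "100-120Hz")


def high_refresh_total(counts: dict) -> int:
    """Sum the model counts in the 100-240Hz buckets."""
    return sum(counts.get(bucket, 0) for bucket in HIGH_REFRESH)


g = high_refresh_total(gsync)     # 3 + 8 + 4 = 15
f = high_refresh_total(freesync)  # 10 + 44 + 5 = 59
ratio = f / g
print(f"{f} FreeSync vs {g} G-Sync models at 100-240Hz: {ratio:.2f}x")
# prints "59 FreeSync vs 15 G-Sync models at 100-240Hz: 3.93x"
```

That works out to 59 versus 15 models, or roughly 3.9 times as many FreeSync displays in the 100-240Hz range.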

SirGalahad said: Gsync goes through a rigorous certification Process that freesync doesn’t. Although some freesync 2 monitors go through more of a process but still not to the same standard as gsync.

Again, if we're talking "G-Sync Compatible" displays, Nvidia certifies those to meet their standards for adaptive sync and refresh rate capabilities as well. And their standards appear to primarily amount to just keeping pixel response times within a certain threshold, not having any obvious flaws, and likely paying them a decent amount for the certification process that probably takes one guy an afternoon's worth of testing. It's not a bad thing that they have a certification process, but G-Sync certification alone doesn't necessarily mean that a screen has good all-around image quality or design, just that it meets certain arbitrary standards set by Nvidia, so one should still check out detailed reviews to determine how good a monitor is.

SirGalahad said: You can take a gsync monitor in the poorest of conditions and it will perform better than freesync in the best of conditions.

This is not true. Many manufacturers produce both FreeSync and G-Sync versions of a display that use the same panels and are nearly identical outside of pricing. There are undoubtedly a number of screens that don't meet Nvidia's specifications for G-Sync at the lower end, but again, those are not priced anywhere remotely close to what a comparable G-Sync screen costs. At a similar price, a FreeSync screen should typically be very similar to its G-Sync counterpart, and one might even be able to use the money saved to move up to a "better" screen.

SirGalahad:

cryoburner said: Misinformation, indeed. :D

Indeed indeed. Unfortunately, we are both guilty. I apologize; my last post was not written well.

I have nothing against FreeSync. I'm happy FreeSync is a thing, and I'm happy they are making improvements. But people who say it's the same thing as G-Sync are wrong. That's like saying a Ford is the same as an Audi. Yes, they are both cars, yes, they both have similar features, and yes, they both get you from point A to point B. However, there is a difference in quality that separates them. There is a reason why you pay more for G-Sync than FreeSync. I also think a lot of people should buy FreeSync or FreeSync 2, depending on their budget. But if you want the best experience, G-Sync is the one to buy.

With that said, FreeSync and G-Sync Compatible are more or less the same thing. It could be argued that FreeSync is superior to G-Sync Compatible, but it's not superior to actual G-Sync. I would like more options available for actual G-Sync, as many of the models currently out have been out for a long time. I would also like this because the market is oversaturated with more and more FreeSync monitors that vary greatly in quality.

cryoburner said: That's not exactly accurate. Both AMD's FreeSync and Nvidia's "G-Sync Compatible" implementation support Low Framerate Compensation (LFC), so long as the screen's maximum refresh frequency is at least 2.4x above its minimum, which applies to nearly all 144+Hz FreeSync displays. In the event that the framerate drops below the minimum refresh rate window supported by the hardware, the refresh rate is simply doubled to stay within the supported range, effectively removing that lower limit.

Not all FreeSync monitors support LFC (unless that has changed), whereas all G-Sync monitors do. Even when a monitor does support Low Framerate Compensation, it doesn't work across the entire range like G-Sync, and it doesn't work as well. It may double the refresh rate to stay within the supported range and prevent tearing, but it is not as smooth once you're outside that range. With G-Sync it doesn't matter; it's always smooth and consistent.

For example, if your FreeSync monitor's range is 60-144 Hz and you're at 30 fps, you won't get tearing, but it won't be as smooth as if you were between 60 and 144 Hz. Additionally, even between 60 and 144 Hz it wouldn't be as smooth as G-Sync. This has been tested in studies, and people preferred G-Sync over FreeSync.

In addition, games such as Escape From Tarkov, which are horribly optimized and vary in frame rate tremendously (say 1-144 fps), will not feel nearly as good on a FreeSync display as on a G-Sync display. This is when you will really be taxing the modules in both, and since G-Sync's is more robust, with a better LFC implementation (among other things), the experience will be much better on it. It's what G-Sync was made for, and it shines in that regard. However, either option is better than no FreeSync or G-Sync.

Plus, sometimes the frequency range that FreeSync gives is simply ridiculous, such as with the Asus MG279Q, where it only works between 35 and 90 Hz even though it is a 144 Hz panel. So with any FreeSync monitor, you have to look at what the range is, which manufacturers don't make readily available. That makes me concerned that the average user may not notice before buying the monitor and end up with an inferior product. With G-Sync you don't have to worry: if it's a 144 Hz monitor, you're good to go across that entire range.

cryoburner said: There are simply far more FreeSync options available, ranging all the way from budget $100 75Hz screens up to $1000+ premium high refresh rate screens. In fact, even just looking at high refresh rate displays, there are significantly more available with FreeSync than with G-Sync.

(True) G-Sync:

(3) 200-240Hz models

(8) 144-165Hz models

(4) 100-120Hz models

(1) 60 Hz model

FreeSync:

(10) 200-240Hz models

(44) 144-165Hz models

(5) 100-120Hz models

(20) 60-75Hz models

That's correct. There are a lot more FreeSync monitors than G-Sync monitors, for two reasons: 1. FreeSync is free and has no regulations (though FreeSync 2 does). 2. Nearly all of those monitors wouldn't pass G-Sync's rigorous testing. More doesn't necessarily mean better.

cryoburner said: G-Sync displays tend to be priced well outside what the vast majority of people are willing to pay for a monitor, so they are not particularly relevant to most people.

I agree. G-Sync monitors are very expensive. However, they have recently gotten more affordable. I expect this trend to continue due to competition from FreeSync/FreeSync 2, since they can't go crazy with their pricing like they did when they were unchecked and the only game in town. I think that's fantastic, and it's why I love competition.

But with that said, most of the time with monitors you get what you pay for: the more expensive the monitor, the better it will be. Obviously there are diminishing returns here, but an expensive monitor should blow an inexpensive monitor out of the water.

There are two reasons why a lot of G-Sync monitors are more expensive: 1. the G-Sync module itself, but more importantly 2. they are pricier monitors with more features before the G-Sync module is even added. This is likely because a cheaper monitor wouldn't pass Nvidia's testing. In other words, if you took the modules out and compared the average G-Sync monitor to the average FreeSync monitor, I would imagine the G-Sync monitors would still be more expensive, as they use higher-quality parts. That isn't to say FreeSync monitors are bad or cheap, but there are a lot that are, which brings the average down.

cryoburner said: Again, if we're talking "G-Sync Compatible" displays, Nvidia certifies those to meet their standards for adaptive sync and refresh rate capabilities as well. And their standards appear to primarily amount to just keeping pixel response times within a certain threshold, not having any obvious flaws, and likely paying them a decent amount for the certification process that probably takes one guy an afternoon's worth of testing. It's not a bad thing that they have a certification process, but G-Sync certification alone doesn't necessarily mean that a screen has good all-around image quality or design, just that it meets certain arbitrary standards set by Nvidia, so one should still check out detailed reviews to determine how good a monitor is.

FreeSync and G-Sync Compatible are practically the same thing; I totally agree. They go through a lot less testing than actual G-Sync. The weaknesses I have mentioned for FreeSync apply to G-Sync Compatible as well. I 100% agree that one should always check out detailed reviews before buying a monitor of any kind.

As for actual G-Sync, the testing is rigorous. It takes a long time, and Nvidia is part of the process from the start to the end of development. Does that mean every G-Sync monitor is perfect? No. There are some bad G-Sync displays (in terms of contrast and color). But you have a better chance of finding a solid G-Sync monitor than a FreeSync monitor, though you will be paying more. If you're interested in the testing that Nvidia does, check out this video by Linus Tech Tips (https://www.youtube.com/watch?v=Um0PFoB-Ls4).

cryoburner said: This is not true. Many manufacturers produce both FreeSync and G-Sync versions of a display that use the same panels and are nearly identical outside of pricing.

When I said that a G-Sync monitor in the worst of conditions will perform better than FreeSync in the best of conditions, I may have been hyperbolic. However, blind testing has shown that people will pick a G-Sync monitor over a FreeSync monitor because it feels smoother and more consistent. Testing has also shown that G-Sync monitors are more consistent and smoother than FreeSync monitors. I'm not talking about contrast or color here, just how the actual variable refresh rate tech works. Sometimes the contrast and color in a G-Sync display are disappointing, which is why it is important to look at reviews before buying any monitor.

You are correct, though, that there are FreeSync and G-Sync monitors that use the same display, but they can still differ widely from each other. That's just the life of monitor technology: no two monitors are the same. As for them being nearly identical outside of pricing, that isn't correct. The screen may be the same, but the way it is utilized and put together with the rest of the monitor's parts is usually different. Plus, the Nvidia G-Sync module uses an FPGA with 768 MB of DDR3 memory, which means all the processing is done on the module itself, giving you better performance since your graphics card isn't doing any of the work. FreeSync doesn't do this.

Last, all actual G-Sync monitors avoid blanking, pulsing, flickering, ghosting, or other artifacts during variable refresh rate (vrr) gaming. This isn’t true with all Freesync displays or G-Sync compatible displays.

cryoburner:

SirGalahad said: This isn’t true with all Freesync displays or G-Sync compatible displays.

I'm not going to respond to all points, but it's not really accurate to say that some "G-Sync Compatible" monitors may suffer from "blanking, pulsing, flickering, ghosting, or other artifacts". At least according to Nvidia's own marketing materials, screens certified as "G-Sync Compatible" are specifically tested to not have any "flicker, blanking or artifacts". In any case, even among FreeSync displays that are not certified as being G-Sync Compatible, things like flickering or blanking issues are extremely uncommon, and were primarily just seen on a few early models that poorly implemented the feature back when FreeSync first came out several years ago. So, it's pretty much a non-issue, despite what Nvidia's marketing department would want people to believe.

Now, ghosting is possibly worth mentioning, since it's technically something that all current monitors experience to some degree, and the question comes down to what extent. With modern TN panels, it's possible for ghosting to be practically nonexistent due to their fast response times, but TN panels tend to experience other issues like limited viewing angles with less accurate colors and mediocre contrast. IPS panels experience a bit more ghosting, but tend to offer superior color accuracy and viewing angles. And then there are VA panels, which tend to experience similar or slightly more ghosting than IPS, but typically offer deeper blacks with around 2 to 3 times the contrast of IPS or TN, with color accuracy and viewing angles generally in-between the two. Pixel response times are only one part of image quality, so even if a screen doesn't quite meet Nvidia's arbitrary standards for how much ghosting is allowed in a G-Sync display, it could still be close, while being superior in other areas, and in general few modern gaming monitors should show "major" issues in terms of ghosting. And of course, there's nothing keeping manufacturers from using the same design for both the G-Sync and FreeSync versions of a display, outside of the scaling hardware, which is often what happens.

SirGalahad said: Plus sometimes the frequency range that FreeSync gives is simply ridiculous. Such as with the Asus MG279Q where it only works between 35 - 90 hz. Even though it is a 144hz panel. So, with any Freesync Montior you have to look at what the range is. Which they don't make readily available. Which makes me concerned that the average user may not even notice before buying the monitor and then end up with an inferior product.

Again, we're talking about an early FreeSync display from 2015, more than four years ago, and odd ranges like that are extremely rare. And that limited range is clearly mentioned near the top of its product description on both Amazon and Newegg, as well as in the monitor's specifications on its official product page, so I would hardly say the information isn't readily available. With the vast majority of 144+Hz displays, the range is wide enough to where LFC can take over when frame rates get low, making the exact lower range not particularly important, and the upper range almost always matches the screen's maximum refresh rate.

As for why Asus went with that odd variable refresh range for that display, AMD didn't add LFC to FreeSync until the following year, so at the time when the screen came out, a 35-90fps range likely seemed more practical for a 1440p display than something like 60-144 at that resolution. It's worth noting that the screen does now support LFC at frame rates below 35fps, though realistically games will tend to look choppy at frame rates that low, whether G-Sync or FreeSync is being used.

SirGalahad said: But if you're interested in the testing that Nvidia does check out this video by Linus Tech Tips

It's an interesting video, though monitor companies are likely to perform a good amount of their own testing and in many cases full calibration for premium displays. And again, really, someone buying a monitor in the price range of those with G-Sync hardware probably should check professional reviews either way.

SirGalahad said: However, blind testing has shown that people will pick a G-Sync monitor over a FreeSync monitor because it feels smoother and more consistent.

Please link to these supposed results. For it to be an accurate test, it would need to use a G-Sync and a FreeSync version of the same display, and also be performed on the same graphics card, since some games may perform differently on different hardware. So, that arguably voids any such test done before January of this year, when Nvidia finally added DisplayPort Adaptive Sync support to their cards. And ideally you would want to perform the test on more than one pair of monitors. Unfortunately, I haven't seen any test that properly does this so far. Tests from prior years had to use different graphics cards, with different performance characteristics, and often they have used totally different monitors, or in some cases didn't even share identical settings between the systems. In general though, the typical outcome tends to be that the test subjects struggle to notice any significant difference as far as the adaptive sync implementation is concerned.