
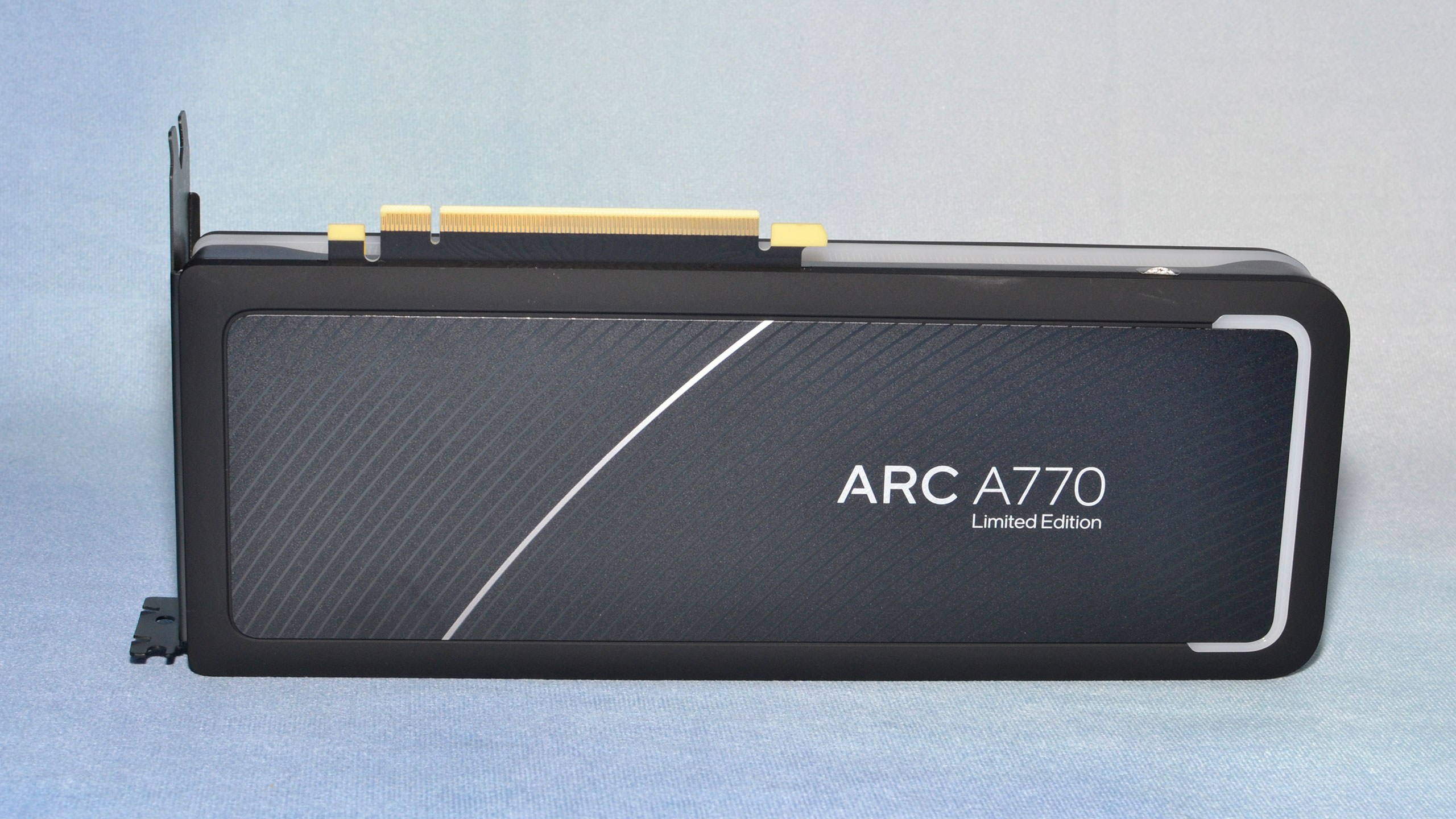

Intel might be new to the graphics card market. Still, it's had motherboards, NUCs, and numerous other products over the years and obviously has plenty of expertise when it comes to manufacturing. That's definitely apparent when you look at the Arc A770 Limited Edition and consider this is the first consumer graphics card Intel has shipped in over two decades.

Intel will sell cards under its own "Limited Edition" brand. However, think of "Limited Edition" like an "LE" trim level on a car rather than a limited production run: as long as Intel continues to sell Arc GPUs, it intends to sell Limited Edition cards. The A770 Limited Edition is the current halo product of the Arc family, with the fully enabled ACM-G10 die and 16GB of 17.5 Gbps GDDR6 memory. Partner cards are also coming, and those may use either 16GB like Intel's card or 8GB, presumably at a lower price. Frankly, since Intel recommends only a $20 price difference between the 8GB and 16GB A770 cards, we see little reason to bother with an 8GB model.

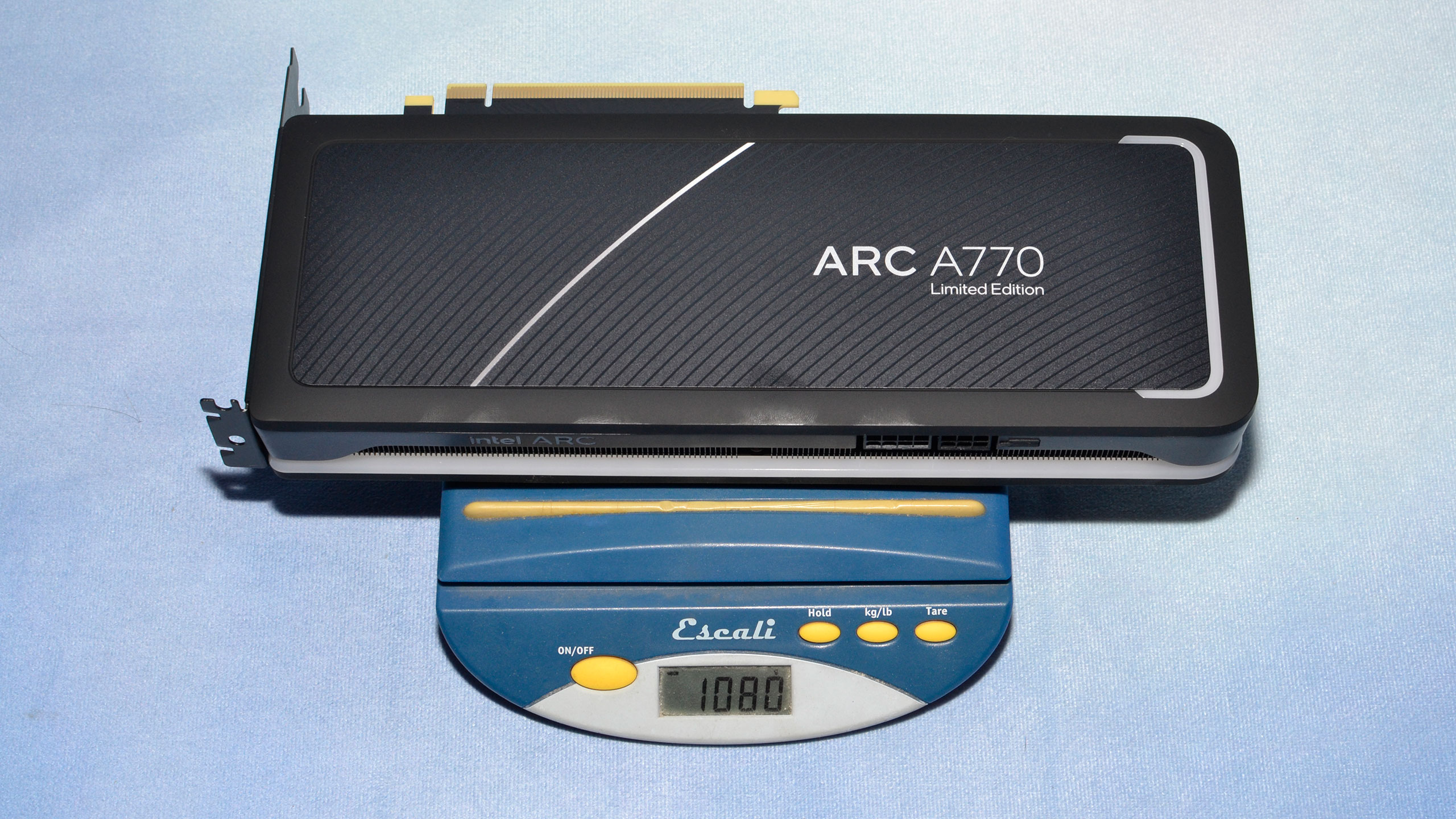

The A770 LE has two custom 85mm fans with 15 blades each. The card weighs 1080g and measures 268x110x38mm, a traditional 2-slot design. Compared to some of the other monster cards we've seen in the past generation, the A770 LE is relatively compact, though the competing RTX 3060 and RX 6650 XT are similarly sized and use less power. We'll get to power and noise measurements later, but the fans and cooler do appear to do a good job.

The A770 LE comes with a decent amount of RGB lighting, including a USB cable that connects to the motherboard so you can control the lighting effects. In addition, there are glowing rings around the fans, a lighting strip that extends around the outside edge of the card, and an additional lighting strip on the side opposite the fans. The default color scheme is blue, with lit purple/pink segments that move around the card.

Intel's card comes with 8-pin and 6-pin PEG power connectors. Note that on the review samples, these are colored gray and black (or at least darker gray), but the retail products will have two black connectors. The card runs with a 225W TBP (Total Board Power) at stock, with a decent amount of headroom for overclocking should you want to boost the power limit.
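The "decent amount of headroom" follows from the connector ratings. Here's a minimal sketch of that arithmetic, assuming the nominal PCIe CEM limits of 75W from the x16 slot, 75W per 6-pin, and 150W per 8-pin connector. The helper names are hypothetical, and the card's actual power-limit range is set by its firmware and Intel's software, not by this calculation.

```python
# Nominal PCIe CEM power ratings in watts (spec limits, not measured draw).
SLOT_W = 75        # PCIe x16 slot
SIX_PIN_W = 75     # per 6-pin PEG connector
EIGHT_PIN_W = 150  # per 8-pin PEG connector


def board_power_budget(six_pin: int = 0, eight_pin: int = 0, slot: bool = True) -> int:
    """Maximum in-spec board power for a given set of power inputs."""
    return (SLOT_W if slot else 0) + six_pin * SIX_PIN_W + eight_pin * EIGHT_PIN_W


def headroom(tbp_w: int, budget_w: int) -> tuple[int, float]:
    """Extra watts above the stock TBP, and that margin as a percentage of TBP."""
    extra = budget_w - tbp_w
    return extra, 100.0 * extra / tbp_w


# Arc A770 Limited Edition: one 8-pin plus one 6-pin, 225W TBP at stock.
budget = board_power_budget(six_pin=1, eight_pin=1)
extra_w, extra_pct = headroom(225, budget)
print(f"In-spec budget: {budget}W; headroom over 225W TBP: {extra_w}W ({extra_pct:.0f}%)")
```

With one 8-pin and one 6-pin connector, that gives a 300W in-spec ceiling, or 75W (about 33%) above the stock 225W TBP.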

Internally, the heatsink uses a large vapor chamber to cool the GPU and memory, which is a higher-end cooling solution and not something we normally see on midrange and lower cards. Even though the card isn't particularly large and has a higher power draw than competing GPUs, the overall results during testing have been good.

Video outputs consist of three DisplayPort connectors and a single HDMI port, the layout that's become almost universal among modern GPUs. Note that this is the first GPU with DisplayPort 2.0 support, sort of, which should allow for higher refresh rates and color depths over a single cable. Meanwhile, the HDMI port is 2.0b, though a DisplayPort to HDMI 2.1 adapter may be provided with some cards.

Intel Arc GPUs can support up to DisplayPort 2.0 UHBR 10 data rates, but not UHBR 13.5 or UHBR 20.

It's important to note that Intel classifies the DisplayPort outputs as "1.4a, DP 2.0 10G Ready," likely due to the lack of DP 2.0 displays. Intel Arc can support up to UHBR 10 data rates, but not UHBR 13.5 or UHBR 20. That makes DP 2.0 10G a modest improvement of roughly 23% in raw bandwidth over DP1.4a (40 Gb/s versus 32.4 Gb/s across four lanes). However, UHBR 10 and above switch from 8b/10b encoding to the more efficient 128b/132b encoding, which raises the maximum data rate from DP1.4a's 25.92 Gb/s to about 38.8 Gb/s, an increase of nearly 50% in usable bandwidth.
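These bandwidth figures come from lane count, per-lane rate, and line-coding efficiency; DP 2.0's UHBR rates use 128b/132b coding, while HBR3 uses 8b/10b. A minimal sketch of the arithmetic, assuming four lanes in both cases and counting only line-coding overhead (FEC and other protocol overhead are ignored, so real payloads are slightly lower). `payload_gbps` is an illustrative helper, not part of any DisplayPort tooling.

```python
def payload_gbps(lane_gbps: float, lanes: int, data_bits: int, coded_bits: int) -> float:
    """Usable payload after line coding: raw rate scaled by data bits / coded bits."""
    return lane_gbps * lanes * data_bits / coded_bits


# DP 1.4a HBR3: 4 lanes at 8.1 Gb/s with 8b/10b coding (80% efficient).
hbr3_raw = 8.1 * 4
hbr3_payload = payload_gbps(8.1, 4, 8, 10)

# DP 2.0 UHBR 10: 4 lanes at 10 Gb/s with 128b/132b coding (~97% efficient).
uhbr10_raw = 10.0 * 4
uhbr10_payload = payload_gbps(10.0, 4, 128, 132)

# Line-coding overhead only; FEC and other protocol overhead are ignored.
raw_gain = 100 * (uhbr10_raw / hbr3_raw - 1)
payload_gain = 100 * (uhbr10_payload / hbr3_payload - 1)
print(f"Raw:     {hbr3_raw:.2f} -> {uhbr10_raw:.2f} Gb/s (+{raw_gain:.1f}%)")
print(f"Payload: {hbr3_payload:.2f} -> {uhbr10_payload:.2f} Gb/s (+{payload_gain:.1f}%)")
```

The raw link rate grows by about 23%, but because the coding efficiency also rises from 80% to roughly 97%, the usable payload grows by just under 50%.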

The important thing (for some) is that Arc can drive displays up to 8K at 60 Hz using DSC (Display Stream Compression), with 4:2:0 chroma subsampling (for sure) or possibly 4:2:2. The display engines can handle up to two 8K 60 Hz displays, or four 4K 120 Hz displays. 1440p at up to 360 Hz is also supported.
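To see why 8K 60 Hz needs DSC, here's a rough sketch comparing each display mode's uncompressed data rate with the DP1.4a and UHBR 10 link payloads. It assumes 8-bit RGB (24 bits per pixel), ignores blanking intervals and FEC overhead (both of which make real requirements somewhat tighter), and uses a hypothetical 12 bpp DSC target; the constant and function names are illustrative only.

```python
# Link payloads after line coding only; FEC and protocol overhead are ignored.
DP14_HBR3_GBPS = 8.1 * 4 * 8 / 10        # 4 lanes x 8.1 Gb/s, 8b/10b  -> 25.92
DP20_UHBR10_GBPS = 10.0 * 4 * 128 / 132  # 4 lanes x 10 Gb/s, 128b/132b -> ~38.79

RGB_8BIT_BPP = 24  # uncompressed 8-bit-per-channel RGB
DSC_BPP = 12       # hypothetical DSC target; real targets depend on the display


def active_pixel_gbps(width: int, height: int, hz: int, bits_per_pixel: float) -> float:
    """Data rate of the visible pixels only; blanking intervals are ignored."""
    return width * height * hz * bits_per_pixel / 1e9


def fits(mode_gbps: float, link_gbps: float) -> bool:
    """True if the mode's data rate is within the link payload."""
    return mode_gbps <= link_gbps


modes = {
    "8K 60 Hz": (7680, 4320, 60),
    "4K 120 Hz": (3840, 2160, 120),
    "1440p 360 Hz": (2560, 1440, 360),
}

for name, (w, h, hz) in modes.items():
    raw = active_pixel_gbps(w, h, hz, RGB_8BIT_BPP)
    dsc = active_pixel_gbps(w, h, hz, DSC_BPP)
    print(
        f"{name}: {raw:.1f} Gb/s uncompressed "
        f"(fits HBR3: {fits(raw, DP14_HBR3_GBPS)}, fits UHBR 10: {fits(raw, DP20_UHBR10_GBPS)}); "
        f"{dsc:.1f} Gb/s with DSC at {DSC_BPP} bpp"
    )
```

Under these simplifications, 8K 60 Hz needs about 47.8 Gb/s uncompressed, more than even UHBR 10 carries, which is why DSC is required; at a 12 bpp DSC target it drops to about 23.9 Gb/s. Real modes also carry blanking, so the true margins are tighter than shown.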

Overall, the Arc A770 Limited Edition is an attractive design that should please anyone who's disgruntled with the overly gaudy and bulky graphics cards that have become so common. And if you don't like Intel's particular design, Arc A700-series cards from ASRock and Gunnir are apparently on the way, and Acer's first graphics card ever will be an Arc A770 with a unique radial blower plus axial fan design.


Current page: Meet the Intel Arc A770 Limited Edition Card


Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.


ingtar33: When Intel can deliver drivers that don't crash simply opening a game, I might be interested; however, until the software side is figured out (something Intel hasn't done yet in 20+ years of graphics drivers), I simply can't take this seriously.

edzieba: If you're getting a headache with all the nebulously-pronounceable Xe-ness (Xe-cores, Xe Matrix Engines, Xe kitchen sink...), imagine it is pronounced "Ze" in a thick Hollywood-German accent. Much more enjoyable.

I'd like to see benchmarks capped at 60 fps. Not everyone uses a high-refresh-rate monitor, and today, when electricity is expensive and will most likely get even more expensive in the near future, I'd like to see how much power a GPU draws when it isn't trying to run the game as fast as possible.


AndrewJacksonZA: FINALLY, Intel is back! Or at least, halfway back. It's good seeing them compete in the midrange, and I hope that they flourish into the future.

And I really want an A770; my i740 is feeling lonely in my collection. ;-)

AndrewJacksonZA:

edzieba said: "If you're getting a headache with all the nebulously-pronounceable Xe-ness (Xe-cores, Xe Matrix Engines, Xe kitchen sink...), imagine it is pronounced "Ze" in a thick Hollywood-German accent. Much more enjoyable."

The "EKS-E" makes it sound cool!

-Fran-: Limited Edition? More like DoA Edition...

Still, I'll get one. We need a strong 3rd player in the market.

I hope AV1 enc/dec works! x'D!

EDIT: A few things I forgot to mention... I love the design of it. It's a really nice-looking card, and I definitely appreciate the 2-slot width and not obnoxiously tall height as well. And I hope they can work as secondary cards in a system without many driver issues... I hope... I doubt many have tested these as secondary cards.

Regards.

LolaGT: The hardware is impressive. It looks the part; in fact, it looks elegant powered up.

It does look like they are trying to push out fixes; unfortunately, when you are swamped with working on fixes, optimization takes a back seat. The fact that they have pushed out quite a few driver updates shows they are spending resources on that, and if they keep at it... we'll see.

AndrewJacksonZA:

-Fran- said: "I hope AV1 enc/dec works!"

Same. I have a BOATLOAD of media that I want to convert and rip to AV1, and my i7-6700 non-K feels sloooooowwww, lol.

rluker5: Looks like this card works best for those who want to max out their graphics settings at 60 fps. Definitely lagging the other two in driver CPU assistance.

And a bit of unfortunate timing, given the market discounts on AMD GPU prices. The 6600 XT, for example, launched at AMD's intended price of $379. The A770 likely had its price reduced to account for this, but the more competitors you have, generally the more competition you will have.

I wonder how many games the A770 will run at 4K60 with medium settings but high textures? That's what I generally play at, even with my 3080, since the loss in visual quality is worth it to reduce fan noise.