GeForce GTX Titan X Review: Can One GPU Handle 4K?

Introduction

Confidence is sexy, I’m told.

Nvidia showed serious confidence in GeForce GTX Titan X when the company shipped over one card and one monitor—an Acer XB280HK bprz—the first G-Sync-equipped 4K display. Does Nvidia expect its new single-GPU flagship to drive a 3840x2160 resolution with performance to spare? How cool would that be? My little Tiki, tucked out of sight, would plow through the most taxing games at Ultra HD. Oh lawdy. And here I thought I’d be stuck at 2560x1440 forever.

The 980 didn’t get us to a point where one graphics processor could handle the demands of 4K at enthusiast-class detail levels. But the GeForce GTX Titan X is based on GM200, composed of eight billion transistors. Eight. Billion. That’s almost three billion more than the GeForce GTX 980’s GM204 and one billion more than GK110, the original GeForce GTX Titan’s cornerstone.

In their final form, those transistors manifest as a 601mm² piece of silicon, which is about nine percent larger than GK110. The GM200 GPU is manufactured on the same 28nm high-k metal gate process, so this is simply a larger, more complex chip. And yet it’s rated for the same 250W maximum board power. With higher clock rates. And twice as much memory. Superficially, the math seems off. But it isn’t.

Inside Of GM200

In-Depth On The SMM

For more information about the efficiency-oriented improvements Nvidia made to its Streaming Multiprocessor, check out the first page of GeForce GTX 750 Ti Review: Maxwell Adds Performance Using Less Power. In short, the company says it sought to increase utilization of the SMM by reconfiguring its resources.

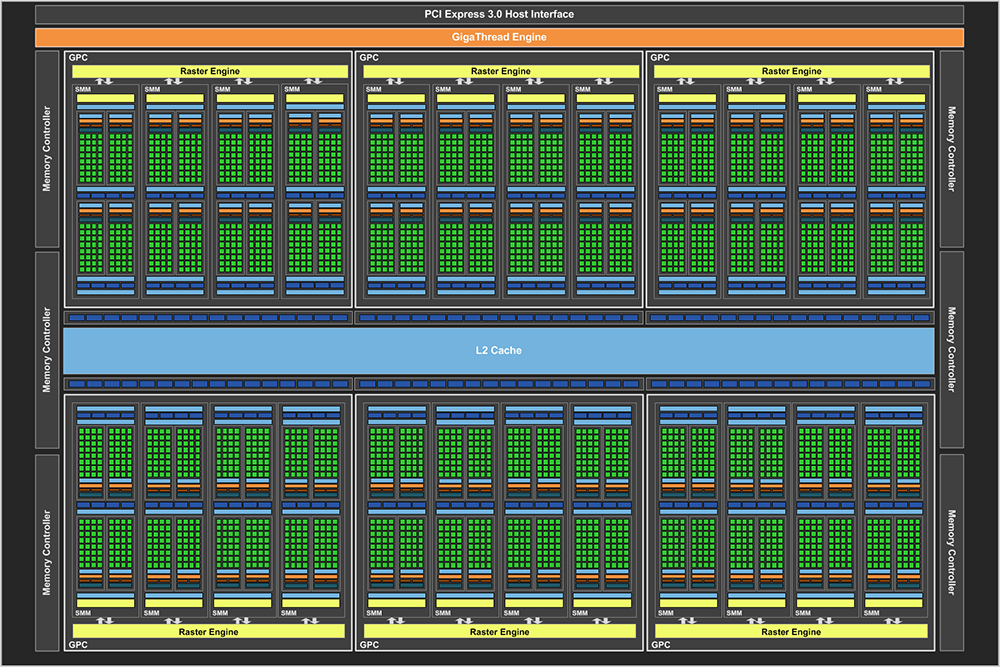

Like the GeForce GTX 980 and 970 we were introduced to last September, GM200 is based on Nvidia’s efficient Maxwell architecture. Instead of GM204’s four Graphics Processing Clusters, you get six. And with four Streaming Multiprocessors per GPC, that adds up to 24 SMMs across the GPU. Multiply out the 128 CUDA cores per SMM and you get GeForce GTX Titan X’s total of 3072. Eight texture units per SMM add up to 192—with a base core clock rate of 1000MHz, that’s 192 GTex/s (the original GeForce GTX Titan was rated at 188, despite its higher texture unit count).
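The shader and texture arithmetic above can be reproduced in a few lines. This is a minimal sketch that assumes each texture unit delivers one filtered texel per clock, which is how GTex/s ratings are usually derived. The original GeForce GTX Titan's 224 texture units and 837MHz base clock are our additions, not figures from this page, and the function names are hypothetical.

```python
# GM200 shader and texture arithmetic, using the configuration in the review.
# Assumption: one filtered texel per texture unit per clock.

GPCS = 6                   # Graphics Processing Clusters
SMMS_PER_GPC = 4           # Streaming Multiprocessors per GPC
CUDA_CORES_PER_SMM = 128
TEXTURE_UNITS_PER_SMM = 8
BASE_CLOCK_MHZ = 1000


def texture_fill_rate_gtex(texture_units, clock_mhz):
    """Peak texture fill rate in GTex/s (units * MHz / 1000)."""
    return texture_units * clock_mhz / 1000


def gm200_totals():
    """Return (SMMs, CUDA cores, texture units, GTex/s) at base clock."""
    smms = GPCS * SMMS_PER_GPC
    cores = smms * CUDA_CORES_PER_SMM
    texture_units = smms * TEXTURE_UNITS_PER_SMM
    return smms, cores, texture_units, texture_fill_rate_gtex(texture_units, BASE_CLOCK_MHZ)


if __name__ == "__main__":
    print(gm200_totals())                              # GM200 / Titan X
    print(round(texture_fill_rate_gtex(224, 837), 1))  # original Titan (assumed specs)
```

For GM200 the sketch yields 24 SMMs, 3072 CUDA cores, 192 texture units and 192 GTex/s. With the original Titan's assumed figures it gives about 187.5 GTex/s, in line with the 188 rating: the larger texture unit count is offset by a lower base clock.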


Like the SMMs found in GM204, each GM200 SMM exposes 96KB of shared memory and 48KB of texture/L1 cache, more than the GeForce GTX 750 Ti’s GM107 offered. The other architectural elements are similar, though; each SMM is broken up into four blocks, each with its own instruction buffer, warp scheduler and pair of dispatch units. In fact, so much is carried over that double-precision math is still specified at 1/32 the rate of FP32, even though GM200 is the Maxwell family’s big daddy. Incidentally, an upcoming Quadro card based on the same GPU shares this fate. If FP64 performance is truly important to you, Nvidia would likely suggest one of its Tesla boards.
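To put the 1/32 ratio in perspective, here is a rough theoretical-peak estimate. It is a sketch that assumes one fused multiply-add (counted as two floating-point operations) per CUDA core per clock at the 1000MHz base clock; boost clocks would raise both results proportionally. The function name is hypothetical.

```python
# Theoretical peak throughput for GeForce GTX Titan X at its base clock.
# Assumption (not stated in the review): one FMA per CUDA core per clock,
# counted as two floating-point operations.

CUDA_CORES = 3072
BASE_CLOCK_GHZ = 1.0
FLOPS_PER_CORE_PER_CLOCK = 2   # one FMA = multiply + add
FP64_RATIO = 1 / 32            # double precision at 1/32 the FP32 rate


def peak_gflops(cores=CUDA_CORES, clock_ghz=BASE_CLOCK_GHZ):
    """Return (FP32 GFLOPS, FP64 GFLOPS) theoretical peaks."""
    fp32 = cores * FLOPS_PER_CORE_PER_CLOCK * clock_ghz
    fp64 = fp32 * FP64_RATIO
    return fp32, fp64


if __name__ == "__main__":
    fp32, fp64 = peak_gflops()
    print(f"FP32: {fp32:.0f} GFLOPS, FP64: {fp64:.0f} GFLOPS")
```

At base clock this works out to about 6.1 TFLOPS of single-precision throughput, but only 192 GFLOPS of double precision.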

GeForce GTX 980’s four ROP partitions grow to six in GeForce GTX Titan X. With 16 units each, that’s up to 96 32-bit integer pixels per clock. The ROP partitions are aligned with 512KB slices of L2 cache, totaling 3MB in GM200. When it introduced GeForce GTX 750 Ti, Nvidia talked about a big L2 as a mechanism for preventing bottlenecks on a relatively narrow 128-bit memory interface. That’s less of a concern with GM200, given its 384-bit path populated by 7 Gb/s memory. Maximum throughput of 336.5 GB/s matches the GeForce GTX 780 Ti and exceeds GeForce GTX Titan, GeForce GTX 980 and Radeon R9 290X.
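The ROP, L2 and bandwidth figures follow from simple multiplication. This sketch uses the review’s numbers, with the per-pin data rate rounded to 7 Gb/s; the helper names are hypothetical.

```python
# GM200 back-end and memory arithmetic from the figures in the review.

ROP_PARTITIONS = 6
ROPS_PER_PARTITION = 16
L2_SLICE_KB = 512          # one L2 slice per ROP partition
BUS_WIDTH_BITS = 384
DATA_RATE_GBPS = 7.0       # per-pin GDDR5 data rate, rounded


def backend_summary():
    """Return (ROPs, L2 size in MB, peak memory bandwidth in GB/s)."""
    rops = ROP_PARTITIONS * ROPS_PER_PARTITION
    l2_mb = ROP_PARTITIONS * L2_SLICE_KB / 1024
    # bus width (bits) * per-pin rate (Gb/s) / 8 bits per byte = GB/s
    bandwidth_gbs = BUS_WIDTH_BITS * DATA_RATE_GBPS / 8
    return rops, l2_mb, bandwidth_gbs


def implied_data_rate_gbps(bandwidth_gbs, bus_width_bits=BUS_WIDTH_BITS):
    """Invert the bandwidth formula to recover the per-pin data rate."""
    return bandwidth_gbs * 8 / bus_width_bits
```

With a rounded 7 Gb/s the sketch gives 96 ROPs, 3MB of L2 and 336 GB/s. Inverting the formula on the review’s 336.5 GB/s gives roughly 7.01 Gb/s per pin, so treat "7 Gb/s" as a rounded figure.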

On-Board GeForce GTX Titan X

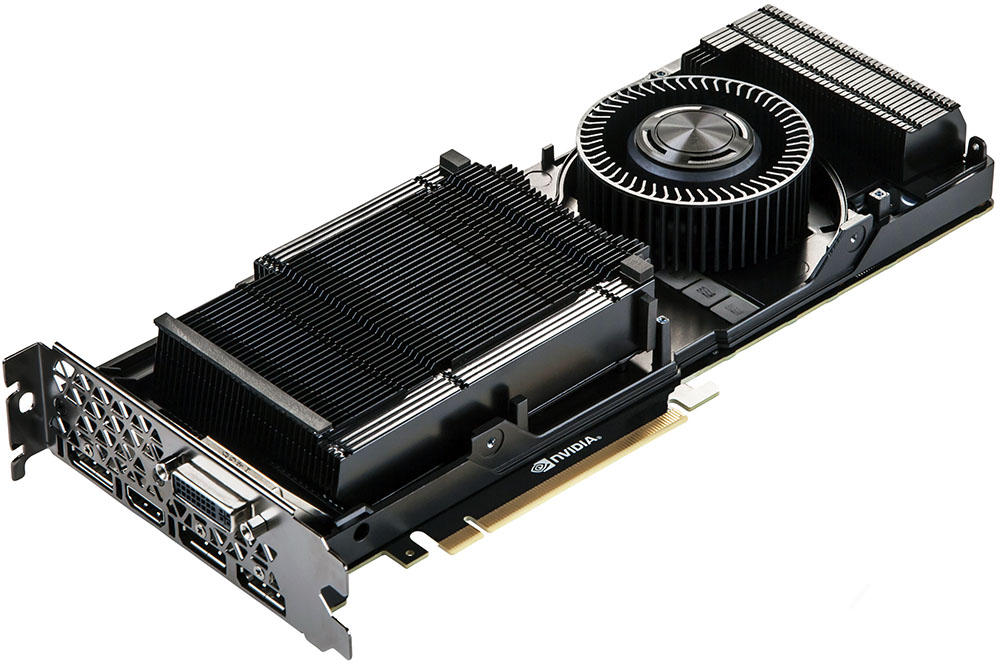

Nvidia drops GM200 on a 10.5”-long PCB that resembles the high-end boards we’ve been seeing for more than two years now. Model-specific differences are apparent when you look more closely, but we’re talking about the same dimensions here, which undoubtedly makes life easier for system builders who were nervous about integration.

The same number of single-die memory ICs surround the GPU. However, now we’re looking at 4Gb (512MB) packages of SK hynix’s fastest GDDR5, totaling 12GB. Even for 4K, that’s overkill. Still, Nvidia says it’s going for future-proofing, and if there’s a future where Ultra HD displays in Surround are driven by three or four Titan X boards in SLI, 6GB wouldn’t have been enough.
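The 12GB total follows directly from package density. In this sketch, the 4Gb (512MB) package size and 12GB total come from the review; the comparison with 2Gb (256MB) packages is our own assumption, included only to show how the same package count tops out at 6GB. The function name is hypothetical.

```python
# Memory package arithmetic for GeForce GTX Titan X.

GBIT_PER_PACKAGE = 4                            # 4Gb GDDR5 ICs, per the review
MB_PER_PACKAGE = GBIT_PER_PACKAGE * 1024 // 8   # 4Gb = 512MB
TOTAL_MB = 12 * 1024                            # 12GB


def package_count(total_mb=TOTAL_MB, mb_per_package=MB_PER_PACKAGE):
    """Number of memory packages needed for the given capacity."""
    count, remainder = divmod(total_mb, mb_per_package)
    if remainder:
        raise ValueError("capacity is not a whole number of packages")
    return count


if __name__ == "__main__":
    print(package_count())               # 12GB from 512MB packages
    print(package_count(6 * 1024, 256))  # 6GB from 256MB (2Gb) packages
```

Both calls return 24: the same count of packages yields 12GB at 4Gb density and 6GB at 2Gb density.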

A plate sits on top of the PCB, cooling a number of the surface-mounted components. There’s a copper vapor chamber mounted to that, topped by a two-slot-tall aluminum heat sink. Nvidia’s reference design remains faithful to the centrifugal fan, which pulls in air from your chassis, pushes it over the plate, through the heat sink and out the back. Although blower-style fans tend to create more noise than axial coolers, we’ve seen enough cards based on this same ID to know they’re acoustically friendly. GeForce GTX Titan X is no exception.

An aluminum housing covers the internals. It’s more textured than we’ve seen in the past, and Nvidia paints the enclosure black. Do you remember this picture from The Story of How GeForce GTX 690 And Titan Came To Be? It’s sort of like that, except without green lighting under the fins.

Also, the backplate present on GeForce GTX 980 is missing. Part of the 980’s plate could be removed to improve airflow in multi-card arrays, but Titan X is a more power-hungry card, so Nvidia got rid of the backplate entirely to give adjacent boards as much breathing room as possible. I don’t personally mind the omission, but Igor was fond of the more polished look.

GeForce GTX Titan X shares the 980’s display outputs, including one dual-link DVI port, one HDMI 2.0-capable connector and three full-sized DisplayPort interfaces. Between those five options, you can drive as many as four displays at a time. And if you’re using G-Sync-enabled displays, that trio of DisplayPort 1.2 outputs makes Surround a viable choice.

A Mind To The Future

Beyond the Titan X’s 12GB of GDDR5 memory, Nvidia calls out a number of the GPU’s features said to make it more future-proof.

During this year’s GDC, Microsoft mentioned that 50% of today’s graphics hardware supports DirectX 12, and that two-thirds of the market will be compatible by the holiday season. That means a lot of graphics cards are going to work with the API. However, DirectX 12’s features are grouped into two feature levels, 12.0 and 12.1. According to Microsoft’s Max McMullen, 12.0 exposes a lot of the API’s CPU-oriented performance advantages, while 12.1 adds Conservative Rasterization and Rasterizer Ordered Views (ROVs) for more powerful rendering algorithms.

As you might expect from a GPU said to be built with the future in mind, GM200 supports feature level 12.1 (as does GM204). Everything older, including the GM107 found in GeForce GTX 750 Ti, is limited to feature level 12.0. We also asked AMD whether its GCN-based processors support 12.0 or 12.1; a representative said he could not comment at this time.
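Because 12.1 builds on 12.0, the two feature levels can be modeled as nested sets. This is a deliberately simplified, hypothetical model: the string labels and names are ours rather than Direct3D identifiers, 12.0 is reduced to a single placeholder entry, and the GPU table covers only the chips named above. A real application would query the driver at runtime (in Direct3D 12, through `ID3D12Device::CheckFeatureSupport`) instead of relying on a hard-coded table.

```python
# Simplified, hypothetical model of the DirectX 12 feature levels as
# described in the review. Labels are illustrative, not Direct3D identifiers.

FEATURE_LEVELS = {
    "12.0": {"DirectX 12 CPU-side performance improvements"},  # placeholder
}
# 12.1 is a superset of 12.0 plus the two additions the review names.
FEATURE_LEVELS["12.1"] = FEATURE_LEVELS["12.0"] | {
    "Conservative Rasterization",
    "Rasterizer Ordered Views",
}

# GPU -> highest feature level, per the review. AMD GCN is omitted
# because AMD declined to comment.
GPU_FEATURE_LEVEL = {
    "GM200": "12.1",
    "GM204": "12.1",
    "GM107": "12.0",
}


def supports(gpu, feature):
    """True if the GPU's feature level includes the named feature."""
    level = GPU_FEATURE_LEVEL.get(gpu)
    if level is None:
        raise KeyError(f"no feature-level data for {gpu}")
    return feature in FEATURE_LEVELS[level]


if __name__ == "__main__":
    print(supports("GM200", "Rasterizer Ordered Views"))
    print(supports("GM107", "Conservative Rasterization"))
```

Modeling 12.1 as a union over 12.0 keeps the "everything in 12.0, plus more" relationship explicit, so a GPU at the higher level automatically passes every lower-level check.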

The Maxwell architecture enables a number of other features, some of which are exploitable today, while others require developer support to expose. For more information about Dynamic Super Resolution, Multi-Frame Sampled Anti-Aliasing (a great way to diminish the performance impact of AA at 4K on Titan X, by the way), VR Direct and Voxel Global Illumination, check out this page from Don’s GeForce GTX 980 review.

-Fran- Interesting move by nVidia to send a G-Sync monitor... So to trade off the lackluster performance over the GTX980, they wanted to cover it up with a "smooth experience", huh? hahaha.

I'm impressed by their shenanigans. They up themselves each time.

In any case, at least this card looks fine for compute.

Cheers!

chiefpiggy The R9 295x2 beats the Titan in almost every benchmark, and it's almost half the price. I know the Titan X is just one gpu but the numbers don't lie nvidia. And nvidia fanboys can just let the salt flow through your veins that a previous generation card(s) can beat their newest and most powerful card. Can't wait for the 3xx series to smash the nvidia 9xx series

chiefpiggy (replying to -Fran-) Paying almost double for a 30% increase in performance??? Shenanigans alright xD

rolli59 It would be interesting to see a comparison with cards like the 970 and R9 290 in dual-card setups, basically performance for money.

esrever Performance is pretty much as expected from the leaked specs. Not bad performance but terrible price, as with all Titans.

dstarr3 I don't know. I have a GTX770 right now, and I really don't think there's any reason to upgrade until we have cards that can average 60fps at 4K. And... that's unfortunately not this.

hannibal Well, this is actually cheaper than I expected. Interesting card, and it would really benefit from less heat... The throttling is really the limiting factor here.

But yeah, this is expensive for its power, as Titans always have been, but it is not out of reach either. We need 14 to 16nm FinFET GPUs to make really good 4K graphics cards!

Maybe in the next year...

cst1992 People go on comparing a dual-GPU 295x2 to a single-GPU Titan X. What about games where there is no CrossFire profile? It's effectively a Titan X vs 290X comparison.

Personally, I think a fair comparison would be the GTX Titan X vs the R9 390X. Although I heard NVIDIA's card will be the slower of the two.

Alternatively, we could go for 295X2 vs Titan X SLI or 1080 SLI (assuming a 1080 is a Titan X with a few SMMs disabled and half the VRAM, kind of like the Titan and 780).

skit75 (replying to chiefpiggy) You're surprised? Early adopters always pay the premium. I find it interesting you mention "almost every benchmark" when comparing this GPU to a dual GPU of last generation. Sounds impressive on a purely performance measure. I am not a fan of SLI but I suspect two of these would trounce anything around.

Either way the card is way out of my market but now that another card has taken top honors, maybe it will bleed the 970/980 prices down a little into my cheapskate hands.

negevasaf IGN said that the R9 390X (8.6 TF) is 38% more powerful than the Titan X (6.2 TF). Is that true? http://www.ign.com/articles/2015/03/17/rumored-specs-of-amd-radeon-r9-390x-leaked