Nvidia Outlines Jensen 'Huang's Law' of Computing

It is claimed that process gains are much less important than last decade's 1,000x GPU inference performance improvements.

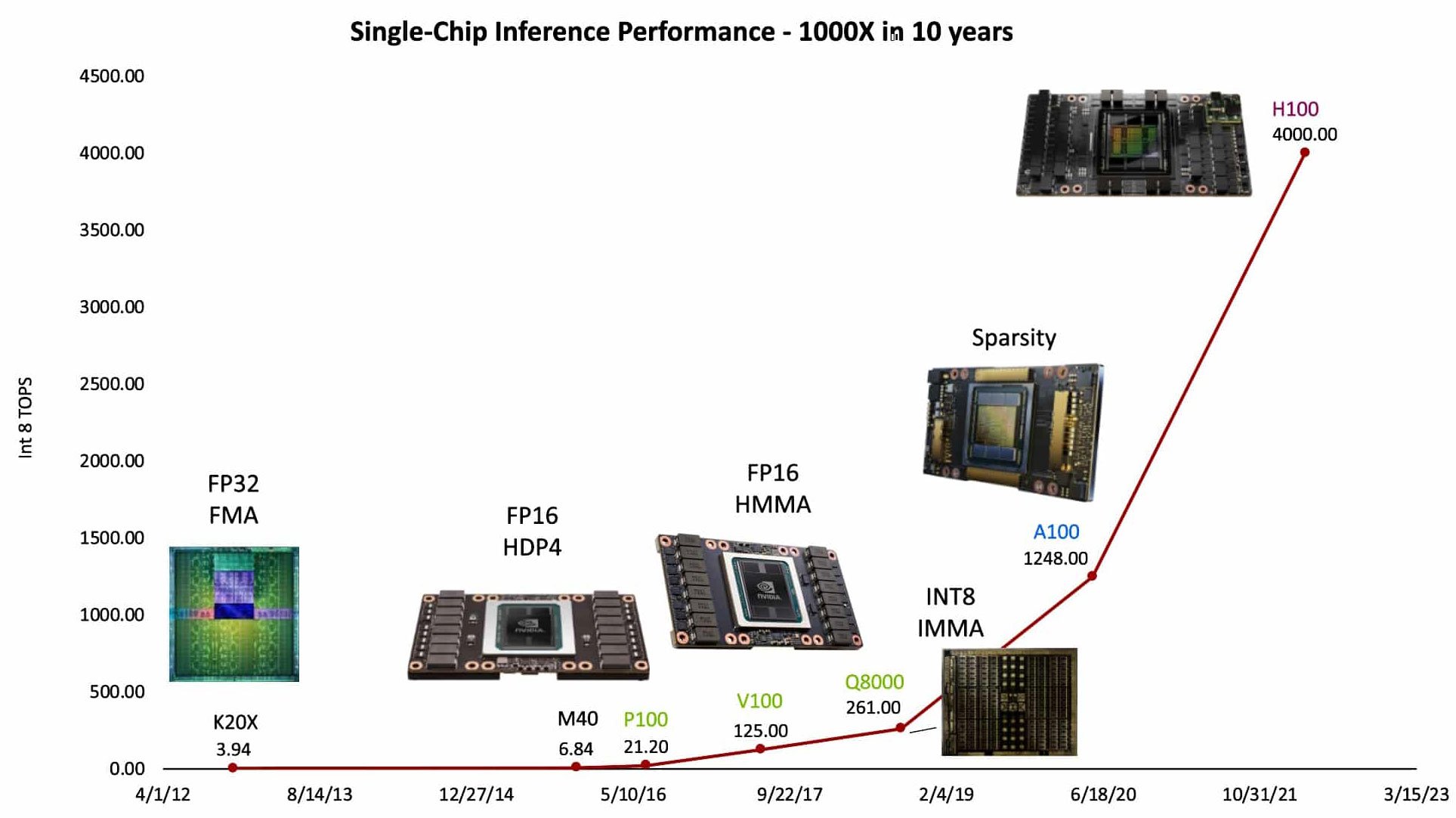

As people debate whether Moore’s Law is slowing, remains applicable, or is even dead or alive in the 2020s, Nvidia scientists herald the impressive momentum behind Huang’s Law. Over the last decade, Nvidia GPU AI-processing prowess is claimed to have grown 1000-fold. Huang’s Law means that the speedups we have seen in “single chip inference performance” aren’t now going to peter out but will keep on coming.

Nvidia published a blog post about Huang’s Law on Friday, outlining the belief and the work practices behind it. What Nvidia Chief Scientist Bill Dally describes as a “tectonic shift in how computer performance gets delivered in a post-Moore’s law era” is, interestingly, based primarily on human ingenuity. That seems a somewhat unpredictable foundation on which to establish a law, but Dally believes that the impressive chart below marks just the beginning of Huang’s Law.

According to Dally's recent Hot Chips 2023 conference talk, the chart above shows a 1000-fold increase in GPU AI inference performance in the last ten years. Interestingly, unlike Moore's Law, process shrinking has had little impact on the progress of Huang's Law, said the Nvidia Chief Scientist.

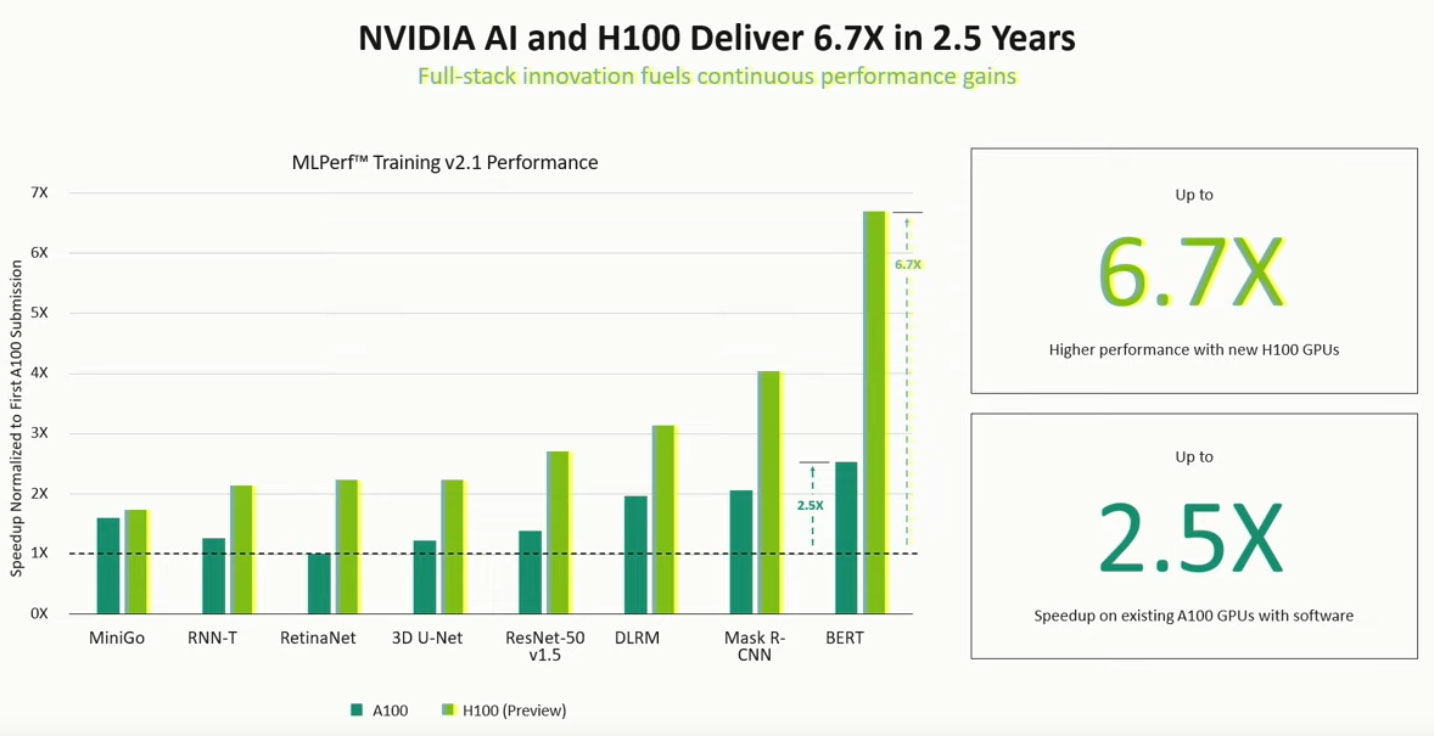

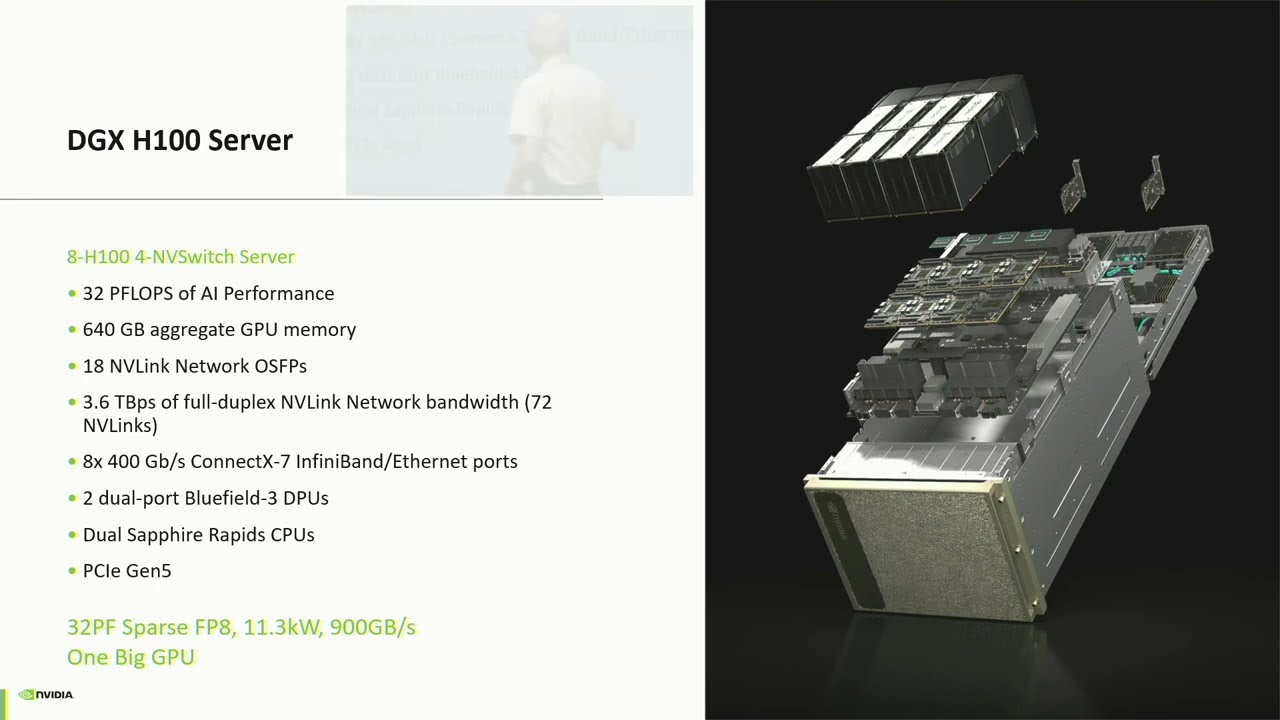

Dally recalls how a 16x gain was achieved by changing how Nvidia GPUs handle numbers under the hood. Another big boost was delivered with the arrival of the Nvidia Hopper architecture, wielding the Transformer Engine. Hopper uses a dynamic mix of eight- and 16-bit floating point and integer math to deliver a claimed 12.5x performance leap while also saving energy. Previously, Nvidia Ampere introduced structural sparsity for a 2x performance increase, said the scientist. Advances like NVLink and Nvidia networking technology have further bolstered these impressive gains.

One of Dally's most eyebrow-raising claims was that the above 1000x compounded gains in AI inference performance contrast starkly with gains attributed to process improvements. Over the last decade, as Nvidia GPUs shifted from 28nm to 5nm processes, the semiconductor process improvements have "only accounted for 2.5x of the total gains," asserted Dally at Hot Chips.
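As a rough check on how these figures compound, the sketch below multiplies the four factors quoted in this article. It assumes, which the article does not state outright, that these four factors are the complete breakdown and that they simply multiply. The dictionary labels and the `annualized` helper are illustrative names, not anything from Nvidia.

```python
import math

# Gain factors as quoted in this article (attributed to Dally's talk).
# Assumption: these four factors are the whole breakdown and compound by
# simple multiplication.
gains = {
    "number representation": 16.0,
    "Hopper / Transformer Engine": 12.5,
    "Ampere structural sparsity": 2.0,
    "process (28nm to 5nm)": 2.5,
}


def annualized(factor: float, years: int) -> float:
    """Constant yearly multiplier that compounds to `factor` over `years`."""
    return factor ** (1.0 / years)


# Product of all four quoted factors.
total = math.prod(gains.values())

# Share of the total that does not come from the process shrink.
non_process = total / gains["process (28nm to 5nm)"]

# Equivalent steady yearly gain over the ten-year span the article cites.
per_year = annualized(total, 10)

print(f"total gain:         {total:g}x")
print(f"non-process gain:   {non_process:g}x")
print(f"annualized (10 yr): {per_year:.3f}x per year")
```

Under those assumptions the quoted factors multiply to the headline 1000x, which works out to roughly a doubling every year, with the process shrink being the smallest single contributor.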

With concepts such as "ingenuity and effort inventing and validating fresh ingredients" behind it, how will Huang's Law continue apace? Thankfully, Dally indicates that he and his team still see "several opportunities" for accelerating AI inference processing. Avenues to explore include "further simplifying how numbers are represented, creating more sparsity in AI models and designing better memory and communications circuits."
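To make "simplifying how numbers are represented" a little more concrete, here is a minimal sketch of symmetric 8-bit integer quantization, a common textbook way to store values in fewer bits. It is only an illustration of the general idea and is not claimed to be how Nvidia's hardware or the Transformer Engine works; the function names and sample weights are hypothetical.

```python
def quantize_int8(values):
    """Map floats onto the integer range [-127, 127] with one shared scale."""
    max_abs = max(abs(v) for v in values)
    # Guard against an all-zero input, where any scale would do.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate floats from the 8-bit integers."""
    return [q * scale for q in quantized]


# Hypothetical sample weights, chosen only for illustration.
weights = [0.5, -1.27, 0.03, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# The rounding error per value is bounded by half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

Each value now fits in one byte instead of four, at the cost of a small, bounded rounding error. That trade-off between precision and throughput is the general kind of opportunity the quote points to.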


Mark Tyson is a news editor at Tom's Hardware. He enjoys covering the full breadth of PC tech; from business and semiconductor design to products approaching the edge of reason.


Kamen Rider Blade Huang's Law: Innovation only happens in Jensen's massive kitchen with countless spatulas.

InvalidError Huang's law: Charge more for less raw performance, then convince clients that they still get better value per dollar using AI-inflated DLSS figures.

Ogotai it's kind of pathetic that nvidia is going that way. make video cards with less raw performance, so you have to use one of their techs to give you the performance you should be getting in the 1st place.. good job nvidia.... good job. rip off your customers even more.....

it's a good thing i am still looking at getting one of amd's cards instead...

bit_user The reality-distortion field is strong, in this guy.

'Over the last decade, as Nvidia GPUs shifted from 28nm to 5nm processes, the semiconductor process improvements have "only accounted for 2.5x of the total gains," asserted Dally at Hot Chips.'

That's if you only look at the frequency gains from process advancement! If you also account for density, it's waaaay higher!

Basically, they're just claiming to have rediscovered something we knew all along, since well before the dawn of hardware-accelerated graphics chips, which is that fixed-function, purpose-built logic is a lot faster than general-purpose computers!

All of the stuff about custom data formats was true of graphics, back in the old days (and even to some extent, today). Things like 16-bpp color by packing (5, 6, 5)-bit tuples into a 16-bit integer. What happened since the early days of 3D graphics cards is that the silicon improvements came so fast that GPU makers were able to offer a lot of programmability and generality. Then, AI came along, and was sufficiently compute-bound and bottlenecked on specific types of computation that it made sense to have specialized, fixed-function logic for it, just as we have for texturing, ROPs, ray-tracing, etc.

So, just multiply together the 2.5x frequency boost, the additional cores enabled by process density improvements, and the improvements by making fixed-function logic for AI, and that gets you most of the way to their 1000x number. The rest comes from things like larger caches and various other tweaks.

'Huang's Law' - what self-congratulatory nonsense. All he really pointed out was something the supercomputer industry knew since the 60's, which is that you can scale faster by also adding/increasing parallelism than simply relying on scalar performance improvements.

xmod Why do people doubt Jensen so much but believed Jobs and Gates at face value in addition to putting them on pedestals?

bit_user

xmod said: Why do people doubt Jensen so much but believed Jobs and Gates at face value in addition to putting them on pedestals?

They have different strengths. Jobs was visionary (also a tyrant) while Gates pioneered much of the pre-cloud software business model and was pretty ruthlessly competitive.

Jensen might have a knack for business, and certainly has a flair for cut-throat competition, but he's no technical genius. So, calling it "Huang's Law" is utterly laughable, not only because he's far from the first to observe it, but because he's not even the same level of technical mind as Gordon Moore. It's 100% PR.

One thing Jensen shares with Bill Gates is that they both started as hardware or software developers. From what I've picked up, Jobs mooched off the technical work of others, his entire career. Even back when he worked at Atari, word has it he basically just got Wozniak to do his work for him. Not that Jobs didn't have strengths, but they just weren't technical and he didn't truly start in the trenches the way Gates, Huang, Page, and Brin did. Heck, even Zuckerberg started out coding in his dorm room.

The thing that bugs me about Huang is that he's so bombastic and really doesn't sound particularly smart, to me. That doesn't mean he doesn't have a real knack for business, but I find it absurd to talk about him like a technical genius - he's not.

vertuallinsanity "As people debate whether Moore’s Law is slowing, remains applicable, or is even dead or alive in the 2020s, Nvidia scientists herald the impressive momentum behind Huang’s Law".

This is written and reads the same exact way Tesla and Apple "our leader is infallible" PR nonsense does.

Jensen's days at Nvidia are numbered. Same with Cook, Musk and others. They're not people defining tech evolution. They're modern day crooks.