Next-gen Nvidia GeForce gaming GPU memory spec leaked — RTX 50 Blackwell series GB20x memory configs shared by leaker

The GeForce RTX 50 series could feature memory interfaces similar to the current GeForce RTX 40 series.

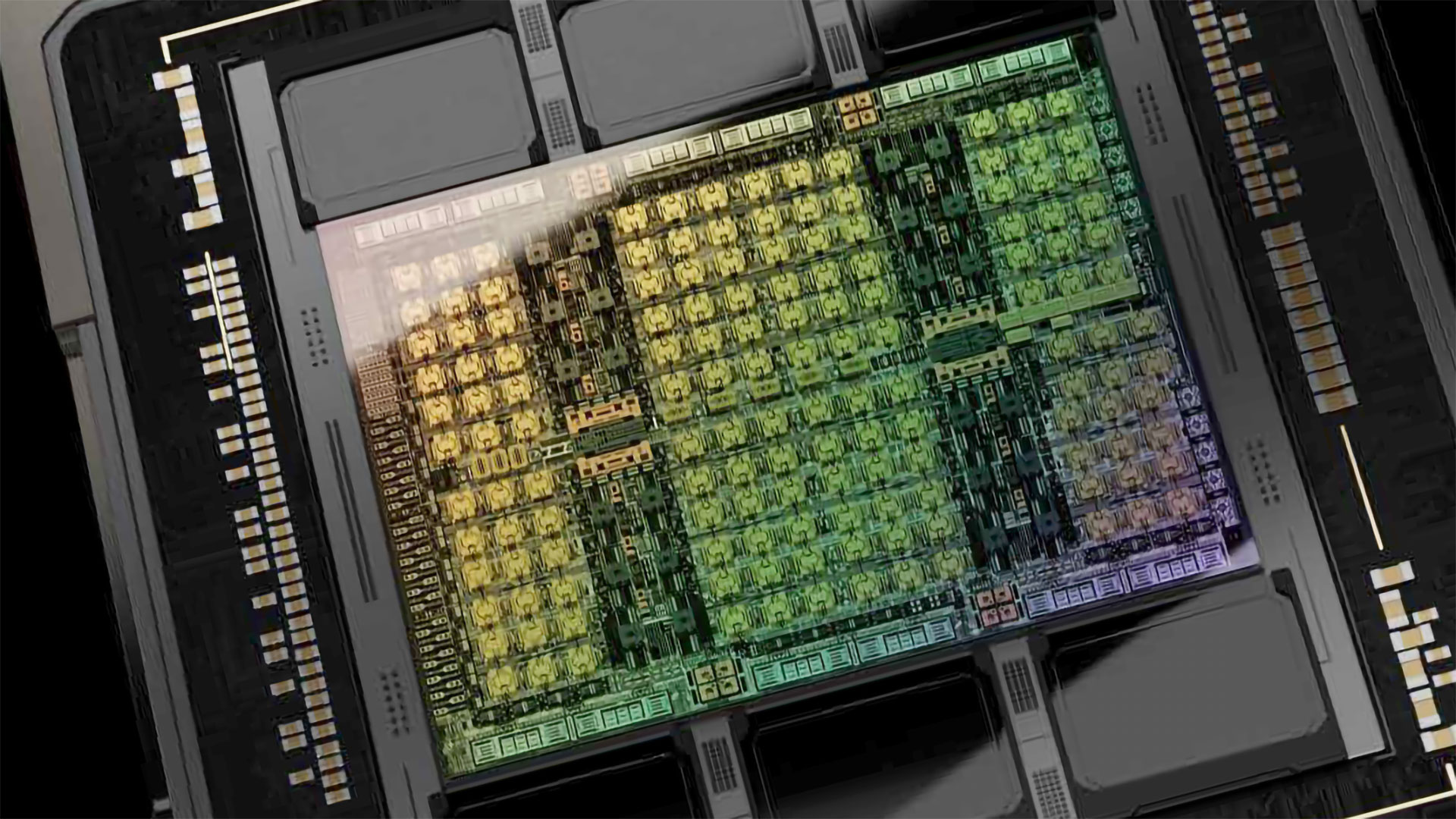

Nvidia is readying its GeForce RTX 50-series (Blackwell) products to rival the best graphics cards. While the launch date is still uncertain, renowned hardware leaker @kopite7kimi claims that, based on his information, the memory interface configurations of the Blackwell family will not differ much from those of the Ada Lovelace series. Since this is a leak, take it with a grain of salt. Previously released leaks suggest that the company plans to retain a 384-bit memory bus with its next-generation range-topping GB202 GPU based on the Blackwell architecture.

Nvidia's Blackwell will likely be the company's first GPU family to support GDDR7 memory, whose higher data transfer rates and architectural changes promise significantly higher performance than existing GDDR6- and GDDR6X-based memory solutions. Since first-generation GDDR7 SGRAM ICs will feature a data transfer rate of 32 GT/s, a 384-bit memory subsystem built from these chips would offer around 1,536 GB/s of bandwidth, so a 512-bit memory interface will hardly be missed.
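The bandwidth figure above follows from a simple formula: peak bandwidth in GB/s equals the bus width in bits times the per-pin data rate in GT/s, divided by 8 bits per byte. The Python sketch below reproduces it. It computes theoretical peaks only (real-world throughput is lower), and the function name is illustrative; the RTX 4090 comparison point (384-bit GDDR6X at 21 GT/s) is added here for reference and is not part of the leak.

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gtps: float) -> float:
    """Theoretical peak memory bandwidth in GB/s.

    Every data pin on the bus moves one bit per transfer, so the bus moves
    bus_width_bits * data_rate_gtps gigabits per second; dividing by 8
    converts gigabits to gigabytes.
    """
    return bus_width_bits * data_rate_gtps / 8


configs = [
    # (label, bus width in bits, per-pin data rate in GT/s)
    ("384-bit GDDR7 @ 32 GT/s (rumored GB202)", 384, 32),
    ("512-bit GDDR7 @ 32 GT/s (hypothetical)", 512, 32),
    ("384-bit GDDR6X @ 21 GT/s (RTX 4090, for reference)", 384, 21),
]

for label, width, rate in configs:
    print(f"{label}: {peak_bandwidth_gbs(width, rate):,.0f} GB/s")
```

On these numbers, a 384-bit GDDR7 bus would deliver roughly 1.5 times the RTX 4090's theoretical bandwidth without widening the bus at all.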

> Although I still have fantasies about 512 bit, the memory interface configuration of GB20x is not much different from that of AD10x.
>
> @kopite7kimi, March 9, 2024

Micron says that 16 Gb and 24 Gb GDDR7 chips will be available in 2025, though its roadmap does not indicate whether these devices will launch simultaneously or whether the 16 Gb parts will come out earlier. It therefore remains to be seen whether Nvidia will use 16 Gb or 24 Gb GDDR7 memory ICs on its initial GeForce RTX 50-series graphics boards.
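The capacities in the table below follow from bus width and chip density. The Python sketch assumes one memory chip per 32-bit channel (GDDR6, GDDR6X, and GDDR7 devices each present a 32-bit interface) and optionally a "clamshell" layout in which two chips share each channel. The function name is hypothetical, and the 128-bit line is illustrative arithmetic, not a leaked specification.

```python
GDDR_CHIP_WIDTH_BITS = 32  # each GDDR6/GDDR6X/GDDR7 chip has a 32-bit interface


def memory_capacity_gb(bus_width_bits: int, chip_density_gbit: int,
                       clamshell: bool = False) -> int:
    """Total VRAM in GB for a bus populated with identical chips.

    Assumes chip densities are multiples of 8 Gbit (8, 16, 24, 32 Gb),
    so the conversion to whole gigabytes is exact.
    """
    if bus_width_bits % GDDR_CHIP_WIDTH_BITS:
        raise ValueError("bus width must be a multiple of 32 bits")
    chips = bus_width_bits // GDDR_CHIP_WIDTH_BITS
    if clamshell:
        chips *= 2  # two chips per channel, each running 16 bits wide
    return chips * chip_density_gbit // 8  # 8 Gbit = 1 GB


# Bus widths from the leak; the 128-bit line is arithmetic only,
# since the leak gives no memory type or capacity for GB206/GB207.
for name, width in [("GB202", 384), ("GB203", 256), ("GB205", 192),
                    ("GB206/GB207", 128)]:
    print(f"{name} ({width}-bit): "
          f"{memory_capacity_gb(width, 16)} GB with 16 Gb chips, "
          f"{memory_capacity_gb(width, 24)} GB with 24 Gb chips")

# Clamshell explains the 16 GB AD106 entry: 128-bit bus, 8 x 16 Gb chips
print(memory_capacity_gb(128, 16, clamshell=True), "GB")
```

The 24 Gb (3 GB) chips are what would let a 192-bit card carry 18 GB instead of 12 GB without resorting to a clamshell layout.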

GeForce RTX 50-series Blackwell GPU Memory Configurations*

| Blackwell GPU | Width | Type | Capacity (16 Gb ICs) | Capacity (24 Gb ICs) | Ada GPU | Width | Type | Capacity | Ampere GPU | Width | Type | Capacity |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| GB202 | 384-bit | GDDR7 | 24 GB | 36 GB | AD102 | 384-bit | GDDR6X | 24 GB | GA102 | 384-bit | GDDR6X | 24 GB |
| GB203 | 256-bit | GDDR7 | 16 GB | 24 GB | AD103 | 256-bit | GDDR6X | 16 GB | GA103 | 256-bit | GDDR6X | 16 GB |
| - | - | - | - | - | AD104 | 192-bit | GDDR6X | 12 GB | GA104 | 256-bit | GDDR6X | 16 GB |
| GB205 | 192-bit | GDDR7 | 12 GB | 18 GB | - | - | - | - | - | - | - | - |
| GB206 | 128-bit | ? | ? | ? | AD106 | 128-bit | GDDR6 | 16 GB | GA106 | 192-bit | GDDR6 | 12 GB |
| GB207 | 128-bit | ? | ? | ? | AD107 | 128-bit | GDDR6 | 8-16 GB | GA107 | 128-bit | GDDR6 | 8-16 GB |

*Specifications are unconfirmed.

With Nvidia's Blackwell family at least two or three quarters away, it is too early to make firm predictions. Still, we have outlined possible memory configurations of GB20x-based products in the table above.

For several generations now, Nvidia's top-of-the-range consumer GPUs (AD102, GA102) have used a 384-bit memory interface, which has proven optimal from a performance and cost standpoint. Cut-down versions of Nvidia's range-topping consumer graphics cards featured a 320-bit memory interface, whereas high-end GPUs featured a 256-bit bus (e.g., AD103, GA103, and GA104). Meanwhile, performance-mainstream GPUs use a 192-bit memory bus (e.g., AD104, GA106), and mainstream GPUs use a 128-bit memory interface (e.g., AD106, AD107, GA107).

While the leaker's comment indicates that the Blackwell family will largely retain the memory interface configurations of the current Ada Lovelace family, keep in mind that, according to the same leaker, the Blackwell series will lack a GB204 GPU, and the rumored GB205 will likely not directly succeed AD104.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

-

valthuer

My 4090 is plenty good for 4K 60 and I can definitely see skipping the RTX 50 series, barring widespread Unreal Engine 5 gaming in the next 12-18 months.

-

Notton

Sweet, we finally get to see 3GB chip densities so that 192-bit memory bus comes with a useful 18GB configuration.

aaaaand I expect it'll cost an extra $200 for that version.

-

emike09

> valthuer said: My 4090 is plenty good for 4K 60 and I can definitely see skipping the RTX 50 series, barring widespread Unreal Engine 5 gaming in the next 12-18 months.

Agreed. If anything, I'm a little CPU-bound. Most games I can play at 4K max settings and get around 90-120fps. That's often with DLSS on Quality. I'll probably still upgrade to hold the 4090's resale value. And with the PS5 Pro coming out later this year, devs will be ramping up what their games are capable of. Xbox needs to get an upgrade out soon as well, with GTA 6 coming out next year.

-

> emike09 said: Agreed. If anything, I'm a little CPU-bound. Most games I can play at 4K max settings and get around 90-120fps. That's often with DLSS on Quality. I'll probably still upgrade to hold the 4090's resale value. And with the PS5 Pro coming out later this year, devs will be ramping up what their games are capable of. Xbox needs to get an upgrade out soon as well, with GTA 6 coming out next year.

Now that you mention it, DLSS has evolved into a pretty useful tool. Being a 4K Ultra RT gamer, I have used it on two occasions so far: the Path Tracing mode of Cyberpunk 2077/Alan Wake II and the Unobtainium settings of Avatar: Frontiers of Pandora.

If it can help us get through the upcoming generation of PC games, it will turn the 4090 into an even better investment.

And if you take into account that, according to Nvidia's roadmap, the RTX 50 series will hit the shelves in 2025, the 4090 will stay on top even longer.

-

hotaru251

If Nvidia attaches the piss-poor bus to the 60 tiers in the 50 series, they might as well just delete the SKU.

Just release it as a 50 SKU and make a 70 SKU with an actual decent bus.

-

hollywoodrose

> hotaru251 said: If Nvidia attaches the piss-poor bus to the 60 tiers in the 50 series, they might as well just delete the SKU.
>
> Just release it as a 50 SKU and make a 70 SKU with an actual decent bus.

So do you think it would be worth it to get a 5090 when they come out? I just finished my first build last summer (7800X3D, 3060 Ti, Gigabyte Aorus Elite 650b ax, 32GB G.Skill Trident @ 6000, 2TB Samsung 980 Pro M.2, be quiet! Dark Rock Pro CPU cooler and 500FX case, Corsair 750rmx PSU), and it runs great.

But because I was on a budget, I could only afford a 3060 Ti. I wanted to grab a 4090 FE, but they were so difficult to find that I decided to wait for the 5090. I’ve been hearing a lot of good news about the 5090, so this is the first negative thing that I’ve read. I’m kind of surprised, actually.

Do you think it’s a major drawback, or does it just mean it will be a few fps slower? I can see why someone might not want to upgrade if they have a 4090. But having a 3060 Ti, the 5090 seems like it would be a really nice upgrade.

-

hotaru251

> hollywoodrose said: so this is the first negative thing that I’ve read.

The 90 cards are always "good" (just expensive), and most people shouldn't get one, as the 80 tier is generally good for the majority of people.

When I say 50 SKU, I mean the 1050, 2050, 3050.

The 4060 is special because it's got a gimped memory bus, which gutted its performance and is why people hate the thing... if they had given it the same 192-bit bus as prior 60s, the GPU would have been an amazing GPU for $400.