AMD Ryzen AMA

Last week AMD joined us for a very special Ryzen AMA. Dive into our lengthy digest to learn about the past, present, and future of Ryzen.

Versus Kaby Lake, FreeSync TVs, and Frame Latency

PC-Cobbler: Many corporations have discovered to their great dismay that China does not respect IP ownership. Last April, AMD signed a technology transfer agreement with THATIC, a subsidiary of the Chinese Academy of Sciences, one of China’s national research institutions. Did AMD cut its own throat with this deal?

DON WOLIGROSKI: This really isn't in my purview. I can say that AMD has good relationships with its partners that we build on trust. I can also say my colleagues are sharp and know what they're doing.

hendrickhere: Big AMD fan, always have been. Why should a standard PC user who is primarily interested in gaming and graphics software performance choose Ryzen and its AM4 platform over a competing platform of a similar caliber?

DON WOLIGROSKI: Simple questions can be the most nuanced to answer, so bear with me. You've got two use cases here: Graphics Software and Gaming.

1. Graphics Software

This isn't even a contest. We absolutely crush Intel at every price point if you're doing any graphics rendering. In the consumer desktop space, we have three times the threads of the Core i5 segment and double the threads of the Core i7 Kaby Lake segment. We have very high single-threaded performance combined with a massive multi-threading advantage, and this makes Ryzen a very deadly foe when it comes to productivity/rendering/encoding/encryption application performance.

2. Gaming


I don't know how old you are, but I'll date myself. Back in the old days of PC gaming, it didn't really matter what kind of CPU you had because everything out there was graphics card bottlenecked. You'd buy the cheapest CPU out there and spend the rest of your money on the graphics card. A Duron with a Radeon 8500 performed the same as an Athlon with a Radeon 8500. Gamers didn't need to waste extra money on the CPU.

As time went on, developers started building more advanced AI and more demanding assets, and things started to shift back to the CPU and platform. Now, in 2017, you want a decent 4-core CPU at minimum for serious gaming. Even game consoles run 8-core processors. IPC has become a lot more important to gaming, as has platform speed if you want the highest frame rates at 1080p.

With the introduction of Ryzen, AMD is back in the high-end gaming segment. The graphics card is still the bottleneck in a practical sense, but primarily only at HD+ resolutions (1440p, 4K, and VR). So, if you're playing games at 1440p and above (and you really should be with a decent processor, because HD+ is so pretty), Ryzen is fast enough to move that gaming bottleneck back to the graphics card where it belongs. It's the good old days again, baby!

If you're playing at 1080p (and let's be fair, that's still the most prevalent resolution out there), the bottleneck shifts back to the platform and CPU. That's where we see Intel's Kaby Lake pull ahead of Ryzen in some cases. This surprised a lot of people: if Ryzen is such a dominating force in applications, why does it drop behind in some outliers?

Let's talk about that. A few points to frame this 1080p gaming conversation:

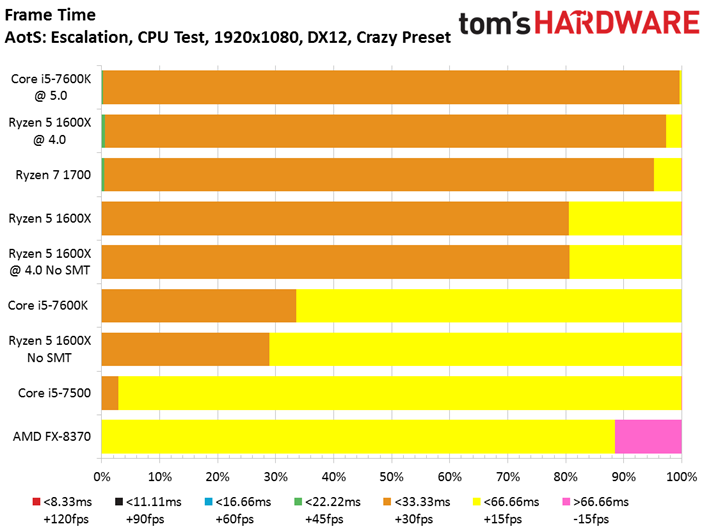

- Ryzen is never slow at gaming at 1080p; it's just not as fast as Kaby Lake in certain game benchmarks. For example, if the Core i7-7700K gets 200 FPS and Ryzen gets 150 FPS, that's a technical loss of 25%. In real-world terms, there's no practical advantage to 200 FPS over 150 FPS. Hell, most 1080p monitors are 60 Hz, which means you can't really get a meaningful benefit from frame rates higher than 60 FPS.

- At 1080p, I'm not aware of any game that is so limited by Ryzen that 60 FPS is not achievable. In many games, Ryzen's 1080p performance is well above 80 FPS, and in some it exceeds 120 FPS. Even for people with ultra-high-end 144 Hz monitors, Ryzen can get the job done if you're willing to adjust detail settings, which you'll often have to do on Kaby Lake to get those frame rates.

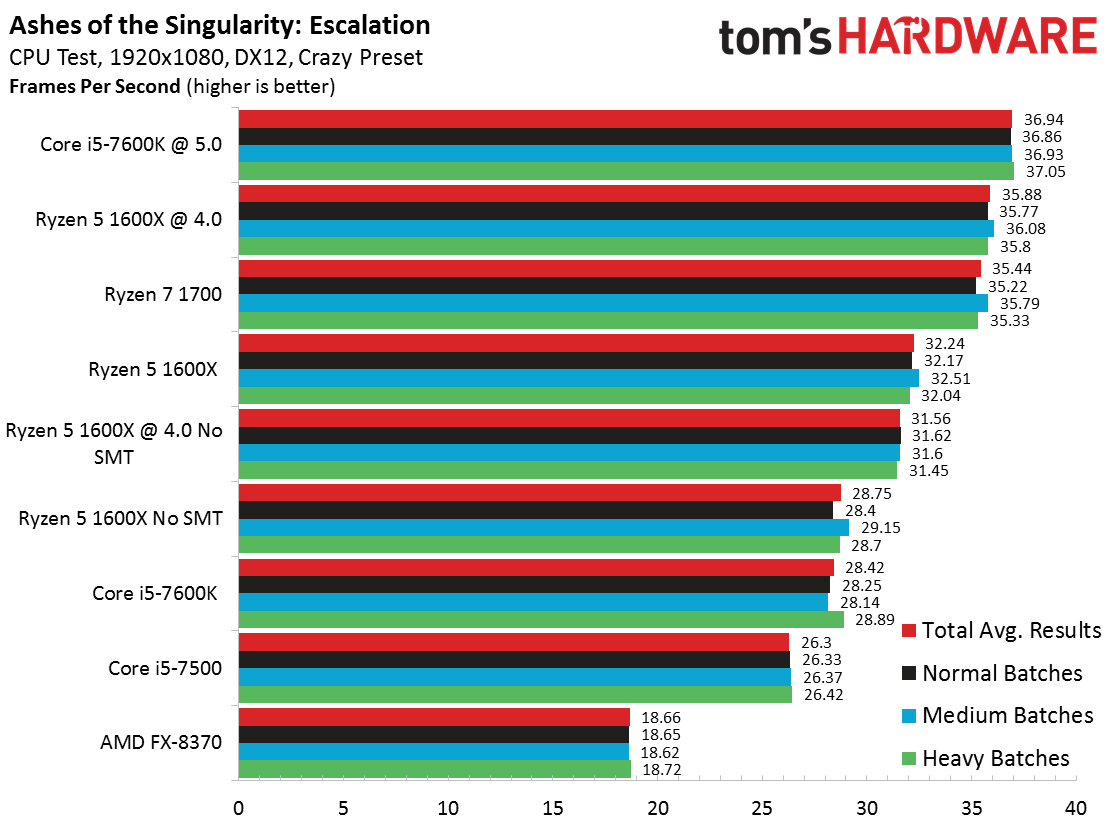

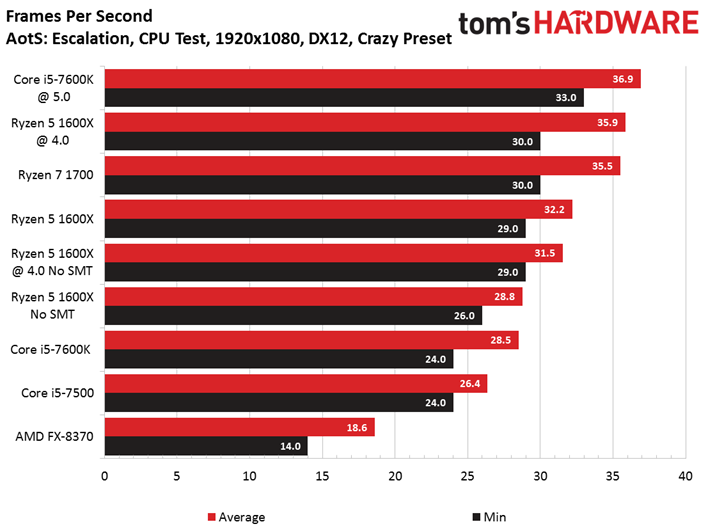

- Ryzen is getting a lot faster at 1080p gaming. Ryzen is a brand-new CPU, and in the month since launch we've worked with developers on DOTA2, Ashes of the Singularity, and Total War: Warhammer to deliver faster Ryzen performance. That's in one month. At the same time, platform-limited titles are benefiting from our RAM-speed ramp. And we're delivering other updates, like a better Windows power plan and a Ryzen Master Overclocking Utility that doesn't require the High Precision Event Timer (HPET) to be enabled, which also helps performance. You're going to see an uplift in Ryzen 1080p gaming performance in the April 11th launch day articles, and we're just getting started.

- Over time, developers tend to make use of as many resources as you provide. You will see games take advantage of more cores and threads organically, especially now that we have new graphics APIs like DirectX 12 and Vulkan that can exploit them.
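The 200 FPS versus 150 FPS example in the first point above is simple arithmetic, and converting it to frame times makes the gap easier to picture. Below is a minimal sketch using those same illustrative numbers (they are hypothetical, not benchmark results). The helper names are made up for this example, and `full_refreshes_per_second` assumes a simplified fixed-refresh display that ignores tearing and V-Sync-off latency effects.

```python
def frame_time_ms(fps: float) -> float:
    """Milliseconds spent on each frame at a given frame rate."""
    return 1000.0 / fps


def percent_deficit(faster_fps: float, slower_fps: float) -> float:
    """How far slower_fps falls short of faster_fps, as a percentage of faster_fps."""
    return (faster_fps - slower_fps) / faster_fps * 100.0


def full_refreshes_per_second(render_fps: float, refresh_hz: float) -> float:
    """Simplification: a fixed-refresh panel updates at most refresh_hz times per second.

    Ignores V-Sync-off effects such as tearing and reduced input latency.
    """
    return min(render_fps, refresh_hz)


# Illustrative numbers from the example above, not measured results.
kaby_fps, ryzen_fps, refresh_hz = 200.0, 150.0, 60.0

print(f"deficit: {percent_deficit(kaby_fps, ryzen_fps):.0f}%")  # deficit: 25%
print(f"frame time: {frame_time_ms(kaby_fps):.2f} ms vs {frame_time_ms(ryzen_fps):.2f} ms")  # 5.00 ms vs 6.67 ms
print(f"refreshes on a 60 Hz panel: {full_refreshes_per_second(kaby_fps, refresh_hz):.0f} "
      f"vs {full_refreshes_per_second(ryzen_fps, refresh_hz):.0f}")  # 60 vs 60
```

In frame-time terms, the "25% loss" is about 1.7 ms per frame, and under this simplified model both processors saturate a 60 Hz panel.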

So, to summarize:

1. Graphics software: Clean kill for Ryzen. Your productivity/render/encoding/encryption wait times will be significantly longer on similarly priced Intel competition.

2. Gaming: Virtually identical 1440p, 4K, and VR game performance to the competition, and extremely smooth high-performance 1080p gaming (if not the fastest), combined with better prospects for the future thanks to advanced graphics APIs like DirectX 12 and Vulkan. Advantage: Ryzen!

tredeuce: How long do you and others at AMD get to celebrate the success before you have to move on to the next task or project? I know innovation and competition never end, but I do hope y'all can enjoy how well AMD is doing right now.

DON WOLIGROSKI: Wait, we get to sit back and celebrate our success? Being in the tech industry means you're never coasting, and I don't see that changing significantly in the foreseeable future. But better busy than bored!

redgarl: Are there any plans to add FreeSync technology to TVs? Can we expect a console like Scorpio to have a positive effect on AMD GPU performance on the PC?

DON WOLIGROSKI: We will probably see television manufacturers adding FreeSync technology to their products. It seems like an inevitable no-brainer to me, but you never know. That said, FreeSync has a much clearer path to dominating the monitor market.

I’m not sure what you mean by your second question. Are you asking if our experience working with console manufacturers gives us design and engineering insights that we use for future PC products? If that’s your question, then yes, I think our engineers take all the lessons they learn from console gaming and apply those lessons to the PC where it makes sense. We're a very gaming focused company here at AMD, so it's a natural progression.

jaymc: There have been many online reports of a "Silky Smooth" gaming experience. Is this a real phenomenon or a placebo effect? If it is real, then what do you think causes this effect? Is it mouse latency, more cores and threads, or a combination of the two? Also, can you verify whether gamers can reduce their mouse latency by bypassing the chipset and connecting the mouse directly to the CPU via USB 3.1?

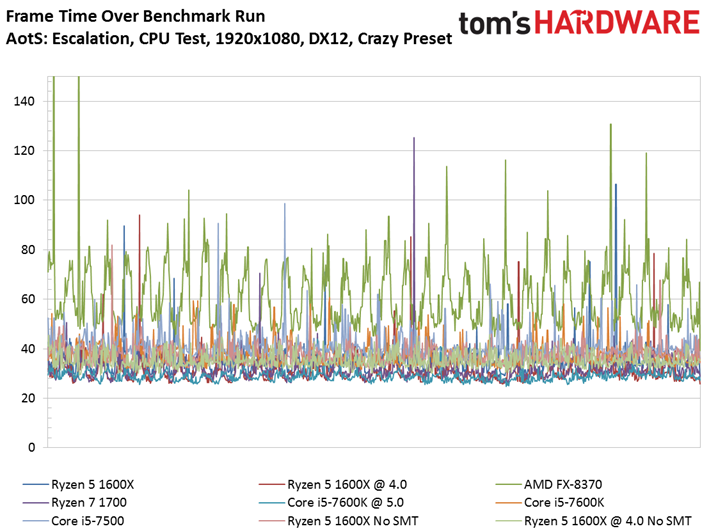

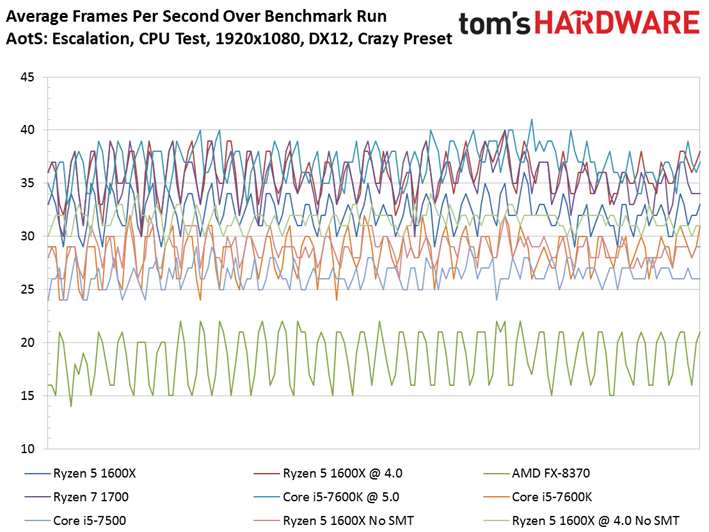

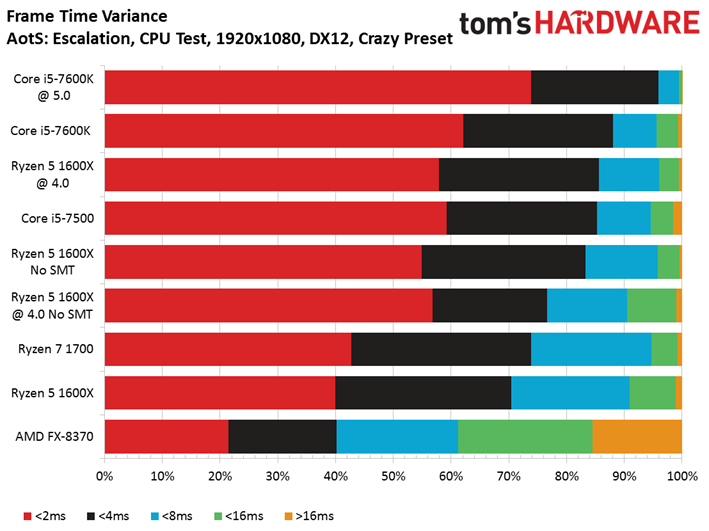

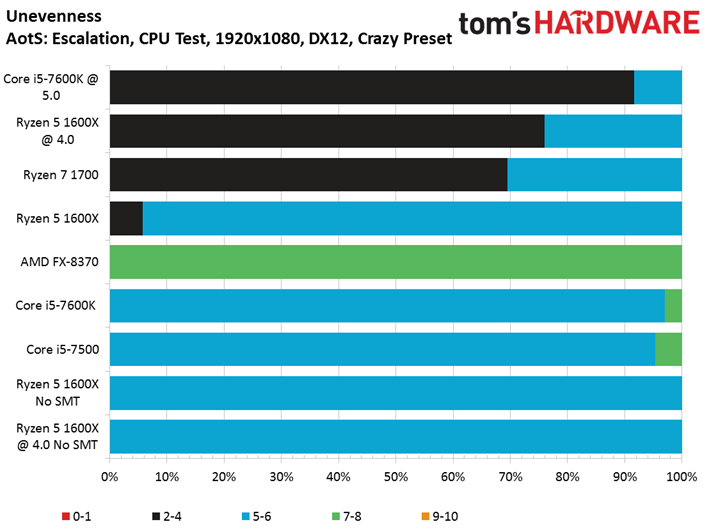

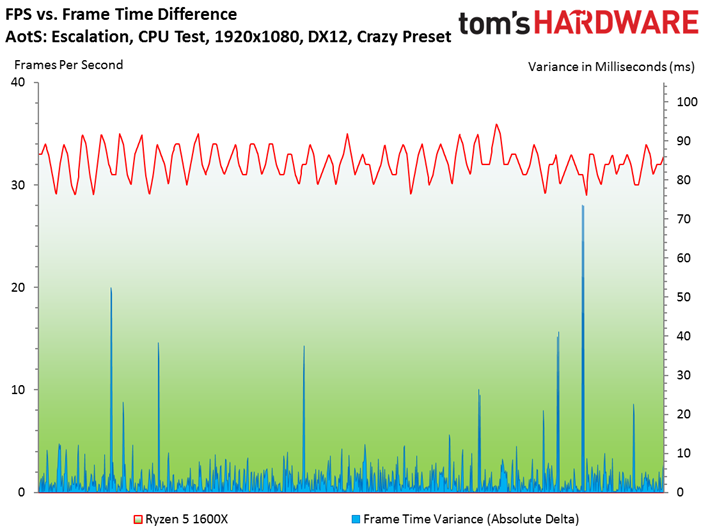

DON WOLIGROSKI: I personally believe that having all of those extra Ryzen cores and threads at the PC's disposal means the Windows scheduler can send requests to spare resources without affecting the game, where otherwise they might have had a slight impact on the experience. I've personally noticed that Ryzen gaming has been very smooth for me, but is there a placebo effect there? Hard to say. I do plan to address this with testing in the future, to see if we can quantify it objectively. We do know that Ryzen's 99th percentile frame times are very good.
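A 99th percentile frame time measures consistency rather than average speed: it is the frame time that 99% of frames meet or beat, so it exposes occasional stutters that an average FPS figure can hide. Below is a minimal sketch of how such a number might be computed. It assumes the nearest-rank percentile definition (capture tools and reviewers may use interpolated percentiles instead) and uses made-up frame-time samples, not measured Ryzen data.

```python
import math


def percentile_nearest_rank(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100.0)  # 1-based rank
    return ordered[max(rank, 1) - 1]


# Hypothetical frame times in milliseconds (made up, not measured data):
# 95 smooth 7 ms frames plus five slower frames, one of them a visible stutter.
frame_times_ms = [7.0] * 95 + [8.0, 9.0, 12.0, 20.0, 35.0]

average_fps = 1000.0 * len(frame_times_ms) / sum(frame_times_ms)
p99_ms = percentile_nearest_rank(frame_times_ms, 99)

print(f"average: {average_fps:.1f} FPS")               # average: 133.5 FPS
print(f"99th percentile frame time: {p99_ms:.1f} ms")  # 99th percentile frame time: 20.0 ms
```

Here an average above 130 FPS coexists with a 20 ms 99th percentile frame time (equivalent to 50 FPS), which is why a low 99th percentile figure is a reasonable proxy for "smoothness".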

As for mouse latency, I don't have any numbers on this. I'd love to see someone dig into it. If there's a placebo effect anywhere, though, I suspect this is where it is. Even the 500 Hz mouse polling rate on USB 2.0 seems like it should be sufficient to me, but admittedly I'm not a mouse performance purist, and I haven't looked deeply at this or run any comparisons myself.
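A polling rate converts directly into a time budget. In the simplest model, a movement waits at most about one polling interval before the mouse reports it. Below is a rough sketch of that arithmetic, which puts the 500 Hz figure above in context. It assumes that simple model and ignores sensor processing, USB host-controller and OS scheduling delays, and any chipset-versus-CPU path differences raised in the question. The helper name is made up for illustration.

```python
def polling_interval_ms(rate_hz: float) -> float:
    """Time between successive USB mouse reports at a given polling rate."""
    return 1000.0 / rate_hz


# A few example polling rates; 500 Hz is the rate mentioned in the answer.
for rate_hz in (125, 500, 1000):
    print(f"{rate_hz:>4} Hz polling -> a report every {polling_interval_ms(rate_hz):.1f} ms")

# For scale: how long a single frame lasts at 60 FPS.
print(f"one frame at 60 FPS: {1000.0 / 60:.1f} ms")
```

At 500 Hz, a report arrives every 2.0 ms, a small fraction of a 16.7 ms frame at 60 FPS, which is consistent with the intuition that polling alone is unlikely to explain a perceived difference.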


The Tom's Hardware forum community is a powerful source of tech support and discussion on all the topics we cover, from 3D printers, single-board computers, SSDs, and GPUs to high-end gaming rigs. Articles written by the Tom's Hardware Community are either written by the forum staff or one of our moderators.


BugariaM: Many people ask clear and technically interesting questions, hoping to get equally clear answers.

And they are answered by a person who is far removed from the technical side and from engineering questions.

He is a manager, a salesman.

His task is more blah-blah, only for the sake of an even greater blah-blah.

Thanks, of course, but alas, I found nothing interesting for myself here.

genz: I strongly disagree, BugariaM. All the info he could provide was provided, and he asked people actually close to the metal when he did not know. You will not get tech secrets or future insights from ANY AMD or Intel rep on Tom's Hardware; it's far too public, and every drop of information here is also given to Intel, Nvidia, and any other competitors hoping to steal AMD's charge. What we did get is a positive outlook on AMD's products, and compared with what we already had from Tom's and other publishers who have spent years watching Intel lead and thus don't have faith (or simply got their jobs for their love of Intel), that was major.

I personally think he should have reminded us that the current crop of 8-core consoles will inevitably force AMD's core advantage to eat up all the competition Intel currently has. In 5 years, every single Ryzen 1 processor will terrorize the Intel processors it competed with. Ryzen 5s will have 50% performance gains over Kaby Lake i7s, and so on.

Intel knew this was the future; that is why all of Intel's mainstream consumer processors have stuck to 4 cores, to try to keep the programming focus on their IPC lead. Now that the lead is only 6% and the competition has more cores, we will see a shift toward 6+ cores, like the one we saw when Core 2 Duo arrived and made dual FX and dual Pentiums viable mainstream gaming chips, and when Core 2 Quad and Nehalem made quad cores viable gaming chips.

I own a 3930K, and you can read my past posts and see that I have always said this was going to happen. Now, just a month after launch, you are already seeing the updates come out. Wait till there are 12-threaded games on the market (this year, I expect) and you will see just how much of the limitation on the CPU industry's progress was actually created by Intel's refusal to go over 4 cores in the mainstream.

For all the talk of the expense of creating 6- and 12-core processors, Intel could have had low-clocked 8-core consumer chips in the mainstream for prosumers and home rendering types years ago, and they didn't. My theory is that they are scared of heavily threaded applications in the mainstream creating an opportunity for the competition to outmaneuver their new chips with chips based on slower, more numerous cores. It's not like a 2 GHz 6- or 8-core in the mainstream was never an option.

Calculatron: I remember being really excited for the AMD AMA, but I could not think of anything different from what everyone else was already asking.

In retrospect (hindsight is always 20/20), I wish I had asked some questions about Excavator, since AMD still has some Bristol Ridge products coming out for the AM4 platform. Even though Zen is a new architecture, some positive things still carried over from the Bulldozer family, lessons learned throughout its evolution.

TJ Hooker: "TDP is not electrical watts (power draw), it's thermal watts." Argh, this kind of annoys me. "Electrical watts" and "thermal watts" are the same thing here: for a CPU, power draw = heat generated. There are reasons why TDP is not necessarily an accurate measure of power draw, but this isn't one of them.

Tech_TTT, replying to BugariaM:

I agree with you 100%. An "Ask Me Anything" should include people from the R&D department, not only a salesperson. Or maybe a team of two people, one from sales and one from research. Or even better, the CEO him/herself.


genz, replying to TJ Hooker:

That is simply not true.

Here's an example: at 22nm and 18nm, TDP is usually far higher than actual draw because the chip is so small that any cooling solution has a much smaller surface area to work with. Another example: when Intel moved the memory controller from the northbridge onto the CPU, the TDP of their chips went unchanged because (thermally speaking) the controller was far enough away from the chip to never contribute to thermal limitations, despite the chip's temperature rising much faster under overclocking because of the additional parts, and the chips themselves drawing more power due to having more components. A final example: I have a 130W TDP chip that, without overvolting, simply cannot reach a watt over 90, even when running a power virus (which draws the maximum power the chip can draw, more than burn-in or SuperPi). The TDP rating is directly connected to the specific parts of the chip that run hot and how big they are, not to their true power draw. This is why so many chips from the same bin have the same TDP despite running at different clocks and voltages.

Furthermore, TDP is rounded up to fixed numbers to make it easy to pick a fan. True power draw naturally depends on how well a chip is binned, and very poorly binned chips still need enough voltage to run, so manufacturers usually add 10 to 20 watts of thermal headroom to make that possible.

TJ Hooker: @genz, I never said TDP is equal to power draw; in fact, I explicitly said there are reasons why it isn't. I simply said that "thermal watts" (heat being generated by the CPU) are equivalent to "electrical watts" (power being consumed by the CPU). At any given moment, the power being drawn by the CPU is equal to the heat being generated.

I'll admit, I'm sort of nitpicking a small part of the answer given in the AMA regarding TDP; I just felt the need to point it out because this is a misconception I see on a semi-regular basis.