Xeon Woodcrest Preys On Opteron

Advanced Smart Cache

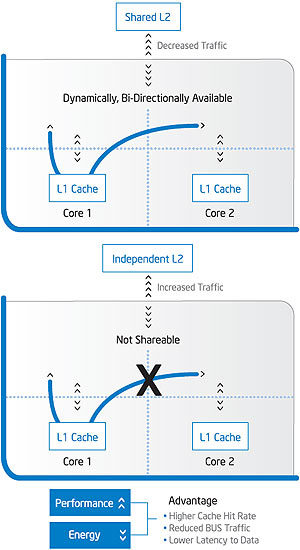

The unified L2 cache is probably the feature that gets mentioned first. It lets two processing cores share one large L2 cache (2 MB or 4 MB). Caching becomes more effective because data no longer has to be stored twice in separate L2 caches (no replication). The L2 cache is allocated dynamically and adapts to each core's load, which means that a single core can claim 100% of the L2 cache if required, on a line-by-line basis.
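The benefit of dynamic allocation can be illustrated with a toy model. The sketch below is not Intel's implementation: it assumes a fully associative cache with LRU replacement, and the names (`LRUCache`, `build_trace`, `compare`) and sizes are hypothetical. It compares two private caches of 64 lines each with one shared cache of 128 lines while one core loops over 96 lines and the other over only 8.

```python
from collections import OrderedDict


class LRUCache:
    """Toy fully associative cache with LRU replacement, one entry per line."""

    def __init__(self, capacity_lines):
        self.capacity = capacity_lines
        self.lines = OrderedDict()  # key order = least to most recently used
        self.hits = 0

    def access(self, line):
        if line in self.lines:
            self.lines.move_to_end(line)  # mark as most recently used
            self.hits += 1
            return
        if len(self.lines) >= self.capacity:
            self.lines.popitem(last=False)  # evict the least recently used line
        self.lines[line] = True


def build_trace(busy_lines, light_lines, passes):
    """Core 0 loops over a large working set, core 1 over a small one."""
    trace = []
    for _ in range(passes):
        trace += [(0, ("core0", n)) for n in range(busy_lines)]
        trace += [(1, ("core1", n)) for n in range(light_lines)]
    return trace


def total_hits(trace, cache_for_core):
    """Replay the trace and count hits across all distinct caches."""
    for core, line in trace:
        cache_for_core[core].access(line)
    return sum(cache.hits for cache in set(cache_for_core.values()))


def compare(lines_per_core=64):
    trace = build_trace(busy_lines=96, light_lines=8, passes=10)
    # Split design: each core owns a fixed half of the total capacity.
    split = {0: LRUCache(lines_per_core), 1: LRUCache(lines_per_core)}
    # Shared design: both cores draw from one pool of the same total size.
    shared_cache = LRUCache(2 * lines_per_core)
    shared = {0: shared_cache, 1: shared_cache}
    return total_hits(trace, split), total_hits(trace, shared)


if __name__ == "__main__":
    split_hits, shared_hits = compare()
    print(f"split caches: {split_hits} hits, shared cache: {shared_hits} hits")
```

In this model the split design scores 72 hits out of 1,040 accesses, because the busy core's 96-line loop never fits into its 64-line half, while the shared design scores 936 hits once the combined working set of 104 lines has been loaded. Real caches are set-associative and use different replacement policies, so the numbers only illustrate the principle.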

Sharing data is also more efficient now, because reading from or writing to the cache no longer generates front side bus load (as it does with the Pentium D), and there is no stalling when both cores try to access the cache at once. A good example of the advantage in multi-threaded environments is one core writing data into the cache while the other reads something else at the same time. Cache misses are reduced, latency goes down, and access itself is faster, because the front side bus definitely was a limiting factor.

Smart Memory Access

After developing a clearly more efficient processing architecture and a powerful L2 cache, Intel wanted to make sure that these units are used as efficiently as possible. Each dual-core Core processor comes with a total of eight prefetcher units: two data prefetchers and one instruction prefetcher per core, plus two prefetchers in the shared L2 cache. Intel says they can be fine-tuned for each of the Core processor models (Merom/Conroe/Woodcrest) to prefetch data differently for mobile, desktop or server usage models.

A prefetcher speculatively brings data into a higher-level unit before it is requested. It is designed to provide data that is very likely to be needed soon, which can reduce latency and increase efficiency. The memory prefetchers constantly monitor memory access patterns and try to predict what they could move into the L2 cache, just in case that data is requested next. At the same time, the prefetchers are tuned to watch for demand traffic, which can be a sequential data flow. In that case, prefetched caching would not make much sense.
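To make the idea of predicting from access patterns concrete, here is a minimal sketch of one simple pattern detector, a stride prefetcher. It is an assumption for illustration only, since Intel does not describe its algorithms here, and the names `StridePrefetcher` and `useful_prefetches` are hypothetical. The detector speculates on the next address once it has seen the same address step twice in a row.

```python
class StridePrefetcher:
    """Toy stride detector: prefetch the next address once a stride repeats."""

    def __init__(self):
        self.last_address = None
        self.last_stride = None
        self.prefetched = set()  # addresses fetched ahead of demand
        self.useful = 0          # demand accesses that were already prefetched

    def observe(self, address):
        if address in self.prefetched:
            self.useful += 1
        if self.last_address is not None:
            stride = address - self.last_address
            # Speculate only after seeing the same nonzero stride twice in a row.
            if stride != 0 and stride == self.last_stride:
                self.prefetched.add(address + stride)
            self.last_stride = stride
        self.last_address = address


def useful_prefetches(trace):
    """Feed a list of addresses to a fresh prefetcher and count useful guesses."""
    prefetcher = StridePrefetcher()
    for address in trace:
        prefetcher.observe(address)
    return prefetcher.useful


regular = [n * 256 for n in range(10)]               # constant 256-byte stride
irregular = [0, 640, 64, 1920, 320, 1280, 128, 960]  # no repeating stride

if __name__ == "__main__":
    print(useful_prefetches(regular), useful_prefetches(irregular))
```

With the regular trace, 7 of the 10 accesses find their data already prefetched, since the first three accesses are needed to establish the pattern. With the irregular trace the detector never speculates, so it causes no extra traffic but also provides no benefit.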


Patrick Schmid was the editor-in-chief for Tom's Hardware from 2005 to 2006. He wrote numerous articles on a wide range of hardware topics, including storage, CPUs, and system builds.