HBM4 mass production delayed as Nvidia pushes memory specs higher: production to come ‘no earlier’ than late Q1 2026

Timeline shift driven by Rubin GPU platform requirements and sustained Blackwell demand.

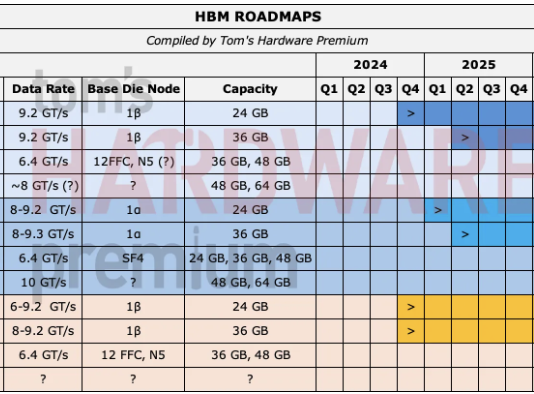

HBM4 memory is now expected to reach volume production no earlier than the end of Q1 2026, according to a new report from TrendForce. The delay stems from two converging factors: Nvidia’s decision to revise its memory requirements upward for its next-gen Rubin GPU platform, and the company’s short-term strategy to aggressively extend shipments of its current Blackwell architecture. All three major HBM suppliers have been forced to redesign their HBM4 products to meet the new specifications, pushing mass manufacturing back by at least one quarter.

This shift keeps HBM3 and HBM3e as the prevailing standards across AI and high-performance GPU deployments through at least Q1 2026. Samsung may be the first to qualify, having reportedly already passed Nvidia’s qualification tests, but SK hynix is still expected to hold the majority share as Nvidia’s primary supplier. Micron, a more recent entrant in the HBM market, has already begun sampling 11 Gbps-class HBM4 parts but is still building out volume readiness.

Nvidia delays HBM4 mass production

The changes also realign Nvidia’s internal cadence: the Rubin GPU line, which will use HBM4 exclusively, is now set for volume availability in the second half of 2026. Rubin’s target specs are the main reason HBM4 is behind schedule. According to TrendForce, Nvidia pushed for speeds higher than 11 Gbps per pin, which required all three vendors to retool their designs. Each HBM4 stack carries 2,048 data I/Os, so moving to 13 Gbps per pin raises aggregate per-stack bandwidth to roughly 3.3 TB/s (2,048 × 13 Gbps ÷ 8 bits per byte). That level of throughput places new stress on base die logic and thermals.
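
As a rough sanity check on that bandwidth arithmetic, peak per-stack throughput is simply the number of data pins times the per-pin rate, divided by eight bits per byte. The sketch below assumes decimal units (1 TB/s = 1,000 GB/s) and ignores protocol and ECC overhead, so it gives a theoretical peak rather than a measured figure; the function name is illustrative only.

```python
# Sketch: peak per-stack HBM4 bandwidth from interface width and per-pin data rate.
# Assumptions: decimal units (1 TB/s = 1,000 GB/s), no protocol or ECC overhead.

HBM4_DATA_PINS = 2048  # data I/Os per HBM4 stack, as cited in the article


def stack_bandwidth_tbps(pin_rate_gbps: float, pins: int = HBM4_DATA_PINS) -> float:
    """Return peak per-stack bandwidth in TB/s for a per-pin rate given in Gbps."""
    total_gbit_s = pins * pin_rate_gbps  # aggregate Gbit/s across all data pins
    total_gbyte_s = total_gbit_s / 8     # 8 bits per byte -> GB/s
    return total_gbyte_s / 1000          # GB/s -> TB/s


if __name__ == "__main__":
    # Illustrative per-pin rates: the JEDEC baseline and the speeds discussed here.
    for rate in (6.4, 11.0, 13.0):
        print(f"{rate:4.1f} Gbps/pin -> {stack_bandwidth_tbps(rate):.2f} TB/s per stack")
```

Under these assumptions, 11 Gbps works out to about 2.8 TB/s per stack and 13 Gbps to about 3.3 TB/s, which is why each step in pin speed translates into a sizable jump in base die and thermal demands.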

SK hynix and Samsung began delivering engineering samples to Nvidia in late 2025, but with Nvidia demanding last-minute spec changes, those parts will now be insufficient for Rubin's requirements. Samsung is said to have a slight edge on qualification, due to its newer base die process and integration stack. Still, SK hynix is expected to retain the bulk of Nvidia’s business into 2026, given its existing allocation contracts.

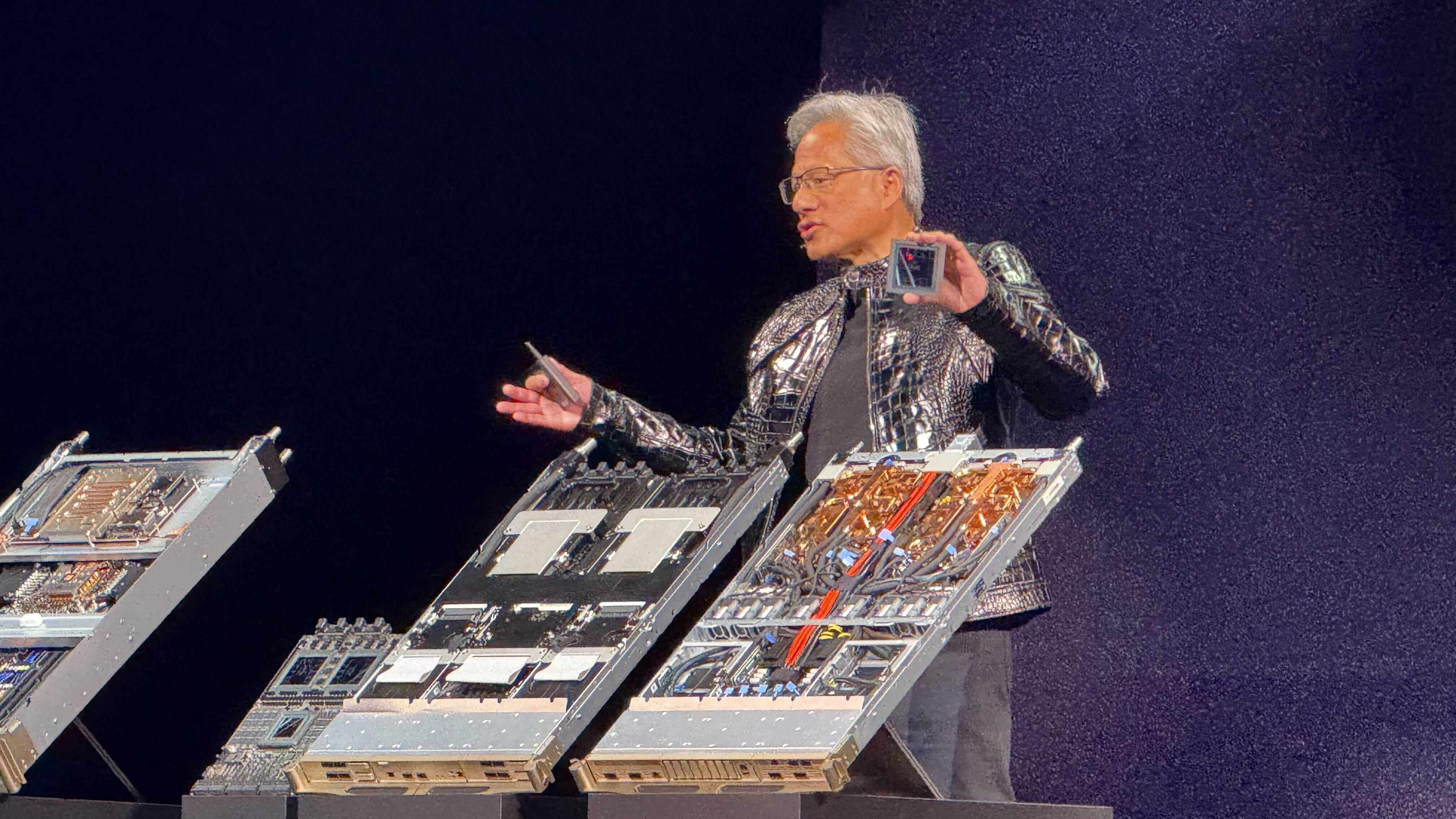

This is not just about specs, however, but also about Nvidia's broader control over memory ecosystems. Its sheer purchasing power gives the company the leverage to shape JEDEC standards, dictate packaging needs, and pace supplier production cycles. Nvidia accounted for over 60% of global HBM consumption in 2024, according to Morgan Stanley, and TSMC’s advanced packaging capacity, especially CoWoS, is already fully committed to Nvidia’s Blackwell and Grace Hopper-class parts. Moving to Rubin and HBM4 implies even greater substrate complexity, requiring further capacity expansion at both the foundry and substrate-partner levels.

Nvidia confirmed at CES that Rubin silicon is already in full production; however, system-level availability won’t follow until much later in the year, largely due to memory and interconnect bottlenecks. Rubin will ship with up to 288 GB of HBM4 and will rely on revised versions of Nvidia’s NVLink interconnect, optimized for the increased bandwidth profile. Early Rubin configurations are expected to pair with Grace CPUs via a refreshed NVLink architecture, allowing up to 900 GB/s of coherent bandwidth per link.

HBM suppliers recalibrate for 2026 volumes

The delay offers vendors both a challenge and a reprieve. The challenge lies in redesigning HBM4 dies to meet Nvidia’s updated timing and signal-integrity requirements; the reprieve comes in the form of extra runway. Most HBM3 and HBM3e capacity is now sold out through late 2026, and the additional time allows vendors to optimize yields and scale packaging operations.

SK hynix will continue shipping the lion’s share of HBM volume throughout the quarter; it has a deep allocation pipeline with Nvidia and has committed the majority of its high-end DRAM lines to HBM production. Samsung, which initially expected HBM4 to arrive by 2025, has significantly accelerated its HBM4 cadence and is now expected to reach high-volume HBM4 qualification sometime in Q2 2026. Micron is simultaneously ramping 11 Gbps-class HBM4 and sampling early HBM4E products with stacks of up to 16 dies and extended bandwidth ceilings.

JEDEC ratified the HBM4 standard in April 2025, specifying 2048-bit interfaces and per-pin speeds beginning at 6.4 Gbps, scaling up to over 12 Gbps. With Rubin and other high-performance AI accelerators now targeting 13 Gbps or higher, vendors are pushing the upper limits of thermal and power envelopes. Micron has said that it expects 64 GB stacks to become common with HBM4E sometime after late 2027. Meanwhile, each Rubin GPU package on the NVL72 will have eight stacks of HBM4 memory delivering 288 GB of capacity and 22 TB/s of bandwidth.
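
The Rubin package figures can be broken down per stack with a few lines of arithmetic. This is a minimal sketch, assuming capacity and bandwidth are split evenly across the eight stacks, decimal units, and no protocol or ECC overhead in the 22 TB/s figure; `per_stack_figures` is a hypothetical helper, not anything from Nvidia or JEDEC.

```python
# Sketch: derive per-stack figures from package-level HBM4 numbers.
# Assumptions: capacity and bandwidth are split evenly across stacks,
# decimal units, and no protocol/ECC overhead in the bandwidth figure.

PINS_PER_STACK = 2048  # HBM4 interface width per stack


def per_stack_figures(stacks: int, capacity_gb: float, bandwidth_tbps: float,
                      pins: int = PINS_PER_STACK) -> tuple[float, float, float]:
    """Return (GB per stack, TB/s per stack, implied Gbps per pin)."""
    gb_per_stack = capacity_gb / stacks
    tbps_per_stack = bandwidth_tbps / stacks
    # TB/s -> GB/s (x1000) -> Gbit/s (x8), then spread across the data pins.
    implied_pin_gbps = tbps_per_stack * 1000 * 8 / pins
    return gb_per_stack, tbps_per_stack, implied_pin_gbps


if __name__ == "__main__":
    # Rubin package figures as reported: 8 stacks, 288 GB, 22 TB/s.
    cap, bw, rate = per_stack_figures(stacks=8, capacity_gb=288, bandwidth_tbps=22.0)
    print(f"{cap:.0f} GB/stack, {bw:.2f} TB/s/stack, ~{rate:.1f} Gbps/pin")
```

Under these assumptions the reported numbers work out to 36 GB and 2.75 TB/s per stack, or roughly 10.7 Gbps per pin. Actual shipping pin speeds could differ if the 22 TB/s figure reflects rounding, a different configuration, or overhead not modeled here.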

The delay also allows some equilibrium to form in packaging. TSMC’s CoWoS-L capacity has been under severe pressure due to Nvidia’s Blackwell and AMD’s MI300 ramp. By spacing out Rubin’s arrival, Nvidia is implicitly giving its suppliers time to expand interposer and bumping operations without triggering yield degradation or substrate shortages.

Implications for AMD, Intel, and downstream designs

Nvidia may exert outsized influence over HBM4 production, but it is not the only company exposed to delays. AMD has leaned heavily on HBM3 and HBM3e across the MI300 and MI350, with the upcoming MI400 designed around 432 GB of HBM4. If HBM4 volume production slips further, it would not just reshape Nvidia’s cadence but also place direct pressure on AMD’s MI400 rollout.

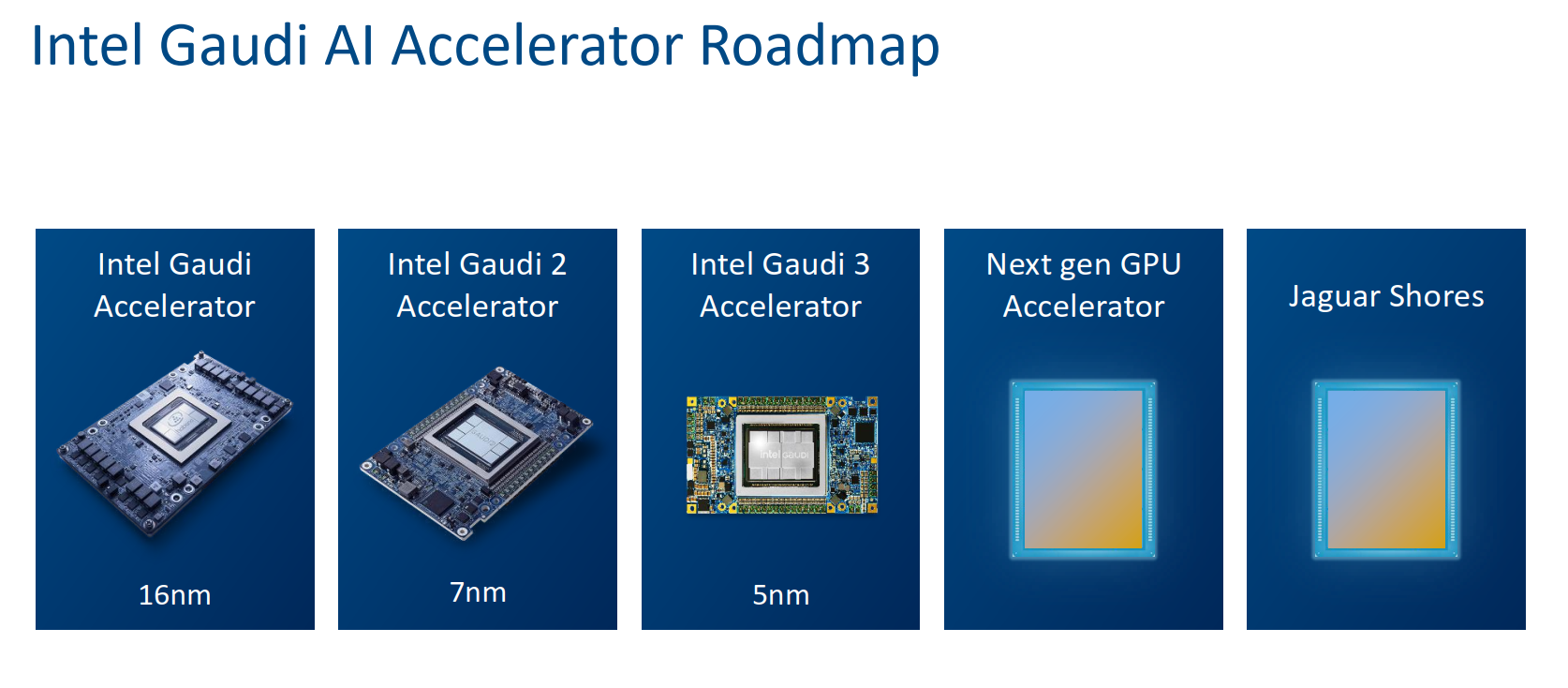

Intel’s Habana Gaudi line is still anchored on HBM2e, with 128 GB per accelerator in the Gaudi 3. The company is known to be planning a Gaudi 4-class device codenamed “Jaguar Shores”, likely for release in 2027, using HBM4E, so its current timeline remains unaffected by Nvidia’s spec shift. Intel’s packaging flows for AI silicon are distinct from Nvidia’s, and its later entry into HBM4 adoption may allow it to bypass early yield limitations.

The real downstream impact may surface in how HBM availability shapes product segmentation. Nvidia’s highest-end Blackwell and Rubin GPUs will continue to monopolize advanced memory stacks and interposer capacity, effectively limiting HBM4 to premium datacenter SKUs. There is currently no sign of HBM4 migrating into consumer GPUs or gaming cards, and Nvidia announced no new consumer GPUs at CES. Even as GDDR7 supply tightens, Nvidia has not shown any intent to merge AI and GeForce memory standards.

With Rubin silicon now in full production and volume HBM4 availability pushed to late Q1 or early Q2 2026, the HBM race continues, just a quarter later than planned.

Luke James is a freelance writer and journalist. Although his background is in legal, he has a personal interest in all things tech, especially hardware and microelectronics, and anything regulatory.